How to make an elephant out of a fly

Many people know such an old kind game with words, to make an elephant out of a fly. Its essence is that you need to make the final one from the initial word, changing only one letter at each step, and at the same time getting a meaningful noun at each step.

The well-known author of books on entertaining mathematics E. Ya. Gik in his book “ Entertaining math games ” published a 16-way solution, how to make an elephant from a fly: a fly-mura-tour-tara-kara-kare-cafe-kafr-kayur- Kayuk-hook-apricot-lesson-term-stock-moan-elephant.

')

And so, one day I happened to take up the solution of this problem in software.

Honestly, an elephant from a fly came out pretty quickly.

The general idea of the solution:

- take a dictionary of nouns

- iterative algorithm to go from the source word to the end, if it is possible to achieve the last

- give the resultant chain, and it is desirable that it strives for the minimum length

So…

It turned out that even with the first item there are problems - finding a worthwhile dictionary of nouns turned out to be a separate subtask. I do not remember where exactly, but I found a tolerable ready-made dictionary. One word per line format, utf8, delimiters \ r \ n - and left it in the future.

Naturally, casually inquired whether the problem was solved by flies and elephants, and how. A good solution was found here planetcalc.ru/544 . For only 4 alphabetic words, under javascript (that in fact the idea is correct for this application - it makes no sense to drive server capacity, when client hardware in the browser can do the search). However, the source did not look, but looked at the sound reasoning below in the article.

Indeed, if one uses the construction of a tree with all variants as an algorithm, until the required word is found when laying the next level, then there will not be enough resources.

For example, the word KORA has 19 transitions only from common words that have come to mind, from "mountain" to "court."

Even if we give a very optimistic estimate for the average number of modifications in 5 (total!), Then if a minimum path to a word is 10 steps, then a tree of 5 10 ~ = 10 million nodes should fit in the memory. Considering the overhead of maintaining the tree structure (at least 2 pointers to descendants from the ancestor each are 4/8 bytes) and to the actual storage of node data (language / structure variable wrapper + data itself: characters in utf8 are even more than 10 bytes) we get The RAM requirement for such conditions is at least 200-300 MB. And conditions can be much worse.

Genetic algorithm selected.

Moreover, he simply begs here - changing a letter in a word is essentially a mutation. The condition of the survival of the descendant during the "birth" is the existence in the dictionary. The condition of successful competition, fitness - the degree of similarity with the desired word.

Fairly I took the weight function from the description on the same planetcalc.ru/544 . I thought about why it is, for myself understood this:

- the identity of the letters in the correct positions 3 points: No comment, the maximum score here is logical

- vowel, albeit different, in the right position 2 points: The vowel in the right place is much harder to drag, but then it is using mutations of consonants, and there are more such options, it is easier to embarrass already in the right vowel. In addition, the vowel “sets the tone” - consonants spin around it more easily, including those necessary for the word being searched for.

- the presence of a vowel in general 1 point: Similar reasoning with the above, the vowel is much more difficult to mutate than the consonants.

Separately, I note that at all stages of the search the reference word, which should be obtained and with which there is a constant comparison, is one and the same. For example, the same elephant . Therefore, it is logical in such an anatomical form that it is “logical to disassemble an elephant that has been taken apart into pieces for comparing an elephant” (a poor animal).

For simplicity and without thinking twice, I built the simplest cache right in the evaluation function.

The search for new variants of mutations goes according to the dictionary and sub-vocabulary, starting from a given word. At the same time there are several additional logical limitations.

The first limitation is the valid letter positions for the mutation. In fact, if at the last step we, for example, changed the 3rd letter, making the move “muHa” - “muza”, then at the next step the mutation “muza” - “mura” is meaningless. After all, we are interested in the shortest possible chain. And in this way, we obviously make an extra step, because you could immediately make the move of MuHa - MuRa. Therefore, one of the parameters of the function is the position of the past mutation.

The second limitation is the uniqueness of the words in the chain. Explained too simply. Suppose there is a chain "fly" - " flour " - "beech" - "borax" - "mura" - " flour " - "hand". It is obvious that a piece of " flour " - "beech" - "borax" - "mura" was superfluous in the chain. And even if it reaches the final desired "elephant", it is exactly the same, but the chain of unique words with ejected repetitions will be shorter. It means better. So such cycles because of repetitions, we do not need. Therefore, one more of the parameters of the function of searching for variants of mutations is making an array of words (in this case, word id), which have already been used in the chain.

The word length parameter is me on matches mb_strlen saved. This is how the method was conceived as private, but for tests and tests it was published. Do not let such things in production :) In any case, without covering checks.

And the final word ... maybe some kind of human reflection, and maybe intuition, left the possibility of using it for later. Yet it is logical to expect from the function of receiving a set of descendants of some kind depending on whom they should be similar to. Nothing prevents you from doing the primary screening right here. But for now - not used.

The algorithm is good, but we have a source of data (words) in the form of a file, with which it is necessary to work effectively and a lot from the search algorithm.

Yes, this dictionary file is sorted alphabetically in ascending order. And it is not so gigantic, only about 1 MB, so that we can safely download it to work in the entire RAM.

In this case, of course, it should be understood that in the "loaded and decomposed" into the data structure in the form of an array, depending on the language, the dictionary will occupy more memory. For PHP 5.4 it turned out that the dictionary in the loaded form weighs about 6 MB.

[Here is the first thought about the logical use of the database. But I decided to try it first without her.]

But:

- in PHP array_search, stupid selector, tell him “hey, the array is sorted, search binary” is not possible, there are no other suitable functions out of the box, you could not play with a crutch flip-keyexists and it was difficult to apply

- even if there was a quick binary search function in a sorted array, there is a mask search problem with an embossed character.

The first is solved by the binary search bike for PHP. I borrowed a ride from a friend, terenceyim.wordpress.com/2011/02/01/all-purpose-binary-search-in-php .

It should be noted that this version of binary search is the most common, arithmetic, suitable for working in a sorted array with sequential integer numbering (keys from 0 to N-1 for example).

I used the truth not as it is, but modified the search. In the case of an array of non-unique elements, the posik stopped at the first equal item to be found. And I needed him to give the position of the very first key from the identical elements of the array. The point is to simplify the subsequent algorithm, and when sorting out a set of equals, just follow the found key down the array.

Example: we are looking for the MUA, there is an array (see below) [... 99-MS (t) ITEL, 100-MU (c) A, 101-MU (k) A, 102-MU (p) A, 103-MU ( r) A, 104-MU (x) A, 105-MU (p) AVEY, 106-MU (p) AVEYNIK ...] Ordinary binary search falls into the next iteration, say, into the key 102. The value of the element (MUA, is obtained from the word MURA ) is equal to the required (MUA, we are looking for descendants for MUHA) and this key would have come to us. And then clutter the logic over and up and down. The modified algorithm finds the very first one, the key 100, and then you can go successively down the array until the element == is searched for.

Secondly, I figured that the “RAM would fully survive” and decided to do it through sub-dictionary. Where the i-th sub-dictionary is built from the main dictionary with cutting out the i-th letter from a word. Type MACHINE => (i = 2) MRYNA. For such dictionaries, you can use the same binary search for cases of embossed letters in positions for which there are dictionaries.

In the case when the embossed letter is too far from the beginning of the word, and there is no sub-dictionary for such a position, we proceed as follows:

- in the main dictionary we are looking for “non-beat beginning”

- from the position found, we simply go down through the array, until the element (word) begins as we search, has the required length and collects all of these words into variants, which end as it should.

Since the search proceeds along a limited part of the dictionary, where the first position is determined by binary search at the start, even with three dictionaries, the search for a 4th letter and older is no longer a fatal bottleneck.

An acceptable compromise on memory / speed was the use of 3-4 subloviers.

The numbers for the transformation "fly" - "elephant":

Chain: "fly" - "Mura" - "wagon" - "handicap" - "bark" - "root" - "koan" - "clan" - "clone" - "elephant" (9 transitions)

Of course, it is absolutely logical that the 5th sub-dictionary (where the 5th letter was removed from the words and turned out to be re-sorted) is not needed for the transformation of a 4-letter fly and an elephant, and is only a burden. But let's look at another example:

For comparison, the transformation from "pine" to "protein":

- for 4 subloviers: download 2.41 seconds, search 1.07 seconds, total 3.48 seconds

- for 5 sublovie: download 3.01 seconds, search 0.36 seconds, total 3.37 seconds

Those. The 5th sub-dictionary can be added except after optimizing the storage of dictionaries, caching, and algorithm. And now it’s just extra consumption of RAM.

But ... But "just somehow tolerably working option on the knee" did not suit me. And I continued to improve the conversion of flies into elephants.

1st version (PHP 5.4)

I combed a lot.

Added checks.

Added throwing exceptions instead of silently incomprehensible dying.

Selected a config.

I am ready to switch to the database - I rendered the data logic into a separate mapper.

Etc. on architecture.

But this is not interesting. The most interesting changes here are a parser, a randomness factor and an evaluation function based on the frequency characteristics of the letters.

I noticed that the source dictionary, though quite large, but for some reason there are not even some commonly used words. And attention))) there was no elephant ! There was an elephant , an elephant was, and an elephant was oops. Discrimination.

Yes, for the fulfillment of the goal (to make an elephant out of a fly) for the first version, we had to google typical chain answers, make sure, surprisingly, that many words, again, are not in the dictionary, and add several pieces manually to the appropriate positions.

[And yes, in this dictionary I stumbled for the first time (from the subsequent php sort cones, despite the correct locale for setlocale and mb_string) that the words in E were suddenly at the end of the dictionary.]

I decided to correct this point by taking additional words, albeit not from a dictionary ready for technical use, but at least from where.

A lot of links led to chyjik.narod.ru/index.htm , but he suddenly found himself already some year into oblivion by the evil Yandex, who bought Narod.ru at one time and broke it in pursuit of ferting.

But then the great web archive helped, for which he thanks.

He pumped out all that was, the whole preserved “crossword writer’s dictionary”, saved it in data / psrc /, wrote parse.php on a regular schedule (which he then corrected several times, because the site was with a person, it seems, MS Word was written, and were not completely identical in layout), - expanded the dictionary of percent by 50%.

The chain was always the same, which is, in general, obvious. To be able to "play" and "suddenly find better," he introduced the randomness factor on mt_rand into the evaluation function. It became more interesting. Sometimes new, shorter chains, which I had never guessed before, were really visible.

Of course, there is a downside: for uncomfortable pairs, there is a search and does not find a chain. Or is a little longer than usual. But still the main case is quite stable.

More specifically, the randomness is introduced “very slightly” - to the comparison function when organizing according to the fitness of the new generation - words having the same assessment of fitness began to be placed in a random order.

From MOON, ELEPHANT was quite lively and pretty, within 10 transitions.

But (!)

from the FLY the word SLOGH turned out ... obstinately transitions to 60-70 (!).

But it is obvious that it should be only 1 longer than in the ELEPHANT. Man is obvious. No car, it is according to the algorithm. Algorithm error. Error evaluation function.

I didn’t hide it a lot.

It turned out well by 5 positions to shorten the chain with dubious changes in the alignment. But this is not the result that is needed.

Obviously, the problem was not solved with the summer of adjustment, I thought. What's the matter. What is the difference. The difference in the last letter, yes, a fact. There is a dream, and here is a word. What these letters are so different that everything is so bad ...

Right. Frequency of use! And according to the number of related mutations. That is, “a full hit on G = G” may be worse, or at least not much better for assessing “fitness” than “not hit H, M, K, ...! = G”. But, of course, it is much better than “not hits Y, b, y, ...! = G”.

I took a table of frequencies of use of letters from the wiki. (In fact, this is not entirely correct, it is necessary to count the frequency according to the existing dictionary of nouns, but there would hardly be any cardinal differences). I drove as it is in the code. It’s not very beautiful, yes, but it’s for now. He cut the frequencies of letters into normalized arrays according to vowels and consonants, with normalization by the most commonly used vowel / consonant. Rewrote the evaluation function. THERE IS! ELEPHANT + 1!

Yes, and the ELEPHANT began to get another step or two faster.

New numbers for the transformation "fly" - "elephant":

Chain: "fly" - "muna" - "mine" - "lina" - "lynn" - "lyon" - "zion" - "elephant" (7 transitions).

As you can see, the dictionary (from 0.68 MB to 1.03 MB, + 51%), and with it the dictionaries and the final zhor of the RAM. Combinatorics improved, and even though at each step mutations began to crumble more, which means they began to walk more slowly - the final goal, with reachability, began to be obtained faster, in a smaller number of steps.

However, in terms of time, the search turned out not at all faster, even for 4 subloviers. One factor did not compensate for the other. Nevertheless, in essence, expanding the dictionary is an absolutely correct and necessary move to get closer to real combat conditions. And there are many other crossword dictionaries and dictionaries, including online ones, with which you can work to expand our vocabulary.

2nd version (same PHP 5.4).

Honestly, I expected more from the version. Still, the base (it was MySQL 5.5 at hand) should, well, it should have provided a takeoff at least several times, if not by orders of magnitude.

Apparently, in the version with files, I actually achieved the memcache effect — a bunch of dictionaries in memory, and the algorithm, in general, is the same for both file and mysql versions. In the construction of the base I think I think, all the necessary indices for the work have been affixed.

The files themselves slowed me down only at the stage of loading them into the RAM - about 4 seconds. And the search from a molehill was about 0.8 seconds. So overall, the MySQL version wins, with a “load” of about 0.002 seconds and a search of about 0.95 seconds. It’s clear that she doesn’t need to download anything, after one or two past script downloads, the cache is warm and everything is already loaded and waiting.

Anyway, the answer in 1 second is better than in 5 seconds.

Initially, however, it was under 2 seconds, because of the plentiful SELECT queries, the word software id. Aggressive caching in the MySQL mapper eliminated this problem. Also, of course, not in an optimal way, but this is still not a highload production, but an experiment. It is quite tolerant at this stage.

And by the way, as a result of the excellently shown evaluation function taking into account the frequencies of the letters, it was possible to reduce the population number in the generation from 100 to 50 without affecting the result. By the way, even the population of 20 showed itself not much worse, but left 50.

This made it possible to significantly reduce the search time. For example, the same flies and elephants from about 1 second to 0.5-0.6 seconds.

So,

3rd version (Again PHP 5.4, but already with MySQL 5.5)

In the third step, we have a solution for PHP 5.4 + MySQL 5.5, which requires about 0.5 seconds. to turn a fly into an elephant in 9 iterations:

The script does not consume as much horse operation as in the version with pure PHP and dictionary files (under 100 MB), the consumption is the most usual. The same data stored in the DBMS, behave more decently for memory appetites.

Of course, the solution is not perfect, I know. Much more can be done:

The theme is addictive ... Maybe the story will have a sequel. In any case, I was hooked strongly, no less than Diamond-Square .

PS:

Of course, in this article it is impossible not to leave a link to how artists make an elephant out of a fly .

Comments and comments are welcome! Maybe missed some simple and important things. Thank you for attention.

The well-known author of books on entertaining mathematics E. Ya. Gik in his book “ Entertaining math games ” published a 16-way solution, how to make an elephant from a fly: a fly-mura-tour-tara-kara-kare-cafe-kafr-kayur- Kayuk-hook-apricot-lesson-term-stock-moan-elephant.

')

And so, one day I happened to take up the solution of this problem in software.

From an elephant fly, the first version

Honestly, an elephant from a fly came out pretty quickly.

The general idea of the solution:

- take a dictionary of nouns

- iterative algorithm to go from the source word to the end, if it is possible to achieve the last

- give the resultant chain, and it is desirable that it strives for the minimum length

So…

1) Dictionary of nouns

It turned out that even with the first item there are problems - finding a worthwhile dictionary of nouns turned out to be a separate subtask. I do not remember where exactly, but I found a tolerable ready-made dictionary. One word per line format, utf8, delimiters \ r \ n - and left it in the future.

2) Algorithm

Naturally, casually inquired whether the problem was solved by flies and elephants, and how. A good solution was found here planetcalc.ru/544 . For only 4 alphabetic words, under javascript (that in fact the idea is correct for this application - it makes no sense to drive server capacity, when client hardware in the browser can do the search). However, the source did not look, but looked at the sound reasoning below in the article.

Indeed, if one uses the construction of a tree with all variants as an algorithm, until the required word is found when laying the next level, then there will not be enough resources.

For example, the word KORA has 19 transitions only from common words that have come to mind, from "mountain" to "court."

Even if we give a very optimistic estimate for the average number of modifications in 5 (total!), Then if a minimum path to a word is 10 steps, then a tree of 5 10 ~ = 10 million nodes should fit in the memory. Considering the overhead of maintaining the tree structure (at least 2 pointers to descendants from the ancestor each are 4/8 bytes) and to the actual storage of node data (language / structure variable wrapper + data itself: characters in utf8 are even more than 10 bytes) we get The RAM requirement for such conditions is at least 200-300 MB. And conditions can be much worse.

Genetic algorithm selected.

Moreover, he simply begs here - changing a letter in a word is essentially a mutation. The condition of the survival of the descendant during the "birth" is the existence in the dictionary. The condition of successful competition, fitness - the degree of similarity with the desired word.

Genetic search function word chain

/** * * * * * @param string $wordFrom - * @param string $wordTo - * @return array * @throws WordWizardException */ public function FindMutationChain($wordFrom, $wordTo, $maxSteps = 1000, $maxPopulation = 100) { $resultKeysChain = []; $resultChain = []; // $wordFrom = mb_convert_case($wordFrom, MB_CASE_LOWER); $wordTo = mb_convert_case($wordTo, MB_CASE_LOWER); $fromLen = mb_strlen($wordFrom); $toLen = mb_strlen($wordTo); // if ($fromLen != $toLen) { throw new WordWizardException(" ."); } // $wordFromKey = binary_search($this->_dictionary, $wordFrom); // , , $wordToKey = binary_search($this->_dictionary, $wordTo); if ($wordToKey < 0) { throw new WordWizardException(" \"$wordTo\" ."); } // $mutationChains = [ [ 'keys' => [$wordFromKey], 'mutatedPositions' => [-1] ] ]; $population = 1; // for ($step = 0; $step < $maxSteps; $step++) { // ? foreach ($mutationChains as $mutationChain) { if (end($mutationChain['keys']) == $wordToKey) { // ( ) $resultKeysChain = $mutationChain['keys']; break 2; } } // $newMutationChains = []; foreach ($mutationChains as $mutationChain) { $lastKey = end($mutationChain['keys']); $lastMutatedPos = end($mutationChain['mutatedPositions']); $lastWord = $this->_dictionary[$lastKey]; $nextMutations = $this->FindMutationVariants($lastWord, $wordTo, $fromLen, $lastMutatedPos, $mutationChain['keys']); foreach ($nextMutations as $mutation) { list($nextKey, $nextMutatedPos) = $mutation; $nextWord = $this->_dictionary[$nextKey]; $score = $this->GetWordScore($nextWord, $wordTo); // $newMutationChain = $mutationChain; $newMutationChain['keys'][] = $nextKey; $newMutationChain['mutatedPositions'][] = $nextMutatedPos; $newMutationChain['score'] = $score; $newMutationChains[] = $newMutationChain; } } // $mutationChains = $newMutationChains; // .. if (empty($mutationChains)) { throw new WordWizardException(" $step ( $maxSteps) . ."); } // " " ( ) usort($mutationChains, function($a, $b) { return $b['score'] - $a['score']; }); // - array_splice($mutationChains, $maxPopulation); } // ? if ($step == $maxSteps) { throw new WordWizardException(" ($maxSteps), ."); } // if ($resultKeysChain) { foreach ($resultKeysChain as $key) { $resultChain[] = $this->_dictionary[$key]; } } return $resultChain; } Fairly I took the weight function from the description on the same planetcalc.ru/544 . I thought about why it is, for myself understood this:

- the identity of the letters in the correct positions 3 points: No comment, the maximum score here is logical

- vowel, albeit different, in the right position 2 points: The vowel in the right place is much harder to drag, but then it is using mutations of consonants, and there are more such options, it is easier to embarrass already in the right vowel. In addition, the vowel “sets the tone” - consonants spin around it more easily, including those necessary for the word being searched for.

- the presence of a vowel in general 1 point: Similar reasoning with the above, the vowel is much more difficult to mutate than the consonants.

Separately, I note that at all stages of the search the reference word, which should be obtained and with which there is a constant comparison, is one and the same. For example, the same elephant . Therefore, it is logical in such an anatomical form that it is “logical to disassemble an elephant that has been taken apart into pieces for comparing an elephant” (a poor animal).

For simplicity and without thinking twice, I built the simplest cache right in the evaluation function.

The function of assessing the similarity of a pair of words

/** * * * @param string $word - * @param string $comparationWord - * @return int */ public function GetWordScore($word, $comparationWord) { // - , - static $cachedComparationWord = ''; static $wordLen = 0; static $cwLetters = []; if ($cachedComparationWord != $comparationWord) { $cachedComparationWord = $comparationWord; $wordLen = mb_strlen($comparationWord); $cwLetters = []; for ($i = 0; $i < $wordLen; $i++) { $letter = mb_substr($comparationWord, $i, 1); $cwLetters[$i] = [ 'letter' => $letter, 'isVovel' => false === mb_strpos($this->_vovels, $letter) ? false : true, ]; } } $score = 0; for ($i = 0; $i < $wordLen; $i++) { $letter = mb_substr($word, $i, 1); // = 3 if ($letter == $cwLetters[$i]['letter']) { $score += 3; continue; } $isVovel = (false === mb_strpos($this->_vovels, $letter) ? false : true); if ($isVovel) { if ($cwLetters[$i]['isVovel']) { // = 2 $score += 2; } else { // = 1 $score += 1; } } } return $score; } The search for new variants of mutations goes according to the dictionary and sub-vocabulary, starting from a given word. At the same time there are several additional logical limitations.

The first limitation is the valid letter positions for the mutation. In fact, if at the last step we, for example, changed the 3rd letter, making the move “muHa” - “muza”, then at the next step the mutation “muza” - “mura” is meaningless. After all, we are interested in the shortest possible chain. And in this way, we obviously make an extra step, because you could immediately make the move of MuHa - MuRa. Therefore, one of the parameters of the function is the position of the past mutation.

The second limitation is the uniqueness of the words in the chain. Explained too simply. Suppose there is a chain "fly" - " flour " - "beech" - "borax" - "mura" - " flour " - "hand". It is obvious that a piece of " flour " - "beech" - "borax" - "mura" was superfluous in the chain. And even if it reaches the final desired "elephant", it is exactly the same, but the chain of unique words with ejected repetitions will be shorter. It means better. So such cycles because of repetitions, we do not need. Therefore, one more of the parameters of the function of searching for variants of mutations is making an array of words (in this case, word id), which have already been used in the chain.

The word length parameter is me on matches mb_strlen saved. This is how the method was conceived as private, but for tests and tests it was published. Do not let such things in production :) In any case, without covering checks.

And the final word ... maybe some kind of human reflection, and maybe intuition, left the possibility of using it for later. Yet it is logical to expect from the function of receiving a set of descendants of some kind depending on whom they should be similar to. Nothing prevents you from doing the primary screening right here. But for now - not used.

The function of obtaining possible mutations

/** * {id , } * * * * @param string $wordFrom - * @param string $wordTo - * @param int $wordLen - * @param int $disabledMutationPos - , ( ) * @param array $excludedWordKeys - * @return array */ public function FindMutationVariants($wordFrom, $wordTo, $wordLen, $disabledMutationPos, $excludedWordKeys) { $variants = []; for ($mutPos = 0; $mutPos < $wordLen; $mutPos++) { // ( , . ) if ($mutPos == $disabledMutationPos) continue; // $mutPos- $wordBeginning = mb_substr($wordFrom, 0, $mutPos); $wordEnding = mb_substr($wordFrom, $mutPos + 1); // if ($mutPos < self::SUB_DICTIONARIES_MAX) { // , $subDictionary = $this->_subDictionaries[$mutPos + 1]; $pseudoWord = $wordBeginning . $wordEnding; $pseudoWordKey = binary_search($subDictionary, $pseudoWord, 0, NULL, [$this, 'SubDictionaryWordsCmp']); if ($pseudoWordKey >= 0) { // PHP , // $row = $subDictionary[$pseudoWordKey]; foreach ($row[self::_SDW_WORDS_KEYS] as $key) { // - if (in_array($key, $excludedWordKeys)) continue; $variants[$key] = [$key, $mutPos]; } } } else { // - , if ($mutPos == 0) { // , // , - // ( ) $key = 0; } else { // $key = binary_search($this->_dictionary, $wordBeginning); if ($key < 0) { $key = -$key; } } // for ($key; isset($this->_dictionary[$key]); $key++) { $word = $this->_dictionary[$key]; // , if (mb_substr($word, 0, $mutPos) != $wordBeginning) { break; } // ( ) if (mb_strlen($word) != $wordLen) { continue; } // , if (mb_substr($word, $mutPos + 1) != $wordEnding) { continue; } // - if (in_array($key, $excludedWordKeys)) continue; // , $variants[$key] = [$key, $mutPos]; } } } return $variants; } 3) Work with a dictionary

The algorithm is good, but we have a source of data (words) in the form of a file, with which it is necessary to work effectively and a lot from the search algorithm.

Yes, this dictionary file is sorted alphabetically in ascending order. And it is not so gigantic, only about 1 MB, so that we can safely download it to work in the entire RAM.

In this case, of course, it should be understood that in the "loaded and decomposed" into the data structure in the form of an array, depending on the language, the dictionary will occupy more memory. For PHP 5.4 it turned out that the dictionary in the loaded form weighs about 6 MB.

Here too.

Looking ahead, the underclothes weigh even more.

[Here is the first thought about the logical use of the database. But I decided to try it first without her.]

But:

- in PHP array_search, stupid selector, tell him “hey, the array is sorted, search binary” is not possible, there are no other suitable functions out of the box, you could not play with a crutch flip-keyexists and it was difficult to apply

- even if there was a quick binary search function in a sorted array, there is a mask search problem with an embossed character.

3.1) Quick search in a sorted array of the first of non-unique values

The first is solved by the binary search bike for PHP. I borrowed a ride from a friend, terenceyim.wordpress.com/2011/02/01/all-purpose-binary-search-in-php .

It should be noted that this version of binary search is the most common, arithmetic, suitable for working in a sorted array with sequential integer numbering (keys from 0 to N-1 for example).

I used the truth not as it is, but modified the search. In the case of an array of non-unique elements, the posik stopped at the first equal item to be found. And I needed him to give the position of the very first key from the identical elements of the array. The point is to simplify the subsequent algorithm, and when sorting out a set of equals, just follow the found key down the array.

Example: we are looking for the MUA, there is an array (see below) [... 99-MS (t) ITEL, 100-MU (c) A, 101-MU (k) A, 102-MU (p) A, 103-MU ( r) A, 104-MU (x) A, 105-MU (p) AVEY, 106-MU (p) AVEYNIK ...] Ordinary binary search falls into the next iteration, say, into the key 102. The value of the element (MUA, is obtained from the word MURA ) is equal to the required (MUA, we are looking for descendants for MUHA) and this key would have come to us. And then clutter the logic over and up and down. The modified algorithm finds the very first one, the key 100, and then you can go successively down the array until the element == is searched for.

Modified binary search

/** * * * @param array $a - ( ) * @param mixed $needle - , * @param int $first - () * @param int $last - ( ) * @param string $compare - . usort * * @return int * . * -(insert_index + 1). * insert_index , * , sizeof($a) * . */ function binary_search(array $a, $needle, $first = 0, $last = NULL, $compare = 'default_cmp') { if ($last === NULL) { $last = count($a); } $lo = $first; $hi = $last - 1; while ($lo <= $hi) { $mid = (int)(($hi - $lo) / 2) + $lo; $cmp = call_user_func($compare, $a[$mid], $needle); if ($cmp < 0) { $lo = $mid + 1; } elseif ($cmp > 0) { $hi = $mid - 1; } else { $hi = $mid - 1; // $mid // , . //return $mid; } } $cmp = call_user_func($compare, $a[$lo], $needle); return $cmp == 0 ? $lo : -($lo + 1); } /** * * * @param mixed $a - * @param mixed $b - * @return int : -1 , 0 , 1 */ function default_cmp($a, $b) { return ($a < $b) ? -1 : (($a > $b) ? 1 : 0); } 3.2) Auxiliary pseudowords dictionaries

Secondly, I figured that the “RAM would fully survive” and decided to do it through sub-dictionary. Where the i-th sub-dictionary is built from the main dictionary with cutting out the i-th letter from a word. Type MACHINE => (i = 2) MRYNA. For such dictionaries, you can use the same binary search for cases of embossed letters in positions for which there are dictionaries.

In the case when the embossed letter is too far from the beginning of the word, and there is no sub-dictionary for such a position, we proceed as follows:

- in the main dictionary we are looking for “non-beat beginning”

- from the position found, we simply go down through the array, until the element (word) begins as we search, has the required length and collects all of these words into variants, which end as it should.

Since the search proceeds along a limited part of the dictionary, where the first position is determined by binary search at the start, even with three dictionaries, the search for a 4th letter and older is no longer a fatal bottleneck.

An acceptable compromise on memory / speed was the use of 3-4 subloviers.

The numbers for the transformation "fly" - "elephant":

| Configuration | T download dictionaries | T search | T total | Consumption of ram |

|---|---|---|---|---|

| Main dictionary only | 0.02 sec | 137 sec | 137 sec | 6 MB |

| 1 subdiction | 0.61 sec | 16.40 seconds | 17.01 seconds | 25 MB |

| 2 subbooks | 1.20 seconds | 4.73 seconds | 5.93 seconds | 44 MB |

| 3 subbooks | 1.85 seconds | 2.72 seconds | 4.57 seconds | 62 MB |

| 4 subbooks | 2.42 seconds | 0.82 sec | 3.24 seconds | 79 MB |

| 5 sublovie | 2.98 seconds | 0.77 seconds | 3.75 seconds | 97 MB |

Chain: "fly" - "Mura" - "wagon" - "handicap" - "bark" - "root" - "koan" - "clan" - "clone" - "elephant" (9 transitions)

Of course, it is absolutely logical that the 5th sub-dictionary (where the 5th letter was removed from the words and turned out to be re-sorted) is not needed for the transformation of a 4-letter fly and an elephant, and is only a burden. But let's look at another example:

For comparison, the transformation from "pine" to "protein":

- for 4 subloviers: download 2.41 seconds, search 1.07 seconds, total 3.48 seconds

- for 5 sublovie: download 3.01 seconds, search 0.36 seconds, total 3.37 seconds

Those. The 5th sub-dictionary can be added except after optimizing the storage of dictionaries, caching, and algorithm. And now it’s just extra consumption of RAM.

But ... But "just somehow tolerably working option on the knee" did not suit me. And I continued to improve the conversion of flies into elephants.

1st version (PHP 5.4)

*

* It would be nice to pause here to rest your eyes, a cup of coffee, and in that spirit

*

Elephant lipstick painted ear

The second, trunk, tail and now

A pink fly flies in the bedroom,

Humming and beating his head against the door.

kekc @ hohmodrom.ru

Version two

I combed a lot.

Added checks.

Added throwing exceptions instead of silently incomprehensible dying.

Selected a config.

I am ready to switch to the database - I rendered the data logic into a separate mapper.

Etc. on architecture.

But this is not interesting. The most interesting changes here are a parser, a randomness factor and an evaluation function based on the frequency characteristics of the letters.

1) Parser

I noticed that the source dictionary, though quite large, but for some reason there are not even some commonly used words. And attention))) there was no elephant ! There was an elephant , an elephant was, and an elephant was oops. Discrimination.

Yes, for the fulfillment of the goal (to make an elephant out of a fly) for the first version, we had to google typical chain answers, make sure, surprisingly, that many words, again, are not in the dictionary, and add several pieces manually to the appropriate positions.

[And yes, in this dictionary I stumbled for the first time (from the subsequent php sort cones, despite the correct locale for setlocale and mb_string) that the words in E were suddenly at the end of the dictionary.]

I decided to correct this point by taking additional words, albeit not from a dictionary ready for technical use, but at least from where.

A lot of links led to chyjik.narod.ru/index.htm , but he suddenly found himself already some year into oblivion by the evil Yandex, who bought Narod.ru at one time and broke it in pursuit of ferting.

But then the great web archive helped, for which he thanks.

He pumped out all that was, the whole preserved “crossword writer’s dictionary”, saved it in data / psrc /, wrote parse.php on a regular schedule (which he then corrected several times, because the site was with a person, it seems, MS Word was written, and were not completely identical in layout), - expanded the dictionary of percent by 50%.

2) Factor of chance

The chain was always the same, which is, in general, obvious. To be able to "play" and "suddenly find better," he introduced the randomness factor on mt_rand into the evaluation function. It became more interesting. Sometimes new, shorter chains, which I had never guessed before, were really visible.

Of course, there is a downside: for uncomfortable pairs, there is a search and does not find a chain. Or is a little longer than usual. But still the main case is quite stable.

More specifically, the randomness is introduced “very slightly” - to the comparison function when organizing according to the fitness of the new generation - words having the same assessment of fitness began to be placed in a random order.

Random Items

// " " ( ) usort($mutationChains, function($a, $b) { $diff = $b['score'] - $a['score']; return $diff ? $diff : mt_rand(-1, 1); }); 3) Evaluation function

From MOON, ELEPHANT was quite lively and pretty, within 10 transitions.

But (!)

from the FLY the word SLOGH turned out ... obstinately transitions to 60-70 (!).

But it is obvious that it should be only 1 longer than in the ELEPHANT. Man is obvious. No car, it is according to the algorithm. Algorithm error. Error evaluation function.

I didn’t hide it a lot.

It turned out well by 5 positions to shorten the chain with dubious changes in the alignment. But this is not the result that is needed.

Obviously, the problem was not solved with the summer of adjustment, I thought. What's the matter. What is the difference. The difference in the last letter, yes, a fact. There is a dream, and here is a word. What these letters are so different that everything is so bad ...

Right. Frequency of use! And according to the number of related mutations. That is, “a full hit on G = G” may be worse, or at least not much better for assessing “fitness” than “not hit H, M, K, ...! = G”. But, of course, it is much better than “not hits Y, b, y, ...! = G”.

I took a table of frequencies of use of letters from the wiki. (In fact, this is not entirely correct, it is necessary to count the frequency according to the existing dictionary of nouns, but there would hardly be any cardinal differences). I drove as it is in the code. It’s not very beautiful, yes, but it’s for now. He cut the frequencies of letters into normalized arrays according to vowels and consonants, with normalization by the most commonly used vowel / consonant. Rewrote the evaluation function. THERE IS! ELEPHANT + 1!

Yes, and the ELEPHANT began to get another step or two faster.

Work with letter frequencies

At initialization:

Evaluation function:

public function __construct() { //$this->_mprDictionary = null; $this->_mprDictionary = new DictionaryFileMapper(); $this->_vovels = ""; $this->LoadLetterFrequencies(); } private function LoadLetterFrequencies() { $this->_lettersFrequences = [ '' => 0.10983, '' => 0.08483, '' => 0.07998, '' => 0.07367, '' => 0.06700, '' => 0.06318, '' => 0.05473, '' => 0.04746, '' => 0.04533, '' => 0.04343, '' => 0.03486, '' => 0.03203, '' => 0.02977, '' => 0.02804, '' => 0.02615, '' => 0.02001, '' => 0.01898, '' => 0.01735, '' => 0.01687, '' => 0.01641, '' => 0.01592, '' => 0.01450, '' => 0.01208, '' => 0.00966, '' => 0.00940, '' => 0.00718, '' => 0.00639, '' => 0.00486, '' => 0.00361, '' => 0.00331, '' => 0.00267, '' => 0.00037, '' => 0.00013, ]; // $this->_lettersFrequencesVovel = []; $this->_lettersFrequencesConsonant = []; foreach ($this->_lettersFrequences as $letter => $frequency) { if ($this->IsVovel($letter)) { $this->_lettersFrequencesVovel[$letter] = $frequency; } else { $this->_lettersFrequencesConsonant[$letter] = $frequency; } } // . // , $maxFrequency = reset($this->_lettersFrequencesVovel); foreach ($this->_lettersFrequencesVovel as $letter => &$frequency) { $frequency /= $maxFrequency; } $maxFrequency = reset($this->_lettersFrequencesConsonant); foreach ($this->_lettersFrequencesConsonant as $letter => &$frequency) { $frequency /= $maxFrequency; } } /** * * * @param string $letter - * @return bool */ public function IsVovel($letter) { return false === mb_strpos($this->_vovels, $letter) ? false : true; } Evaluation function:

/** * * * @param string $word - * @param string $comparationWord - * @return int */ public function GetWordScore($word, $comparationWord) { // - , - static $cachedComparationWord = ''; static $wordLen = 0; static $cwLetters = []; if ($cachedComparationWord != $comparationWord) { $cachedComparationWord = $comparationWord; $wordLen = mb_strlen($comparationWord); $cwLetters = []; for ($i = 0; $i < $wordLen; $i++) { $letter = mb_substr($comparationWord, $i, 1); $cwLetters[$i] = [ 'letter' => $letter, 'isVovel' => false === mb_strpos($this->_vovels, $letter) ? false : true, ]; } } $score = 0; for ($i = 0; $i < $wordLen; $i++) { $letter = mb_substr($word, $i, 1); $isVovel = $this->IsVovel($letter); // = 3 if ($letter == $cwLetters[$i]['letter']) { $score += 1; if ($isVovel) { $score += 2 + 1 * $this->_lettersFrequencesVovel[$letter]; } else { $score += 0 + 3 * $this->_lettersFrequencesConsonant[$letter]; } continue; } if ($isVovel) { if ($cwLetters[$i]['isVovel']) { // = 2 $score += 2 + 2 * $this->_lettersFrequencesVovel[$letter]; } else { // = 1 $score += 2 * $this->_lettersFrequencesVovel[$letter]; } } else { if (isset($this->_lettersFrequencesConsonant[$letter])) { $score += 3 * $this->_lettersFrequencesConsonant[$letter]; } } } return round($score); } New numbers for the transformation "fly" - "elephant":

| Configuration | T download dictionaries | T search | T total | Consumption of ram |

|---|---|---|---|---|

| Main dictionary only | 0.04 seconds | 210 sec | 210 sec | 9 MB |

| 1 subdiction | 0.98 seconds | 26.16 seconds | 27.14 seconds | 42 MB |

| 2 subbooks | 1.97 seconds | 9.97 seconds | 11.94 seconds | 72 MB |

| 3 subbooks | 2.98 seconds | 4.72 seconds | 7.70 seconds | 102 MB |

| 4 subbooks | 3.97 seconds | 1.37 seconds | 5.34 seconds | 130 MB |

| 5 sublovie | 4.96 seconds | 1.30 seconds | 6.26 sec | 158 MB |

Chain: "fly" - "muna" - "mine" - "lina" - "lynn" - "lyon" - "zion" - "elephant" (7 transitions).

As you can see, the dictionary (from 0.68 MB to 1.03 MB, + 51%), and with it the dictionaries and the final zhor of the RAM. Combinatorics improved, and even though at each step mutations began to crumble more, which means they began to walk more slowly - the final goal, with reachability, began to be obtained faster, in a smaller number of steps.

However, in terms of time, the search turned out not at all faster, even for 4 subloviers. One factor did not compensate for the other. Nevertheless, in essence, expanding the dictionary is an absolutely correct and necessary move to get closer to real combat conditions. And there are many other crossword dictionaries and dictionaries, including online ones, with which you can work to expand our vocabulary.

2nd version (same PHP 5.4).

*

* Generally, this task seems endless.

* And in this long road to perfection,

* Perhaps, on this patch it is worth taking another smoke break.

*

A certain science activist

DOED ELEPHANT from the FLY:

Inflated, inflated, The

people called out to see .

He wanted to surprise everyone ...

Well, the elephant flew away!

Version three

Honestly, I expected more from the version. Still, the base (it was MySQL 5.5 at hand) should, well, it should have provided a takeoff at least several times, if not by orders of magnitude.

1) Base and speed?

Apparently, in the version with files, I actually achieved the memcache effect — a bunch of dictionaries in memory, and the algorithm, in general, is the same for both file and mysql versions. In the construction of the base I think I think, all the necessary indices for the work have been affixed.

The files themselves slowed me down only at the stage of loading them into the RAM - about 4 seconds. And the search from a molehill was about 0.8 seconds. So overall, the MySQL version wins, with a “load” of about 0.002 seconds and a search of about 0.95 seconds. It’s clear that she doesn’t need to download anything, after one or two past script downloads, the cache is warm and everything is already loaded and waiting.

Base structure

-- -- : `metagram` -- -- -------------------------------------------------------- -- -- `dictionary` -- CREATE TABLE IF NOT EXISTS `dictionary` ( `id` int(11) NOT NULL AUTO_INCREMENT, `name` varchar(255) NOT NULL, `lang` varchar(30) NOT NULL, PRIMARY KEY (`id`), UNIQUE KEY `name` (`name`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8 AUTO_INCREMENT=1 ; -- -------------------------------------------------------- -- -- `word` -- CREATE TABLE IF NOT EXISTS `word` ( `id` int(11) NOT NULL AUTO_INCREMENT, `value` varchar(50) NOT NULL, `description` varchar(255) DEFAULT NULL, `dictionary_id` int(11) NOT NULL, `length` smallint(6) NOT NULL, PRIMARY KEY (`id`), UNIQUE KEY `value` (`value`), KEY `dictionary_id` (`dictionary_id`), KEY `lenght` (`length`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8 AUTO_INCREMENT=1 ; -- -------------------------------------------------------- -- -- `word_pseudo` -- CREATE TABLE IF NOT EXISTS `word_pseudo` ( `id` int(11) NOT NULL AUTO_INCREMENT, `value` varchar(50) NOT NULL, `word_id` int(11) NOT NULL, `deleted_symbol_pos` smallint(6) NOT NULL, PRIMARY KEY (`id`), UNIQUE KEY `value_word_id` (`deleted_symbol_pos`,`value`,`word_id`), KEY `word_id` (`word_id`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8 AUTO_INCREMENT= 1 ; Anyway, the answer in 1 second is better than in 5 seconds.

2) Caching an obvious bottleneck

Initially, however, it was under 2 seconds, because of the plentiful SELECT queries, the word software id. Aggressive caching in the MySQL mapper eliminated this problem. Also, of course, not in an optimal way, but this is still not a highload production, but an experiment. It is quite tolerant at this stage.

Somewhere in the DictionaryMysqlMapper class

... private $_cachedWords; private $_cachedWordKeys; ... /** * * * @param string $key () * @return string|false|null */ public function GetWord($key) { if (isset($this->_cachedWords[$key])) { return $this->_cachedWords[$key]; } $wordRow = $this->_db->FetchOne("SELECT * FROM `word` WHERE `id` = " . $this->_db->QuoteValue($key)); $this->_cachedWords[$key] = $wordRow['value']; return $wordRow['value']; } 3) Tuning population size

And by the way, as a result of the excellently shown evaluation function taking into account the frequencies of the letters, it was possible to reduce the population number in the generation from 100 to 50 without affecting the result. By the way, even the population of 20 showed itself not much worse, but left 50.

This made it possible to significantly reduce the search time. For example, the same flies and elephants from about 1 second to 0.5-0.6 seconds.

So,

3rd version (Again PHP 5.4, but already with MySQL 5.5)

*

* Here, the article itself, according to the raised problem and scope, has become “out of a fly an elephant”)

* It's time to sum up.

*

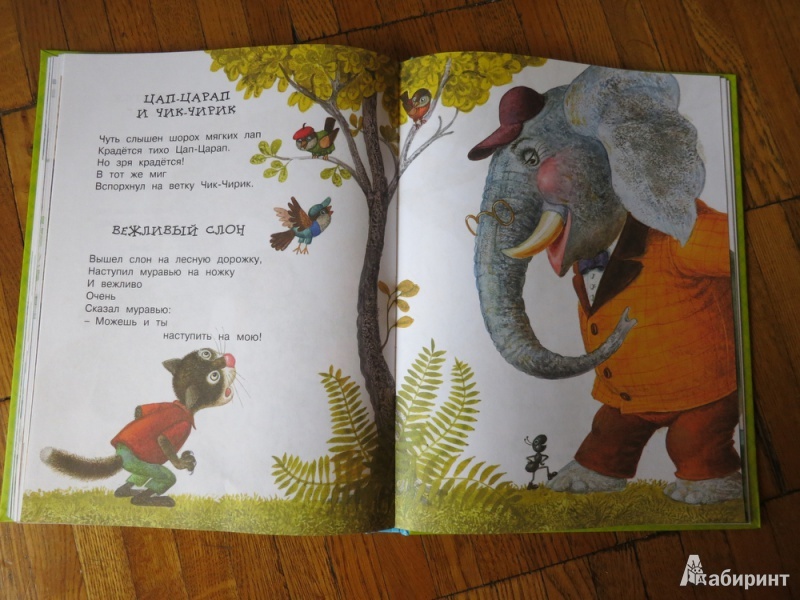

An elephant came out on a forest path,

Came on an ant on a leg

And politely

Very

Told an ant:

- You can

step on mine!

(c) Rinat Mucha, "Polite Elephant"

Results

In the third step, we have a solution for PHP 5.4 + MySQL 5.5, which requires about 0.5 seconds. to turn a fly into an elephant in 9 iterations:

'from' => "" 'to' => "" 'runMode' => "MySQL" 'list' ... '0' => "" '1' => "" '2' => "" '3' => "" '4' => "" '5' => "" '6' => "" '7' => "" '8' => "" 'timeLoad' => ", : 0,008000 ." 'time' => " : 0,551032 ." 'status' => "." The script does not consume as much horse operation as in the version with pure PHP and dictionary files (under 100 MB), the consumption is the most usual. The same data stored in the DBMS, behave more decently for memory appetites.

What's next?

Of course, the solution is not perfect, I know. Much more can be done:

- , : , . , 2-4 . , , .

- ? . . , — . . , , .

- Redis? Memcached?

- N ,

- ? , . .

- CP1251? Utf-8 . , , , . 1 , 2 . .

- JavaScript ? , , — , , .

- C++?

- And certainly other moves that have not yet occurred to the head.

The theme is addictive ... Maybe the story will have a sequel. In any case, I was hooked strongly, no less than Diamond-Square .

PS:

Of course, in this article it is impossible not to leave a link to how artists make an elephant out of a fly .

Comments and comments are welcome! Maybe missed some simple and important things. Thank you for attention.

Source: https://habr.com/ru/post/270845/

All Articles