Why upgrade to Data ONTAP Cluster-Mode?

As I wrote in my previous posts, Data ONTAP v8.3.x is one of the most significant releases of the operating system for NetApp FAS storage systems.

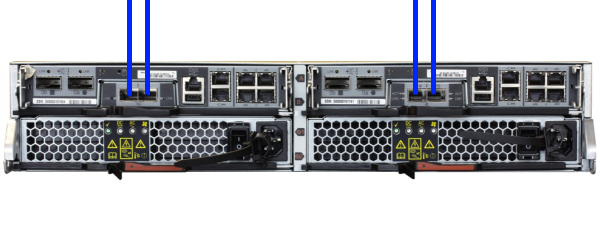

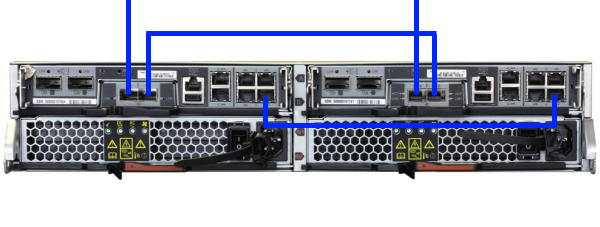

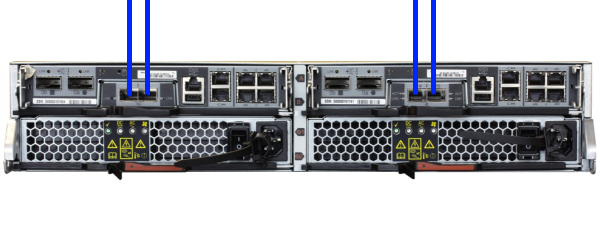

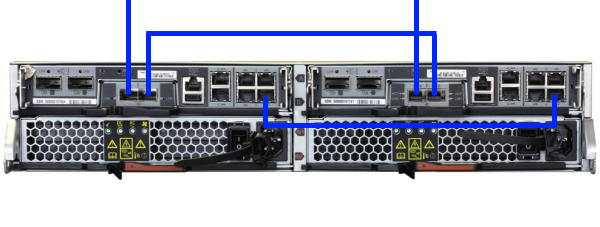

Network connections for FAS2240-2 in 7-Mode (above) and Cluster-Mode (below).

In this article I will present what are, in my view, the most significant new features of NetApp storage systems in the latest version of Clustered Data ONTAP. As is my tradition, here is a car analogy: imagine you own a Tesla, you update its firmware and get Autopilot with autoparking for free, even though it wasn't there before. Nice, isn't it? So the most important arguments for upgrading your system to Cluster-Mode are protecting your investment and getting the most up-to-date functionality on existing hardware:

- Inline zero detection (deduplication of zeros on the fly), which can be very useful for databases and for provisioning virtual machines.

- Inline deduplication for FlashPool (and AFF) systems, which extends the life of SSDs. Available starting from 8.3.2.

- If you upgrade to VMware vSphere 6, you get VVols support over both NAS and SAN.

- QoS: setting a maximum threshold in IOPS or MB/s on files, LUNs, volumes and SVMs.

- NFS 4.1 support, which VMware vSphere 6 also supports.

- pNFS support, which parallelizes NFS access and lets the client switch paths to a file share without remounting it; supported with RHEL 6.4 and later.

- SMB (CIFS) 3.0 support, which works with clients starting from Windows 8 and Windows Server 2012.

- The ability to close SMB 3.0 files and sessions from Data ONTAP.

- SMB 3.0 encryption support.

- SMB Continuous Availability (SMB CA), which lets clients switch between paths and storage controllers without disconnecting; this is very important for SQL Server and Hyper-V workloads.

- ODX, when working with Microsoft SAN/NAS, lets the host offload routine tasks, such as filling a data block with a specific pattern, so that unnecessary data does not travel between the host and the storage.

- Online migration of volumes between aggregates, including aggregates on other cluster nodes.

- Online migration of LUNs between volumes, including volumes on other cluster nodes.

- Online relocation of aggregates between the nodes of an HA pair.

- The ability to combine heterogeneous systems in one cluster. This makes it possible to upgrade without interrupting data access, which is why NetApp calls its cluster Immortal. During a cluster upgrade, its nodes can run different versions of cDOT. I cannot miss the opportunity to mention that most competitors, if they offer clustering at all, are, first, very limited in the number of nodes and, second, require all nodes in the cluster to be identical (a homogeneous cluster).

- ADP StoragePool: a technology for distributing SSDs more efficiently as cache for hybrid aggregates. For example, you have only 4 SSDs and want 2, 3 or 4 aggregates to benefit from SSD caching.

- ADP Root-Data Partitioning lets you do without dedicated root aggregates on FAS22XX / 25XX / 2600 and AFF8XXX / A200 / A300 / A700 systems.

- Space Reclamation for SAN returns freed blocks to the storage. Without SCSI-3 UNMAP, when data blocks on a thin-provisioned LUN were deleted, the storage still marked them as used and they kept occupying disk space, so a thin LUN could only grow: there was no feedback mechanism between the storage and the host. Space Reclamation requires hosts running ESXi 5.1 or later, Windows Server 2012 or later, or RHEL 6.2 or later.

- Adaptive compression improves read performance for compressed data.

- FlexClone improvements for files and LUNs: you can now set deletion policies for file and LUN clones (for example, with VVols).

- Storage administrators can be authenticated via Active Directory (no CIFS license required).

- Kerberos 5 support: 128-bit and 256-bit AES encryption, and IPv6 support.

- SVM DR support (based on SnapMirror), i.e. the ability to replicate an entire SVM to the backup site. An important point is that new network addresses can be preset when the replication relationship is set up (Identity discard mode), since the backup site often uses different network address ranges. Identity discard is very convenient for small companies that cannot afford the equipment and links needed to stretch the L2 domain from the primary site to the backup one. To switch clients to the new addresses, it is enough to update the DNS records (which is easy to automate with a simple script). Identity preserve mode, in which all LIF, volume and LUN settings are retained at the remote site, is also supported.

- The ability to restore a single file or LUN from a SnapVault backup without restoring the entire volume.

- Integration with antivirus systems to scan file shares. Computer Associates, McAfee, Sophos, Symantec, Trend Micro and Kaspersky are supported.

- Optimized FlashPool / FlashCache operation: compressed data and large blocks can now be cached (previously neither type of data made it into the cache).
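To illustrate why Space Reclamation and inline zero detection matter for thin provisioning, here is a toy Python model of a thin LUN. It is a simplification under stated assumptions (fixed 4 KiB blocks, one physical block per written logical block; the class and method names are hypothetical), not how ONTAP implements either feature:

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4096  # assumption: fixed 4 KiB blocks, for illustration only


@dataclass
class ThinLun:
    """Toy thin-provisioned LUN: physical space is consumed only by allocated blocks."""

    allocated: set[int] = field(default_factory=set)  # logical blocks backed by disk space

    def write(self, lba: int, data: bytes) -> None:
        # Inline zero detection (toy version): an all-zero block is not stored,
        # and any space previously held by that block is released.
        if not any(data):
            self.allocated.discard(lba)
        else:
            self.allocated.add(lba)

    def unmap(self, lba: int) -> None:
        # SCSI UNMAP: the host tells the storage that the block is no longer in use.
        self.allocated.discard(lba)

    def used_bytes(self) -> int:
        return len(self.allocated) * BLOCK_SIZE


if __name__ == "__main__":
    lun = ThinLun()
    for lba in range(10):
        lun.write(lba, b"\x01" * BLOCK_SIZE)  # host writes 10 data blocks
    lun.write(10, bytes(BLOCK_SIZE))  # all-zero block: no space consumed
    print(lun.used_bytes())  # 40960
    # The host deletes files occupying blocks 0-4. Without UNMAP the storage is
    # never told, so used space would stay at 40960; with UNMAP it shrinks.
    for lba in range(5):
        lun.unmap(lba)
    print(lun.used_bytes())  # 20480
```

In this model, skipping the `unmap` calls leaves `used_bytes()` unchanged after the host deletes data, which is exactly the "thin LUN can only grow" behavior described above.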

FAS22XX Update

First, you must have a 10Gbit mezzanine adapter. If you have an FC mezzanine card, you will need to replace it with a 10Gbit one and switch to iSCSI.

Second, be prepared to give up one 10Gbit port on each controller to the cluster interconnect; this is the required minimum. You can allocate one or two 10Gbit ports per controller. If you allocate only one, it is worth also giving a 1Gbit port to the cluster interconnect, although this is no longer a requirement. In other words, the upgrade may require changes to your network connection design.

If you already use an Active-Passive configuration, ADP Root-Data Partitioning lets you reclaim the 3-4 disks previously dedicated to the root aggregate.
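To make the port budget concrete, here is a minimal Python sketch of how one FAS22XX controller's ports might be split after the conversion, following the rules above. The port names (onboard 1GbE `e0a`-`e0d`, 10GbE mezzanine `e1a`-`e1b`) and the helper `plan_ports` are hypothetical, for illustration only; check the actual port layout and supported cluster-port configurations for your model and ONTAP release.

```python
def plan_ports(ports_1g, ports_10g, interconnect_10g=1, add_1g_to_interconnect=True):
    """Split one controller's ports into cluster-interconnect and data roles.

    Hypothetical helper that encodes the rules described in the text; not an ONTAP tool.
    """
    if not 1 <= interconnect_10g <= 2:
        raise ValueError("allocate one or two 10GbE ports to the cluster interconnect")
    if len(ports_10g) < interconnect_10g:
        raise ValueError("not enough 10GbE ports: a 10GbE mezzanine card is required")
    cluster = list(ports_10g[:interconnect_10g])
    data_10g = list(ports_10g[interconnect_10g:])
    data_1g = list(ports_1g)
    # With a single 10GbE interconnect port, also dedicating a 1GbE port is
    # recommended, although no longer required.
    if interconnect_10g == 1 and add_1g_to_interconnect and data_1g:
        cluster.append(data_1g.pop(0))
    return {"cluster": cluster, "data_10g": data_10g, "data_1g": data_1g}


if __name__ == "__main__":
    # Hypothetical FAS2240 controller: four onboard 1GbE ports, two 10GbE mezzanine ports.
    print(plan_ports(["e0a", "e0b", "e0c", "e0d"], ["e1a", "e1b"]))
    # {'cluster': ['e1a', 'e0a'], 'data_10g': ['e1b'], 'data_1g': ['e0b', 'e0c', 'e0d']}
```

With the defaults, each controller keeps a single 10GbE port (`e1b` here) for host traffic.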

Why it makes sense to upgrade FAS22XX to cDOT

Instead of four 10Gbit ports on two controllers in 7-Mode, Cluster-Mode leaves two 10Gbit ports for host connectivity: one port per controller.

First, fault tolerance does not suffer: if a link or controller fails, LIFs move online, together with their IP and MAC addresses, to the surviving controller. With iSCSI, the host simply switches to another path to the storage at the multipathing driver level (SAN LIFs do not migrate; multipathing handles the failover instead).

Second, one user ran a synthetic load test with 48 x 10k SAS disks and could not push two of the four links beyond 40% utilization; the other two links were idle anyway. In normal operation, the same user's link utilization stayed below 10%. Another customer runs cDOT 8.3.1 on a FAS2240 with 20 x 900 GB disks + 4 SSDs in an Active-Passive configuration (i.e. only one 10Gbit link is actually used, while the second waits passively for an emergency) and has noticed no degradation in storage access speed after moving to Cluster-Mode, even though its entire, fairly large, virtual infrastructure runs on this storage.
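The argument above is simple arithmetic. As a rough sketch, assuming all links run at the same speed and the load spreads evenly across the remaining host-facing links (in practice the distribution depends on LIF placement and host multipathing), the utilization measured on the 7-Mode links can be projected onto fewer Cluster-Mode links. The function name and the even-spread model are assumptions for illustration:

```python
def projected_utilization(measured, link_gbit, target_links):
    """Project per-link utilization after moving the measured load onto fewer links.

    measured: utilization of each existing link as a fraction (0.4 means 40%).
    Assumes every link runs at link_gbit and the load spreads evenly (a simplification).
    """
    if target_links <= 0:
        raise ValueError("target_links must be positive")
    total_gbit = sum(u * link_gbit for u in measured)
    return total_gbit / (target_links * link_gbit)


if __name__ == "__main__":
    # Synthetic test from the text: two of four 10Gbit links at 40%, two idle.
    peak = [0.40, 0.40, 0.0, 0.0]
    print(f"{projected_utilization(peak, 10, 2):.0%}")  # one host link per controller: 40%
    print(f"{projected_utilization(peak, 10, 1):.0%}")  # only one link left: 80%
```

Under this even-spread assumption, the synthetic peak would occupy about 40% of each of the two remaining 10Gbit host links; normal-mode traffic, which stayed under 10% per link, projects far lower.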

Viewing the current Ethernet port load in 7-Mode

The field of most interest is Bytes/second in the RECEIVE and TRANSMIT sections:

```
system1> ifstat e1a

-- interface e1a (8 days, 20 hours, 10 minutes, 27 seconds) --

RECEIVE
 Frames/second: 12921 | Bytes/second: 46621k | Errors/minute: 0
 Discards/minute: 0 | Total frames: 11134k | Total bytes: 38471m
 Total errors: 0 | Total discards: 0 | Multi/broadcast: 0
 No buffers: 0 | Non-primary u/c: 0 | Bad UDP cksum 0
 Good UDP cksum 2044 | Redo UDP cksum 0 | Bad TCP cksum 0
 Good TCP cksum 0 | Redo TCP cksum 0 | Tag drop: 0
 Vlan tag drop: 0 | Vlan untag drop: 0 | Mac octets 9472k
 UCast pkts: 72750k | MCast pkts: 187k | BCast pkts: 15181
 CRC errors: 0 | Bus overrun: 0 | Alignment errors: 0
 Long frames: 0 | Jabber: 0 | Pause frames: 0
 Runt frames: 0 | Symbol errors: 0 | Jumbo frames: 42959k
TRANSMIT
 Frames/second: 12457 | Bytes/second: 2936k | Errors/minute: 0
 Discards/minute: 0 | Total frames: 10710k | Total bytes: 2528m
 Total errors: 0 | Total discards: 0 | Multi/broadcast: 971
 Queue overflows: 0 | No buffers: 0 | Frame Queues: 0
 Buffer coalesces: 0 | MTUs too big: 0 | HW UDP cksums: 0
 HW TCP cksums: 0 | Mac octets: 110k | UCast pkts: 974
 MCast pkts 4 | BCast pkts: 967 | Bus underruns: 0
 Pause fraMes: 0 | Jumbo frames: 0
LINK_INFO
 Current state: up | Up to downs: 1 | Speed: 10000m
 Duplex: full | Flowcontrol: full
```

When upgrading your storage system from 7-Mode to Cluster-Mode by moving the data to a temporary storage system and then migrating it back online, you can make do with improvised switches.

Upgrading 32XX and 6XXX Systems

Upgrading older systems, as a rule, does not change the storage system's network connectivity, since they either already have 10Gbit ports for the cluster interconnect on board, or such ports can be added in a free PCI slot.

32XX systems have no onboard 10Gbit ports, but they can be added in a free PCI slot.

6XXX systems already have onboard 10Gbit ports; you can use them or, if none are free, you will need to purchase a 10Gbit NIC.

Findings

The ability to upgrade your old NetApp storage systems protects your investment. Even though FAS22XX systems lose one 10Gbit port per controller, the extensive additional functionality makes the upgrade well worth the trouble. For older systems, the conversion, as a rule, requires no changes to network connectivity at all. With many other reputable vendors, an upgrade usually means replacing all the hardware, including the disk shelves. NetApp is much more flexible here: old hardware can run the newest firmware, all disk shelves from older storage systems can be connected, and disk shelf compatibility makes no distinction between the Low / Mid / High-End classes. The Immortal cluster lets you combine different models and scale vertically and horizontally, as well as update and upgrade, without downtime.

Please report errors in the text via private message.

Comments, additions and questions about the article, on the other hand, are welcome in the comments.

Source: https://habr.com/ru/post/270625/
