“Big data” - is it boring?

We continue our story about the Big Data development methodologies used at MegaFon (the first part of the article is here). Every day brings new challenges that require new solutions, so our development practices are constantly being refined.

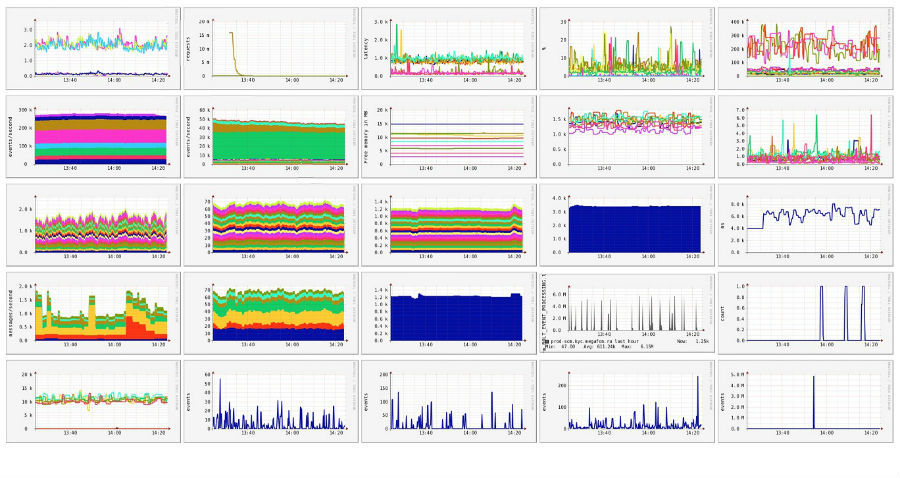

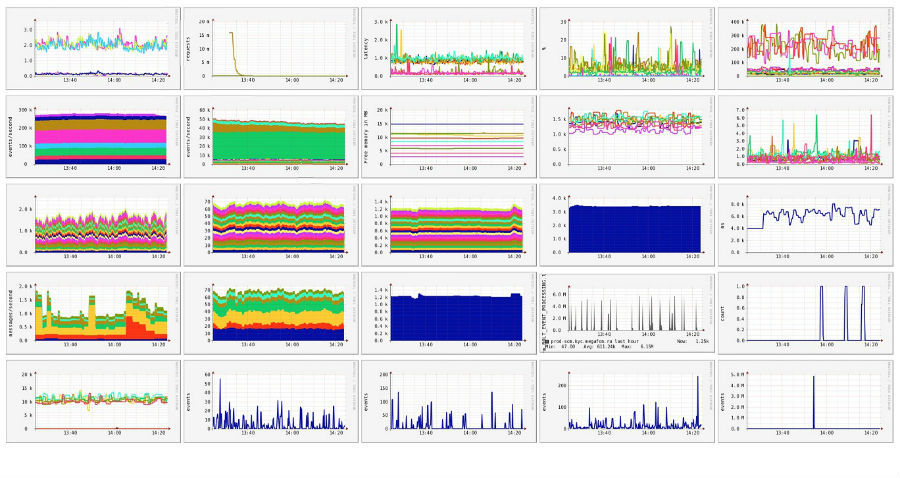

How we work now

Continuous delivery

In practice, Kanban requires delivering results with extremely rapid feedback. These requirements are met by Continuous Delivery (CD), which can be visualized as a conveyor.

The development methodology we adopted implies very short iterations, so the CD pipeline is automated as far as possible: all three testing stages run without human intervention on the continuous integration server. The server picks up all changes made at the “Development” stage, applies them, runs the tests and then reports whether they passed. The final stage, “Deployment”, is also automatic for developer test environments, while deployment to user-facing environments requires a manual command.
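The gating logic described above can be sketched as follows. This is a minimal Python illustration, not the team's actual CI configuration: the `Pipeline` class, the stage functions and the target name `dev-test` are hypothetical, and the three stage names are placeholders because the source does not name them.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# A test stage takes a change identifier and returns True if it passes (hypothetical signature).
Stage = Callable[[str], bool]

@dataclass
class Pipeline:
    test_stages: List[Stage]
    report: List[str] = field(default_factory=list)

    def run(self, change: str, target: str, manual_command: bool = False) -> bool:
        """Run every test stage, then deploy.

        Deployment to developer test environments is automatic; any other
        (user-facing) target is deployed only after a manual command.
        """
        for stage in self.test_stages:
            passed = stage(change)
            self.report.append(f"{stage.__name__}: {'passed' if passed else 'FAILED'}")
            if not passed:
                return False  # stop at the first failing stage
        if target != "dev-test" and not manual_command:
            self.report.append(f"deploy to {target}: waiting for manual command")
            return False
        self.report.append(f"deploy to {target}: done")
        return True

# Placeholder stages standing in for the three automated test stages.
def unit_tests(change: str) -> bool:
    return True

def integration_tests(change: str) -> bool:
    return True

def acceptance_tests(change: str) -> bool:
    return True

pipeline = Pipeline([unit_tests, integration_tests, acceptance_tests])
pipeline.run("change-42", "dev-test")    # True: deployed automatically
pipeline.run("change-42", "production")  # False: waits for a manual command
```

The report list plays the role of the CI server's pass/fail report; keeping the manual step as an explicit flag makes the one human decision in the pipeline visible in code.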

For a single application (a simple website, for example), the CD process poses no difficulties. Platform development is different: a platform can host many different applications. Testing every change is harder still on a data processing platform, because getting accurate results would require loading a couple of dozen terabytes of data. That would significantly lengthen the continuous integration cycle, so the work has to be split into smaller tasks that are tested on small volumes of data.
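One common way to get small but representative test data, shown here as an assumption rather than MegaFon's documented approach, is deterministic hash-based sampling by key. Every record of a given (hypothetical) `subscriber` key is either kept or dropped as a whole, so joins and per-subscriber aggregations still see complete groups, and the same sample is reproduced on every CI run.

```python
import hashlib
from typing import Dict, Iterable, Iterator

def in_sample(key: str, fraction: float) -> bool:
    """Deterministically decide whether a key belongs to the sample."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Take 53 bits so the float is exact and strictly below 1.0.
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < fraction

def sample(records: Iterable[Dict], key_field: str, fraction: float) -> Iterator[Dict]:
    """Yield only the records whose key falls into the sample."""
    for record in records:
        if in_sample(str(record[key_field]), fraction):
            yield record

# Hypothetical records: 10 subscribers with 10 records each.
records = [{"subscriber": i % 10, "bytes": i} for i in range(100)]
small = list(sample(records, "subscriber", 0.3))  # every kept subscriber keeps all 10 records
```

Sampling by key rather than by row is the design choice that matters here: random row sampling would leave half-populated groups and make aggregate results in tests meaningless.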

Deliverables

What exactly is delivered through the CD process:

• Scheduled (batch) processes running on Hadoop (ETL).

• Real-time analytical services.

• Interfaces for consumers of analytics results.

A typical business requirement touches all three kinds of deliverables: both scheduled and online (real-time) processes have to be adjusted, and interfaces have to be built to provide access to the results. The variety of interfaces significantly complicates development, since every one of them must be tested as part of the CD process.

Continuous integration

Product testability is one of the core requirements of the CD methodology. To meet it, we built a toolkit that automatically tests deliverables on a developer's machine, on the continuous integration server and in the acceptance testing environment. For example, for processes written in Apache Pig, we developed a Maven plugin that lets developers test Pig scripts locally as if they were running on a large cluster. This saves a lot of time.
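The plugin itself is not described in detail, so the following only illustrates the idea of testing ETL logic locally on a tiny fixture before it reaches the cluster. It uses Python's `unittest`; the Pig script quoted in the comment and the `count_calls_per_cell` function are hypothetical. (For Pig specifically, Apache Pig ships PigUnit and a local execution mode, `pig -x local`, which serve a similar purpose.)

```python
import unittest
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical Pig step whose logic is mirrored here:
#   calls   = LOAD 'calls' AS (cell_id:chararray, subscriber:chararray);
#   grouped = GROUP calls BY cell_id;
#   counts  = FOREACH grouped GENERATE group, COUNT(calls);

def count_calls_per_cell(calls: Iterable[Tuple[str, str]]) -> Dict[str, int]:
    """In-memory equivalent of the GROUP BY / COUNT step above."""
    return dict(Counter(cell_id for cell_id, _subscriber in calls))

class CountCallsPerCellTest(unittest.TestCase):
    def test_small_fixture(self):
        fixture = [("cell-1", "a"), ("cell-1", "b"), ("cell-2", "a")]
        self.assertEqual(count_calls_per_cell(fixture), {"cell-1": 2, "cell-2": 1})

    def test_empty_input(self):
        self.assertEqual(count_calls_per_cell([]), {})
```

Tests like these run in seconds with `python -m unittest`, on a laptop or on the CI server, because they need only a handful of rows.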

We also developed our own installer. It is implemented as a Groovy DSL that makes it easy to state, clearly and readably, where each deliverable should go. All information about the available environments (test, preproduction and production) is stored in a configuration service we built ourselves, which acts as an intermediary between the installer and the environments.
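The real installer is a Groovy DSL; as a rough Python analogue, a fluent builder can express "this deliverable goes to these servers" while host lists are looked up in a configuration service. Everything here is hypothetical: `CONFIG_SERVICE` is a plain dictionary standing in for the real service, and the environment, role, host and artifact names are invented.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical stand-in for the configuration service: environment -> role -> hosts.
CONFIG_SERVICE: Dict[str, Dict[str, List[str]]] = {
    "test":          {"etl": ["test-etl-1"], "web": ["test-web-1"]},
    "preproduction": {"etl": ["pre-etl-1"], "web": ["pre-web-1"]},
    "production":    {"etl": ["prod-etl-1", "prod-etl-2"], "web": ["prod-web-1"]},
}

@dataclass
class Installer:
    environment: str
    plan: List[Tuple[str, str]] = field(default_factory=list)

    def deliver(self, artifact: str, to_role: str) -> "Installer":
        """Schedule an artifact for every host that has the given role in this environment."""
        hosts = CONFIG_SERVICE[self.environment][to_role]  # KeyError for unknown env/role
        self.plan.extend((artifact, host) for host in hosts)
        return self  # returning self lets calls chain, DSL-style

plan = (Installer("production")
        .deliver("etl-jobs.jar", "etl")
        .deliver("dashboard.war", "web")
        .plan)
# plan == [("etl-jobs.jar", "prod-etl-1"), ("etl-jobs.jar", "prod-etl-2"), ("dashboard.war", "prod-web-1")]
```

Because hosts are resolved through the lookup rather than hard-coded, the same deployment description can target test, preproduction or production by changing only the environment name.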

Once the deliverables are deployed, automated acceptance testing runs. It simulates the full range of user actions, such as moving the mouse cursor and clicking links in interfaces and on web pages; in other words, the system's correctness is verified from the user's point of view. In effect, business requirements are captured precisely in the form of acceptance tests. Deliverables also undergo automated load testing to confirm that they meet performance requirements, and a dedicated environment is allocated for it.
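For the load-testing part, a pass/fail decision boils down to comparing a latency percentile with the agreed requirement. The sketch below assumes the requirement is phrased as "the p-th percentile latency must not exceed a limit"; the function names, the nearest-rank method and the sample latencies are illustrative choices, not taken from the source.

```python
import math
from typing import Sequence

def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank, always >= 1
    return ordered[rank - 1]

def meets_requirement(latencies_ms: Sequence[float], p: float, limit_ms: float) -> bool:
    """True if the p-th percentile latency is within the limit."""
    return percentile(latencies_ms, p) <= limit_ms

# Hypothetical measurements from one load-test run, in milliseconds.
latencies = [12.0, 15.5, 11.2, 30.1, 14.8, 13.3, 95.0, 16.2, 12.9, 14.1]
meets_requirement(latencies, 90, 50.0)   # True: p90 is 30.1 ms
meets_requirement(latencies, 100, 50.0)  # False: the slowest request took 95.0 ms
```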

The next step is static analysis of the code for style issues and typical coding errors. The code must be correct from the compiler's point of view and free of logical errors, poor naming and other style flaws. Every developer cares about code quality, but in our field applying such analysis to deliverables is not a standard step.
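The tools used for this step are not named. To illustrate what a style check of this kind does, here is a small checker built on Python's standard `ast` module that flags function and parameter names that are not snake_case. The rule and the `naming_issues` function are assumptions for illustration, and it inspects Python source only so the example stays self-contained.

```python
import ast
import re
from typing import List

# Assumed style rule: function and parameter names must be snake_case
# (leading underscores and dunder names such as __init__ are allowed).
SNAKE_CASE = re.compile(r"^_{0,2}[a-z][a-z0-9_]*$")

def naming_issues(source: str) -> List[str]:
    """Return one message per function or parameter name that breaks the rule."""
    issues = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            params = node.args.posonlyargs + node.args.args + node.args.kwonlyargs
            for name in [node.name] + [p.arg for p in params]:
                if not SNAKE_CASE.match(name):
                    issues.append(f"line {node.lineno}: bad name '{name}'")
    return issues

code = "def LoadData(x):\n    return x\n\ndef clean_rows(Rows):\n    return Rows\n"
print(naming_issues(code))
# ["line 1: bad name 'LoadData'", "line 4: bad name 'Rows'"]
```

In a CI pipeline, a non-empty result would fail the build, the same way a failing test does.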

Infrastructure

Once testing completes successfully, the deployment phase begins. When deliverables are rolled out to commercial operation, the infrastructure is managed automatically, without human intervention. Our fleet consists of more than 200 servers, and their configuration is managed with Puppet. It is enough to physically mount a server in the rack and specify which environment it should connect to and what its role is; everything else happens automatically: downloading the settings, installing the software, joining the cluster and starting the server components for the assigned role. This approach lets us add or remove servers by the dozen rather than one at a time.
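Puppet manifests are not shown in the article. As a language-neutral model of the workflow just described, the Python sketch below derives every provisioning step from only two inputs, the environment and the role, which is what makes adding or removing servers in batches practical. The role catalog, component names and hostnames are hypothetical examples, and this is not Puppet code.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical role catalog: which components each server role runs.
ROLES: Dict[str, List[str]] = {
    "worker": ["hdfs-datanode", "yarn-nodemanager"],
    "monitoring": ["ganglia-gmetad", "nagios"],
}

@dataclass
class Server:
    hostname: str
    environment: str
    role: str

def provision(server: Server) -> List[str]:
    """Ordered steps a freshly racked server goes through, given only environment and role."""
    if server.role not in ROLES:
        raise ValueError(f"unknown role: {server.role}")
    steps = [f"fetch settings for {server.environment}/{server.role}",
             "install packages",
             f"join {server.environment} cluster"]
    steps += [f"start {component}" for component in ROLES[server.role]]
    return steps

def provision_batch(servers: List[Server]) -> Dict[str, List[str]]:
    """Provision many servers at once; nothing is configured by hand per server."""
    return {s.hostname: provision(s) for s in servers}

batch = provision_batch([Server(f"node-{i}", "uat", "worker") for i in range(12)])
```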

Our environment layout is simple:

• Worker nodes running on ordinary bare-metal (“iron”) machines.

• Cloud virtual machines for auxiliary tasks that do not need much capacity, such as metadata management, the artifact repository and monitoring.

With this approach, auxiliary tasks do not consume the capacity of physical servers, and the supporting services are well protected against failures because their virtual machines restart automatically. At the same time, worker nodes are not single points of failure and can be replaced or reconfigured without serious consequences. You often hear about platforms that rely on a cloud ecosystem for building clusters, but for “heavy” analytical workloads on large data volumes the cloud is less cost-effective. Our scheme saves on infrastructure costs, because a regular, non-virtualized machine performs I/O on local disks more efficiently.

Each environment consists of a slice of the virtual machine cloud plus a number of worker nodes. We also have on-demand test environments built from virtual machines, which are deployed temporarily for specific tasks; these can even be created on a developer's local machine. We use Vagrant to deploy the virtual machines automatically.

Besides the development test environments, we maintain three key environments:

• Acceptance testing environment: UAT.

• Load testing environment: Performance.

• Commercial operation environment: Production.

Moving worker nodes from one environment to another takes a matter of hours. It is a simple process that requires minimal human intervention.

For monitoring we use the distributed system Ganglia; logs are aggregated in Elastic, and Nagios handles alerting. All of this is shown on a video wall made of large TVs driven by Raspberry Pi microcomputers, each of which is responsible for its own fragment of one large shared image. It is an effective and very affordable solution: the wall gives an overall visual picture of the state of the environments and of the continuous delivery process. A single glance tells you exactly how development is progressing and how the services are doing in production.
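Having each Raspberry Pi draw its own fragment of one large image comes down to splitting the image into a grid of rectangles. A minimal sketch, assuming a regular grid of identical screens; the function name, grid size and resolution below are hypothetical, since the article does not give them.

```python
from typing import List, Tuple

Tile = Tuple[int, int, int, int]  # (x, y, width, height) within the large image, in pixels

def wall_tiles(image_w: int, image_h: int, cols: int, rows: int) -> List[Tile]:
    """Split one large image into cols x rows fragments, listed row by row.

    Any leftover pixels go to the last column and last row, so the tiles
    cover the whole image with no gaps or overlaps.
    """
    if cols <= 0 or rows <= 0:
        raise ValueError("grid must have at least one column and one row")
    base_w, base_h = image_w // cols, image_h // rows
    tiles = []
    for r in range(rows):
        for c in range(cols):
            x, y = c * base_w, r * base_h
            w = image_w - x if c == cols - 1 else base_w
            h = image_h - y if r == rows - 1 else base_h
            tiles.append((x, y, w, h))
    return tiles

# Hypothetical wall: 2 x 2 screens showing one 3840 x 2160 dashboard.
for pi_index, tile in enumerate(wall_tiles(3840, 2160, 2, 2)):
    print(pi_index, tile)
# 0 (0, 0, 1920, 1080)
# 1 (1920, 0, 1920, 1080)
# 2 (0, 1080, 1920, 1080)
# 3 (1920, 1080, 1920, 1080)
```

Each Pi then only needs its own index to know which rectangle of the shared dashboard to crop and display.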

Metrics

We process more than 500,000 messages per second, and for 50,000 of them the system responds in under a second. The accumulated analytical data store occupies about 5 petabytes and is expected to grow to 10 petabytes.
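To put these figures in perspective, here is a quick back-of-the-envelope calculation. It assumes, for simplicity, a constant rate of exactly 500,000 messages per second, so the daily total is a lower bound.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def daily_volume(msgs_per_sec: int) -> int:
    """Number of messages per day at a constant rate."""
    return msgs_per_sec * SECONDS_PER_DAY

total_rate, realtime_rate = 500_000, 50_000
print(f"{daily_volume(total_rate):,} messages per day")                 # 43,200,000,000 messages per day
print(f"{realtime_rate / total_rate:.0%} answered in under a second")   # 10% answered in under a second
```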

Each server sends an average of 50 metrics per minute to the monitoring system. More than 1,600 indicators are checked against permissible thresholds and raise alerts when they go out of range.
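With more than 200 servers each sending about 50 metrics a minute, monitoring receives on the order of 10,000 readings per minute, and each monitored indicator has to be classified against its thresholds. The sketch below returns the standard Nagios plugin status codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). The simple "alert when the value exceeds the threshold" rule, the hostnames and the thresholds are assumptions; real Nagios plugins support a richer range syntax.

```python
from typing import Optional

# Nagios plugin status codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

def check_threshold(value: Optional[float], warn: float, crit: float) -> int:
    """Classify one reading where higher values are worse (e.g. disk usage in %)."""
    if value is None:
        return UNKNOWN  # no reading received
    if value > crit:
        return CRITICAL
    if value > warn:
        return WARNING
    return OK

# Hypothetical disk-usage readings checked against warn=80%, crit=90%.
readings = {"node-1": 42.0, "node-2": 85.5, "node-3": 97.1, "node-4": None}
statuses = {host: check_threshold(v, 80, 90) for host, v in readings.items()}
# statuses == {"node-1": 0, "node-2": 1, "node-3": 2, "node-4": 3}
```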

Using analysis results

Big Data is an invaluable source of information: by turning numbers into knowledge, you can develop new products for subscribers, improve existing ones and respond quickly to changing circumstances, for example to shifts in user behavior patterns.

Here are just a few examples from the fairly long list of Big Data applications:

• Geospatial analysis of network load distribution: where, how and why network load increases.

• Behavioral analysis of devices on the cellular network.

• Trends in the appearance of new devices on the network.
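As an illustration of the first item, network load can be aggregated on a simple latitude/longitude grid to see where it concentrates. This is a sketch under stated assumptions: each event is assumed to already carry a coordinate (for example, the location of the serving base station), the square-degree grid is a simplification of a proper spatial index, and all names and coordinates are hypothetical.

```python
import math
from collections import Counter
from typing import Iterable, Tuple

def grid_cell(lat: float, lon: float, cell_deg: float) -> Tuple[int, int]:
    """Map a coordinate to the index of a square lat/lon grid cell."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))

def load_by_cell(events: Iterable[Tuple[float, float]], cell_deg: float = 0.01) -> Counter:
    """Count network events per grid cell to see where load concentrates."""
    return Counter(grid_cell(lat, lon, cell_deg) for lat, lon in events)

events = [(55.7, 37.6), (55.8, 37.6), (56.3, 37.6)]  # hypothetical (lat, lon) pairs
load = load_by_cell(events, cell_deg=0.5)
# load.most_common(1) == [((111, 75), 2)]
```

Comparing such per-cell counts across hours or days is one way to answer the "where and when" part of the question; the "why" still needs domain analysis on top.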

In particular, we use the results of Big Data analysis for radio planning and for modernizing our own network infrastructure. We have also built a number of services that provide various analytics in real time.

In November 2013 we launched a geospatial analysis service for urban flows, including pedestrians and public transport, on the open market: a first for the Russian telecommunications market. There are only a handful of such non-GPS-based commercial services in the world.

Team

A few words about our team. In addition to the R&D team, which develops the services, we have a DevOps team responsible for keeping all solutions up and running.

Alongside the customers, they take part in defining tasks and propose their own improvements for each service. They also set quality and functionality requirements for development and take part in testing and acceptance. You can read more about this area here.

Our Moscow office has been covered repeatedly in various publications (for example, here), but it is worth noting that our developers, DevOps engineers and analysts work remotely from each other, in Moscow, Nizhny Novgorod and Yekaterinburg. To keep the process from suffering, we use many paid and free tools that make everyone's life much easier.

Slack helps us a lot with communication, both within the development team and with contractors. It is something of a fashionable hipster trend these days, but as a communication tool it is very, very good. We have also moved to an in-house GitLab and integrated all our processes with Jira and Confluence. All offices share a uniform development standard, uniform rules for defining tasks and a unified approach to providing employees with equipment and everything else they need for work.

Over time we take on more and more tasks, so our team keeps growing with new professionals who can contribute in various areas. Working with Big Data at a large telecommunications operator is an interesting and ambitious challenge, and we are optimistic about the future: there are many interesting things ahead of us.

P.S. On the same rails

Subsidiaries of Russian Railways have received a test version of the passenger traffic analysis service developed by MegaFon. The tool helps determine the size and detailed characteristics of the passenger transportation market. The commercial launch of the project is expected in 2016.

The service offered by MegaFon enables Russian Railways to manage passenger traffic: to encourage people to buy tickets in particular directions and to analyze rises and falls in sales and in car occupancy. Analyzing this information makes it possible to make adjustments quickly: vary ticket prices (for example, making them attractive at certain times of day or during the low season) and optimize the train schedule (adding extra trains at peak hours and, conversely, removing trains that are not in demand at certain hours).

For example, the MegaFon service analyzed the Moscow-Volgograd-Moscow route during this year's May holidays: demand grew by 6.8% compared with the same period last year. At the same time, the service showed that the Moscow-Volgograd route lost 8.3% of its regular customers over the past year.
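The two figures above are a year-over-year change and a share of lost regular customers. The article does not say how they are computed, so the formulas below are a plausible reading only; the trip counts and anonymized customer IDs are hypothetical, chosen so that the outputs match the quoted percentages.

```python
def pct_change(current: float, previous: float) -> float:
    """Year-over-year change, in percent."""
    return (current - previous) / previous * 100

def regular_customer_loss(last_year: set, this_year: set) -> float:
    """Percentage of last year's regular customers not seen on the route this year."""
    if not last_year:
        return 0.0
    return len(last_year - this_year) / len(last_year) * 100

# Hypothetical data: 1,000 trips last year vs 1,068 this year; customer IDs are invented.
print(round(pct_change(1068, 1000), 1))                                          # 6.8
print(round(regular_customer_loss(set(range(1000)), set(range(83, 1500))), 1))  # 8.3
```

How a "regular customer" is defined (for example, a minimum number of trips on the route) is not stated in the source and would change the second figure considerably.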

MegaFon estimates that transport companies in Russia spend more than 1.2 billion rubles a year on such research. On their own, the companies can collect only part of the relevant data, whereas the cellular operator's service shows the whole picture of the market; thanks to this, a carrier can increase its share of the overall passenger traffic market by 1.5–2%, which amounts to billions of rubles.

Source: https://habr.com/ru/post/270515/
