Half a Century to "Universal Machine Languages" (1966–2016): Past, Present, Future

Past

The story can start in 1962, when work began at the University of Cambridge on the CPL ("Cambridge Programming Language") - an "improved version" of ALGOL-60. Postgraduate student Martin Richards joined the work on the language; the main difficulty in the implementation of the new PL seemed to him the need to manually port the compiler for different computer platforms. In particular, when Cambridge EDSAC-2 was replaced with Atlas-2 , the CPL developers spent a lot of time porting their compiler to the new platform.

Martin's dissertation was devoted to the “self-compiled” CPL: the compiler developed by Martin was written in a strongly simplified version of CPL, the compiler of which was not hard to write on the macro-assembler at that time. Transferring the CPL to the new platform can now be performed in two steps:

- Manually writing a “simplified CPL” compiler;

- Compile them with the “full CPL” compiler.

Martin didn’t stop there, and he developed BCPL , a system for developing portable compilers. The BCPL compiler generated pseudocode , called Martin "OCODE" .

OCODE looked like this:

(To save space, command sequences are written in one line. Martin does the same thing in his BCPL manual.)

')

BCPL Source Code:

Among the “universal machine languages”, OCODE is unique in that the labels in it are determined by special instructions — that is, To interpret the program, you must first load it all into memory, and find labels in it.

- and a separate program, a code generator , turned the file with such pseudo-code into an executable program for the final processor. OCODE was saved as a text file of decimal numbers separated by spaces and line breaks: while OCODE was developed, binding the file format to a specific byte size would limit the portability of such a file.| OCODE | "Decoding" ("procode") | |

|---|---|---|

94 5 L1 83 73 69 86 69 95 4 42 0 42 0 40 2 14 83 42 0 42 1 40 2 14 83 42 2 40 3 42 1 15 92 85 L5 90 L6 42 1 40 4 40 2 14 83 40 4 42 1 14 80 4 90 5 40 4 40 5 88 L6 91 4 42 2 40 3 42 1 15 92 85 L7 90 L8 40 4 40 2 14 8 87 L9 40 4 42 2 11 92 85 L11 90 L10 42 0 40 6 40 2 14 83 40 4 40 6 14 80 6 90 L11 40 6 40 3 22 86 L10 91 6 90 L9 40 4 42 1 14 80 4 90 L7 40 4 40 5 88 L8 91 4 97 103 0 | ENTRY 5 L1 'S' 'I' 'E' 'V' 'E' SAVE 4 Ln 0 LN 0 LP 2 PLUS STIND LN 0 LN 1 LP 2 PLUS STIND Ln 2 LP 3 LN 1 MINUS STORE JUMP L5 LAB L6 LN 1 LP 4 LP 2 PLUS STIND LP 4 LN 1 PLUS SP 4 LAB L5 LP 4 LP 5 ENDFOR L6 STACK 4 LN 2 LP 3 LN 1 MINUS STORE JUMP L7 LAB L8 LP 4 LP 2 PLUS RV JF L9 LP 4 LN 2 MULT STORE JUMP L11 LAB L10 LN 0 LP 6 LP 2 PLUS STIND LP 4 LP 6 PLUS SP 6 LAB L11 LP 6 LP 3 LS JT L10 STACK 6 LAB L9 LP 4 LN 1 PLUS SP 4 LAB L7 LP 4 LP 5 ENDFOR L8 STACK 4 RTRN ENDPROC 0 |

')

BCPL Source Code:

LET sieve (workvec, vecsize) BE

{

workvec! 0: = 0

workvec! 1: = 0

FOR i = 2 TO vecsize-1 DO workvec! I: = 1

FOR i = 2 TO vecsize-1 DO

IF workvec! I DO

{LET j = 2 * i

WHILE j <vecsize DO

{workvec! j: = 0

j: = j + i

}

}

}

ENDFOR opcode has also been removed - a LE JT pair is generated instead.Among the “universal machine languages”, OCODE is unique in that the labels in it are determined by special instructions — that is, To interpret the program, you must first load it all into memory, and find labels in it.

The BCPL (1) compiler was supplied as OCODE, and to transfer it to a new platform, it was necessary:

The BCPL (1) compiler was supplied as OCODE, and to transfer it to a new platform, it was necessary:- Manually write the pseudocode interpreter (2) (in any language, even in BASIC);

- Adapt a code generator, (3) written in BCPL, for its platform;

- Launch the BCPL compiler (1) under the interpreter (2), feed the code generator (3) to it, and get the executable code generator file (4) at the output;

- Interpreter (2) is no longer needed from this moment on.

- Run the compiler pseudocode (1) through the code generator (4), and get the executable file of the compiler at the output.

This approach meant that to transfer the compiler to a new platform, only the minimum of low-level programming is required; and indeed, the implementation of BCPL was completed by 1967 — earlier than the CPL implementation started a few years earlier!

The merits of BCPL as applied to system programming inspired Ken Thompson to create the Bee language, and he inspired Ken's colleague, Dennis Ritchie, to create C. It was from BCPL that the tradition of denoting

{ curly brackets } program blocks followed, and it was on BCPL that the first program “Hello, World!” Was written . GET "libhdr"

LET start () = VALOF

{writef ("Hello * n")

RESULTIS 0

}

BCPL and its OCODE never gained popularity outside of the United Kingdom, but the idea of WORA caught on. In 1974, already on the Continent, at ETH Zürich , under the leadership of Niklaus Virta, Pascal-P was developed with compilation into an intermediate p-code, and then compiling this p-code into executable code for a specific hardware platform . On the basis of Pascal-P, on the other side of the ocean, in UCSD , the p-System OS (1978) was created, where there was no code generator at all: the Pascal p-code itself was the target compiler platform, and the OS kernel was actually a p-code interpreter, working on bare metal. All system utilities of p-System were supplied as cross-platform p-code, and to adapt p-System to a new hardware platform, it was enough to write an interpreter of p-code for it - there is nothing to recompile!

P-code example

Pascal source code:

For the compactness of the p-code, many instructions have “abbreviated opcodes” for small, frequently used argument values.

0000: D8 p2_0: SLDL 1 0001: 00 SLDC 0 0002: 9A STO 0003: 02 SLDC 2 0004: CC 03 STL 3 0006: DA p2_2: SLDL 3 0007: 31 SLDC 49 0008: C8 LEQI 0009: A1 12 FJP p2_5 000B: D8 SLDL 1 000C: DA SLDL 3 000D: 01 SLDC 1 000E: 32 SLDC 50 000F: 88 CHK 0010: 01 SLDC 1 0011: 95 SBI 0012: A4 01 IXA 1 0014: 01 SLDC 1 0015: 9A STO 0016: DA SLDL 3 0017: 01 SLDC 1 0018: 82 ADI 0019: CC 03 STL 3 001B: B9 F6 UJP p2_2 001D: 02 p2_5: SLDC 2 001E: CC 03 STL 3 0020: DA p2_4: SLDL 3 0021: 31 SLDC 49 0022: C8 LEQI 0023: A1 2F FJP p2_1 0025: D8 SLDL 1 0026: DA SLDL 3 0027: 01 SLDC 1 0028: 32 SLDC 50 0029: 88 CHK 002A: 01 SLDC 1 002B: 95 SBI 002C: A4 01 IXA 1 002E: F8 SIND 0 002F: A1 1C FJP p2_6 0031: 02 SLDC 2 0032: DA SLDL 3 0033: 8F MPI 0034: CC 02 STL 2 0036: D9 p2_3: SLDL 2 0037: 32 SLDC 50 0038: C9 LESI 0039: A1 12 FJP p2_6 003B: D8 SLDL 1 003C: D9 SLDL 2 003D: 01 SLDC 1 003E: 32 SLDC 50 003F: 88 CHK 0040: 01 SLDC 1 0041: 95 SBI 0042: A4 01 IXA 1 0044: 00 SLDC 0 0045: 9A STO 0046: D9 SLDL 2 0047: DA SLDL 3 0048: 82 ADI 0049: CC 02 STL 2 004B: B9 F4 UJP p2_3 004D: DA p2_6: SLDL 3 004E: 01 SLDC 1 004F: 82 ADI 0050: CC 03 STL 3 0052: B9 F2 UJP p2_4 0054: AD 00 p2_1: RNP 0 |

Pascal source code:

const data_size = 50;

type data_array = array [1..data_size] of boolean;

procedure sieve (var workvec: data_array);

var i, j: integer;

begin

workvec [1]: = false;

for i: = 2 to data_size-1 do workvec [i]: = true;

for i: = 2 to data_size-1 do

if workvec [i] then begin

j: = 2 * i;

while j <data_size do begin

workvec [j]: = false;

j: = j + i;

end

end

end;

For the compactness of the p-code, many instructions have “abbreviated opcodes” for small, frequently used argument values.

The p-System turned out to be quite popular: several Apple II models and several IBM computers, including the original IBM PC (1981), were offered with the p-System as the OS; and since 1979, Western Digital has released Pascal MicroEngine minicomputers, in which the implementation of the p-code was implemented in hardware. Thus, the p-code from the abstract "universal machine language" has become a real machine language of a real computer.

Although by the nineties the p-System had already disappeared from the market, it managed to inspire James Gosling to create a Java virtual machine in 1996. “Write once, run anywhere!” Turned into an advertised advertising slogan, and implementations of the “universal machine language” ( “bytecode” ) developed in parallel in two directions:

- Acceleration of software implementation ( JIT compilation in JDK 1.1, 1997)

- Attempts to hardware implementation:

- Sun microJava701 (1998)

- Sun MAJC 5200 (1999)

- aJile aJ-100 (2000)

- Imsys Cjip (2000)

- ARM Jazelle (2001)

It is not surprising that none of the “bytecode processors” - neither for p-code, nor for Java - has become commercially successful. (This also includes the much earlier Intel iAPX 432 (1981) processor — a hardware implementation of Ada's bytecode.) Java bytecode, like Virta's p-code, like OCODE - all of them are stack-oriented , because just such an intermediate code the easiest to generate and easiest to interpret. The “virtual machine” stack is unlimited; the “iron” processor, on the contrary, has a fixed number of registers, and one of the most important tasks of the compiler is to distribute the data among the registers so that memory accesses are performed as rarely as possible. In order to “in hardware” monitor the dependencies between the data, distribute them to the “iron” registers, and rearrange references to them in order to meet the “iron” restrictions - very complex mechanisms are required. It turns out that with the same level of semiconductor technology, it is more efficient to create a processor for a simple ISA, and to implement bytecode translation on it - than to perform the same bytecode "in hardware". Again and again, dreamy IT-entrepreneurs were convinced that turning a “universal machine language” into a real one — albeit technically possible, but commercially unpromising.

Java bytecode example

Source:

The bytecode, even more than the Virta p-code, is designed to be compact: for example,

2a be 3c 2a 03 03 54 2a 04 03 54 05 3d 1c 1b a2 00 0d 2a 1c 04 54 84 02 01 a7 ff f4 05 3d 1c 1b a2 00 23 2a 1c 33 99 00 17 05 1c 68 3e 1d 1b a2 00 0e 2a 1d 03 54 1d 1c 60 3e a7 ff f3 84 02 01 a7 ff de b1 | private static void sieve (boolean []);

Code:

0: aload_0 // put a link taken from the stack frame at index 0, i.e. parameter

1: arraylength // push stack the length of the transferred array

2: istore_1 // save to the stack frame at index 1 integer from the stack

3: aload_0

4: iconst_0 // put an integer on the stack - constant 0

5: iconst_0

6: bastore // write to byte or boolean array zero at zero index

7: aload_0

8: iconst_1

9: iconst_0

10: bastore // write to the same array zero by index 1

11: iconst_2

12: istore_2 // save to stack frame at index 2 integer value 2

13: iload_2 // put the newly saved value onto the stack

14: iload_1 // push local variable # 1 onto the stack

15: if_icmpge 28 // compare two integers; if value = variable, go to instruction 28

18: aload_0

19: iload_2

20: iconst_1

21: bastore // write to a byte or boolean array unit by index from a local variable

22: iinc 2, 1 // increase local variable No. 2 by one

25: goto 13 // go to instruction 13

28: iconst_2

29: istore_2 // write integer value 2 to local variable №2

30: iload_2

31: iload_1

32: if_icmpge 67 // if variable # 2 is greater than or equal to variable # 1, go to instruction 67

35: aload_0

36: iload_2

37: baload // read byte or boolean array element by index from this variable

38: ifeq 61 // if the element read is zero, go to instruction 61

41: iconst_2

42: iload_2

43: imul // multiply the value of local variable №2 by two

44: istore_3 // save the result to the stack frame at index 3

45: iload_3

46: iload_1

47: if_icmpge 61 // if local variable # 3> = variable # 1, go to instruction 61

50: aload_0

51: iload_3

52: iconst_0

53: bastore // write to byte or boolean array zero by index from variable # 3

54: iload_3

55: iload_2

56: iadd // add variable # 2 to the value of local variable # 3

57: istore_3 // write the result back to the stack frame

58: goto 45 // go to instruction 45

61: iinc 2, 1 // increase local variable No. 2 by one

64: goto 30 // go to instruction 30

67: return // return from the procedure

|

Source:

private static void sieve(boolean[] workvec) { int vecsize = workvec.length; workvec[0] = false; workvec[1] = false; for(int i = 2; i<vecsize; i++) workvec[i] = true; for(int i = 2; i<vecsize; i++) if(workvec[i]) { int j = 2 * i; while(j < vecsize) { workvec[j] = false; j = j + i; } } } The bytecode, even more than the Virta p-code, is designed to be compact: for example,

iflt (comparison with zero and conditional transition) corresponds to the three SLDC 0; GEQI; FJP instructions SLDC 0; GEQI; FJP SLDC 0; GEQI; FJP SLDC 0; GEQI; FJP , and iinc replaces four SLDL; SLDC; ADI; STL instructions SLDL; SLDC; ADI; STL SLDL; SLDC; ADI; STL SLDL; SLDC; ADI; STL .There is, however, an opposite example. It is much more profitable for a processor manufacturer to implement a popular ISA so that existing programs can run on a new processor — rather than creating a new ISA, compilers for it, and waiting for the required minimum of software for the new platform to appear. For a long time, IA-32 remained such a “universal machine language”: the volume of existing software outweighed the possible benefits of switching to a new ISA, and starting with Pentium Pro (1995), Intel processors, in fact, implemented the “hardware emulation” of the old ISA. Instructions IA-32, operating on eight “classical” registers, are transmitted by the processor to internal RISC microcommands, operating on 40 “iron” registers; and pipelines inside the processor perform these RISC microcommands in several streams — arbitrarily rearranging microcommands, if this does not break the dependencies between the data. In the Transmeta Crusoe processor (2000), whose development group included Linus Torvalds, the idea of ISA hardware emulation was further developed: out of the box, he supported IA-32, but it could be reprogrammed to work with any other ISA - to demonstrate this Transmeta had a Crusoe “advertising sample” with Java bytecode hardware support.

Sample IA-32 Machine Code

Notice how compact the code is than in any of the preceding examples.

| Machine code | Assembler |

|---|---|

55 89 e5 56 66 c7 01 00 00 b8 02 00 00 00 be 02 00 00 00 eb 05 c6 04 31 01 46 39 d6 7c f7 eb 01 40 39 d0 7d 17 80 3c 01 00 74 f5 8d 34 00 eb 06 c6 04 31 00 01 c6 39 d6 7c f6 eb e4 5e 5d c3 | .type _ZL5sievePbi, @ function _ZL5sievePbi: # pointer to the array is passed to ecx, array length is to edx #% entry pushl% ebp # save previous ebp value movl% esp,% ebp # now ebp points to the current stack frame pushl% esi # save previous esi value movw $ 0, (% ecx) # write the zero word at ecx movl $ 2,% eax # (i.e., two array elements zeroed by one instruction) movl $ 2,% esi # save deuce to eax and to esi jmp .LBB1_1 # go to label .LBB1_1 .LBB1_2: #% for.body movb $ 1, (% ecx,% esi) # write a single byte at ecx + esi incl% esi # increase esi by one .LBB1_1: #% for.cond cmpl% edx,% esi # compare esi and edx jl .LBB1_2 # if edx <esi, go to the label .LBB1_2 jmp .LBB1_4 # otherwise go to the label .LBB1_4 .LBB1_3: #% for.inc.11 incl% eax # increase eax by one .LBB1_4: #% for.cond.4 cmpl% edx,% eax # compare eax and edx jge .LBB1_9 # if edx> = esi, go to the label .LBB1_9 #% for.body.7 cmpb $ 0, (% ecx,% eax) # compare with zero byte at ecx + esi je .LBB1_3 # if zero, go to .LBB1_3 #% if.then leal (% eax,% eax),% esi # write to esi twice the value of eax jmp .LBB1_7 # go to label .LBB1_7 .LBB1_8: #% while.body movb $ 0, (% ecx,% esi) # write zero byte at ecx + esi addl% eax,% esi # add eax to esi .LBB1_7: #% while.cond cmpl% edx,% esi # compare esi and edx jl .LBB1_8 # if edx <esi, go to the label .LBB1_8 jmp .LBB1_3 # otherwise go to the label .LBB1_3 .LBB1_9: #% for.cond.cleanup.6 popl% esi # restore old esi value popl% ebp # restore old ebp value retl # return from the procedure |

It is hardly coincidental that in the only successful examples of the hardware implementation of the “universal machine language”, the implemented ISA was not a stack, but a registered one. Martin Richards to replace his OCODE he developed a new register uni-ISA called INTCODE (1972). INTCODE is extremely simple: six registers, eight operations, and several addressing modes are supported; instructions, depending on the addressing mode, occupy either one or two words. In 1980, Martin developed a version of this uni-ISA, designed for 16-bit microcomputers. The new uni-ISA — still with six registers, but with a more complex and less orthogonal instruction set — was named Cintcode, and was extremely compact: advertising brochures stated that Cintcode programs usually take up to three times less memory than when compiled into machine codes 6502 . For BBC Micro and Amiga computers, OSs were popular, supporting Cintcode execution along with machine code.

Cintcode Example

Register

This code was obtained using the latest (2014) version of BCPL Cintcode System from the above implementation on BCPL. The compactness of such a code is promoted by the presence in Cintcode of double assignment instructions (registers A and B immediately) and double dereferencing (the address given is obtained by adding the register A and the values in the stack).

P - stack pointer; at the entrance to the function P[3] contains a pointer to the array, P[4] - its length.0: 10 L0; A: = 0 1: DB ST0P3; P [3] [0]: = A (zero the zero element of the array) 2: DD ST1P3; P [3] [1]: = A (zero the first element of the array) 3: 0F LM1; A: = -1 4: C4 AP4; A: = A + P [4] (one less than the length of the array) 5: A6 SP6; P [6]: = A (save to local variable) 6: 12 L2; B: = A; A: = 2 7: A5 SP5; P [5]: = A 8: 5C0A JLS 10; IF B <A GOTO 19 10: 11 L1; A: = 1 11: 83 LP3; B: = A; A: = P [3] 12: 9A STP5; P [5] [A]: = B (write unit by index from P [5]) 13: B5 XCH; A: = B 14: C5 AP5; A: = A + P [5] 15: A5 SP5; P [5]: = A (increase the value of P [5] by one) 16: 86 LP6; B: = A; A: = P [6] 17: 9CF8 JLE -8; IF B <= A GOTO 10 19: 0F LM1; A: = -1 20: C4 AP4; A: = A + P [4] 21: A6 SP6; P [6]: = A (the same upper bound of the cycle) 22: 12 L2; B: = A; A: = 2 23: A5 SP5; P [5]: = A 24: 5C1B JLS 27; IF B <A GOTO 52 26: 83 LP3; A: = P [3] 27: D8 RVP5; A: = P [5] [A] (read element by index from P [5]) 28: 1E11 JEQ0 17; IF A = 0 GOTO 46 30: 85 LP5; A: = P [5] 31: 12 L2; B: = A; A: = 2 32: 34 MUL; A: = A * B (double the value of P [5]) 33: A7 SP7; P [7]: = A 34: 84 LP4; B: = A; A: = P [4] (in A, the length of the array) 35: BC0A JGE 10; IF B> = A GOTO 46 37: 10 L0; A: = 0 38: 87 LP7; B: = A; A: = P [7] 39: 98 STP3; P [3] [A]: = B (write zero at index from P [7]) 40: 87 LP7; A: = P [7] 41: C5 AP5; A: = A + P [5] 42: A7 SP7; P [7]: = A (increase the value of P [7] by P [5]) 43: 84 LP4; B: = A; A: = P [4] (in A, the length of the array) 44: 5CF8 JLS -8; IF B <A GOTO 37 46: 11 L1; A: = 1 47: C5 AP5; A: = A + P [5] 48: A5 SP5; P [5]: = A (increase the value of P [5] by one) 49: 86 LP6; B: = A; A: = P [6] (A is 1 less than the length of the array) 50: 9CE7 JLE -25; IF B <= A GOTO 26 52: 7B RTN

This code was obtained using the latest (2014) version of BCPL Cintcode System from the above implementation on BCPL. The compactness of such a code is promoted by the presence in Cintcode of double assignment instructions (registers A and B immediately) and double dereferencing (the address given is obtained by adding the register A and the values in the stack).

MSIL (2001; officially called CIL) was the pinnacle of stack uni-isa development - or, if you look at it from the other side, a dead end in their development. MSIL is very similar to Java bytecode, but adds a number of additional features . Attempts to implement MSIL hardware, as far as I know, have not been undertaken; Microsoft did not even try to offer an alternative to Java to create cross-platform, full-featured web applications. MSIL remained the “machine language of the Microsoft platforms, and no others.

MSIL Example

C# Java

, MSIL «»,

.method private hidebysig static

void sieve (bool [] workvec) cil managed

{

.maxstack 3

.locals init ([0] int32 vecsize,

[1] int32 i,

[2] int32 V_2,

[3] int32 j)

IL_0000: / * 02 | * / ldarg.0 // put parameter # 0 (unique) onto the stack

IL_0001: / * 8E | * / ldlen // calculate array length (unsigned integer)

IL_0002: / * 69 | * / conv.i4 // convert it to int32 (signed integer)

IL_0003: / * 0A | * / stloc.0 // save result to local variable # 0

IL_0004: / * 02 | * / ldarg.0 // push parameter to stack

IL_0005: / * 16 | * / ldc.i4.0 // put the constant 0 (signed integer) on the stack

IL_0006: / * 16 | * / ldc.i4.0

IL_0007: / * 9C | * / stelem.i1 // write zero into zero byte array into byte array

IL_0008: / * 02 | * / ldarg.0

IL_0009: / * 17 | */ ldc.i4.1 // 1 ( )

IL_000a: /* 16 | */ ldc.i4.0

IL_000b: /* 9C | */ stelem.i1 // (int8) 1

IL_000c: /* 18 | */ ldc.i4.2

IL_000d: /* 0B | */ stloc.1 // №1

IL_000e: /* 2B | 08 */ br.s IL_0018 // IL_0019

IL_0010: /* 02 | */ ldarg.0

IL_0011: /* 07 | */ ldloc.1 //

IL_0012: /* 17 | */ ldc.i4.1

IL_0013: /* 9C | */ stelem.i1 //

IL_0014: /* 07 | */ ldloc.1

IL_0015: /* 17 | */ ldc.i4.1

IL_0016: /* 58 | */ add //

IL_0017: /* 0B | */ stloc.1 //

IL_0018: /* 07 | */ ldloc.1

IL_0019: /* 06 | */ ldloc.0 // №0

IL_001a: /* 32 | F4 */ blt.s IL_0010 // №1 < №0, IL_0010

IL_001c: /* 18 | */ ldc.i4.2

IL_001d: /* 0C | */ stloc.2 // №2

IL_001e: /* 2B | 1B */ br.s IL_003b // IL_003b

IL_0020: /* 02 | */ ldarg.0

IL_0021: /* 08 | */ ldloc.2

IL_0022: /* 90 | */ ldelem.i1 //

IL_0023: /* 2C | 12 */ brfalse.s IL_0037 // , IL_0037

IL_0025: /* 18 | */ ldc.i4.2

IL_0026: /* 08 | */ ldloc.2

IL_0027: /* 5A | */ mul // №2

IL_0028: /* 0D | */ stloc.3 // №3

IL_0029: /* 2B | 08 */ br.s IL_0033 // IL_0033

IL_002b: /* 02 | */ ldarg.0

IL_002c: /* 09 | */ ldloc.3

IL_002d: /* 16 | */ ldc.i4.0

IL_002e: /* 9C | */ stelem.i1 // 0 №3

IL_002f: /* 09 | */ ldloc.3

IL_0030: /* 08 | */ ldloc.2

IL_0031: /* 58 | */ add // №2 №2

IL_0032: /* 0D | */ stloc.3 // №2

IL_0033: /* 09 | */ ldloc.3

IL_0034: /* 06 | */ ldloc.0

IL_0035: /* 32 | F4 */ blt.s IL_002b // №3 < №0, IL_002b

IL_0037: /* 08 | */ ldloc.2

IL_0038: /* 17 | */ ldc.i4.1

IL_0039: /* 58 | */ add // №2

IL_003a: /* 0C | */ stloc.2 //

IL_003b: /* 08 | */ ldloc.2

IL_003c: /* 08 | */ ldloc.0

IL_003d: /* 32 | E1 */ blt.s IL_0020 // №2 < №0, IL_0020

IL_003f: /* 2A | */ ret //

}

C# Java

boolean → bool . Java- MSIL : -, , . -, (/ , , ..), MSIL, add , «», .. ; (, — int32) . , MSIL JIT-, MSIL- ., MSIL «»,

iflt iinc .There were no more stack contenders for “universal machine languages” in this millennium.

The present

In 2000, Chris Lattner, an undergraduate student at UIUC , as a graduation project began developing the “universal compiler” of the LLVM , consisting of completely independent “frontends” that translate the code into HLW into a universal “intermediate representation” (intermediate representation, IR) - and “ backends, which translate IR into executable code for specific ISA.

As an intermediate representation, LLVM-IR, a single assignment form was selected ( “SSA-form”): each variable is assigned a value once, and during program operation it does not change. This form makes it easier to keep track of dependencies between data, makes it easy to rearrange and replace individual instructions, find and delete duplicate or “dead” instructions, and so on.

LLVM-IR Example

(BB), ( ). BB . — . BB , .. BB, .

IA-32 LLVM IR — , , , BB .

, — «» MSIL.

C++:

; Function Attrs: minsize noinline nounwind optsize

define internal fastcc void @_ZL5sievePbi(i8* nocapture %workvec, i32 %vecsize) #0 {

; #0 -- ( )

entry:

store i8 0, i8* %workvec, align 1, !tbaa !1 ;

%arrayidx1 = getelementptr inbounds i8, i8* %workvec, i32 1 ;

store i8 0, i8* %arrayidx1, align 1, !tbaa !1 ;

br label %for.cond ; BB

for.cond: ; preds = %for.body, %entry

%i.0 = phi i32 [ 2, %entry ], [ %inc, %for.body ]

; %i.0 2, BB %entry, %inc, %for.body

%cmp = icmp slt i32 %i.0, %vecsize

; %i.0 %vecsize , %cmp 1 0

br i1 %cmp, label %for.body, label %for.cond.4 ; %for.body %for.cond.4

for.body: ; preds = %for.cond

%arrayidx2 = getelementptr inbounds i8, i8* %workvec, i32 %i.0 ; %i.0-

store i8 1, i8* %arrayidx2, align 1, !tbaa !1 ;

%inc = add nuw nsw i32 %i.0, 1 ; %inc %i.0

; nuw nsw , ( ),

br label %for.cond ; BB

for.cond.4: ; preds = %for.cond, %for.inc.11

%i3.0 = phi i32 [ %inc12, %for.inc.11 ], [ 2, %for.cond ]

; %i3.0 2, BB %for.body, %inc12, %for.inc.11

%cmp5 = icmp slt i32 %i3.0, %vecsize ; %i3.0 %vecsize

br i1 %cmp5, label %for.body.7, label %for.cond.cleanup.6 ; , %i3.0 %vecsize

for.cond.cleanup.6: ; preds = %for.cond.4

ret void ; ,

for.body.7: ; preds = %for.cond.4

%arrayidx8 = getelementptr inbounds i8, i8* %workvec, i32 %i3.0

%0 = load i8, i8* %arrayidx8, align 1, !tbaa !1, !range !5 ; %i3.0

%tobool = icmp eq i8 %0, 0 ;

br i1 %tobool, label %for.inc.11, label %if.then

if.then: ; preds = %for.body.7

%mul = shl nsw i32 %i3.0, 1 ; %mul %i3.0

br label %while.cond ; BB

while.cond: ; preds = %while.body, %if.then

%j.0 = phi i32 [ %mul, %if.then ], [ %add, %while.body ]

%cmp9 = icmp slt i32 %j.0, %vecsize ; %j.0 %vecsize

br i1 %cmp9, label %while.body, label %for.inc.11 ; %j.0 < %vecsize, %while.body

while.body: ; preds = %while.cond

%arrayidx10 = getelementptr inbounds i8, i8* %workvec, i32 %j.0

store i8 0, i8* %arrayidx10, align 1, !tbaa !1 ; %j.0-

%add = add nsw i32 %j.0, %i3.0 ; %add %j.0 %i3.0

br label %while.cond ; BB

for.inc.11: ; preds = %while.cond, %for.body.7

%inc12 = add nuw nsw i32 %i3.0, 1 ; %inc12 %i3.0

br label %for.cond.4

}

IA-32 LLVM IR — , , , BB .

!tbaa !1 — alias analysis ; !range !5 — ( bool — 0 1)., — «» MSIL.

C++:

static void sieve(bool workvec[], int vecsize) { workvec[0] = false; workvec[1] = false; for(int i = 2; i<vecsize; i++) workvec[i] = true; for(int i = 2; i<vecsize; i++) if(workvec[i]) { int j = 2 * i; while(j < vecsize) { workvec[j] = false; j = j + i; } } } Since LLVM-IR was developed primarily as an effective way of transferring code from the frontend to the backend, and not as a uni-ISA, there are a number of disadvantages in the role of un-ISA. First, the features of the target platform can affect the generation of LLVM-IR: a program for a 32-bit system will be compiled into a different LLVM-IR program than a program for a 64-bit one; and the program for Windows will be compiled into another LLVM-IR than the program for Linux. Secondly, LLVM-IR is unstable: any LLVM developer can add new instructions or change the value of existing ones, if this allows for more efficient compilation of important PLs on its important platforms. This means that LLVM-IR, generated by some specific version of the frontend, can be used only with the corresponding version of the backend, and with no other. Third, LLVM-IR allows you to present a code with "undefined behavior"- Each backend has the right to transmit such code in any convenient manner in order to “squeeze” the maximum performance out of the program. Code with undefined C or C ++ behavior is usually compiled into code with undefined behavior on LLVM-IR — i.e. in fact, the semantics of such a code are not determined by the frontend, but by the backend, in an optimal way for a particular platform. Naturally, code with undefined behavior contradicts the “Write once, run anywhere” paradigm , under which uni-ISA is promoted. Nevertheless, Google, being one of the active developers of LLVM, considered LLVM-IR as an adequate format for the distribution of cross-platform applications written in any of the many PLs supported in LLVM. Starting from version 31 (2013), Google Chrome includes support for PNaCl- LLVM-IR subsets with stable instruction semantics and without platform dependent constructions and optimizations.

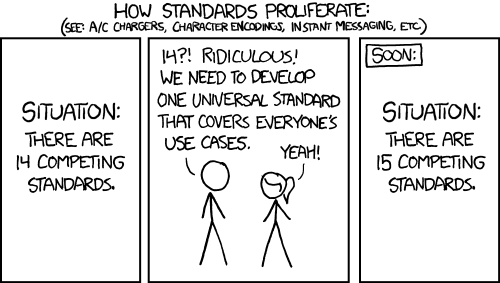

It would seem: why are PNaCl applications better than Java applets that were invented fifteen years earlier for the exact same purpose — creating cross-platform, full-featured web applications? (KDPV at the beginning of this topic is just a topic.) I know two advantages of PNaCl: first, LLVM-IR, compared to Java bytecode, simplifies code optimization when a browser translates a PNaCl application into the machine code of a specific target platform. The second and, as far as I understand, the main argument is the openness and licensed purity of LLVM: its owners (Sun and then Oracle) were suing everybody they met, including Google. LLVM and its IR, by contrast, are fully open; and, on the one hand, Google receives in its PNaCl compilers for free all sorts of buns and new optimizations implemented by other LLVM members;On the other hand, any other developers have the right to add support for PNaCl applications to their browsers and not be afraid of lawsuits.

So far, there are no people willing to follow Google’s example and implement PNaCl support. On the contrary: Mozilla for the same purpose - creating cross-platform, full-featured web applications - has developed its own un-ISA called asm.js (2013). The generation of asm.js was implemented as a new backend for LLVM . Work on asm.js and PNaCl went almost simultaneously, and in Google Chrome support for asm.js appeared even earlier than support for PNaCl - from version 28 (2013).

asm.js implements a very elegant idea: the program on it is the correct code on (heavily trimmed) JavaScript, and accordingly, such a program should work in any browser that supports JavaScript. On the other hand, browsers capable of recognizing asm.js constructs and compiling them into machine code for the target platform “on the fly” will execute such a program many times faster than browsers running JavaScript head-on. Thus, both old browsers are able to execute new code on asm.js, and new browsers are able to execute old code on “classic” JavaScript: if the script does not have a prologue

"use asm";, it is executed in the old-fashioned way.Asm.js example

C++, , Emscripten:

function __ZL5sievePbi($workvec,$vecsize) { $workvec = $workvec|0; // |0 $vecsize = $vecsize|0; var $0 = 0, $1 = 0, $10 = 0, $11 = 0, $12 = 0, $13 = 0, $14 = 0, $15 = 0, $16 = 0, $17 = 0, $18 = 0, $19 = 0, $2 = 0, $20 = 0, $21 = 0, $22 = 0, $23 = 0, $24 = 0, $25 = 0, $26 = 0; var $27 = 0, $28 = 0, $29 = 0, $3 = 0, $30 = 0, $31 = 0, $32 = 0, $33 = 0, $4 = 0, $5 = 0, $6 = 0, $7 = 0, $8 = 0, $9 = 0, $i = 0, $i1 = 0, $j = 0, label = 0, sp = 0; sp = STACKTOP; STACKTOP = STACKTOP + 32|0; if ((STACKTOP|0) >= (STACK_MAX|0)) abort(); $0 = $workvec; $1 = $vecsize; $2 = $0; HEAP8[$2>>0] = 0; // >>0 $3 = $0; $4 = ((($3)) + 1|0); // HEAP8[$4>>0] = 0; $i = 2; while(1) { $5 = $i; $6 = $1; $7 = ($5|0)<($6|0); // if (!($7)) { break; } $8 = $i; $9 = $0; $10 = (($9) + ($8)|0); HEAP8[$10>>0] = 1; $11 = $i; $12 = (($11) + 1)|0; $i = $12; } $i1 = 2; while(1) { $13 = $i1; $14 = $1; $15 = ($13|0)<($14|0); if (!($15)) { break; } $16 = $i1; $17 = $0; $18 = (($17) + ($16)|0); $19 = HEAP8[$18>>0]|0; // $20 = $19&1; L8: do { if ($20) { $21 = $i1; $22 = $21<<1; $j = $22; while(1) { $23 = $j; $24 = $1; $25 = ($23|0)<($24|0); if (!($25)) { break L8; } $26 = $j; $27 = $0; $28 = (($27) + ($26)|0); HEAP8[$28>>0] = 0; $29 = $j; $30 = $i1; $31 = (($29) + ($30))|0; $j = $31; } } } while(0); $32 = $i1; $33 = (($32) + 1)|0; $i1 = $33; } STACKTOP = sp;return; } Interestingly, when asm.js in its development “stuck” into the limitations of JavaScript (lack of support for 64-bit numbers, multithreading, SIMD instructions, etc.), then the missing constructions were added to the JavaScript standard. Thus, the compatibility of asm.js with standard JavaScript is the result of the efforts of not only the developers of asm.js, but also the developers of the standard of the language.

In addition to the reasons already mentioned, to use instead of Java applets a new technology based on LLVM, supporters of asm.js give the following argument:“JavaScript has a built-in virtual machine, so adding another will result in a second set of API connections to give the virtual machine access to DOM, networks, sensors, input devices, etc. For this you have to sacrifice something. For example, how will the processes in a virtual machine distribute the available resources among themselves? It is more difficult to answer this question than it seems. ” (Original spelling) In particular, this means that new engine features are simultaneously available from asm.js and from“ classical ”JavaScript; they do not need to be implemented twice.

Future

Compared to the “raw” LLVM-IR, on which Google and Apple were made, asm.js has a lot of advantages; but there are drawbacks. First, the code on asm.js is incredibly increasing in size - the addition of two integers takes more than twenty bytes! Secondly, to execute code on asm.js, it is necessary to re-execute its syntactic parsing. It would be much more efficient to save the compilation result as an AST , rather than generate a JavaScript code from it, and then restore the AST using this code. So in the summer of 2015, a new "universal machine language" called WebAssembly appearedwho retained some of the strengths of asm.js (for example, integration with the JavaScript engine), eliminated the two disadvantages mentioned, and refused to be directly compatible with the old JavaScript engines (as vsb commented , "Or, the game in the browser did not start at all, or it started with 1 fps - there is no difference, in any case, it will not be able to play it. " ) Instead, the developers of WebAssembly implemented a " putty " - a JavaScript library that reads the code on WebAssembly and" on the fly "generates code from it classic »asm.js; and its old browser versions are already able to perform.

WebAssembly example

C++, , WebAssembly LLVM:

LLVM-IR IA-32, ; WebAssembly , .

, , VEG - — « WebAssembly .» — .

_Z5sievePbi: .param i32 # $0 $1 .param i32 .local i32, i32, i32, i32 # $2, $3, $4, $5 # %entry block BB0_9 # -- i32.const $2, 0 # i32.store8 $0, $2 # i32.const $3, 1 i32.add $push0, $0, $3 # -- i32.store8 $pop0, $2 # i32.const $4, 2 set_local $5, $4 BB0_1: # %for.cond loop BB0_3 i32.ge_s $push1, $5, $1 # -- br_if $pop1, BB0_3 # %for.body i32.add $push7, $0, $5 # $pushN $popN -- "" i32.store8 $pop7, $3 # ( ) i32.add $5, $5, $3 # br BB0_1 BB0_3: # %for.cond.4 loop BB0_9 block BB0_8 block BB0_4 block BB0_3 # , (? ?) i32.lt_s $push2, $4, $1 br_if $pop2, BB0_4 br BB0_9 BB0_4: # %for.body.7 i32.add $push3, $0, $4 i32.load8_u $5, $pop3 # $4 i32.eq $push4, $5, $2 # br_if $pop4, BB0_8 # %if.then i32.shl $5, $4, $3 # $4 , .. BB0_6: # %while.cond loop BB0_8 i32.ge_s $push5, $5, $1 br_if $pop5, BB0_8 # %while.body i32.add $push6, $0, $5 i32.store8 $pop6, $2 i32.add $5, $5, $4 br BB0_6 BB0_8: # %for.inc.11 i32.add $4, $4, $3 br BB0_3 BB0_9: # %for.cond.cleanup.6 return

LLVM-IR IA-32, ; WebAssembly , .

, , VEG - — « WebAssembly .» — .

One of the main developers of WebAssembly was Google - they say that the entire experience gained during the development of PNaCl will be taken into account in the new “universal machine language” based on LLVM. PNaCl itself, they are no longer going to develop. Another major developer, Mozilla, emphasizes that WebAssembly, in contrast to asm.js, is not tied - either at the syntactic or semantic level - to any particular PL; it may be that, in a dozen or two years, JavaScript will disappear into non-existence, and browsers will no longer support scripts in the source code — instead, WebAssembly will become the “native” format of web applications for browsers. PC programs made this “qualitative leap” in the 1980s, when software developers moved from spreading multipage listings in BASIC and Pascal to distributing executable code - and, finally,it became possible to use a PC without knowing any programming language at least at the level of “how can I compile and run all this?”

Most users of web applications already have no idea about JavaScript; Well, what's the point of distributing web applications as source code?

Manufacturers of processors for mobile devices quickened. If you implement “in hardware”, the execution of code in the new “universal machine language”, then the performance of web applications — for the sake of which mobile devices are acquired and acquired — can grow many times! WebAssembly, though a high-level machine language, but not stack; therefore, there is a chance that its hardware implementation could become more successful than previous attempts to implement uni-ISA stacks. The most important thing is that a single machine language for all mobile applications could transform the entire ecosystem - just as x86 at one time transformed the PC world. Prior to the “hegemony” of x86, software for PC came out in a dozen more or less compatible versions for various platforms - for example, Prince of Persia had separate official versions for Amiga, Amstrad, Apple II, Atari, PCFM Towns, IBM PC, Macintosh Quadra, NEC PC-9801, SAM Coupé, and Sharp X68000; but at some point the phrase “program for PC” began to denote the “program for x86”. Similarly, a “single mobile ISA” would untie software developers, device manufacturers (OEMs), and processor manufacturers from each other: OEMs could choose processors from any manufacturers for their devices, or even use different processors in devices of the same line. manufacturers; and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.Similarly, a “single mobile ISA” would untie software developers, device manufacturers (OEMs), and processor manufacturers from each other: OEMs could choose processors from any manufacturers for their devices, or even use different processors in devices of the same line. manufacturers; and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.Similarly, a “single mobile ISA” would untie software developers, device manufacturers (OEMs), and processor manufacturers from each other: OEMs could choose processors from any manufacturers for their devices, or even use different processors in devices of the same line. manufacturers; and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.

Manufacturers of processors for mobile devices quickened. If you implement “in hardware”, the execution of code in the new “universal machine language”, then the performance of web applications — for the sake of which mobile devices are acquired and acquired — can grow many times! WebAssembly, though a high-level machine language, but not stack; therefore, there is a chance that its hardware implementation could become more successful than previous attempts to implement uni-ISA stacks. The most important thing is that a single machine language for all mobile applications could transform the entire ecosystem - just as x86 at one time transformed the PC world. Prior to the “hegemony” of x86, software for PC came out in a dozen more or less compatible versions for various platforms - for example, Prince of Persia had separate official versions for Amiga, Amstrad, Apple II, Atari, PCFM Towns, IBM PC, Macintosh Quadra, NEC PC-9801, SAM Coupé, and Sharp X68000; but at some point the phrase “program for PC” began to denote the “program for x86”. Similarly, a “single mobile ISA” would untie software developers, device manufacturers (OEMs), and processor manufacturers from each other: OEMs could choose processors from any manufacturers for their devices, or even use different processors in devices of the same line. manufacturers; and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.Similarly, a “single mobile ISA” would untie software developers, device manufacturers (OEMs), and processor manufacturers from each other: OEMs could choose processors from any manufacturers for their devices, or even use different processors in devices of the same line. manufacturers; and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.Similarly, a “single mobile ISA” would untie software developers, device manufacturers (OEMs), and processor manufacturers from each other: OEMs could choose processors from any manufacturers for their devices, or even use different processors in devices of the same line. manufacturers; and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.and developers would no longer need to spend money on the transfer of applications between different platforms. Ultimately, the user wins if any of the programs he needs is launched on any of his devices.On the other hand, a single machine language imposes a rigid framework on processor manufacturers: you cannot add a new feature to a new processor until it is accepted into the “single ISA” and implemented by other manufacturers. Further, as a single machine language develops, situations are inevitable when one of the manufacturers will try to push into the language such features that are specifically beneficial for him. For example, the integer division instruction in Intel processors generates an exception when dividing by zero; in ARM processors, returns zero as a result: ARM engineers considered that it is better to pass the divider check to the compiler, and thereby speed up the division in cases where the divider is obviously non-zero. Thirdly, what about graphics support? WebAssembly is not even tryingto offer a single ISA for graphics processors — there are even greater differences between the preferences of different manufacturers than between Intel and ARM command systems.

However, all these arguments rely on the fact that WebAssembly will take root, and will not be abandoned in a couple of years, like PNaCl, and will not become isolated in a separate niche, like Java and MSIL.

We'll see.

Source: https://habr.com/ru/post/270379/

All Articles