Evaluating test coverage on a project

The best way to evaluate how well we have tested a product is to analyze the defects we missed: the ones found by our users, implementers, and the business. They tell you a lot: what we did not check thoroughly, which areas of the product need more attention, what our overall miss rate is, and how it changes over time. This metric (perhaps the most common one in testing) is fine, but... by the time we have released the product and learned about the missed defects, it may be too late. An angry article about us has appeared, competitors are eagerly spreading the criticism, customers have lost trust in us, and management is unhappy.


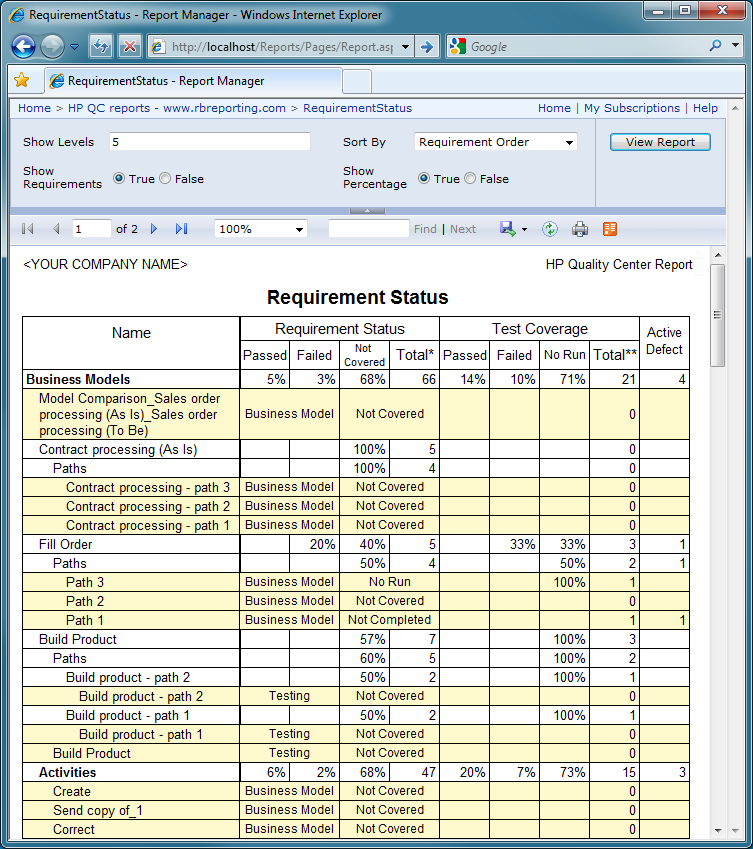

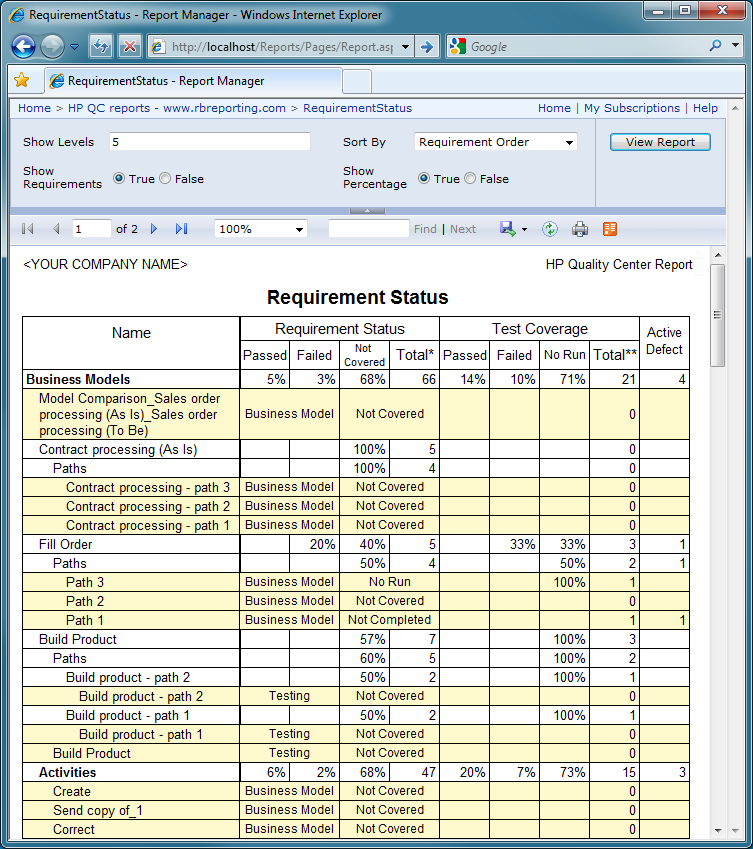


To prevent this, we usually try to evaluate the quality of our testing in advance, before the release: how well and how thoroughly are we checking the product? Which areas are not getting enough attention, where are the main risks, and what progress are we making? To answer all these questions, we evaluate test coverage.

Why evaluate?

Any evaluation metric costs time. That time could be spent testing, filing bugs, or preparing autotests. So what magical benefit do test coverage metrics give us that is worth taking time away from testing?

- Finding your weak spots. Naturally, we need this not just to grieve over them, but to know where improvement is needed. Which functional areas are not covered by tests? What have we not checked? Where is the greatest risk of missing defects?

- We rarely get 100% from a coverage assessment. What should we improve? Where should we go? What is the percentage now? How much will a given task raise it? How soon will we reach 100%? All these questions bring transparency and clarity to our process, and coverage assessment gives us the answers.

- Focus of attention. For example, suppose our product has about 50 different functional areas. A new version comes out, we start testing the first of them, and we find typos, buttons shifted by a couple of pixels, and other trifles... Then the testing time runs out, and this one area has been tested in detail... But what about the other 49? Coverage assessment lets us prioritize tasks based on current realities and deadlines.
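The questions in the second point ("what is the percentage now?", "how soon will we reach 100%?") can be answered from a history of coverage snapshots. Below is a minimal Python sketch. It assumes you record the coverage percentage once per week and that the average gain so far will continue. That is a naive linear extrapolation chosen for illustration, not a method from the article.

```python
import math


def weeks_to_full(history):
    """Estimate weeks until 100% coverage from weekly percentage snapshots.

    Assumption: the average weekly gain over the whole history continues
    (naive linear extrapolation). Returns None if coverage is not growing.
    """
    if len(history) < 2:
        raise ValueError("need at least two snapshots")
    current = history[-1]
    if current >= 100.0:
        return 0
    avg_gain = (current - history[0]) / (len(history) - 1)
    if avg_gain <= 0:
        return None  # no progress: extrapolation is meaningless
    return math.ceil((100.0 - current) / avg_gain)


if __name__ == "__main__":
    snapshots = [40.0, 46.0, 50.0, 58.0]  # hypothetical weekly values, %
    print(weeks_to_full(snapshots))  # 7
```

A real project rarely grows linearly, so treat the number as a rough signal of whether the pace matches the deadline, not as a plan.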

How to evaluate?

Before introducing any metric, it is important to decide how you will use it. Start by answering that question, and you will most likely see right away how best to measure it. In this article I will only share some examples and my own experience of how to do this. The point is not to copy the solutions blindly, but to give your imagination something to build on while you work out the solution that is ideal for you.


Evaluate test coverage

Suppose your team has analysts, and they are not wasting their working time. As a result of their work, requirements have been created in an RMS (Requirements Management System) such as HP QC, MS TFS, IBM Doors, or Jira (with additional plug-ins). In this system they write requirements that meet the requirements for requirements (sorry for the tautology). These requirements are atomic, traceable, specific... In short, ideal conditions for testing. What can we do in this case? With a scripted testing approach, we link requirements and tests. We keep the tests in the same system, link each requirement to its tests, and at any time we can open a report showing which requirements have tests and which do not, when those tests were run, and with what result.
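The requirement-test report described above can be sketched in a few lines of Python. This is not the API of HP QC, TFS, or any other RMS; the requirement and test IDs, the data layout, and the status rules are hypothetical, and a real system would supply the links and run history itself.

```python
from datetime import date


def traceability_report(requirements, links, last_runs):
    """One row per requirement: linked tests, latest run date, overall status.

    requirements: list of requirement IDs (hypothetical)
    links: requirement ID -> list of linked test IDs
    last_runs: test ID -> (run date, passed?) for tests that have been run
    """
    report = {}
    for req in requirements:
        tests = links.get(req, [])
        results = [last_runs[t] for t in tests if t in last_runs]
        if not tests:
            status = "no tests"
        elif any(not passed for _, passed in results):
            status = "failed"       # at least one linked test failed last time
        elif len(results) < len(tests):
            status = "not run"      # some linked tests were never executed
        else:
            status = "passed"
        report[req] = {
            "tests": tests,
            "last_run": max((d for d, _ in results), default=None),
            "status": status,
        }
    return report


if __name__ == "__main__":
    reqs = ["REQ-1", "REQ-2", "REQ-3"]
    links = {"REQ-1": ["T-1", "T-2"], "REQ-2": ["T-3"]}
    last_runs = {
        "T-1": (date(2024, 3, 1), True),
        "T-2": (date(2024, 3, 2), True),
        "T-3": (date(2024, 3, 2), False),
    }
    for req, row in traceability_report(reqs, links, last_runs).items():
        print(req, row["status"], row["last_run"])
```

Requirements with the status "no tests" form the uncovered part of the coverage map.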

We get a coverage map, we cover every uncovered requirement, everyone is happy and satisfied, and we don't miss any defects...

Okay, let's come back down to earth. Most likely, you do not have detailed requirements, they are not atomic, some requirements are lost altogether, and there is no time to document every test, or even every other one. You can despair and cry, or you can accept that testing is a compensatory process: the worse things are with analysis and development on the project, the harder we should try to compensate for the problems of the other participants in the process. Let's go through the problems one at a time.

Problem: requirements are not atomic.

Analysts sometimes get muddled too, and this usually causes problems for the whole project. For example, suppose you are developing a text editor, and among others you have two requirements: “HTML formatting must be supported” and “when a file of an unsupported format is opened, a pop-up window with a question must appear”. How many tests does basic verification of the first requirement need? And of the second? The answers most likely differ by a factor of about a hundred!!! We cannot say that a single test is enough for the first requirement, but for the second it most likely is.

So having a test for a requirement guarantees us nothing at all! What do our coverage statistics mean in that case? Next to nothing! We will have to deal with it!

- In this case, automatic calculation of requirements coverage by tests can be dropped: it carries no meaning anyway.

- For each requirement, starting with the highest priority, we prepare tests. While preparing them, we analyze which tests this requirement needs and how many will be enough. We carry out a full-fledged test analysis instead of brushing it off with "there is one test, good enough".

- Depending on the system in use, we export the tests for each requirement and... we test those tests! Are they sufficient? Ideally, of course, this review should be done together with the analyst and the developer of the functionality. Print out the tests, lock your colleagues in a meeting room, and don't let them go until they say “yes, these tests are enough”. (An instant “yes” only happens with written approval, where people say it just to get rid of you without even reading the tests. In a face-to-face discussion, your colleagues will pour out a tubful of criticism, missed tests, misunderstood requirements, and so on. It is not always pleasant, but it is very useful for testing!)

- Once the tests for a requirement are ready and their completeness has been agreed, the requirement can be given the status “covered by tests” in the system. This status means much more than “there is at least one test”.
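The difference between "there is at least one test" and "covered by tests" can be shown with a small Python sketch that computes both numbers for the text-editor example. The `Requirement` fields, the priority scale, and the rule that both the analyst and the developer must approve are hypothetical illustrations; an RMS would typically store the agreed status as a custom field or workflow state.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    priority: int                                   # 1 = highest (assumed scale)
    tests: list[str] = field(default_factory=list)  # linked test IDs
    agreed: bool = False                            # "covered by tests" status


def _percent(flags):
    flags = list(flags)
    return 100.0 * sum(flags) / len(flags) if flags else 0.0


def naive_coverage(reqs):
    """Percent of requirements with at least one linked test."""
    return _percent(bool(r.tests) for r in reqs)


def agreed_coverage(reqs):
    """Percent of requirements whose test set was agreed to be sufficient."""
    return _percent(r.agreed for r in reqs)


def mark_covered(req, approved_by):
    """Set "covered by tests" only after review (hypothetical sign-off rule)."""
    if not req.tests:
        raise ValueError(f"{req.req_id}: no tests to agree on")
    missing = {"analyst", "developer"} - set(approved_by)
    if missing:
        raise ValueError(f"{req.req_id}: awaiting approval from {sorted(missing)}")
    req.agreed = True


if __name__ == "__main__":
    reqs = [
        Requirement("HTML formatting", 1, ["T-1"]),
        Requirement("Unsupported format pop-up", 2, ["T-2"]),
    ]
    mark_covered(reqs[1], approved_by=["analyst", "developer"])
    print(naive_coverage(reqs), agreed_coverage(reqs))  # 100.0 50.0
```

The naive figure reports full coverage even though the HTML requirement has a single unreviewed test; only the agreed figure reflects that gap.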

Of course, this agreement process takes a lot of resources and time, especially at first, before the practice is established. So apply it only to high-priority requirements and new enhancements. Over time, the remaining requirements will catch up, and everyone will be happy! But... what if there are no requirements at all?

Problem: there are no requirements at all.

There are none on the project: everything is discussed verbally, and everyone does what they want or can, as they understand it. We test the same way. As a result, we get a huge number of problems not only in testing and development, but also features implemented wrongly from the start, because something completely different was wanted! Here I could recommend the option “define and document the requirements yourself”, and I have even used this strategy a couple of times in my practice, but in 99% of cases the testing team does not have the resources for it. So let's take a much less resource-intensive path:

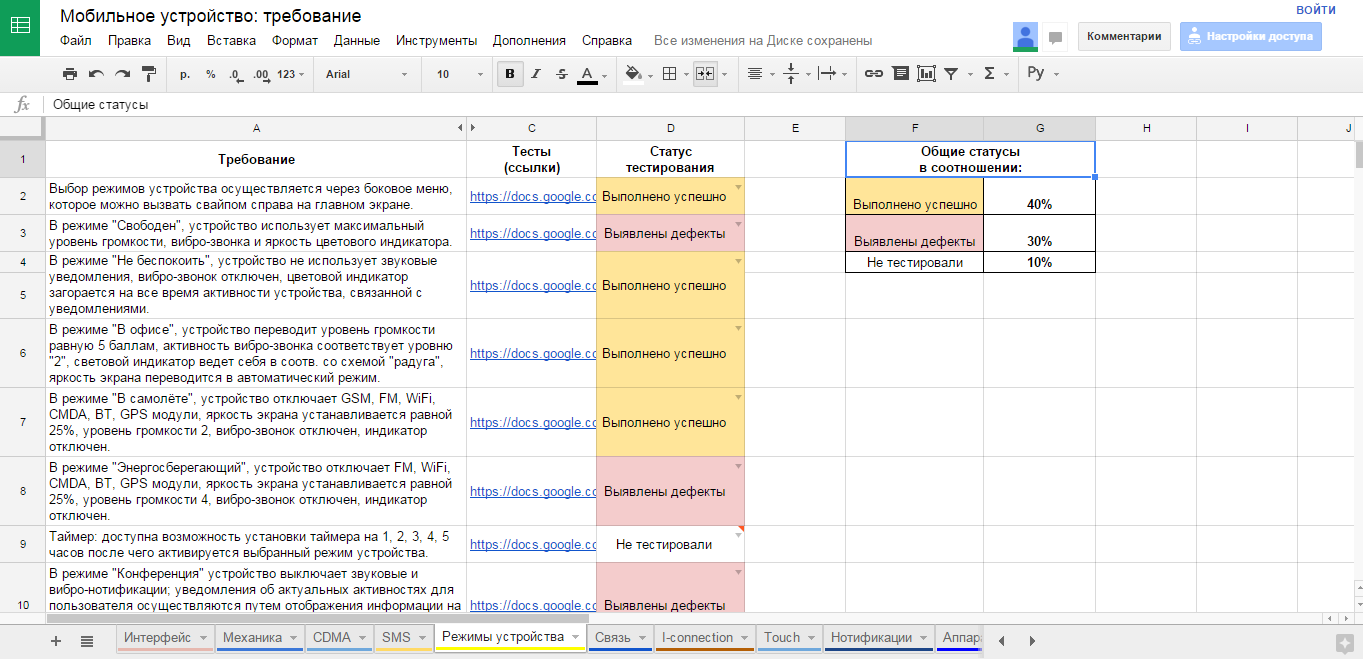

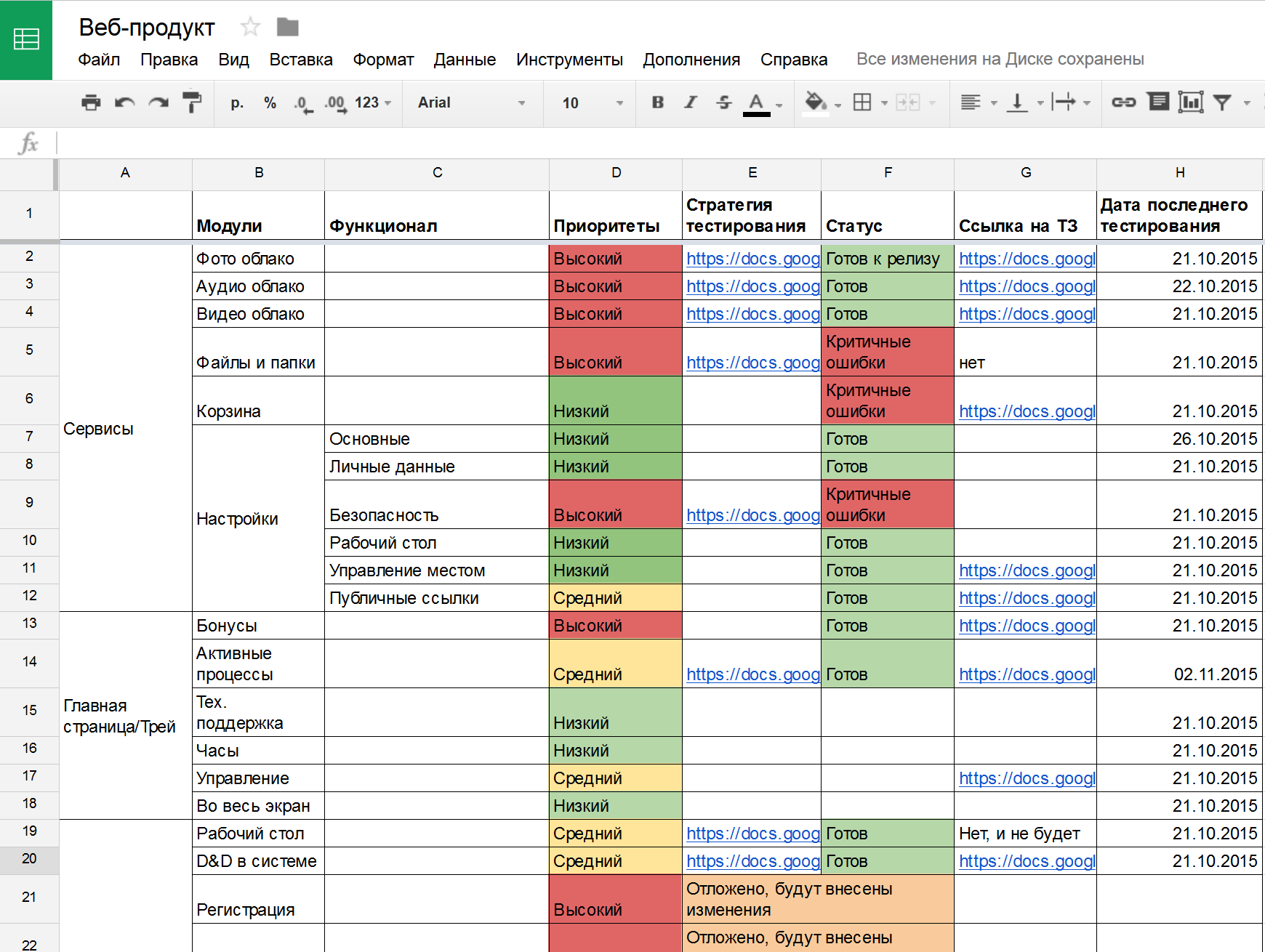

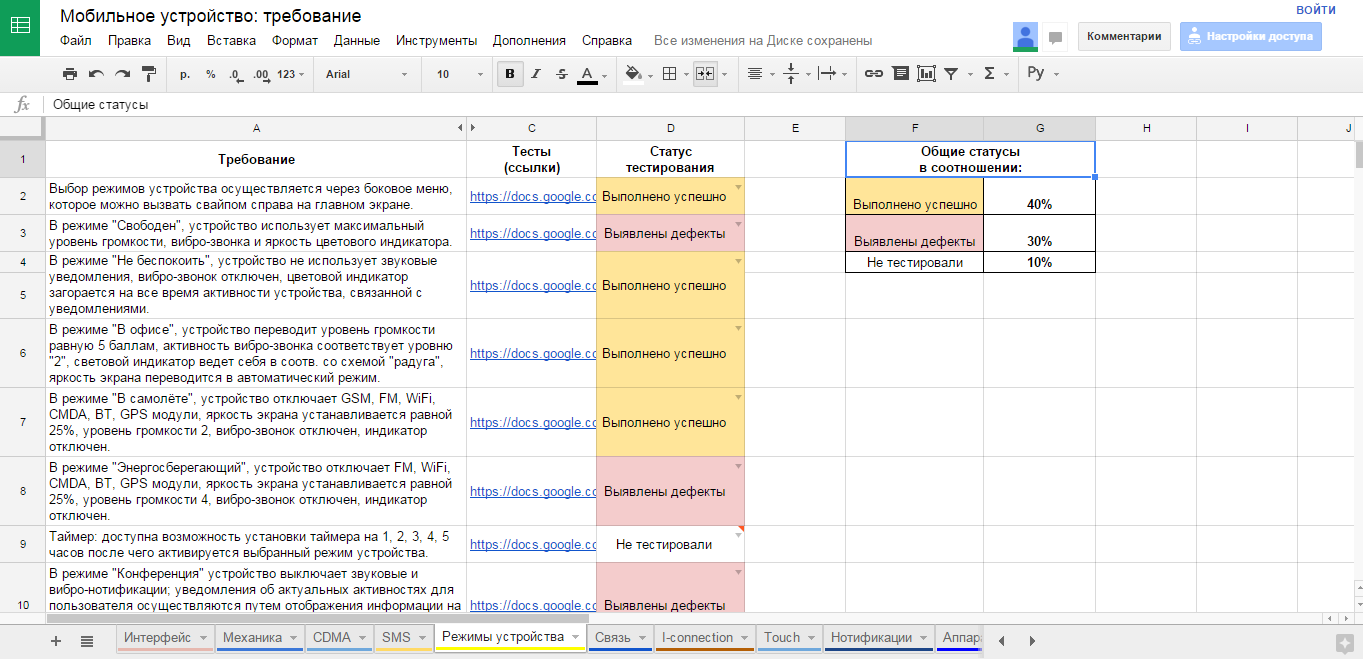

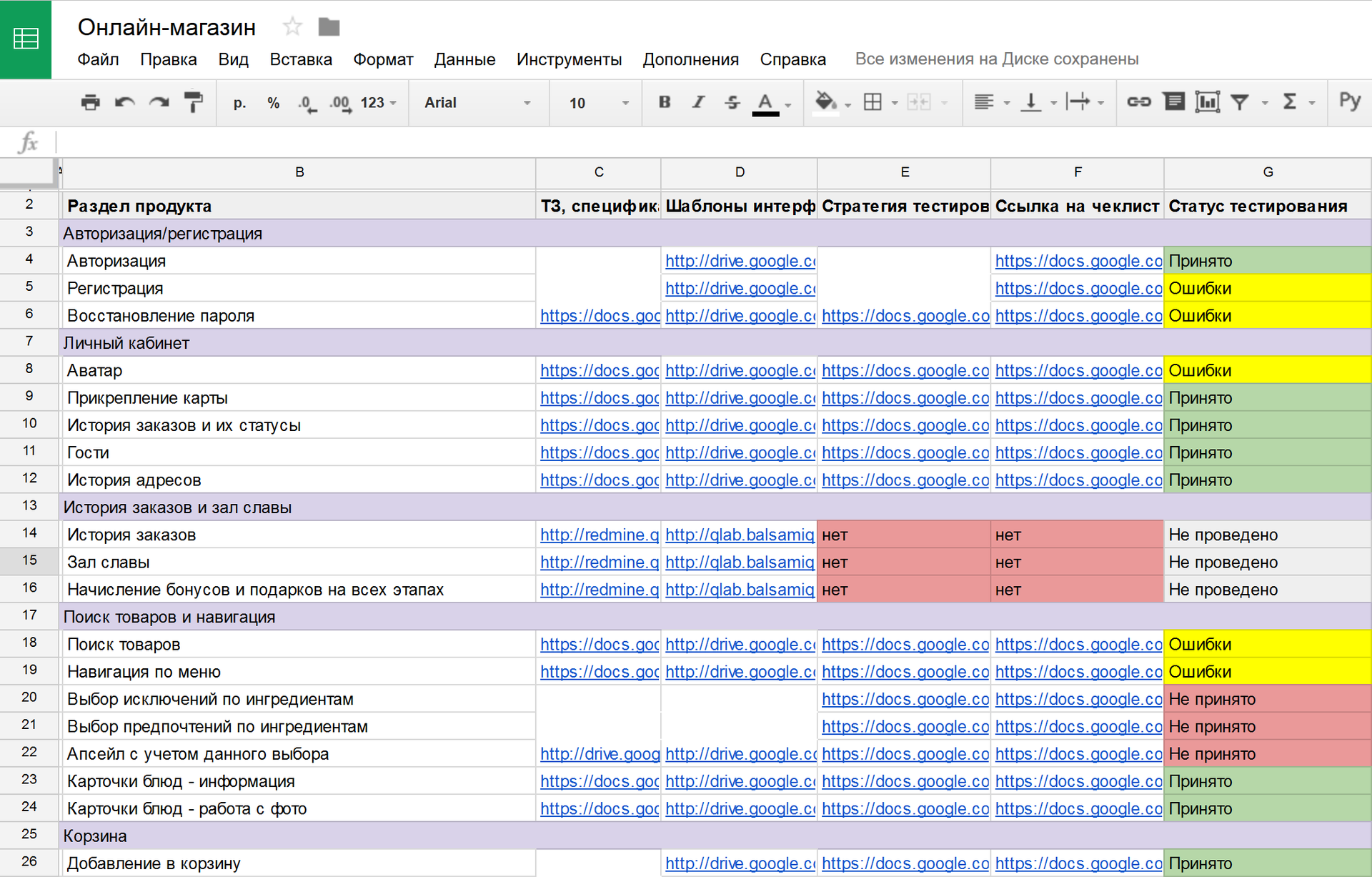

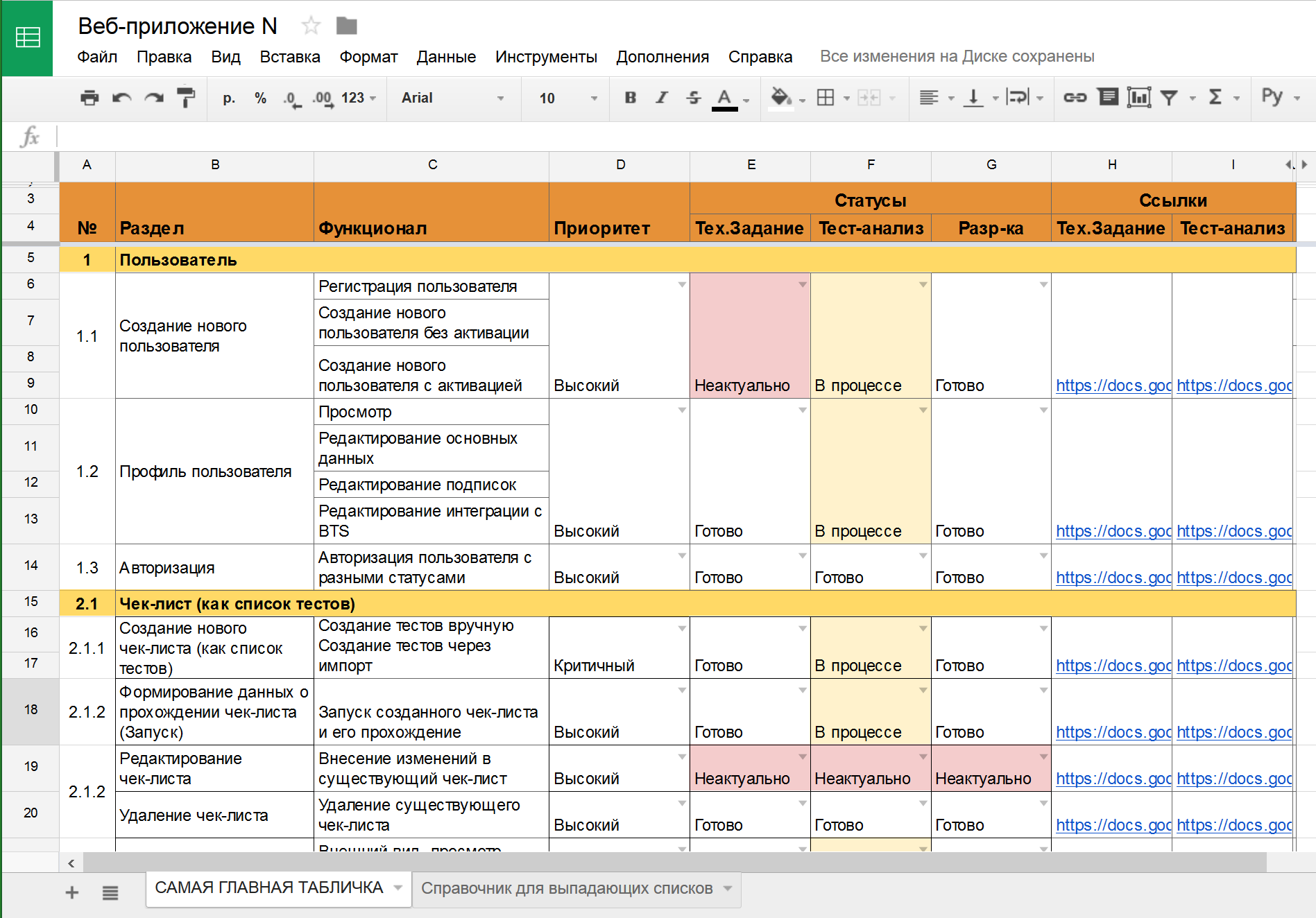

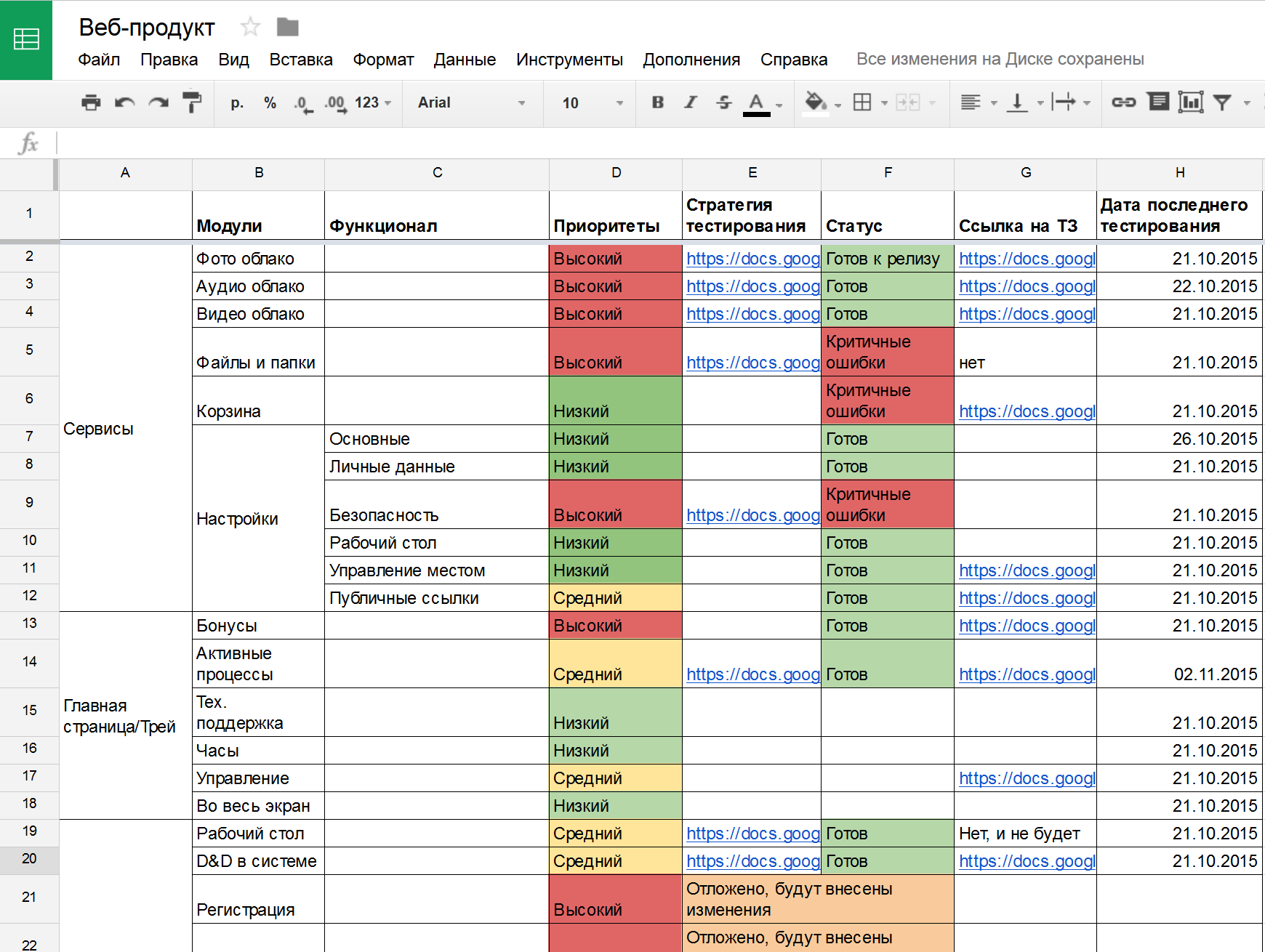

- Create a feature list. Yourself! As a Google Sheet, as PBIs in TFS, whatever you like, as long as it is not plain text: we still need to collect statuses! Add all the functional areas of the product to this list, and try to choose one common level of decomposition (you can list software objects, user scenarios, modules, web pages, API methods, or screen forms...), but not all of them at once! ONE decomposition format makes it easier and clearer not to miss anything important.

- Agree on the COMPLETENESS of this list with analysts, developers, the business, and within your team... Do everything you can not to lose important parts of the product! How deep to take the analysis is up to you. In my practice, only a few products had more than 100 rows in the table, and those were giant products. Most often, 30-50 rows is an achievable result for subsequent thorough processing. In a small team without dedicated test analysts, a longer feature list will be too hard to maintain.

- Then go through the list in order of priority and process each row of the feature list as described in the requirements section above. We write tests, discuss them, and agree on their sufficiency. We mark statuses showing which features have sufficient tests. We get status and progress, and our tests grow through communication with the team. Everyone is happy!
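If the feature list lives in a spreadsheet, exporting it to CSV is enough to get status and progress out of it. The sketch below assumes hypothetical column names (`feature`, `priority`, `status`) and status values; adapt them to whatever your sheet actually uses.

```python
import csv
import io
from collections import Counter

# Hypothetical CSV export of a feature list; the column names and status
# values are assumptions, not a standard format.
FEATURE_LIST_CSV = """feature,priority,status
Login page,1,covered
Search API,1,in review
Report export,2,not started
User settings,3,not started
"""


def load_features(text):
    """Parse the CSV export into a list of dicts, one per feature row."""
    return list(csv.DictReader(io.StringIO(text)))


def progress(features):
    """Count features per status and compute the share marked "covered"."""
    counts = Counter(row["status"] for row in features)
    covered = 100.0 * counts["covered"] / len(features) if features else 0.0
    return counts, covered


def work_queue(features):
    """Features not yet covered, highest priority (1) first."""
    pending = [row for row in features if row["status"] != "covered"]
    return [row["feature"] for row in sorted(pending, key=lambda r: int(r["priority"]))]


if __name__ == "__main__":
    features = load_features(FEATURE_LIST_CSV)
    counts, covered = progress(features)
    print(dict(counts), f"{covered:.0f}% covered")
    print(work_queue(features))
```

Keeping the status in its own column, rather than in free text, is what makes this kind of counting possible.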

But... what if requirements are maintained, just not in a traceable form?

Problem: Requirements are not traceable.

The project has a huge amount of documentation, the analysts type at 400 characters per minute, and you have terms of reference, specifications, instructions, and reference documents (most often at the customer's request). All of it serves as requirements, and everything on the project has long been so tangled that nobody knows where to look for which information.

Repeat the previous section and help the whole team restore order!

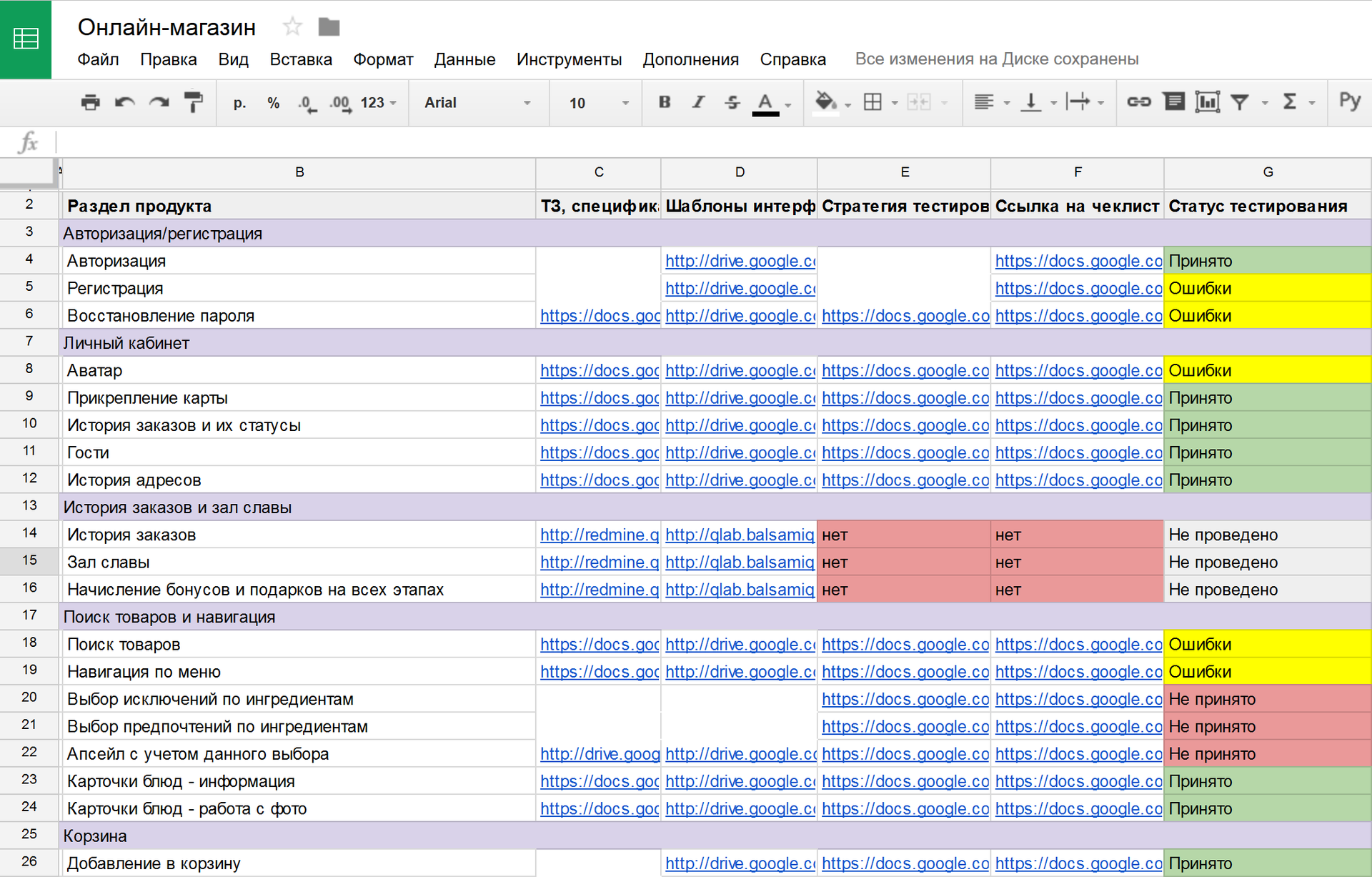

- Create a feature list (see above), but without detailed descriptions of the requirements.

- For each feature, collect links to the terms of reference, specifications, instructions, and other documents.

- Go through the features in order of priority, prepare tests, and agree on their completeness. Everything is the same as before, except that by gathering all the documents in one table we make them easier to find and get transparent statuses and consistent tests. In the end, we are all great, and everyone is happy!
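Gathering the scattered documents per feature is essentially building an index. The Python sketch below uses hypothetical feature names and document identifiers. It flags features that no document describes (likely the places where expected behaviour is least clear) and builds the reverse index, document to features, which tells you what to recheck when a document changes.

```python
from collections import defaultdict

# Hypothetical feature list: feature -> references to the documents
# (terms of reference, specifications, instructions) that describe it.
FEATURE_DOCS = {
    "Invoice export": ["ToR section 4.2", "Spec-Billing v3"],
    "Tax calculation": ["Spec-Billing v3", "Instruction 17"],
    "Audit log": [],
}


def features_without_docs(feature_docs):
    """Features that no document describes yet."""
    return sorted(f for f, docs in feature_docs.items() if not docs)


def features_by_document(feature_docs):
    """Reverse index: document reference -> features it describes."""
    index = defaultdict(list)
    for feature, docs in feature_docs.items():
        for doc in docs:
            index[doc].append(feature)
    return dict(index)


if __name__ == "__main__":
    print(features_without_docs(FEATURE_DOCS))  # ['Audit log']
    print(features_by_document(FEATURE_DOCS)["Spec-Billing v3"])
    # ['Invoice export', 'Tax calculation']
```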

But... not for long... It turns out that last week, in response to customer requests, the analysts updated 4 different specifications!!!

Problem: requirements change all the time.

Of course, it would be nice to test a fixed system, but our products are usually alive. The customer asked for something, something changed in legislation outside our product, and somewhere the analysts found an error in an analysis from the year before last... Requirements live their own lives! What should we do?

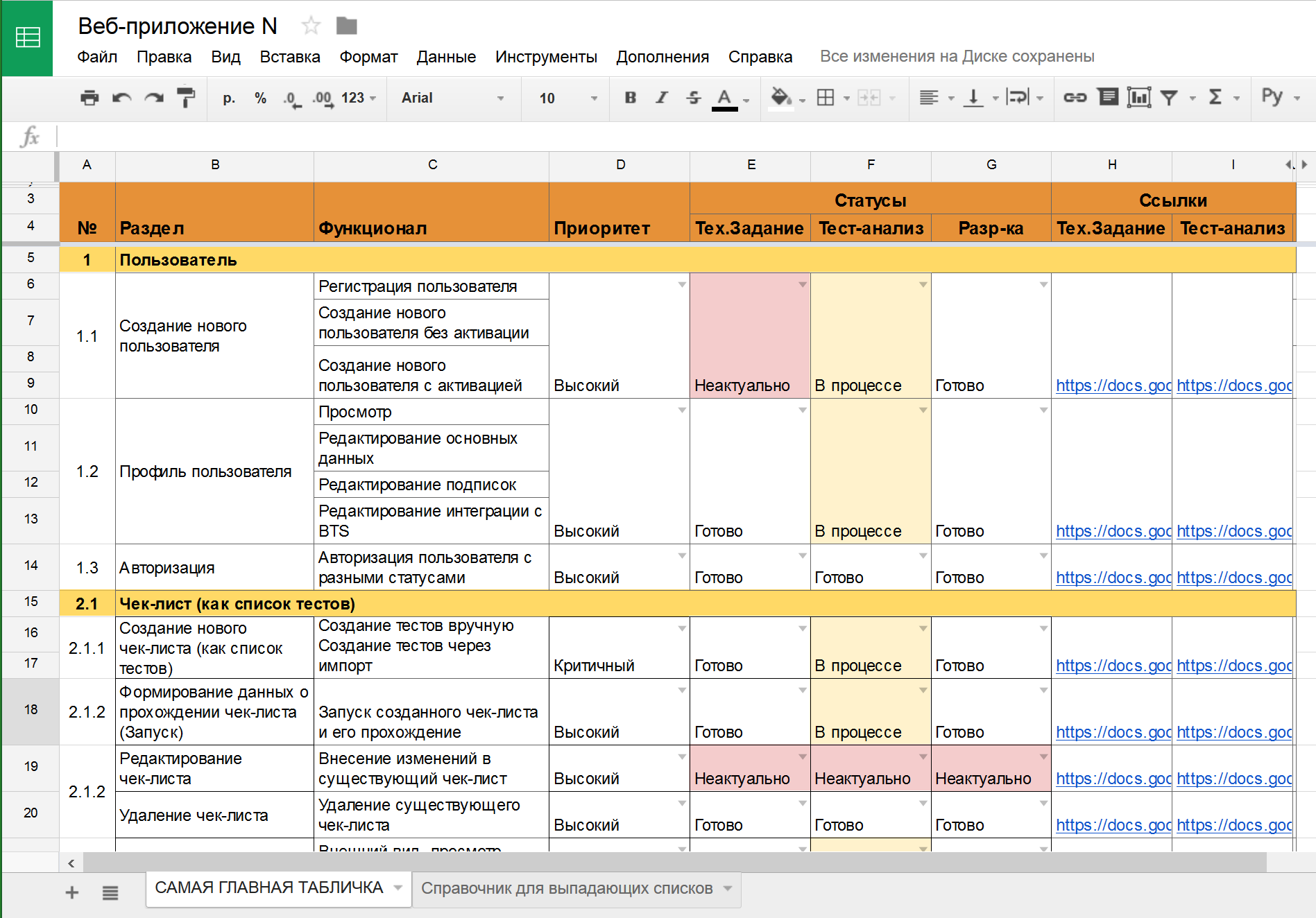

- Suppose you already have links to the terms of reference and specifications in the form of a feature table, PBIs, requirements, Wiki notes, etc. Suppose you already have tests for these requirements. And then a requirement changes! This might show up as a change in the RMS, a task in the TMS (Task Management System), or an email. In every case the consequence is the same: your tests are out of date! Or may be out of date. So they need to be updated (coverage measured against the old version of the product doesn't count for much, does it?).

- In the feature lists, in the RMS, and in the TMS (Test Management System: TestRail, Sitechco, etc.), the tests must be marked as out of date immediately! In HP QC or MS TFS this can happen automatically when requirements are updated; in a Google Sheet or a wiki you have to do it by hand. But it must be visible right away: the tests are out of date! That means going through the full cycle again: update, re-test, rewrite the tests, agree on the changes, and only then mark the feature / requirement as “covered by tests” again.
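The invalidation rule can be sketched in Python: when a requirement changes, every linked test is flagged as out of date and the requirement loses its “covered by tests” status until the tests are updated and agreed again. The classes and the version counter are hypothetical; HP QC and TFS have their own built-in mechanisms, which this sketch does not reproduce.

```python
from dataclasses import dataclass, field


@dataclass
class Test:
    test_id: str
    out_of_date: bool = False


@dataclass
class Requirement:
    req_id: str
    version: int = 1
    covered: bool = False                       # "covered by tests" status
    tests: list[Test] = field(default_factory=list)

    def change(self):
        """A new version of the requirement arrived (RMS edit, task, email)."""
        self.version += 1
        self.covered = False          # coverage of the old version no longer counts
        for test in self.tests:
            test.out_of_date = True   # must be reviewed before it is trusted again

    def reagree(self):
        """Tests were updated, re-run and agreed: restore the status."""
        for test in self.tests:
            test.out_of_date = False
        self.covered = bool(self.tests)


def needs_attention(requirements):
    """Requirements that have at least one out-of-date test."""
    return [r.req_id for r in requirements if any(t.out_of_date for t in r.tests)]


if __name__ == "__main__":
    req = Requirement("REQ-7", covered=True, tests=[Test("T-1"), Test("T-2")])
    req.change()
    print(req.version, req.covered, needs_attention([req]))  # 2 False ['REQ-7']
    req.reagree()
    print(req.covered, needs_attention([req]))  # True []
```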

In this case we get all the benefits of test coverage assessment, and even see how it changes over time! Everyone is happy!!! But…

But you paid so much attention to the requirements that now you have no time left either for testing or for documenting tests. In my opinion (and there is room for a holy war here!), requirements are more important than tests, so it's better this way! At least the requirements are in order, the whole team is up to date, and the developers are building exactly what is needed. BUT THERE IS NO TIME LEFT TO DOCUMENT THE TESTS!

Problem: not enough time to document the tests.

In fact, this problem may be caused not only by a lack of time but also by your quite deliberate choice not to document tests (we don't like it, we want to avoid the pesticide paradox, the product changes too often, etc.). But how do we evaluate test coverage in this case?

- You still need requirements, either as full requirements or as a feature list, so depending on how the analysts work on the project, some of the sections above will still be necessary. Got your requirements / feature list?

- Briefly describe and verbally agree on a testing strategy, without documenting specific tests! The strategy can be recorded in a table column, on a wiki page, or in a requirement in the RMS, and it has to be agreed on as well. Within this strategy the checks will differ from run to run, but you will know when each item was last tested and with which strategy. And that, you must admit, is not bad either! And everyone will be happy.
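Without documented tests, the feature list can still carry an agreed strategy and the date of the last check. The minimal Python sketch below assumes hypothetical fields for the strategy text and the last-tested date, plus an arbitrary 14-day staleness threshold. It sorts features into those with no agreed strategy, those not checked recently, and those that are up to date.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class FeatureRow:
    feature: str
    strategy: str                # short agreed description, "" if none yet
    last_tested: Optional[date]  # None if never tested


def report(rows, today, max_age_days=14):
    """Split features into: no agreed strategy, stale, and up to date.

    max_age_days is an assumed threshold; pick one that fits your release cycle.
    """
    no_strategy, stale, fresh = [], [], []
    limit = today - timedelta(days=max_age_days)
    for row in rows:
        if not row.strategy.strip():
            no_strategy.append(row.feature)
        elif row.last_tested is None or row.last_tested < limit:
            stale.append(row.feature)
        else:
            fresh.append(row.feature)
    return {"no strategy": no_strategy, "stale": stale, "fresh": fresh}


if __name__ == "__main__":
    rows = [
        FeatureRow("Checkout", "exploratory session on main payment paths", date(2024, 5, 20)),
        FeatureRow("Search", "smoke run plus boundary queries", date(2024, 4, 1)),
        FeatureRow("Profile", "", None),
    ]
    print(report(rows, today=date(2024, 5, 27)))
```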

But... what other “buts” are there? What are they???

Tell us, and we'll deal with everything. May quality products be with us!

Source: https://habr.com/ru/post/270365/
