Uploading and converting video at Rutube: from crutches to meta-programming

Just as a theater begins with the cloakroom, a video hosting service begins with uploading and converting video. That is why in our second article we decided to focus on these components of the platform. Luckily, in the early days there was no question about the final format (Flash in the browser had no alternative), and source files were far less varied than they are now; otherwise Rutube's first years would have been even more fun.

But the rest of the upload and conversion system more than made up for that temporary calm.

Teething troubles

The problems started with the actual "physical" upload of source files through the uploader.


In 2009, uploads were handled by nginx with nginx-upload-module, which at the time still supported current versions of the web server and could correctly report file upload progress, but... only within a single machine. And since there was more than one machine (two, in fact), progress reporting worked only every other time.

After uploading, the files were moved to an NFS share, and the corresponding videos in the database were given the status "ready for conversion". The converter copied the file from NFS to a local folder, encoded it to FLV at 700 kbps and 360p, applied the iflv index to it, and uploaded the result via SSH to the FileCluster server (see more about FileCluster here).

Marginal notes: our first mistake was relying on nginx-upload-module's progress tracking, which lived in the memory of a single process.

Even after rewriting the uploader in Twisted, we did not get the stability we wanted: we had to spend a lot of time tuning the hardware load balancer so that progress would not break when a request landed on another server. Sticky sessions would seem to solve the problem, but no: we tried them, and they lead to uneven load across servers. Now upload progress is periodically flushed to Redis and is available from any machine.
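A minimal sketch of the shared-progress idea, assuming a Redis-like store with `set(key, value, ex=...)` and `get(key)` (the shape of redis-py's `Redis.set` and `Redis.get`). The key layout `upload:<id>:progress`, the flush interval and the TTL are hypothetical; an in-memory stand-in replaces Redis so the example runs on its own.

```python
import json
import time
from typing import Dict, Optional, Tuple


class InMemoryStore:
    """Stand-in for a shared Redis instance (same set/get shape as redis-py)."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[str, Optional[float]]] = {}

    def set(self, key: str, value: str, ex: Optional[int] = None) -> bool:
        expires_at = time.monotonic() + ex if ex else None
        self._data[key] = (value, expires_at)
        return True

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        if item is None:
            return None
        value, expires_at = item
        if expires_at is not None and time.monotonic() > expires_at:
            del self._data[key]  # emulate key expiry
            return None
        return value


def progress_key(upload_id: str) -> str:
    # Hypothetical key layout.
    return f"upload:{upload_id}:progress"


class UploadProgress:
    """Runs on whichever uploader process happens to receive the file."""

    def __init__(self, store, upload_id: str, total_bytes: int,
                 flush_interval: float = 1.0, ttl: int = 3600) -> None:
        self.store = store
        self.upload_id = upload_id
        self.total = total_bytes
        self.received = 0
        self.flush_interval = flush_interval
        self.ttl = ttl
        self._last_flush = float("-inf")  # forces a flush on the first update

    def update(self, received_bytes: int) -> None:
        self.received = received_bytes
        now = time.monotonic()
        finished = received_bytes >= self.total
        # Write to the shared store only periodically (and once at the end),
        # not on every received chunk.
        if finished or now - self._last_flush >= self.flush_interval:
            self.flush()
            self._last_flush = now

    def flush(self) -> None:
        payload = json.dumps({"received": self.received, "total": self.total})
        self.store.set(progress_key(self.upload_id), payload, ex=self.ttl)


def read_progress(store, upload_id: str) -> Optional[float]:
    """Callable from any machine: returns a fraction in [0, 1], or None if unknown."""
    raw = store.get(progress_key(upload_id))
    if raw is None:
        return None
    data = json.loads(raw)
    return data["received"] / data["total"] if data["total"] else 0.0
```

Because the state lives in the shared store, the progress endpoint can be served by any machine behind the balancer, and the periodic flush keeps the write load on Redis bounded. With a real server, `redis.Redis(decode_responses=True)` could be passed in place of `InMemoryStore`.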

The clouds are gathering

As the number of videos grew, the converter's scheduler ran into trouble: conversion queues were built on top of status values in the site's main table (track), and this began causing deadlocks and transaction timeouts en masse.

Right at that moment the Beanstalkd queue server came onto our radar, and we decided to use it in the conversion system. In the new version of the uploader, a task was put into beanstalkd as soon as an upload finished. The task was picked up by one of the free workers, which, working closely with the site database, ran the video through the processing algorithms.
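The flow above maps onto beanstalkd's job lifecycle: the uploader `put`s a job into a tube, a free worker `reserve`s it, and the job is then either `delete`d on success or `bury`'d on failure. The sketch below is a toy in-memory model of that lifecycle, not a beanstalkd client; the job payload and the paths are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Dict, Optional, Tuple


class ToyTube:
    """In-memory model of one beanstalkd tube: ready -> reserved -> deleted/buried."""

    def __init__(self) -> None:
        self._next_id = 1
        self.ready: Deque[Tuple[int, dict]] = deque()
        self.reserved: Dict[int, dict] = {}
        self.buried: Dict[int, dict] = {}

    def put(self, body: dict) -> int:
        job_id = self._next_id
        self._next_id += 1
        self.ready.append((job_id, body))
        return job_id

    def reserve(self) -> Optional[Tuple[int, dict]]:
        if not self.ready:
            return None
        job_id, body = self.ready.popleft()
        self.reserved[job_id] = body
        return job_id, body

    def delete(self, job_id: int) -> None:
        self.reserved.pop(job_id)

    def bury(self, job_id: int) -> None:
        # A buried job is parked indefinitely until it is kicked or deleted.
        self.buried[job_id] = self.reserved.pop(job_id)

    def kick(self) -> None:
        # Moves all buried jobs back to ready (real beanstalkd takes a bound).
        for job_id, body in list(self.buried.items()):
            self.ready.append((job_id, body))
        self.buried.clear()


def on_upload_complete(tube: ToyTube, video_id: int, path: str) -> int:
    # Uploader side: enqueue a conversion task once the file is fully received.
    return tube.put({"video_id": video_id, "path": path})


def worker_step(tube: ToyTube, process: Callable[[dict], None]) -> bool:
    """One iteration of a free worker. Returns False when there is no work."""
    job = tube.reserve()
    if job is None:
        return False
    job_id, body = job
    try:
        process(body)
    except Exception:
        tube.bury(job_id)  # keep the failed job around for inspection
    else:
        tube.delete(job_id)
    return True
```

As in beanstalkd itself, a buried job in this model stays alive until someone kicks or deletes it.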

Marginal notes: we now know that Beanstalkd is not a good choice when task processing has to be reliable. Apart from the binlog, it has no means of backup or replication, and the binlog itself comes "with surprises".

For example, on a busy server the simplest DoS is to put one (!) task into a queue that nobody listens to. The binlog will then keep growing until it fills all available disk space, or until "that very task" is removed from the queue. Our record is 170 GB. And all it took was one task in the Buried state in a "working" queue, postponed forever. Had beanstalkd crashed, it would hardly have come back up with a binlog like that.

Meanwhile, life did not stand still: it brought new viewing formats and devices, as well as new kinds of source files. In 2011 we faced a switch from RTMP to HDS and, accordingly, from our original iflv format to MP4. A "de-indexer" was added to the system; it turned iflv back into a normal, "machine-readable" FLV, which was then repackaged into MP4.
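The article does not say which tool did the repackaging. Purely as an illustration, and assuming ffmpeg, remuxing FLV into MP4 without re-encoding is a stream copy; the helper below only builds the argument list (`-c copy` copies the streams, `-movflags +faststart` moves the MP4 index to the start of the file).

```python
import shlex
import subprocess
from typing import List


def remux_flv_to_mp4_cmd(src: str, dst: str) -> List[str]:
    """Build an ffmpeg command that repackages FLV into MP4 without re-encoding."""
    return [
        "ffmpeg",
        "-y",                       # overwrite dst if it already exists
        "-i", src,                  # input: the de-indexed FLV
        "-c", "copy",               # copy audio/video streams as-is
        "-movflags", "+faststart",  # put the moov atom at the start of the file
        dst,
    ]


def remux(src: str, dst: str) -> None:
    # Raises CalledProcessError if ffmpeg exits with a non-zero status.
    subprocess.run(remux_flv_to_mp4_cmd(src, dst), check=True)


if __name__ == "__main__":
    print(shlex.join(remux_flv_to_mp4_cmd("clip.flv", "clip.mp4")))
```

A stream copy only works when the FLV already carries codecs that MP4 accepts, such as H.264 and AAC; anything else needs a real re-encode.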

Marginal notes: UGC is a complete test suite in itself: video without audio, audio with thumbnails, and simply "broken" files.

If earlier the originality was limited to Flash cartoons à la "Masyanya" and PowerPoint presentations, now things are much more fun. We have seen files whose metadata specified both an audio offset relative to the video and a video offset relative to the audio at the same time, and the two were different!

It became fashionable not just to shoot vertical video (that would be fine), but to change the orientation several times during recording and then, before uploading, edit the poor video file in half-finished shareware editors.

Advice: if that is not extreme enough for you, run a creative video contest.
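Cases like these are usually caught by inspecting stream metadata before choosing a processing path. A sketch, assuming the JSON produced by `ffprobe -v error -print_format json -show_streams <file>`, where each stream has a `codec_type`, optionally a `start_time` string, and a `disposition` map in which `attached_pic` marks cover art. The category names are hypothetical, and treating "no streams" as broken is a simplification.

```python
from typing import Any, Dict, List


def classify_source(probe: Dict[str, Any]) -> Dict[str, Any]:
    streams: List[Dict[str, Any]] = probe.get("streams", [])
    # Cover art in audio files shows up as a video stream flagged attached_pic.
    real_video = [s for s in streams if s.get("codec_type") == "video"
                  and not s.get("disposition", {}).get("attached_pic")]
    cover_art = [s for s in streams if s.get("codec_type") == "video"
                 and s.get("disposition", {}).get("attached_pic")]
    audio = [s for s in streams if s.get("codec_type") == "audio"]

    if real_video and audio:
        kind = "video+audio"
    elif real_video:
        kind = "video-only"
    elif audio and cover_art:
        kind = "audio-with-thumbnail"
    elif audio:
        kind = "audio-only"
    else:
        kind = "broken"

    result: Dict[str, Any] = {"kind": kind}
    # Start-time difference between the first audio and first video stream, if known.
    if real_video and audio:
        v_start = real_video[0].get("start_time")
        a_start = audio[0].get("start_time")
        if v_start is not None and a_start is not None:
            result["av_offset"] = float(a_start) - float(v_start)
    return result
```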

These changes in the surrounding reality made the code that works around all these quirks and glitches grow heavily.

Meta-programming to the rescue

Soon the whole mass of crutches and logical branches no longer fit into a developer's head as code, and it was rewritten using meta-programming on graphs.

As tumbler tells it:

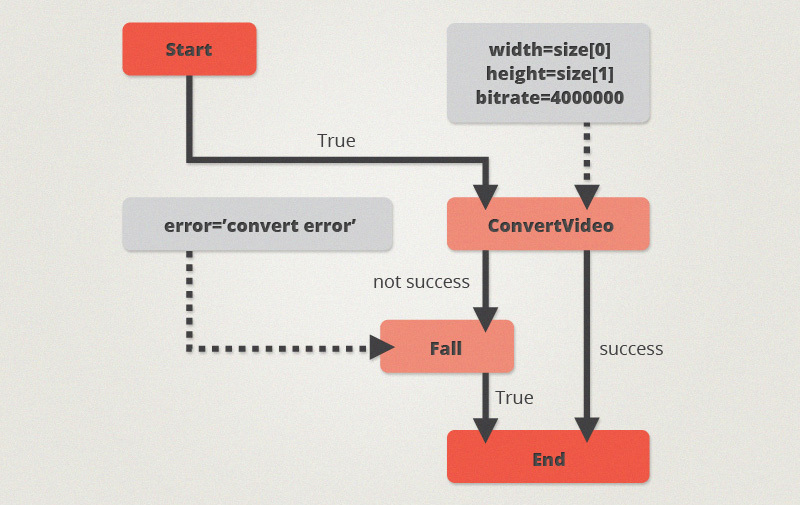

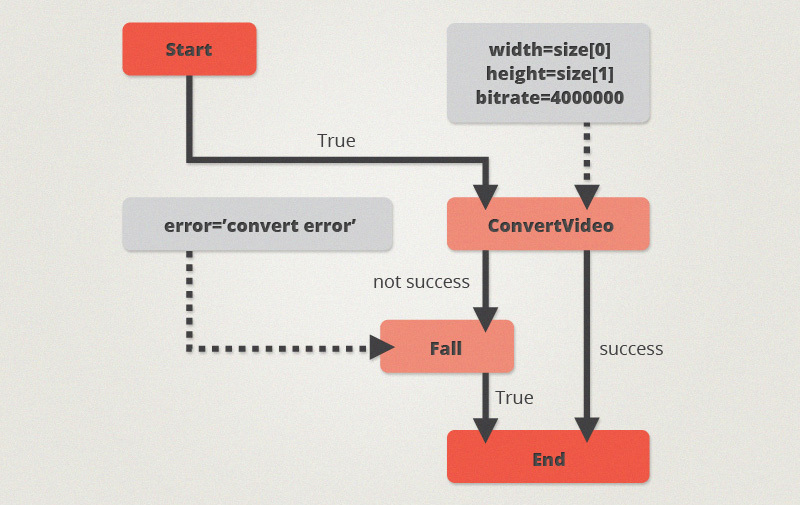

- Actually, it went a bit differently. At first, the entire file processing algorithm was drawn by hand in the yEd editor, simply to understand it and to make it easy to understand. The result was a large XML file, essentially a description of a directed graph with text data attached to its vertices and edges.

Then came the idea not to hand-code from the graph, but to generate a Python state machine from it, whose result would be a set of side effects: video files and images. The graph's vertices became the names of the functions to call, and its edges became conditional transitions.

Function arguments also fit neatly onto the graph (as vertices of a different type).
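A simplified sketch of the idea: vertices name functions, edges carry conditions, and argument vertices supply keyword arguments. Unlike the approach described, it interprets the graph at runtime instead of generating Python source, and every function, condition and vertex name is hypothetical; side effects are represented by appending output names to a list.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Context = Dict[str, Any]

# Hypothetical graph, as it might be extracted from the diagram:
# action vertex -> ordered outgoing edges (condition name, target vertex).
EDGES: Dict[str, List[Tuple[str, str]]] = {
    "probe": [("has_video", "encode_video"), ("always", "make_images")],
    "encode_video": [("always", "make_images")],
    "make_images": [],  # no outgoing edges: terminal vertex
}
# Argument vertices attached to action vertices become keyword arguments.
ARGS: Dict[str, Dict[str, Any]] = {
    "encode_video": {"height": 360, "bitrate_k": 700},
    "make_images": {"count": 3},
}


def probe(ctx: Context) -> None:
    # Toy check; a real probe would inspect the file's streams.
    ctx["has_video"] = ctx["source"].endswith(".avi")


def encode_video(ctx: Context, height: int, bitrate_k: int) -> None:
    ctx["outputs"].append(f"video_{height}p_{bitrate_k}k.flv")


def make_images(ctx: Context, count: int) -> None:
    ctx["outputs"].extend(f"thumb_{i}.jpg" for i in range(count))


ACTIONS: Dict[str, Callable[..., None]] = {
    "probe": probe, "encode_video": encode_video, "make_images": make_images,
}
CONDITIONS: Dict[str, Callable[[Context], bool]] = {
    "always": lambda ctx: True,
    "has_video": lambda ctx: bool(ctx.get("has_video")),
}


def run(start: str, ctx: Context, max_steps: int = 100) -> Context:
    state: Optional[str] = start
    steps = 0
    while state is not None:
        if steps >= max_steps:
            raise RuntimeError("too many steps; is there a cycle in the graph?")
        ACTIONS[state](ctx, **ARGS.get(state, {}))
        # Follow the first outgoing edge whose condition holds, or stop.
        state = next((dst for cond, dst in EDGES[state] if CONDITIONS[cond](ctx)), None)
        steps += 1
    return ctx
```

For example, `run("probe", {"source": "clip.avi", "outputs": []})` walks probe, encode_video and make_images, while an audio-only source skips straight to make_images.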

Marginal notes: unlike the code, the entire graph fit comfortably on two A4 sheets and was quite convenient to modify and analyze. The only drawback we have not overcome so far is that changes to the graph cannot be reviewed with existing tools.
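yEd's native format is GraphML, so a diagram like this can be read back with the standard library alone. A sketch, assuming the labels are stored in yEd's `y:NodeLabel` and `y:EdgeLabel` elements (as yEd typically writes them); it returns vertices and edges with their text labels, the raw material a graph-to-state-machine generator starts from. For a file on disk, `ET.parse(path).getroot()` would replace `ET.fromstring`.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

NS = {
    "g": "http://graphml.graphdrawing.org/xmlns",
    "y": "http://www.yworks.com/xml/graphml",
}


def _label(elem: ET.Element, tag: str) -> str:
    parts = []
    # Look only at this element's own <data> children, not at nested graphs.
    for data in elem.findall("g:data", NS):
        for lbl in data.iter(f"{{{NS['y']}}}{tag}"):
            text = (lbl.text or "").strip()
            if text:
                parts.append(text)
    return " ".join(parts)


def load_yed_graph(xml_text: str) -> Tuple[Dict[str, str], List[Tuple[str, str, str]]]:
    root = ET.fromstring(xml_text)
    nodes = {
        n.get("id"): _label(n, "NodeLabel")
        for n in root.iterfind(".//g:node", NS)
    }
    edges = [
        (e.get("source"), e.get("target"), _label(e, "EdgeLabel"))
        for e in root.iterfind(".//g:edge", NS)
    ]
    return nodes, edges
```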

With the arrival of multi-bitrate support, the conversion management system faced even more difficulties: one video now had to be processed on several servers at once, the list of output qualities had to be chosen by tricky conditions depending on the source file's characteristics, and, with no coordinating center, logical problems with synchronizing parallel tasks became apparent.
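The article does not give the actual selection rules. As a hypothetical illustration of choosing a list of qualities from the source's characteristics: a fixed ladder, filtered so that nothing is upscaled and no rendition gets much more bitrate than the source has. The ladder values and the 1.5x headroom factor are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int
    video_kbps: int


# Hypothetical ladder, lowest to highest.
LADDER = [
    Rendition("240p", 240, 400),
    Rendition("360p", 360, 700),
    Rendition("480p", 480, 1200),
    Rendition("720p", 720, 2500),
    Rendition("1080p", 1080, 4500),
]


def select_renditions(src_height: int, src_kbps: int, headroom: float = 1.5) -> List[Rendition]:
    chosen = [
        r for r in LADDER
        if r.height <= src_height                # never upscale
        and r.video_kbps <= src_kbps * headroom  # don't inflate a bitrate-starved source
    ]
    # Fall back to the lowest rung (which may upscale tiny sources) so
    # every upload ends up playable.
    return chosen or [LADDER[0]]
```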

But all this was child's play compared to the challenges the Licensing Department threw at the upload and conversion system: support for various DRM schemes; custom conversion parameters and priorities; re-converting existing videos; recording VOD from live streams; uploading files in professional formats such as MXF (accompanied by a file marking up logical pauses that must be cut out during conversion); sending conversion results not only to Rutube storage but also to external archive servers; adding logos to the video stream. Meanwhile, the creative contest started its 8th season, and the exquisite experiments with source formats continued.
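For the MXF case, the accompanying file marks pauses that must be removed. Its format is not described, so assume it boils down to (start, end) pairs in seconds; the conversion then needs the complementary intervals to keep. A sketch of that step:

```python
from typing import List, Tuple

Interval = Tuple[float, float]


def keep_intervals(duration: float, pauses: List[Interval]) -> List[Interval]:
    """Return the parts of [0, duration] not covered by any pause."""
    # Clip pauses to the clip bounds, drop empty ones, and sort by start time.
    clipped = sorted(
        (max(0.0, s), min(duration, e))
        for s, e in pauses
        if min(duration, e) > max(0.0, s)
    )
    keep: List[Interval] = []
    cursor = 0.0
    for start, end in clipped:
        if start > cursor:
            keep.append((cursor, start))
        cursor = max(cursor, end)  # overlapping pauses merge naturally
    if cursor < duration:
        keep.append((cursor, duration))
    return keep
```

For a 100-second clip with pauses at 10 to 20, 15 to 30 and 90 to 120 seconds, this keeps 0 to 10 and 30 to 90; those intervals could then drive the trim-and-concatenate step of the encoder.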

It became clear that meta-programming alone would no longer save us: more refactoring was needed!

Current architecture

At this point, all management logic has been cut out of the converter, which now deals only with conversion itself. The conversion process is managed by the DUCK service: Download, Upload, Convert King.

At the request of various subsystems, DUCK creates sessions, puts tasks into Celery, monitors server health and load and, most importantly, handles all events coming from tasks, mainly to track errors. The result is a fully separate service you can tell: "Hey dude, take the file from "here", make "this" out of it and put it "there". When you're done, ping this link."
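A hypothetical shape for such a request, covering "take the file from here, make this out of it, put it there, then ping this link". None of these field names come from DUCK itself; they only illustrate the contract, and the accepted source schemes follow the FTP/HTTP downloads mentioned in the text.

```python
from dataclasses import dataclass, field
from typing import List
from urllib.parse import urlparse


@dataclass
class ConversionRequest:
    source_url: str          # "take the file from here"
    profile: str             # "make this out of it", e.g. a named preset
    destinations: List[str]  # "put it there": storage or archive URLs
    callback_url: str        # "when you're done, ping this link"
    priority: int = 0        # larger means sooner (hypothetical convention)
    options: dict = field(default_factory=dict)

    def validate(self) -> None:
        if urlparse(self.source_url).scheme not in {"http", "https", "ftp"}:
            raise ValueError("source must be an HTTP(S) or FTP URL")
        if not self.destinations:
            raise ValueError("at least one destination is required")
        if urlparse(self.callback_url).scheme not in {"http", "https"}:
            raise ValueError("callback must be an HTTP(S) URL")
```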

After receiving a task, the converter launches DUCK workers on the processing servers and "replaces" the empty bodies of the Celery tasks with the actual processing code.

For example, the "duck.download" task code handles downloading over FTP/HTTP and watches for errors and timeouts, while the "duck.encode.images" code creates screenshots from source files.
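One way to read "replacing the empty bodies": the managing side knows tasks only by name (in Celery a task can be sent by name, for instance with `app.send_task`, without importing its code), while the processing servers register real implementations under the same names. The toy registry below models that split in plain Python; it is not Celery's API. The task names come from the text, everything else is an assumption.

```python
from typing import Any, Callable, Dict


class TaskRegistry:
    """Toy model: task names are declared centrally, bodies are bound per worker."""

    def __init__(self) -> None:
        self._impl: Dict[str, Callable[..., Any]] = {}

    def declare(self, name: str) -> None:
        # Stub with an "empty body": calling it before binding is an error.
        def stub(*args: Any, **kwargs: Any) -> Any:
            raise NotImplementedError(f"{name} has no implementation on this worker")
        self._impl[name] = stub

    def implement(self, name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        # Decorator used on processing servers to replace the stub's body.
        if name not in self._impl:
            raise KeyError(f"unknown task {name!r}")

        def bind(func: Callable[..., Any]) -> Callable[..., Any]:
            self._impl[name] = func
            return func
        return bind

    def call(self, name: str, *args: Any, **kwargs: Any) -> Any:
        return self._impl[name](*args, **kwargs)


registry = TaskRegistry()
for task_name in ("duck.download", "duck.encode.images", "duck.cleanup"):
    registry.declare(task_name)


@registry.implement("duck.download")
def download(url: str) -> str:
    # Real code would fetch over FTP/HTTP with error and timeout handling;
    # here we only compute a local path.
    return "/tmp/" + url.rsplit("/", 1)[-1]
```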

All these tasks are wrapped in "chain" and "chord" from the "celery.canvas" module, and the "celery.Task" base class has gained extra functionality: under certain conditions, a long chain can jump straight to its final part, "duck.cleanup". This avoids running every remaining task in the chain if, for example, it turns out at the video-creation stage that the file is broken.

All unknown errors, on the other hand, automatically land in the WTF queue. Developers go through it (such cases are usually few, but interesting), and each case either becomes a bug in the bug tracker or has its tasks re-run from the beginning of the chain (handy, for example, when the server processing the file has "died").
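The chain behavior described above, a shortcut to `duck.cleanup` for known fatal conditions and a WTF queue for everything unexpected, can be modeled without Celery. In real Celery the steps would be signatures combined with `chain` and `chord`; here they are plain functions, and the exception class, the `duck.encode.video` name and the WTF record format are hypothetical. Whether cleanup also runs for unknown errors is not stated, so this sketch leaves such chains untouched for inspection.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Dict[str, Any]], None]]


class SkipToCleanup(Exception):
    """Raised by a step for known, expected failures (e.g. a broken source file)."""


def run_chain(steps: List[Step], cleanup: Step, ctx: Dict[str, Any],
              wtf_queue: List[Dict[str, Any]]) -> str:
    for name, func in steps:
        try:
            func(ctx)
        except SkipToCleanup as exc:
            # Known problem: skip the rest of the chain, go straight to cleanup.
            ctx["error"] = f"{name}: {exc}"
            break
        except Exception as exc:
            # Unknown problem: park the whole chain so a developer can inspect it
            # and, if appropriate, replay it from the first step.
            wtf_queue.append({"failed_step": name, "error": repr(exc),
                              "chain": [n for n, _ in steps]})
            return "wtf"
    cleanup[1](ctx)
    return "failed" if "error" in ctx else "done"
```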

DUCK tracks task execution with Celery "cameras": a conversion session changes its status in the database depending on the events arriving at the "camera", and errors and task progress are handled automatically. The most important events, however, such as recording that a processed file was successfully saved to FileHeap, go through RPC, so that no files are lost.
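A plain-Python sketch of the status bookkeeping such a camera might do. Celery worker events do have types such as `task-started`, `task-succeeded` and `task-failed` and carry the task's `uuid`; the `Session` class, its status values and the task-to-session mapping are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Session:
    task_ids: Set[str]
    status: str = "queued"
    done: Set[str] = field(default_factory=set)
    errors: Dict[str, str] = field(default_factory=dict)

    @property
    def progress(self) -> float:
        return len(self.done) / len(self.task_ids) if self.task_ids else 1.0


class SessionTracker:
    def __init__(self) -> None:
        self.sessions: Dict[str, Session] = {}
        self.task_to_session: Dict[str, str] = {}

    def open(self, session_id: str, task_ids: Set[str]) -> None:
        self.sessions[session_id] = Session(set(task_ids))
        for tid in task_ids:
            self.task_to_session[tid] = session_id

    def on_event(self, event: Dict[str, str]) -> None:
        session_id = self.task_to_session.get(event.get("uuid", ""))
        if session_id is None:
            return  # not a task we track
        s = self.sessions[session_id]
        kind = event["type"]
        if kind == "task-started" and s.status == "queued":
            s.status = "running"
        elif kind == "task-succeeded":
            s.done.add(event["uuid"])
            if s.done == s.task_ids and not s.errors:
                s.status = "finished"
        elif kind == "task-failed":
            s.errors[event["uuid"]] = event.get("exception", "unknown error")
            s.status = "failed"
```

A monitor that is down can miss events, so bookkeeping of this kind suits progress and error tracking, which is consistent with routing critical facts such as a successful save through RPC instead.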

DUCK also stores conversion settings for different users. These include priorities (for example, highlights from live sports broadcasts must be available for viewing immediately, while an archive of past years can be filled in the background over several weeks) and limits on the number of videos being processed and converted. The settings can also specify custom conversion parameters for each quality (need 4K? Welcome!), whether to trim "black bars" (a common problem with "television" sources), normalize the audio, add a logo to the video stream, and automatically "cut out" unneeded fragments.
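A hypothetical sketch of per-user settings layered over defaults: a priority, a limit on parallel jobs, per-quality overrides and extra qualities such as 4K. The field names and default values are made up for illustration.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class QualitySettings:
    height: int
    video_kbps: int
    crop_black_bars: bool = False
    normalize_audio: bool = False
    logo_path: Optional[str] = None


DEFAULT_QUALITIES: Dict[str, QualitySettings] = {
    "360p": QualitySettings(360, 700),
    "720p": QualitySettings(720, 2500),
}


@dataclass
class UserSettings:
    priority: int = 0                        # e.g. live highlights high, archive low
    max_parallel_jobs: Optional[int] = None  # None means no per-user limit
    quality_overrides: Dict[str, dict] = field(default_factory=dict)
    extra_qualities: Dict[str, QualitySettings] = field(default_factory=dict)

    def effective_qualities(self) -> Dict[str, QualitySettings]:
        merged = dict(DEFAULT_QUALITIES)     # copy, so defaults stay untouched
        merged.update(self.extra_qualities)  # e.g. add "2160p" for a 4K customer
        for name, changes in self.quality_overrides.items():
            merged[name] = replace(merged[name], **changes)
        return merged
```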

Of course, we would like to put a front end over these user-editing capabilities and make them available to everyone (we are gradually doing this as part of the Dashboard project), but for now the immediate goal is to speed up conversion significantly: by almost 10 times, according to preliminary estimates. We will report on the results!

Source: https://habr.com/ru/post/270223/
