Pytest

Foreword

By background I am an SQL developer. Fate, however, brought me to Big Data, and from there things took a turn: I picked up Java, Python, and functional programming (Scala is next on the list). On one part of the project I needed to test Python code. The QA team recommended PyTest for this, but even they could not really explain how to handle this beast. Unfortunately, there is not much information on the subject in the Russian-speaking segment of the internet: an article on how it is used at Yandex, and little else. What that article describes also looks rather difficult for someone just starting out. The same goes for the official documentation: it only began to make sense to me after I had figured out the module from other sources. I do not deny that it contains interesting material, but unfortunately it is not written for beginners.

Python unit testing

I see no point in explaining what it is and why it is needed; Wikipedia knows more anyway. The existing testing modules for Python are well described on Habr.

Introduction to the necessary knowledge

At the time described, my knowledge of Python was rather superficial: I had written a few simple modules and knew the standard things. When I ran into PyTest, I had to fill in the gaps on decorators and on the yield construct.

Advantages and disadvantages of PyTest

1) Independence from an API (no boilerplate). Here is how the code looks in unittest:


    import unittest


    class TestUtilDate(unittest.TestCase):
        def setUp(self):
            # init_something()
            pass

        def tearDown(self):
            # teardown_something()
            pass

        def test_upper(self):
            self.assertEqual('foo'.upper(), 'FOO')

        def test_isupper(self):
            self.assertTrue('FOO'.isupper())

        def test_failed_upper(self):
            # Fails on purpose to show what a failure looks like.
            self.assertEqual('foo'.upper(), 'FOo')


    if __name__ == '__main__':
        suite = unittest.TestLoader().loadTestsFromTestCase(TestUtilDate)
        unittest.TextTestRunner(verbosity=2).run(suite)

The same thing in PyTest:


    import pytest


    def setup_module(module):
        # init_something()
        pass


    def teardown_module(module):
        # teardown_something()
        pass


    def test_upper():
        assert 'foo'.upper() == 'FOO'


    def test_isupper():
        assert 'FOO'.isupper()


    def test_failed_upper():
        # Fails on purpose to show what a failure looks like.
        assert 'foo'.upper() == 'FOo'

2) Detailed report, including export to JUnit XML (for integration with Jenkins). The report itself can be changed (including its colors) with additional modules, which are discussed separately below. In general, the colored report in the console is more convenient: the red FAILED entries are immediately visible.

3) Convenient assert (the standard Python one). You do not have to remember a whole pile of different assertion methods.

4) Dynamic fixtures of all levels, which can be called both automatically and for specific tests.

5) Additional fixture options (return value, finalizers, scope, request object, auto-use, nested fixtures)

6) Parameterization of tests, that is, running the same test with different sets of parameters. Strictly speaking, this belongs to item 5, "Additional fixture options", but the feature is so good that it deserves an item of its own.

7) Marks, which let you skip a test, mark a test as expected to fail (useful during development), or simply name a set of tests so that it can be run on its own by that name.

8) Plugins. A fairly large list of additional modules is available for PyTest, each installed separately.

9) The ability to run tests written for unittest and nose, that is, backward compatibility with them.
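Item 3 can be illustrated with a small sketch that is not from the original article. Each test uses the plain assert statement, and the comment names the unittest method it would otherwise need:

```python
def test_equality():
    assert "foo".upper() == "FOO"        # unittest: assertEqual


def test_truth():
    assert "FOO".isupper()               # unittest: assertTrue


def test_membership():
    assert "b" in ["a", "b", "c"]        # unittest: assertIn


def test_identity():
    assert {}.get("missing") is None     # unittest: assertIsNone
```

The function names are made up for the illustration; PyTest collects them because they start with test_.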

There are few disadvantages, but they exist:

1) No additional level of nesting. Tests have a level for modules, classes, methods, and functions. But logically one more level, the test case, is needed, since a single function can have several test cases (for example, one checking returned values and one checking errors). The pytest-describe plugin partially compensates for this, but then the matching fixture level (scope="describe") is missing. You can live with this, of course, but in some situations it goes against the main principle of PyTest: "everything for simplicity and convenience".

2) The module has to be installed separately, including in production. unittest and doctest, by contrast, are part of the Python standard library and need no extra steps.

3) Using PyTest requires a little more knowledge of Python than unittest does (see "Introduction to the necessary knowledge").
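One common way to approximate the missing test-case level from item 1 is to group all test cases for one function in a class. This is only a sketch of that workaround, not something the original article proposes; the divide function and the class name are hypothetical:

```python
import pytest


def divide(a, b):
    """Hypothetical function under test."""
    if b == 0:
        raise ValueError("divisor must not be zero")
    return a / b


class TestDivide:
    """All test cases for divide() live in one class."""

    def test_returns_quotient(self):
        # Test case 1: returned values.
        assert divide(6, 3) == 2

    def test_rejects_zero_divisor(self):
        # Test case 2: errors.
        with pytest.raises(ValueError):
            divide(1, 0)
```

The class gives the test cases a common name in the report, and a class-level fixture can serve them all, though it is still not a separate nesting level.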

A detailed description of the module and its capabilities follows.

Running tests

With unittest, the module's main block is used, so the tests are launched with "python unittest_example.py". To run a set of tests, you have to combine them into a TestSuite yourself and run them through it. PyTest collects all tests on its own by name (test_* for files and functions, Test* for class names) from all files in the folder (recursively descending into subfolders) or from the specified file. An example call therefore looks like "py.test -v pytest_example.py".
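The same collection can also be started from Python code through pytest.main, which accepts the command-line arguments as a list. The sketch below assumes Python 3 and an installed pytest; the file and function names are invented for the illustration. It writes a small module to a temporary folder and lets PyTest collect it:

```python
import os
import tempfile
import textwrap

import pytest

# Contents of a throwaway test module; the names are made up.
EXAMPLE_SOURCE = textwrap.dedent('''
    def test_upper():
        assert "foo".upper() == "FOO"

    def check_not_collected():
        # Not collected: the name does not start with "test".
        raise RuntimeError("this function is never run by pytest")
''')


def run_example():
    """Write the module to a temporary folder and run pytest on it."""
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "test_discovery_example.py")
        with open(path, "w") as handle:
            handle.write(EXAMPLE_SOURCE)
        # pytest.main takes the same arguments as the command line.
        return pytest.main(["-q", path])


if __name__ == "__main__":
    # Exit code 0 means every collected test passed.
    print(run_example())
```

Only test_upper is collected here. The second function is ignored because its name does not match the naming convention, so its exception is never raised.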

Basic fixtures

Here I use the word fixtures for functions and methods that are run to create the right environment for a test. PyTest, like unittest, has predefined names for fixtures of all levels:

    import pytest


    def setup():
        print("basic setup into module")


    def teardown():
        print("basic teardown into module")


    def setup_module(module):
        print("module setup")


    def teardown_module(module):
        print("module teardown")


    def setup_function(function):
        print("function setup")


    def teardown_function(function):
        print("function teardown")


    def test_numbers_3_4():
        print("test 3*4")
        assert 3*4 == 12


    def test_strings_a_3():
        print("test a*3")
        assert 'a'*3 == 'aaa'


    class TestUM:
        def setup(self):
            print("basic setup into class")

        def teardown(self):
            print("basic teardown into class")

        @classmethod
        def setup_class(cls):
            print("class setup")

        @classmethod
        def teardown_class(cls):
            print("class teardown")

        def setup_method(self, method):
            print("method setup")

        def teardown_method(self, method):
            print("method teardown")

        def test_numbers_5_6(self):
            print("test 5*6")
            assert 5*6 == 30

        def test_strings_b_2(self):
            print("test b*2")
            assert 'b'*2 == 'bb'

To see all output from print, run the tests with the -s flag:

    tmp>py.test -s basic_fixtures.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1
    rootdir: tmp/, inifile:
    collected 4 items

    basic_fixtures.py module setup
    function setup
    basic setup into module
    test 3*4
    .basic teardown into module
    function teardown
    function setup
    basic setup into module
    test a*3
    .basic teardown into module
    function teardown
    class setup
    method setup
    basic setup into class
    test 5*6
    .basic teardown into class
    method teardown
    method setup
    basic setup into class
    test b*2
    .basic teardown into class
    method teardown
    class teardown
    module teardown

    ========================== 4 passed in 0.03 seconds

This example shows fairly completely the hierarchy and repetition of each fixture level (for example, setup_function is called before every test function, while setup_module is called only once for the whole module). You can also see that the default fixture level is function / method (the setup and teardown fixtures).

Extended fixtures

The question is what to do if some tests need a certain environment and others do not. Spread them across different modules or classes? That is neither convenient nor pretty. This is where PyTest's extended fixtures come to the rescue.

So, to create an extended fixture in PyTest, you need to:

1) import the pytest module

2) use the @pytest.fixture() decorator to mark a function as a fixture

3) set the level of the fixture (scope). Possible values are "function", "class", "module", "session". The default is "function".

4) if the fixture needs teardown, add a finalizer to it (through the addfinalizer method of the request object passed to the fixture, or by using the yield construct)

5) add the name of the fixture to the parameter list of the test function

    import pytest


    @pytest.fixture()
    def resource_setup(request):
        print("resource_setup")

        def resource_teardown():
            print("resource_teardown")

        # The finalizer runs after the test that used the fixture.
        request.addfinalizer(resource_teardown)


    def test_1_that_needs_resource(resource_setup):
        print("test_1_that_needs_resource")


    def test_2_that_does_not():
        print("test_2_that_does_not")


    def test_3_that_does_again(resource_setup):
        print("test_3_that_does_again")

Run:

    tmp>py.test -s extended_fixture.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1
    rootdir: tmp/, inifile:
    collected 3 items

    extended_fixture.py resource_setup
    test_1_that_needs_resource
    .resource_teardown
    test_2_that_does_not
    .resource_setup
    test_3_that_does_again
    .resource_teardown

    ========================== 3 passed in 0.01 seconds

Calling extended fixtures

Besides the test parameters described above, extended fixtures can be called in two more ways:

1) by decorating the test with the @pytest.mark.usefixtures() decorator

2) by setting the autouse flag on the fixture. Use this feature with caution, however, as you may end up with unexpected test behavior.

Here is the previous example rewritten to use both of them:

    import pytest


    @pytest.fixture()
    def resource_setup(request):
        print("resource_setup")

        def resource_teardown():
            print("resource_teardown")

        request.addfinalizer(resource_teardown)


    # autouse=True: this fixture runs for every test without being requested.
    @pytest.fixture(scope="function", autouse=True)
    def another_resource_setup_with_autouse(request):
        print("another_resource_setup_with_autouse")

        def resource_teardown():
            print("another_resource_teardown_with_autouse")

        request.addfinalizer(resource_teardown)


    def test_1_that_needs_resource(resource_setup):
        print("test_1_that_needs_resource")


    def test_2_that_does_not():
        print("test_2_that_does_not")


    @pytest.mark.usefixtures("resource_setup")
    def test_3_that_does_again():
        print("test_3_that_does_again")

Run:

    tmp>py.test -s call_extended_fixtures.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1
    rootdir: tmp/, inifile:
    collected 3 items

    call_extended_fixtures.py another_resource_setup_with_autouse
    resource_setup
    test_1_that_needs_resource
    .resource_teardown
    another_resource_teardown_with_autouse
    another_resource_setup_with_autouse
    test_2_that_does_not
    .another_resource_teardown_with_autouse
    another_resource_setup_with_autouse
    resource_setup
    test_3_that_does_again
    .resource_teardown
    another_resource_teardown_with_autouse

    ========================== 3 passed in 0.01 seconds

Teardown of an extended fixture

As mentioned above, if a particular extended fixture needs teardown, it can be implemented in two ways:

1) by adding a finalizer to the fixture (through the addfinalizer method of the request object passed to the fixture)

2) by using the yield construct (starting with PyTest version 2.4)

The first way was covered in the example of creating an extended fixture. Now we simply show the same functionality with yield. Note that in the PyTest version used here (2.8.2), a fixture that uses yield must be decorated with @pytest.yield_fixture() rather than @pytest.fixture(). Since PyTest 3.0, plain @pytest.fixture() accepts yield as well, and yield_fixture is deprecated.

    import pytest


    # On PyTest 3.0 and later, use @pytest.fixture() here instead.
    @pytest.yield_fixture()
    def resource_setup():
        print("resource_setup")
        yield
        # Everything after yield is the teardown part.
        print("resource_teardown")


    def test_1_that_needs_resource(resource_setup):
        print("test_1_that_needs_resource")


    def test_2_that_does_not():
        print("test_2_that_does_not")


    def test_3_that_does_again(resource_setup):
        print("test_3_that_does_again")

The output is not shown here because it is identical to that of the finalizer version.
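A yield fixture can also hand a value to the test: whatever follows the yield keyword is what the test receives. A minimal sketch, assuming PyTest 3.0 or later (where plain @pytest.fixture() accepts yield); the fixture name and the dictionary are made up for the illustration:

```python
import pytest


@pytest.fixture()
def color_ids():
    print("resource_setup")
    db = {"Red": 1, "Blue": 2}    # hypothetical stand-in for a real resource
    yield db                      # the test receives this value
    print("resource_teardown")    # runs after the test has finished


def test_red(color_ids):
    assert color_ids["Red"] == 1
```

Setup, the provided value, and teardown sit in one function, which is usually easier to read than a nested finalizer.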

Fixture return value

A fixture in PyTest can also return something to the test through return, whether a state or an object (for example, a file).

    import pytest


    @pytest.fixture(scope="module")
    def resource_setup(request):
        print("\nconnect to db")
        db = {"Red": 1, "Blue": 2, "Green": 3}

        def resource_teardown():
            print("\ndisconnect")

        request.addfinalizer(resource_teardown)
        return db


    def test_db(resource_setup):
        for k in resource_setup.keys():
            print("color {0} has id {1}".format(k, resource_setup[k]))


    def test_red(resource_setup):
        assert resource_setup["Red"] == 1


    def test_blue(resource_setup):
        assert resource_setup["Blue"] != 1

Run:

    tmp>py.test -v -s return_value.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp/, inifile:
    collected 3 items

    return_value.py::test_db
    connect to db
    color Blue has id 2
    color Green has id 3
    color Red has id 1
    PASSED
    return_value.py::test_red PASSED
    return_value.py::test_blue PASSED
    disconnect

    ========================== 3 passed in 0.02 seconds

Fixture level (scope)

The fixture level can take the following values: "function", "class", "module", "session". The default is "function".

function - the fixture is run for each test.

class - the fixture is run for each class.

module - the fixture is run for each module.

session - the fixture is run for each session (that is, in effect once).

For example, in the return-value example above you can change the scope to "function", so that the database connection and disconnection happen for each test:

    import pytest


    @pytest.fixture(scope="function")
    def resource_setup(request):
        print("\nconnect to db")
        db = {"Red": 1, "Blue": 2, "Green": 3}

        def resource_teardown():
            print("\ndisconnect")

        request.addfinalizer(resource_teardown)
        return db


    def test_db(resource_setup):
        for k in resource_setup.keys():
            print("color {0} has id {1}".format(k, resource_setup[k]))


    def test_red(resource_setup):
        assert resource_setup["Red"] == 1


    def test_blue(resource_setup):
        assert resource_setup["Blue"] != 1

    tmp>py.test -v -s scope.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp/, inifile:
    collected 3 items

    scope.py::test_db
    connect to db
    color Blue has id 2
    color Green has id 3
    color Red has id 1
    PASSED
    disconnect
    scope.py::test_red
    connect to db
    PASSED
    disconnect
    scope.py::test_blue
    connect to db
    PASSED
    disconnect

    ========================== 3 passed in 0.02 seconds

Fixtures can also be described in the conftest.py file, which PyTest imports automatically. A fixture defined there can have any level, and this file is the usual place for a "session" fixture that has to be shared by several test files.

For example, let us create a separate folder "session scope" with a conftest.py file and two test files (recall that for PyTest to import modules automatically, their names must begin with test_, although this behavior can be changed):

conftest.py:

    import pytest


    @pytest.fixture(scope="session", autouse=True)
    def auto_session_resource(request):
        """ Auto session resource fixture
        """
        print("auto_session_resource_setup")

        def auto_session_resource_teardown():
            print("auto_session_resource_teardown")

        request.addfinalizer(auto_session_resource_teardown)


    @pytest.fixture(scope="session")
    def manually_session_resource(request):
        """ Manual set session resource fixture
        """
        print("manually_session_resource_setup")

        def manually_session_resource_teardown():
            print("manually_session_resource_teardown")

        request.addfinalizer(manually_session_resource_teardown)


    @pytest.fixture(scope="function")
    def function_resource(request):
        """ Function resource fixture
        """
        print("function_resource_setup")

        def function_resource_teardown():
            print("function_resource_teardown")

        request.addfinalizer(function_resource_teardown)

test_session_scope1.py

    import pytest


    def test_1_that_does_not_need_session_resource():
        print("test_1_that_does_not_need_session_resource")


    def test_2_that_does(manually_session_resource):
        print("test_2_that_does")

test_session_scope2.py

    import pytest


    def test_3_that_uses_all_fixtures(manually_session_resource, function_resource):
        print("test_2_that_does_not")

Run:

    tmp\session scope>py.test -s -v
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp\session scope, inifile:
    collected 3 items

    test_session_scope1.py::test_1_that_does_not_need_session_resource auto_session_resource_setup
    test_1_that_does_not_need_session_resource
    PASSED
    test_session_scope1.py::test_2_that_does manually_session_resource_setup
    test_2_that_does
    PASSED
    test_session_scope2.py::test_3_that_uses_all_fixtures function_resource_setup
    test_2_that_does_not
    PASSEDfunction_resource_teardown
    manually_session_resource_teardown
    auto_session_resource_teardown

    ========================== 3 passed in 0.02 seconds

It is also worth knowing that PyTest supports the --fixtures option, which lists all available fixtures, including those described in conftest.py (provided they have a docstring).

    tmp\session scope>py.test --fixtures
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1
    rootdir: tmp\session scope, inifile:
    collected 3 items
    cache
        Return a cache object that can persist state between testing sessions.
    ….......
        path object.

    ----------------------- fixtures defined from conftest ------------------------
    manually_session_resource
        Manual set session resource fixture
    function_resource
        Function resource fixture
    auto_session_resource
        Auto session resource fixture

    ============================== in 0.07 seconds

Request object

In the example of creating an extended fixture, we passed it the request parameter in order to add a finalizer through its addfinalizer method. This object, however, has quite a few other attributes and methods (the full list is in the official API reference).

    import pytest


    @pytest.fixture(scope="function")
    def resource_setup(request):
        print(request.fixturename)
        print(request.scope)
        print(request.function.__name__)
        print(request.cls)
        print(request.module.__name__)
        print(request.fspath)


    def test_1(resource_setup):
        assert True


    class TestClass():
        def test_2(self, resource_setup):
            assert True

Run:

    tmp>py.test -v -s request_object.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp/, inifile:
    collected 2 items

    request_object.py::test_1 resource_setup
    function
    test_1
    None
    08
    tmp\request_object.py
    PASSED
    request_object.py::TestClass::test_2 resource_setup
    function
    test_2
    08.TestClass
    08
    tmp\request_object.py
    PASSED

    ========================== 2 passed in 0.04 seconds

Parameterization

Parameterization is a way to run the same test with different sets of input parameters. For example, suppose we have a function that appends a question mark to a string longer than 5 characters, an exclamation mark to a string shorter than 5 characters, and a period to a string of exactly 5 characters. Instead of writing three tests, we can write one and call it with different parameters.

There are two ways to set the parameters for a test:

1) Through the params argument of the fixture, which takes a list of values.

In effect, the fixture here is a wrapper that passes the parameters on. The values themselves reach the test through the param attribute of the request object described above.

2) Through the @pytest.mark.parametrize decorator (mark), which takes the argument names and a list of their values.

So, the first way.

    import pytest


    def strange_string_func(text):
        if len(text) > 5:
            return text + "?"
        elif len(text) < 5:
            return text + "!"
        else:
            return text + "."


    @pytest.fixture(scope="function", params=[
        ("abcdefg", "abcdefg?"),
        ("abc", "abc!"),
        ("abcde", "abcde.")
    ])
    def param_test(request):
        # request.param holds the current tuple from params.
        return request.param


    def test_strange_string_func(param_test):
        (value, expected_output) = param_test
        result = strange_string_func(value)
        print("input: {0}, output: {1}, expected: {2}".format(value, result, expected_output))
        assert result == expected_output

Run:

    tmp>py.test -s -v parametrizing_base.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp/, inifile:
    collected 3 items

    parametrizing_base.py::test_strange_string_func[param_test0] input: abcdefg, output: abcdefg?, expected: abcdefg?
    PASSED
    parametrizing_base.py::test_strange_string_func[param_test1] input: abc, output: abc!, expected: abc!
    PASSED
    parametrizing_base.py::test_strange_string_func[param_test2] input: abcde, output: abcde., expected: abcde.
    PASSED

    ========================== 3 passed in 0.03 seconds

Everything works, except for one unpleasant detail: the test name does not tell you which parameter was passed to the test. The fixture's ids argument helps here. It accepts either a list of test names (its length must match the number of parameter sets) or a function that generates the final name.

    import pytest


    def strange_string_func(text):
        if len(text) > 5:
            return text + "?"
        elif len(text) < 5:
            return text + "!"
        else:
            return text + "."


    @pytest.fixture(scope="function", params=[
        ("abcdefg", "abcdefg?"),
        ("abc", "abc!"),
        ("abcde", "abcde.")],
        ids=["len>5", "len<5", "len==5"]
    )
    def param_test(request):
        return request.param


    def test_strange_string_func(param_test):
        (value, expected_output) = param_test
        result = strange_string_func(value)
        print("input: {0}, output: {1}, expected: {2}".format(value, result, expected_output))
        assert result == expected_output


    def idfn(val):
        return "params: {0}".format(str(val))


    @pytest.fixture(scope="function", params=[
        ("abcdefg", "abcdefg?"),
        ("abc", "abc!"),
        ("abcde", "abcde.")],
        ids=idfn
    )
    def param_test_idfn(request):
        return request.param


    def test_strange_string_func_with_ifdn(param_test_idfn):
        # Unpack the value of this test's own fixture.
        (value, expected_output) = param_test_idfn
        result = strange_string_func(value)
        print("input: {0}, output: {1}, expected: {2}".format(value, result, expected_output))
        assert result == expected_output

Run:

    tmp>py.test -s -v parametrizing_named.py --collect-only
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp/, inifile:
    collected 6 items
    <Module 'parametrizing_named.py'>
      <Function 'test_strange_string_func[len>5]'>
      <Function 'test_strange_string_func[len<5]'>
      <Function 'test_strange_string_func[len==5]'>
      <Function "test_strange_string_func_with_ifdn[params: ('abcdefg', 'abcdefg?')]">
      <Function "test_strange_string_func_with_ifdn[params: ('abc', 'abc!')]">
      <Function "test_strange_string_func_with_ifdn[params: ('abcde', 'abcde.')]">

    ============================== in 0.03 seconds

In this case I ran PyTest with the additional --collect-only option, which collects all the tests generated by parameterization without running them.
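For comparison, the same test written the second way, with @pytest.mark.parametrize, might look like the sketch below. The argument names text and expected are chosen for this illustration; the decorator takes the argument names, the list of value tuples, and optionally the same ids:

```python
import pytest


def strange_string_func(text):
    if len(text) > 5:
        return text + "?"
    elif len(text) < 5:
        return text + "!"
    else:
        return text + "."


@pytest.mark.parametrize(
    ("text", "expected"),
    [
        ("abcdefg", "abcdefg?"),
        ("abc", "abc!"),
        ("abcde", "abcde."),
    ],
    ids=["len>5", "len<5", "len==5"],
)
def test_strange_string_func(text, expected):
    # Each tuple is unpacked into the two test arguments.
    assert strange_string_func(text) == expected
```

No wrapper fixture is needed here: the values arrive directly as test arguments.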

The second way has one advantage: if you stack several marks with different parameters, the test is run with every combination of them (that is, the Cartesian product of the parameters).

    import pytest


    @pytest.mark.parametrize("x", [1, 2])
    @pytest.mark.parametrize("y", [10, 11])
    def test_cross_params(x, y):
        print("x: {0}, y: {1}".format(x, y))
        assert True

Run:

    tmp>py.test -s -v parametrizing_combinations.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp/, inifile:
    collected 4 items

    parametrizing_combinations.py::test_cross_params[10-1] x: 1, y: 10
    PASSED
    parametrizing_combinations.py::test_cross_params[10-2] x: 2, y: 10
    PASSED
    parametrizing_combinations.py::test_cross_params[11-1] x: 1, y: 11
    PASSED
    parametrizing_combinations.py::test_cross_params[11-2] x: 2, y: 11
    PASSED

    ========================== 4 passed in 0.02 seconds

The parametrize mark also accepts the ids argument, which controls how the parameters are shown in the output, just as in the first way of specifying parameters:

    import pytest


    def idfn_x(val):
        return "x=({0})".format(str(val))


    def idfn_y(val):
        return "y=({0})".format(str(val))


    @pytest.mark.parametrize("x", [-1, 2], ids=idfn_x)
    @pytest.mark.parametrize("y", [-10, 11], ids=idfn_y)
    def test_cross_params(x, y):
        print("x: {0}, y: {1}".format(x, y))
        assert True


    @pytest.mark.parametrize("x", [-1, 2], ids=["negative x", "positive y"])
    @pytest.mark.parametrize("y", [-10, 11], ids=["negative y", "positive y"])
    def test_cross_params_2(x, y):
        print("x: {0}, y: {1}".format(x, y))
        assert True

Run:

    tmp>py.test -s -v parametrizing_combinations_named.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp/, inifile:
    collected 8 items

    parametrizing_combinations_named.py::test_cross_params[y=(-10)-x=(-1)] x: -1, y: -10
    PASSED
    parametrizing_combinations_named.py::test_cross_params[y=(-10)-x=(2)] x: 2, y: -10
    PASSED
    parametrizing_combinations_named.py::test_cross_params[y=(11)-x=(-1)] x: -1, y: 11
    PASSED
    parametrizing_combinations_named.py::test_cross_params[y=(11)-x=(2)] x: 2, y: 11
    PASSED
    parametrizing_combinations_named.py::test_cross_params_2[negative y-negative x] x: -1, y: -10
    PASSED
    parametrizing_combinations_named.py::test_cross_params_2[negative y-positive y] x: 2, y: -10
    PASSED
    parametrizing_combinations_named.py::test_cross_params_2[positive y-negative x] x: -1, y: 11
    PASSED
    parametrizing_combinations_named.py::test_cross_params_2[positive y-positive y] x: 2, y: 11
    PASSED

    ========================== 8 passed in 0.04 seconds

Calling multiple fixtures and fixtures using fixtures

PyTest does not limit the number of fixtures a test can request.

    import pytest


    @pytest.fixture()
    def fixture1(request):
        print("fixture1")


    @pytest.fixture()
    def fixture2(request):
        print("fixture2")


    @pytest.fixture()
    def fixture3(request):
        print("fixture3")


    def test_1(fixture1, fixture2):
        print("test_1")


    def test_2(fixture1, fixture2, fixture3):
        print("test_2")

    tmp>py.test -s -v multiply_fixtures.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile:
    collected 2 items

    multiply_fixtures.py::test_1 fixture1
    fixture2
    test_1
    PASSED
    multiply_fixtures.py::test_2 fixture1
    fixture2
    fixture3
    test_2
    PASSED

    ========================== 2 passed in 0.01 seconds

Likewise, any fixture can itself request any number of other fixtures:

    import pytest


    @pytest.fixture()
    def fixture1(request, fixture2):
        print("fixture1")


    @pytest.fixture()
    def fixture2(request, fixture3):
        print("fixture2")


    @pytest.fixture()
    def fixture3(request):
        print("fixture3")


    def test_1(fixture1):
        print("test_1")


    def test_2(fixture2):
        print("test_2")

    tmp>py.test -s -v fixtures_use_fixtures.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile:
    collected 2 items

    fixtures_use_fixtures.py::test_1 fixture3
    fixture2
    fixture1
    test_1
    PASSED
    fixtures_use_fixtures.py::test_2 fixture3
    fixture2
    test_2
    PASSED

    ========================== 2 passed in 0.01 seconds

Marks

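PyTest supports a class of @pytest.mark decorators called "marks". The basic ones are:

1) @pytest.mark.parametrize - parameterizes a test (covered above)

2) @pytest.mark.xfail - marks a test as expected to fail, so that PyTest treats the failure as expected behavior (useful as a temporary mark for tests of functions still in development). The mark can also take a condition under which it applies.

3) @pytest.mark.skipif - skips a test when the given condition is true

4) @pytest.mark.usefixtures - lists the fixtures to be called for a test

A fuller list is available through the command "py.test --markers".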

    import sys

    import pytest


    @pytest.mark.xfail()
    def test_failed():
        assert False


    # sys.platform is never "win64" (it is "win32" even on 64-bit Windows),
    # so both conditions below are always true.
    @pytest.mark.xfail(sys.platform != "win64", reason="requires windows 64bit")
    def test_failed_for_not_win32_systems():
        assert False


    @pytest.mark.skipif(sys.platform != "win64", reason="requires windows 64bit")
    def test_skipped_for_not_win64_systems():
        assert False

Run:

    tmp>py.test -s -v basic_marks.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile:
    collected 3 items

    basic_marks.py::test_failed xfail
    basic_marks.py::test_failed_for_not_win32_systems xfail
    basic_marks.py::test_skipped_for_not_win64_systems SKIPPED

    ==================== 1 skipped, 2 xfailed in 0.02 seconds

Marks can also have arbitrary names. This lets you single out a set of tests and run only them by passing the mark name with the -m flag.

    import pytest


    def test_1():
        print("test_1")


    @pytest.mark.critital_tests
    def test_2():
        print("test_2")


    def test_3():
        print("test_3")

    tmp>py.test -s -v -m "critital_tests" custom_marks.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile:
    collected 3 items

    custom_marks.py::test_2 test_2
    PASSED

    ================= 2 tests deselected by "-m 'critital_tests'" =================
    =================== 1 passed, 2 deselected in 0.01 seconds ====================

Custom marks can be registered, together with a description, in the pytest.ini file. Registered marks then appear in the output of "py.test --markers".

pytest.ini

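    # content of pytest.ini
    [pytest]
    markers =
        critical_test: mark test as critical. These tests must to be checked first.

    tmp>py.test --markers
    @pytest.mark.critical_test: mark test as critical. These tests must to be checked first.
    ….........

Marks can be applied not only to individual tests but also to a class, to a whole module (through the pytestmark variable), and even to individual parameter sets of a parameterized test (see the examples).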

    import pytest

    # Applies the mark to every test in this module.
    pytestmark = pytest.mark.level1


    def test_1():
        print("test_1")


    @pytest.mark.level2
    class TestClass:
        def test_2(self):
            print("test_2")

        @pytest.mark.level3
        def test_3(self):
            print("test_3")

    tmp>py.test -s -v -m "level3" custom_marks_others.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile: pytest.ini
    collected 3 items

    custom_marks_others.py::TestClass::test_3 test_3
    PASSED

    ===================== 2 tests deselected by "-m 'level3'" =====================
    =================== 1 passed, 2 deselected in 0.07 seconds ====================

    tmp>py.test -s -v -m "level2" custom_marks_others.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile: pytest.ini
    collected 3 items

    custom_marks_others.py::TestClass::test_2 test_2
    PASSED
    custom_marks_others.py::TestClass::test_3 test_3
    PASSED

    ===================== 1 tests deselected by "-m 'level2'" =====================
    =================== 2 passed, 1 deselected in 0.03 seconds ====================

    tmp>py.test -s -v -m "level1" custom_marks_others.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile: pytest.ini
    collected 3 items

    custom_marks_others.py::test_1 test_1
    PASSED
    custom_marks_others.py::TestClass::test_2 test_2
    PASSED
    custom_marks_others.py::TestClass::test_3 test_3
    PASSED

    ========================== 3 passed in 0.02 seconds ===========================

    import pytest


    # The mark wraps a single parameter set. This is the syntax of the
    # PyTest version used here; PyTest 3.1 and later write it as
    # pytest.param(2, 3, marks=pytest.mark.critical).
    @pytest.mark.parametrize(("x", "expected"), [
        (1, 2),
        pytest.mark.critical((2, 3)),
        (3, 4)
    ])
    def test_inc(x, expected):
        print("{0} + 1 = {1}".format(x, expected))
        assert x + 1 == expected

    tmp>py.test -s -v -m "critical" custom_marks_params.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile: pytest.ini
    collected 3 items

    custom_marks_params.py::test_inc[2-3] 2 + 1 = 3
    PASSED

    ==================== 2 tests deselected by "-m 'critical'" ====================
    =================== 1 passed, 2 deselected in 0.02 seconds ====================

To check that code raises an expected exception, PyTest provides the "with pytest.raises()" construct.

    import pytest


    def f():
        print(1/0)


    def test_exception():
        # The test passes only if the block raises ZeroDivisionError.
        with pytest.raises(ZeroDivisionError):
            f()

    tmp>py.test -s -v check_exception.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile: pytest.ini
    collected 1 items

    check_exception.py::test_exception PASSED

    ========================== 1 passed in 0.01 seconds

Running tests by name or ID

Individual tests from a module can be run by giving the full path to them in the form module.py::class::method or module.py::function, or by passing part of their name with the -k flag.

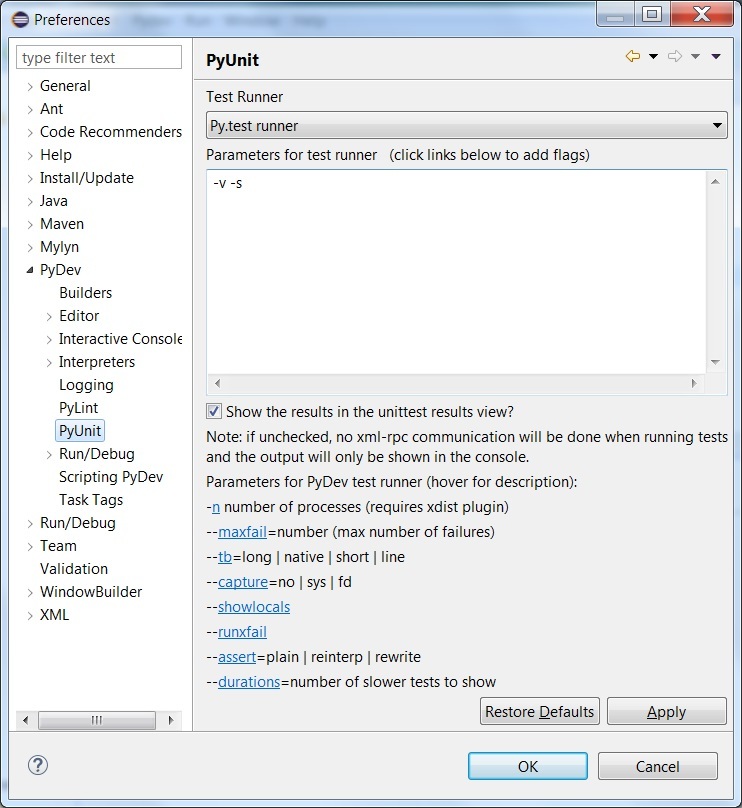

    import pytest


    def test_one():
        print("test_one")


    def test_one_negative():
        print("test_one_negative")


    def test_two():
        print("test_one_negative")

    tmp>py.test -s -v call_by_name_and_id.py::test_two
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile: pytest.ini
    collected 4 items

    call_by_name_and_id.py::test_two test_one_negative
    PASSED

    ========================== 1 passed in 0.04 seconds

    tmp>py.test -s -v -k "one" call_by_name_and_id.py
    ============================= test session starts =============================
    platform win32 -- Python 2.7.8, pytest-2.8.2, py-1.4.30, pluggy-0.3.1 -- c:\program files (x86)\python27\python.exe
    cachedir: .cache
    rootdir: tmp, inifile: pytest.ini
    collected 3 items

    call_by_name_and_id.py::test_one test_one
    PASSED
    call_by_name_and_id.py::test_one_negative test_one_negative
    PASSED

    ======================== 1 tests deselected by '-kone' ========================
    =================== 2 passed, 1 deselected in 0.01 seconds ====================

Integration with PyDev in Eclipse

I would like to mention that PyTest is integrated into the PyUnit component of the PyDev plugin for Eclipse. You only need to select it in the settings.

Additional modules

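PyTest has a large number of additional modules (plugins).

Here are a few of them:

pytest-describe - adds an extra level of nesting (describe blocks) for grouping test cases.

pytest-instafail - shows failures and errors immediately instead of waiting until the end of the run.

pytest-marks - lets you set several marks on a test at once, instead of stacking them like this: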

    @pytest.mark.red
    @pytest.mark.green
    @pytest.mark.blue
    def some_test_method(self):
        .....

pytest-ordering - lets you set the order in which tests are run, using the run mark.

    import pytest


    @pytest.mark.run(order=2)
    def test_foo():
        assert True


    @pytest.mark.run(order=1)
    def test_bar():
        assert True

pytest-pep8 - checks the code for compliance with PEP 8.

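pytest-smartcov - measures how much of the code is covered by tests.

pytest-timeout - sets a time limit for tests and terminates a test that exceeds it.

pytest-sugar - changes the look of PyTest's output: it adds a progress bar and shows failing tests immediately.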

Afterword

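That is where I end this description of PyTest. Some topics are left out, among them writing your own PyTest plugins and generating tests (through the metafunc object).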

Source: https://habr.com/ru/post/269759/
