Introduction to working with ORTC in Microsoft Edge

In October last year, we announced our intention to support ORTC in Microsoft Edge, with a particular focus on audio/video communications. We have been working hard on it since then, and today we are happy to announce that a preview of our implementation is available in a new Microsoft Edge build released through the Windows Insider program.

ORTC support in Microsoft Edge is the result of a collaboration between the Operating Systems Group (OSG) and Skype. By combining 20 years of experience building a web platform with 12 years of experience running one of the largest real-time communication services for consumers and businesses, we set out to enable browser-based communication experiences that work not only with Skype users but also with other WebRTC-compatible communication services.

Looking ahead, we hope to see a wide range of community solutions built on ORTC. In this post, we describe what our preview implementation of ORTC includes and use a simple example to show how to build 1:1 audio and video communication.

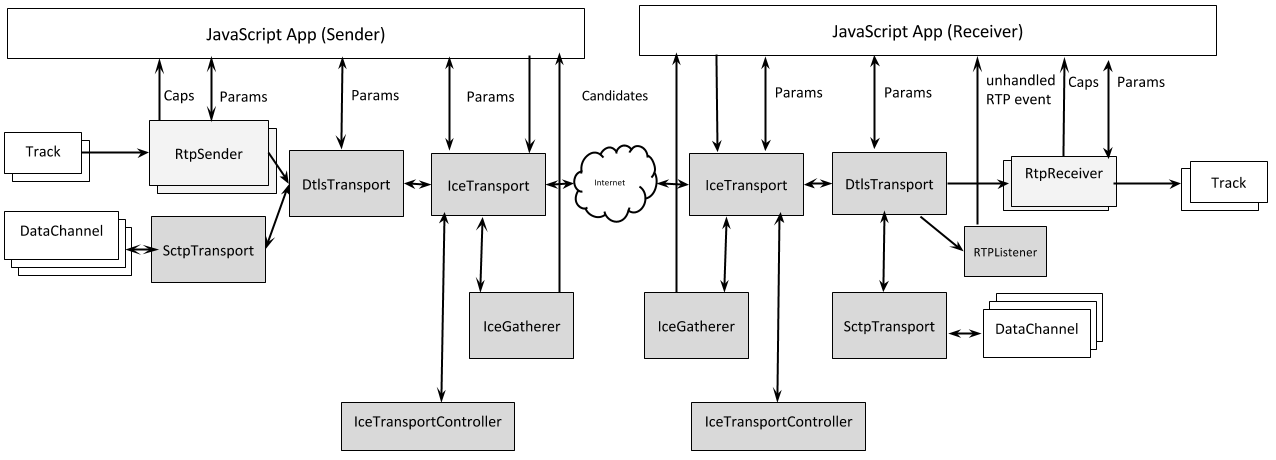

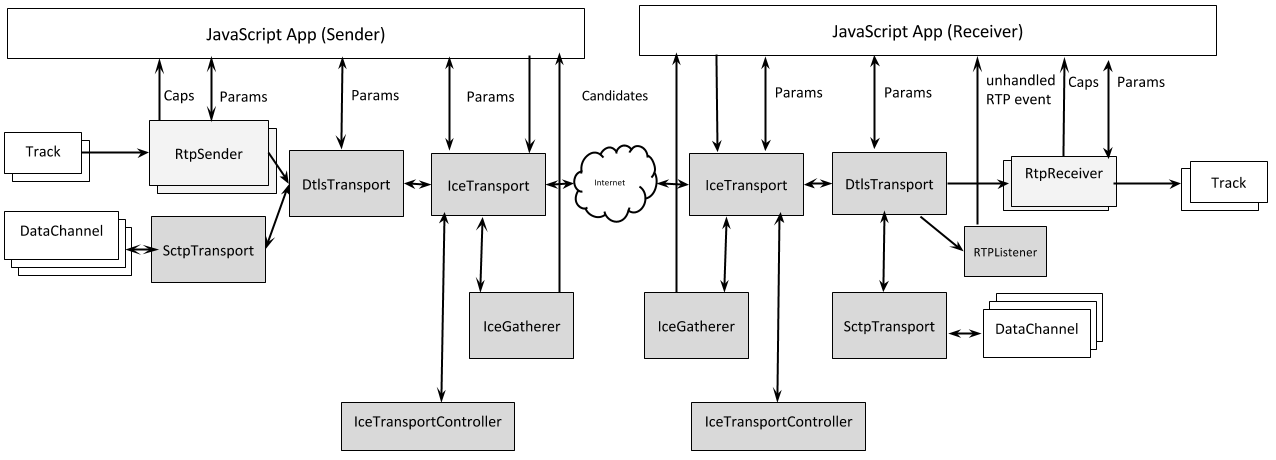

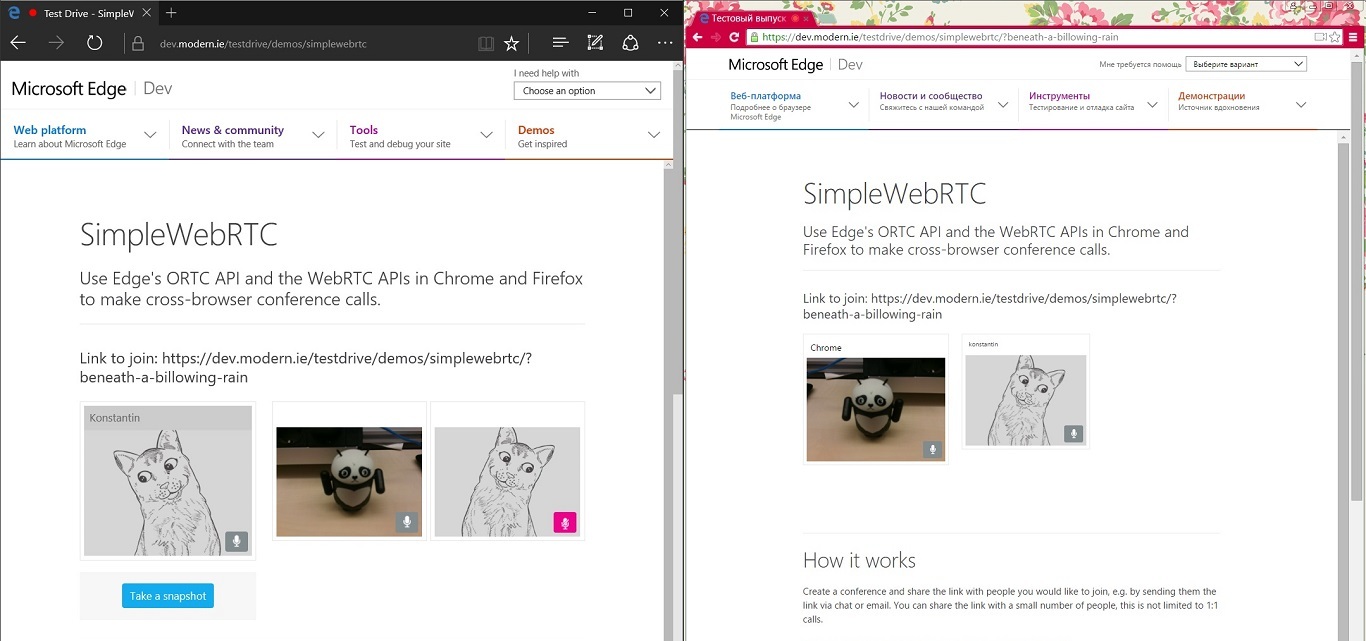

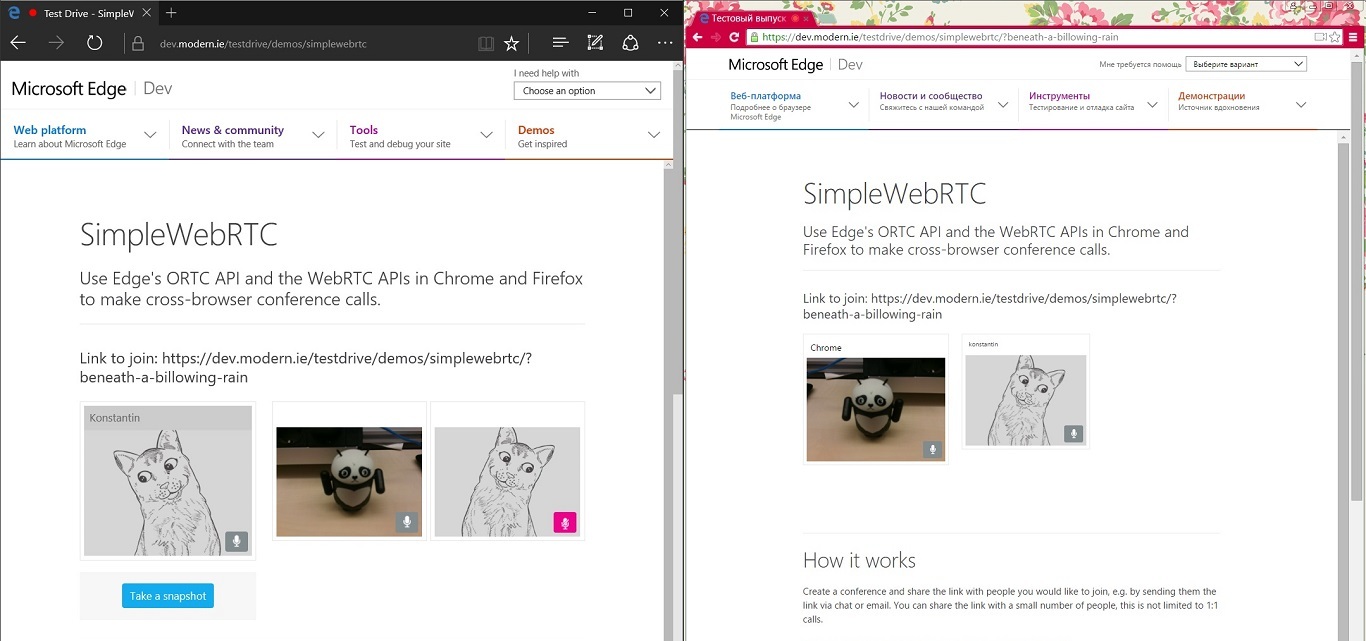

What we provide

The overview section of the ORTC API specification includes a diagram that gives a high-level view of how the various ORTC objects relate to each other. It helps illustrate how the individual pieces of code interact: media tracks are captured and passed to RtpSender objects, and on the receiving side RtpReceiver objects deliver tracks that can be attached to video/audio tags. We recommend using this diagram as a reference when you start exploring the ORTC API.

Our initial implementation of ORTC includes the following components:

- ORTC API support. Our current focus is audio/video communications. We have implemented the IceGatherer, IceTransport, DtlsTransport, RtpSender and RtpReceiver objects, as well as the RTCStats interfaces, which are not shown in the diagram.

- RTP/RTCP multiplexing support (required when using DtlsTransport). Audio/video multiplexing is also supported.

- STUN/TURN/ICE support. We support STUN (RFC 5389), TURN (RFC 5766) and ICE (RFC 5245). For ICE, regular nomination is supported, while aggressive nomination is only partially supported (on the receiving side). DTLS-SRTP (RFC 5764) is supported, based on DTLS 1.0 (RFC 4347).

- Codec support. For audio, we support G.711, G.722, Opus and SILK. We also support Comfort Noise (CN) and DTMF, in line with the RTCWEB audio requirements. For video, we currently support only the H.264UC codec used by Skype services, including features such as simulcast, scalable video coding and advanced error correction. We plan to add interoperable H.264-based video in the future.

Although our preview implementation may still contain bugs, we believe it is already usable for common scenarios, and we look forward to feedback from developers who try it out in practice.

If you are familiar with WebRTC 1.0 implementations and want to learn more about how the object model evolved between WebRTC 1.0 and ORTC, we recommend this presentation by Google, Microsoft and Hookflash from the IIT RTC 2014 conference: "ORTC API Update".

How to build a 1:1 application

Now let's move from the ORTC overview to code for a simple 1:1 audio/video call. For this scenario, you need two Windows 10 machines acting as the two clients (peers), and a web server acting as a signaling channel through which the peers exchange the information they need to establish a connection.

The steps below describe the operations performed by one of the clients; both peers go through similar steps to establish a 1:1 call. To get a fuller picture of the code samples below, we recommend using our sample on Microsoft Edge Test Drive as a reference implementation.
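The samples below call a `mySignaller` object that the article does not define; it stands for whatever channel the application uses to talk to the signaling web server. As a minimal sketch, assuming JSON messages and a hypothetical in-memory relay in place of the real web server (a real deployment would use a network transport such as a WebSocket), such an object could route incoming messages to the `onRemoteCandidate` and `onRemoteParams` handlers used in Steps 3 and 8. `InMemoryRelay` and `Signaller` are illustrative names, not part of ORTC or the Test Drive sample:

```javascript
// Hypothetical stand-in for the signaling web server: it relays each JSON
// message to every other registered client.
class InMemoryRelay {
  constructor() {
    this.clients = [];
  }
  register(client) {
    this.clients.push(client);
  }
  send(from, text) {
    for (const client of this.clients) {
      if (client !== from) client.deliver(text);
    }
  }
}

// Hypothetical signaller exposing the names used in the article's samples:
// signalMessage(), onRemoteCandidate and onRemoteParams.
class Signaller {
  constructor(relay) {
    this.relay = relay;
    this.onRemoteCandidate = null;
    this.onRemoteParams = null;
    relay.register(this);
  }

  // Serialize a message and forward it to the other peer.
  signalMessage(message) {
    this.relay.send(this, JSON.stringify(message));
  }

  // Route an incoming message by the keys it carries.
  deliver(text) {
    const message = JSON.parse(text);
    if ("candidate" in message) {
      if (this.onRemoteCandidate) this.onRemoteCandidate(message);
    } else if ("ice" in message && "dtls" in message) {
      if (this.onRemoteParams) this.onRemoteParams(message);
    }
  }
}
```

With this shape, the Step 3 handler receives the whole message and reads `remote.candidate`, and the Step 8 handler receives the object sent in Step 7.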

Step #1. Create a MediaStream object (for example, through the Media Capture API) with one audio track and one video track.

```javascript
var mediaStreamLocal; // used again in Step 5

navigator.mediaDevices.getUserMedia({
  "audio": true,
  "video": { width: 640, height: 360, facingMode: "user" }
}).then(gotStream).catch(gotMediaError);

function gotStream(stream) {
  mediaStreamLocal = stream;
  // …
}

function gotMediaError(error) {
  // Permission denied, no camera/microphone, etc.
  console.error(error);
}
```

More information about working with the Media Capture API can be found in our article announcing Media Capture support in Microsoft Edge.
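Step 5 takes the first audio track and the first video track from this stream without checking that they exist. A small guard makes that assumption explicit and fails early if, for example, only a camera is available. The `pickLocalTracks` helper below is hypothetical and is not part of the Test Drive sample; it only relies on the standard `getAudioTracks()`/`getVideoTracks()` methods of a MediaStream:

```javascript
// Hypothetical helper: return the single audio and video track the rest of
// the walkthrough expects, or throw if either kind is missing.
function pickLocalTracks(stream) {
  const audioTracks = stream.getAudioTracks();
  const videoTracks = stream.getVideoTracks();
  if (audioTracks.length === 0) {
    throw new Error("getUserMedia returned no audio track");
  }
  if (videoTracks.length === 0) {
    throw new Error("getUserMedia returned no video track");
  }
  return { audioTrack: audioTracks[0], videoTrack: videoTracks[0] };
}
```

Inside `gotStream`, calling `pickLocalTracks(stream)` before storing the stream would surface a missing device as an error instead of an `undefined` track later on.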

Step #2. Create an ICE gatherer and signal the local ICE candidates it collects to the remote peer.

```javascript
// RTCIceGatherOptions is a dictionary, so a plain object literal is used.
var iceOptions = {
  gatherPolicy: "all",
  iceServers: [ /* STUN/TURN server entries (elided in the original) */ ]
};
var iceGathr = new RTCIceGatherer(iceOptions);
iceGathr.onlocalcandidate = function (evt) {
  // Send each local candidate to the remote peer over the signaling channel.
  mySignaller.signalMessage({ "candidate": evt.candidate });
};
```

To help protect user privacy, we added an option that lets users control whether their local host IP addresses may be exposed through IceGatherer objects. The setting is available in the Microsoft Edge browser settings.

Our Test Drive sample uses a TURN server that we deployed. Because its bandwidth is limited, it is restricted to our demo page.
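The `iceServers` value is elided in the Step 2 sample. As a sketch, assuming the ORTC `RTCIceGatherOptions` dictionary shape (a `gatherPolicy` plus an `iceServers` list of `{ urls, username, credential }` entries), the options could be assembled as shown here. The TURN URL and credentials are hypothetical placeholders, and `buildGatherOptions` is an illustrative helper name. Setting `gatherPolicy` to `"relay"` restricts gathering to relay candidates obtained from the TURN server:

```javascript
// Sketch of building an RTCIceGatherOptions dictionary. The server URL and
// credentials used below are hypothetical placeholders.
function buildGatherOptions(turn, relayOnly) {
  return {
    // "all" gathers every candidate type; "relay" keeps only TURN relay candidates.
    gatherPolicy: relayOnly ? "relay" : "all",
    iceServers: [
      { urls: turn.urls, username: turn.username, credential: turn.credential }
    ]
  };
}

const iceOptionsSketch = buildGatherOptions(
  { urls: "turn:turn.example.com:3478", username: "demo-user", credential: "demo-pass" },
  false
);
// In Edge, this object would be passed to: new RTCIceGatherer(iceOptionsSketch)
```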

Step #3. Create an ICE transport for audio and video, and prepare it to handle remote ICE candidates.

```javascript
var iceTr = new RTCIceTransport();
mySignaller.onRemoteCandidate = function (remote) {
  iceTr.addRemoteCandidate(remote.candidate);
};
```

Alternatively, you can collect all remote ICE candidates into a remoteCandidates array and call iceTr.setRemoteCandidates(remoteCandidates) to add them all at once.
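The batching alternative just described can be sketched with a small queue. The `CandidateBuffer` class is hypothetical; it only assumes that the transport exposes `addRemoteCandidate` and `setRemoteCandidates`, as `RTCIceTransport` does in the samples. Candidates are queued until the application decides to flush them, are handed over in one `setRemoteCandidates` call, and any that arrive later are added individually:

```javascript
// Hypothetical helper: queue remote ICE candidates, then pass them to the
// transport in a single setRemoteCandidates() call.
class CandidateBuffer {
  constructor(iceTransport) {
    this.iceTransport = iceTransport;
    this.pending = [];
    this.flushed = false;
  }

  // Call from the signaller's onRemoteCandidate handler.
  add(candidate) {
    if (this.flushed) {
      this.iceTransport.addRemoteCandidate(candidate);
    } else {
      this.pending.push(candidate);
    }
  }

  // Hand over everything collected so far at once; later candidates are
  // passed through one by one.
  flush() {
    if (this.flushed) return;
    this.flushed = true;
    this.iceTransport.setRemoteCandidates(this.pending);
    this.pending = [];
  }
}
```

For instance, `onRemoteCandidate` could call `buffer.add(remote.candidate)` and `onRemoteParams` could call `buffer.flush()` once the transports are started; that timing is an assumption, not something the article prescribes.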

Step #4. Create a DTLS transport.

```javascript
var dtlsTr = new RTCDtlsTransport(iceTr);
```

Step #5. Create the sender and receiver objects.

```javascript
var audioTrack = mediaStreamLocal.getAudioTracks()[0];
var videoTrack = mediaStreamLocal.getVideoTracks()[0];
var audioSender = new RTCRtpSender(audioTrack, dtlsTr);
var videoSender = new RTCRtpSender(videoTrack, dtlsTr);
var audioReceiver = new RTCRtpReceiver(dtlsTr, "audio");
var videoReceiver = new RTCRtpReceiver(dtlsTr, "video");
```

Step #6. Query the sender and receiver capabilities.

```javascript
var recvAudioCaps = RTCRtpReceiver.getCapabilities("audio");
var recvVideoCaps = RTCRtpReceiver.getCapabilities("video");
var sendAudioCaps = RTCRtpSender.getCapabilities("audio");
var sendVideoCaps = RTCRtpSender.getCapabilities("video");
```

Step #7. Exchange the ICE/DTLS parameters and the send/receive capabilities.

```javascript
mySignaller.signalMessage({
  "ice": iceGathr.getLocalParameters(),
  "dtls": dtlsTr.getLocalParameters(),
  "recvAudioCaps": recvAudioCaps,
  "recvVideoCaps": recvVideoCaps,
  "sendAudioCaps": sendAudioCaps,
  "sendVideoCaps": sendVideoCaps
});
```

Step #8. Receive the remote peer's parameters, start the ICE and DTLS transports, and set the audio/video send and receive parameters.

```javascript
mySignaller.onRemoteParams = function (params) {
  // The responder answers with its preferences, parameters and capabilities.
  // Derive the send and receive parameters.
  var audioSendParams = myCapsToSendParams(sendAudioCaps, params.recvAudioCaps);
  var videoSendParams = myCapsToSendParams(sendVideoCaps, params.recvVideoCaps);
  var audioRecvParams = myCapsToRecvParams(recvAudioCaps, params.sendAudioCaps);
  var videoRecvParams = myCapsToRecvParams(recvVideoCaps, params.sendVideoCaps);

  // RTCIceRole is a string enum; this peer is "controlling", so the other
  // peer must start its transport as "controlled".
  iceTr.start(iceGathr, params.ice, "controlling");
  dtlsTr.start(params.dtls);

  audioSender.send(audioSendParams);
  videoSender.send(videoSendParams);
  audioReceiver.receive(audioRecvParams);
  videoReceiver.receive(videoRecvParams);
};
```

The following is an outline of the helper functions:

```javascript
// Returns the sender RTCRtpParameters, based on the intersection of the local
// sender and remote receiver capabilities (both RTCRtpCapabilities).
function myCapsToSendParams(sendCaps, remoteRecvCaps) {
  // Steps to be followed:
  // 1. Determine the RTP features that the receiver and sender have in common.
  // 2. Determine the codecs that the sender and receiver have in common.
  // 3. Within each common codec, determine the common formats and rtcpFeedback mechanisms.
  // 4. Determine the payloadType to be used, based on the receiver preferredPayloadType.
  // 5. Set RTCRtcpParameters such as mux to their default values.
}

// Receive parameters are the send parameters seen from the remote sender's side.
function myCapsToRecvParams(recvCaps, remoteSendCaps) {
  return myCapsToSendParams(remoteSendCaps, recvCaps);
}
```

Step #9. Render and play the remote media streams through a video tag.

```javascript
var videoRenderer = document.getElementById("myRtcVideoTag");
var mediaStreamRemote = new MediaStream();
mediaStreamRemote.addTrack(audioReceiver.track);
mediaStreamRemote.addTrack(videoReceiver.track);
videoRenderer.srcObject = mediaStreamRemote;
videoRenderer.play();
```

These are the main steps in writing the code. Our Test Drive sample additionally covers showing a local video preview, handling errors, and so on.
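The `myCapsToSendParams` outline in Step 8 leaves the intersection logic open. The sketch below fills in a simplified version of its steps 2 to 5 under stated assumptions: capability codecs are plain objects with `name`, `clockRate`, optional `numChannels`, `preferredPayloadType` and `rtcpFeedback` (a subset of the ORTC `RTCRtpCapabilities` fields); codec order follows the receiver's list; and header extensions (step 1), codec-specific `parameters` and the `encodings` entries that Edge requires are not handled. It is an illustration, not the Test Drive sample's implementation:

```javascript
// Sketch only: a simplified take on the myCapsToSendParams outline.

// Step 2: codecs match if name (case-insensitive), clock rate and channel
// count agree; a missing numChannels is treated as 1.
function sameCodec(a, b) {
  return a.name.toLowerCase() === b.name.toLowerCase() &&
    a.clockRate === b.clockRate &&
    (a.numChannels || 1) === (b.numChannels || 1);
}

// Step 3 (feedback part only): keep rtcpFeedback entries listed by both sides.
function commonFeedback(a, b) {
  const listA = a.rtcpFeedback || [];
  const listB = b.rtcpFeedback || [];
  return listA.filter((fa) => listB.some((fb) =>
    fa.type === fb.type && (fa.parameter || "") === (fb.parameter || "")));
}

function myCapsToSendParams(sendCaps, remoteRecvCaps) {
  const codecs = [];
  // Walk the receiver's codecs so that its preference order is kept.
  for (const recvCodec of remoteRecvCaps.codecs) {
    const sendCodec = sendCaps.codecs.find((c) => sameCodec(c, recvCodec));
    if (!sendCodec) continue;
    codecs.push({
      name: recvCodec.name,
      payloadType: recvCodec.preferredPayloadType, // step 4: receiver decides
      clockRate: recvCodec.clockRate,
      numChannels: recvCodec.numChannels,
      rtcpFeedback: commonFeedback(sendCodec, recvCodec)
    });
  }
  // Step 5: RTP/RTCP multiplexing is required with DtlsTransport.
  return { codecs: codecs, rtcp: { mux: true } };
}

function myCapsToRecvParams(recvCaps, remoteSendCaps) {
  return myCapsToSendParams(remoteSendCaps, recvCaps);
}
```

Because `myCapsToRecvParams` only swaps the arguments, both peers arrive at the same payload types: each side's receive capabilities determine the `payloadType` values used for the media sent toward it.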

Once you understand how to set up a 1:1 call, extending it to a group call with a mesh topology, in which each peer has a 1:1 connection with every other member of the group, is fairly straightforward. Because our implementation does not support forking, you need separate 1:1 signaling for each connection, so that independent IceGatherer and DtlsTransport objects are used for each one.
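As a sketch of the mesh approach, the hypothetical `buildMesh` function below creates one independent connection per remote peer through a caller-supplied factory. In Edge, that factory would construct a fresh `RTCIceGatherer`, `RTCIceTransport` and `RTCDtlsTransport` each time, since forking is not supported. The function also picks the ICE role for each pair so that exactly one side of every pair starts its `RTCIceTransport` as `"controlling"`. Comparing peer IDs is an assumed convention, not part of ORTC, and the IDs must be unique:

```javascript
// Hypothetical mesh helper: one independent 1:1 connection per remote peer.
// createConnection(remoteId, iceRole) is supplied by the application.
function buildMesh(localId, peerIds, createConnection) {
  const connections = new Map();
  for (const remoteId of peerIds) {
    if (remoteId === localId) continue;
    // Assumed convention: the peer with the smaller ID is the controlling side.
    const iceRole = localId < remoteId ? "controlling" : "controlled";
    connections.set(remoteId, createConnection(remoteId, iceRole));
  }
  return connections;
}

// Number of 1:1 connections in a full mesh of n peers.
function meshLinkCount(n) {
  return (n * (n - 1)) / 2;
}
```

For example, with four participants each peer maintains three connections, and the mesh as a whole has six.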

Additional details on the implementation of ORTC in Microsoft Edge

We keep our implementation up to date with the latest ORTC specification. The specification has been fairly stable since the ORTC Community Group (CG) issued its "call for implementations", so we do not expect significant changes at the JavaScript API level. We therefore believe our implementation is ready for cross-browser interoperability testing at the protocol level.

Known limitations of our current implementation include:

- RTCIceTransportController is not supported. Our implementation handles ICE freezing/unfreezing per transport, so coordinating all IceTransports through a controller is not required. We believe this should be interoperable with existing implementations.

- RtpListener is not yet supported. This means SSRCs must be specified in advance in the RtpReceiver parameters.

- Forking is not supported in IceTransport, IceGatherer or DtlsTransport. Whether DtlsTransport should support forking is still under discussion in the ORTC CG.

- Non-multiplexed RTP/RTCP is not supported with DtlsTransport. Applications using DtlsTransport must use RTP/RTCP multiplexing.

- In RTCRtpEncodingParameters, we currently ignore most of the quality-related settings. However, the 'active' and 'ssrc' attributes must be set.

- The candidatepairchange event is not yet supported. Information about the candidate pair used for the connection can be obtained through the getNominatedCandidatePair method.

- The DataChannel functionality defined in the ORTC specification is not supported yet.
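Because RtpListener is unavailable and encodings must carry `active` and `ssrc`, the two peers have to agree on SSRC values through signaling before calling `send()` and `receive()`. A minimal sketch follows. It assumes that the sender picks the SSRC at random (RTP SSRCs are meant to be chosen randomly, per RFC 3550) and signals it to the remote peer together with its other parameters. The helper names `randomSsrc` and `withEncoding` are hypothetical:

```javascript
// Hypothetical helpers for the requirement that RTCRtpEncodingParameters
// carry 'active' and 'ssrc' (no RtpListener, so SSRCs must be known upfront).

// Pick a random non-zero 32-bit SSRC (range 1 .. 2^32 - 1).
function randomSsrc() {
  return Math.floor(Math.random() * 0xFFFFFFFF) + 1;
}

// Return a copy of RTP parameters with a single active encoding using the
// given SSRC; the sender and the matching receiver must use the same value.
function withEncoding(params, ssrc) {
  return Object.assign({}, params, { encodings: [{ ssrc: ssrc, active: true }] });
}
```

For example, the sender could call `audioSender.send(withEncoding(audioSendParams, audioSsrc))` and include `audioSsrc` in its signaling message, and the remote peer would pass the same value to `withEncoding` before calling `audioReceiver.receive(...)`.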

What's next

Although our implementation is still a preview, we would love to hear your feedback; it will help us complete ORTC support in Microsoft Edge in the coming months. Our goal is a standards-based implementation that interoperates with the modern web and with other real-time communication solutions across the industry.

As a step toward this goal, the Skype team will soon start using the ORTC API in Microsoft Edge to deliver full audio/video calling and collaboration experiences in the Skype and Skype for Business web clients. The team is also investing in interoperability with the standard WebRTC protocols so that Skype works across key desktop, mobile and browser platforms. For developers who want to integrate Skype and Skype for Business into their applications, the Skype Web SDK will also be updated to support the ORTC and WebRTC APIs. More information is available in the article "Enabling Seamless Communication Experiences for the Web with Skype, Skype for Business, and Microsoft Edge".

In addition, several members of the ORTC CG worked closely with us as early adopters of the technology. We plan to keep working together to evolve WebRTC toward "WebRTC Next Version (NV)", and we expect these early ORTC adopters to share their experiences soon.

Additional examples: ORTC + WebRTC Bundle, Call between ORTC and Twilio.

- Shijun Sun, Principal Program Manager, Microsoft Edge

- Hao Yan, Principal Program Manager, Skype

- Bernard Aboba, Architect, Skype

Source: https://habr.com/ru/post/269619/
