Low-cost VDS hosting in Russia: is it possible?

Impressed by the success of the American hosting company DigitalOcean (why hide it), in 2014 we decided to build inexpensive, high-quality VDS hosting in Russia. At that time we had a small “data center” of 4 racks in a former dormitory building, cooled by two low-cost split systems, plus a 100-megabit channel, 2 ancient Cisco routers and about a hundred relatively new servers. It would have been funny to promise customers good quality with such a starter kit, but we decided to try anyway. In this post we try to answer the question in the title and, along the way, share our experience of building low-cost hosting.

Stage zero. Strategy Development

After analyzing the market, we identified several types of VDS hosters in Russia:

- Resellers of foreign and Russian hosters

- Hosters renting ready servers

- Hosters with their own server hardware

- Hosters that have their own data center, network infrastructure and servers

The first type is the easiest. Apart from a website tied to the provider's billing system and some advertising, almost nothing is required. Low cost is impossible here, particularly for a Russian location.

The second type rents ready-made servers. You can build a hosting infrastructure on top of them and administer it remotely. By renting a large number of servers you can get a good rental price, but you are unlikely to reach super-low VDS prices.

The third type is the one that would have suited us. Lacking a professional data center, we had to consider renting racks in someone else's. But several factors bothered us: the cost of renting racks, restrictions on channel width and traffic, the lack of quick physical access to the equipment, and limits or surcharges on kilowatts consumed. After all, the final cost of a VDS hosting service is determined by only 3 things:

- Cost of equipment and its placement

- Electricity cost

- Traffic cost

Thinking about how to build a real low-cost service, we concluded that we could not do without our own data center after all. So we decided to improve our mini “data center” (still in quotes for now) as far as possible and see whether the project would interest the market.

We had not yet decided on tariffs, but we knew one thing for sure: they should be no higher than those of western competitors, or, if they were similar, the amount of resources provided should be larger. In the end we did both, but we will come back to this later.

For the disk subsystem we chose Samsung 840 EVO series SSDs, since they turned out to be the fastest in our tests. And because we had a lot of SATA HDDs in stock, we decided to add a line of tariffs at a more affordable price for those who do not care much about disk speed. As demand for VDS with SATA HDDs declined, we could eventually reduce their number to zero.

The SATA HDDs were combined in RAID 10, and the SSDs in RAID 1.

The main type of virtualization is KVM, but on servers with SATA HDDs we left a choice between KVM and OpenVZ. At that time a pair of OpenVZ nodes was already running as an experiment, and this type of virtualization was especially interesting to game server owners.

To this day we are not sure how correct that decision was, but we still give users a choice of SSD / HDD and KVM / OpenVZ. In terms of server administration and maintenance, it does not cause any extra trouble.

The main rule of the project was: minimum restrictions and maximum features and convenience for customers, even though it is low-cost. We wanted to do something amazing, to surprise the Russian market. Where to begin?

Stage one. Overselling?

There were no problems with memory or disk space. The capacity we provisioned, with a margin, was enough for 30-40 clients per node, since we bought SSDs of up to 1 TB and installed 48-60 GB of memory in each node. But there were obviously fewer cores than needed: 2 x Intel Xeon E5530 = 16 logical cores with Hyper-Threading enabled. In the course of our experiments, however, we found that the most loaded node uses no more than 50% of its CPU resources at peak, since a situation where ~30 clients all need 100% of their cores at the same time is unlikely. Still, we decided to play it safe. In semi-automatic mode (we plan to automate it fully in the future), if a node starts to consume more than 50% of its CPU resources, the most “voracious” clients are moved to less loaded nodes via live migration. The user will not even notice this. And since there are already more than 150 working nodes, there will simply be no situation in which a client is short of the declared CPU resources.
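The rebalancing rule described above can be sketched roughly as follows. This is only an illustration, not our actual tooling: the `Node` class, the `plan_migrations` function and the per-client usage figures are hypothetical, and the sketch only plans the moves; the live migration itself is left to the hypervisor.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

CPU_THRESHOLD = 0.50  # from the text: act when a node exceeds 50% CPU


@dataclass
class Node:
    """Hypothetical model of a hypervisor node."""
    name: str
    logical_cores: int
    # client id -> average number of logical cores the client keeps busy
    clients: Dict[str, float] = field(default_factory=dict)

    def load(self) -> float:
        """Fraction of the node's logical cores currently in use."""
        return sum(self.clients.values()) / self.logical_cores


def plan_migrations(nodes: List[Node]) -> List[Tuple[str, str, str]]:
    """Plan (client, source, target) moves until every hot node is back
    under the threshold or no suitable target remains."""
    moves = []
    for src in nodes:
        # Consider the most "voracious" clients first.
        heaviest_first = sorted(src.clients.items(),
                                key=lambda kv: kv[1], reverse=True)
        for client, usage in heaviest_first:
            if src.load() <= CPU_THRESHOLD:
                break
            others = [n for n in nodes if n is not src]
            if not others:
                break
            dst = min(others, key=Node.load)  # least loaded node
            new_dst_load = (sum(dst.clients.values()) + usage) / dst.logical_cores
            if new_dst_load > CPU_THRESHOLD:
                continue  # this client would overload the target; try a lighter one
            del src.clients[client]
            dst.clients[client] = usage
            moves.append((client, src.name, dst.name))
    return moves
```

For example, with a 16-core node `a` where clients use 6, 3 and 1 cores (62.5% load) and a nearly idle node `b`, the plan moves only the heaviest client to `b`, which brings `a` down to 25%.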

Yes, this is overselling, but competent overselling. In practice you will never feel a shortage of CPU. The question is a sensitive one and always causes a lot of argument. Also, server-grade Xeon processors show better performance under virtualization, because they spend significantly fewer CPU cycles on switching between processes than desktop counterparts with a higher clock frequency.

Another parameter we oversell is traffic. The same logic applies here as for the CPU: for every 100 customers with little traffic, there is one that pumps a lot. So we decided not to meter traffic and not to split it into Russian and foreign, but simply to provide a guaranteed 100 Mbit/s per VDS. We cap a client at 100 Mbit/s only if its traffic is so heavy that it starts to inconvenience neighbors on the same server. In all the time we have been operating, we have never applied this rule. In normal mode, traffic is sent and received at 300-800 Mbit/s. This is even better for the system: sending data out quickly leaves a shorter queue in the network stack and, as a result, loads the system less.
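As a rough sketch of this policy: nobody is capped while the node copes, and heavy clients are cut back to the guaranteed rate only under congestion. How “inconvenience to neighbors” is detected is an assumption here (the node's uplink being more than `congestion_ratio` full), and the function and parameter names are hypothetical.

```python
from typing import Dict, Optional

GUARANTEED_MBIT = 100.0  # from the text: guaranteed rate per VDS


def bandwidth_caps(usage_mbit: Dict[str, float],
                   node_uplink_mbit: float,
                   congestion_ratio: float = 0.9) -> Dict[str, Optional[float]]:
    """Return a cap in Mbit/s per VDS; None means the VDS is not capped.

    Assumption: neighbors are considered affected once the total traffic
    of the node exceeds congestion_ratio of its uplink capacity.
    """
    total = sum(usage_mbit.values())
    if total <= node_uplink_mbit * congestion_ratio:
        # Normal mode: everyone may burst above the guaranteed rate.
        return {vds: None for vds in usage_mbit}
    # Congested: only clients above the guaranteed rate are cut back to it.
    return {vds: (GUARANTEED_MBIT if rate > GUARANTEED_MBIT else None)
            for vds, rate in usage_mbit.items()}
```

With three clients at 800, 50 and 30 Mbit/s on a 1 Gbit/s uplink and `congestion_ratio=0.8`, only the first client would be capped at 100 Mbit/s; on a 10 Gbit/s uplink nobody would be.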

Stage Two. Own channel to M9

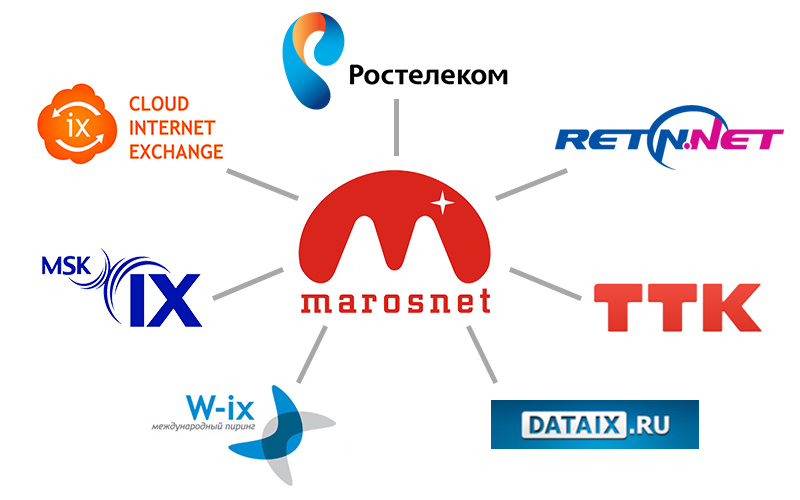

The weakest point at that time was the Internet channel, so the first thing we did was lay our own fiber to M9. This allowed us to improve our network independently. For good network connectivity, within six months we connected 7 links to leading providers and traffic exchange networks.

This gave the data center several advantages at once: minimum ping from different regions; high speed from the regions of Russia, Ukraine, the CIS countries and Europe; and a total external bandwidth of 65 Gbit/s. That is a lot more fun than 100 megabits. Since then, every virtual server has had 100 Mbit/s available with no traffic restrictions!
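To put these two figures side by side, here is a small back-of-the-envelope calculation. The 65 Gbit/s and 100 Mbit/s values come from the text; the function names and the VDS count in the usage example are hypothetical.

```python
TOTAL_UPLINK_GBIT = 65   # from the text: total external bandwidth
PER_VDS_MBIT = 100       # from the text: guaranteed rate per VDS


def saturating_vds(total_gbit: float, per_vds_mbit: float) -> int:
    """How many VDS could use their full guaranteed rate at the same time."""
    return int(total_gbit * 1000 // per_vds_mbit)


def oversubscription(vds_count: int, total_gbit: float, per_vds_mbit: float) -> float:
    """Ratio of the sum of guaranteed rates to the external bandwidth."""
    return vds_count * per_vds_mbit / (total_gbit * 1000)


print(saturating_vds(TOTAL_UPLINK_GBIT, PER_VDS_MBIT))          # 650
print(oversubscription(1300, TOTAL_UPLINK_GBIT, PER_VDS_MBIT))  # 2.0
```

So 650 servers could push their guaranteed 100 Mbit/s outward simultaneously, and a hypothetical 1300 VDS would mean a 2x oversubscription of the external channels.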

However, there was one serious problem that, by “happy” coincidence, spoiled life for us and our clients three times: at that time we had no backup channel. In a year and a half, our fiber was cut three times. As a rule, the cause is always the same: road works, construction, or an excavator at some random point along the route.

Stage Three. Improving switching equipment

One of the main problems during the period of growth was DDoS attacks. While the channel was weak, the channel went down. When the channel became powerful, the old Cisco routers began to go down. Between February and December 2014, the entire fleet of network equipment had to be replaced. The transition to 10G played a significant role in this. The most expensive “pleasure” at that time was the purchase of a Juniper MX-80 router, which can easily digest up to 80 Gbps of traffic.

From that moment on, the DDoS problem almost disappeared. Now 5-10 gigabits can flow to a single client and other customers are unlikely to notice, except perhaps the neighbors on the same server, but not those in the same rack. In such situations our system administrators usually respond very quickly. The most favorable option is to block malicious traffic on the edge switch. The least favorable is a blackhole.

Stage Four. Fighting heat and uninterrupted power

Sales started in October 2014. Although we did almost no advertising, the popularity of our VDS grew every day. We did not expect the number of active VDS to grow to 2-3 thousand in half a year. The first thing to run out was cooling: two split systems were no longer enough, and the temperature in the server room rose above 25 degrees. We divided the space into hot and cold aisles and arranged an exhaust for the warm air. For a while, that saved us. Then summer began. As an emergency measure, we bought an additional industrial floor-standing air conditioner. But the number of working nodes grew every day, and so did the temperature outside. We simply did not know what to do. Our "data center" was boiling, and things got as far as mobile office air conditioners aimed at individual, most overheated spots. Many of you are probably laughing at us now, but we were not laughing at all at the time. For about 2 weeks we fought desperately to save the servers, knowing full well that with the current “data center” we would not survive until the end of summer. In the 30-degree heat we had to order 2 huge blocks of dry ice! Only thanks to them did we manage to survive the hottest day.

The lack of uninterrupted power worried us no less. The server room had one extra rack with uninterruptible power supply units, which lasted 10-15 minutes at best under the maximum load of the "data center". There was no diesel generator at all (!). We were usually warned about outages in advance, and in those cases our partners rented us a diesel generator.

Of course, we understood that this was an unprofessional approach. There were sudden outages too. And once, during a planned power outage, we were mistakenly brought a generator far less powerful than we needed. The result was a very long downtime.

None of these problems came as a surprise. Back in February 2015 it became obvious that our growth rate was so high that it would inevitably lead to the problems described above. That was when we began actively searching for ways to expand.

Stage Five. Getting over the teething problems

Over half a year we received a lot of reviews, both positive and negative. We were aware of our weaknesses and wanted to fix them as soon as possible. High-quality and prompt technical support, a reasonable price and good performance were what kept customers with us. But periodic downtime did not suit anyone, least of all us. And then came the turning point: we acquired a new data center, now without quotes, with capacity for ~5000 servers.

This allowed us to get rid of our teething problems at once, as the new data center is equipped with a professional air-conditioning system, 2 powerful 2.4-megawatt diesel generators, industrial uninterruptible power supplies, an integrated fire extinguishing system and video surveillance. The data center was designed by professionals and can work at maximum load without any failures. Finally, a backup channel to M9 appeared. An overview of the new data center deserves a separate post, so we will not describe it in detail here.

In the new data center we assembled a more powerful cluster based on servers with Intel Xeon E5620 processors and SSDs in a RAID 10 configuration. According to UnixBench, this gave an overall performance increase of ~35%. The hypervisor now runs on CentOS 7, and installing a virtual machine takes 1-2 minutes (versus 5-15 before). We also enabled full passthrough of all physical CPU instructions, including the model name. Network bandwidth per rack increased from 10G to 20G. We added a private network: a special segment of the internal network, with "gray" IP addresses, for exchanging traffic between VDS. We implemented live migration when changing the tariff from HDD to SSD and back. Progress, after all!

So is low-cost possible in Russia?

Definitely yes! We have achieved very good results over the past year and a half, and some customers speak of us as the only option in Russia in terms of price-quality ratio. On the other hand, we still have flaws: we lack better software. Currently the hosting is based on ISPsystem products. We are now writing our own management system in Go and plan to roll it out in the future (we hope, the near future). For now there is no convenient API and no one-click backups; backups are available only as a separate service.

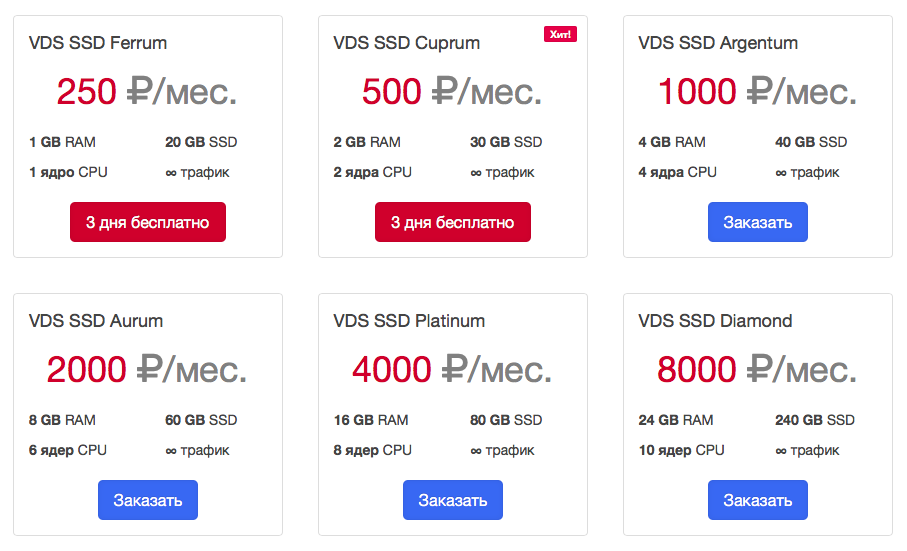

At the very beginning we promised to return to the tariffs. We cannot fail to mention them.

Initially, we created configurations similar to those of DigitalOcean. One to one. The price of the minimum configuration was set at 250 rubles for a VDS with SSD and 200 rubles for a VDS with a SATA HDD, which is under $5 at the current exchange rate.

Then we decided that this was not enough and went further: we doubled the memory on all tariffs and doubled the number of cores starting with the second tariff.

Conclusion

We hope you enjoyed our first post.

In future articles we will describe the new data center in detail, explain why we chose Go for the new control panel, and tell you what difficulties we ran into while building it.

Source: https://habr.com/ru/post/269561/
