Algorithm for extracting information in ABBYY Compreno. Part 1

Hi, Habr!

My name is Ilya Bulgakov, I am a programmer of the information extraction department at ABBYY. In a series of two posts, I will tell you our main secret - how Information Extraction technology works in ABBYY Compreno .

Earlier, my colleague Danya Skorinkin DSkorinkin managed to tell about the view of the system from the engineer, covering the following topics:

This time we will go deeper into the depths of ABBYY Compreno technology, let's talk about the architecture of the system as a whole, the basic principles of its operation and the algorithm for extracting information!

')

Recall the task.

We analyze natural language texts using ABBYY Compreno technology. Our task is to extract important information for the customer, represented by entities, facts and their attributes.

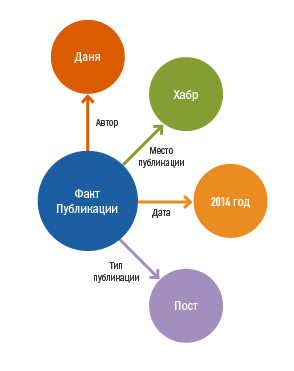

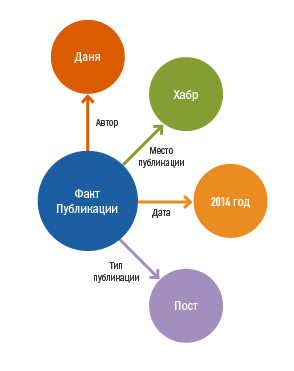

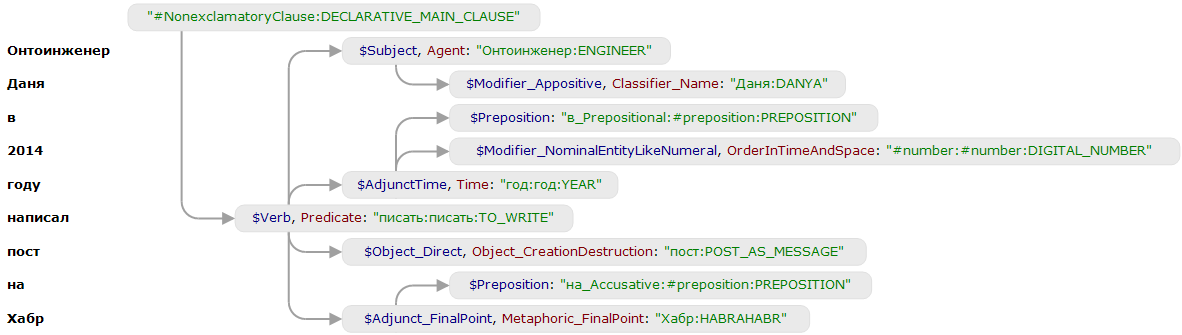

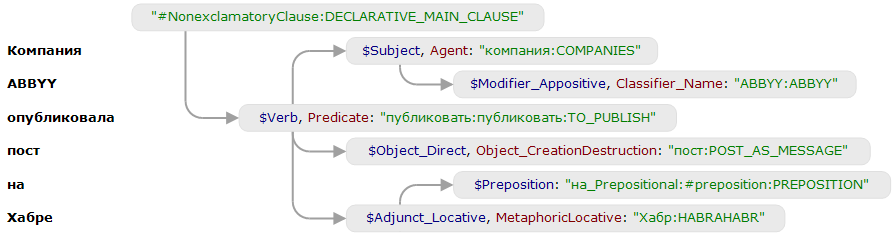

Onto engineer Danya in 2014 wrote a post on Habr

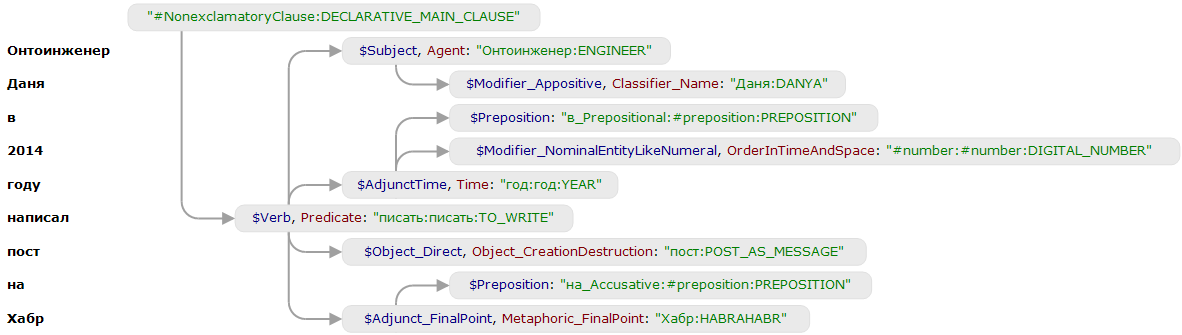

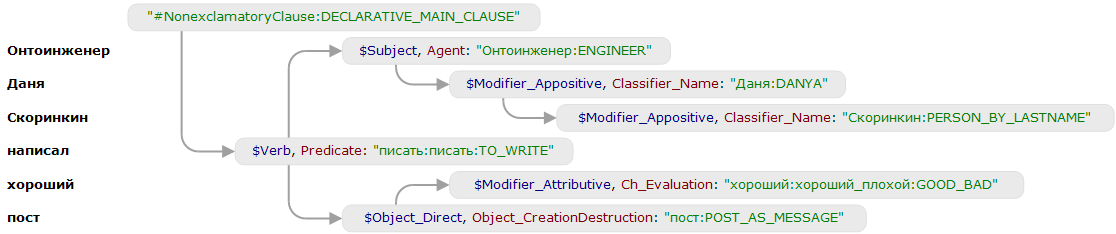

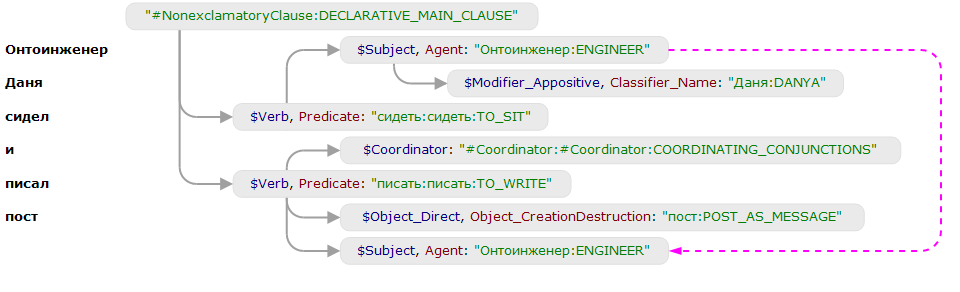

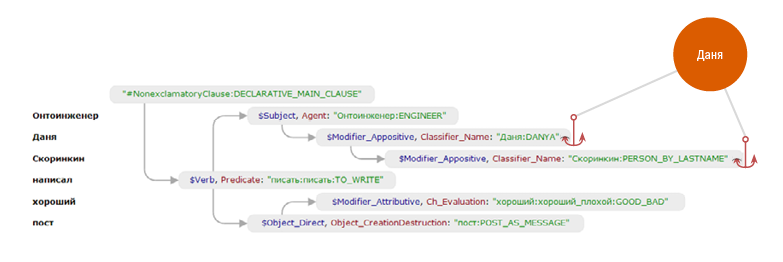

At the input to the information extraction system, parse trees received by the semantic-syntactic parser, which look like this:

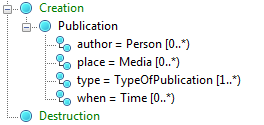

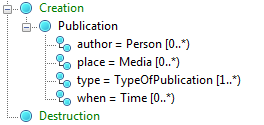

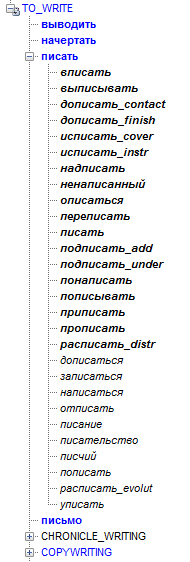

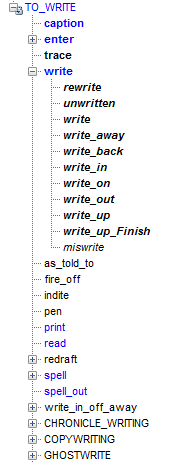

After a complete semantic-syntactic analysis, the system needs to understand what needs to be learned from the text. This will require a domain model (ontology) and rules for extracting information. The creation of ontologies and rules is carried out by a special department of computer linguists, whom we call engineer engineers. An example of an ontology that models the fact of publication:

The system applies the rules to different parts of the parse tree: if the fragment matches the pattern, the rule generates assertions (for example, create a Person object, add an attribute-Name, etc.). They are added to the “bag of statements” if they do not contradict the statements already contained in it. When no other rules can be applied, the system builds an RDF-graph (the format of the extracted information) according to the claims from the bag.

The complexity of the system is added to the fact that templates are built for semantic-syntactic trees, there is a wide variety of possible statements, rules can be written, almost without worrying about the order of their application, the output RDF-graph should correspond to a specific ontology and many other features. But let's get everything in order.

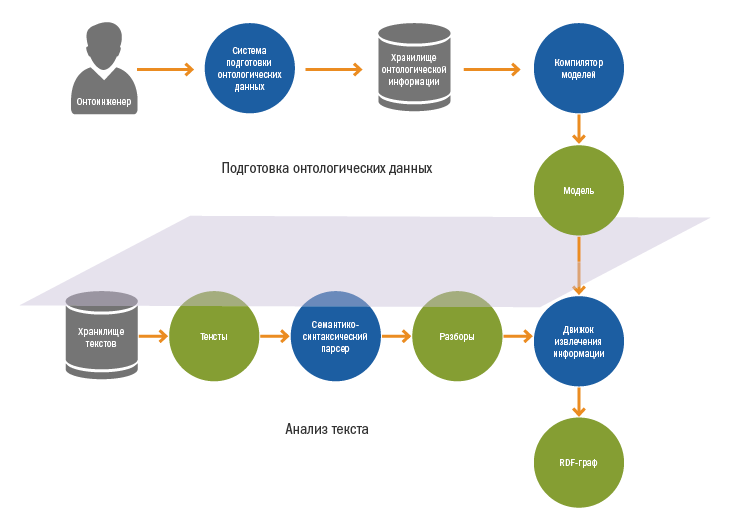

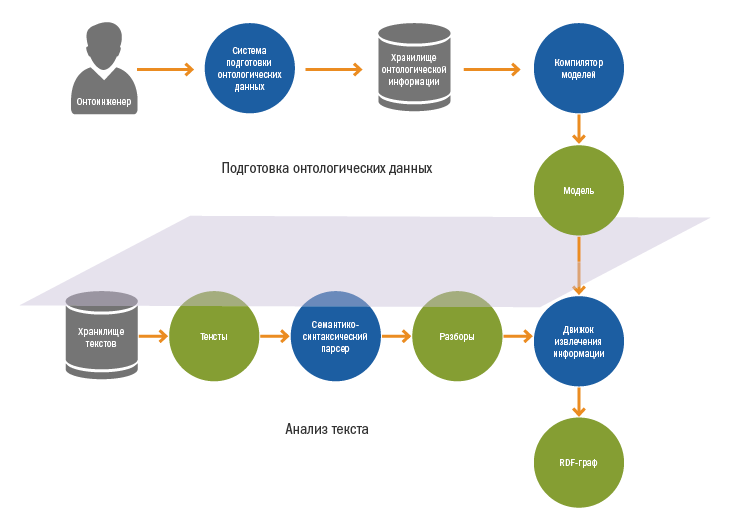

The system can be divided into two stages:

Preparation of ontological data is carried out by engineers in a special environment. In addition to designing ontologies, online engineers are engaged in creating rules for extracting information. We talked about the process of writing the rules in detail in the last article .

Rules and ontologies are stored in a special repository of ontological information, from where they fall into the compiler, which collects from them a binary domain model.

In the model fall:

The compiled model arrives at the input to the “engine” of information extraction.

In the very depths of the ABBYY Compreno technology lies the Semantic-syntactic parser. The story about him is worthy of a separate article, today we will discuss only its most important features for our task. If you wish, you can study the article from the Dialog conference .

What we need to know about the parser:

Inside our system, we are not working with an RDF-graph, but with some internal representation of the extracted information. We consider the extracted information as a set of information objects, where each object represents a certain entity / fact and a set of statements associated with it. Inside the information extraction system, we operate with information objects.

Information objects are allocated by the system using rules written by engineers. Objects can be used in rules to select other objects.

The following operations can be performed on objects:

The first four points are intuitive, and we already talked about them in the previous article. Let us dwell on the latter.

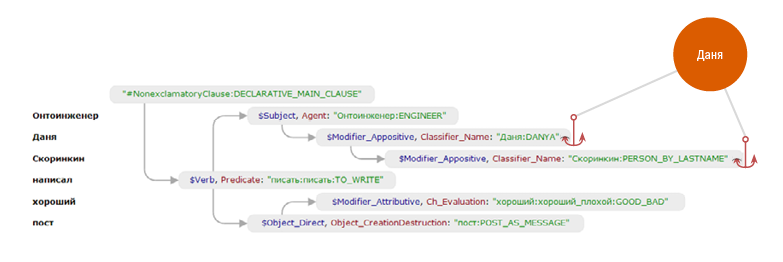

The mechanism of "anchors" occupies a very important place in the system. One information object can, in general, be connected by “anchors” with a certain set of nodes of semantic-syntactic trees. Binding to "anchors" allows you to re-access objects in the rules.

Consider an example.

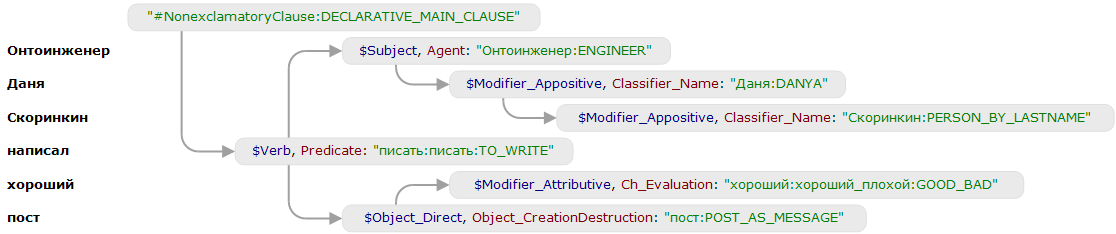

Onto engineer Danya Skorinkin wrote a good post

The rule below creates a person “Danya Skorinkin” and connects it with two components.

The first part of the rule (before the sign =>) is a pattern on the parse tree. The template involves two components with the semantic classes “PERSON_BY_FIRSTNAME” and “PERSON_BY_LASTNAME” . Two variables were compared with them - name and surname . In the second part of the rule, the first line creates a person P on the component, which is mapped to the variable name . This component is connected by the “anchor” with the object automatically. The second line of the

The result is an information object-person associated with two components.

After this, a fundamentally new opportunity appears in the template part of the rules - to check that the information object is already attached to a specific place in the tree.

This rule will only work if the object of the Person class has been attached to the component with the semantic class “PERSON_BY_LASTNAME” .

Why is this technique important to us?

The concept of the anchor mechanism is close to the notion of reference, but does not fully correspond to the model adopted by linguists. On the one hand, anchors often mark different referents of the same extracted subject. On the other hand, in practice this is not always the case, and the placement of anchors is sometimes used as a technical tool for the convenience of writing rules.

The placement of anchors in the system is a fairly flexible mechanism, allowing to take into account core (non-wood) communications. With the help of a special construction in the rules, it is possible to connect an object with an anchor not only with the selected component, but also with the components related to its core relational connections.

This feature is very important to increase the completeness of the selection of facts - the allocated information objects are automatically associated with all nodes that the parser considered coreferential, after which the rules highlighting the facts begin to “see” them in new contexts.

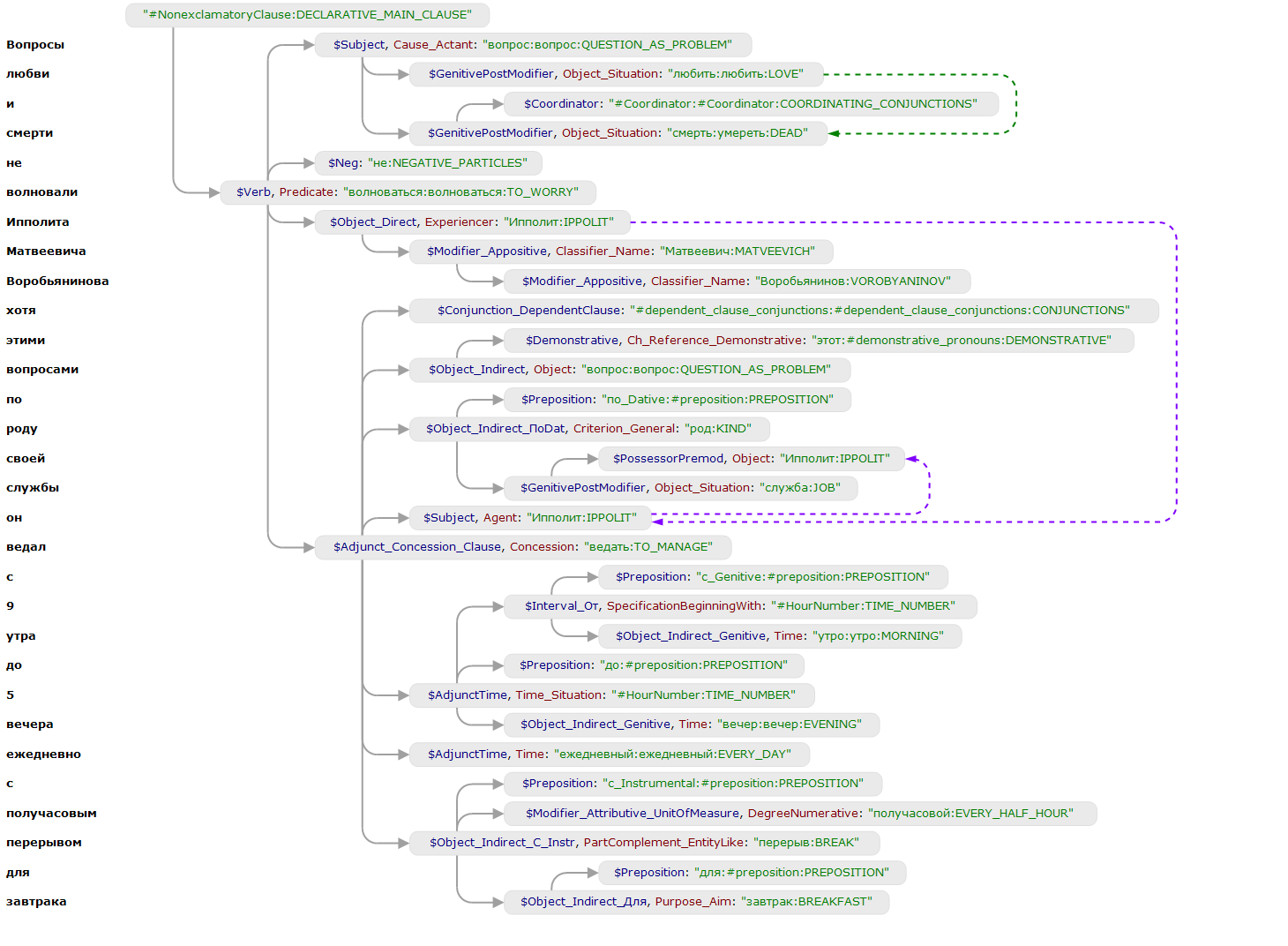

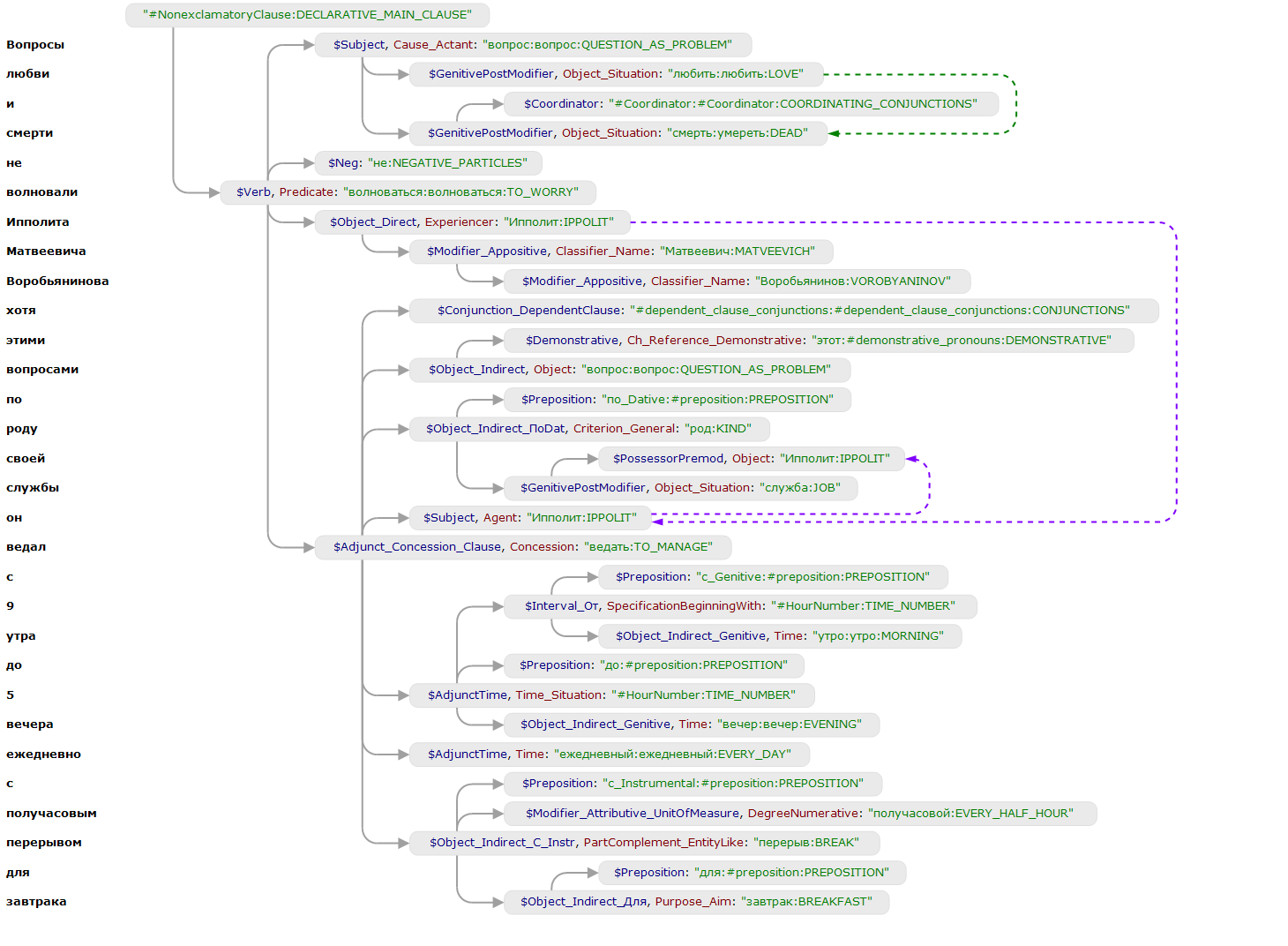

Below is an example of coreference. We analyze the text “The questions of love and death did not worry Ippolit Matveyevich Vorobyaninov, although, by the nature of his service, he was in charge of these questions from 9 am to 5 pm daily, with a half-hour break for breakfast.”

The parser restores the IPPOLIT semantic class for the “He” node. The nodes are connected by coreferential non-wood connection (indicated by a purple arrow).

The following construction in the rules allows the anchor to associate an object P not only with the node that matched the this variable, but also with those nodes that are associated with it by a core relation (i.e., go along the purple arrows).

On it the first part came to an end. In it, we talked about the general system architecture and elaborated on the input data of the information extraction algorithm (analyzes, ontologies, rules).

The next post, which will be released tomorrow, we will immediately begin with a discussion of how the "engine" of extracting information is arranged and what ideas it contains.

Thank you for your attention, stay with us!

Update: second part

My name is Ilya Bulgakov, I am a programmer of the information extraction department at ABBYY. In a series of two posts, I will tell you our main secret - how Information Extraction technology works in ABBYY Compreno .

Earlier, my colleague Danya Skorinkin DSkorinkin managed to tell about the view of the system from the engineer, covering the following topics:

This time we will go deeper into the depths of ABBYY Compreno technology, let's talk about the architecture of the system as a whole, the basic principles of its operation and the algorithm for extracting information!

')

What are you talking about?

Recall the task.

We analyze natural language texts using ABBYY Compreno technology. Our task is to extract important information for the customer, represented by entities, facts and their attributes.

Onto engineer Danya in 2014 wrote a post on Habr

At the input to the information extraction system, parse trees received by the semantic-syntactic parser, which look like this:

After a complete semantic-syntactic analysis, the system needs to understand what needs to be learned from the text. This will require a domain model (ontology) and rules for extracting information. The creation of ontologies and rules is carried out by a special department of computer linguists, whom we call engineer engineers. An example of an ontology that models the fact of publication:

The system applies the rules to different parts of the parse tree: if the fragment matches the pattern, the rule generates assertions (for example, create a Person object, add an attribute-Name, etc.). They are added to the “bag of statements” if they do not contradict the statements already contained in it. When no other rules can be applied, the system builds an RDF-graph (the format of the extracted information) according to the claims from the bag.

The complexity of the system is added to the fact that templates are built for semantic-syntactic trees, there is a wide variety of possible statements, rules can be written, almost without worrying about the order of their application, the output RDF-graph should correspond to a specific ontology and many other features. But let's get everything in order.

Information retrieval system

The system can be divided into two stages:

- Preparation of ontologies and compilation of models

- Text analysis:

- Semantic and syntactic analysis of texts in natural language

- Information retrieval and generation of the final RDF-graph

Preparation of ontological data and compilation of the model

Preparation of ontological data is carried out by engineers in a special environment. In addition to designing ontologies, online engineers are engaged in creating rules for extracting information. We talked about the process of writing the rules in detail in the last article .

Rules and ontologies are stored in a special repository of ontological information, from where they fall into the compiler, which collects from them a binary domain model.

In the model fall:

- Ontologies

- Extraction Rules

- Identification rules

The compiled model arrives at the input to the “engine” of information extraction.

Semantic-syntactic analysis of texts

In the very depths of the ABBYY Compreno technology lies the Semantic-syntactic parser. The story about him is worthy of a separate article, today we will discuss only its most important features for our task. If you wish, you can study the article from the Dialog conference .

What we need to know about the parser:

- The parser generates trees of semantic-syntactic analysis of sentences (one tree per sentence). Subtrees we call components. As a rule, tree nodes correspond to the words of the input text, but there are exceptions: sometimes several words are grouped into one component (for example, text in quotes), sometimes zero nodes appear (instead of omitted words). Nodes and arcs are marked.

- Nodes are based on a semantic hierarchy. The semantic hierarchy is a tree of generic relations, the intermediate nodes of which are language-independent semantic classes (for example, “HABRAHABR” ), and “leaves” are lexical classes specific to each language ( “Habr: HABRAHABR” ). Information about syntactic and semantic compatibility is correlated with hierarchy nodes. The principle of inheritance works - everything that is true for the parent class turns out to be true for the child if there is no explicit clarification in the child class.

An example of a semantic class and lexical classes specific to different languages.

- In addition to semantic-syntactic trees, the ABBYY Compreno parser returns information about non-wood links between their nodes (additional links between nodes that cannot be represented in the tree structure). First of all, these are relations expressing coreference (the same real-world object is mentioned in the text several times). For example, for the phrase “Onto engineer Danya sat and wrote a post” for the verb “to write” a zero subject will be restored, which will be associated with a non-timber link with the “Onto-engineer” node. ABBYY Compreno uses several other types of non-wood connections. In some cases, non-wood links can connect nodes from different sentences. This is often the case, for example, when resolving a pronoun anaphor. We told in detail about the pronominal anaphor in this post and in a separate article at the Dialogue conference .

An example of a restored subject (a pink arrow leads to it).

Onto engineer Danya sat and wrote a post.

- Removing homonymy. In one of the previous articles, we offered to consider the phrase “These types of steel are in stock” as an example of syntactic homonymy, which can have completely different meanings in different contexts.

The removal of homonymy occurs due to two factors:- All restrictions inherent in the semantic hierarchy are taken into account.

Tree nodes generated by the parser are always tied to a certain lexical class of the semantic hierarchy. This means that in the process of analysis, the parser removes lexical polysemy. - The compatibility statistics collected on the corpus of parallel texts is used (these are collections of texts in which texts in one language go along with their translations into another language). The idea of the approach is that, having a bilingual parser working on a single semantic hierarchy, it is possible to collect qualitative compatibility statistics on aligned parallel cases without additional markup.

When collecting statistics, only those aligned pairs of sentences for which the resulting semantic structures are comparable are taken into account. The latter means successful resolution of homonymy, since homonymy is overwhelmingly asymmetric in different languages.

No need for additional markup allows the use of large enclosures.

- All restrictions inherent in the semantic hierarchy are taken into account.

A word about information objects

Inside our system, we are not working with an RDF-graph, but with some internal representation of the extracted information. We consider the extracted information as a set of information objects, where each object represents a certain entity / fact and a set of statements associated with it. Inside the information extraction system, we operate with information objects.

Information objects are allocated by the system using rules written by engineers. Objects can be used in rules to select other objects.

The following operations can be performed on objects:

- Create

- Annotate text fragments

- Bind to ontology classes

- Fill attributes

- Associate with components using an anchor mechanism

The first four points are intuitive, and we already talked about them in the previous article. Let us dwell on the latter.

The mechanism of "anchors" occupies a very important place in the system. One information object can, in general, be connected by “anchors” with a certain set of nodes of semantic-syntactic trees. Binding to "anchors" allows you to re-access objects in the rules.

Consider an example.

Onto engineer Danya Skorinkin wrote a good post

The rule below creates a person “Danya Skorinkin” and connects it with two components.

name "PERSON_BY_FIRSTNAME" [ surname "PERSON_BY_LASTNAME " ] => Person P(name), Anchor(P, surname); The first part of the rule (before the sign =>) is a pattern on the parse tree. The template involves two components with the semantic classes “PERSON_BY_FIRSTNAME” and “PERSON_BY_LASTNAME” . Two variables were compared with them - name and surname . In the second part of the rule, the first line creates a person P on the component, which is mapped to the variable name . This component is connected by the “anchor” with the object automatically. The second line of the

Anchor(P, surname) we explicitly associate with the object of the second component, which is associated with the variable surname .The result is an information object-person associated with two components.

After this, a fundamentally new opportunity appears in the template part of the rules - to check that the information object is already attached to a specific place in the tree.

name "PERSON_BY_LASTNAME" <% Person %> => This.o.surname == Norm(name); This rule will only work if the object of the Person class has been attached to the component with the semantic class “PERSON_BY_LASTNAME” .

Why is this technique important to us?

- The whole system of fact finding is based on already retrieved information objects.

For example, when filling in the attribute “author” at the fact of publication, the rule is based on the persona object created earlier. - The technique helps with the decomposition of the rules and improves their support.

For example, one rule can only create a person, and several others can select individual properties (first name, last name, middle name, etc.).

The concept of the anchor mechanism is close to the notion of reference, but does not fully correspond to the model adopted by linguists. On the one hand, anchors often mark different referents of the same extracted subject. On the other hand, in practice this is not always the case, and the placement of anchors is sometimes used as a technical tool for the convenience of writing rules.

The placement of anchors in the system is a fairly flexible mechanism, allowing to take into account core (non-wood) communications. With the help of a special construction in the rules, it is possible to connect an object with an anchor not only with the selected component, but also with the components related to its core relational connections.

This feature is very important to increase the completeness of the selection of facts - the allocated information objects are automatically associated with all nodes that the parser considered coreferential, after which the rules highlighting the facts begin to “see” them in new contexts.

Below is an example of coreference. We analyze the text “The questions of love and death did not worry Ippolit Matveyevich Vorobyaninov, although, by the nature of his service, he was in charge of these questions from 9 am to 5 pm daily, with a half-hour break for breakfast.”

The parser restores the IPPOLIT semantic class for the “He” node. The nodes are connected by coreferential non-wood connection (indicated by a purple arrow).

The following construction in the rules allows the anchor to associate an object P not only with the node that matched the this variable, but also with those nodes that are associated with it by a core relation (i.e., go along the purple arrows).

// , P , // this. c Coreferential , // , // , . anchor( P, this, Coreferential ) On it the first part came to an end. In it, we talked about the general system architecture and elaborated on the input data of the information extraction algorithm (analyzes, ontologies, rules).

The next post, which will be released tomorrow, we will immediately begin with a discussion of how the "engine" of extracting information is arranged and what ideas it contains.

Thank you for your attention, stay with us!

Update: second part

Source: https://habr.com/ru/post/269191/

All Articles