Testing NVIDIA GRID + VMware Horizon

However, I could not find an objective head-to-head comparison with local Quadro performance.

Our configurator has long offered server models with GRID support, since GRID cards (formerly VGX) have been on the market for quite a while.


The first tests did not meet expectations. I waited for the drivers to mature and gradually stopped following progress in this area.

The idea of testing this technology resurfaced during one project. The client needed to optimize its existing server fleet to virtualize workplaces running specialized 3D software.

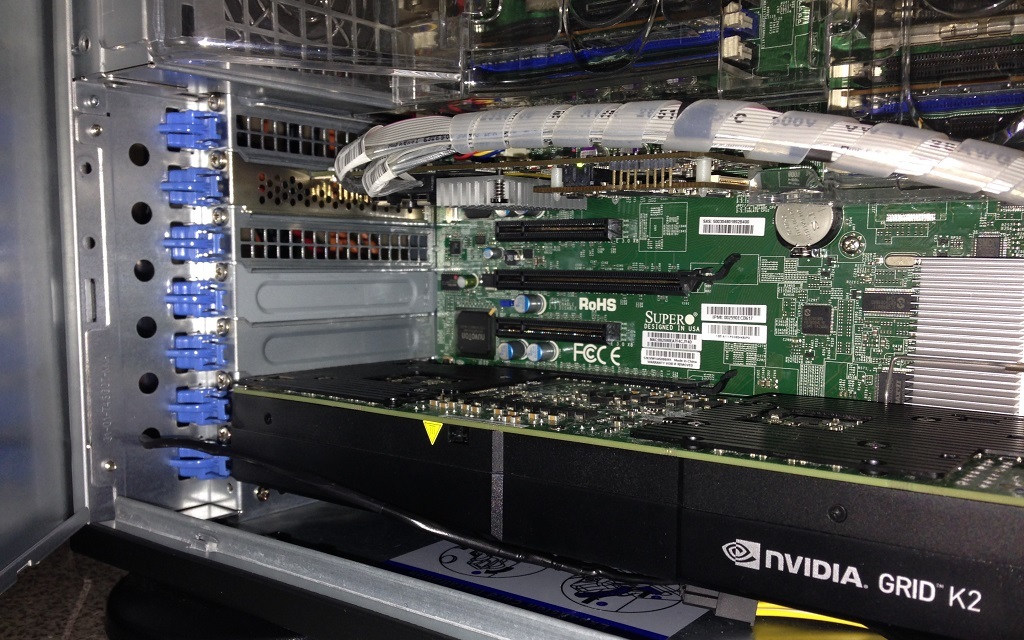

The servers were of the following configuration:

- Case CSE-745TQ

- X9DR3-F motherboard

- 2 × E5-2650 CPUs

- 128GB RAM

- 8 SAS disks in RAID5

We equipped the servers with GRID K2 cards and chose VMware as the hypervisor. We then installed the specialized software on virtual machines and ran the tests.

While running the customer's benchmark, I observed 3D graphics performance that was unprecedented for a virtual environment. The results prompted me to continue the study. For further GRID tests, I chose SPECviewperf as a reasonably objective benchmark.

I also wanted to estimate the total cost of the solution and compare it with an implementation based on personal workstations.

Fortunately, we had Quadro K5000 and Quadro K420 cards in stock.

To begin with, I ran the tests locally on Windows 7 to get baseline results for the Quadro K5000 and K420. The GRID K2 card carries two GPUs similar to the K5000's. I therefore need this data to compare virtual machine performance across the different GPU partitioning modes.

At the very beginning, cooling the card was a problem. The GRID K2 is passively cooled, and the standard fans of the 745 chassis cannot push enough air through the cards. I had to mount a 90 mm fan on the rear panel of the chassis and cover all the empty expansion slots. To be safe, I set the fan to maximum speed. It turned out quite noisy, but the cooling is excellent.

There are many step-by-step guides online for installing and configuring the software, so I will only briefly go over the main points.

After installing ESXi, install the required drivers and modules for GRID, then deploy vCenter and Horizon. Create a virtual machine (with Windows, for example), update the VMware software in the guest and install all Windows updates. Further settings depend on which GPU virtualization mode you choose.

There are 3 options:

- vSGA (GPU resources are shared among virtual machines through a VMware driver) is of no interest here and will not be considered: it offers high density, but extremely low performance.

- vDGA (the physical GPU is passed through to a virtual machine, which uses the native NVIDIA driver) is also of limited interest, since it does not provide high density. We consider it only for comparison with local Quadro K5000 performance and with the K280Q vGPU profile.

- vGPU (part of a GPU's resources is assigned to a virtual machine via a special NVIDIA driver) is the most interesting and long-awaited option. I pinned my main hopes on it, since vGPU allows unprecedented virtual machine density with hardware 3D acceleration.

vDGA

To use this virtualization mode, the GRID K2 card must be passed through to the virtual environment. In the vSphere Client, open the host settings and mark the devices for passthrough to virtual machines.

After rebooting the host, add the new PCI device in the virtual machine settings.

Start the virtual machine and install the NVIDIA GRID driver and Horizon Agent. After the reboots, the system shows a physical card with the native NVIDIA driver. It also shows a number of virtual devices (audio playback, microphone, etc.).

Next, go to the Horizon setup. In the web interface, create a new pool of existing virtual machines.

Hardware rendering is not enabled, since we are in passthrough mode. Configure the pool entitlements. From this point on, any device with the Horizon client installed can use this virtual machine.

I tried connecting from an iPhone 5.

3D was displayed with slight delays, but streaming video was terribly slow. Everything ran smoothly when I connected over LAN. I concluded that either the wireless network was causing the lag, or the phone's processor could not keep up with decoding PCoIP.

I repeated the tests on an iPhone 5s, and the result was better. The iPhone 6 showed excellent results.

Performance on Android smartphones was not much different from the iPhone 5, and working was not very comfortable. In any case, the flexibility of access to the virtual machine is obvious. You can use an existing fleet of workstations and office computers, or you can switch to thin clients. The Horizon client is available for almost every popular OS.

There is also a ready-made Linux-based build that already includes the required client. It costs about $40.

So, in vDGA mode, we can create 2 virtual machines per K2 card, each with exclusive use of its own GPU.

Performance in SPECviewperf is very high for a virtual environment, but still lower than in the local Quadro K5000 tests. All results are presented at the end of the article for an objective assessment.

vGPU

To use this virtualization mode, clear (or leave unchecked) the passthrough checkboxes in the host settings.

In the virtual machine settings, add a Shared PCI Device.

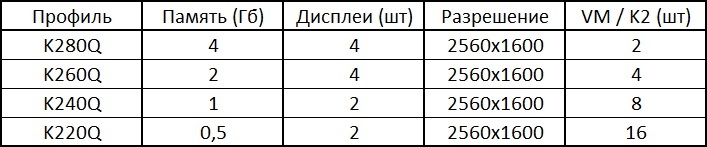

There are several profiles to choose from.

K200 is a stripped-down K220Q, with a lower resolution and less video memory.

K220Q through K260Q are the main profiles to choose from for specific 3D tasks.

K280Q is a controversial profile. Its maximum number of virtual machines is the same as with vDGA (2 per K2 card), and its performance is lower. In my opinion, its only advantage is that it can be combined with another vGPU profile. Note that no more than 2 profile types can be assigned to one GRID card. Moreover, vGPU and vDGA modes cannot be combined, for an obvious reason: they interact with the virtual environment in different ways.
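The profile rules above can be expressed as a small check. This is a minimal sketch, not a VMware or NVIDIA tool, and `check_card` is a hypothetical helper. The framebuffer sizes and per-GPU limits in `K2_PROFILES` are assumptions taken from NVIDIA's published GRID K2 profile table. Only the K220Q figures (0.5 GB, 16 users per card) and the K280Q figure (2 per card) appear in this article. The sketch also assumes that all vGPUs on one physical GPU must use the same profile. That rule would explain why a two-GPU K2 board can host at most two profile types.

```python
from dataclasses import dataclass

GPUS_PER_K2 = 2  # a GRID K2 board carries two physical GPUs


@dataclass(frozen=True)
class Profile:
    name: str
    framebuffer_mb: int  # video memory per VM
    max_per_gpu: int     # vGPUs one physical GPU can host (assumed values)


# Assumed GRID K2 profile limits (NVIDIA's published table, not measured here).
K2_PROFILES = {
    p.name: p
    for p in (
        Profile("K200", 256, 8),
        Profile("K220Q", 512, 8),
        Profile("K240Q", 1024, 4),
        Profile("K260Q", 2048, 2),
        Profile("K280Q", 4096, 1),
    )
}


def check_card(layout: list[list[str]]) -> int:
    """Validate a vGPU layout for one K2 card and return how many VMs it serves.

    `layout` holds one list of profile names per physical GPU. Raises
    ValueError if a GPU mixes profile types or holds more vGPUs than allowed.
    """
    if len(layout) != GPUS_PER_K2:
        raise ValueError("a GRID K2 card has exactly two GPUs")
    total = 0
    for gpu, vms in enumerate(layout):
        kinds = set(vms)
        if len(kinds) > 1:
            raise ValueError(f"GPU {gpu} mixes profiles: {sorted(kinds)}")
        if vms:
            limit = K2_PROFILES[vms[0]].max_per_gpu
            if len(vms) > limit:
                raise ValueError(f"GPU {gpu}: {len(vms)} x {vms[0]} exceeds {limit}")
        total += len(vms)
    return total
```

Two example layouts:

- `check_card([["K220Q"] * 8, ["K220Q"] * 8])` returns 16, matching the 16 K220Q users per card noted in the 3ds Max test.
- `check_card([["K280Q"], ["K240Q"] * 4])` returns 5. This is the kind of mixed layout where K280Q is useful.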

Having chosen the profiles and created the required number of virtual machines or templates, proceed to create one or more pools.

This time, select NVIDIA GRID VGPU in the 3D renderer settings.

Install the original NVIDIA drivers and Horizon Agent in the virtual machines. After that, the virtual machines are available through the Horizon client. In vGPU mode, the video card shows up as an NVIDIA GRID device with the profile name.

SPECviewperf V12.0.2 Testing

Visually, everything looked great, especially in K280Q mode.

For comparison, here is the same test in K220Q mode.

Less impressive, but in any case worthy of a virtual environment.

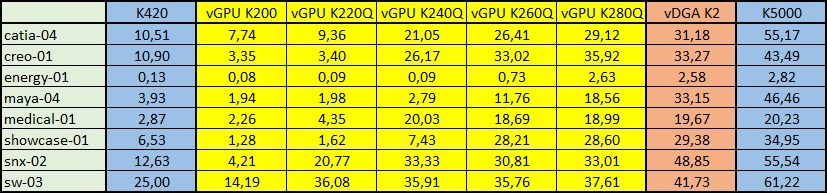

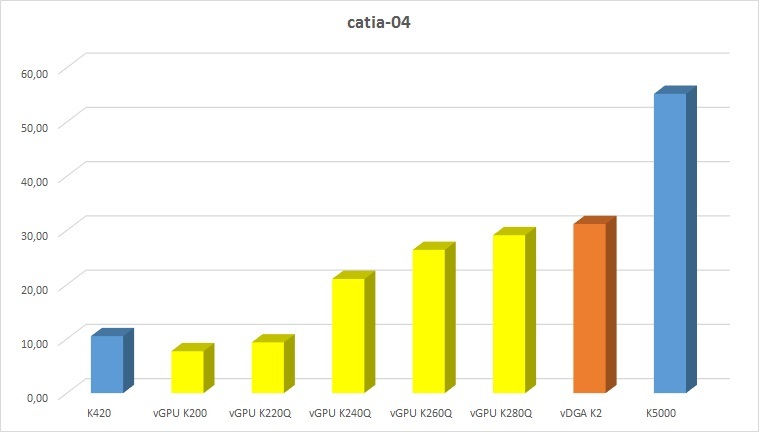

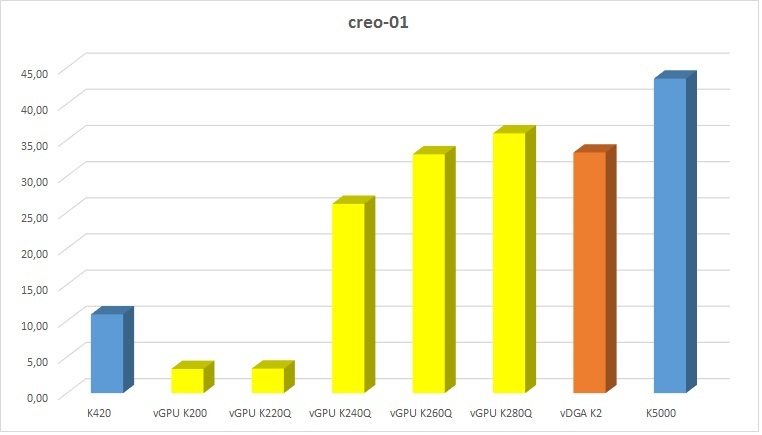

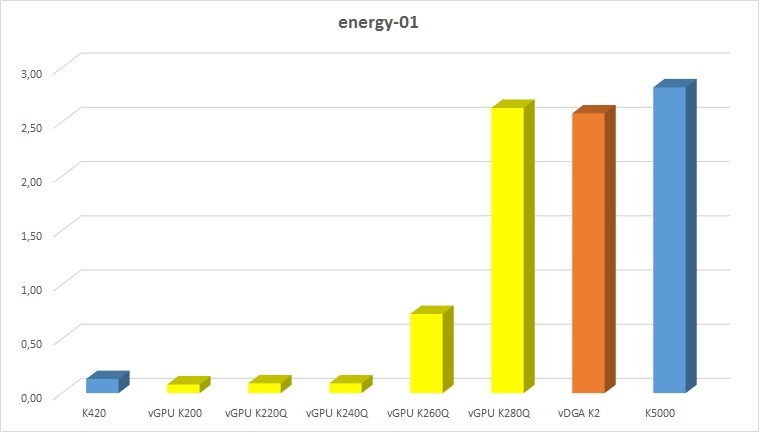

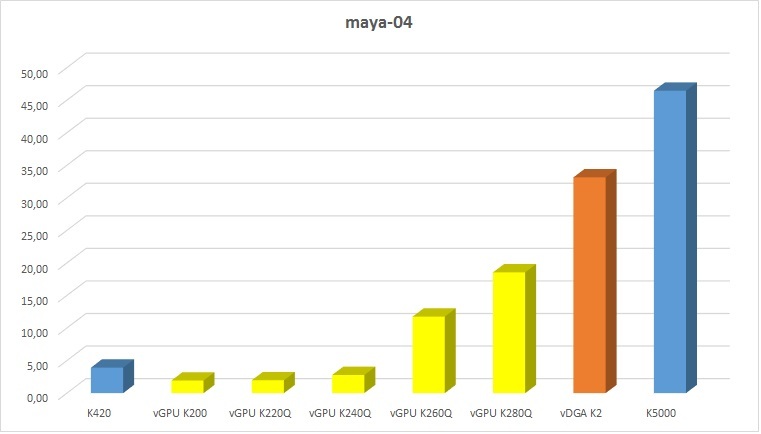

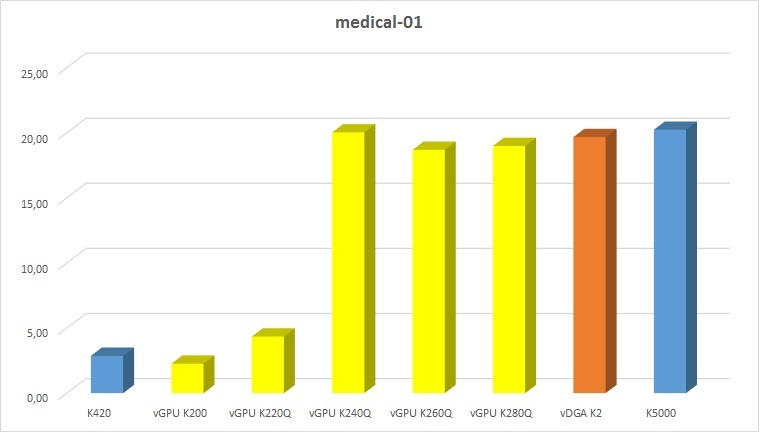

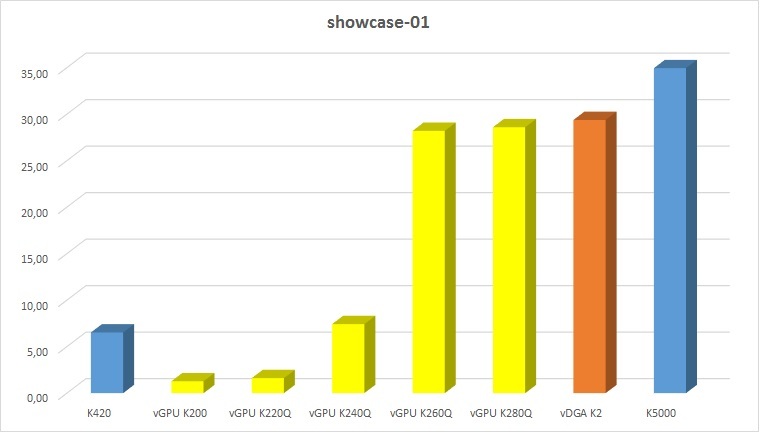

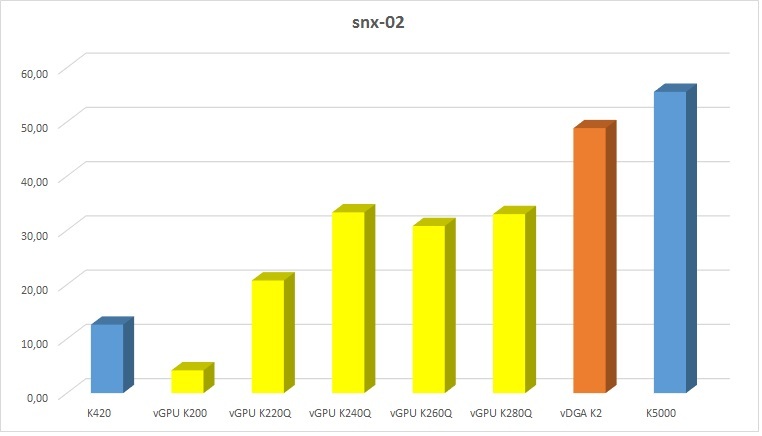

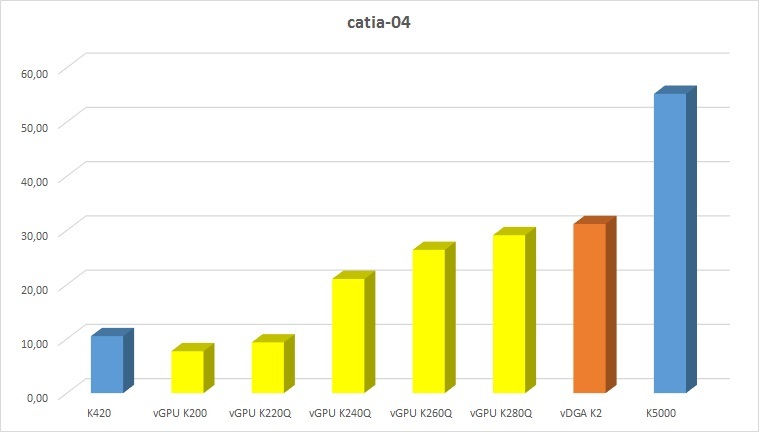

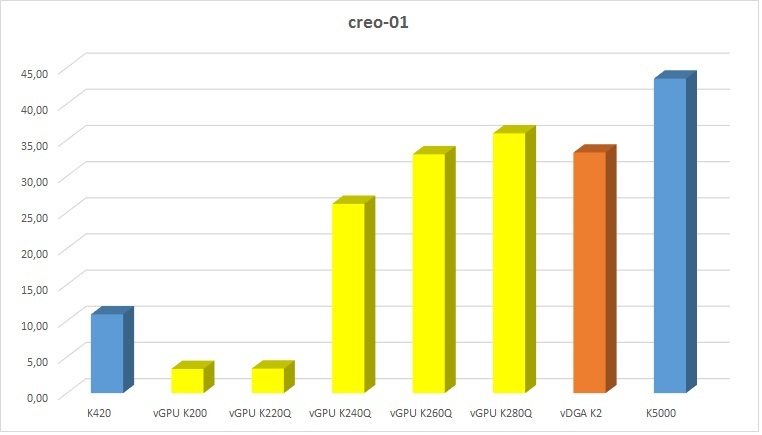

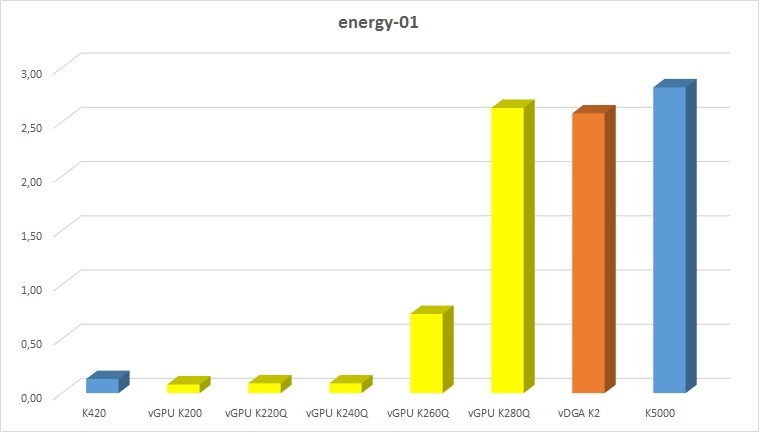

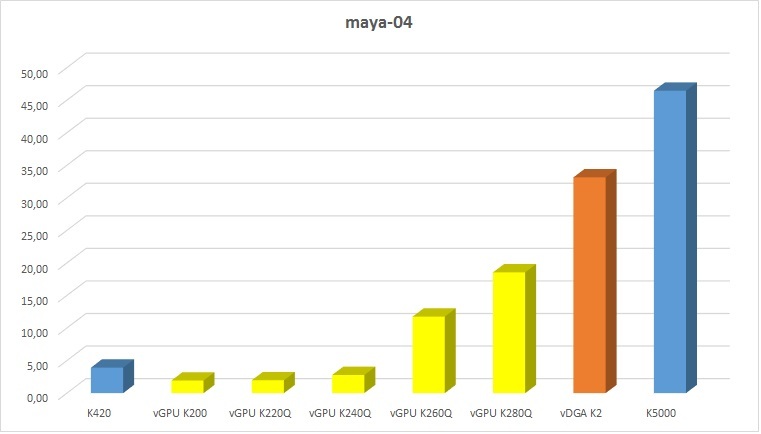

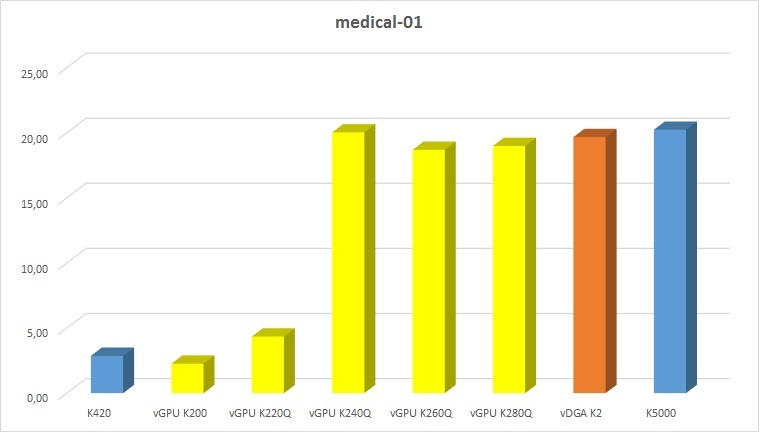

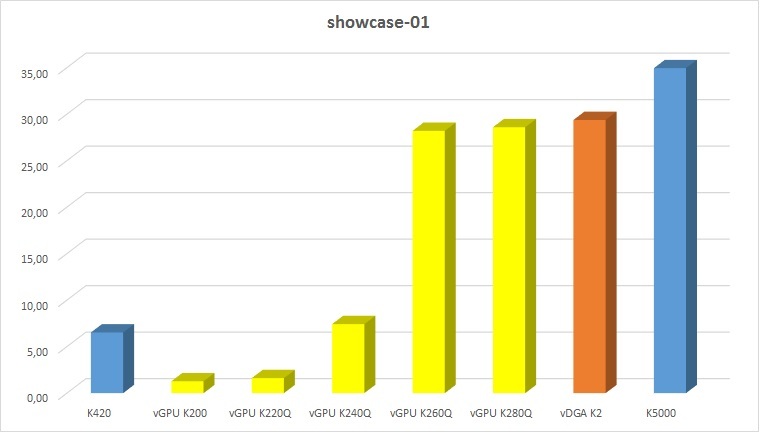

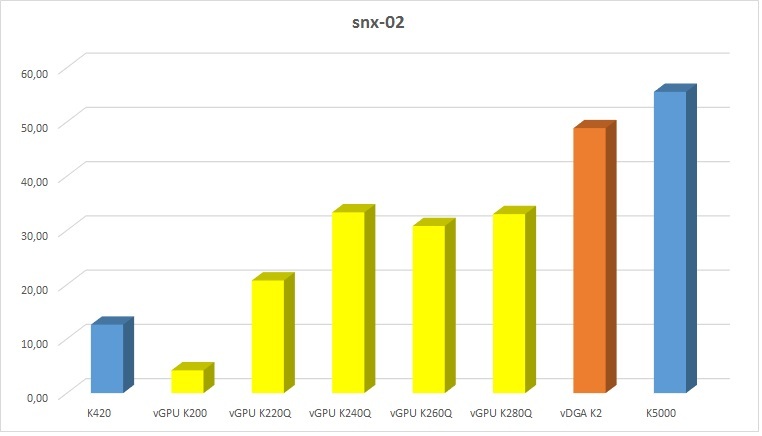

Below is a summary table for each SPECviewperf module across all virtualization modes. It also includes the results of the local Quadro K5000 and K420 tests.

Test scores

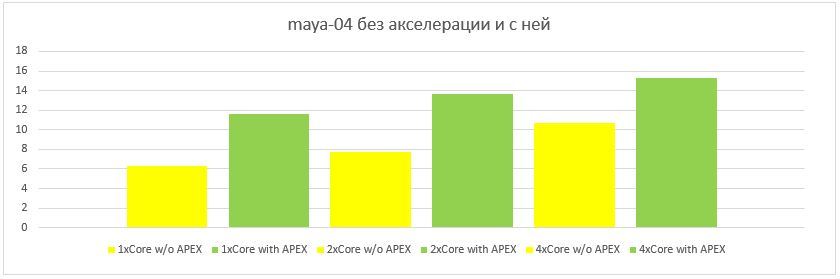

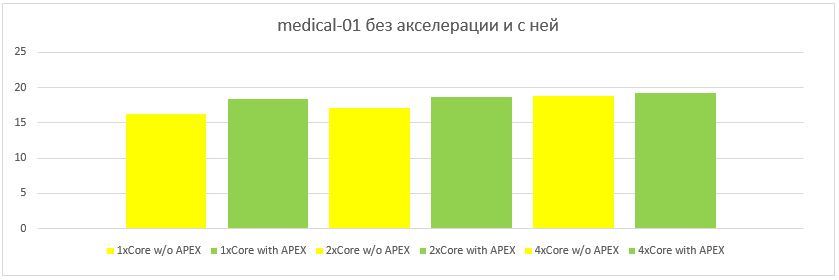

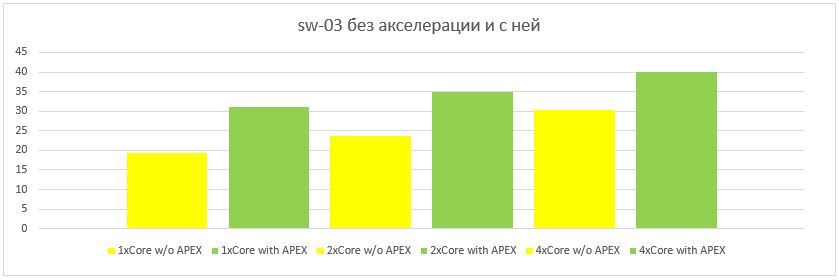

The results show that performance does not scale linearly across modes for some 3D applications:

- Siemens NX shows no difference between the K240Q, K260Q and K280Q profiles (the CPU probably became the bottleneck).
- The Medical module showed the same result in the K240Q, K260Q and K280Q modes. It also matched the vDGA mode and even the local Quadro K5000 tests.
- Maya shows a significant jump between K240Q and K260Q, probably due to the amount of video memory.
- SolidWorks showed the same result in all full-fledged profiles.

These results are far from a complete picture of the solution's performance. Still, they will help choose the optimal configuration and position the solution correctly for specialized 3D tasks.
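The reasoning above can be turned into a quick check over the summary table. Flat scores across increasingly large profiles point to a bottleneck outside the GPU. This is a minimal sketch. The function names, the 5% `tolerance` and the input format are assumptions for illustration. The actual SPECviewperf numbers are only in the tables above and are not reproduced here. A plateau only hints at a CPU or other non-GPU limit; it does not prove one.

```python
def relative_to_baseline(scores: dict[str, float], baseline: str) -> dict[str, float]:
    """Express each configuration's score as a fraction of a baseline run
    (for example, the local Quadro K5000 test)."""
    base = scores[baseline]
    return {name: value / base for name, value in scores.items()}


def find_plateaus(
    ordered: list[tuple[str, float]], tolerance: float = 0.05
) -> list[tuple[str, str]]:
    """Return consecutive configuration pairs whose scores differ by less than
    `tolerance` (relative), i.e. where extra GPU resources brought no gain.

    `ordered` must list configurations from the smallest to the largest profile.
    """
    flat = []
    for (prev_name, prev), (name, cur) in zip(ordered, ordered[1:]):
        if prev > 0 and abs(cur - prev) / prev < tolerance:
            flat.append((prev_name, name))
    return flat
```

Pass in one module's scores in the order K220Q, K240Q, K260Q, K280Q. For a pattern like the Siemens NX one described above, the check would flag the K240Q/K260Q and K260Q/K280Q pairs.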

3ds Max testing

The SPEC test for 3ds Max currently supports only version 2015 and requires it to be installed. I therefore limited myself to manual testing with the 2016 trial version.

All vGPU modes performed well. The limitation is the usual one: the less video memory allocated, the fewer polygons you can handle.

Working in the lowest mode (K220Q, 16 users per card) was no worse than on the entry-level Quadro K420. As the polygon count grew, the frame rate stayed at a comfortable 20-30 FPS.

Realistic mode (automatic rendering in the viewport) worked without delays. When the model stopped moving, the textures updated fairly quickly. Overall, I found nothing that made work uncomfortable.

Testing KOMPAS-3D

Out of curiosity, I also ran the KOMPAS-3D benchmark. Graphics performance was not much different across modes: it ranged from 29 to 33 in the benchmark's own arbitrary units ("parrots"). ASCON specialists said this is an average result for a similar solution on Citrix.

The test ran very quickly, with the model spinning at tremendous speed and without the smoothness seen in SPEC. Apparently this is a quirk of the test. So I tried rotating the model by hand, and it spun smoothly and comfortably even though the model is complex.

PCoIP hardware acceleration

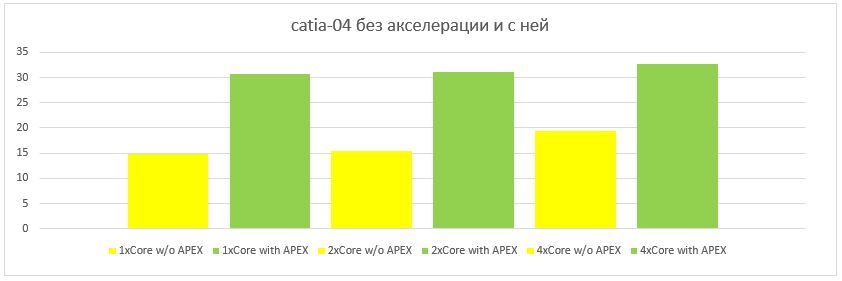

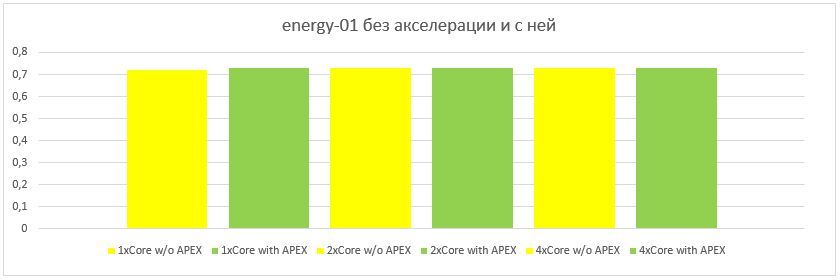

The SPEC results suggested that in some test modes the processor could be the bottleneck. I ran tests with fewer cores per virtual machine. With a single-core virtual machine, the results were significantly worse, even though SPEC loaded only one thread, and rarely to 100%.

I realized that besides the main workload, the CPU also encodes the PCoIP stream sent to the client. PCoIP can hardly be called a lightweight protocol, so this load must be substantial. To offload the CPU, I tried the Teradici PCoIP Hardware Accelerator APEX 2800.

After installing the driver on ESXi and in the virtual machines, I repeated several tests. The results were impressive:

Test scores

In some tests, performance increased by up to 2 times with the APEX 2800. The card can offload up to 64 active displays.
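Sizing the accelerator is simple arithmetic on the 64-display limit quoted above. This is a minimal sketch, and `apex_cards_needed` is a hypothetical helper. It assumes that every display of every VM should be offloaded. The article does not cover how the card handles displays beyond its limit.

```python
APEX_2800_MAX_DISPLAYS = 64  # active displays one card can offload (figure from the article)


def apex_cards_needed(vms: int, displays_per_vm: int = 1) -> int:
    """Smallest number of APEX 2800 cards that covers every active display."""
    if vms < 0 or displays_per_vm < 1:
        raise ValueError("vms must be >= 0 and displays_per_vm >= 1")
    displays = vms * displays_per_vm
    # Integer ceiling division: round up to whole cards.
    return (displays + APEX_2800_MAX_DISPLAYS - 1) // APEX_2800_MAX_DISPLAYS
```

For instance, 32 single-monitor VMs fit on one card. So do 32 dual-monitor VMs (64 displays), while 64 dual-monitor VMs would need two cards.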

Solution Cost Estimate

For a final comparison between virtualized graphics workplaces and personal workstations, we need to determine the cost of one workplace in each implementation.

I ran several calculations with different configurations. A virtual workplace turns out to be 1.5 to 4 times more expensive than a physical workstation, depending on the number of virtual machines. The most economical configuration was 32 virtual machines, each with 1 core, 7 GB RAM and a 0.5 GB K220Q profile (equivalent to a Quadro K420).

For those who want to see actual numbers, I attach links to the GRID solution configurator and the workstation configurator.
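The seat arithmetic behind such an estimate can be sketched as follows. `seat_totals` and `cost_ratio` are hypothetical helpers. The figure of 16 VMs per K2 card at K220Q comes from this article. Any prices you plug in are your own. The example below uses made-up round numbers, not figures from our configurators.

```python
def seat_totals(vms: int, cores_per_vm: int, ram_gb_per_vm: int, vms_per_k2: int) -> dict[str, int]:
    """Resource totals for a host serving `vms` identical virtual seats."""
    return {
        "k2_cards": -(-vms // vms_per_k2),  # ceiling division
        "vcpus": vms * cores_per_vm,
        "ram_gb": vms * ram_gb_per_vm,
    }


def cost_ratio(server_cost: float, vms: int, workstation_cost: float) -> float:
    """Cost of one virtual seat relative to one physical workstation.

    What goes into `server_cost` (licenses, clients, etc.) is up to the caller.
    """
    return (server_cost / vms) / workstation_cost
```

Some sample calls:

- `seat_totals(32, 1, 7, 16)` gives 2 K2 cards, 32 vCPUs and 224 GB of guest RAM for the budget configuration above.
- At K220Q, four K2 cards give the 64 seats quoted in the list of advantages.
- With hypothetical prices of 96,000 for the server and 1,500 per workstation, `cost_ratio(96000, 32, 1500)` returns 2.0.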

Naturally, no miracle happened, and it is unlikely ever to happen: higher density comes with a higher solution cost. But note the clear advantages of this technology:

- Security (the first benefit of a client-server architecture: all data is stored centrally and isolated)

- High density (up to 64 graphics application users in 4U of rack space)

- Maximum utilization of computing power (minimized system downtime compared to a personal workstation)

- Ease of administration (everything on one box and in one place)

- Reliability (fault tolerance at the component level + ability to build a fault-tolerant cluster)

- Flexibility of resource allocation (in a matter of seconds, the user receives the resources necessary to perform resource-intensive tasks, without changing the hardware)

- Remote access (access from anywhere on the planet via the Internet)

- Cross-platform clients (connection from any device with any OS that supports VMware Horizon Client)

- Energy efficiency (with an increase in the number of workplaces, the power consumption of the server and thin clients becomes several times lower than that of all local workstations)

Findings

Testing revealed several potential bottlenecks in the system:

CPU frequency. The tests showed that the low clock speed of mainstream Intel Xeon processors often became the system's bottleneck. High-frequency processor models should therefore be used.

PC over IP. When displaying streaming video or 3D animation, PCoIP encoding takes a significant share of the virtual machine's CPU resources. A PCoIP hardware accelerator therefore significantly improves the performance of the computing subsystem.

Disk subsystem. It is no secret that in most cases the spinning disk is the bottleneck of a personal system. In a server, the problem is even more acute. Starting a dozen virtual machines at once makes any array struggle, and beyond a certain point adding more disks no longer helps. Hybrid disk subsystems with SSDs are therefore necessary. With a larger number of virtual machines, it is worth considering dedicated storage altogether.
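The usual write-penalty rule of thumb shows why a spindle RAID5 array runs out of steam. Each front-end write to RAID5 costs about four back-end I/Os: read data, read parity, write data, write parity. This is a minimal sketch. The penalty table is the common textbook approximation. The 150 IOPS per SAS disk and the 50% read share in the example are assumptions, not measurements from this test.

```python
# Approximate back-end I/Os per front-end write (textbook rule of thumb).
WRITE_PENALTY = {"raid0": 1, "raid1": 2, "raid10": 2, "raid5": 4, "raid6": 6}


def frontend_iops(disks: int, iops_per_disk: float, read_fraction: float,
                  level: str = "raid5") -> float:
    """Front-end IOPS an array can sustain for a given read/write mix."""
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    raw = disks * iops_per_disk  # total back-end IOPS of all spindles
    penalty = WRITE_PENALTY[level]
    # Each read costs 1 back-end I/O; each write costs `penalty` back-end I/Os.
    return raw / (read_fraction + (1.0 - read_fraction) * penalty)
```

Take the test servers' 8 SAS disks in RAID5: `frontend_iops(8, 150, 0.5)` returns 480.0. That is only about 15 IOPS per VM across 32 virtual machines. The same eight disks in RAID10 would give 800.0.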

That's all. Of course, these were only preliminary tests, meant to assess the potential of this solution and understand its positioning. The next steps will be:

- a full test cycle of the original configuration with constant and variable load on all virtual machines;
- building a cluster to improve fault tolerance;
- and perhaps something else. What would you recommend?

Conclusion

I had the idea of opening access to the virtualization server for all of you, so that interested users could evaluate the performance themselves. But I ran into a difficulty: what tool should be used to assess performance? You have already seen the SPEC results, and a synthetic test is not the best way to check things by hand anyway.

To that end, I want to run a couple of polls to learn your opinion and prepare the best platform for testing.

Thank you for your attention.

Source: https://habr.com/ru/post/269087/
