Inside the Amazon Web Services Infrastructure. Part 1

Rows of servers inside the Amazon data center

Once cloud computing had emerged as a new paradigm and then matured into an industry of its own, Amazon quickly became the leader in the field. The launch of Amazon Web Services in 2006 (just think, only about 10 years ago!) allowed the retailer to become the largest player in the market, with a share worth $6 billion.

Over time, Amazon’s cloud services came to serve tens and then hundreds of thousands of customers (now over a million). Uptime is therefore critical, and even a minute of downtime can cost the company’s customers dearly. Not long ago a failure did happen, and Netflix, Reddit, Tinder, IMDb and many other services suffered as a result. The cause was a fault in a data center located in Virginia, USA. Today we invite you to get acquainted with the company’s infrastructure as a whole, including its approximate geography and capabilities.


Amazon now operates at least 30 data centers in its global network, and another 10-15 will soon be built or are at the design stage. Unfortunately, the company does not disclose the full layout of its infrastructure, but judging by indirect data, experts conclude that in the USA alone the total capacity of the company’s data centers is about 600 MW.

According to Gartner experts, the computing power of Amazon Web Services is five times greater than the combined computing power of 14 other cloud providers.

Lifting the veil of secrecy

Since the advent of Amazon Web Services, Amazon has been very reluctant to talk about its data centers, disclosing significantly less about its infrastructure than companies such as Google, Facebook and Microsoft. In the past few years, however, the situation has changed somewhat: top managers are now more willing to talk about the company’s data centers.

“We are often asked questions about the physical infrastructure of Amazon Web Services. We have never talked much about this area, and now we want to lift the veil of secrecy regarding our network and data centers,” said Werner Vogels, chief technology officer and vice president of Amazon Web Services, at the July AWS Summit in Tel Aviv.

The main purpose of such talks is to help developers understand Amazon’s philosophy of cloud infrastructure and to learn more about the system’s uptime and reliability. The entire infrastructure is divided into 11 regions, each containing a cluster of data centers. Each region has several Availability Zones, which let customers duplicate or mirror their services to avoid downtime. That said, recent AWS infrastructure failures show that Amazon’s team could have worked harder and more thoroughly.

Investment in the platform is growing

Amazon Web Services grew by 81% in the last quarter compared with the same period last year. This does not mean that the entire infrastructure is growing at a similar rate, but you can be sure that Amazon is constantly adding servers, storage systems and data centers to its infrastructure.

“Every day, Amazon adds enough capacity to its infrastructure to have supported all of Amazon’s global infrastructure back when the company was a business with an annual revenue of $7 billion,” said James Hamilton, vice president and lead engineer of Amazon. This is quite significant.

Amazon’s data center development strategy is now built around reducing costs. Incidentally, since the launch of Amazon Web Services the company has cut its service prices 49 times.

Werner Vogels (Photo: YouTube)

“We do a lot to reduce the cost of our services. Our business has a small margin and we are happy to keep it at the current level. Nevertheless, we reduce the cost of service on a regular basis,” said Werner Vogels.

The cornerstone of Amazon’s entire strategy is determining the optimal size of a data center. According to company representatives, most Amazon data centers house 50 to 80 thousand servers, with a single data center drawing 25-30 MW. It took the company a long time to arrive at these figures.

How big should a data center be?

As a data center grows, so does the risk associated with it failing. According to the company’s experts, a data center is, so to speak, a unit of failure: the larger the data center, the greater the impact a failure in it can have. That is why the company does not build data centers with more than 100 thousand servers, and most of its data centers are smaller in size and capacity.

A second question is how many servers Amazon Web Services runs. Information provided by the company’s vice presidents points to a minimum of 1.5 million. The maximum number of AWS servers calculated by Platform is 5.6 million.

Amazon leases facilities from a number of data center operators, including Digital Realty Trust and Corporate Office Properties Trust. In the past, the company leased buildings such as warehouses and converted them into data centers. Relatively recently, Amazon decided to change its strategy and focus on building data centers from scratch. In Oregon, the company used prefabricated modular components to build a data center.

Amazon also has the advantage of building its own power substations. Here the driving need is speed rather than control of operating expenses: the savings are minimal, but a data center can be brought online at a much faster pace.
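The figures quoted so far can be cross-checked with simple arithmetic. The sketch below is only a back-of-the-envelope illustration, not Amazon's or anyone else's published method: it assumes that every one of the "at least 30" data centers falls in the 50 to 80 thousand server range and draws 25-30 MW, and the function names are our own.

```python
# Back-of-the-envelope check of the figures quoted in the article.
# The inputs come from the text; multiplying them together is our own
# illustrative assumption, not a method described by Amazon.

DATA_CENTERS = 30                    # "at least 30 data centers"
SERVERS_PER_DC = (50_000, 80_000)    # "50 to 80 thousand servers"
POWER_PER_DC_MW = (25, 30)           # "25-30 MW" per data center


def fleet_size_range(data_centers, servers_per_dc):
    """Return (low, high) total server counts for the whole fleet."""
    low, high = servers_per_dc
    return data_centers * low, data_centers * high


def watts_per_server_range(power_mw, servers_per_dc):
    """Return (low, high) facility watts available per server.

    The low bound pairs the smallest power with the most servers;
    the high bound pairs the largest power with the fewest servers.
    """
    p_low, p_high = power_mw
    s_low, s_high = servers_per_dc
    return p_low * 1_000_000 / s_high, p_high * 1_000_000 / s_low


if __name__ == "__main__":
    print(fleet_size_range(DATA_CENTERS, SERVERS_PER_DC))
    print(watts_per_server_range(POWER_PER_DC_MW, SERVERS_PER_DC))
```

Under these assumptions the fleet comes out at 1.5 to 2.4 million servers, so the low end coincides with the 1.5 million minimum mentioned above, and each server has roughly 310 to 600 W of facility power behind it.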

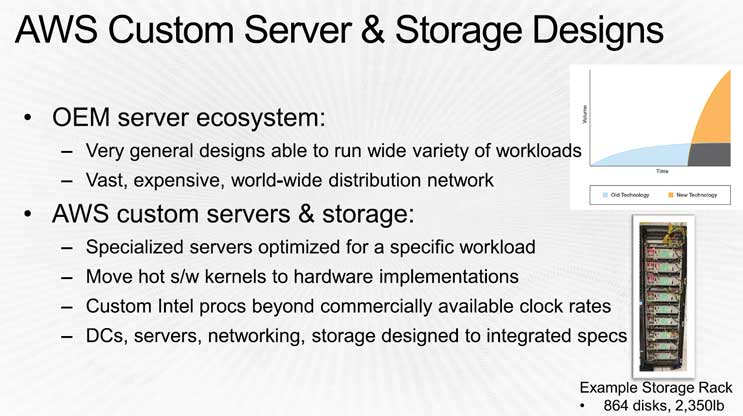

Custom servers and storage

In the earliest stages of its cloud platform, Amazon purchased equipment from well-known manufacturers. Its main supplier was Rackable Systems. In 2008 alone, Amazon ordered $86 million worth of servers from this company, and a year earlier $56 million worth.

But as the infrastructure grew, the company began to develop its own hardware for its data centers. This allows Amazon to fine-tune its servers, storage systems and network equipment, optimizing the overall performance of all hardware while reducing costs.

“Yes, we create our own servers. We could buy ready-made solutions, but they are very expensive and not well optimized for our needs. Therefore, we create our own equipment. We worked together with Intel so that we could run conventional processors in a higher-performance mode. This, in turn, allowed us to create customized server types for very specific uses,” Vogels said.

Image: James Hamilton

EC2 instances run on these servers, which are built around Xeon E5 processors based on the Haswell architecture and manufactured on a 22-nanometer process. The servers come in different configurations, each designed for different tasks.

According to Amazon experts, the company now knows how to build servers with a configuration that is optimally suited to perform a specific range of tasks, including supporting the operation of certain software and services.

AWS uses both proprietary software and hardware to build the network infrastructure.

The speed of light and the cloud

The “speed of light factor” plays an important role in the design of the Amazon infrastructure.

“The most common way customers work is to run applications in a specific data center, and for this option you can make that data center as reliable as possible, accepting that 99.9% uptime is quite sufficient. But if you are building a highly reliable application or service, you need two data centers to keep it running. The distance between those data centers can be very large, and the signal path rather long. Therefore, building a distributed infrastructure, especially when the data centers are far apart, can be a difficult task,” Vogels said.

The answer to this problem is Availability Zones: clusters of data centers within a region that let customers run instances in several isolated locations, thus avoiding single points of failure. If something happens to one instance, the application is served by another instance in a different Availability Zone. Each region has 2 to 6 Availability Zones.

The company has made Availability Zones isolated from each other, yet close enough to keep signal delay on the network to a minimum. According to company experts, the signal delay between zones is usually 1-2 milliseconds. For comparison, the delay for data traveling from New York to Los Angeles is 70 milliseconds.

“We decided to place Availability Zones close to each other. But they must still be located in different geographic areas and be connected to different power grids; moreover, they must be at different heights above sea level,” says Hamilton.
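To see why a second location matters, it helps to put numbers on the 99.9% figure from the quote. The sketch below is a simplified model with hypothetical function names: it assumes that the copies of a service fail independently of each other and that the service stays up as long as at least one copy is up. Real outages are not always independent, so treat the result as an upper bound rather than a guarantee.

```python
def combined_availability(single, replicas):
    """Availability of a service that stays up while at least one of
    `replicas` copies is up.

    Assumes failures are statistically independent, which real
    outages do not always respect.
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("single must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # The service is down only when every copy is down at once.
    return 1.0 - (1.0 - single) ** replicas


def downtime_minutes_per_year(availability):
    """Expected downtime in minutes over a 365-day year."""
    return (1.0 - availability) * 365 * 24 * 60


if __name__ == "__main__":
    for copies in (1, 2, 3):
        a = combined_availability(0.999, copies)
        print(copies, a, downtime_minutes_per_year(a))
```

In this model a single location at 99.9% is down for about 525 minutes a year, while two independent locations bring the expected figure to about half a minute.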
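The latency figures can be related to distance with a rough propagation estimate. This is a sketch under stated assumptions, not a measurement: it assumes light travels through optical fiber at about 200,000 km/s (roughly two thirds of its speed in a vacuum), takes the straight-line distance between New York and Los Angeles as about 3,940 km, and ignores routing, switching and queuing delays. The article does not say whether its figures are one-way or round-trip.

```python
# Assumed propagation speed in optical fiber: about 200,000 km/s,
# i.e. 200 km per millisecond (roughly 2/3 of c in a vacuum).
FIBER_SPEED_KM_PER_MS = 200.0

# Assumed straight-line distance New York - Los Angeles, in km.
NY_LA_DISTANCE_KM = 3940.0


def one_way_delay_ms(distance_km, speed_km_per_ms=FIBER_SPEED_KM_PER_MS):
    """Minimum one-way propagation delay over a straight fiber run."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return distance_km / speed_km_per_ms


def max_distance_km(delay_ms, speed_km_per_ms=FIBER_SPEED_KM_PER_MS):
    """Longest straight fiber run that fits in a one-way delay budget."""
    if delay_ms < 0:
        raise ValueError("delay_ms must be non-negative")
    return delay_ms * speed_km_per_ms


if __name__ == "__main__":
    print(one_way_delay_ms(NY_LA_DISTANCE_KM))      # coast to coast
    print(max_distance_km(1), max_distance_km(2))   # 1-2 ms budget
```

Under these assumptions propagation alone accounts for roughly 20 ms one way between the coasts (about 40 ms round trip), with the rest of the quoted 70 ms presumably coming from real-world routing. A 1-2 ms budget caps the fiber path between zones at a few hundred kilometers at most, and less if the figure is round-trip.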

In the next article, we’ll tell you more about the geography of Amazon’s network infrastructure.

Source: https://habr.com/ru/post/268217/
