DDoS attacks and e-commerce: modern approaches to protection

Marketing materials on DDoS protection, published by various companies, repeat the same kind of mistake time after time. They cite recorded attacks of, say, 400 Gbit/s taken from someone else's reports and conclude that things are bad and something must be done urgently, yet the specifications of the service on offer put the upper limit of filtered attack volume at 10 Gbit/s. Such inconsistencies are quite common.

This happens because the specialists who build the service do not really believe that attacks this powerful are real: neither they nor anyone they know have ever encountered one. So a question that matters for e-commerce arises: which threats are realistic today, and which are unlikely? How should the risks be assessed? Artem Gavrichenkov covered all this and much more in his talk at the Bitrix Summer Fest conference.

Attack classification

Let's start with what kinds of attacks exist. As the classification criterion we take the target of the attack, that is, what exactly is being taken out of service. By this criterion there are four main classes of attacks, carried out at different levels of the OSI model.


The first class (L2) is channel saturation. These attacks aim to cut off access to the external network by exhausting channel capacity, by whatever means. As a rule this is done with high-volume amplification attacks (NTP, DNS, RIP amplification and so on; there is no point in listing them all). In general, floods of every kind, including ICMP flood, belong to this class. The goal is to push at least 1.1 Gbit/s into a channel of, say, 1 Gbit/s. That is enough to cut off access.

The second class (L3) is disruption of the network infrastructure. It includes, among others, attacks that cause problems with BGP routing and with network announcements (hijacking), as well as attacks that cause trouble on transit network equipment, for example by overflowing a connection tracking table. Attacks of this class are very diverse.

The third class (L4) is exploitation of weak points in the TCP stack, that is, attacks at the transport level. TCP, which underlies HTTP and a number of other protocols, is rather complex. For example, it uses a large table of open connections, each of which is essentially a state machine. Attacks on this machine make up the third class of DDoS attacks. A SYN flood also belongs here if it caused no damage at the two previous levels and reached the server itself, where it is by definition an attack on the TCP stack. The class also includes opening a large number of connections (TCP connection flood), which overflows the connection table. As a rule, tools such as SlowLoris and Slow POST are counted in this class as well.
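To make the "table of state machines" idea concrete, here is a minimal sketch of how a flood of unfinished handshakes can crowd a legitimate client out of a bounded connection table. It is a deliberately simplified model, not how any real TCP stack is implemented: the `ConnectionTable` class, its `capacity` and the addresses are assumptions made for illustration, and real stacks add timeouts, backlog queues and mitigations such as SYN cookies.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    SYN_RECEIVED = auto()   # SYN seen, still waiting for the final ACK
    ESTABLISHED = auto()    # three-way handshake completed


@dataclass
class ConnectionTable:
    """Toy model of a bounded connection table (hypothetical, for illustration)."""
    capacity: int
    entries: dict = field(default_factory=dict)

    def on_syn(self, client: tuple) -> bool:
        """Register a half-open connection; return False if the table is full."""
        if client in self.entries:
            return True
        if len(self.entries) >= self.capacity:
            return False
        self.entries[client] = State.SYN_RECEIVED
        return True

    def on_ack(self, client: tuple) -> bool:
        """Complete the handshake for a known half-open connection."""
        if self.entries.get(client) is State.SYN_RECEIVED:
            self.entries[client] = State.ESTABLISHED
            return True
        return False


table = ConnectionTable(capacity=1000)

# Spoofed sources send SYNs and never complete the handshake.
for i in range(1000):
    table.on_syn((f"198.51.100.{i % 256}", 1024 + i))

# A legitimate client now finds no free slot.
legit_accepted = table.on_syn(("203.0.113.7", 50000))
```

In this model every slot stays in `SYN_RECEIVED` forever, so `legit_accepted` ends up `False`; the point is only that the attacker spends almost nothing per entry while the server holds state for each one.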

The fourth class (L7) is degradation of the web application. This covers all sorts of “custom” attacks, from a typical GET/POST/HTTP flood to attacks that repeat the search and retrieval of specific information from the database, memory or disk over and over until the server simply runs out of resources.

Note that measuring attacks in gigabits makes sense mainly at the lowest level (L2). Taking down, say, MySQL through an advanced search across all products requires neither many gigabits nor much skill. Some 5,000 bots that actively request searches and refresh the page, perhaps even the same page (depending on the caching settings on the victim's server), are enough. An attack like that will cause trouble for many sites. Some will buckle under 50,000 bots; the most resilient systems withstand up to 100,000. Meanwhile, the largest number of simultaneously attacking bots recorded last year was 419,000.

Attack protection

Let's see what can be done to counter attacks at each of these levels.

L2. The only answer to brute force is more brute force. If the attack bandwidth exceeds 100 Gbit/s, those gigabits have to be processed somewhere, for example on the provider or data center side, and the bottleneck will always be the last mile. BGP Flow Spec lets you filter some attacks by packet signature; amplification, say, is easily cut off by source port. However, this method is fairly expensive and cannot protect against everything.
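As an illustration of "cut off by source port", the sketch below models only the matching logic such a rule expresses. It is not BGP Flow Spec syntax and does not talk to any router; the `Packet` type, the function names and the sample traffic are assumptions made for the example. The port numbers are the standard UDP ports of the services named earlier (DNS 53, NTP 123, RIP 520).

```python
from dataclasses import dataclass

# Well-known UDP service ports commonly abused as amplification reflectors.
AMPLIFICATION_SOURCE_PORTS = {53: "DNS", 123: "NTP", 520: "RIP"}


@dataclass(frozen=True)
class Packet:
    protocol: str    # "udp" or "tcp"
    src_port: int
    dst_port: int
    size_bytes: int


def matches_drop_rule(packet: Packet) -> bool:
    """True if the packet looks like a reflected amplification reply."""
    return packet.protocol == "udp" and packet.src_port in AMPLIFICATION_SOURCE_PORTS


def split_traffic(packets):
    """Return (passed, dropped) lists according to the source-port rule."""
    passed = [p for p in packets if not matches_drop_rule(p)]
    dropped = [p for p in packets if matches_drop_rule(p)]
    return passed, dropped


sample = [
    Packet("udp", 123, 40000, 468),    # NTP reply nobody asked for
    Packet("udp", 53, 40001, 3000),    # oversized DNS reply
    Packet("tcp", 51515, 443, 600),    # ordinary HTTPS request
]
passed, dropped = split_traffic(sample)
```

A rule this blunt also discards legitimate DNS or NTP replies addressed to the protected host, which is one reason the approach cannot cover every case.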

L3. At L3 you need to analyze the network infrastructure, and not only your own. A classic example: in 2008 Pakistan, through its own mistake, hijacked YouTube's prefixes over BGP, and a significant share of the video hosting's traffic was redirected to Pakistan. Unfortunately, this kind of trouble cannot be fought automatically; everything has to be done by hand. But before the fight can begin, you have to establish that the problem (a stolen prefix) has occurred at all. Once you have, contact the network operator, the data center administration, the hosting provider and so on; they will help resolve it. Detection requires advanced analytics of the network infrastructure, because generally the only sign of a hijack is that, from some moment on, the announcements of the network on the Internet become atypical, unlike what they had been for a long time before. Timely detection therefore requires at least a history of announcements.
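A minimal sketch of what "comparing against a history of announcements" could look like is given below. It assumes the history has already been collected from some route monitoring source and is available as a mapping from prefix to the set of origin AS numbers seen for it; that data format, the function name and the alert wording are all hypothetical. The prefixes and AS numbers come from ranges reserved for documentation.

```python
import ipaddress


def find_atypical_announcements(history, current):
    """Compare current (prefix, origin_asn) announcements with the history.

    history: dict mapping prefix string -> set of origin ASNs seen before.
    current: iterable of (prefix string, origin ASN) pairs observed now.
    Returns a list of (prefix, origin_asn, reason) tuples worth a manual look.
    """
    known = {ipaddress.ip_network(p): origins for p, origins in history.items()}
    alerts = []
    for prefix, origin in current:
        net = ipaddress.ip_network(prefix)
        if net in known:
            if origin not in known[net]:
                alerts.append((prefix, origin, "unseen origin AS"))
            continue
        # Not announced before: check whether it carves a piece out of a known prefix.
        for known_net in known:
            if net.version == known_net.version and net.subnet_of(known_net):
                alerts.append((prefix, origin, "unseen more-specific prefix"))
                break
    return alerts


history = {
    "203.0.113.0/24": {64496},
    "192.0.2.0/24": {64497},
}
current = [
    ("203.0.113.0/24", 64496),   # same as always
    ("203.0.113.0/25", 64511),   # a more specific piece of our prefix
    ("192.0.2.0/24", 64500),     # known prefix, unfamiliar origin
]
alerts = find_atypical_announcements(history, current)
```

The output is only a list of candidates: as noted above, deciding whether an atypical announcement is a hijack or a legitimate change, and getting it withdrawn, remains manual work with the operators involved.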

If you do not have your own autonomous system (AS), you can assume that fighting attacks at this level is more or less the responsibility of your data center (or provider). However, you usually cannot tell in advance how seriously a given data center takes the problem.

L4. To protect against attacks at the fourth level, you need to analyze the behavior of TCP clients and of TCP packets on the server, and apply heuristic analysis.

L7. At L7 you need behavioral and correlation analysis, plus monitoring. Without analytics and monitoring tools you cannot simply take Nginx and configure it to repel any attack; fighting attacks will still turn into manual work.
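One of the simplest behavioral signals is how many requests a single client makes within a sliding time window. The sketch below shows that one signal only; the class name, the window length and the threshold are assumptions chosen for illustration, and a real deployment would correlate many such signals rather than rely on one counter.

```python
from collections import defaultdict, deque


class RequestRateMonitor:
    """Per-client sliding-window request counter (illustrative sketch)."""

    def __init__(self, window_seconds: float, max_requests: int):
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        self._hits = defaultdict(deque)   # client IP -> timestamps inside the window

    def record(self, client_ip: str, timestamp: float) -> bool:
        """Record one request; return True if the client is over the limit."""
        hits = self._hits[client_ip]
        hits.append(timestamp)
        # Forget requests that have fallen out of the window.
        while hits and hits[0] <= timestamp - self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests


monitor = RequestRateMonitor(window_seconds=10, max_requests=3)
verdicts = [monitor.record("203.0.113.9", t) for t in (0, 1, 2, 3)]
later = monitor.record("203.0.113.9", 20)
```

Here the fourth request inside ten seconds is flagged, while a request at `t=20` is not, because the earlier ones have left the window. A bot that stays just under the threshold passes unnoticed, which is why a counter like this is a starting point for analysis and not a defense on its own.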

Risk assessment

So, where do we accept and process incoming HTTP requests? The options are:

- Purchased or rented "physical" server

- Cloud hosting

- CDN

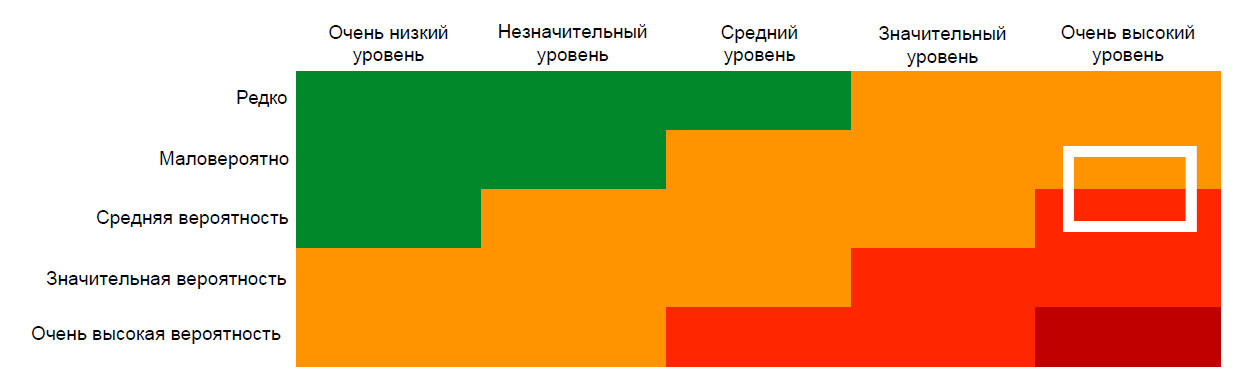

For risk assessment you can use a handy tool, the Probability & Impact Matrix.

The horizontal axis shows the severity of an event's consequences, and the vertical axis shows its probability. The white frame marks the current level of risk from DDoS attacks.

What determines the likelihood of an attack? First of all, attacks are a tool of competition. If your market segment is more or less calm, there will most likely be no attacks for a long time. But if competition intensifies, you need to prepare your defenses, and the white frame has to be moved down the axis.
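The matrix can also be written down as a small scoring function. The sketch below assumes a five-step scale on both axes and uses the product of the two ranks as the score, which is a common convention for such matrices; the level names and the zone thresholds are hypothetical and would need to be set for a specific business.

```python
LEVELS = ("very low", "low", "medium", "high", "very high")


def risk_score(probability: str, impact: str) -> int:
    """Score a risk as rank(probability) * rank(impact), each rank from 1 to 5."""
    return (LEVELS.index(probability) + 1) * (LEVELS.index(impact) + 1)


def risk_zone(score: int) -> str:
    """Map a score to a zone; the thresholds are assumptions for illustration."""
    if score >= 15:
        return "red"
    if score >= 6:
        return "yellow"
    return "green"


# Calm market segment: an attack is unlikely, but the impact stays severe.
calm = risk_score("low", "very high")
# Competition intensifies: same impact, higher probability.
heated = risk_score("high", "very high")
zones = (risk_zone(calm), risk_zone(heated))
```

With these assumed thresholds the same impact moves from the "yellow" zone to the "red" one purely because the probability changed, which is the shift of the frame described above.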

But the worst thing about a DDoS attack is its impact. Say the attack targets your hosting provider. Even if you contact a vendor of anti-DDoS solutions at that moment, there is no guarantee that they can help. The problem is that your IP address, behind which sits everything you have, from the web server to the database and other important components, is already known to the attacker. Even if you point DNS at some other address, there is a fair chance that it will make no difference and the attack will continue directly. At best you will have to move off that hosting. In that case your problems can be rated “very serious”, because it is hard to think of anything worse for an IT project than moving to an unprepared site during working hours while the server is down. Well, except perhaps Barmin's patch (rm -rf /), that is worse :)

Why is it not enough to change the IP address within the same hosting? Because the new address will belong to the same autonomous system (AS), and today attackers know how to look up an autonomous system's list of prefixes. So finding you at the new address is no trouble: it is enough to attack all the addresses in the autonomous system.
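The reasoning can be checked with a few lines of code: if both the old and the new address fall inside the prefixes announced by the same autonomous system, the move hides nothing. In the sketch below the prefix list is an assumed input (in practice it would come from public routing data), and the addresses are taken from documentation ranges.

```python
import ipaddress


def covered_by_prefixes(address: str, as_prefixes) -> bool:
    """True if the address lies inside any of the given announced prefixes."""
    ip = ipaddress.ip_address(address)
    return any(ip in ipaddress.ip_network(prefix) for prefix in as_prefixes)


def total_addresses(as_prefixes) -> int:
    """How many addresses an attacker would have to cover to hit the whole AS."""
    return sum(ipaddress.ip_network(prefix).num_addresses for prefix in as_prefixes)


# Hypothetical prefix list of the hosting provider's autonomous system.
hosting_prefixes = ["192.0.2.0/24", "198.51.100.0/24"]

old_ip_exposed = covered_by_prefixes("192.0.2.10", hosting_prefixes)
new_ip_exposed = covered_by_prefixes("198.51.100.77", hosting_prefixes)
elsewhere_exposed = covered_by_prefixes("203.0.113.5", hosting_prefixes)
attack_surface = total_addresses(hosting_prefixes)
```

In this example the old and the new address are both covered, while an address at a different provider is not; the whole AS here is only 512 addresses, a target small enough to flood wholesale.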

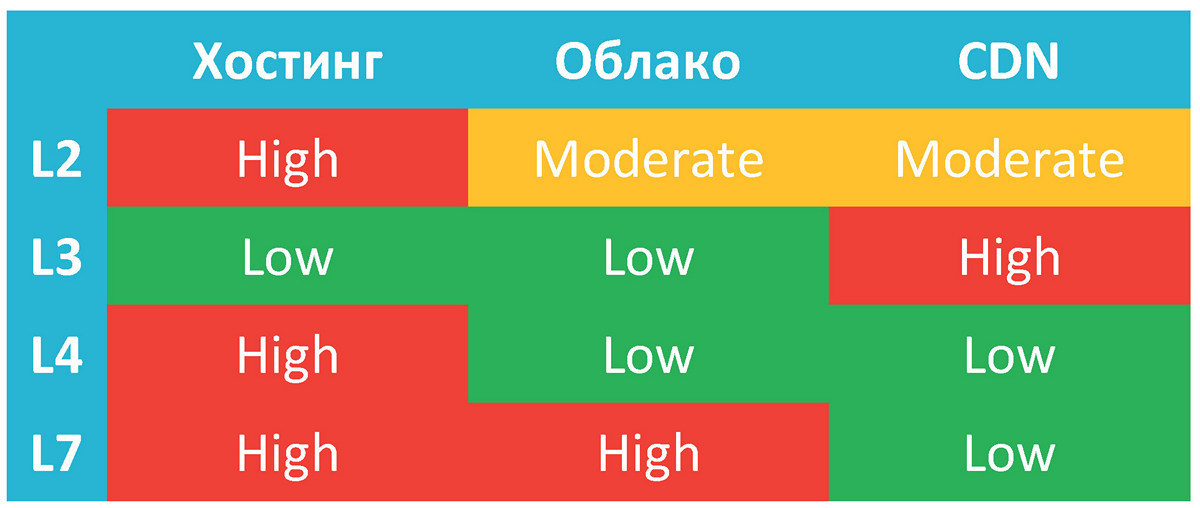

Let's try to assess how exposed each kind of network resource is to attacks at each network level.

Hosting. Most hosting providers cannot filter out powerful attacks whose traffic significantly exceeds 100 Gbit/s, and that includes the last mile to your server. So you will have to handle all, or almost all, of the flood that reaches you on your own. At the seventh level you will also have to do the analytics yourself, because it is your server and your problem.

At L3 the danger is not very high, because it is unlikely that anyone will, say, steal the whole hosting provider's prefix for the sake of your resource. That is very time-consuming and expensive for the attacker. It can be done, but it takes a very good reason. There are of course exceptions to every rule, especially if the hosting provider's network infrastructure has performance problems.

There is an important point: many vendors offer protection in the form of expensive equipment that is placed in your rack, with the uplink and downlink leading to your server plugged into it. The problem is that a network is no more resilient than its last mile, including the equipment you use. In any case you should keep a performance margin so that CPU load is not close to 100% during peak hours; otherwise any small spike can lead to failure. Even if the attackers are inexperienced and you can cope on your own, you still need that headroom while you study the requests, write auto-ban scripts, set up fail2ban and so on.

Note also that if you are physically hosted on unprotected resources, then with a probability of up to 30% changing DNS will not help you. The experience of recent attacks on US financial companies bears this out. So it is fairly safe to say that you will have to move off unprotected hosting quickly.

Cloud. The cloud must have an Anycast network, that is, the same prefix must be announced from many places around the world. No single location can absorb an attack of hundreds of gigabits, and within a year we can expect terabit attacks. The distributed structure greatly reduces the danger of channel attacks. But even for an Anycast network, an attack of 400-500 Gbit/s is a lot. You have to prepare for it, and not everyone does.

The network must be distributed in such a way that an attacker cannot exploit its infrastructure. You also need a performance margin (preferably twofold), because no amount of resources will save you from, say, operational problems when accessing the database.

And finally, the "funniest" part: the cloud can absorb a lot of user traffic, but you have to pay for it. When the bill for the past month reaches tens of thousands of dollars, your phone will start ringing off the hook, or you will simply be disconnected for non-payment. So being in the cloud still does not solve the whole problem of protection against attacks. It only improves your resilience until you get protected.

CDN. A CDN is designed to handle large volumes of traffic, so it copes with static content (images, CSS and so on) easily. As for channel capacity, everything is the same as with the cloud. The infrastructure level is much more interesting. A CDN always has a DNS server that all of the resource's services are tied to. Whereas a cloud can hide its network infrastructure behind simple Anycast, with a CDN in 99.9% of cases you will see a DNS router that redirects the user to the nearest CDN point. In addition, a CDN will have points that sit outside Anycast and outside its own network, on third-party networks closer to the user. Those are not protected by default; they simply cannot be protected. So everything depends on how well the CDN's DNS is protected and how ready it is to pull attacked nodes from the network in an emergency. That does not happen often, though. The DNS server that distributes users by region must be protected, stable and well thought out.

General Network Architecture Tips

- An Anycast address is very useful and, most importantly, it can be rented. Balancing and redundancy with an Anycast router are much more reliable than DNS balancing.

- Note that IPv4 addresses are running out, and right now a large organization can still get one last block of that address space. Take advantage of it, because in the future you will only be given IPv6, and there are not many users in that space.

- It is better to decouple the application from the physical server. The word "Docker" is on the tip of the tongue, but I do not want to tie this to any particular technology. If you host an application somewhere, stock up on documentation and installation scripts, and automate deployment and configuration; in general, do whatever it takes to be ready to deploy the same application with the same database on a server at another site. Trouble can start not only with you but also, independently of you, with those you are hosted alongside. This is a fairly common situation.

Today the average threat level is such that coping on your own is very difficult. It is much easier for an attacker to organize an attack than for the victim to defend against it. And after two days without sleep, no system administrator is able to stand up to the attacker. That is why we have launched a new service for 1C-Bitrix customers: ten days a year for free. Under attack or not, it does not matter. So if you are in trouble, the control panel of the latest version of “1C-Bitrix: Site Management” has that coveted button; please press it, do not hesitate.

Source: https://habr.com/ru/post/267947/
