Network overlay technologies for data centers. Part 1

Terms such as TRILL, FabricPath, VXLAN, OTV and LISP have lately become more and more common in conference materials, reviews and articles on networking, especially in the context of data center design. You catch yourself wondering what they actually are. Many of them, like the stars, are still fairly far from our everyday reality. Even so, it would be useful to understand, at least in general terms, what they mean. All the more so because they all seem to change the familiar principles of network operation: switching is done on some kind of labels, routing works differently, and even host addressing is not what we are used to. So let's try to figure it out.

The article is divided into three parts. The first part looks at what an overlay technology is, at the prerequisites for the emergence of new overlay technologies for the data center, and at their general classification. The remaining parts are devoted to the technologies themselves: TRILL, FabricPath, VXLAN, OTV and LISP.

All these technologies share a common name: overlay network technologies. An overlay technology gives us new network behavior while using standard protocols as its foundation. In other words, it is an add-on layer that provides new services. Typically, an overlay technology attaches an additional header to the original packet. The network can then ignore the headers of the original packet and forward it based on the overlay header alone.
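The header-wrapping idea can be sketched in a few lines of Python. This is only a minimal illustration and does not model any real protocol: the `Packet` and `OverlayPacket` classes, their field names, the device names and the routing table are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """Original packet as the end host sent it (hypothetical, simplified)."""
    src: str
    dst: str
    payload: bytes


@dataclass(frozen=True)
class OverlayPacket:
    """Original packet wrapped in an additional (overlay) header."""
    tunnel_src: str  # device that performs encapsulation
    tunnel_dst: str  # device that performs decapsulation
    inner: Packet    # carried unchanged; transit nodes never inspect it


def encapsulate(packet, tunnel_src, tunnel_dst):
    """Attach the overlay header in front of the original packet."""
    return OverlayPacket(tunnel_src, tunnel_dst, packet)


def transit_next_hop(frame, routes):
    """A transit node forwards using the overlay header only."""
    return routes[frame.tunnel_dst]


def decapsulate(frame):
    """Strip the overlay header and recover the original packet."""
    return frame.inner


original = Packet(src="host-a", dst="host-b", payload=b"hello")
wrapped = encapsulate(original, tunnel_src="edge-1", tunnel_dst="edge-2")
next_hop = transit_next_hop(wrapped, routes={"edge-2": "port-3"})
restored = decapsulate(wrapped)
```

Note that the transit node's routing table contains only `edge-2`; it needs no knowledge of `host-a` or `host-b`, which is exactly the abstraction the overlay header provides.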


A familiar example is a VPN (in particular, IPsec in tunnel mode). A VPN lets us build a tunnel across a network and carry any data inside that tunnel. The transport network routes this data based only on the outer header added by the VPN. In effect, the VPN lets us override the standard traffic routing rules and deliver the data we need across the network. There are many examples of overlay technologies, but we will concentrate on those that appeared and are used primarily in data centers.

In this series of articles I would like to review the prerequisites for the emergence of new overlay technologies for the data center and try to classify them, highlighting the key points of the most common and relevant ones. The focus will be on technologies supported by Cisco equipment, since that is what I work with most often. Other network equipment vendors have similar technologies, but they remain outside the scope of this review.

Where did the need for new overlay technologies come from? Until recently, the three-tier network architecture rarely raised any questions. The 4096 available VLANs seemed like plenty. The standard rules for switching and routing traffic were treated as unshakable postulates.

We have all long been accustomed to the traditional three-tier network architecture: the access layer, the aggregation (distribution) layer and the core. Routing (L3) is normally used between the core and the distribution layer, and either switching (L2) or routing (L3) between the access and distribution layers. In some cases the core and the distribution layer are combined.

However, the spread of virtualization and the growth of data centers have brought a number of new network requirements:

- A large number of MAC addresses: switch MAC tables (the tables that map a MAC address to a switch port and other service information) must hold many more entries. Dozens of virtual machines, each with its own MAC address, can now sit behind a single physical server, and some data centers count their servers in the thousands.

- Support for more than 4K VLANs: again because of virtualization, a data center can now serve many more users. Each user needs its own isolated network segment, and sometimes several segments.

- Mobility: a virtual machine must be able to move between hosts, both within a data center and between data centers. Some technologies (for example, VMware vMotion) require these hosts to be in the same L2 domain.

- Elasticity and uniformity: an application can run on any server, and it should not matter where that server is physically located.

- "All-to-all" communication: traffic between servers inside the data center (so-called "east-west" traffic) has grown significantly, since services can be located anywhere in the data center (again, because of virtualization).

- Other requirements: energy efficiency, manageability, etc.
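A rough back-of-the-envelope calculation shows the scale behind the first two requirements. The server count, the number of virtual machines per server and the number of segments per user below are illustrative assumptions, not measurements. The 12-bit width of the 802.1Q VLAN ID is a property of the standard, and the 24-bit identifier is the size used, for example, by VXLAN.

```python
# Illustrative numbers only: the counts below are assumptions.
servers = 2000
vms_per_server = 40

# One MAC address per virtual machine, ignoring the servers' own NICs.
mac_addresses = servers * vms_per_server

vlan_id_bits = 12             # width of the 802.1Q VLAN ID field
vlan_ids = 2 ** vlan_id_bits  # 4096 values, a couple of which are reserved

segments_per_user = 3         # assumed: several isolated segments per user
max_users = vlan_ids // segments_per_user

overlay_id_bits = 24          # e.g. the VXLAN network identifier
overlay_ids = 2 ** overlay_id_bits

print(f"MAC addresses to learn: {mac_addresses}")
print(f"VLAN IDs: {vlan_ids}, users at {segments_per_user} segments each: {max_users}")
print(f"24-bit segment IDs: {overlay_ids}")
```

Under these assumptions, a single stretched L2 domain would have to learn 80,000 MAC addresses, while the VLAN ID space runs out at roughly 1,365 users.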

It is easy to see that the new requirements fit poorly with the traditional three-tier architecture and the protocols we are used to.

In particular, transparent virtual machine mobility forces us to "stretch" the broadcast domain across all access layer switches, and that comes at a cost. We have to run STP, which means that some links are blocked or underutilized and traffic does not always take the optimal path. The load on the MAC tables of the switches also grows, and flooding (broadcast traffic and the like) becomes a problem.

The "all-to-all" communication model exposes problems of its own: the core becomes the busiest part of the network, and traffic between servers attached to different aggregation switches has to cross a large number of hops. Ideally, the number of hops would be one and all links would be evenly utilized.
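The cost of STP can be illustrated with a small sketch. The topology is hypothetical (two aggregation switches and four dual-homed access switches), and a breadth-first tree stands in for the tree that STP would build; real STP elects the root by bridge ID and chooses links by path cost, which this sketch does not model.

```python
from collections import deque

# Hypothetical topology: two aggregation switches, four access switches,
# every access switch dual-homed to both aggregation switches.
links = [("agg1", "agg2")]
for i in range(1, 5):
    links.append((f"acc{i}", "agg1"))
    links.append((f"acc{i}", "agg2"))


def spanning_tree(links, root):
    """Breadth-first tree from the root; a simplified stand-in for STP."""
    neighbours = {}
    for a, b in links:
        neighbours.setdefault(a, []).append(b)
        neighbours.setdefault(b, []).append(a)
    visited = {root}
    tree = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for peer in neighbours[node]:
            if peer not in visited:
                visited.add(peer)
                tree.add(frozenset((node, peer)))
                queue.append(peer)
    return tree


active = spanning_tree(links, root="agg1")
blocked = [link for link in links if frozenset(link) not in active]

print(f"links: {len(links)}, forwarding: {len(active)}, blocked: {len(blocked)}")
```

A loop-free tree over six switches can keep only five links forwarding, so four of the nine links in this example carry no traffic. With `agg1` as the root, every blocked link is an uplink to `agg2`.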

Ideally, we would combine the advantages of routing and switching and be able to:

- migrate virtual machines without needing a large broadcast domain;

- balance traffic so that all links are evenly utilized, with a minimum number of hops along the way.

Since it is impossible to completely rebuild the network (replace all network protocols and equipment), new network overlay technologies were developed. These technologies can generally be divided into two types:

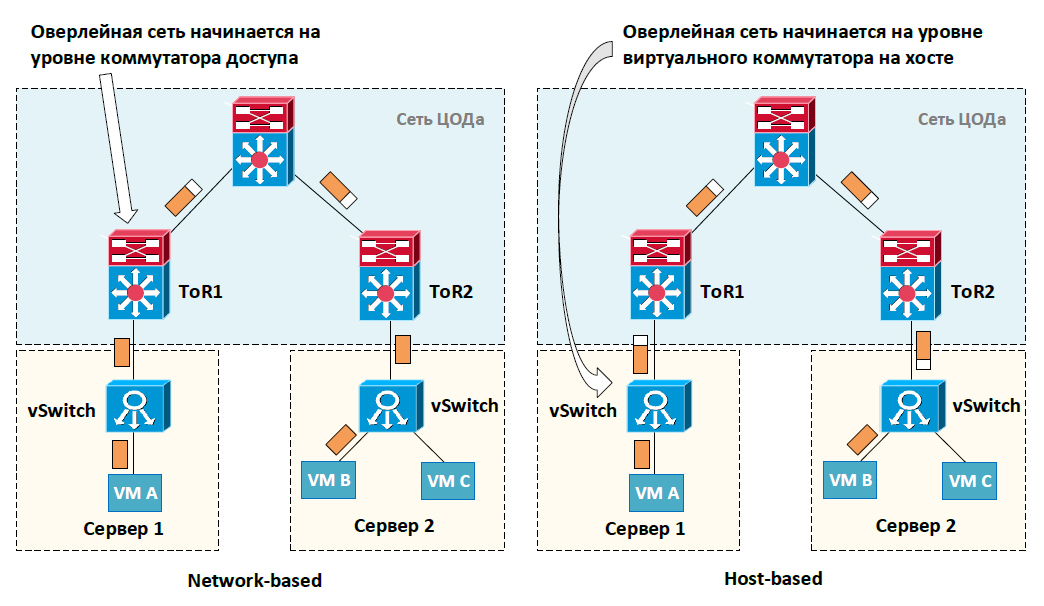

- Network-based (or Switch-based): run on the network equipment;

- Host-based: run on the end host (more precisely, on its virtual switch).

In both cases a specialized header is added to the original packet, and the packet is then forwarded using the new header. With a Network-based technology, encapsulation and decapsulation are performed by the switching equipment, and the end host does not even know that the packet is being carried in a special way. With a Host-based technology, the host itself adds the specialized header, and this time it is the switching equipment that is unaware of the overlay.

Implementation considerations for Network-based and Host-based overlay technologies

| Network-based | Host-based |
|---|---|
| The hardware must support the technology | No information about the network topology, so traffic may take a non-optimal path |
| Higher MAC table requirements for ToR (Top-of-Rack) switches | Possible performance issues, since the host itself performs encapsulation and decapsulation |

By the way, software-defined networking (SDN) has become another “customer” of overlay technologies. One of the goals of SDN is to provide flexible rules for network operation, which is hard to achieve without new technologies. Overlay technologies are well suited to carrying additional information with each packet (for example, for security functions or segmentation), to quickly setting up connectivity between hosts (for example, an L2 connection), to tunneling various client network technologies across the data center transport network, and so on. In particular, Cisco's SDN solution, Application Centric Infrastructure (ACI), uses VXLAN as its underlying technology.

Of course, there is no single best overlay technology that solves every problem of building a data center. Moreover, there are whole classes of such technologies that address one specific task, for example interconnecting data centers. For this reason they are often used together.

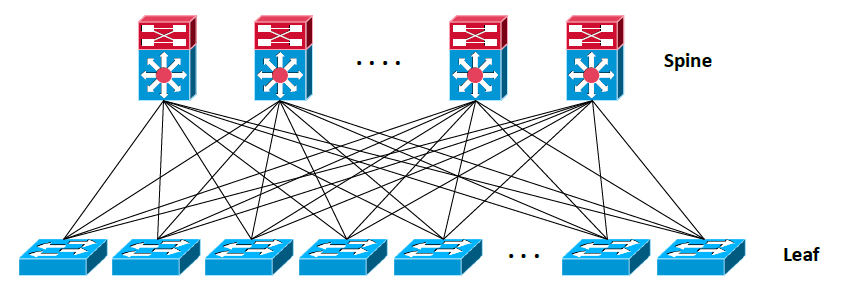

Before going further, I would like to note that new data center architectures have emerged alongside the new overlay technologies, for example the Clos architecture and its derivative, Fat-Tree. These architectures are considered better suited to the realities of modern data centers.

Why mention them if we are talking about overlay technologies? The answer is simple: some overlay technologies rely on the new architectures for maximum effect. In particular, TRILL and FabricPath work best on top of a Clos network. This architecture assumes a large number of connections between devices and follows one simple rule: a switch at a given level is connected only to switches at the level above and the level below, never to other switches at its own level. The figure shows an example of the simplest two-level Clos network.

This architecture scales out easily and provides good aggregate bandwidth. It offers a large number of equal-cost paths between access layer devices (Leaf). The impact of a switch failure on overall network performance is also much smaller, since there is no pronounced center.

With that, I think it is time to wrap up the introduction. In the following articles we will move on to the technologies themselves, namely TRILL, FabricPath, VXLAN, OTV and LISP, and sum up at the end.
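The path properties of a two-level Clos network can be checked with a short sketch. The switch names and counts are hypothetical, and the model is deliberately simple: every Leaf is connected to every switch of the upper level (called `spine` here), and Leaf switches are never connected to each other.

```python
def build_leaf_spine(num_leaves, num_spines):
    """Two-level Clos: every leaf is linked to every spine, and nothing else."""
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    links = {(leaf, spine) for leaf in leaves for spine in spines}
    return leaves, spines, links


def leaf_to_leaf_paths(src, dst, spines, links):
    """Shortest paths between two leaves: one per spine that both can reach."""
    return [
        (src, spine, dst)
        for spine in spines
        if (src, spine) in links and (dst, spine) in links
    ]


leaves, spines, links = build_leaf_spine(num_leaves=4, num_spines=3)
paths = leaf_to_leaf_paths("leaf1", "leaf4", spines, links)

# Simulate the failure of one upper-level switch.
surviving_links = {link for link in links if link[1] != "spine2"}
paths_after_failure = leaf_to_leaf_paths("leaf1", "leaf4", spines, surviving_links)

print(f"paths: {len(paths)}, after losing spine2: {len(paths_after_failure)}")
```

In this model every pair of Leaf switches has as many equal-length paths as there are upper-level switches, each crossing exactly one intermediate device. Losing one of the three upper-level switches removes one path out of three rather than cutting connectivity.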

Source: https://habr.com/ru/post/267919/
