Creating a JavaScript Synthesizer

I first had the idea of making a browser synthesizer a long time ago, when the Audio API was still in an embryonic state and practically the only way to get sound out of a browser (other than playing ready-made files) was to generate a WAV, encode it as base64, and write it into an audio tag. Synthesis and encoding worked without problems (the WAV format is quite simple), but streaming audio for real-time playback was much worse: no trick I tried gave seamless buffering, so the idea stalled before it was properly born. Over the years, browser support for the Audio API has improved noticeably, which inspired me to try new experiments in this area. This article describes, step by step, how to build a browser-based synthesizer with HTML5 tools, starting with the generation of a simple sine wave, continuing with the routing and modulation of signals, and ending with audio effects.

As a hobby musician but a full-time programmer, I often get musical ideas right at work, when there is no instrument at hand to try them out and, ideally, record them. That is how the idea of an online MIDI sequencer was born first: something that would let me jot down and save most of those ideas. But what good is a sequencer if you cannot play and record the melody that came to mind in real time, on the spot, using at least a mouse and a keyboard? So, while working on the simplest possible synthesizer, the thought crept in: why not aim for something bigger? Of course, the idea of a JavaScript synthesizer is not new, and implementations of varying quality keep appearing here and there, but here on Habr I found only a few articles about the Audio API and not a single one about synthesis, which is what prompted me to sit down and write this text.

As briefly noted in the preface, my first thought was to synthesize and process the sound entirely analytically and then encode it directly into WAV. But while struggling with streaming playback of that format and browsing the documentation on related topics, it occurred to me to try the browsers' Audio API, as described on MDN. Without any tricks and with minimal means, the Audio API lets you build a virtual audio path out of functional blocks, configuring and connecting them to your taste. Practically all the necessary basic elements are present in the API: oscillators, amplifiers, splitters, and so on; for a complete list and usage examples I recommend the corresponding section of MDN. The main task is therefore to connect these blocks correctly and conveniently, and to create and control the effects.


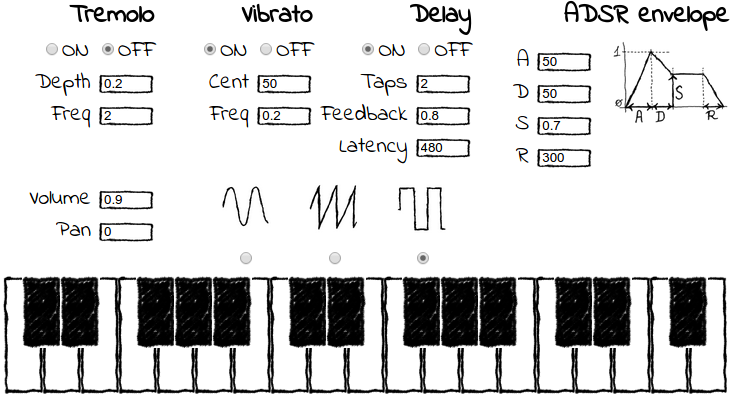

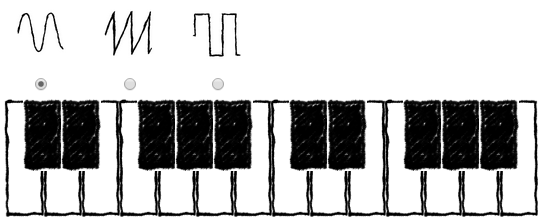

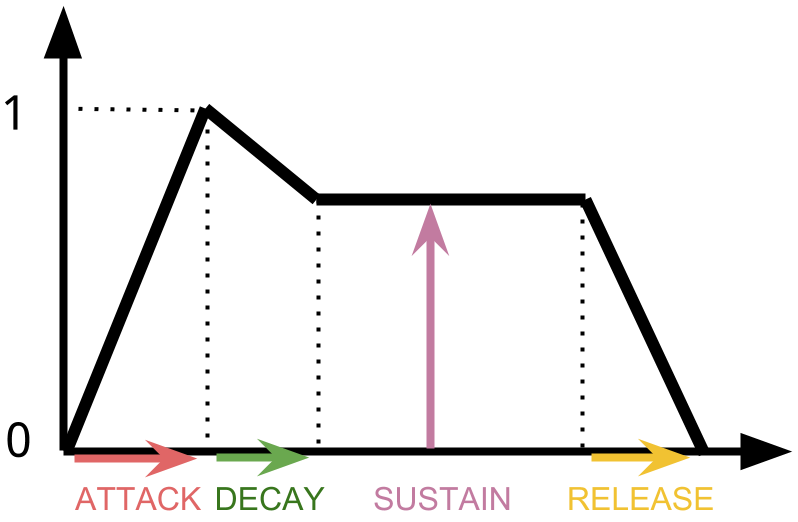

For the first version, we need to determine the minimum functionality to implement. For me, the essentials are a choice of waveform (sine, sawtooth, square) and effects such as vibrato (pitch fluctuation), tremolo (volume fluctuation), and echo. Of the classic functions, I would also like an ADSR envelope, which is a simplified approximation of how the loudness of a note played on a real musical instrument evolves through its phases.

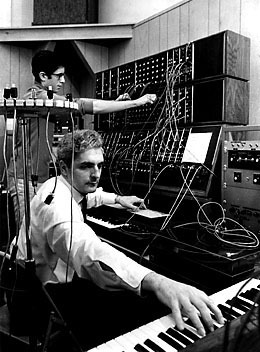

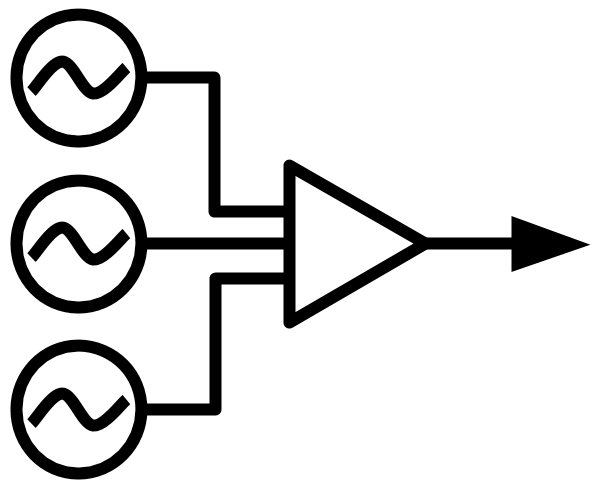

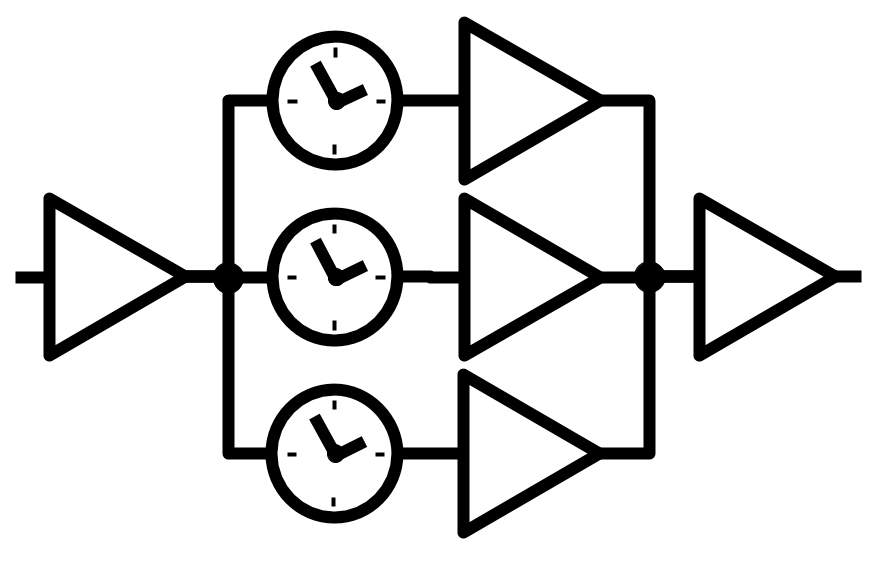

Since tremolo and vibrato are modulation effects (of amplitude and pitch, respectively), a universal modulator that can be applied to any parameter of the system seems the most natural and flexible solution. The whole point of modulators is that they can act not only on the signal parameters directly but also on the parameters of other modulators like themselves, which gives possibilities for shaping sound that are limited only by imagination. This idea was once the basis of analog synthesizers too, but back then the patching looked like this:

Here it will look like this:

    synth.connect(volume);
    volume.connect(delay.input);
    delay.connect(pan);
    pan.connect(audioCtx.destination);

    var vibrato = new SineModulator();
    vibrato.modulate(synth, 'pitchShift');

Let's start with simple sound synthesis: we construct an AudioContext object, create an oscillator within it, set its frequency, connect it to the audio output, and then start it so that it produces sound.

    var audioContext = new AudioContext();
    var oscillator = audioContext.createOscillator();
    oscillator.frequency.value = 440;
    oscillator.connect(audioContext.destination);
    oscillator.start(0);

If everything is done correctly, you should hear in your speakers or headphones a pleasant tone at the familiar frequency of a telephone dial tone (or a tuning fork, whichever is closer to you). You can stop it by calling the .stop() method of the same object. The parameter passed to start and stop is a time in seconds, on the same clock as audioContext.currentTime, at which the action should take effect; a value of 0 (or any moment already in the past) means "immediately". We do not need scheduling yet, so the parameter is set to 0, and it can be omitted altogether. Also note the .connect() and .disconnect() methods, which belong to the AudioNode interface common to all nodes and are used to wire their inputs and outputs together. By calling .connect() on the oscillator, we tell it to send its audio signal to the node passed as the argument, in this case audioContext.destination, which from our program's point of view is the end point that hands the sound to the operating system for playback.
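
The timing argument of start and stop can be sketched as follows. The helper name `scheduleBeep` and its parameters are illustrative assumptions, not part of the article's code; it relies only on `currentTime`, `createOscillator`, `start` and `stop`, and takes the context as an argument so that it can be tried in a browser with a real AudioContext.

```javascript
// Hypothetical helper: play a tone that starts `delay` seconds from now
// and lasts `duration` seconds. `ctx` is expected to be an AudioContext.
function scheduleBeep(ctx, frequency, delay, duration) {
  var oscillator = ctx.createOscillator();
  oscillator.frequency.value = frequency;
  oscillator.connect(ctx.destination);

  // start() and stop() take absolute times on the context clock,
  // so relative offsets are added to ctx.currentTime.
  var startAt = ctx.currentTime + delay;
  oscillator.start(startAt);
  oscillator.stop(startAt + duration);
  return { startAt: startAt, stopAt: startAt + duration };
}
```

In a browser, `scheduleBeep(new AudioContext(), 440, 0.5, 1)` would play one second of 440 Hz starting half a second after the call.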

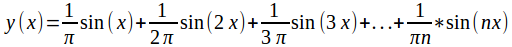

As the first function of our synthesizer, we implement the choice of waveform. The most common waveforms (for example, sawtooth or square) are built into the API and can be selected by setting the oscillator's type property (e.g. oscillator.type = 'square'). For more interesting cases there is the PeriodicWave interface, which lets you specify a waveform of arbitrary shape. Two arrays of Fourier coefficients are passed to its factory function; how to calculate them for an arbitrary waveform is easy to find in the literature (for example, briefly here). For the same sawtooth wave, which is the sum of all harmonics of the signal with proportionally decreasing amplitude, the coefficients of the cosines (the real part in complex notation) are 0 and those of the sines (the imaginary part) are 1 / n, i.e. they look like this:
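
Written out with the coefficients just stated (cosine terms $a_n = 0$, sine terms $b_n = 1/n$), and with $f$ denoting the fundamental frequency (a symbol assumed here), the series would read:

$$x(t) = \sum_{n=1}^{\infty} \frac{1}{n}\,\sin(2\pi n f t)$$

Any constant factor in front of the sum, such as $1/\pi$, only rescales the amplitude and leaves the shape unchanged.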

Naturally, the more harmonics are summed, the sharper the saw becomes. The process is illustrated in animation:
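
The effect of the number of harmonics can also be checked numerically. The sketch below (function names are illustrative) sums the first N terms sin(n·x)/n and compares the result with the ideal falling saw (π − x)/2, which is the known limit of that series for 0 < x < 2π.

```javascript
// Partial sum of the sawtooth series: sum over n = 1..harmonics of sin(n * x) / n.
function sawtoothPartialSum(x, harmonics) {
  var sum = 0;
  for (var n = 1; n <= harmonics; n++) {
    sum += Math.sin(n * x) / n;
  }
  return sum;
}

// Ideal shape the series converges to on the interval 0 < x < 2 * PI.
function idealSaw(x) {
  return (Math.PI - x) / 2;
}

// Deviation from the ideal saw at one sample point.
function errorAt(x, harmonics) {
  return Math.abs(sawtoothPartialSum(x, harmonics) - idealSaw(x));
}

// Print the deviation for a growing number of harmonics.
[1, 8, 128, 2048].forEach(function (harmonics) {
  console.log(harmonics, errorAt(Math.PI / 2, harmonics).toFixed(5));
});
```

The printed deviation shrinks as harmonics are added, which is the numerical counterpart of the saw getting sharper.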

Therefore, the calculation of the coefficients and their transfer to PeriodicWave for this signal will look like this:

    var context = new global.AudioContext();
    var steps = 128;
    var imag = new global.Float32Array(steps);
    var real = new global.Float32Array(steps);

    // Sine (imaginary) coefficients fall off as 1 / n; cosine (real) ones stay 0.
    for (var i = 1; i < steps; i++) {
      imag[i] = 1 / (i * Math.PI);
    }

    var wave = context.createPeriodicWave(real, imag);
    module.exports = wave;

The next step is the ability to select the pitch. On a real synthesizer this is done with the keyboard, so for a start we will build an ersatz keyboard on the screen. I am not a designer and could not come up with a polished design anyway, so I drew all the graphics by hand to disguise the poverty of my design ideas. As a result, we have a keyboard with basic functions and three waveforms to choose from:

Each key press should send the synthesizer a signal carrying the number of the note to play, and the same goes for the key release event. Classical synthesizers originally had a single oscillator whose pitch was controlled by the pressed key, but since we have no such technical limitations, I decided not to complicate things and to create as many oscillators as are needed to play an arbitrary number of notes simultaneously. For convenient connection to subsequent modules, we agree to have one common output node to which all oscillators are connected and which mixes their signals into one stream. The rule of one entry point and one exit point will also apply to all subsequent modules. A plain GainNode with a gain of 1 (the default) serves as that point. Schematically it looks like this:

And in the code like this:

    function Synth(context) {
      this.audioContext = context;
      this.output = context.createGain(); // common output that mixes all oscillators
      this._oscillators = {};             // active oscillators, keyed by note pitch
    }

    Synth.prototype.play = function(note) {
      var oscillator = this._oscillators[note.pitch] = this.audioContext.createOscillator();
      oscillator.frequency.value = note.frequency;
      oscillator.connect(this.output);
      oscillator.start(0);
      return oscillator;
    };

    Synth.prototype.stop = function(note) {
      this._oscillators[note.pitch].stop(0);
    };

One of the basic functions without which no sound-producing device is imaginable is control of the volume and the channel balance. For this, the Audio API provides the GainNode and StereoPannerNode interfaces, respectively. We add them to the chain, wiring them up with the same connect method:

    var audioContext = new AudioContext();
    var volume = audioContext.createGain();
    var pan = audioContext.createStereoPanner();
    volume.gain.value = 1;
    pan.pan.value = 0;

    synth.output.connect(volume);
    volume.connect(pan);
    pan.connect(audioContext.destination);

To adjust the parameters, we create two input fields and connect them directly to the corresponding nodes. To read and pass on the values, I created a simple controls object that implements the mediator pattern and sends field values to interested parties as they change. There is no point dwelling on its implementation; let's focus instead on what happens when a field value changes:

    controls.on('volume-change', function(value) {
      volume.gain.value = value;
    });

    controls.on('pan-change', function(value) {
      pan.pan.value = value;
    });

Thinking ahead to the vibrato effect, and to controlling pitch with the physical pitch wheel of a MIDI device, we add the ability to shift the pitch of all sounds generated by the Synth module. The function is implemented as a mixin applied to the original module:

PitchShifter code

    function PitchShifter() {
      this._pitchShift = 0; // shift in cents (1200 cents = one octave)
      var oscillators = {};

      Object.defineProperty(this, "pitchShift", {
        set: function(ps) {
          this._pitchShift = ps;
          // Retune every sounding oscillator relative to its base frequency.
          for (var pitch in oscillators) {
            oscillators[pitch].frequency.value =
              oscillators[pitch].baseFrequency * Math.pow(2, this._pitchShift / 1200);
          }
        },
        get: function() {
          return this._pitchShift;
        }
      });

      var old = { play: this.play, stop: this.stop };

      this.play = function(note) {
        var osc = oscillators[note.pitch] = old.play.call(this, note);
        osc.baseFrequency = note.frequency;
        osc.frequency.value = osc.baseFrequency * Math.pow(2, this._pitchShift / 1200);
        return osc;
      };

      this.stop = function(note) {
        delete oscillators[note.pitch];
        old.stop.apply(this, arguments);
      };
    }

Now we are close to implementing the first effect, vibrato, i.e. a periodic change of pitch. For this purpose, as mentioned, we will build a modulator, and, as planned, it should be implemented so that it can modulate any parameter of the system, including the properties of other modulators such as amplitude and frequency.
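
The factor `Math.pow(2, pitchShift / 1200)` in the mixin means the shift is measured in cents (1200 per octave, 100 per semitone). Below is a minimal sketch of that arithmetic, together with one common way of turning a key number into `note.frequency`. The MIDI convention used here (note 69 = A4 = 440 Hz) and all three function names are assumptions, since the article does not show how its note objects are built.

```javascript
// Frequency ratio for a shift given in cents.
function centsToRatio(cents) {
  return Math.pow(2, cents / 1200);
}

// Assumed convention: MIDI note numbers in equal temperament, 69 -> 440 Hz.
function midiNoteToFrequency(noteNumber) {
  return 440 * Math.pow(2, (noteNumber - 69) / 12);
}

// Frequency of a note after applying a pitch shift in cents.
function shiftedFrequency(noteNumber, cents) {
  return midiNoteToFrequency(noteNumber) * centsToRatio(cents);
}
```

With these definitions a shift of 1200 cents doubles the frequency, and shifting note 69 by 100 cents lands on the frequency of note 70.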

(UPD: the comments rightly pointed out that the approach with intervals is not optimal, especially since oscillators can control parameters directly, which I did not know when writing this article; the part about modulators is therefore of more theoretical than practical value.)
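
The direct approach mentioned in this update could look roughly like this: a low-frequency oscillator is routed through a GainNode (which sets the depth) into an AudioParam, so the browser performs the modulation itself and no timers are involved. `createLfo` is a hypothetical helper, not code from the Synzer repository; `target` is expected to be an AudioParam such as `oscillator.detune` (in cents, for vibrato) or `gainNode.gain` (for tremolo).

```javascript
// Hypothetical helper: modulate an AudioParam with a sine LFO.
// ctx    - an AudioContext
// target - the AudioParam to modulate
// rate   - LFO frequency in Hz
// depth  - peak deviation, in the units of the target parameter
function createLfo(ctx, target, rate, depth) {
  var lfo = ctx.createOscillator(); // sine by default
  var amount = ctx.createGain();    // scales the LFO output (-1..1) to -depth..depth

  lfo.frequency.value = rate;
  amount.gain.value = depth;

  lfo.connect(amount);
  amount.connect(target);           // a node connected to an AudioParam adds to its value
  lfo.start(0);

  return { lfo: lfo, amount: amount };
}
```

For example, `createLfo(audioContext, oscillator.detune, 5, 50)` would give a 5 Hz vibrato of about ±50 cents on a single oscillator.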

The first problem you risk running into with a "head-on" frequency change is a jump between signal levels, which unfortunately is also clearly audible, as can be seen in the following illustration:

Moreover, the phase difference between the previous and the subsequent sinusoid depends on the moment of the change, which makes the result very ugly. To avoid this, when the frequency changes the sinusoid has to be compressed or stretched not relative to zero but relative to the current point on the X axis. For this, the phase of the signal is stored in the object and used as the reference point when calculating subsequent values.
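
A minimal sketch of this idea, with time passed in explicitly instead of read from `Date.now()` so that the continuity is easy to verify. The names are illustrative and the structure is simplified compared with the article's modulator.

```javascript
// Sine generator whose frequency can change without a jump in the output.
function ContinuousSine(frequency) {
  this.frequency = frequency; // Hz
  this.phaseOffset = 0;       // phase (in cycles) accumulated before the last change
  this.changedAt = 0;         // time of the last frequency change, in seconds
}

// Phase in cycles at time t (seconds).
ContinuousSine.prototype.phaseAt = function (t) {
  return this.phaseOffset + (t - this.changedAt) * this.frequency;
};

ContinuousSine.prototype.valueAt = function (t) {
  return Math.sin(2 * Math.PI * this.phaseAt(t));
};

// Freeze the phase reached at time t, then continue from it at the new frequency.
ContinuousSine.prototype.setFrequency = function (frequency, t) {
  this.phaseOffset = this.phaseAt(t) % 1;
  this.changedAt = t;
  this.frequency = frequency;
};
```

Right after `setFrequency(newFrequency, t)` the call `valueAt(t)` returns the same value as before the change, whereas simply evaluating `Math.sin(2 * Math.PI * newFrequency * t)` would in general jump to a different level.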

The second important point in implementing modulators is their additivity. Since, in theory, a waveform obtained by summing an arbitrary number of sinusoids has no limitations, modulators that satisfy the additivity condition give us extra room for creativity.
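
Additivity is easiest to get if every modulator adds only the change in its own value since its previous update, rather than overwriting the target. A sketch with two such modulators acting on one property (names and numbers are made up for the illustration):

```javascript
// A modulator that contributes its value to a property as a running delta.
function DeltaModulator(target, property) {
  this.target = target;
  this.property = property;
  this.prevValue = 0;
}

// Move this modulator's contribution to `value` without touching
// whatever other modulators have added to the same property.
DeltaModulator.prototype.update = function (value) {
  this.target[this.property] += value - this.prevValue;
  this.prevValue = value;
};

var synthState = { pitchShift: 0 };
var slow = new DeltaModulator(synthState, 'pitchShift');
var fast = new DeltaModulator(synthState, 'pitchShift');

slow.update(30);  // pitchShift is now 30
fast.update(-5);  // contributions add up: 25
slow.update(10);  // only the slow part changes: 5
```

When both modulators return to zero, the property returns to its original value, so any number of them can be stacked on the same parameter.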

Taking into account the above conditions, we realize the necessary modulator:

Modulator Code

function SineModulator (options) { options = options || {}; this._frequency = options.frequency || 0; this._phaseOffset = 0; this._startedAt = 0; this._interval = null; this._prevValue = 0; this.depth = options.depth || 0; Object.defineProperty(this, "frequency", { set: function (frequency) { frequency = parseFloat(frequency); this._phaseOffset = this._phaseNow(); this._startedAt = Date.now(); this._frequency = frequency; }, get: function() { return this._frequency; } }); } SineModulator.prototype.modulate = function(object, property) { this._objToModulate = object; this._propertyToModulate = property; }; SineModulator.prototype.start = function() { this._startedAt = Date.now(); var this_ = this; this._interval = setInterval(function() { var value = this_._modValueNow(); var diff = value - this_._prevValue; this_._objToModulate[this_._propertyToModulate] += diff; this_._prevValue = value; }, 10); }; SineModulator.prototype._phaseNow = function() { var timeDiff = (Date.now() - this._startedAt) / 1000; var phase = this._phaseOffset + timeDiff * this.frequency % 1; return phase; }; SineModulator.prototype._modValueNow = function() { var phase = this._phaseNow(); return Math.sin((phase) * 2 * Math.PI) * this.depth; }; SineModulator.prototype.stop = function() { clearInterval(this._interval); } module.exports = SineModulator; Now that we have a modulator, we will try to make a cyclical change in pitch, while at the same time adding effect parameter input fields to the interface and linking them to the corresponding modulator properties.

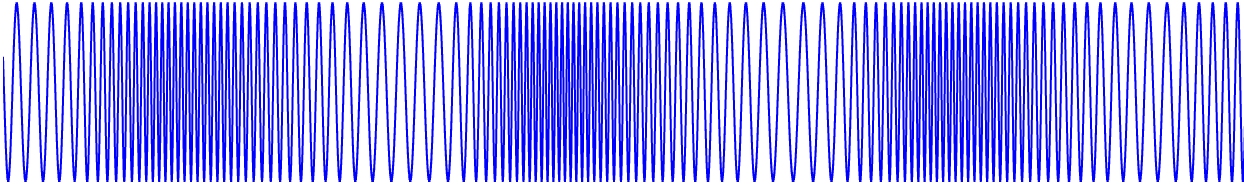

var vibrato = new SineModulator(); vibrato.modulate(synth, 'pitchShift'); controls.on('vibrato-on-change', function(value) { parseInt(value) ? vibrato.start() : vibrato.stop(); }); controls.on('vibrato-depth-change', function(value) { vibrato.depth = value; }); controls.on('vibrato-freq-change', function(value) { vibrato.frequency = value; }); We start and analyze the resulting signal, we state the presence of vibration. Effect achieved:

The next item on the list, tremolo, works on the same principle; the only difference is the modulated parameter, which this time is the volume. The code for this effect is just as minimal and almost identical:

    var tremolo = new SineModulator();
    tremolo.modulate(volume.gain, 'value');

    controls.on('tremolo-on-change', function(value) {
      parseInt(value) ? tremolo.start() : tremolo.stop();
    });
    controls.on('tremolo-depth-change', function(value) {
      tremolo.depth = value;
    });
    controls.on('tremolo-freq-change', function(value) {
      tremolo.frequency = value;
    });

With two modulators at hand, bringing their frequencies together or, on the contrary, spreading them apart already gives effects that sound quite interesting; and by making the periods coprime and the amplitudes wide you can even catch an unprepared listener off guard with chaos and unpredictability!

The next logical step is to try to follow the level above and modulate one of the parameters of any of the existing modulators, for example, the frequency of the vibrato, as a result of which the effect of playing on the speed of vibration should be obtained. To achieve this, we will create two modulators and assign one of them as a modulated parameter to the frequency property of the second:

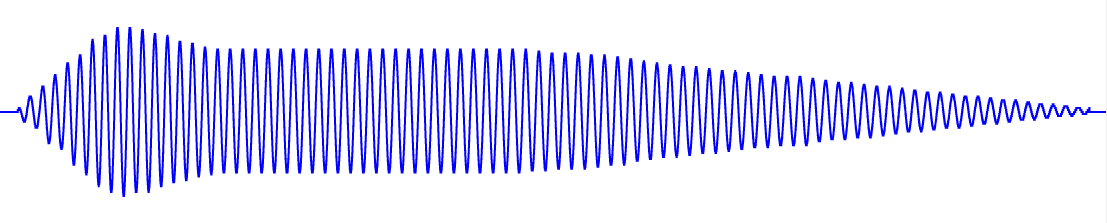

var vibrato = new SineModulator(); vibrato2.modulate(synth, 'pitchShift') vibrato2.frequency = 5; vibrato2.depth = 50; vibrato.modulate(synth, 'pitchShift'); vibrato.start(); vibrato2 = new SineModulator(); vibrato2.modulate(vibrato, 'frequency'); vibrato2.frequency = 0.2; vibrato2.depth = 3; vibrato2.start(); The cyclic change in the frequency of the tone change is audible to the naked ear, the resulting signal, recorded and opened in the audio editor, looks appropriate (the higher density sections correspond to the sound with a higher frequency):

With this, the experiments with modulation can be considered successful and the effects implemented. Although modulation by an arbitrary signal can be obtained by adding sinusoids, it would be much more convenient in the future to have a set of ready-made modulators, at least for the most common waveforms (sawtooth, square). For that, you could create a set of constructors by analogy with the existing SineModulator and use the mechanism of specifying the waveform through Fourier coefficients that we applied to the oscillator waveform. This task is no longer directly related to the Audio API, so for now I suggest we close the topic and move on to the first non-modulating effect, namely echo.

In most cases this effect is characterized by three parameters: the number of repeats, the delay time, and the attenuation coefficient. Working with a number of repeats other than zero or one implies branching the signal and creating a delay line for each branch. A delay line is a node that delays the passage of a signal by a given time. We will use the delay lines provided by the Audio API and created by the AudioContext.createDelay function. The attenuation coefficient turns each branch into a chain of a delay line followed by an amplifier. In addition, we need to be able to switch between the clean signal and the signal with the effect, and to keep the connection to the preceding and following links of the path simple (recall the agreement to have one input and one output), which ultimately results in the following scheme:

Unfortunately, I did not find a way to create elements that fully implement the AudioNode interface and can be passed directly to the connect method of other nodes. Searching the Internet gave no result either, so in the end I followed the advice of supposedly knowledgeable people online: the object is a container for a set of standard nodes, and you connect to it not directly but through its input property, which is a plain GainNode.
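
Before looking at the nodes, the numbers each branch needs can be worked out with plain arithmetic. The sketch below assumes, as the echo implementation in this article does, that tap number k is delayed by k times the latency (given in milliseconds) and attenuated by the feedback coefficient raised to the power k; the function name is illustrative.

```javascript
// Delay time (seconds) and gain for each echo tap.
function echoTapParams(taps, latencyMs, feedback) {
  var params = [];
  for (var i = 0; i < taps; i++) {
    params.push({
      delayTime: latencyMs / 1000 * (i + 1),
      gain: Math.pow(feedback, i + 1)
    });
  }
  return params;
}

console.log(echoTapParams(3, 250, 0.5));
```

For three taps, a latency of 250 ms and a feedback of 0.5 this gives delays of 0.25, 0.5 and 0.75 s with gains of 0.5, 0.25 and 0.125.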

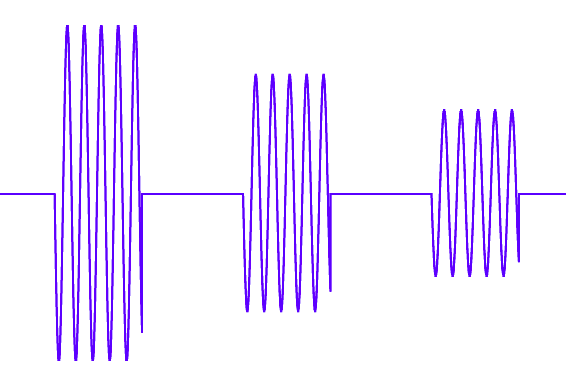

Tests, implementation, run. We get a wave of the following form:

It is produced by the following code:

Echo implementation

    function Delay(audioCtx) {
      this._audioCtx = audioCtx;
      this.input = audioCtx.createGain();            // single entry point
      this._delayLines = [];
      this._gainNodes = [];
      this._delayLinesInput = audioCtx.createGain(); // feeds every delay line
      this._output = audioCtx.createGain();          // single exit point
      this._taps = 0;
      this._latency = 0;
      this._feedback = 0;
      this._started = false;

      Object.defineProperty(this, "feedback", {
        set: function(value) {
          this._feedback = value;
          this._applyParams();
        },
        get: function() {
          return this._feedback;
        }
      });

      Object.defineProperty(this, "latency", {
        set: function(value) {
          this._latency = value;
          this._applyParams();
        },
        get: function() {
          return this._latency;
        }
      });

      Object.defineProperty(this, "taps", {
        set: function(value) {
          var diff = value - this._taps;
          // Add or remove as many taps as the difference requires.
          for (var i = 0; i < Math.abs(diff); i++) {
            diff < 0 ? this._popTap() : this._pushTap();
          }
          this._taps = value;
          // Give newly created taps their delay time and gain.
          this._applyParams();
        },
        get: function() {
          return this._taps;
        }
      });

      // The dry signal always passes straight through.
      this.input.connect(this._output);
    }

    Delay.prototype._applyParams = function() {
      for (var i = 0; i < this._delayLines.length; i++) {
        // Tap i is delayed by (i + 1) latencies and attenuated by feedback^(i + 1).
        this._delayLines[i].delayTime.value = this._latency / 1000 * (i + 1);
        this._gainNodes[i].gain.value = Math.pow(this._feedback, i + 1);
      }
    };

    Delay.prototype._pushTap = function() {
      var delay = this._audioCtx.createDelay(10.0);
      this._delayLines.push(delay);

      var gainNode = this._audioCtx.createGain();
      this._gainNodes.push(gainNode);

      gainNode.connect(this._output);
      delay.connect(gainNode);
      this._delayLinesInput.connect(delay);
    };

    Delay.prototype._popTap = function() {
      var lastDelayLine = this._delayLines.pop();
      var lastGainNode = this._gainNodes.pop();

      lastDelayLine.disconnect(lastGainNode);
      lastGainNode.disconnect(this._output);
      this._delayLinesInput.disconnect(lastDelayLine);
    };

    Delay.prototype.start = function() {
      if (!this._started) {
        this.input.connect(this._delayLinesInput);
        this._started = true;
      }
    };

    Delay.prototype.stop = function() {
      if (this._started) {
        this.input.disconnect(this._delayLinesInput);
        this._started = false;
      }
    };

    Delay.prototype.connect = function(target) {
      this._output.connect(target);
    };

    module.exports = Delay;

Progress is evident: the sounds produced with the effects applied are already largely musical.

What still stands out is the abrupt start and end of every note, which the ear perceives as a click. To overcome this in particular, and more generally to give the sound dynamics (its main purpose), we can use the so-called ADSR envelope (Attack-Decay-Sustain-Release), which describes how the synthesized wave evolves in time, roughly approximating the behavior of a note played on a real musical instrument.

By applying such an envelope to each note played, with its gradual rise and fall of volume, we remove the abrupt jump with a vertical edge that the ear perceives as a high-frequency click. The implementation, as with the pitch shift, is a mixin applied directly to the synthesizer. When each oscillator is created, we insert an amplifier between it and the output node and then control its gain according to the given ADSR envelope parameters:

A longer piece of code

    function ADSR() {
      this.ADSR = { A: null, D: null, S: null, R: null };
      var oscillators = {};
      var gainNodes = {};
      var intervals = {}; // running attack/decay timers, keyed by note pitch
      var old = { play: this.play, stop: this.stop };

      this.play = function(note) {
        var osc = oscillators[note.pitch] = old.play.call(this, note);
        var gain = gainNodes[note.pitch] = this.audioContext.createGain();

        // Wedge the per-note amplifier between the oscillator and the output.
        osc.disconnect(this.output);
        osc.connect(gain);
        gain.connect(this.output);
        gain.gain.value = 0;

        this.ADSR.A = parseInt(this.ADSR.A);
        this.ADSR.D = parseInt(this.ADSR.D);
        this.ADSR.S = parseFloat(this.ADSR.S);
        this.ADSR.R = parseInt(this.ADSR.R);

        var this_ = this;
        var startedAt = Date.now();
        var interval = intervals[note.pitch] = setInterval(function() {
          var diff = Date.now() - startedAt;
          if (diff < this_.ADSR.A) {
            // Attack: rise linearly from 0 to 1.
            gain.gain.value = diff / this_.ADSR.A;
          } else if (diff < this_.ADSR.A + this_.ADSR.D) {
            // Decay: fall linearly from 1 to the sustain level.
            gain.gain.value = 1 - (diff - this_.ADSR.A) / (this_.ADSR.D / (1 - this_.ADSR.S));
          } else {
            // Sustain: hold the level until the key is released.
            gain.gain.value = this_.ADSR.S;
            clearInterval(interval);
          }
        }, 10);
        return osc;
      };

      this.stop = function(note) {
        var releasedAt = Date.now();
        var this_ = this;
        var arguments_ = arguments;
        var gain = gainNodes[note.pitch];
        var osc = oscillators[note.pitch];
        var gainOnRelease = gain.gain.value;

        // Stop the attack/decay timer so it does not fight the release.
        clearInterval(intervals[note.pitch]);
        delete intervals[note.pitch];

        var interval = setInterval(function() {
          var diff = Date.now() - releasedAt;
          if (diff < this_.ADSR.R) {
            // Release: fade linearly from the level at key release to 0.
            gain.gain.value = gainOnRelease * (1 - diff / this_.ADSR.R);
          } else {
            clearInterval(interval);
            gain.gain.value = 0;
            old.stop.apply(this_, arguments_);
            osc.disconnect(gain);
            gain.disconnect(this_.output);
            delete oscillators[note.pitch];
            delete gainNodes[note.pitch];
          }
        }, 20);
      };
    }

    module.exports = ADSR;
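
In the spirit of the earlier update about timers, the same envelope could also be handed to the browser through AudioParam automation instead of `setInterval`. This is an alternative sketch, not the code used in Synzer: `scheduleAttackDecay` and `scheduleRelease` are hypothetical helpers, times are in milliseconds as in the mixin, and `param` is expected to be the `gain` AudioParam of the per-note GainNode. It also assumes that reading `param.value` during automation returns the current level, which may not hold in older browsers.

```javascript
// Schedule attack and decay on an AudioParam starting at context time `now` (seconds).
function scheduleAttackDecay(param, now, adsr) {
  var attackEnd = now + adsr.A / 1000;
  var decayEnd = attackEnd + adsr.D / 1000;
  param.cancelScheduledValues(now);
  param.setValueAtTime(0, now);
  param.linearRampToValueAtTime(1, attackEnd);     // attack: 0 -> 1
  param.linearRampToValueAtTime(adsr.S, decayEnd); // decay: 1 -> sustain level
  return decayEnd;
}

// Schedule the release ramp; returns the time at which the oscillator can be stopped.
function scheduleRelease(param, now, adsr) {
  var releaseEnd = now + adsr.R / 1000;
  var current = param.value;                       // level reached so far
  param.cancelScheduledValues(now);
  param.setValueAtTime(current, now);
  param.linearRampToValueAtTime(0, releaseEnd);    // release: current -> 0
  return releaseEnd;
}
```

On key press one would call `scheduleAttackDecay(gain.gain, audioContext.currentTime, adsr)`, and on release `oscillator.stop(scheduleRelease(gain.gain, audioContext.currentTime, adsr))`.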

So, with this finishing touch, we have a fully functional basic synthesizer that generates sounds fit for human listening. Further improvements could concern the interface (for example, visual creation, tuning, and patching of oscillators and modulators) and the synthesis itself (adding filters, introducing harmonics, nonlinear distortion, and so on), but that is a topic for further research. In future articles the plan is to connect MIDI instruments to the resulting synthesizer, in particular a keyboard and a guitar, and to move on to sound recording and real audio effects. All this, of course, in the browser!

The program demonstration is available at the following link: miroshko.imtqy.com/Synzer

All source code is available on GitHub: github.com/miroshko/Synzer. Stars, forks, and pull requests are welcome.

Source: https://habr.com/ru/post/267895/
