Planning resources for Veeam Backup & Replication 8.0: calculating the required repository space

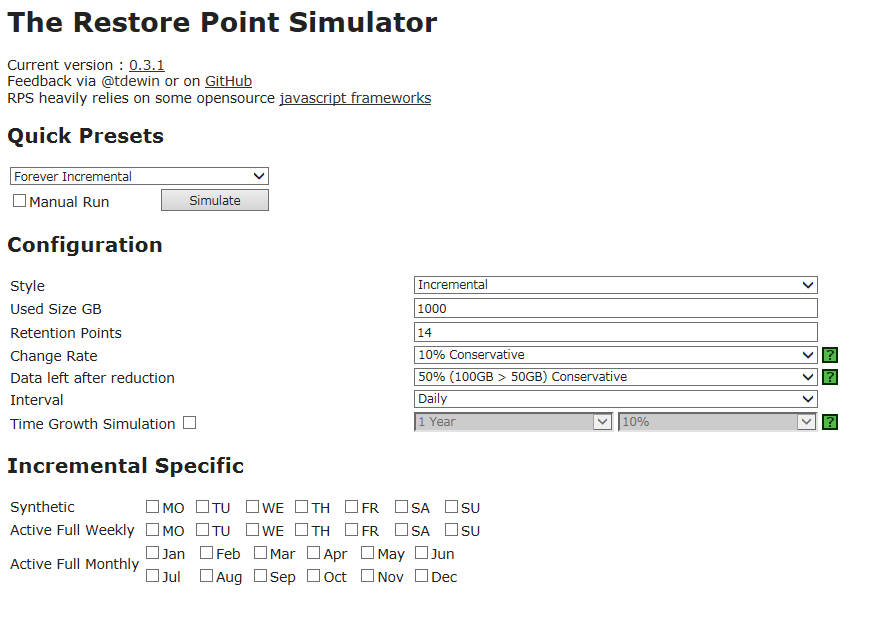

To help people planning resources for Veeam Backup & Replication figure out how much repository space virtual machine backups will need, we created the Restore Point Simulator (RPS), a calculator that simulates recovery points.


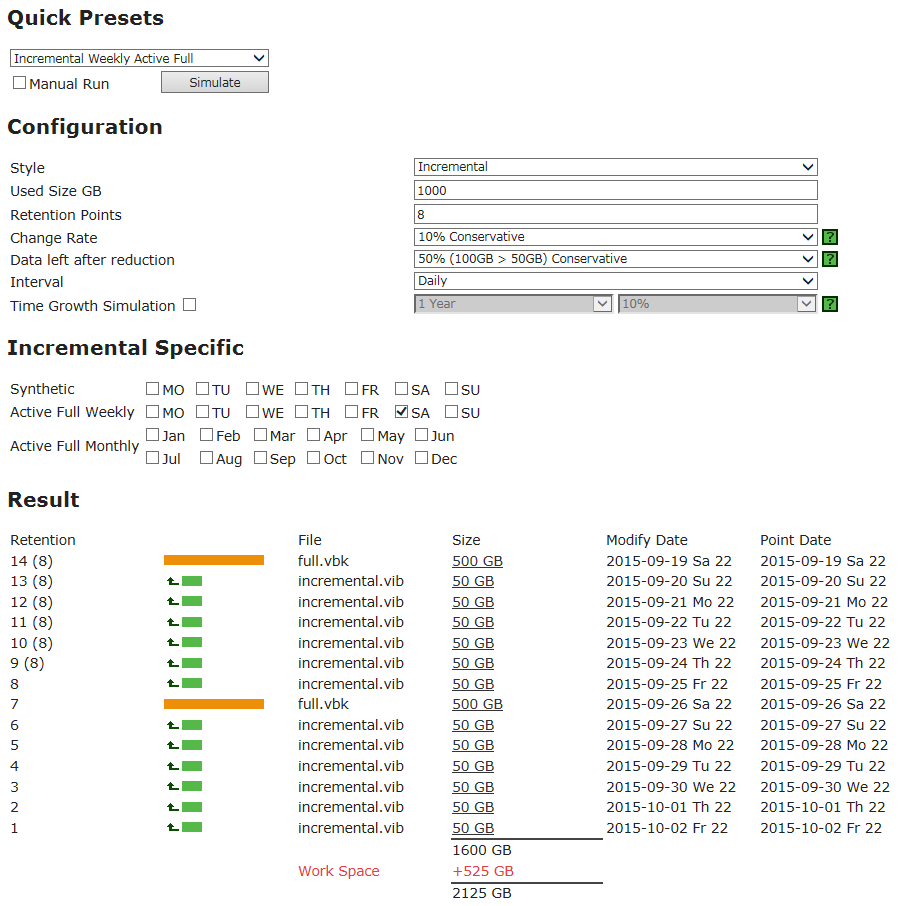


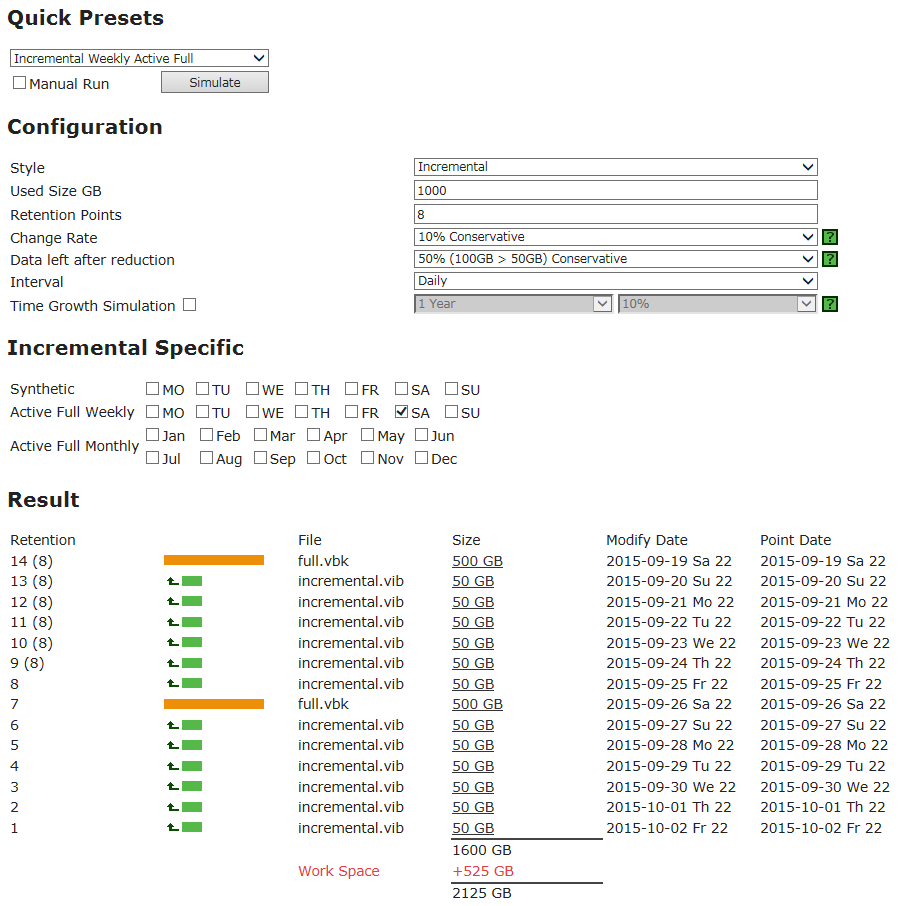


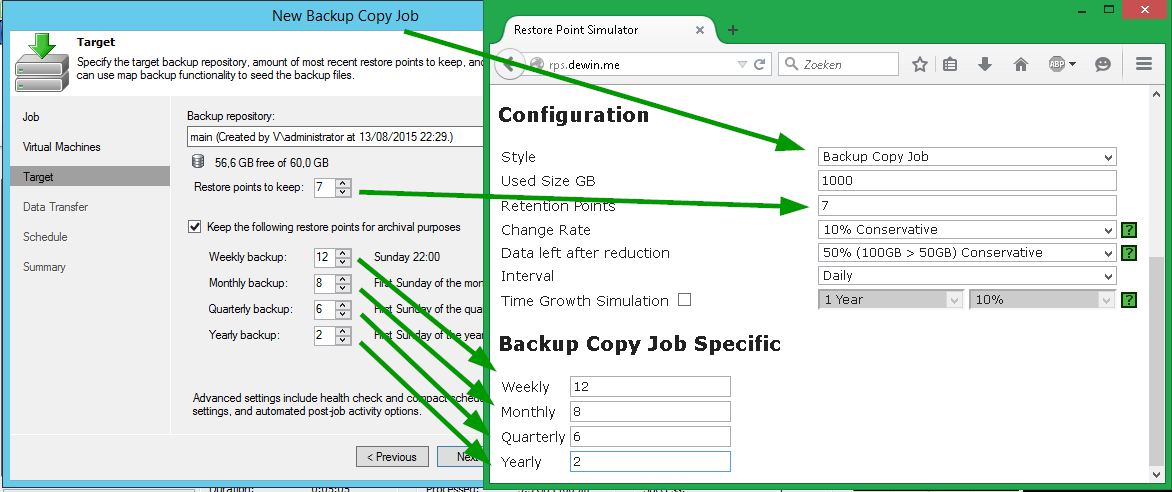

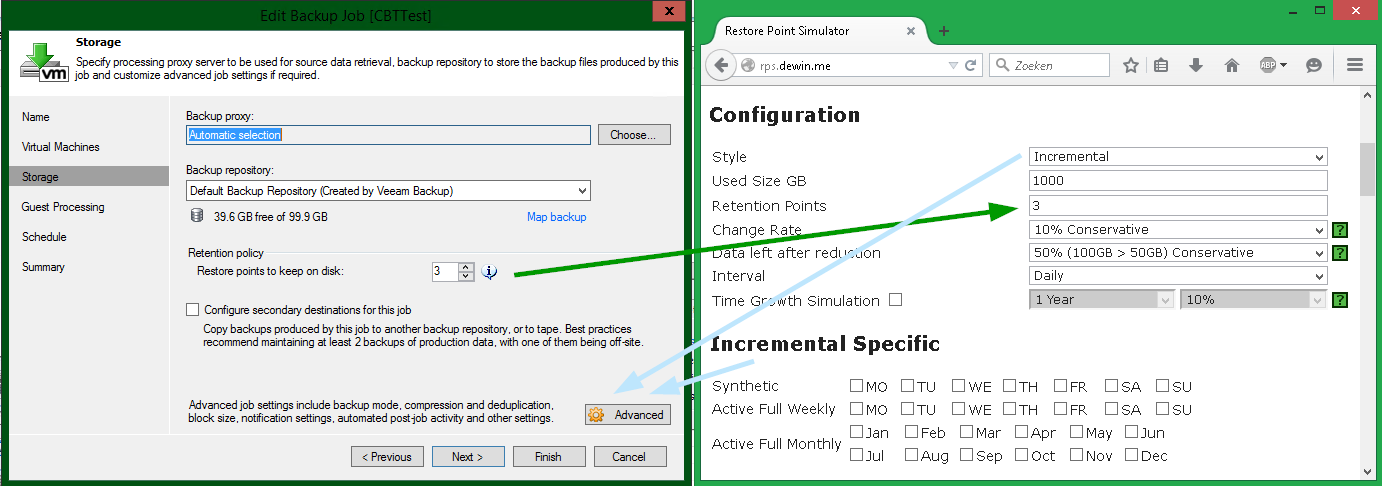

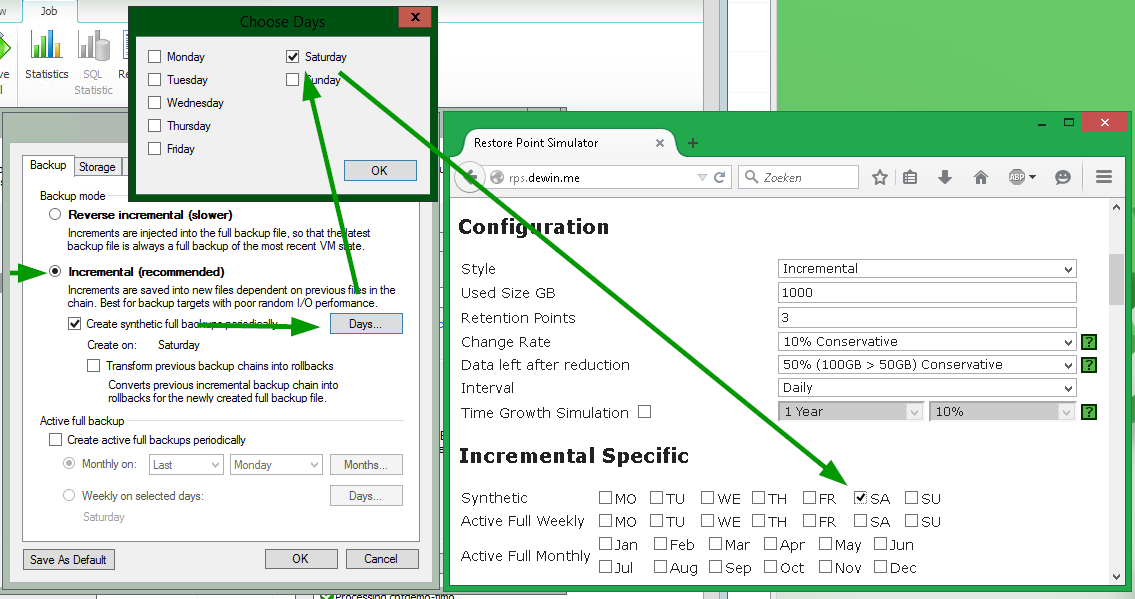

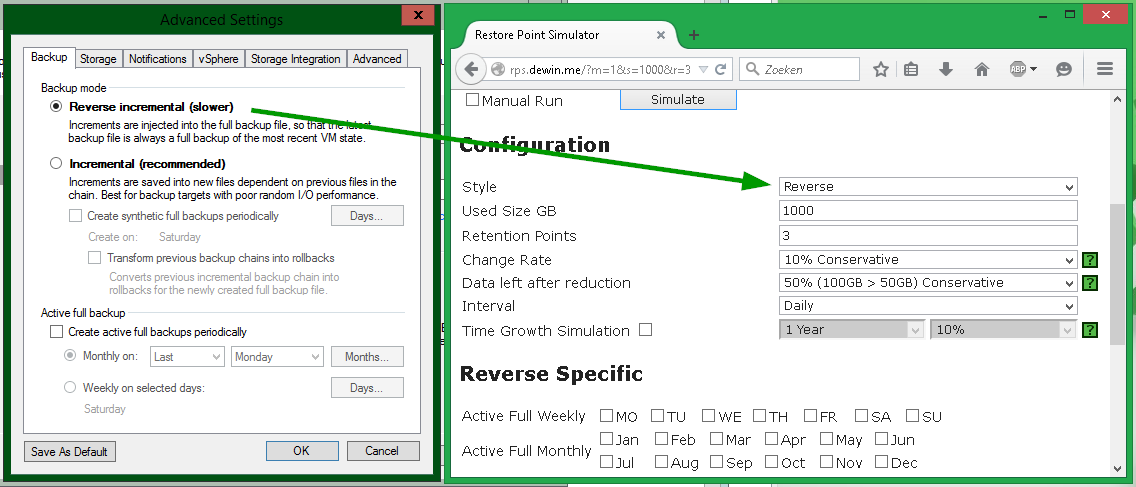


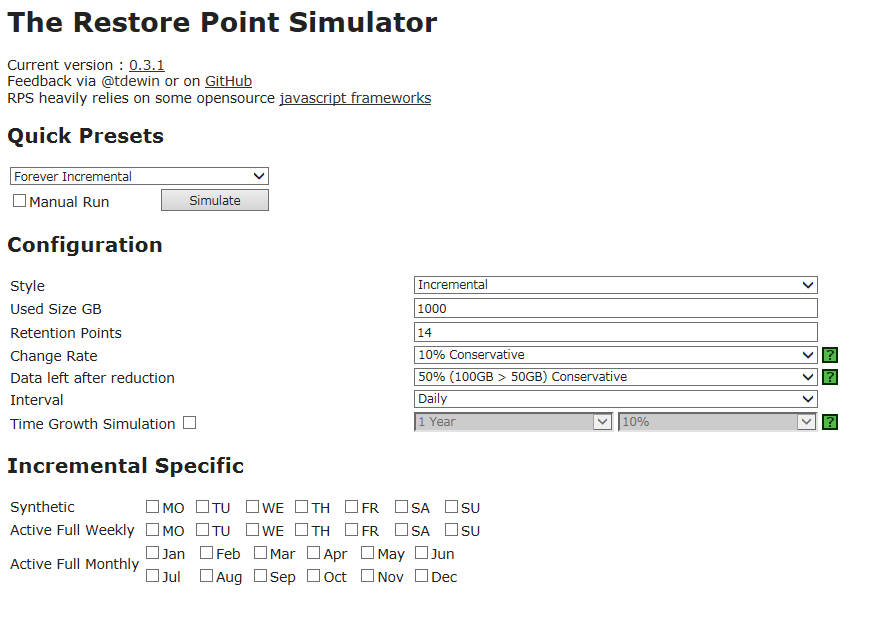


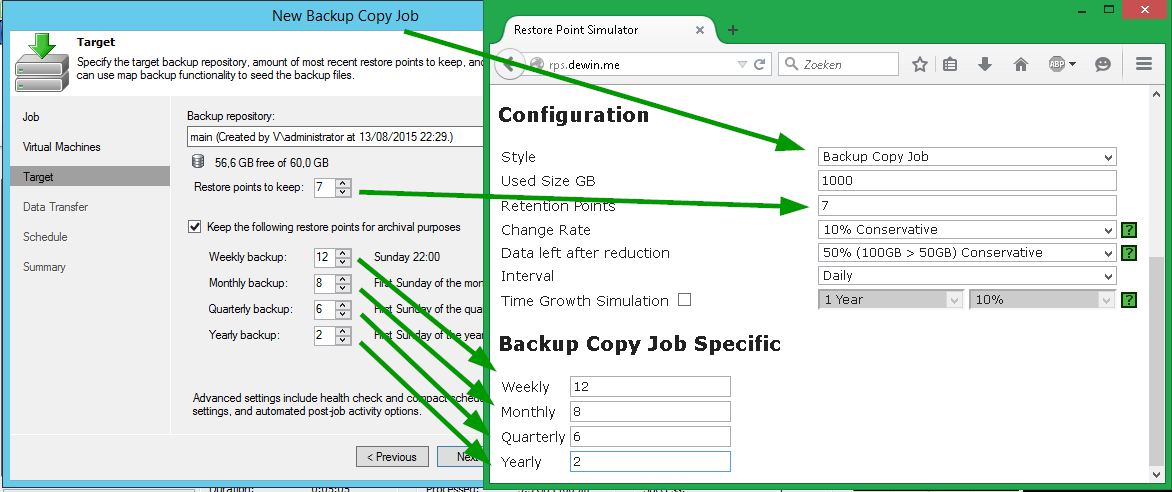


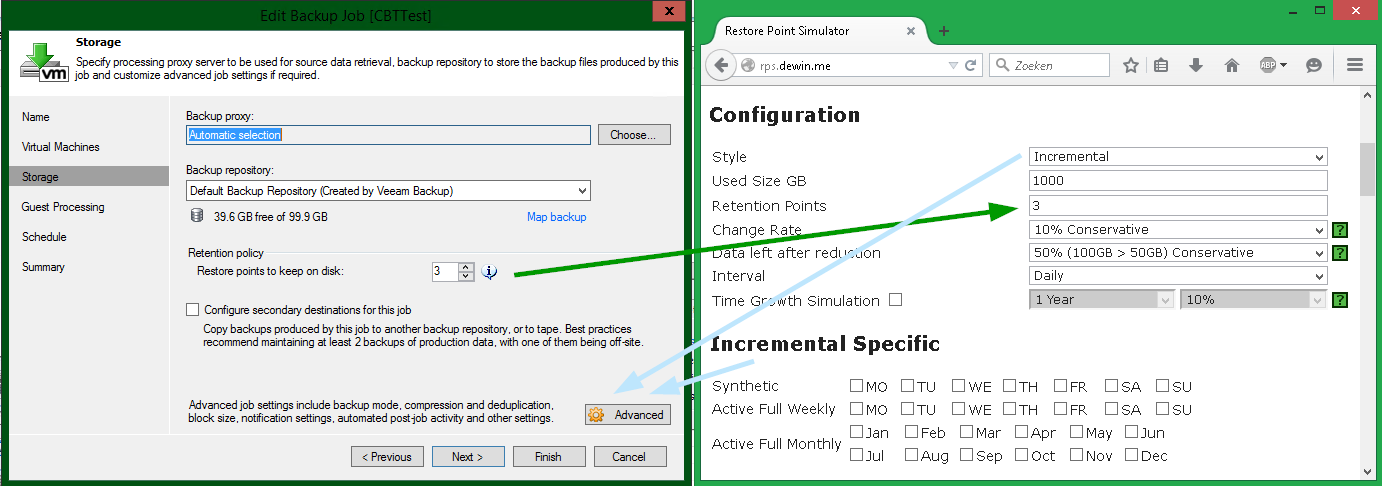


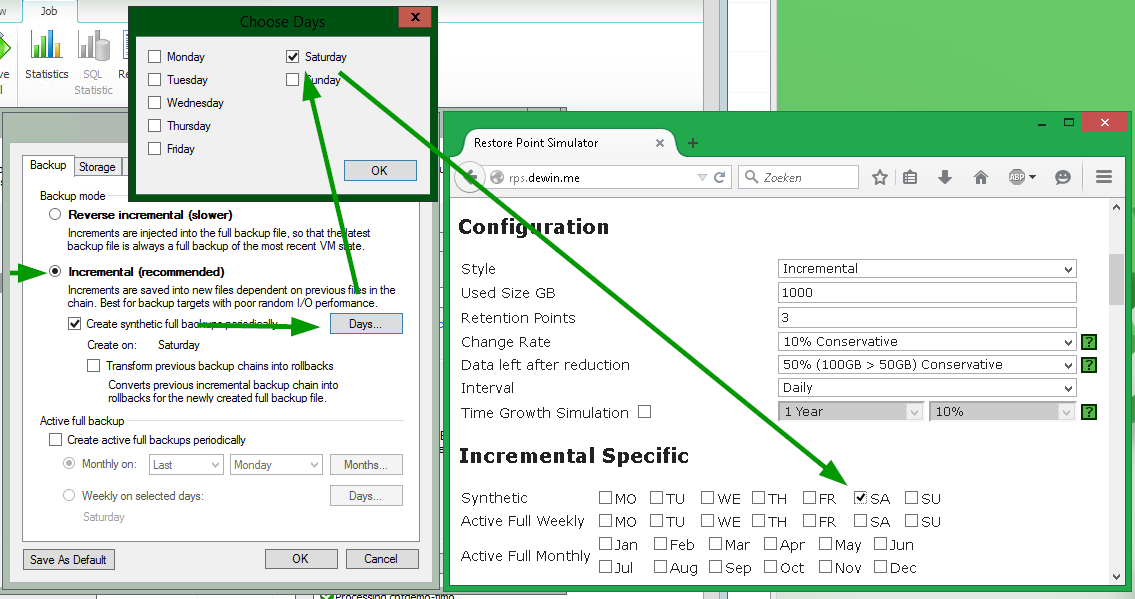


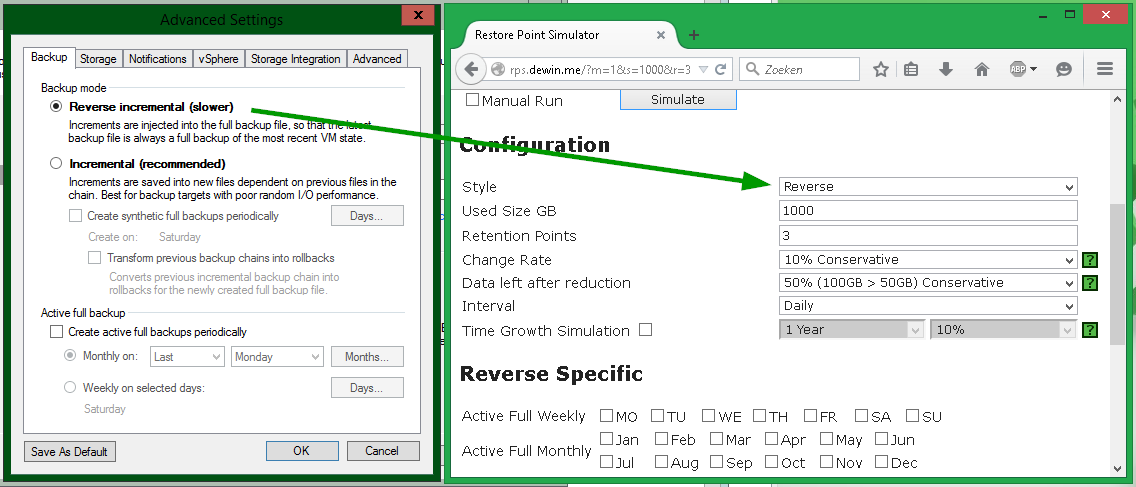


However, users still ask us from time to time why a particular value is there, so today I will explain the input parameters and where the default values come from.


The calculation formula: on paper and in the calculator

The size of the backups produced by a backup job is calculated with the following formula:


Backup Size = C x (F x Data + R x D x Data)

Where:

- Data - the total size of all virtual machines processed by a given backup job (the space actually used, not the allocated space)

- C - the average compression/deduplication ratio (in practice it depends on a number of factors and can be much better, but we assume a conservative 50%; more on this below)

- F - the number of full backups (VBK) in the chain, according to the retention policy (1, unless you use a mode that periodically creates full backups; I will cover that as well)

- R - the number of incremental backups (VRB or VIB) in the chain, according to the retention policy (14 by default)

- D (delta) - the average change rate of VM disks between two consecutive runs of a backup job (the current version of the calculator uses 10%, but based on user feedback this may be changed to 5% in the future: for most VMs the actual figure is 1-2%, while for Exchange and SQL it reaches 10-20% because of the large number of transactions and the corresponding log entries, so 5% is quite reasonable).
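The formula above can be checked with a few lines of Python. This is a minimal sketch of the paper calculation only, not the RPS calculator's actual code; the function name `backup_size` and the example values (1000GB of data, C = 50%, F = 1, R = 14, D = 10%) are assumptions chosen to match the defaults listed above.

```python
def backup_size(data_gb, c, f, r, d):
    """Backup Size = C x (F x Data + R x D x Data).

    data_gb: Used Size in GB (Data)
    c: share of data left after compression/deduplication (C), e.g. 0.5 for 50%
    f: number of full backups in the chain (F)
    r: number of incremental backups in the chain (R)
    d: change rate between job runs (D), e.g. 0.10 for 10%
    """
    full_part = f * data_gb              # every full backup holds all the data
    incremental_part = r * d * data_gb   # every increment holds only the changed share
    return c * (full_part + incremental_part)


# Hypothetical example using the default-style values from the list above
size = backup_size(data_gb=1000, c=0.5, f=1, r=14, d=0.10)
print(f"Estimated backup size: {size:.0f} GB")  # 0.5 x (1000 + 1400) = 1200 GB
```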

The parameters of this formula map to the RPS calculator fields as follows:

- Data is what you need to enter in the Used Size GB field of the calculator.

This is the space actually in use: if you have a VM with a single VMDK, this value corresponds to the blocks that have actually been written to. For example, if the VM has a 50GB thick-provisioned disk and the guest uses 20GB of it, enter a value close to 20GB in the Used Size GB field. In other words, Used Size GB is the space the VM would occupy if it had a thin-provisioned disk: since Veeam copies data at the block level, the space actually occupied by a thin disk corresponds to the amount of data that must be processed when creating a full backup (plus some metadata).

- D, or delta, is the amount of data that changes between runs of the backup job. In the calculator, this is the Change rate field. Its value depends on two things: how often the job runs (usually once a day) and which application runs on the VM.

Underestimating this value is not recommended. Again, Veeam copies data at the block level, so a tiny change can translate into a larger change at the block and disk level, more than what you entered in the calculator. If the application writes to disk sequentially, as a file server typically does, this is probably not very noticeable, because 10 consecutive changes may well fall into one block. But if the application makes many small random changes in different places, the value can grow quickly. In my experience, 5% is a rather optimistic estimate and 10% a rather conservative one.
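The block-level effect described above can be illustrated with a toy simulation. This is a sketch under stated assumptions, not how Veeam measures changes: we assume a 1 MB backup block (the real size depends on the job's storage optimization setting), a 20GB disk and 10,000 application writes of 4 KB each, and compare sequential writes with writes scattered uniformly across the disk.

```python
import random

BLOCK_SIZE = 1024 * 1024    # assumed 1 MB backup block (depends on storage optimization)
WRITE_SIZE = 4 * 1024       # each application write changes 4 KB (assumption)
DISK_SIZE = 20 * 1024 ** 3  # 20 GB of used data (assumption)


def changed_blocks(offsets):
    """Return the set of backup blocks touched by 4 KB writes at the given byte offsets."""
    blocks = set()
    for off in offsets:
        first = off // BLOCK_SIZE
        last = (off + WRITE_SIZE - 1) // BLOCK_SIZE
        blocks.update(range(first, last + 1))
    return blocks


def change_rate(offsets):
    """Share of the disk that would have to be copied in the next incremental pass."""
    return len(changed_blocks(offsets)) * BLOCK_SIZE / DISK_SIZE


n_writes = 10_000
sequential = [i * WRITE_SIZE for i in range(n_writes)]   # e.g. a file server appending data
rng = random.Random(42)                                  # fixed seed for a reproducible sketch
scattered = [rng.randrange(0, DISK_SIZE, WRITE_SIZE) for _ in range(n_writes)]

print(f"actually written: {n_writes * WRITE_SIZE / DISK_SIZE:.2%}")
print(f"sequential:       {change_rate(sequential):.2%}")
print(f"scattered:        {change_rate(scattered):.2%}")
```

With the same amount of data written, the scattered case copies a far larger share of the disk. Real workloads have more locality than uniformly random writes, so treat this as an illustration of the worst case rather than an estimate.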

Tip: if you have Veeam ONE deployed, you can estimate the change rate (in %) for your own infrastructure by generating the VM Change Rate Estimation report from the Infrastructure Assessment report set.

- C, the compression ratio: in the calculator this is the Data left after reduction field. Readers interpret it in different ways, but let's look at our formula, which can be written like this:

Backup Size = C x (Total Data In)

In words: the backup size equals C multiplied by the total amount of incoming data.

It shows that multiplying Total Data In by C scales it proportionally and gives us Backup Size. So C expresses how much the volume of incoming data has decreased, or more precisely, how much of the original volume remains after compression. For example, if you set it to 40% (40/100) and the incoming data is 100 units, the backup size is (40/100) x 100 = 40 units. If you set it to 60%, you expect less benefit from compression, because the backup size will be (60/100) x 100 = 60 units. The bottom line: the lower the value of C, the better the compression.

Not everyone finds this intuitive: many people are more used to compression ratios like "2 times" or "3 times". Converting is easy: a reduction of N times corresponds to 1/N x 100%, so "2 times" is 1/2 x 100% = 50%.

Substituting this value into our formula gives: Backup Size = 50% x 100 (Total Data In) = 50.

Indeed, the incoming data volume has been halved. Similarly, for "3 times" compression: 1/3 x 100% ≈ 33%.

Note: If you plan to disable data compression altogether, set this factor to 100%.
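The conversion between an "N times" ratio and the Data left after reduction value is a one-liner. A minimal sketch; the helper names `ratio_to_c` and `c_to_ratio` are hypothetical and only illustrate the arithmetic above, applied to 100 units of Total Data In.

```python
def ratio_to_c(times):
    """Convert an 'N times' reduction into 'Data left after reduction' in percent: 1/N x 100%."""
    return 1 / times * 100


def c_to_ratio(percent_left):
    """Inverse conversion: percentage of data left -> 'N times' reduction."""
    return 100 / percent_left


total_data_in = 100  # units of incoming data, as in the example above
for n in (1, 2, 3):
    c = ratio_to_c(n)
    backup = c / 100 * total_data_in  # Backup Size = C x (Total Data In)
    print(f"{n} times -> C = {c:.0f}% -> backup size {backup:.0f} of {total_data_in} units")
```

Note that "1 times" (no reduction) gives C = 100%, which matches the advice for disabled compression.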

About recovery points and the retention policy

Two parameters of the formula remain: F and R. They determine how many full backups (Fulls) and incremental backups (incRements) you will have. For the Reverse incremental and Forever incremental modes it is straightforward:

F = 1

and

R = rps (total number of recovery points) - F
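For Reverse incremental and Forever incremental, F and R follow directly from the number of restore points. A minimal sketch; the function names and the example values (14 points, 1000GB, C = 50%, D = 10%) are illustrative assumptions, not part of the calculator.

```python
def forever_chain(restore_points):
    """F and R for Reverse / Forever incremental: one full, the rest are increments."""
    f = 1
    r = restore_points - f
    return f, r


def backup_size(data_gb, c, f, r, d):
    """Backup Size = C x (F x Data + R x D x Data)."""
    return c * (f * data_gb + r * d * data_gb)


f, r = forever_chain(14)
print(f, r)  # 1 13
print(f"{backup_size(1000, 0.5, f, r, 0.10):.0f} GB")  # 0.5 x (1000 + 1300) = 1150 GB
```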

What if you need weekly synthetic or active fulls? Things get a bit more complicated, because you have to take the retention policy settings into account. For example, if you build a forward incremental chain with a weekly full on top of it, and the policy says to keep 2 restore points, at some moments you may have as many as 9 points, all because of the dependencies between increments and full backups. The term “recovery point” should be taken literally (as Veeam Backup & Replication does): it is a point from which you can recover. If the full backup, the “foundation” on which the “floors” of increments are built, is gone, you cannot restore the machine (the “house”) from any incremental “floor”.

The simulator illustrates this dependency as well: after you select a mode, enter the number of points required by the policy in the Retention points field, specify the other parameters and click Simulate, the Retention column of the results section shows pairs of numbers in the form N1 (N2). This means that recovery point N1 is kept (not deleted) because another point, N2, depends on it.

If you calculate on paper or with an ordinary calculator, the formulas for a chain with weekly fulls look like this:

F = (number of weeks) + 1

R = (F x 7 x number of daily backups - F)

For example, if you need to keep 14 points and run the backup daily (that is, 2 weeks), you get:

F = 2 + 1 = 3

R = 3 x 7 x 1 - 3 = 21 - 3 = 18
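The paper formulas for weekly fulls translate directly into code. A minimal sketch; deriving the number of weeks as retention points divided by (7 x daily backups), rounded up, is our assumption, since the text only says "number of weeks", and the function name is hypothetical.

```python
import math


def weekly_full_chain(restore_points, backups_per_day=1):
    """Paper estimate of F and R for a chain with a weekly (synthetic or active) full."""
    # Assumption: number of weeks = retention points / (7 x daily backups), rounded up
    weeks = math.ceil(restore_points / (7 * backups_per_day))
    f = weeks + 1                          # F = (number of weeks) + 1
    r = f * 7 * backups_per_day - f        # R = F x 7 x (number of daily backups) - F
    return f, r


print(weekly_full_chain(14))  # (3, 18), matching the example above
```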

Another subtlety is understanding how much space a chain with weekly fulls takes compared with one with monthly fulls. Remember that with a monthly full the chain can be up to 30 points long. With a weekly full the chain is at most 7 days long, so less storage space is needed. However, if your policy keeps, say, 60 points, a monthly full may turn out to be more economical than a weekly one.
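The weekly-versus-monthly trade-off can be explored by stretching the paper formula to a 30-day period. This is a rough sketch under an explicit assumption: the weekly formula (F = weeks + 1, R = F x 7 - F) is simply applied with a 30-day period for monthly fulls, which is our simplification rather than the simulator's logic. Sizes are expressed in units of Data with D = 10% and compression ignored.

```python
import math


def chain(restore_points, period_days):
    """Paper estimate of F and R with one full every `period_days` daily points.

    Assumption: the weekly formula is reused with a 30-day period for monthly fulls.
    """
    periods = math.ceil(restore_points / period_days)
    f = periods + 1
    r = f * period_days - f
    return f, r


def relative_size(f, r, d=0.10):
    """Chain size in units of Data, ignoring compression: F + R x D."""
    return f + r * d


for points in (14, 60):
    weekly = relative_size(*chain(points, 7))
    monthly = relative_size(*chain(points, 30))
    better = "weekly" if weekly < monthly else "monthly"
    print(f"{points} points: weekly {weekly:.1f} x Data, monthly {monthly:.1f} x Data -> {better}")
```

Under these assumptions, 14 points favor weekly fulls, while 60 points favor monthly fulls, which is consistent with the observation above.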

The prologue is over: on to the input fields

As you may have noticed, some calculator fields correspond to fields in the Veeam Backup & Replication 8 interface; the pictures show both UIs side by side for clarity.

So, let's decide on the retention policy. If you need to keep 14 daily backups (one per day), enter 14 in the Retention points field and select Daily in the Interval field.

Important! At certain moments there will be more stored points than you specified, because not only the value you set is taken into account but also the dependencies between increments and full backups. So do not rush to change these values just because they look excessive; instead, carefully reread the section about F and R above.

The set of parameters also depends on what our calculator calls Style (the method of creating restore points that you want to estimate). It works the same way as in the Veeam Backup & Replication console. Take, for example, the Backup Copy Job “style”: if you want to calculate for this option, you use the same settings that you set when configuring a Backup Copy Job in the Veeam console:

For the other “styles”, Incremental and Reverse, the calculation parameters correspond to the Backup Job settings (except for Retention Points):

Note: if you select Incremental without any full backups (active or synthetic fulls), you get an infinite chain of increments.

Note the differences in the UI:

- In the Veeam interface, these settings open when you click the Advanced button at the Storage step of the backup job wizard. The schedule for creating full backups is configured in Advanced Settings with the Days and Months buttons.

- The calculator itself has no “Enable Active” or “Enable Synthetic” checkbox; instead, selecting any checkbox in the Incremental Specific section enables Active Full or Synthetic Full.

- Also, in the calculator the Monthly option takes priority over Weekly: in the Veeam GUI you can select only one of them, while here you can select both, but if Monthly is selected, Weekly is ignored.
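The priority rule in the last bullet can be expressed as a small decision function. A minimal sketch; `effective_full_schedule` and its arguments are hypothetical names used for illustration, not RPS internals.

```python
WEEKDAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")


def effective_full_schedule(weekly_days, monthly_months):
    """Mimic the calculator rule described above: Monthly wins over Weekly.

    weekly_days: set of days ticked for the weekly full, e.g. {"Sat"}
    monthly_months: set of months (1..12) ticked for the monthly full
    """
    if monthly_months:
        # If any month is selected, the weekly selection is ignored
        return "monthly", sorted(monthly_months)
    if weekly_days:
        return "weekly", [d for d in WEEKDAYS if d in weekly_days]
    # No fulls at all: Incremental turns into an infinite chain of increments
    return "none", []


print(effective_full_schedule({"Sat"}, set()))   # ('weekly', ['Sat'])
print(effective_full_schedule({"Sat"}, {7, 1}))  # ('monthly', [1, 7])
print(effective_full_schedule(set(), set()))     # ('none', [])
```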

Example 1: incremental backup with weekly synthetic full

In this case, the settings should look like this picture (on the left, what you would specify in the Veeam console; on the right, what to specify in the calculator):

Note: the calculator does not simulate the “Transform” option for one simple reason: so far nobody has asked the author for it :).

Example 2: incremental backup with monthly active full

To calculate the scenario with monthly active full, the settings should be the same as in this picture:

Important! Remember that a regular Backup Job has no GFS retention scheme; it can be configured only in Backup Copy Jobs. (One user selected only January in a backup job because he wanted "an annual backup for the archive", and was quite surprised by the result: naturally, the calculation was made for a chain a whole year long, with the next full backup created in January.)
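The anecdote is easy to quantify: the fewer months you select, the longer the chain between active fulls. This sketch assumes that the monthly active full runs on the 1st of each selected month and that the schedule repeats every year (both are simplifications; the real schedule options differ), and uses a non-leap year.

```python
from datetime import date


def longest_chain_days(months, year=2015):
    """Longest gap in days between consecutive monthly active fulls.

    Assumption: the full runs on the 1st of each selected month, every year.
    """
    fulls = sorted(date(year, m, 1) for m in months)
    # Wrap around: after the last full of the year comes the first full of the next year
    fulls.append(date(year + 1, fulls[0].month, 1))
    return max((b - a).days for a, b in zip(fulls, fulls[1:]))


print(longest_chain_days({1}))                # 365: a single full per year, a year-long chain
print(longest_chain_days(set(range(1, 13))))  # 31: a new full every month
```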

Example 3: reverse incremental backup

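To calculate the same for Reverse incremental, the settings should be as follows:

Making long-term forecasts?

It remains to explain just one option: simulating data growth with the Time Growth Simulator. This is a recent and, in my opinion, quite useful addition. The idea is that if you want, say, to calculate 3 years ahead and you know that the amount of source data grows by 10% per year, you just select the Time Growth Simulator checkbox and pick this period and percentage from the drop-down lists.

The calculator takes the Used Size GB value (described above) and calculates the daily growth of the data volume with the following formula: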

Future Used Data = Used Size x (1 + 10%) ^ (Day N / 365)

Thus, on the last calculated day (3 years out) we get:

Future Used Data = Used Size x (1 + 10%) ^ (1095/365)
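The growth formula in Python, as a minimal sketch; the function name is hypothetical, and the 1000GB / 10% inputs are the same illustrative values used in the example that follows.

```python
def future_used_data(used_size_gb, annual_growth, day_n):
    """Future Used Data = Used Size x (1 + growth) ^ (Day N / 365)."""
    return used_size_gb * (1 + annual_growth) ** (day_n / 365)


# Yearly checkpoints for 1000GB of data growing by 10% per year
for day in (0, 365, 730, 1095):
    print(day, round(future_used_data(1000, 0.10, day), 2))
```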

Example 4: reverse incremental with data growth

Suppose you chose the Reverse incremental backup method and want to calculate the required space 3 years ahead, assuming the data volume grows by 10% a year and the initial Used Size is 1000GB (for simplicity, compression is ignored).

Using the formula, Future Used Data after 3 years is:

Future Used Data = 1000 x (1 + 10%) ^ (1095/365) = 1000 x (110%) ^ 3 = 1000 x (1.10) ^ 3 = 1000 x 1.10 x 1.10 x 1.10 = 1331

Let's check this calculation with the simulator:

Note, by the way, that an incremental backup representing a recovery point created 2 days before the end of the 3-year period will have a smaller Future Used Data value, according to the same formula:

Future Used Data = 1000 x (1 + 10%) ^ (1093/365) = ~ 1330.30
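To see how growth affects a whole chain, the two formulas from this article can be combined. This is a rough sketch under our own assumptions, not the simulator's algorithm: the reverse incremental full reflects the data on day N, each earlier reverse increment is D x Future Used Data of the day it represents, and compression is ignored. The function names and inputs (14 points, D = 10%) are hypothetical.

```python
def future_used_data(used_size_gb, annual_growth, day_n):
    """Future Used Data = Used Size x (1 + growth) ^ (Day N / 365)."""
    return used_size_gb * (1 + annual_growth) ** (day_n / 365)


def reverse_incremental_estimate(used_size_gb, annual_growth, day_n, restore_points, change_rate):
    """Rough repository estimate on day N for a reverse incremental chain (no compression).

    Assumptions: the full holds the data of day N; the increment for day N - k
    holds change_rate x Future Used Data of that day.
    """
    full = future_used_data(used_size_gb, annual_growth, day_n)
    increments = [
        change_rate * future_used_data(used_size_gb, annual_growth, day_n - k)
        for k in range(1, restore_points)
    ]
    return full + sum(increments)


with_growth = reverse_incremental_estimate(1000, 0.10, 1095, 14, 0.10)
without_growth = reverse_incremental_estimate(1000, 0.0, 1095, 14, 0.10)
print(f"with growth: {with_growth:.0f} GB, without growth: {without_growth:.0f} GB")
```

Because older increments are computed from smaller Future Used Data values, each of them is slightly smaller than the newest one, which is the effect described above.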

For standard backup jobs the difference is small, but if you calculate, for example, a Backup Copy Job with a GFS retention policy, you will see a more significant increase.

Finally, a few words about Quick Presets: these are the typical scenarios most often used for calculations. For example, the Incremental Monthly Active Full preset calculates everything exactly as if you had selected Incremental in the Style list and checked all 12 months under Active Full Monthly. That's all.

Thanks for attention!

PS: What else to read

Article on Habré about backup methods

Article on Habré about testing the performance of storage systems for backups

Source: https://habr.com/ru/post/267743/
