How we built Conversation: from a hackathon prototype to a Yandex application

Yandex recently released an experimental application, Conversation, which helps deaf and hard-of-hearing people communicate. The International Week of the Deaf is under way, and we decided it was a good occasion to talk about the application: why we built it and how Yandex came to support our idea. We will also cover how working on a hackathon prototype differs from releasing a full-fledged product.

Last fall, at the Moscow Institute of Physics and Technology, where I studied, we were taught the course “Creating New Internet Products” at the basic Yandex department . He was conceived as a kind of start-up workshop, in the framework of which it was necessary to come up with something that would successfully solve the existing problem with the help of Yandex technologies. With several of my classmates, we thought that communication of people who were turned off from the usual voice communication with the rest of the hearing world was a task that fits such criteria. According to the World Health Organization, 10% of the inhabitants of the Earth have hearing problems, 1.5-2% of them suffer from serious violations. There are 2.2 million of them in Russia. It would be great to do something that could help these people in everyday life.

In the process, we had the idea to recognize sign language using computer vision. But we quickly realized how difficult this task was for a group of fourth-year students. And then they remembered speech recognition and SpeechKit . So implementation of the idea has changed, but the essence has been preserved. We decided to create a mobile application that would recognize the interlocutor's speech, display it on the screen and give the user the opportunity to type an answer.

')

In addition, we suspected that the deaf and hearing impaired had a big problem with hails, so we came up with the following feature: the user clogs, for example, his name or any other call, and the phone vibrates when they are pronounced nearby.

Having decided on the idea, we realized that we know practically nothing about the world of the deaf. Before creating a prototype, it was necessary to dive into the subject area, understand how people with hearing loss need a software solution to their problem, and how this solution may look like. To do this, we communicated with several sign language interpreters and deaf people, conducted in-depth interviews, learned how the mentality and life of the deaf were arranged. It turned out that it was not at all difficult to find a person who was ready to talk with you live to help you plunge into the world of the deaf.

After a little research, we formed the vision of our product and started creating it. The teachers organized a hackathon for us in Yandex, where everything was according to the canons - pizza, redbull, ottomans, and nightly continuous non-stop coding. Before the hackathon, the guys from SpeechKit came to us and made a small brief on the API. At 7 am, we showed a prototype on Android to our teachers in the department, Sonya Terpugova and Denis Popovtsev. My classmate put on headphones, unscrewed them to the maximum, and we tried to talk. At this point, it became clear: it seems that something happened to us. (I want to say thank you to all the guys with whom we worked on the prototype - Antonina Parshina, Andrey Osipov, Nikita Kireev and Alexander Kuzmin.)

Naturally, we had an unhealthy desire to test the application on its target audience. I quickly found a group of deaf and hearing impaired students from MSTU. Bauman, who were engaged in studying English learning. We were asked to come to their class to talk about the application - they happily agreed. And here we are in a small office in front of 10 deaf and hearing impaired children and tell them about our prototype. Speech recognition was far from ideal: around was quite noisy, a lot of people. But even with this in mind, feedback was enthusiastic, because the dialogue was still possible to hold. The guys vying began to throw ideas for development, to notice shortcomings, to demand a version for iOS. Here we understood that the project must have a development.

Then the winter session began. It was necessary to pass tests and exams, and the students had no time for projects. However, Sonya Terpugova, our teacher from the department, did not waste time in vain. She showed a prototype in Yandex, told about the feedback he received. So the project continued to develop already inside Yandex.

In February, I was offered an interview for the role of an application manager for the deaf and hearing impaired. Of course, I was very happy: the opportunity to continue working on the project already inside the company seemed very tempting. Interview, a few weeks of waiting - and I'm already working as a PM in Yandex. All the guys with whom we came up with the application, were glad that the project was developed.

In May, we started working on the project and the first month was devoted to a deeper immersion in the subject area. It was necessary to analyze what mobile applications, devices and services are for deaf and hearing impaired people. It seemed to me that recently in the IT sphere, they began to pay increased attention to people with disabilities, so there were a lot of projects.

All existing solutions that we found recognize and reflect the speech of the interlocutor. But, as a rule, they do not have a very good interface. The artisanal and multiplicity of such applications suggests that the problem is already being tried technically, but there is no systematic approach and driving force in this direction.

There are still announced applications. These are full-fledged startups with a large team working only on this project. The funds they receive through crowdfunding platforms, and gaining the necessary amount quickly enough. This confirms the need to create an assistant for the deaf: it is important for people to solve their problem, they are even willing to pay for it.

As a result of this analysis, it became clear that the problem is really sick and has already been noticed by many. We set out to create the highest quality and affordable solution for the deaf and hearing impaired based on speech recognition technology.

To regularly communicate with our future users, immerse yourself in their lives and problems, we created a VKontakte group . Being engaged in the project, people began to notice deaf people everywhere - in the subway and electric trains, at the ticket offices in Auchan - and specially for such situations they prepared cards with an invitation to our group in VK. After each such meeting, they involuntarily looked at the list of new participants - I really wanted to see the familiar faces of those who came to the group by our direct invitation.

Pretty quickly, we recruited an initiative group of guys who were ready, at our request, to come to the interview, and to usability testing, and answer questions. Moreover, they themselves gave us advice, threw off important contacts - in general, they helped in every way to create the project. I would like to say a special thank you to Evgenia Khusnutdinova, who in the ROOI Perspektiva is engaged in the employment of the deaf and hard of hearing, and Denis Kuleshov, head of the laboratory of the GUIMC MGTU. Baumana - the largest center for working with people with hearing loss among Russian universities. The guys gave valuable information and introduced a lot of the deaf and hearing impaired, with whom we did hard work.

We invited people to the office, conducted in-depth interviews, showed a prototype. We asked to talk with us through the phone and watched what was convenient or inconvenient for us and the user. We saw a lot of mistakes in the original interface, when they began to use Talk in real conditions.

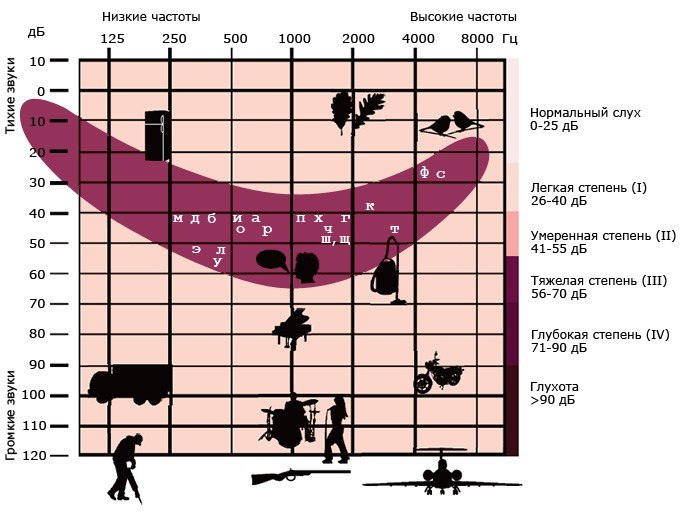

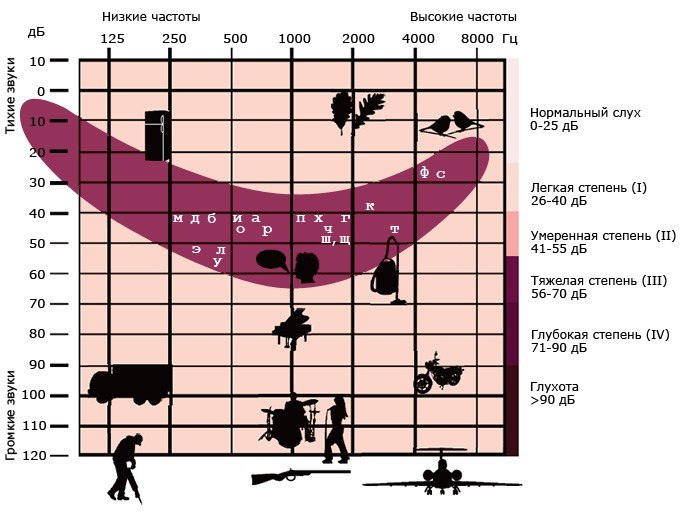

During this time we learned a lot of important things. For example, that there are four degrees of hearing loss. The first two degrees, as a rule, manifest themselves in old age, may be in any of us, but this is not always possible to understand. In the community of people with hearing loss, two categories are completely deaf (grade 4) and hard of hearing (grade 3–4).

Partially hearing people mostly read their lips, and completely deaf people are explained by gestures. Since most people do not know the sign language, the hearing-impaired communicate mainly within their small but very friendly company. Communication with people from public services or even healthcare is a forced and uncomfortable measure. The greater the degree of deafness, the less the degree of socialization of a person, and the less he receives information. At the same time, it spreads very quickly within the deaf community.

We wanted to help all these people. They have common problems - understanding complex terms, names, addresses. But at the same time, the most urgent problem for partially hearing people is telephone conversations and meetings, and for the deaf it is most important to at least somehow understand the interlocutor and be understood.

Somewhere after 15–20 interviews, we realized that many problems and use cases recur — this was a signal that we felt pain points. There were many unexpected findings from the study:

Having processed all the information received, they began to think about the implementation.

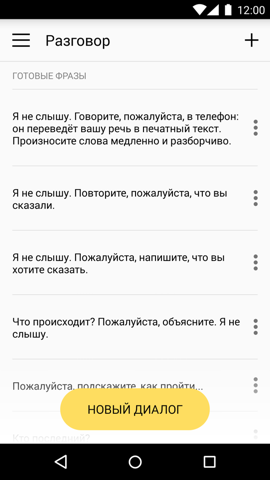

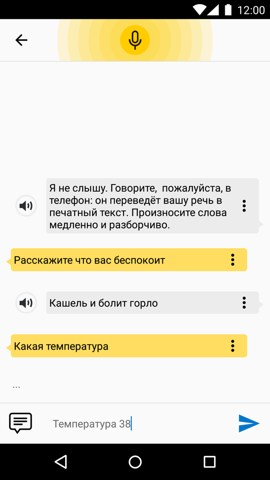

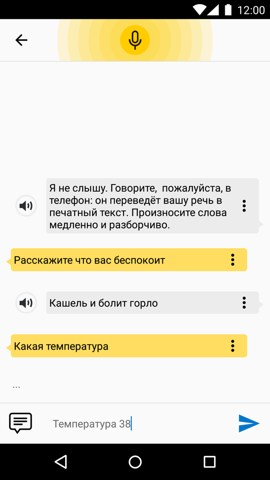

The basic functionality of the application remains the same - it is the speech recognition of the interlocutor and the text output on the screen. A person with a hearing impairment can type text and transmit his answer using speech synthesis or by increasing the phrase to full screen.

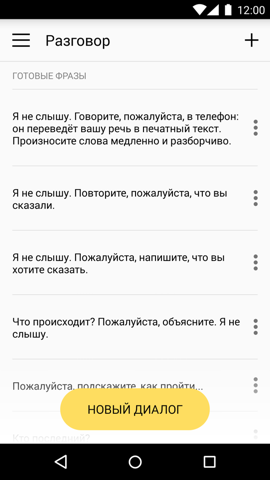

To make it easier to dialogue, in the application there are prepared phrases. The user can add and their blanks that they may need.

The whole history of communication is stored on the phone, and the dialogues can be continued, which can be convenient for frequently communicating interlocutors. We also added a phrase highlighting function: an important message or dialogue can be noted to make it easier to find when needed.

For speech recognition, we use our native technology, of which we are very proud. SpeechKit is able to recognize voice well and synthesize speech, which was required in our application. In our case, it was decided to use the Notes language model - for free dictation of short texts, SMS and notes, which is closest to spontaneous speech.

SpeechKit developers have achieved speech recognition in 82%. The stream recognition mode with intermediate results turned out to be very useful (as soon as a person starts speaking, his speech is immediately transferred to the recognition service in small parts), which made communication using the application very fast. The important thing is that we used the open-source SpeechKit API as completely third-party developers. Download it from the site, read the official documentation - no additional help was needed. The experience of creating a prototype in the framework of the hackathon suggested that making a mobile application using SpeechKit was extremely simple and effective.

When choosing a platform, we decided to orient ourselves on Android, because according to our statistics, this OS is for most people with hearing loss. An interesting fact: mostly deaf geeks - they are very active in using smartphones and mobile Internet. Many of them do not think of life without unlimited access to the Internet through the phone. We bet on it, because SpeechKit so far works only with an internet connection.

Having formed a technical task for the product, we began to develop.

Work on the UX was carried out as follows. First, we wrote out what usage scenarios our application will have. For example, an unexpected conversation on the street or a conversation of the deaf who speaks ill, with a doctor. Then they tried to describe it with words, they began to sketch mokapy of screens in a very schematic, managerial way. With such a clickable prototype, it was already easier to think out the details.

As ideas in the interface, we threw examples from existing applications. For example, for the start screen were inspired by Shazam, but then everything was redone. With a simple prototype and ideas on the interface, we came to a UX-specialist and already thought out the logic of the screens with him. The result was a clickable prototype with all the buttons and details. Further, the UI-designer set to work, adding beauty and animation to our schematic prototype.

Now the application can solve only one-to-one communication problems and will be useful for people who have completely lost their hearing. While the user needs to take into account some of the conditions under which the conversation works as well as possible.

• Dialogue should be conducted in a low-noise environment.

• Recognition works with only one speaker.

• Speak slowly and clearly.

• Use simple sentence design.

We are heavily dependent on technology, so we focus on the future: technology does not stand still, with their development and the quality of our application will improve. In addition to improving personal communication, we have a lot of ideas for the next releases - we will try to implement them. After the first release, we still have a large stack of problems, the solution of which we plan to please our users in the near future. Tomorrow the application will be updated and its recognition will improve.

We continue to lead discussions in our VKontakte group, arrange surveys on items of interest to us, do not forget to answer questions. Some participants are very enterprising - they watch over the project. For example, one of them, on his own initiative, made a video in sign language about us and uploaded on his channel on YouTube. Then the application also had the working title “Dialogue”.

It turned out that he is a well-known video blogger among people with hearing loss - thanks to the mention on his channel many people came to our group.

Since deaf and hearing impaired people are mostly visuals, we decided to shoot a video for them, where we were going to tell why we are making the application and how it works. And again it was absolutely not difficult for us to find three people who were ready to play in it - they were eager to help the application. It was very nice to feel such support. Thanks to her, the belief that we are doing the right thing is incredibly strengthened.

One of the main attributes of the mobile application (after the content, of course) is considered the icon and title. In our case, they had to be not only flashy, beautiful and as informative as possible. It was also very important to make it so that people with hearing loss could understand that this application is for them. There were many options for icons: from the sound wave and the babbles in the messengers to the image of different gesture words.

As a result, we stopped at the gesture "Say". So that there was no misinterpretation, they showed each version to the deaf and asked what they saw. In this case, the gesture is similar to the “OK” sign.

With the title was a real epic. It seemed that almost all our friends, relatives, guys from the deaf initiative group were drawn into the process of inventing a name. There were many options: from the Latin "Surdo" to neutral "speech." We stopped in the end on “Conversation”, because it most fully reflects the meaning of the application, which is the missing link for communication between the hearing and the person with hearing loss. Many thanks to everyone who helped us work on the Talk. If you have any questions and suggestions, please contact us at deaf-support@yandex-team.ru or leave them in the comments.

Last fall at the Moscow Institute of Physics and Technology, where I was studying, Yandex's base department taught a course called “Creating New Internet Products”. It was conceived as a kind of startup workshop: we had to come up with something that would solve a real problem using Yandex technologies. Several classmates and I decided that communication for people cut off from ordinary spoken conversation with the hearing world fit those criteria. According to the World Health Organization, 10% of the world's population has hearing problems, and 1.5-2% of them have serious impairments. There are 2.2 million such people in Russia. It would be great to build something that could help them in everyday life.

Along the way we had the idea of recognizing sign language with computer vision, but we quickly realized how hard that task would be for a group of fourth-year students. Then we remembered speech recognition and SpeechKit. The implementation changed, but the essence of the idea stayed the same: we decided to build a mobile application that would recognize the other person's speech, display it on the screen and let the user type a reply.

We also suspected that deaf and hard-of-hearing people have trouble noticing when someone calls out to them, so we came up with the following feature: the user enters their name or any other form of address, and the phone vibrates when it is spoken nearby.
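
The call-out idea can be illustrated with a small sketch. It is not the prototype's actual code: it assumes the recognizer already delivers plain-text transcripts, and the names `CallWatcher`, `feed` and `on_call` are hypothetical. The callback stands in for whatever would trigger the phone's vibration.

```python
import re
from typing import Callable, Iterable, List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into word tokens (Unicode-aware)."""
    return re.findall(r"\w+", text.lower())


class CallWatcher:
    """Watches recognized speech for words the user wants to be alerted to.

    Hypothetical helper: `on_call` stands in for the vibration trigger.
    """

    def __init__(self, call_words: Iterable[str], on_call: Callable[[str], None]) -> None:
        self.call_words = {word.lower() for word in call_words}
        self.on_call = on_call

    def feed(self, transcript: str) -> bool:
        """Check one recognized phrase; fire the callback on the first match."""
        for token in tokenize(transcript):
            if token in self.call_words:
                self.on_call(token)
                return True
        return False


if __name__ == "__main__":
    watcher = CallWatcher(["Masha", "miss"], lambda word: print("vibrate:", word))
    watcher.feed("Excuse me, Masha, is this seat free?")
```

Exact word matching keeps the sketch short; a real implementation would likely need to tolerate recognition errors and inflected forms of a name.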

Having settled on the idea, we realized we knew practically nothing about the world of the deaf. Before building a prototype we had to dive into the subject area, understand how much people with hearing loss need a software solution to their problem, and work out what that solution might look like. To do this we talked with several sign language interpreters and deaf people, conducted in-depth interviews, and learned how deaf people think and live. It turned out to be not at all difficult to find someone willing to talk in person and help us immerse ourselves in the world of the deaf.

After this small study we formed a vision of the product and started building it. Our teachers organized a hackathon for us at Yandex, and everything went by the book: pizza, Red Bull, beanbag chairs and non-stop coding through the night. Before the hackathon, the SpeechKit team came over and gave us a short briefing on the API. At 7 am we showed an Android prototype to our teachers from the department, Sonya Terpugova and Denis Popovtsev. My classmate put on headphones, turned the volume up to the maximum, and we tried to talk. At that point it became clear that we seemed to have pulled it off. (I want to thank everyone we worked with on the prototype: Antonina Parshina, Andrey Osipov, Nikita Kireev and Alexander Kuzmin.)

Naturally, we had a burning desire to test the application on its target audience. I quickly found a group of deaf and hard-of-hearing students from Bauman MSTU who were studying English. We asked to come to their class and talk about the application, and they happily agreed. So there we were in a small classroom in front of 10 deaf and hard-of-hearing students, telling them about our prototype. Speech recognition was far from ideal: the room was quite noisy and full of people. Even so, the feedback was enthusiastic, because it was still possible to hold a dialogue. The students vied with each other to suggest ideas for development, point out shortcomings and demand an iOS version. That was when we understood the project had to continue.

Continuing the project at Yandex

Then the winter exam session began. We had tests and exams to pass, and as students we had no time for side projects. However, Sonya Terpugova, our teacher from the department, did not waste any time. She showed the prototype at Yandex and described the feedback it had received. That is how the project continued to develop, now inside Yandex.

In February I was invited to interview for the role of manager of an application for deaf and hard-of-hearing people. Of course I was very happy: the chance to keep working on the project inside the company was very tempting. An interview, a few weeks of waiting, and I was working as a PM at Yandex. Everyone who had come up with the application with me was glad the project was moving forward.

In May we started work on the project, and the first month was devoted to a deeper immersion in the subject area. We needed to analyze which mobile applications, devices and services exist for deaf and hard-of-hearing people. It seemed to me that the IT industry had recently started paying more attention to people with disabilities, so there turned out to be quite a few projects.

All the existing solutions we found recognize the other person's speech and display it. As a rule, though, their interfaces are not very good. The homemade quality and sheer number of such applications suggest that people are already trying to solve the problem technically, but that the field lacks a systematic approach and a driving force.

There are also applications that have been announced but not yet released. These are full-fledged startups with large teams working on nothing else. They raise funds through crowdfunding platforms and reach the required amount fairly quickly. This confirms the need for an assistant for the deaf: solving this problem matters to people, and they are even willing to pay for it.

The analysis made it clear that the problem is a truly painful one and has already been noticed by many. We set out to create the highest-quality and most accessible solution for deaf and hard-of-hearing people based on speech recognition technology.

Working with and learning about deaf and hard-of-hearing people

To stay in regular contact with our future users and immerse ourselves in their lives and problems, we created a VKontakte group. Once we were absorbed in the project, we began noticing deaf people everywhere - in the subway, on commuter trains, at the checkouts in Auchan - and prepared cards with an invitation to our VK group specially for such encounters. After each encounter we could not help checking the list of new members: we really wanted to see the familiar faces of those who had joined through our direct invitation.

Fairly quickly we gathered an initiative group of people who were ready, at our request, to come in for interviews and usability tests and to answer questions. They also gave us advice on their own initiative and shared important contacts; in general, they helped build the project in every way they could. I would like to say a special thank you to Evgenia Khusnutdinova, who works on employment for deaf and hard-of-hearing people at ROOI Perspektiva, and to Denis Kuleshov, head of a laboratory at GUIMC, Bauman MSTU, the largest center among Russian universities for working with people with hearing loss. They gave us valuable information and introduced us to many deaf and hard-of-hearing people, with whom we went on to do a great deal of work.

We invited people to the office, conducted in-depth interviews and showed them the prototype. We asked them to talk with us through the phone and watched what was convenient or inconvenient for both us and the user. Once people started using Conversation in real conditions, we saw many mistakes in the original interface.

During this time we learned many important things. For example, there are four degrees of hearing loss. The first two usually appear in old age and can affect any of us, though it is not always easy to notice. Within the community of people with hearing loss there are two categories: completely deaf (degree 4) and hard of hearing (degree 3–4).

Hard-of-hearing people mostly read lips, while completely deaf people communicate in sign language. Since most people do not know sign language, people with hearing loss communicate mainly within their own small but very close-knit circle. Dealing with public services or even healthcare workers is a forced and uncomfortable necessity. The greater the degree of deafness, the less socialized a person is and the less information they receive. At the same time, information spreads very quickly within the deaf community.

We wanted to help all of these people. They share common problems: understanding complex terms, names and addresses. But the most pressing problem for hard-of-hearing people is phone calls and meetings, while for deaf people the most important thing is simply to understand the other person and be understood, in whatever way possible.

After some 15–20 interviews we noticed that many problems and use cases kept recurring, which was a signal that we had found the pain points. The study produced many unexpected findings:

- Navigating the city and public places is not such an acute problem.

- Recognizing the deaf person's name (the hypothesis we implemented in the hackathon prototype) is not relevant either: people hear their own names so often from childhood that they can pick them out. For the same reason, some deaf people can understand their mothers a little.

- Almost all interviewees wear hearing aids. Even when the aids do not help them understand speech, they serve a safety purpose.

- When a hearing person and a deaf person communicate, it is mainly the hearing person who suffers! Deaf people are used to such communication, but hearing people are not. Unfortunately, they shout, get angry and so on.

Having processed all this information, we began to think about the implementation.

Shaping the ideas. SpeechKit

The basic functionality of the application remains the same: it recognizes the other person's speech and displays the text on the screen. A person with a hearing impairment can type a reply and convey it either through speech synthesis or by enlarging the phrase to full screen.

To make dialogue easier, the application includes prepared phrases. Users can also add their own phrases that they may need.

The entire conversation history is stored on the phone, and dialogues can be resumed, which is convenient for people who talk to each other often. We also added a way to mark phrases: an important message or dialogue can be flagged to make it easier to find later.
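
One way to picture the features just described (stored dialogues, flagged messages, prepared phrases) is the small data model below. It is only an illustrative sketch: the class and field names are hypothetical, the default phrases are invented examples, and the real application's storage format is not described in this article.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Message:
    author: str            # "partner" (recognized speech) or "user" (typed reply)
    text: str
    starred: bool = False  # flagged as important so it is easy to find later


@dataclass
class Dialogue:
    title: str
    messages: List[Message] = field(default_factory=list)

    def add(self, author: str, text: str) -> Message:
        """Append a message, so a stored dialogue can be resumed at any time."""
        message = Message(author, text)
        self.messages.append(message)
        return message

    def starred_messages(self) -> List[Message]:
        """Return only the messages the user flagged."""
        return [m for m in self.messages if m.starred]


@dataclass
class PhraseBook:
    # Invented sample phrases; the application ships its own set.
    phrases: List[str] = field(
        default_factory=lambda: ["Hello!", "Please speak slowly.", "Thank you."]
    )

    def add(self, phrase: str) -> None:
        """Add a user-defined phrase, skipping exact duplicates."""
        if phrase not in self.phrases:
            self.phrases.append(phrase)


if __name__ == "__main__":
    visit = Dialogue("Doctor")
    visit.add("partner", "Take one tablet twice a day").starred = True
    visit.add("user", "Thank you")
    print([m.text for m in visit.starred_messages()])
```

Keeping the flag on the message itself means a flagged phrase stays attached to its dialogue, which matches the idea of returning to an old conversation and finding the important part quickly.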

For speech recognition we use our own in-house technology, of which we are very proud. SpeechKit recognizes voice well and can synthesize speech, both of which our application needed. In our case we decided to use the Notes language model, intended for free dictation of short texts, SMS messages and notes, which is the closest to spontaneous speech.

The SpeechKit developers have achieved a speech recognition accuracy of 82%. The streaming recognition mode with intermediate results proved very useful (as soon as a person starts speaking, the speech is sent to the recognition service in small chunks), and it made communication through the application very fast. Importantly, we used the public SpeechKit API just as third-party developers would: we downloaded it from the website and read the official documentation, and no additional help was needed. Our experience building the hackathon prototype had already suggested that making a mobile application with SpeechKit is extremely simple and effective.
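
To show why intermediate results make the conversation feel fast, here is a minimal sketch of the display side. It does not use the SpeechKit API: it assumes only that the recognizer reports each hypothesis as a text plus a flag saying whether it is final, and the `LiveTranscript` class and its method names are hypothetical.

```python
from typing import List


class LiveTranscript:
    """Keeps the on-screen text in sync with streaming recognition results."""

    def __init__(self) -> None:
        self.committed: List[str] = []  # phrases the recognizer has finalized
        self.pending: str = ""          # latest intermediate hypothesis

    def on_result(self, text: str, is_final: bool) -> None:
        """Handle one recognition result (assumed event shape: text + flag)."""
        if is_final:
            self.committed.append(text)
            self.pending = ""
        else:
            # Each intermediate hypothesis replaces the previous one.
            self.pending = text

    def render(self) -> str:
        """Text to show right now: finalized phrases plus the current guess."""
        parts = self.committed + ([self.pending] if self.pending else [])
        return " ".join(parts)


if __name__ == "__main__":
    screen = LiveTranscript()
    for text, final in [("how", False), ("how are", False), ("How are you?", True)]:
        screen.on_result(text, final)
        print(screen.render())
```

The reader sees words appear while the other person is still speaking, and the text is simply corrected in place when the final result arrives.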

When choosing a platform we decided to focus on Android, because according to our statistics most people with hearing loss use this OS. An interesting fact: deaf people are mostly geeks who use smartphones and mobile internet very actively. Many of them cannot imagine life without unlimited internet access on their phone. We are counting on that, because SpeechKit so far works only with an internet connection.

Having drawn up the technical specification for the product, we began development.

Application development

Work on the UX went as follows. First we wrote out the usage scenarios for the application, for example an unexpected conversation on the street, or a conversation between a doctor and a deaf person whose speech is hard to understand. Then we tried to describe them in words and began sketching screen mockups in a very schematic, manager-style way. With such a clickable prototype in hand it was already easier to think through the details.

As interface ideas we collected examples from existing applications. The start screen, for instance, was inspired by Shazam, though it was later completely redone. With a simple prototype and interface ideas we went to a UX specialist and worked out the screen logic together. The result was a clickable prototype with all the buttons and details. After that the UI designer got to work, adding polish and animation to our schematic prototype.

For now the application solves only one-to-one communication problems and will be useful to people who have completely lost their hearing. For the time being the user also needs to keep in mind a few conditions under which the conversation goes as well as possible.

• Dialogue should be conducted in a low-noise environment.

• Recognition works with only one speaker.

• Speak slowly and clearly.

• Use simple sentence structures.

We depend heavily on the technology, so we are looking to the future: technology does not stand still, and as it develops, the quality of our application will improve too. Beyond improving face-to-face communication, we have many ideas for the next releases and will try to implement them. After the first release we still have a large backlog of problems, and we plan to delight our users with solutions to them in the near future. Tomorrow the application will be updated and its recognition will improve.

We continue to run discussions in our VKontakte group, hold polls on topics that interest us, and make sure to answer questions. Some members are very proactive and root for the project. One of them, for example, made a sign language video about us on his own initiative and uploaded it to his YouTube channel. At that time the application still had the working title “Dialogue”.

It turned out that he is a well-known video blogger among people with hearing loss, and thanks to the mention on his channel many people joined our group.

Since deaf and hard-of-hearing people are mostly visual thinkers, we decided to shoot a video for them explaining why we are making the application and how it works. Once again it was not at all difficult to find three people willing to appear in it; they were eager to help the application. It was very nice to feel such support. Thanks to it, our belief that we are doing the right thing has grown incredibly strong.

The icon and the name are considered among the main attributes of a mobile application (after the content, of course). In our case they had to be not only eye-catching, attractive and as informative as possible: it was also very important that people with hearing loss understand the application is meant for them. There were many icon options, from a sound wave and messenger-style speech bubbles to images of various sign language words.

In the end we settled on the sign for “Say”. To avoid misinterpretation, we showed each version to deaf people and asked what they saw. In this case the sign resembles the “OK” gesture.

The name was a real saga. It seemed that nearly all our friends, relatives and members of the deaf initiative group were drawn into inventing it. There were many options, from the Latin “Surdo” to the neutral “Speech”. In the end we settled on “Conversation”, because it most fully reflects the purpose of the application, which serves as the missing link in communication between a hearing person and a person with hearing loss. Many thanks to everyone who helped us work on Conversation. If you have questions or suggestions, write to us at deaf-support@yandex-team.ru or leave them in the comments.

Source: https://habr.com/ru/post/267679/
