How we built a 3D people-scanning system using Intel RealSense 3D cameras and Intel Edison technology

Cappasity has been developing 3D scanning technologies for two years now. This year we are launching Cappasity Easy 3D Scan, scanning software for ultrabooks and tablets equipped with an Intel RealSense camera, and next year we plan to release hardware and software solutions for scanning people and objects.

Because I am an Intel Software Innovator, and thanks to the Intel team that runs the program, we were invited to show our people-scanning prototype much earlier than we had planned. Even though there was very little time to prepare, we decided to take the risk. In this article I describe how we built our demo for the Intel Developer Forum 2015, held in San Francisco on August 18-20.

Our demo is based on technology we developed earlier for combining depth cameras and RGB cameras into a single scanning rig (US patent pending). The general principle is as follows: we calibrate the positions, tilt angles, and optical parameters of the cameras, which lets us merge their data for the subsequent reconstruction of the 3D model. To capture an object in 3D, we can place the cameras around it, rotate the camera system around it, or rotate the object in front of the cameras.
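To make "combining calibrated cameras" concrete, here is a minimal sketch assuming a standard pinhole model: a depth pixel is back-projected with the camera's intrinsics (`fx`, `fy`, `cx`, `cy`) and then moved into a shared world frame with that camera's calibrated extrinsic pose (`R`, `t`). The class names and all numeric values are illustrative assumptions, not Cappasity's calibration format.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Pinhole parameters (illustrative): focal lengths and principal point in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


@dataclass
class Pose:
    """Calibrated camera-to-world transform: p_world = R @ p_cam + t."""
    R: List[List[float]]  # 3x3 rotation matrix, row-major
    t: Vec3


def deproject(u: float, v: float, depth: float, k: Intrinsics) -> Vec3:
    """Back-project pixel (u, v) with metric depth into camera coordinates."""
    return ((u - k.cx) * depth / k.fx, (v - k.cy) * depth / k.fy, depth)


def to_world(p: Vec3, pose: Pose) -> Vec3:
    """Move a camera-space point into the shared frame used for reconstruction."""
    return tuple(
        sum(pose.R[i][j] * p[j] for j in range(3)) + pose.t[i] for i in range(3)
    )


# Hypothetical values: 600 px focal length, 640x480 image, camera shifted 1 m along world y
k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
pose = Pose(R=[[1, 0, 0], [0, 1, 0], [0, 0, 1]], t=(0.0, 1.0, 0.0))
print(to_world(deproject(320.0, 240.0, 2.0, k), pose))  # (0.0, 1.0, 2.0)
```

Once every camera has its own `Intrinsics` and `Pose`, points from all of them land in one coordinate system, which is what makes the later fusion step possible.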


We chose Intel RealSense 3D cameras because, in our view, they offer the best price-to-quality ratio. We are currently developing prototypes of two systems built on multiple Intel RealSense 3D cameras: a scanning box with several 3D cameras for instant object scanning, and a system for scanning people at full height.

We showed both prototypes at IDF 2015, and the people-scanning prototype successfully handled a steady stream of visitors to our booth over the three days of the conference.

Now let's look at how it all works. We mounted three Intel RealSense long-range cameras on a vertical bar: the lowest camera captures the lower legs, including the feet; the middle one captures the legs and most of the torso; and the top one captures the head and shoulders.
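Whether three cameras cover a standing person without gaps depends on each camera's vertical field of view and its distance to the subject. The sketch below works through that arithmetic under a simple pinhole model with horizontally aimed cameras. The 45-degree field of view, the 1 m distance, and the mounting heights are hypothetical placeholders, not R200 specifications or the actual rig geometry.

```python
import math


def vertical_span(distance_m: float, vfov_deg: float) -> float:
    """Height a horizontally aimed camera sees at a given distance (pinhole model)."""
    return 2.0 * distance_m * math.tan(math.radians(vfov_deg) / 2.0)


def coverage(mount_heights, distance_m, vfov_deg):
    """(bottom, top) vertical interval seen by each camera at the subject's distance."""
    half = vertical_span(distance_m, vfov_deg) / 2.0
    return [(h - half, h + half) for h in mount_heights]


def covers(intervals, lo, hi):
    """True if the union of intervals covers [lo, hi] with no vertical gap."""
    reach = lo
    for bottom, top in sorted(intervals):
        if bottom > reach:
            return False  # gap between the previous camera's view and this one
        reach = max(reach, top)
    return reach >= hi


# Hypothetical rig: cameras at 0.3 m, 0.95 m and 1.6 m, subject 1 m away, 45 degree vertical FOV
views = coverage([0.3, 0.95, 1.6], distance_m=1.0, vfov_deg=45.0)
print(covers(views, 0.0, 1.9))  # True
```

Moving the subject closer shrinks every interval, so with the same heights a gap appears near the floor. Some overlap between neighbouring views is also useful for calibrating the cameras against each other.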

Each camera was connected to a separate Intel NUC computer, and all computers were connected to a local network.

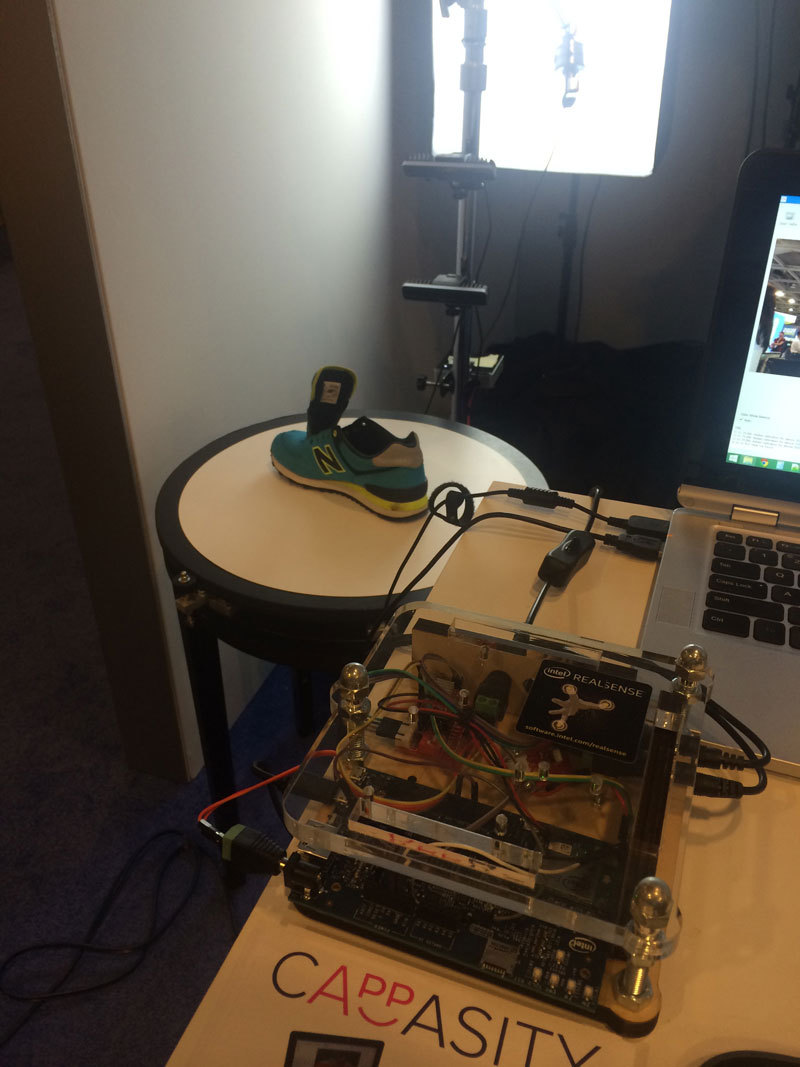

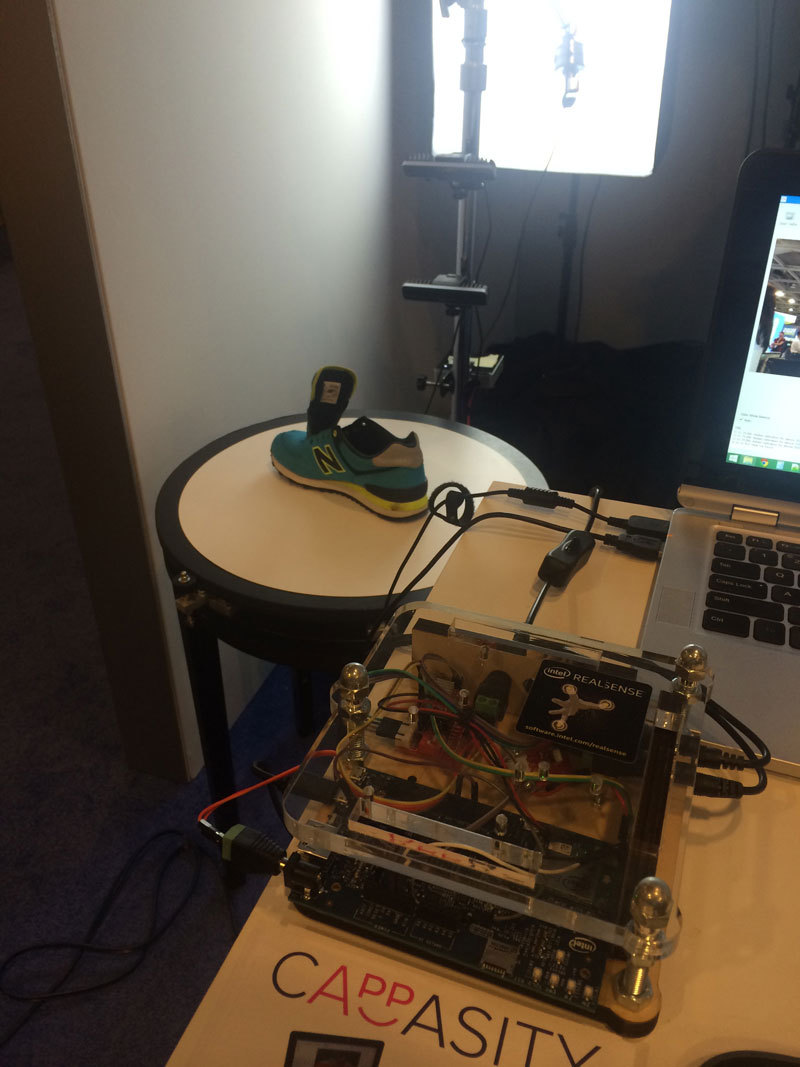

Because the cameras are fixed to a stationary bar, we rotate the person on a turntable. The turntable has a simple design: a plexiglass platform, roller bearings, and a stepper motor. It is controlled through an Intel Edison board, which is connected to a computer and receives commands over USB.
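The article does not describe the turntable's command protocol. Purely as an illustration, here is a hypothetical host-side helper that converts a requested rotation into stepper-motor steps and formats it as a text command for the Edison. The motor resolution (200 full steps per revolution), the 16x microstepping, the gear ratio, and the `ROT <steps>` syntax are all assumptions.

```python
def degrees_to_steps(angle_deg: float, steps_per_rev: int = 200,
                     microsteps: int = 16, gear_ratio: float = 1.0) -> int:
    """Convert a turntable rotation into driver (micro)steps.

    gear_ratio = motor revolutions per table revolution (1.0 = direct drive).
    """
    steps_per_table_rev = steps_per_rev * microsteps * gear_ratio
    return round(angle_deg / 360.0 * steps_per_table_rev)


def rotate_command(angle_deg: float, **motor) -> bytes:
    """Hypothetical newline-terminated text command for the Edison firmware."""
    return f"ROT {degrees_to_steps(angle_deg, **motor)}\n".encode("ascii")


print(rotate_command(90))                   # b'ROT 800\n'
print(rotate_command(360, gear_ratio=3.0))  # b'ROT 9600\n'
```

On the host, a serial library such as pySerial could write these bytes to the board's USB serial port. A line-based text format keeps the firmware-side parser trivial and easy to test by hand from a terminal.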

We also use a simple constant-lighting setup that evenly illuminates the front of the person. In the future, all of these components will be enclosed in a single housing, but what we demonstrated was an early prototype, so everything was assembled from off-the-shelf parts.

Our software has a client-server architecture, although the server can run on virtually any modern computer. We simply call the machine that does the computation the "server", and we often use an ordinary ultrabook with Intel HD Graphics in that role. The server tells the NUC computers to start recording, downloads the data from them, analyzes it, and reconstructs the 3D model.
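The article does not specify the message format between the server and the NUC clients. One plausible shape is a small set of newline-delimited JSON commands that the server sends to each client. The sketch below is hypothetical: the command names (`record`, `stop`, `upload`) and fields were invented for illustration.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional

VALID_COMMANDS = {"record", "stop", "upload"}  # hypothetical command set


@dataclass
class Command:
    name: str                           # one of VALID_COMMANDS
    session_id: str                     # ties the recordings of all cameras together
    duration_s: Optional[float] = None  # only meaningful for "record"


def encode(cmd: Command) -> bytes:
    """Serialize a command as one line of JSON (easy to frame on a TCP stream)."""
    if cmd.name not in VALID_COMMANDS:
        raise ValueError(f"unknown command: {cmd.name}")
    return (json.dumps(asdict(cmd)) + "\n").encode("utf-8")


def decode(line: bytes) -> Command:
    """Parse one JSON line received by a NUC client back into a Command."""
    cmd = Command(**json.loads(line))
    if cmd.name not in VALID_COMMANDS:
        raise ValueError(f"unknown command: {cmd.name}")
    return cmd


msg = encode(Command("record", session_id="scan-001", duration_s=20.0))
print(decode(msg))  # Command(name='record', session_id='scan-001', duration_s=20.0)
```

A shared `session_id` lets the server match the three camera recordings of one scan when it later downloads them.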

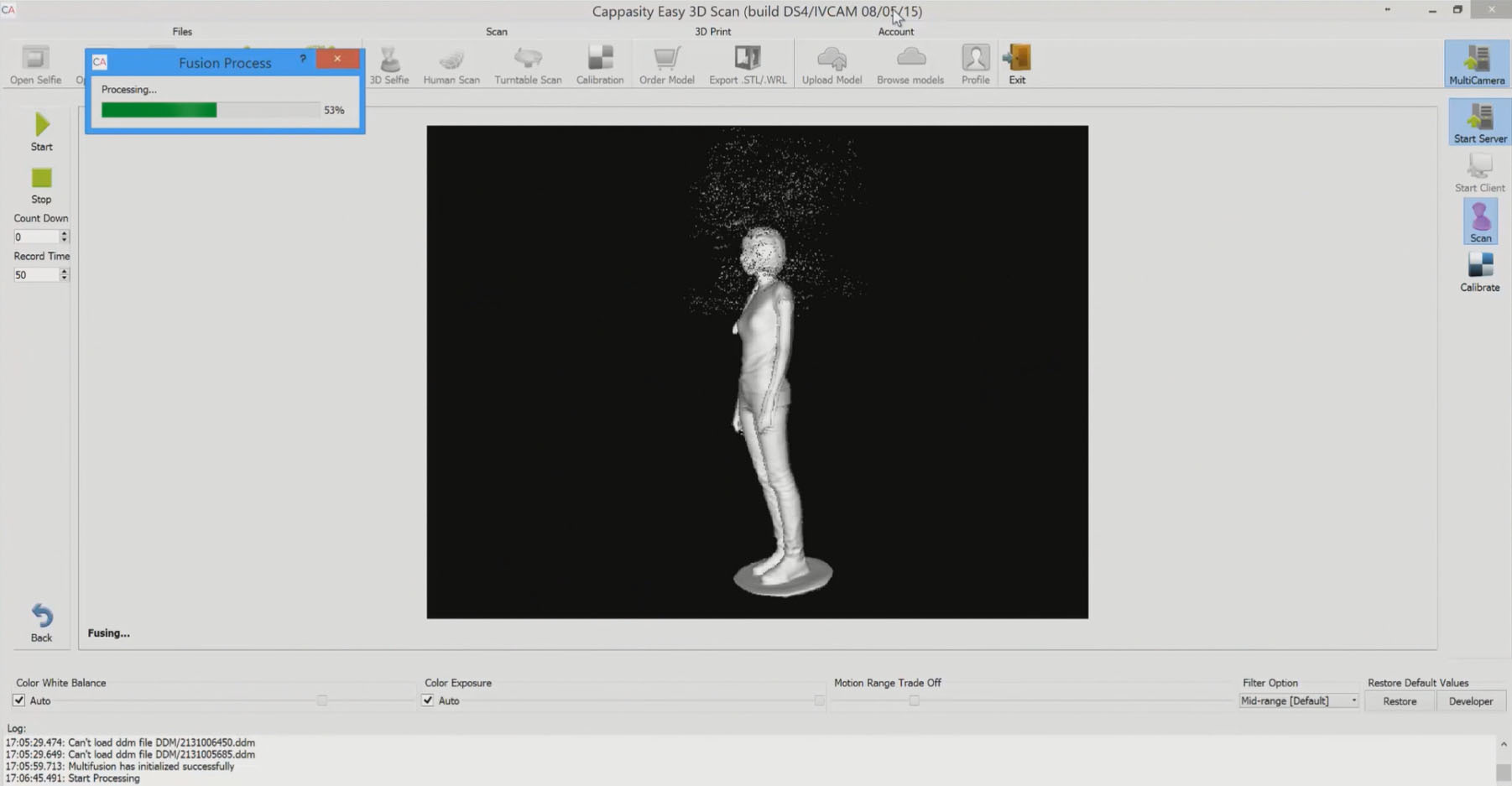

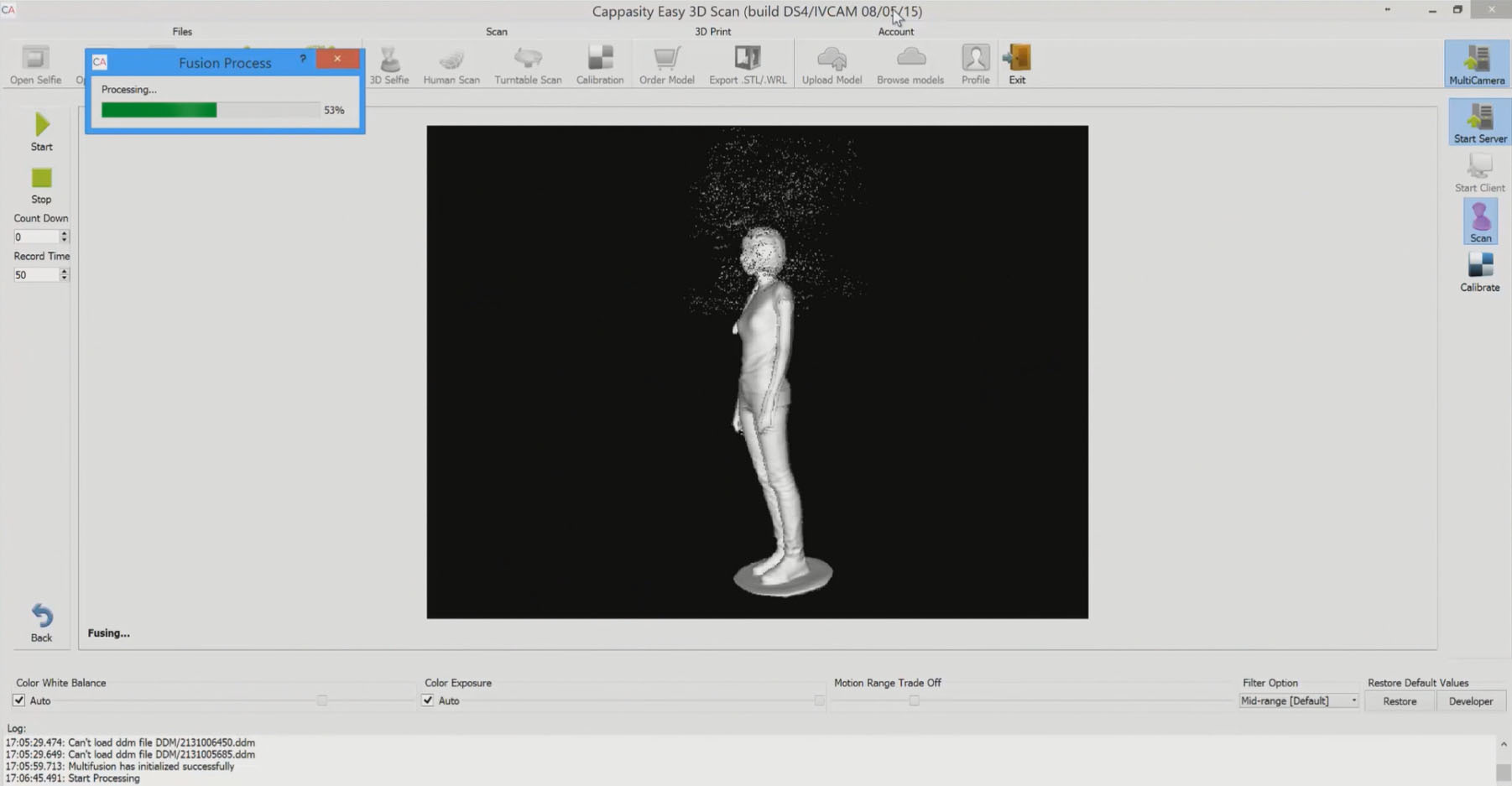

Now for the specifics of the problem. At the heart of the 3D reconstruction in Cappasity products is our own implementation of the Kinect Fusion algorithm. This task, however, was much harder: within a month we had to write an algorithm that could reconstruct data from several sources. We called it Multi-Fusion, and in its current implementation it can integrate data from any number of sources into a single voxel volume. For a body scan, we had three data sources.
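The article does not describe Multi-Fusion's internals. Still, the standard building block of KinectFusion-style reconstruction, a truncated signed distance function (TSDF) updated with a weighted running average, shows why any number of sources can feed one voxel volume. Each voxel update needs only the voxel's depth in some calibrated camera and the depth that camera measured there, so it does not matter which camera supplied the observation. This is a minimal sketch. The sparse dict storage, the 3 cm truncation distance, and the weight cap are assumptions; a real implementation works on a dense 3D grid on the GPU.

```python
class TSDFVolume:
    """Sparse voxel volume storing a truncated signed distance and a weight per voxel."""

    def __init__(self, truncation: float = 0.03, max_weight: float = 64.0):
        self.trunc = truncation        # metres; hypothetical value
        self.max_weight = max_weight   # caps confidence so the model can still adapt
        self.tsdf = {}                 # voxel index -> distance normalized to [-1, 1]
        self.weight = {}               # voxel index -> accumulated weight

    def integrate(self, voxel, voxel_depth, measured_depth, w=1.0):
        """Fuse one observation of `voxel` from any calibrated camera.

        voxel_depth:    depth of the voxel centre along that camera's viewing axis
        measured_depth: depth reported at the pixel the voxel projects to
        """
        sdf = measured_depth - voxel_depth
        if measured_depth <= 0 or sdf < -self.trunc:
            return  # no reading, or voxel lies far behind the observed surface
        d = min(1.0, sdf / self.trunc)
        W = self.weight.get(voxel, 0.0)
        D = self.tsdf.get(voxel, 0.0)
        self.tsdf[voxel] = (W * D + w * d) / (W + w)  # running weighted average
        self.weight[voxel] = min(W + w, self.max_weight)


vol = TSDFVolume()
# Two cameras see the same voxel: one puts it 1.5 cm in front of the surface,
# the other 1.5 cm behind it, so the fused value ends up close to 0 (on the surface).
vol.integrate((10, 20, 30), voxel_depth=1.000, measured_depth=1.015)
vol.integrate((10, 20, 30), voxel_depth=2.000, measured_depth=1.985)
print(vol.tsdf[(10, 20, 30)], vol.weight[(10, 20, 30)])
```

The surface is later extracted where the fused values cross zero, for example with marching cubes, which yields the single mesh mentioned further on.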

The first step is calibration. Cappasity software calibrates devices in pairs. We had previously spent a year on R&D, and that earlier work proved very helpful in the run-up to IDF 2015. Within a couple of weeks, we reworked the calibration to support the voxel volumes produced by Fusion; before that, calibration worked mainly with point clouds. Calibration needs to be done only once after the cameras are installed, and it takes no more than 5 minutes.
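When devices are calibrated in pairs, each camera's pose in one common reference frame can be recovered by chaining the pairwise transforms. The minimal sketch below uses 4x4 homogeneous matrices. It assumes a chain bottom, middle, top, where each pairwise calibration yields the transform from one camera's frame into its neighbour's. The offsets are hypothetical and translation-only, to keep the numbers readable; real pairwise results also contain rotations.

```python
from typing import List, Sequence, Tuple

Mat4 = List[List[float]]


def matmul(a: Mat4, b: Mat4) -> Mat4:
    """Product of two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Mat4:
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def chain_to_reference(pairwise: Sequence[Mat4]) -> List[Mat4]:
    """pairwise[i] maps points from camera i+1 into camera i.

    Returns every camera's pose in camera 0's frame (camera 0 itself is the identity).
    """
    poses = [translation(0, 0, 0)]
    for T in pairwise:
        poses.append(matmul(poses[-1], T))  # T_0,i+1 = T_0,i @ T_i,i+1
    return poses


def apply(T: Mat4, p: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Transform a 3D point by a homogeneous matrix."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


# Hypothetical pair results: each camera is offset 0.65 m from the next along y
poses = chain_to_reference([translation(0, 0.65, 0), translation(0, 0.65, 0)])
print(apply(poses[2], (0.0, 0.0, 1.0)))  # a point 1 m in front of the top camera
```

Chaining accumulates error from each pair, so a short chain anchored on the middle camera, or a joint refinement step, is a common way to limit drift.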

Next came the question of how to process the data. After a series of experiments, we chose post-processing: first we record data from all the cameras, then upload it over the network to the server, and only then reconstruct it sequentially. Both the color and the depth streams are recorded from each camera. As a result, we have a complete copy of the raw data to work with. This is extremely convenient because we keep improving our post-processing algorithms; in fact, we were still writing them in emergency mode in the last days before IDF.
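The record-first, reconstruct-later flow can be sketched as a small orchestration loop. The `FakeClient` class, its `record`/`download` methods, and the `reconstruct` callback are hypothetical stand-ins for the NUC clients and the Fusion step. "Sequentially" is read here as one recording at a time. The point is only the ordering: every camera records first, all raw color and depth streams are copied to the server, and reconstruction then runs over the stored copies, so it can be re-run later with improved algorithms.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Optional


@dataclass
class Recording:
    camera_id: str
    color_frames: List[bytes] = field(default_factory=list)
    depth_frames: List[bytes] = field(default_factory=list)


class FakeClient:
    """Hypothetical stand-in for the software running on one NUC."""

    def __init__(self, camera_id: str, frames: int):
        self.camera_id = camera_id
        self.frames = frames
        self._local: Optional[Recording] = None

    def record(self) -> None:
        # Placeholder frames; a real client would store the camera's color and depth streams
        self._local = Recording(self.camera_id,
                                [b"rgb"] * self.frames, [b"depth"] * self.frames)

    def download(self) -> Recording:
        return self._local


def run_session(clients: Iterable[FakeClient],
                reconstruct: Callable[[Recording], None]) -> Dict[str, Recording]:
    clients = list(clients)
    # 1. Every camera records first (triggered in a loop here; a real system starts them together)
    for c in clients:
        c.record()
    # 2. All raw streams are copied to the server before any processing starts
    archive = {c.camera_id: c.download() for c in clients}
    # 3. Reconstruction then runs over the stored data, one source at a time
    for cam_id in sorted(archive):
        reconstruct(archive[cam_id])
    # The archive is kept, so improved post-processing can be re-run on the same scan
    return archive


order = []
archive = run_session([FakeClient("top", 3), FakeClient("bottom", 2)],
                      lambda rec: order.append(rec.camera_id))
print(order)  # ['bottom', 'top']
```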

The Intel RealSense R200 long-range cameras handle black and difficult materials better than the Intel RealSense F200 cameras. Tracking failures were minimal, which of course pleased us. Most importantly, the cameras can capture at the distances we need. To keep reconstruction fast even on Intel HD Graphics 5500 and above, we optimized our Fusion algorithm with OpenCL. Noise was removed by Fusion itself and by additional segmentation of the data after the single mesh was built.
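The article does not say how the post-mesh segmentation works. One common way to strip floating noise from a fused mesh, shown here as an assumption rather than Cappasity's method, is to split the mesh into connected components and drop the components with few triangles. The sketch uses union-find over shared vertices. `min_faces` is a hypothetical threshold.

```python
from collections import Counter
from typing import List, Tuple

Face = Tuple[int, int, int]


def remove_small_components(faces: List[Face], min_faces: int) -> List[Face]:
    """Keep only faces belonging to connected components with >= min_faces triangles."""
    parent = {}

    def find(v):
        parent.setdefault(v, v)
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving keeps the trees shallow
            v = parent[v]
        return v

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb

    # Faces that share a vertex belong to the same component
    for a, b, c in faces:
        union(a, b)
        union(b, c)

    sizes = Counter(find(f[0]) for f in faces)
    return [f for f in faces if sizes[find(f[0])] >= min_faces]


faces = [(0, 1, 2), (1, 2, 3), (2, 3, 4), (10, 11, 12)]  # body patch + one floating speck
print(remove_small_components(faces, min_faces=2))  # [(0, 1, 2), (1, 2, 3), (2, 3, 4)]
```

In practice the threshold would be set relative to the size of the largest component (the person), so that small but legitimate parts such as fingers are not removed.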

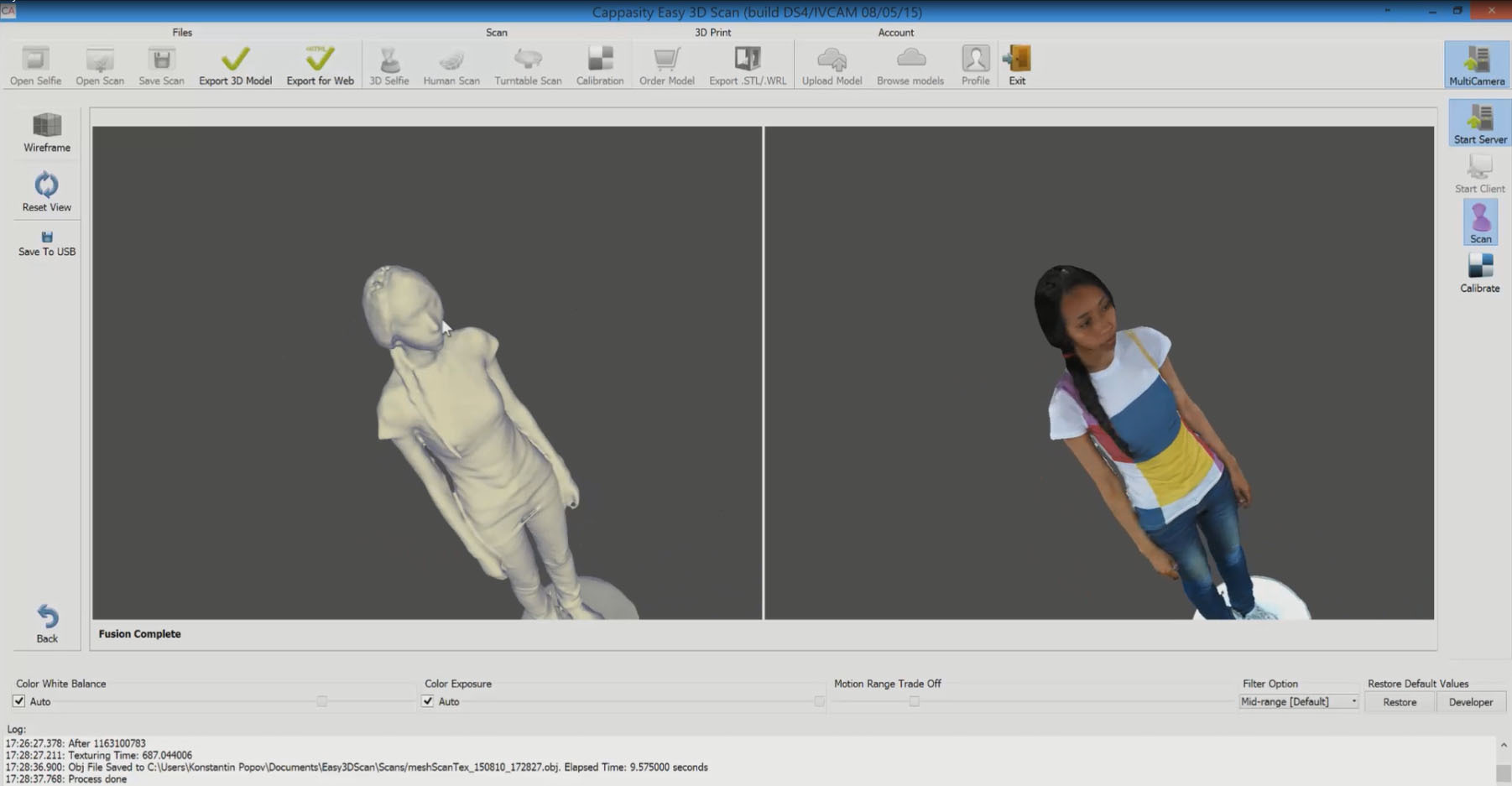

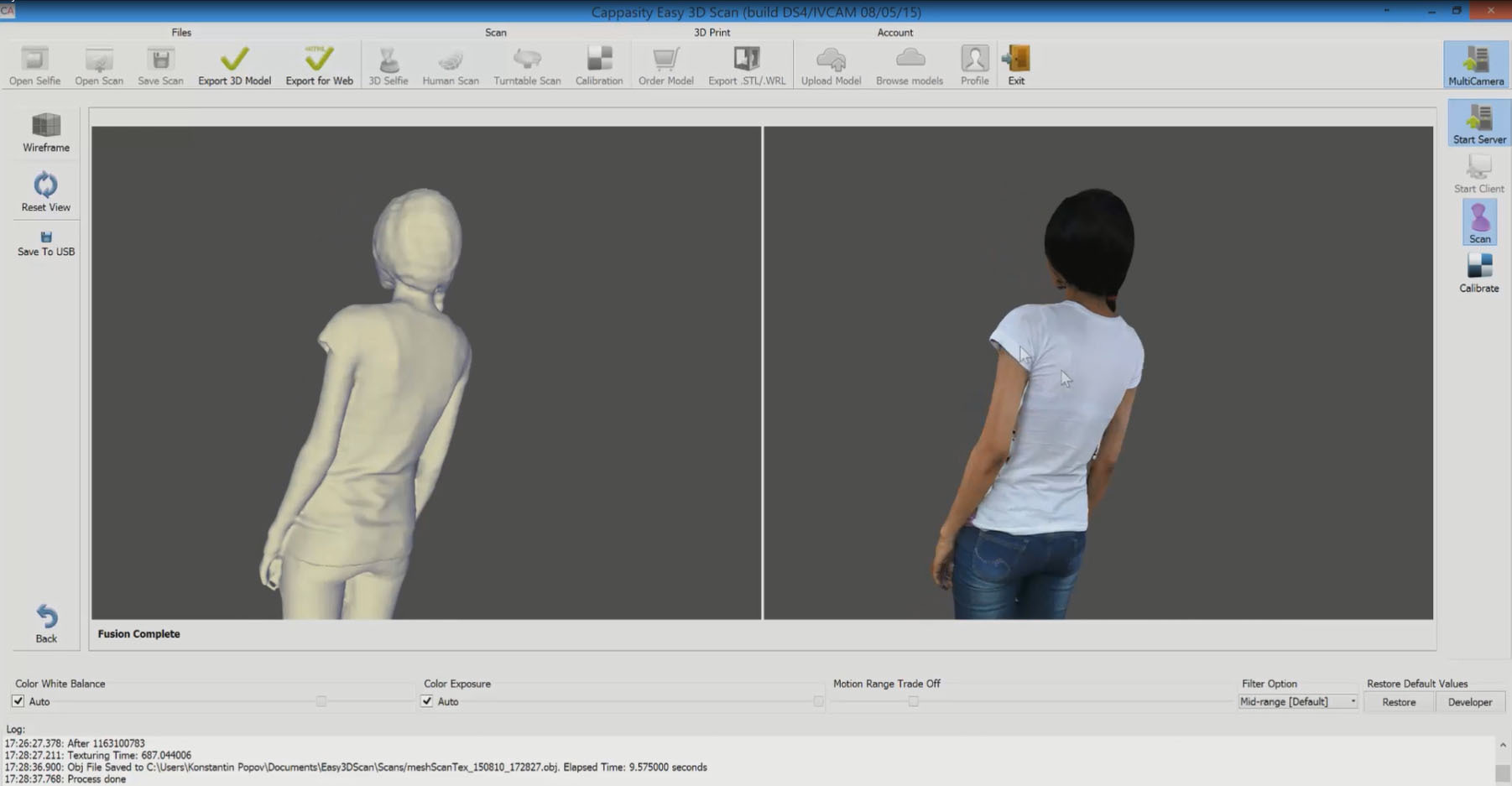

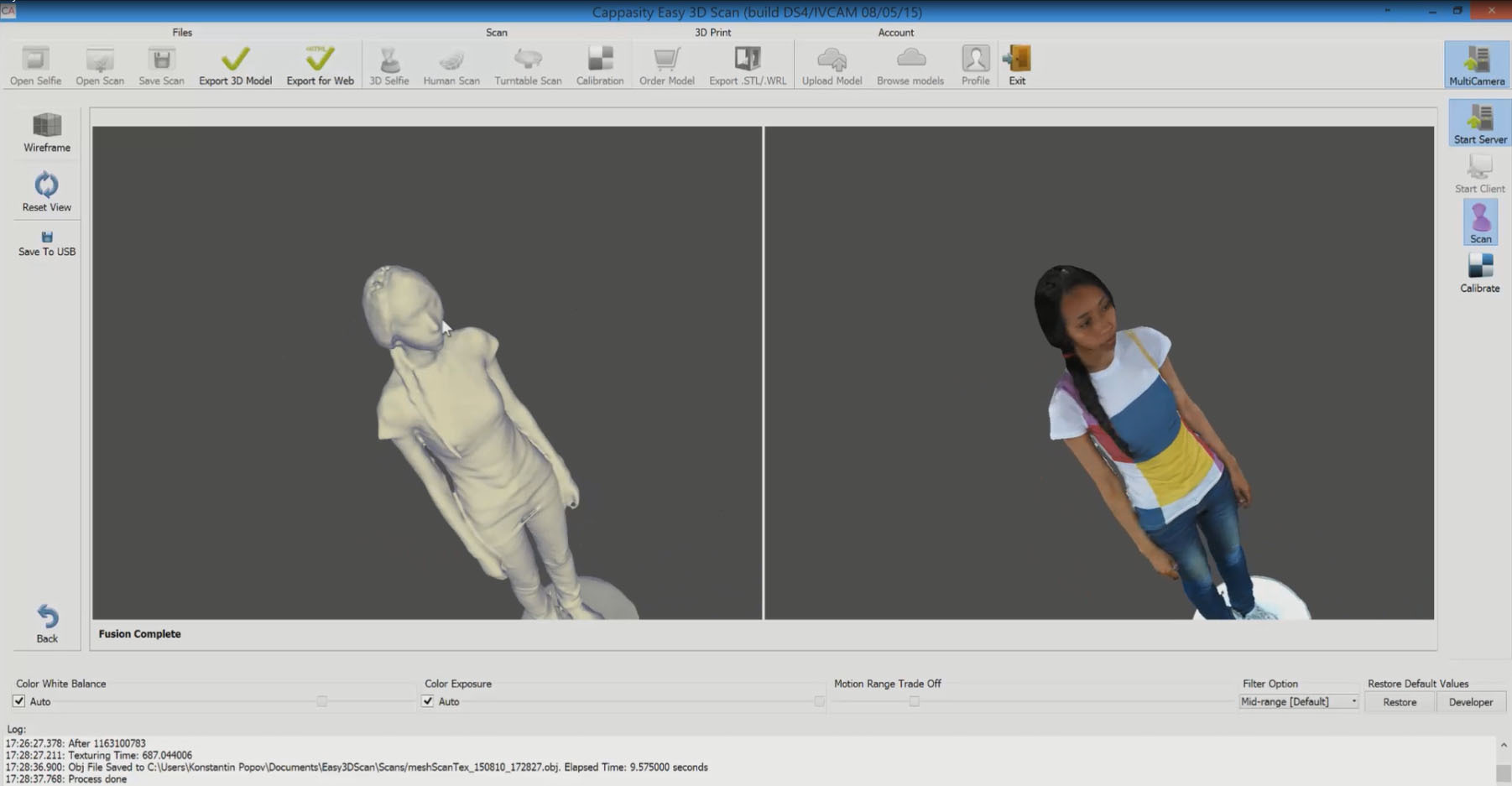

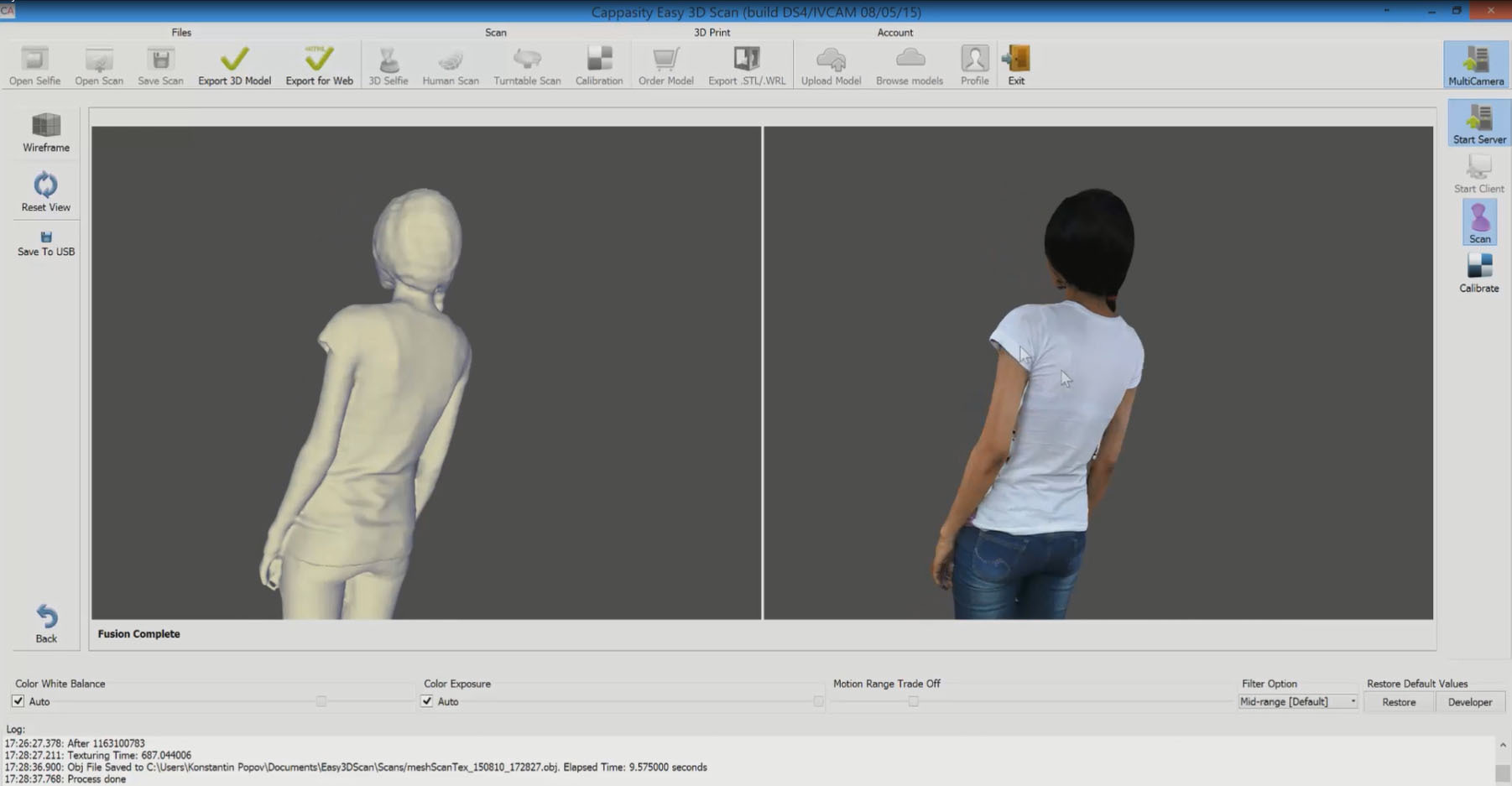

We also refined our high-resolution texturing algorithm for IDF. Our approach is to take full-resolution frames from the color camera and project them onto the mesh. We do not use voxel colors, because they blur the texture. The projection method is very difficult to implement, but it lets us use external cameras as a color source, not just the built-in ones. For example, the scanning box we are developing uses DSLR cameras for high-resolution textures, which is extremely important for our e-commerce clients.
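The core of projection-based texturing is mapping each mesh vertex into a color photo using that camera's calibrated pose and intrinsics, then sampling the photo at that point. Because only the pose and intrinsics are needed, the same step works for an external camera such as a DSLR. A minimal sketch follows, assuming a pinhole color camera, a world-to-camera rotation `R` and translation `t`, and nearest-pixel sampling. It deliberately omits occlusion testing (a hidden vertex would pick up the occluder's color), lens distortion, and blending between views, all of which a production texturer would typically need.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]


def project_to_pixel(p_world, R, t, fx, fy, cx, cy) -> Optional[Tuple[float, float]]:
    """World point -> color-camera coordinates (R, t: world-to-camera) -> pixel (u, v)."""
    xc, yc, zc = (sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3))
    if zc <= 0:
        return None  # behind the color camera, so it cannot supply this vertex's color
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def sample_color(image: List[List[RGB]], uv: Optional[Tuple[float, float]]) -> Optional[RGB]:
    """Nearest-pixel lookup; image is indexed as image[row][col]."""
    if uv is None:
        return None
    col, row = int(round(uv[0])), int(round(uv[1]))
    if 0 <= row < len(image) and 0 <= col < len(image[0]):
        return image[row][col]
    return None  # projects outside this photo; another view must cover it


def texture_vertices(vertices, image, R, t, fx, fy, cx, cy):
    """Assign each vertex the color it projects to in one photo (None if unseen)."""
    return [sample_color(image, project_to_pixel(v, R, t, fx, fy, cx, cy)) for v in vertices]


image = [[(r, c, 0) for c in range(4)] for r in range(4)]  # tiny 4x4 test "photo"
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
verts = [(0.5, 0.0, 1.0), (5.0, 0.0, 1.0), (0.0, 0.0, -1.0)]
print(texture_vertices(verts, image, identity, (0, 0, 0), 2.0, 2.0, 1.0, 1.0))
# [(1, 2, 0), None, None]
```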

That said, the RGB cameras built into the RealSense devices delivered excellent color. Here is an example of a model after texture mapping:

We are now working on a new algorithm to eliminate texture misalignment, and we plan to finish it before our Easy 3D Scan product is released.

As you can see, a demo that looks simple at first glance rests on a lot of complex code, which lets us compete with scanning systems that cost $100K or more. Intel RealSense cameras are affordable and could change the market for B2B solutions.

Advantages of the people-scanning system we are developing:

- Affordable and easy to set up and use: everything works at the press of a single button;

- Compact: the scanning rig can be installed in retail spaces, entertainment centers, medical centers, casinos, and so on;

- High-quality models: suitable for 3D printing and for creating content for AR/VR applications;

- Accurate: the resulting mesh is precise enough to take measurements of the scanned subject.
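The article does not say how measurements are taken from the mesh. One simple approach, sketched here as an assumption, reads height off the vertex extent. It estimates a girth (for example, a waist circumference) by slicing the mesh with a horizontal plane and summing the lengths of the cross-section segments. The sketch assumes y is the vertical axis in metres and skips the degenerate case of a vertex lying exactly on the plane. At heights where the arms are separate, the sum includes every contour at that level.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def height(vertices: Sequence[Vec3]) -> float:
    """Vertical extent of the scan, assuming y is the up axis."""
    ys = [v[1] for v in vertices]
    return max(ys) - min(ys)


def _edge_cross(a: Vec3, b: Vec3, h: float) -> Optional[Vec3]:
    """Point where edge a-b crosses the plane y = h, or None if it does not cross."""
    if (a[1] - h) * (b[1] - h) >= 0:
        return None  # same side, or a vertex exactly on the plane (ignored here)
    s = (h - a[1]) / (b[1] - a[1])
    return (a[0] + s * (b[0] - a[0]), h, a[2] + s * (b[2] - a[2]))


def slice_length(vertices: Sequence[Vec3], faces: Sequence[Tuple[int, int, int]], h: float) -> float:
    """Total length of the cross-section where the mesh meets y = h (e.g. a girth)."""
    total = 0.0
    for f in faces:
        hits = [_edge_cross(vertices[f[i]], vertices[f[(i + 1) % 3]], h) for i in range(3)]
        pts = [p for p in hits if p is not None]
        if len(pts) == 2:
            total += math.dist(pts[0], pts[1])
    return total


# Test shape: the four sides of a 1 m cube (an open square tube), two triangles per side
verts = [(0, 0, 0), (1, 0, 0), (1, 0, 1), (0, 0, 1), (0, 1, 0), (1, 1, 0), (1, 1, 1), (0, 1, 1)]
faces = []
for i in range(4):
    j = (i + 1) % 4
    faces += [(i, j, j + 4), (i, j + 4, i + 4)]
print(height(verts), slice_length(verts, faces, 0.5))  # 1 4.0
```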

We realize that we have not yet tapped the full potential of Intel RealSense cameras, but we are confident that by CES 2016 we will be able to show significantly improved products. It's all up to us!

Source: https://habr.com/ru/post/267561/
