A cost-effective replacement for tiered arrays: review and tests of the all-flash HP 3PAR 7400

A box like this should be kicked with extreme caution.

In short: if you are looking for an all-flash array to replace a tiered one, with a slightly lower price per gigabyte, reliable, and from a top vendor, read on. The minimum US street price announced by the vendor starts at 19 thousand dollars. Of course, on projects that need this kind of hardware there is always a discount, a program or a special offer that can reduce the price of the specification, sometimes significantly. Look inside to see why SSDs can be cheaper than a tiered array, in particular for VDI.

The story of this hardware began when HP came to suspect that after about 2012 more and more server workloads would require fast storage. So they bought 3PAR, a company that built good midrange storage systems, refined the product, and off it went. What came of it is shown below.


Architecture

When I first got my hands on the HP 3PAR 7400, I was skeptical of it as the junior member of the all-flash family of arrays. But two days spent with the array changed my opinion considerably.

The HP 3PAR 7400 scales to 4 controllers, holds up to 240 SSDs, carries 96 GB of RAM per controller pair, and simply looks very good. The hardware is not much different from ordinary midrange arrays, but the software pleasantly surprised me with its architecture. It resembles the software of high-end arrays more than what I am used to seeing in midrange ones.

The facts are as follows:

The array can be expanded to 4 controllers, so the fault tolerance of the HP 3PAR (if properly configured) is much higher than that of most midrange arrays.

Next, the cache. In most midrange arrays, a controller pair has a cache divided into read and write parts. The write cache is mirrored between the controllers, but only for protection; each head uses its own memory separately. A storage system that wants a significant share of the market has to learn to dynamically resize the memory allocated to reads and writes. HP 3PAR went further: the engineers built a cache-centric array with memory shared by all controllers. As a result, a team of 4 controllers in the HP 3PAR works significantly better than 4 loosely coupled controllers in most midrange storage systems.

Deduplication. No one likes doing the same job twice. With this marketing slogan, many arrays rushed to market offering deduplication. Look closer, and most of them deduplicate in post-process: they write the data to disk and later, when there is idle time, read it back and try to deduplicate it. So the work is done not twice but four times. The upside is some space savings when the share of writes is small; in other cases it is not applicable. 3PAR deduplicates on the fly, without writing intermediate data. A dataset that deduplicates well therefore gives both a gain in array speed (less data has to be written to the disks, since part of it is discarded as already stored) and space savings.

The system also does not write empty (zero) blocks. They are eliminated at the ASIC level.
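To make the idea of on-the-fly deduplication and zero-block elimination concrete, here is a minimal Python sketch. It is a toy model, not 3PAR's implementation: the SHA-256 fingerprint, the class name `InlineDedupStore` and its fields are assumptions made for illustration; only the 16 KB block size comes from this article.

```python
import hashlib

BLOCK_SIZE = 16 * 1024          # 16 KB dedup block, the figure quoted in this article
ZERO_BLOCK = bytes(BLOCK_SIZE)  # a block consisting only of zero bytes


class InlineDedupStore:
    """Toy model (hypothetical): fingerprint each block before it reaches the media."""

    def __init__(self):
        self.blocks = {}       # fingerprint -> block data physically stored
        self.volume_map = []   # logical block order: fingerprint, or None for a zero block
        self.media_writes = 0  # blocks actually written to the media

    def write(self, data: bytes) -> None:
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE].ljust(BLOCK_SIZE, b"\0")
            if block == ZERO_BLOCK:
                # Zero blocks are only recorded in the map, never written.
                self.volume_map.append(None)
                continue
            fingerprint = hashlib.sha256(block).digest()  # assumption: any strong hash
            if fingerprint not in self.blocks:
                self.blocks[fingerprint] = block
                self.media_writes += 1
            self.volume_map.append(fingerprint)

    def read(self) -> bytes:
        return b"".join(
            ZERO_BLOCK if fp is None else self.blocks[fp] for fp in self.volume_map
        )


store = InlineDedupStore()
pattern_a = b"A" * BLOCK_SIZE
pattern_b = b"B" * BLOCK_SIZE
payload = pattern_a + pattern_b + pattern_a + ZERO_BLOCK + pattern_a
store.write(payload)
print(store.media_writes, len(store.volume_map))  # 2 5
```

Five logical blocks arrive, but only two reach the media: the repeated block is recognized before it is written, and the zero block is never written at all. A post-process scheme would first have written all five and then read them back.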

Among us there are left-handers and right-handers. It is the same with arrays. There are Active/Passive arrays (the one-armed ones), Active/Active ALUA arrays (the ordinary left-handers and right-handers), and there are true Active/Active arrays. HP 3PAR is exactly the latter. The array controllers are equal peers, so there are no problems with moving LUNs between controllers or with inactive paths.

Array controller

Arrays are best updated online. During an upgrade, the average array reboots its controllers one by one, which means the host loses half of its paths for a while. This usually goes without problems, but sometimes a host is slow and does not restore a path in time. Updating the next controller then takes down the other half of the paths. Applications do not forgive such treatment and crash with a thud, causing alerts in the monitoring systems and gray hair on the admins' heads. The 3PAR designers tried to take ordinary people's problems into account and spare us from them. When a controller is being upgraded or has failed, its WWNs temporarily move to the neighboring controller. As a result, no paths are lost, applications stay up, and I sleep more soundly (and longer).
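The WWN hand-over described above can be sketched as a small Python model. Everything here is hypothetical and for illustration only: the `Controller` class, the function names and the WWN strings are not taken from the 3PAR software.

```python
class Controller:
    def __init__(self, name, wwns):
        self.name = name
        self.online = True
        self.native_wwns = list(wwns)  # ports this controller normally presents
        self.hosted_wwns = list(wwns)  # ports it presents right now


def take_offline(controller, partner):
    """Upgrade or failure: the partner temporarily presents the controller's WWNs."""
    controller.online = False
    partner.hosted_wwns.extend(controller.hosted_wwns)
    controller.hosted_wwns = []


def bring_online(controller, partner):
    """The controller returns and takes its native WWNs back."""
    for wwn in controller.native_wwns:
        partner.hosted_wwns.remove(wwn)
    controller.hosted_wwns = list(controller.native_wwns)
    controller.online = True


def visible_wwns(controllers):
    """WWNs a host can still log in to."""
    return sorted(w for c in controllers if c.online for w in c.hosted_wwns)


# Hypothetical WWN labels; real WWNs are 64-bit identifiers.
node0 = Controller("node0", ["wwn-0:1", "wwn-0:2"])
node1 = Controller("node1", ["wwn-1:1", "wwn-1:2"])
nodes = [node0, node1]

before = visible_wwns(nodes)
take_offline(node0, node1)
during = visible_wwns(nodes)
bring_online(node0, node1)
after = visible_wwns(nodes)
print(before == during == after)  # True
```

From the host's point of view the set of reachable target ports never changes, which is why no path has to be rediscovered after the controller comes back.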

Data placement. Typically, storage is carved into fixed RAID groups. The disadvantages of this approach are as follows:

- A LUN resides on only one RAID group.

- If a disk fails, only one RAID group takes part in the rebuild. This is slow and unreliable.

- A dedicated hot spare drive is needed. Space has to be reserved in any case, of course, but the disk sits idle. One would like to use it, especially if it is an SSD in an all-flash array.

- Only LUNs with the same protection level can share the same set of disks.

Here the solution is this: all disks are cut into small pieces from which LUNs are assembled. For each LUN you can choose the protection level and the number of pieces over which parity is calculated. LUNs with different protection levels can reside on the same disk, and each LUN is spread across all disks. The result:

- Each LUN is evenly distributed across the disks of the array.

- If a disk fails, all disks of the array take part in the rebuild.

- No dedicated hot spare disk is needed.

- Data with different protection levels easily coexists on the same disks.
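The placement scheme above can be illustrated with a small Python sketch. It assumes a simple "least-used disks first" allocator; the function names, the RAID set width of 5 and the LUN size of 24 sets are illustrative assumptions, and the real allocator in the array is certainly more elaborate.

```python
from collections import Counter

NUM_DISKS = 12  # as in the test unit
SET_SIZE = 5    # assumed RAID set width, e.g. 4 data pieces + 1 parity piece
NUM_SETS = 24   # assumed LUN size, counted in RAID sets


def build_lun(num_disks, set_size, num_sets):
    """Assemble a LUN from RAID sets; each set takes one piece from distinct disks."""
    used = Counter({disk: 0 for disk in range(num_disks)})
    raid_sets = []
    for _ in range(num_sets):
        # Take the least-used disks so pieces spread evenly over the array.
        members = sorted(used, key=lambda disk: (used[disk], disk))[:set_size]
        for disk in members:
            used[disk] += 1
        raid_sets.append(tuple(members))
    return raid_sets, used


def rebuild_sources(raid_sets, failed_disk):
    """Disks that must be read to rebuild the pieces lost on failed_disk."""
    sources = set()
    for members in raid_sets:
        if failed_disk in members:
            sources.update(disk for disk in members if disk != failed_disk)
    return sources


raid_sets, used = build_lun(NUM_DISKS, SET_SIZE, NUM_SETS)
sources = rebuild_sources(raid_sets, failed_disk=0)
print(sorted(used.values()))          # pieces per disk
print(len(sources), "vs", SET_SIZE - 1)  # rebuild sources: spread layout vs fixed group
```

With a fixed RAID group, only the `SET_SIZE - 1` surviving members of that group can feed the rebuild. In the spread layout the lost pieces belong to many different sets, so more disks share the rebuild reads, and spare capacity is just unused pieces rather than a whole idle disk.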

Well, how do you like the feature set? And all this at a midrange price. Compare it with what you have in your own server room.

The theory is over; it is time to move on to testing. Note that this performance testing does not claim to be a "complete report on the performance of the array." That would take a week and several configurations. Below are a few basic tests that give a general idea of the system's capabilities. The test unit has only 12 disks.

Our test unit

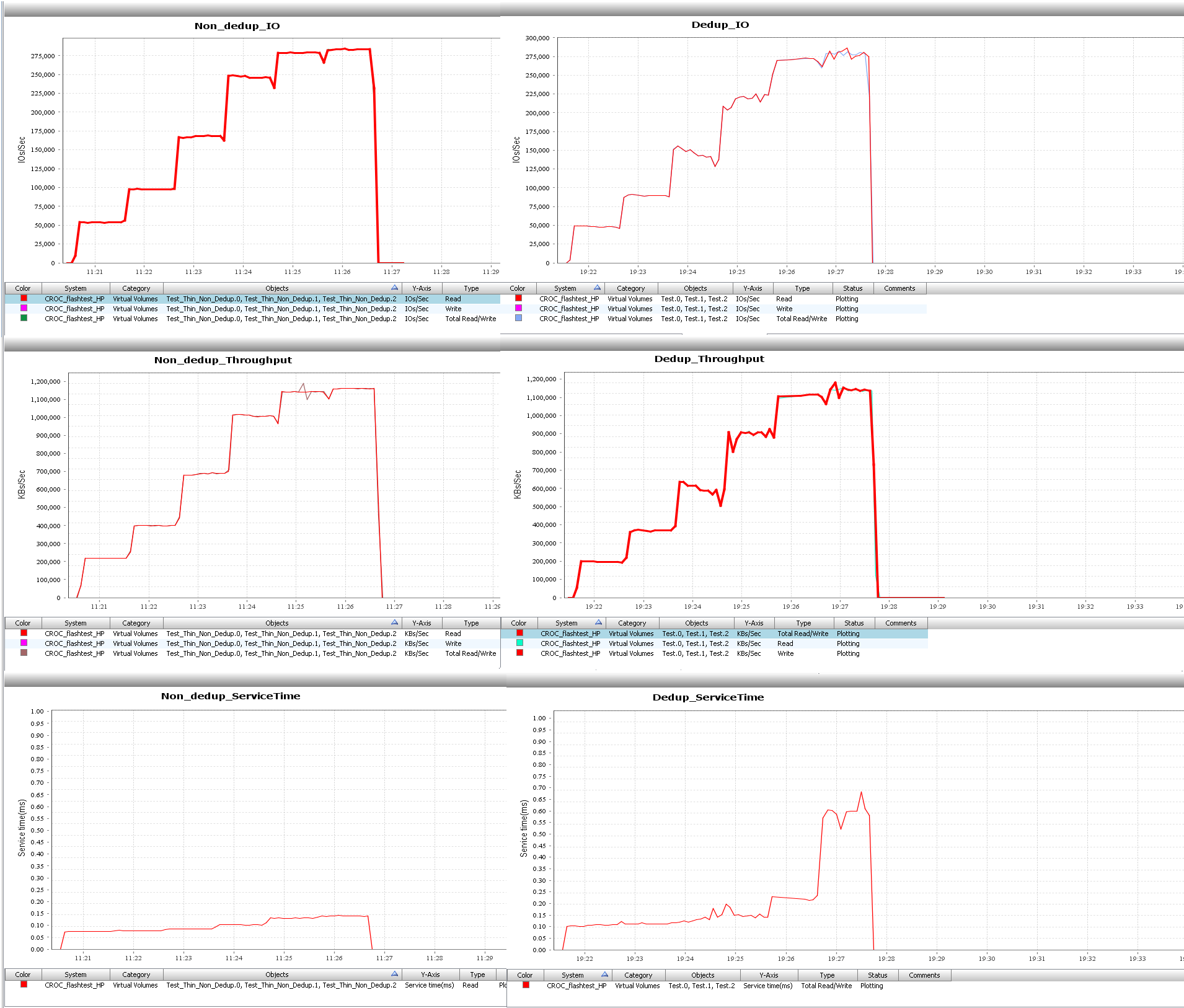

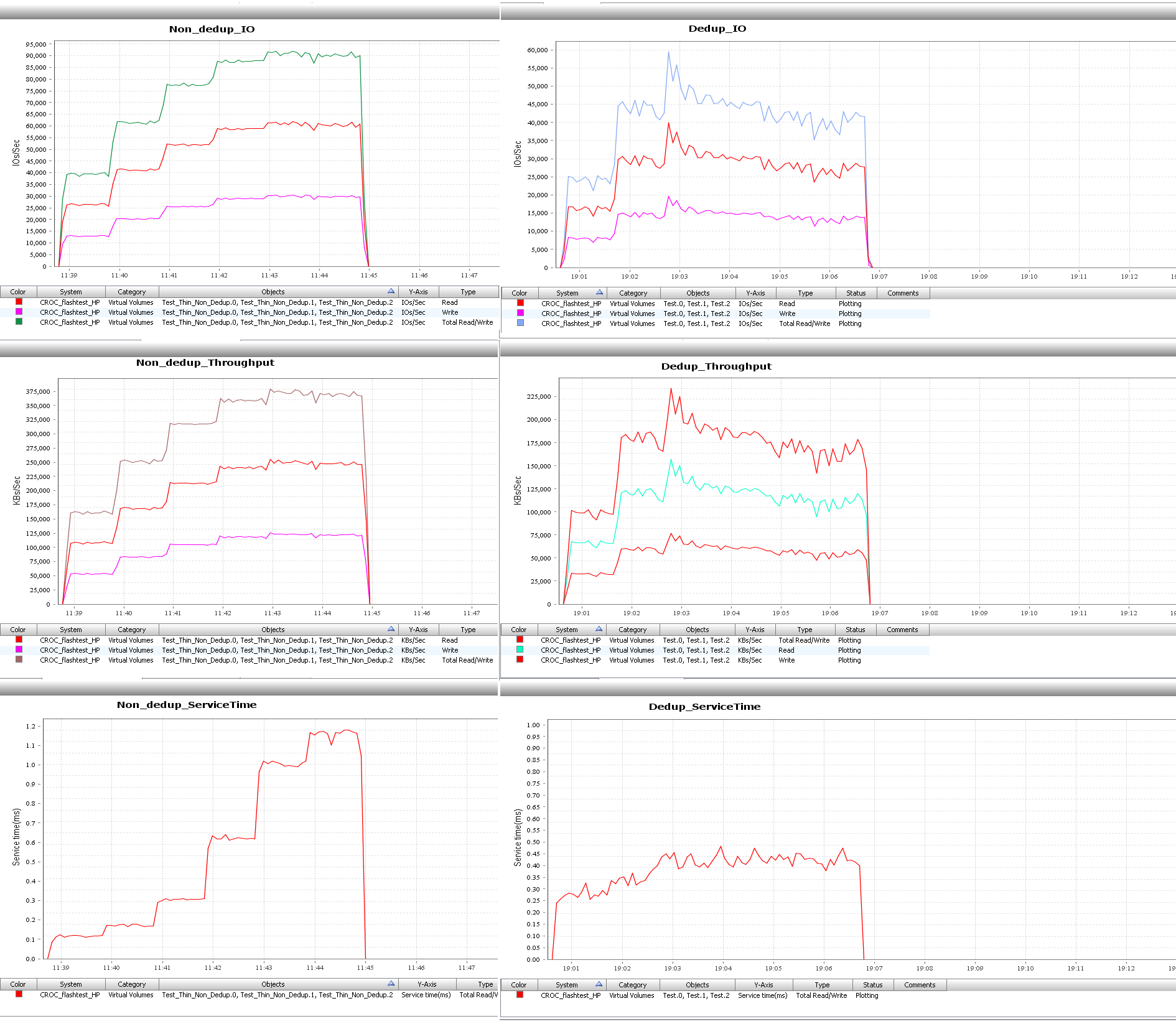

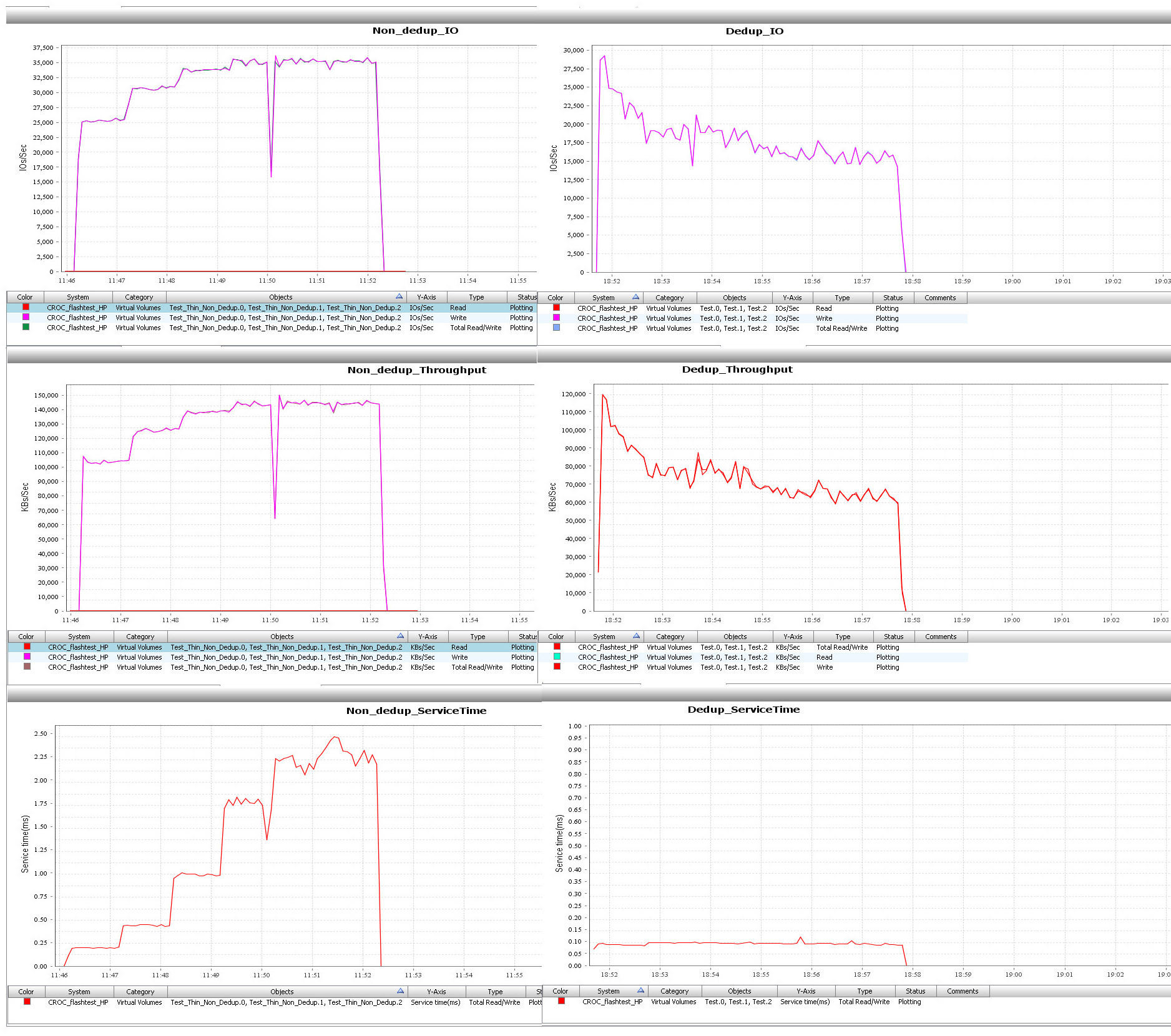

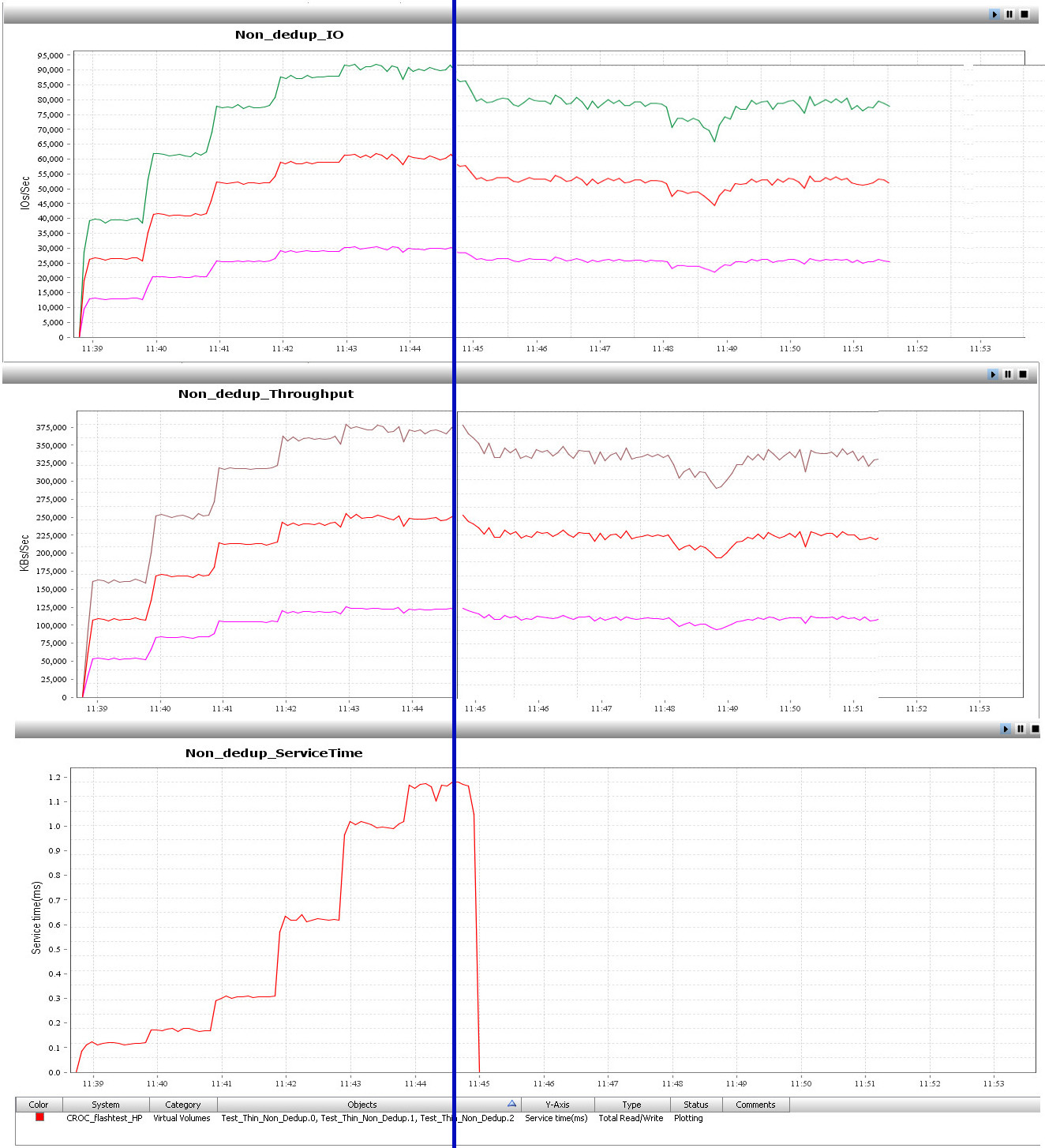

To estimate the performance of systems with more disks, you will have to use logic and a calculator. I tested three types of load; the charts are below. On the left is a LUN without deduplication, on the right the same LUN with deduplication. The data I wrote to disk is completely random and not deduplicable at all; I wanted to see the overhead of deduplication itself.

Test 1. 100% read, 100% random, 4k blocks

On 12 SSDs the array delivers about 300,000 IOPS with a response time of less than a millisecond. Unfortunately, on LUNs with deduplication the response time starts to degrade after 250,000 IOPS, but it still stays under a millisecond.

Test 2. 70% read, 30% write, 100% random, 4k blocks

The graphs show that the LUN with deduplication lost noticeably in performance under mixed load. Still, 90,000 IOPS on a non-deduplicated LUN with a response time under 1 ms from a 12-disk system is a good result for midrange storage. For LUNs with deduplication in this configuration, 45,000 IOPS is the ceiling, although the response time stays normal.

Test 3. 100% write, 100% random, 4k blocks

A very decent result for 100% random writes. The response time is, of course, somewhat high, but this is easily solved by adding disks.
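The "logic and a calculator" step for larger configurations could look like the sketch below. It assumes naive linear scaling with the number of disks, which is only an upper bound: at some point the controllers saturate, and that ceiling was not measured here. The function name and the optional `controller_ceiling` parameter are assumptions for illustration.

```python
def estimate_iops(measured_iops, measured_disks, target_disks, controller_ceiling=None):
    """Naive linear extrapolation from a measured configuration (upper bound only)."""
    per_disk = measured_iops / measured_disks
    estimate = per_disk * target_disks
    if controller_ceiling is not None:
        # The ceiling is unknown for this array; pass one only if you have measured it.
        estimate = min(estimate, controller_ceiling)
    return estimate


# Figures measured above on 12 disks, extrapolated to 24 disks.
read_estimate = estimate_iops(300_000, 12, 24)
mixed_estimate = estimate_iops(90_000, 12, 24)
print(read_estimate, mixed_estimate)  # 600000.0 180000.0
```

Treat such numbers as a starting point for sizing, not as a promise; only a test on the target configuration gives a real answer.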

Test 4. The classic test: pulling out a disk under mixed load.

The array's performance dropped slightly, but since all disks take part in the rebuild at the same time, there was no sharp drop in performance.

The main points

The array makes an excellent all-flash replacement for an aging array in a mixed or virtual environment. An all-flash array is usually more expensive than a tiered solution, but once inline deduplication is taken into account, things are no longer so obvious. With a deduplication ratio of 2, flash arrays start to become cheaper than tiered ones. But this has to be calculated for each specific case.
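The per-case calculation mentioned above comes down to simple arithmetic, sketched here in Python. The two prices are hypothetical placeholders chosen so that the break-even lands at a ratio of 2; substitute the numbers from your own quotes. The sketch also assumes the tiered array does not deduplicate.

```python
def effective_price_per_gb(raw_price_per_gb, dedup_ratio):
    """Price per gigabyte of logical data after deduplication."""
    return raw_price_per_gb / dedup_ratio


def break_even_ratio(flash_price_per_gb, tiered_price_per_gb):
    """Dedup ratio at which flash costs the same per logical gigabyte as tiered."""
    return flash_price_per_gb / tiered_price_per_gb


FLASH_PRICE = 2.0   # hypothetical, dollars per raw GB
TIERED_PRICE = 1.0  # hypothetical, dollars per GB, no deduplication assumed

ratio = break_even_ratio(FLASH_PRICE, TIERED_PRICE)
price_at_2_5 = effective_price_per_gb(FLASH_PRICE, 2.5)
print(ratio, price_at_2_5)  # 2.0 0.8
```

Any workload that deduplicates better than the break-even ratio makes the flash array the cheaper option per stored gigabyte; data that barely deduplicates does not.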

The equipment is imported into Russia through a distributor. Even now it takes 8 weeks on average from placing an order to delivery. Configurator quotes are in dollars; if necessary, we can convert the specification into rubles for the contract. By the way, this equipment can also be ordered under the Flexible Capacity Service program.

What do you need to know about this hardware?

Up to 240 SSDs can be installed in the array. Interfaces: 8 FC ports at 8 Gb/s; another 16 ports of FC or 10 GbE iSCSI can be added. Minimum two controllers, maximum four. RAID 1, RAID 5 and RAID 6. 64 GB of cache per controller pair.

Special features

- The hardware is very good. 3PAR suits mixed and virtual environments and is great for any data that deduplicates well (all kinds of virtualization, VDI). It can be used as a universal storage system. It will work like a Kalashnikov rifle, but the price per gigabyte in the all-flash configuration will be slightly higher than that of tiered arrays.

- There are some quirks. If you write a lot of data to the array for a long time, performance drops. In my opinion, this is due to the cMLC flash used in the drives, which makes the drives considerably cheaper. It seems that at certain moments the array artificially throttles the write speed to avoid premature drive wear, but there is no documented confirmation of this.

- Deduplication is included for free (other vendors often charge extra for this option), but do not expect much deduplication from databases. It is tuned for virtual environments. The deduplication block is 16 kilobytes.

- The vendor quotes a price of 1.5 dollars per gigabyte. In our test it came out higher, but still very attractive.

Here is a link to the description of the array's various technologies.

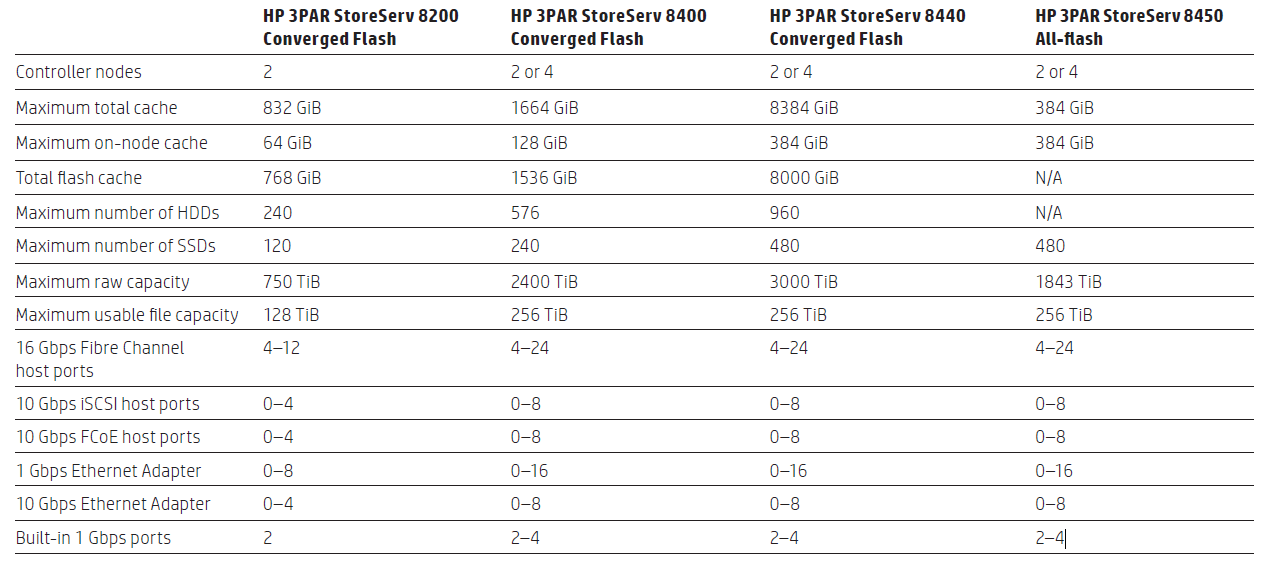

Differences between the models in the line

This concludes the testing. I am ready to answer questions in the comments or directly by email at rpokruchin@croc.ru.

Source: https://habr.com/ru/post/267395/
