Monitoring delays during online video broadcasts and teleconferences

About a week ago an interesting article appeared on ways to organize video broadcasts with the lowest possible delay. The comments raised a number of legitimate questions, and many of them never got a complete, meaningful answer. In this post I would like to build on my colleagues' material and share my thoughts on the following questions:

Why do we need minimal delay?

How can you measure video transmission delay simply and visually?

Which elements of the video path increase the delay?

Our best result: a FullHD signal made the trip to the server and back in less than half a second.

Interesting? Then read on.

So, why do we need minimal delay?

My company organizes online video broadcasts, and lately we have been very actively developing telemedicine projects: we broadcast surgical master classes and set up almost full-fledged TV bridges between operating rooms and conference halls. The image is transmitted from external cameras and medical devices (endoscopes, laparoscopes, surgical robots), while the audience sits in comfortable chairs on the other side of the city, watches a huge FullHD screen, and asks the doctors questions when needed. In this scenario, comfortable communication turned out to be very important to our customers: everyone is used to the telephone and Skype, so even a 3-4 second delay seriously complicates interaction between the hall and the operating room and is very distracting for surgeons performing real operations.

Here are the typical conditions in which we have to work:

- a 720p or 1080i signal, usually SDI, taken either directly from the camera or medical stand or from the program output of the switcher;

- usually a fairly slow Internet connection, or none at all: a considerable share of our projects run over 4G networks;

- no external (public) IP address;

- a highly dynamic, extremely detailed picture that requires accurate color reproduction.

Let me say right away: we considered Skype and videoconferencing systems, tested them, and safely buried them.

Skype's main problems are that you cannot adjust the video quality parameters manually, and its “too smart” encoding algorithm, which may start degrading picture quality on its own if it suddenly decides the Internet channel lacks bandwidth. And getting a picture from the switcher's SDI output into Skype is a separate kind of witchcraft that not every Muggle can master...

Videoconferencing did not work out well either: high channel bandwidth requirements, the need for external IPs, no professional video and audio inputs, an exorbitant price tag for both purchase and rental, and, on top of all that, unremarkable quality. A videoconferencing system may well look fine showing ladies and gentlemen in suits sitting in a fashionably decorated meeting room. But when our competitors at one high-profile medical event ran a broadcast from a laparoscopic stand through a videoconferencing system, the highly detailed video signal arrived in terrible shape: instead of FullHD, the screens showed a truly infernal kaleidoscope that most resembled the trailer for the movie Pixels.

The picture quality of our mass broadcasts, run through our own Wowza-based servers, was much better than with Skype or videoconferencing. We also had a decent fleet of encoders (powerful compact computers with SDI capture cards), which let us run several projects at the same time without stress.

I gave my engineers the task of “squeezing” the lowest possible delay out of Wowza, and the question immediately arose: how, and with what, should we measure the delay? Frankly, we thought about it for a long time, which makes the result all the more amusing and once again confirms that everything ingenious is simple.

We based our tool on the classic “countdown” leader used (or rather, long since abandoned) in film and television production, making it somewhat more informative and detailed.

The measurement procedure is ridiculously simple: play the video in a player, pass it through the entire video path, place the transmitting and receiving computer screens side by side, simply photograph both screens with a phone, and subtract the smaller timecode from the larger one. The result is the delay, accurate to one frame. If the transmitting and receiving points are far apart, you can send the signal from site A, receive it at site B, immediately send it back to site A, take the same kind of photo, and divide the result by two. The running timecode and the red square moving back and forth let you visually spot stream glitches such as “freezes” and picture “break-ups”.
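
A minimal sketch of the arithmetic behind this method, assuming the test video shows a non-drop-frame timecode in HH:MM:SS:FF form and the stream runs at 25 frames per second (both are assumptions; the function names and sample readings are hypothetical):

```python
# Minimal sketch: compute the delay from two timecodes read off a photo of
# the sending and receiving screens. Assumes non-drop-frame HH:MM:SS:FF
# timecode and a 25 fps stream (assumptions, not taken from the source).

def timecode_to_frames(tc: str, fps: int = 25) -> int:
    """Convert an HH:MM:SS:FF timecode string to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if not 0 <= frames < fps:
        raise ValueError(f"frame field {frames} out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def delay_frames(sent_tc: str, received_tc: str, fps: int = 25,
                 round_trip: bool = False) -> float:
    """Delay in frames: the larger timecode minus the smaller one.

    With round_trip=True (an A -> B -> A loopback) the difference is
    halved to estimate the one-way delay.
    """
    diff = abs(timecode_to_frames(sent_tc, fps)
               - timecode_to_frames(received_tc, fps))
    return diff / 2 if round_trip else float(diff)


def frames_to_ms(frames: float, fps: int = 25) -> float:
    """Convert a frame count to milliseconds at the given frame rate."""
    return frames * 1000.0 / fps


if __name__ == "__main__":
    # Hypothetical readings from one photo of both screens.
    one_way = delay_frames("00:01:10:05", "00:01:09:19")
    print(one_way, frames_to_ms(one_way))  # 11.0 440.0
```

Halving the loopback result assumes the A-to-B and B-to-A legs have roughly equal delay, which is only an approximation on asymmetric links such as 4G.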

Using this simple tool, we did a complete audit of our video path, tinkered with the server settings, and squeezed out the delays literally millisecond by millisecond, with a rather good result: over a dedicated line we got a 4-5 Mbit/s FullHD stream with a delay of 11-16 frames (about half a second). When broadcasting over 4G networks or over long distances (for example, in a St. Petersburg to Astana test), the delay grew by roughly another half second. Here, of course, the complex routing between the transmitting and receiving points starts to make itself felt.
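
As a quick check of “about half a second”: assuming a 25 fps stream (typical for 1080i50 broadcasting, but an assumption here), one frame lasts 40 ms, so with $N$ the delay in frames and $f$ the frame rate,

$$
t = \frac{N}{f}, \qquad t_{11} = \frac{11}{25\ \mathrm{s^{-1}}} = 0.44\ \mathrm{s}, \qquad t_{16} = \frac{16}{25\ \mathrm{s^{-1}}} = 0.64\ \mathrm{s}.
$$

At 30 fps the same 11-16 frame range would correspond to roughly 0.37 to 0.53 s.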

For obvious reasons, I will not disclose the details of “tuning” the broadcast server, but I want to point out one important thing: noticeable delays often come from the hardware elements of the video path, which few people think about when preparing a project. Say you are running a teleconference with a hotel conference room where all the projectors are wired over VGA, while your entire receiving path is SDI or HDMI: you can be sure that the switcher and the conversion to VGA will add at least half a second. An antediluvian projector connected via composite? Another second. At the transmitting end, someone put in a cheap camera with an HDMI output and taped an HDMI-SDI converter to it? Three frames lost. Count how many such converters, splitters, and other pieces of hardware are in your path, and you will get very impressive numbers that often cancel out the efforts of the broadcast engineers. The conclusion is simple: optimize the path and remove every unnecessary signal conversion.
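
To see how quickly hardware delays accumulate, here is a hypothetical latency budget in Python. The per-element values are the rough figures quoted above, the 25 fps frame rate is an assumption, and treating the end-to-end delay as a simple sum of element delays is a first-order approximation:

```python
# Minimal sketch of a latency budget for a video path. The per-element
# figures are the rough values quoted in the text; the 25 fps frame rate
# and the element list itself are illustrative assumptions.
from dataclasses import dataclass

FPS = 25  # assumed frame rate (1080i50, i.e. 25 frames per second)


@dataclass
class PathElement:
    name: str
    delay_ms: float


def frames(n: int, fps: int = FPS) -> float:
    """Convert a delay given in frames to milliseconds."""
    return n * 1000.0 / fps


def total_delay_ms(path: list[PathElement]) -> float:
    """Elements in series: end-to-end delay is approximately the sum."""
    return sum(element.delay_ms for element in path)


# Hypothetical path assembled from the examples in the text.
path = [
    PathElement("HDMI-SDI converter on the camera", frames(3)),
    PathElement("encoder + Wowza + player (best case)", frames(11)),
    PathElement("switcher and conversion to VGA", 500.0),
    PathElement("old projector on composite input", 1000.0),
]

if __name__ == "__main__":
    for element in path:
        print(f"{element.name:40s} {element.delay_ms:7.0f} ms")
    print(f"{'total':40s} {total_delay_ms(path):7.0f} ms")
```

In this made-up path the two display-side conversions alone account for well over half of the roughly two-second total.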

For your own testing, feel free to use our video; you can download it for free at this link.

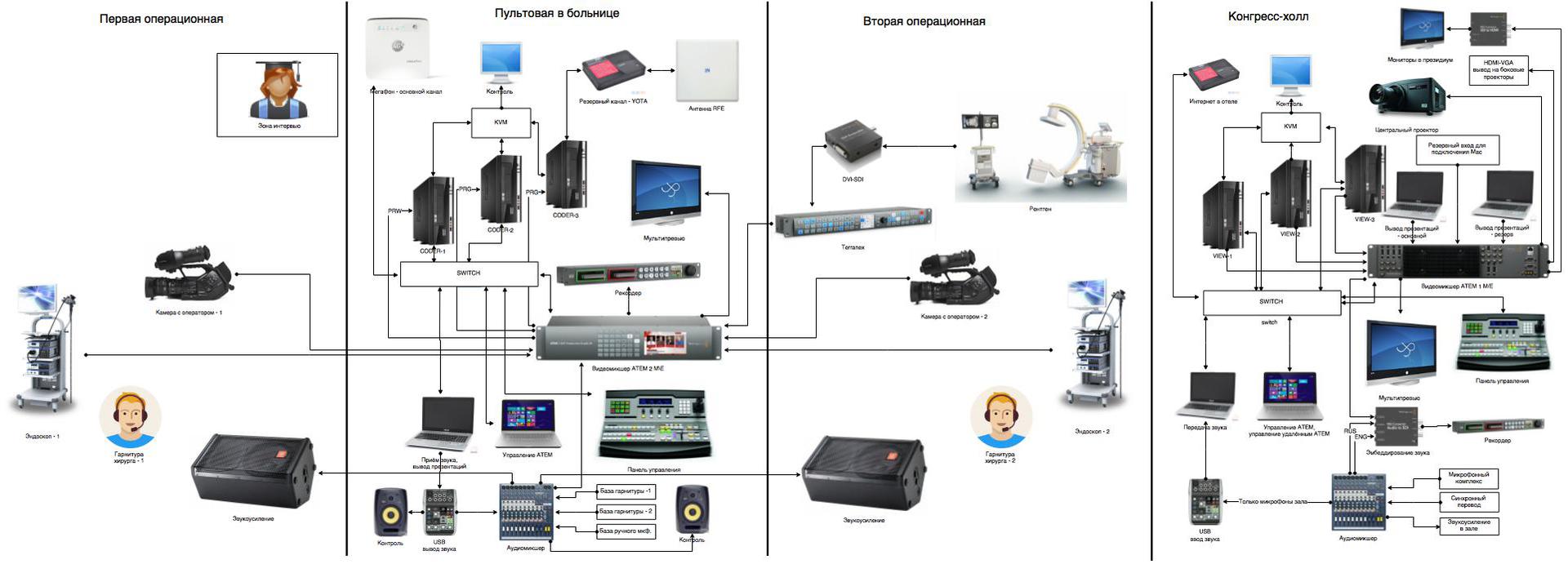

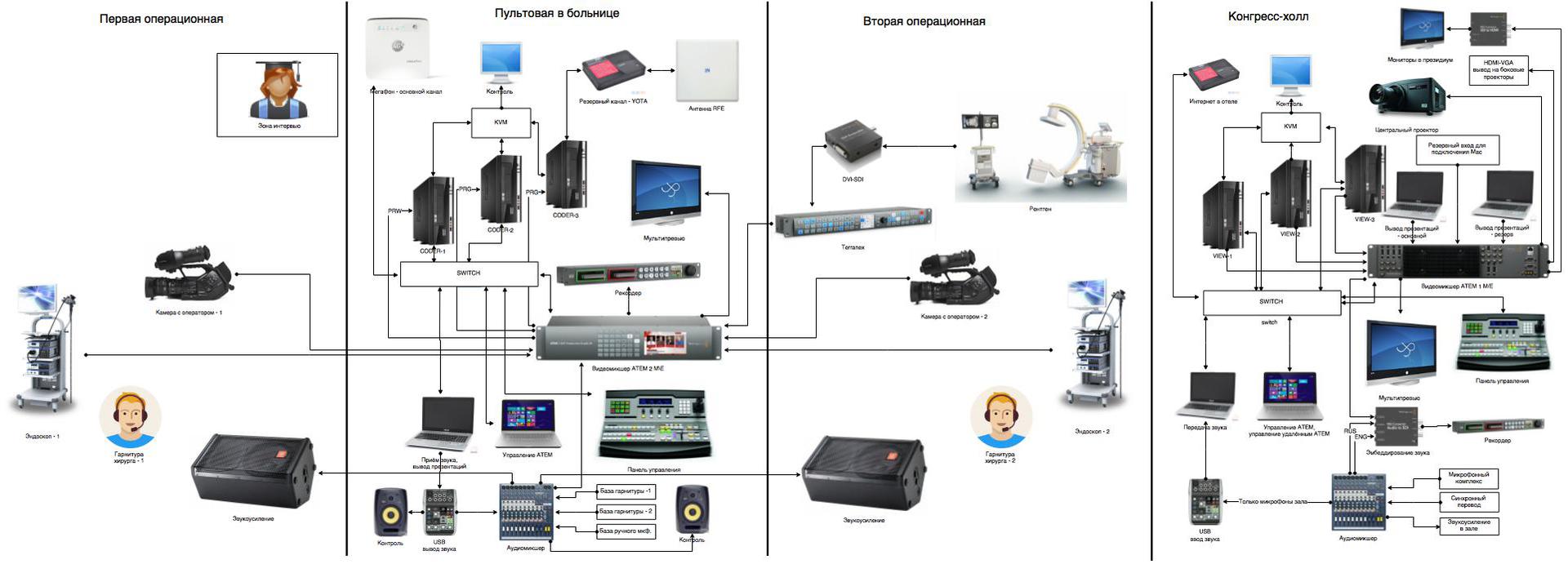

P.S. For fans of uncomplicated labyrinths: a switching block diagram from one of our medical projects.

Source: https://habr.com/ru/post/267269/
