Inventing servers - Open Compute Project

Launched by Facebook in 2011, the Open Compute Project (OCP) develops open standards and hardware architectures for building energy-efficient, cost-effective data centers. OCP grew out of the hardware designed for Facebook's data center in Prineville, Oregon. Facebook then decided to open up the architecture, including server boards, power supplies, server chassis and racks, and published the OCP specifications with recommendations for compact, energy-efficient rack-mount server designs and cooling methods.

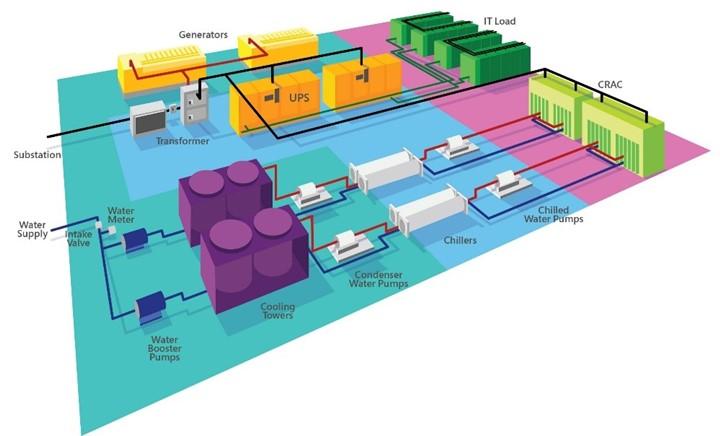

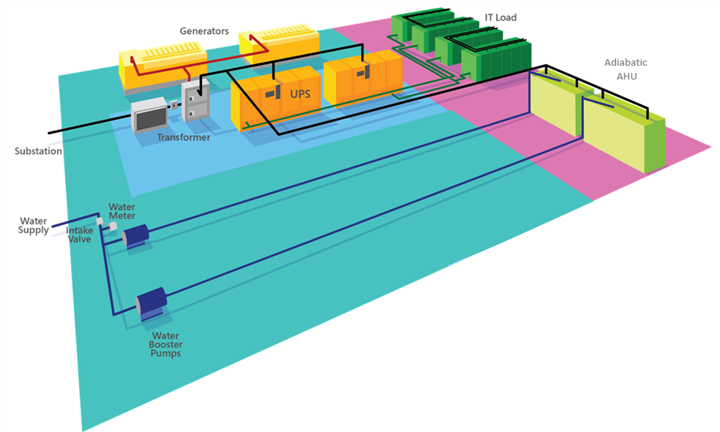

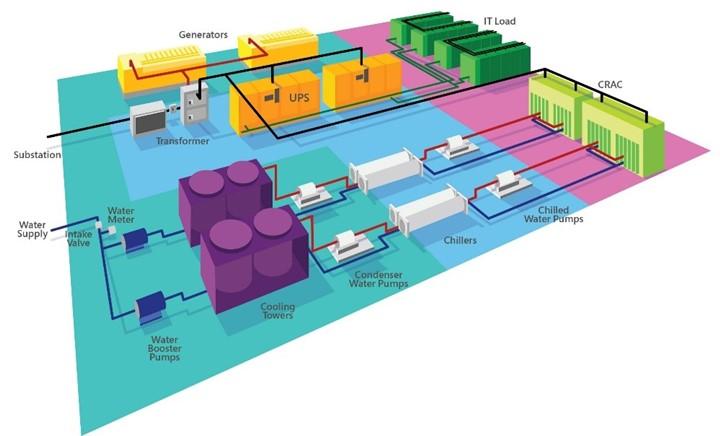

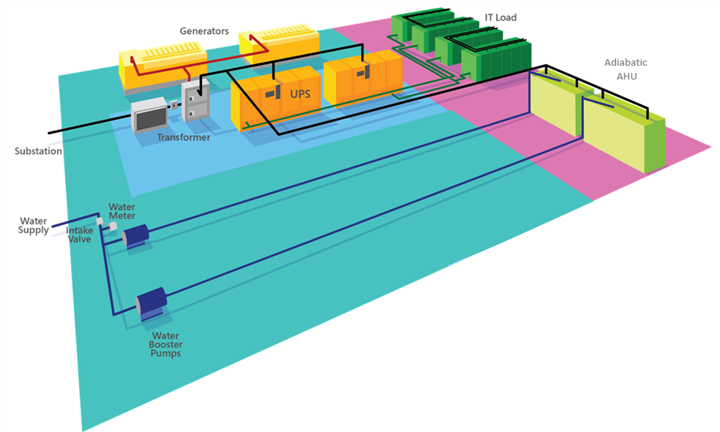

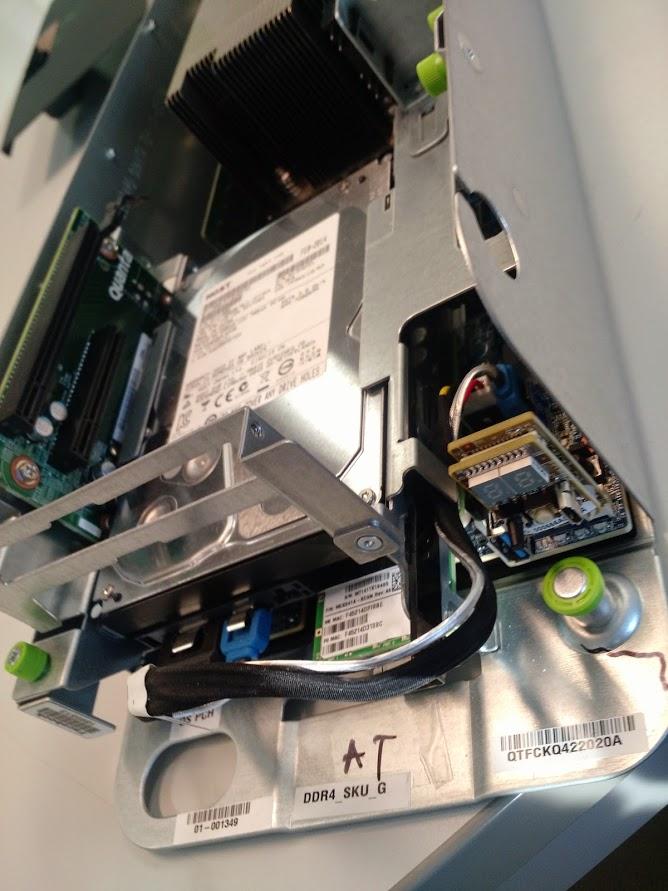

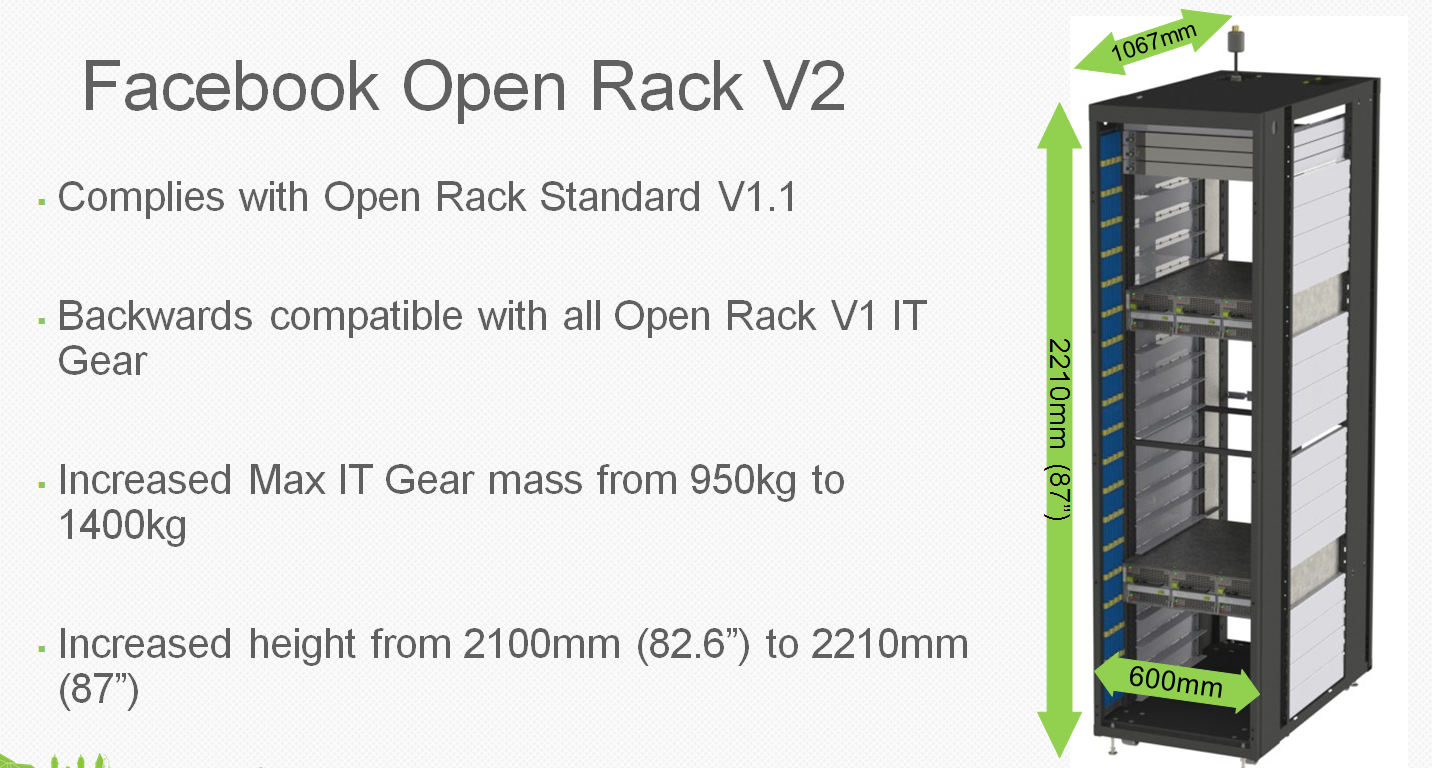

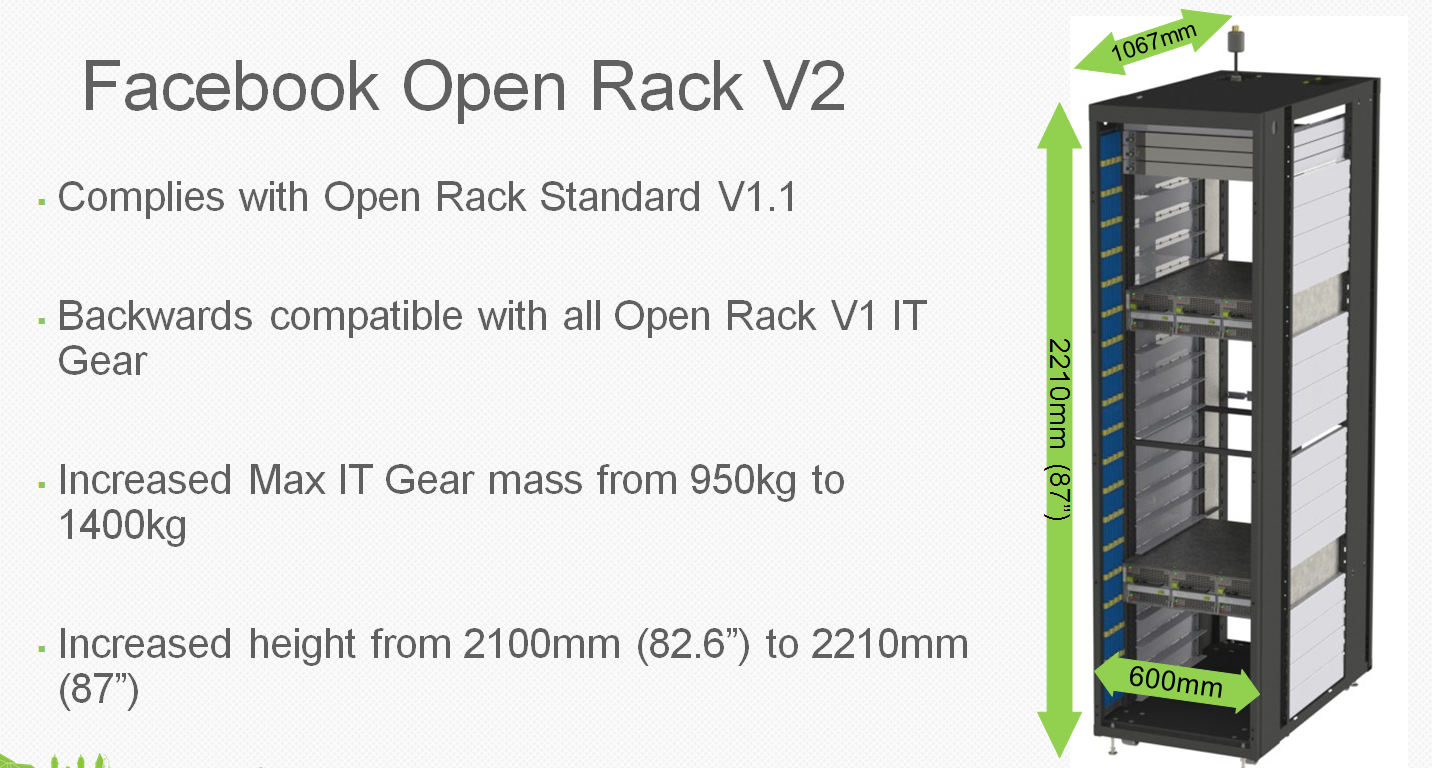

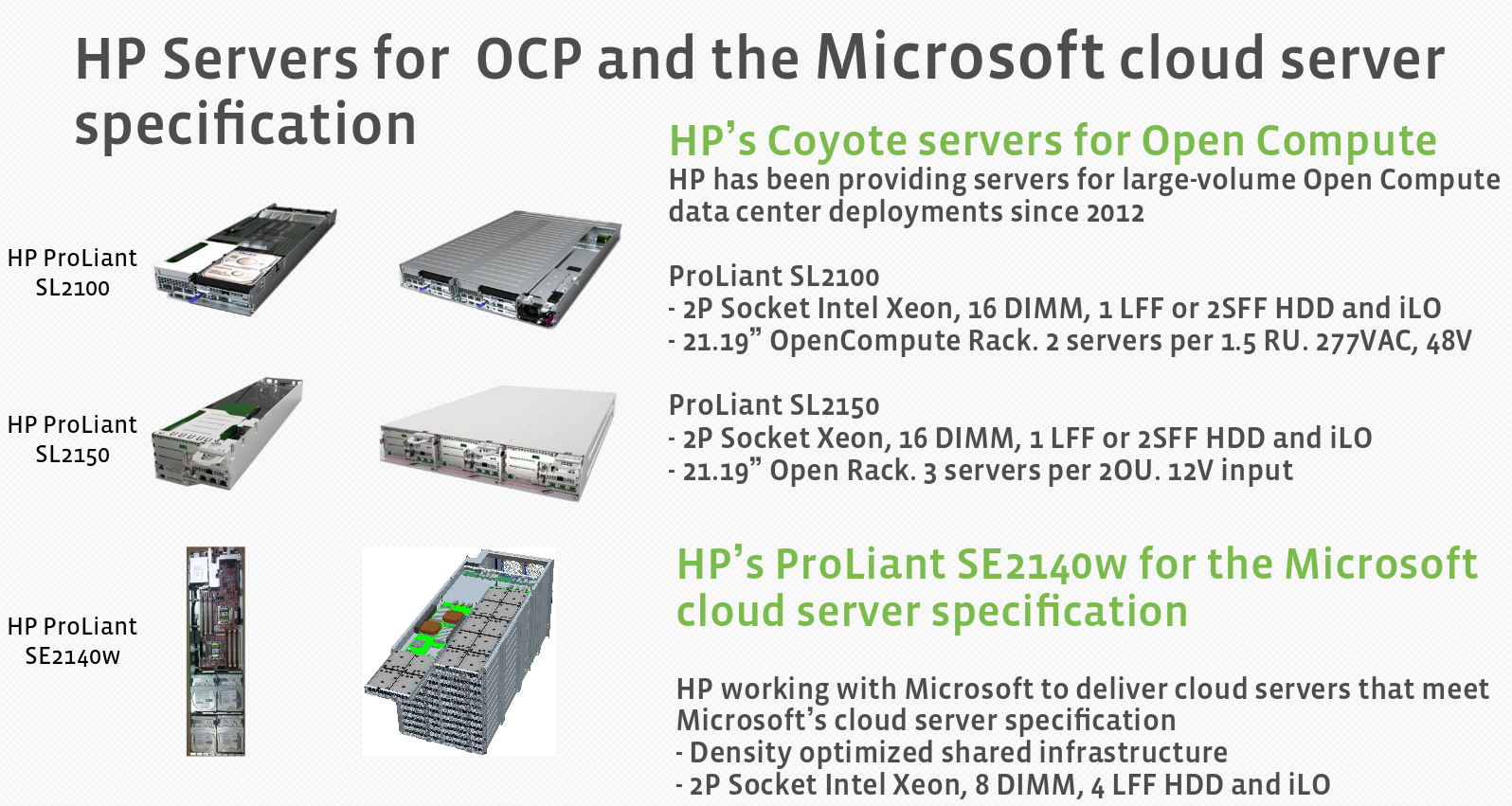

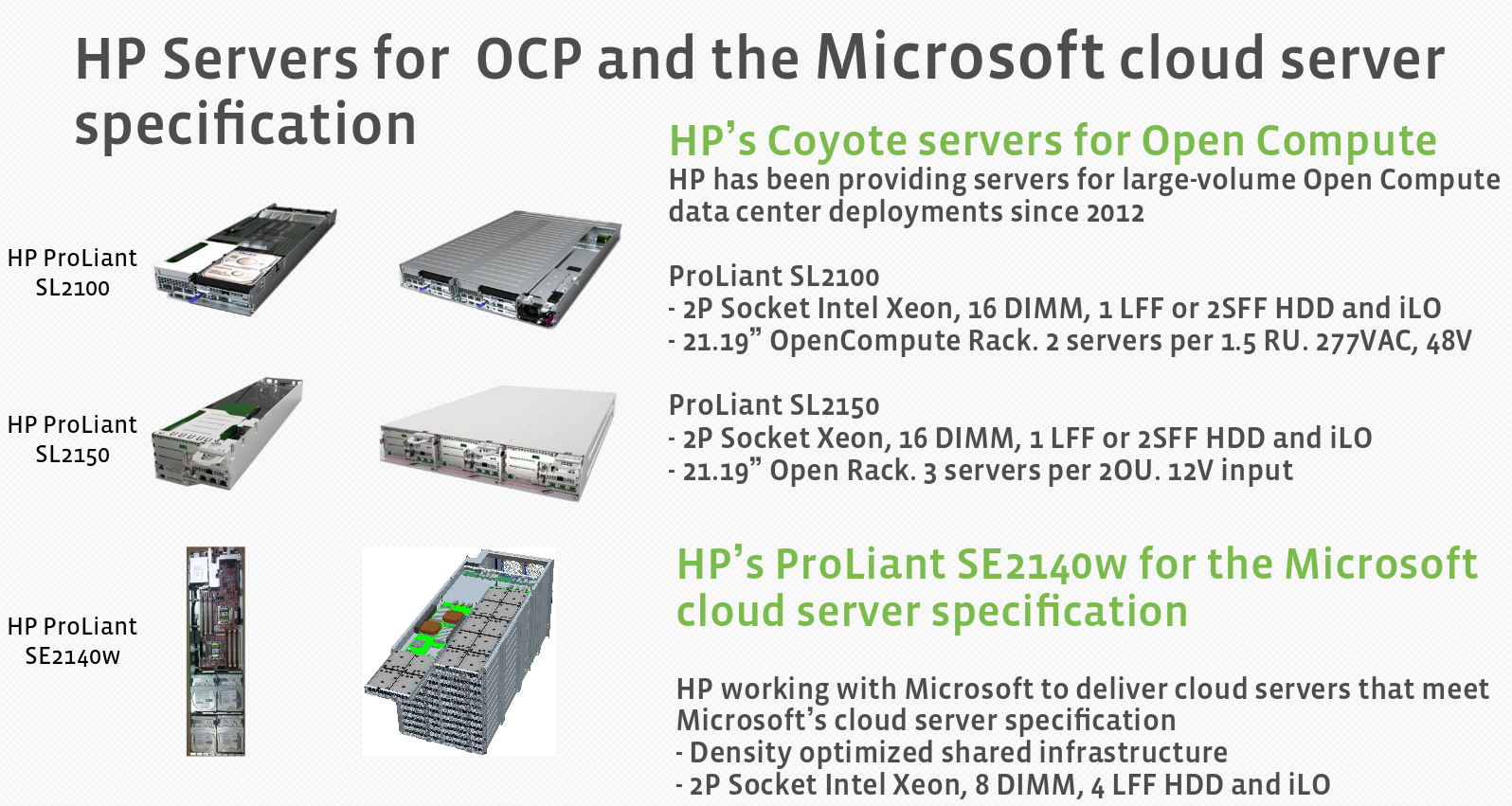

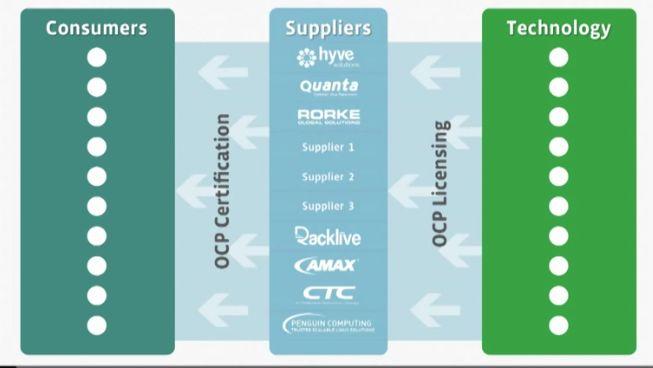

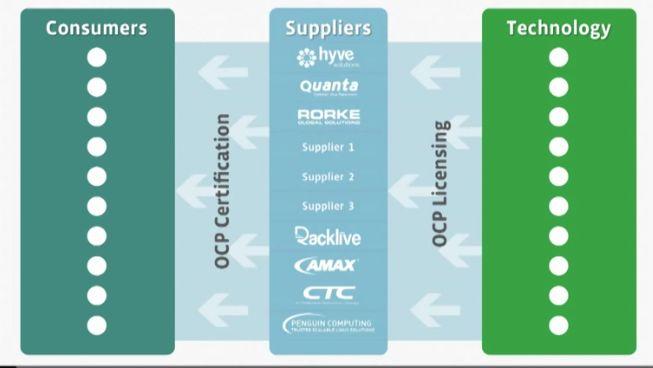

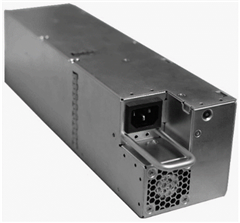

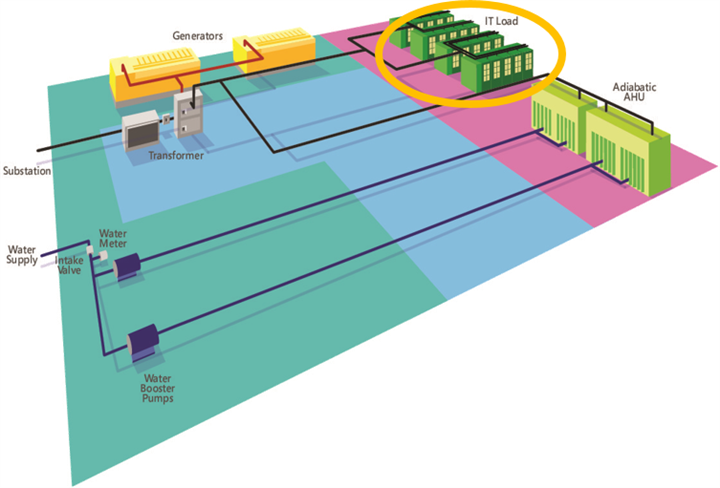

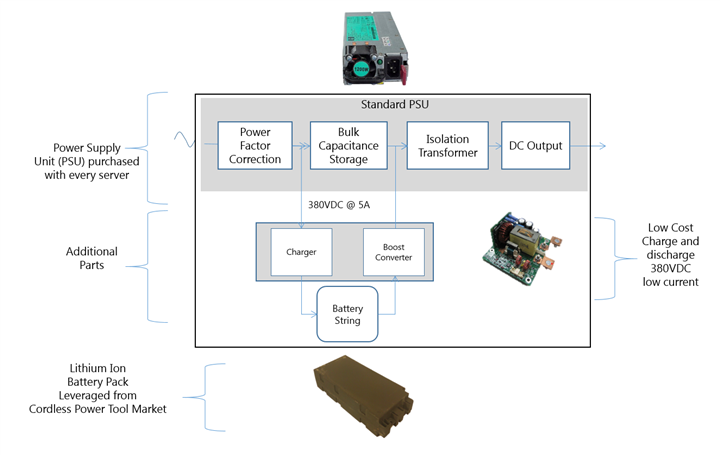

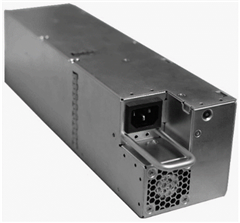

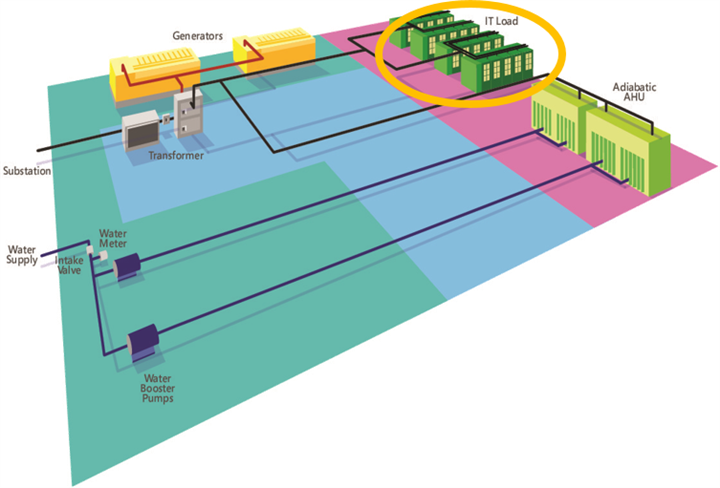

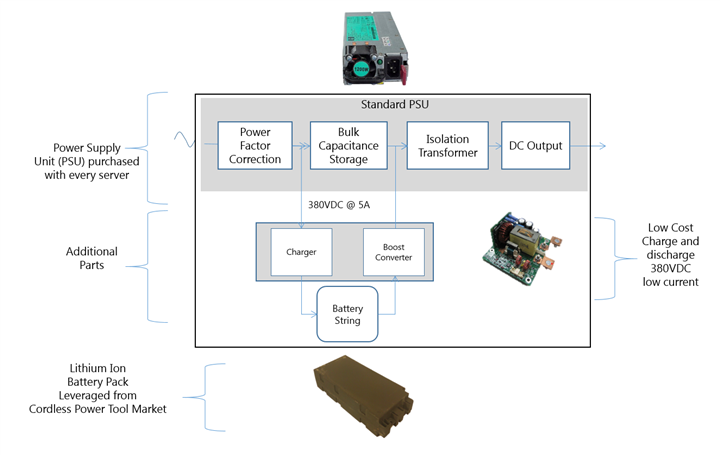

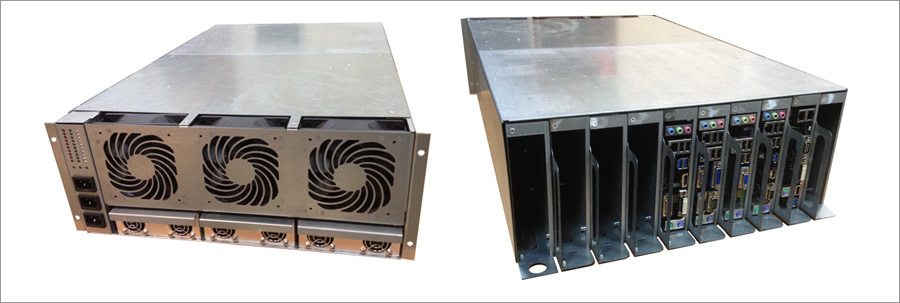

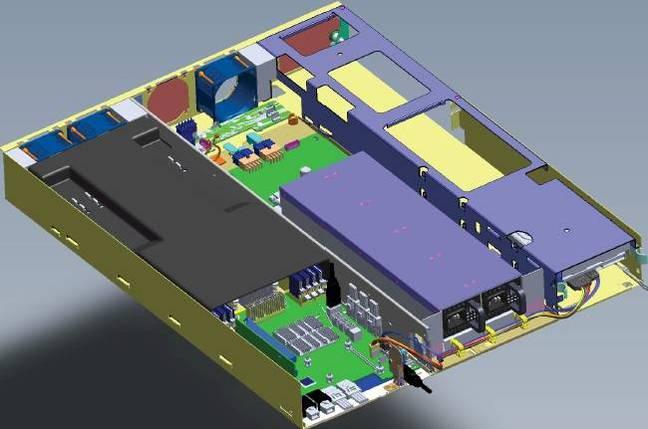

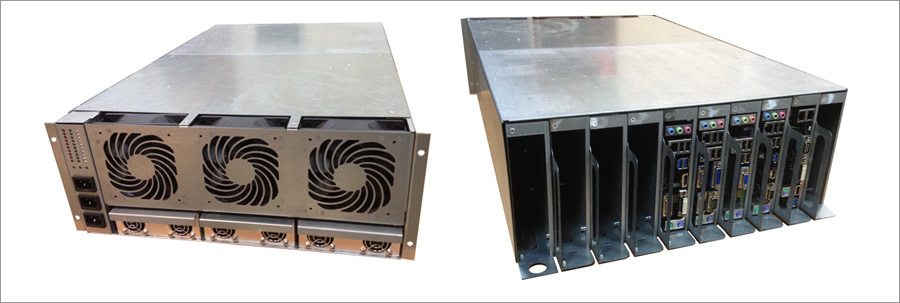

At HOSTKEY we also build our own servers to rent out as dedicated servers: conventional hardware simply does not let us offer customers low-budget machines. After many attempts, our 4U platform, code-named Aero10, has gone into pilot production. Systems built on it fully cover the demand for microservers with 2-4-core processors.

We use standard mini-ITX motherboards. Everything else, from the chassis to the power distribution electronics and control circuitry, is our own design and is made locally in Moscow. For now we still use Chinese-made power supplies: Mean Well RSP-1000-12 units (12 V) with load sharing and hot swapping.

The platform hosts dedicated microservers on Celeron J1800 (2 x 2.4 GHz), Celeron J1900 (4 x 2.0 GHz), Core i3-4360 (2 x 3.7 GHz) and the flagship Core i7-4790 (4 x 3.6 GHz). For the mid-range segment, we build servers on the Xeon E3-1230 v3 with a remote management module on the same platform, using ASUS P9D-I motherboards.

This approach lets us cut capital expenditure by up to 50% and electricity consumption by 20-30%, and it leaves room for further development. As a result, we can offer our clients competitive prices on the Russian market even without the loans, leasing, three-year installment plans and other financing instruments that are available to us in the Netherlands.

The project already includes a version with 19 mini-ITX blades in the same 4U, and we keep improving the power and cooling systems. In the future, running such servers will not require a traditional data center.

We will describe this platform and our other developments in detail soon. Subscribe to follow the news.

Below the cut, we look at what these servers are made of, how they work and what they deliver.

The Open Compute Project owes its existence to Frank Frankovsky, a Facebook technical director. He launched the initiative that let the industry not only study the design of Facebook's Oregon data center but also contribute to the further development of the new architecture. The ultimate goals are to improve data centers, build an ecosystem around them, and create more efficient and cost-effective servers.

In general terms, the idea resembles the open-source software community, where developers jointly create and improve their products. The project attracted enough interest that large companies backed it: OCP now has more than 150 members.

Server and storage architectures are designed according to the OCP Open Rack specifications, which cover hardware components such as motherboards and power supply components. The project also develops standards, in particular management standards. Last year OCP gained new members: IBM, Microsoft, Yandex, Box.net and many other well-known companies now participate in Open Compute.

Make it cheaper or you lose

If the OCP architecture becomes the de facto standard for data centers, it can simplify system deployment and management. But the main benefit is cost savings, which let providers offer customers cheaper services and win in a highly competitive market. OCP aims to increase MTBF and server density, simplify maintenance by allowing access from the cold aisle, and improve energy efficiency, which matters most to companies that operate thousands of servers.

For example, Facebook's Oregon data center consumes 38% less electricity than the company's other data centers. It uses chiller-free adiabatic cooling and reaches a PUE of 1.07-1.08 (without water cooling), compared with an industry average of about 1.5. Capital expenditures were also cut by a quarter.
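
PUE is total facility energy divided by the energy consumed by IT equipment, so everything above 1.0 is overhead (cooling, power conversion, lighting). Here is a minimal sketch that turns the PUE figures above into overhead per unit of IT load. The 1,000 kWh IT load is a hypothetical number chosen for illustration.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def overhead_kwh(it_kwh: float, pue_value: float) -> float:
    """Energy spent outside the IT equipment for a given IT load and PUE."""
    return it_kwh * (pue_value - 1.0)


if __name__ == "__main__":
    it_load = 1000.0  # hypothetical IT energy, kWh
    for label, value in [("Prineville, upper bound", 1.08), ("industry average", 1.5)]:
        print(f"{label}: PUE {value}, overhead {overhead_kwh(it_load, value):.0f} kWh")
```

At a PUE of 1.08 the overhead is about 80 kWh per 1,000 kWh of IT load. At 1.5 it is about 500 kWh.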

Open Compute servers have a lightweight, stripped-down design and can operate at elevated temperatures. They are much lighter than a conventional server but taller: the chassis is 1.5U instead of 1U, which leaves room for taller heat sinks and more efficient fans. The modular design gives easy access to all components (processors, disks, network cards and memory modules), and no tools are needed for servicing.

By 2014, OCP specifications covered a whole range of “open” hardware, from servers to data center infrastructure. The number of companies using OCP grew as well. Many OCP innovations turned out to suit not only large data centers but also private and public cloud deployments.

This year Facebook introduced several more developments within OCP. In particular, it designed Yosemite, a “server on a chip” (SoC) platform, jointly with Intel, while Accton and Broadcom took part in the Wedge switch project.

From Freedom to Leopard

The technologies created within the project allowed Facebook to save more than $2 billion over three years. Note, however, that savings this large also came from software optimization, and five standard platforms were developed, each for its own type of application.

Today these are mostly variants of Facebook's Leopard platform with Xeon E5 processors. Leopard evolved from earlier server generations such as Windmill, released in 2012 and based on Intel Sandy Bridge-EP, and its AMD Opteron 6200/6300 counterpart, Watermark.

Generations of Facebook OCP servers

| | Freedom (Intel) | Freedom (AMD) | Windmill (Intel) | Watermark (AMD) | Winterfell | Leopard |
|---|---|---|---|---|---|---|
| Platform | Westmere-EP | Interlagos | Sandy Bridge-EP | Interlagos | Sandy Bridge-EP / Ivy Bridge-EP | Haswell-EP |
| Chipset | 5500 | SR5650 / SP5100 | C602 | SR5650 / SR5670 / SR5690 | C602 | C226 |
| CPU models | X5500 / X5600 | Opteron 6200/6300 | E5-2600 | Opteron 6200/6300 | E5-2600 v1 / v2 | E5-2600 v3 |
| Sockets | 2 | 2 | 2 | 2 | 2 | 2 |
| TDP, W | 95 | 85 | 115 | 85 | 115 | 145 |
| Memory per socket | 3x DDR3 | 12x DDR3 | 8x DDR3 | 8x DDR3 | 8x DDR3 | 8x DDR4 / NVDIMM |
| Server node width, approx. (in) | 21 | 21 | 8 | 21 | 6.5 | 6.5 |
| Form factor (U) | 1.5 | 1.5 | 1.5 | 1.5 | 2 | 2 |
| Fans per node | 4 | 4 | 2 | 4 | 2 | 2 |
| Fan size (mm) | 60 | 60 | 60 | 60 | 80 | 80 |
| 3.5-inch drive bays | 6 | 6 | 6 | 6 | 1 | 1 |
| Disk interface | SATA II | SATA II | SATA III | SATA III | SATA III / RAID HBA | SATA III / M.2 |
| DIMM slots per socket | 9 | 12 | 9 | 12 | 8 | 8 |
| DDR generation | 3 | 3 | 3 | 3 | 3 | 4 |
| Ethernet | 1 GbE onboard | 2 GbE onboard | 2 GbE onboard + PCIe mezzanine | 2 GbE onboard | 1 GbE onboard + PCIe x8 mezzanine | PCIe x8 mezzanine |
| Where deployed | Oregon | Oregon | Sweden | Sweden | Pennsylvania | ? |
| PSU model | PowerOne SPAFCBK-01G | PowerOne SPAFCBK-01G | PowerOne | PowerOne | - | - |
| Number of PSUs | 1 | 1 | 1 | 1 | - | - |
| PSU power (W) | 450 | 450 | 450 | 450 | - | - |
| Number of nodes | 1 | 1 | 2 | 2 | 3 | 3 |
| BMC | No (Intel RMM) | No | No (Intel RMM) | No | No (Intel RMM) | Yes (Aspeed AST1250 with 1GB Samsung DDR3 DIMM K4B1G1646G-BCH9) |

One drawback of the Freedom servers is that they have no redundant power supply unit (PSU). Adding a second PSU to every server would raise not only CAPEX but also OPEX, because in active/passive mode the standby PSU still draws power.

The logical step was to pool the power supplies of several servers. This is what the Open Rack v1 architecture does: the PSUs sit on 12.5 V DC power shelves, each feeding a 4.2 kW power zone.

Each zone has its own power shelf, 3 OU high (OpenU; 1 OU = 48 mm). The power supplies are redundant in a 5+1 configuration and take up 10 OU per rack in total. When power consumption is low, some of the PSUs switch off automatically so that the remaining ones run at their optimal load.
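
The automatic shutdown of lightly loaded PSUs amounts to choosing how many units to keep on for the current zone load. This is a minimal sketch under stated assumptions. The 840 W rating is simply 4.2 kW divided by the five working units of a 5+1 zone, because the text gives no PSU rating. `target_util` is a hypothetical stand-in for the load at which a PSU is most efficient. Real power shelves implement this in their own firmware with their own thresholds.

```python
import math


def active_psus(load_w: float, psu_rating_w: float, installed: int,
                target_util: float = 0.6, spares: int = 1) -> int:
    """Number of PSUs to keep running in one power zone.

    Enough units run to carry the load at no more than target_util of
    their rating, plus `spares` for redundancy; the rest may be shut off.
    target_util is a hypothetical efficiency sweet spot, not a spec value.
    """
    if load_w < 0 or psu_rating_w <= 0 or installed < 1 or not 0 < target_util <= 1:
        raise ValueError("invalid parameters")
    needed = max(1, math.ceil(load_w / (psu_rating_w * target_util)))
    return min(installed, needed + spares)


if __name__ == "__main__":
    # Hypothetical rating: 4.2 kW zone / 5 working PSUs = 840 W each.
    for load in (500, 1500, 3000, 4200):
        print(load, "W ->", active_psus(load, 840, installed=6), "PSUs on")
```

With these assumptions, a light 500 W load keeps two units on (one working, one spare). At 3 kW and above, all six run.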

The power distribution system was improved as well. There are no power cables to unplug every time a server is serviced: power is delivered by vertical bus bars in each zone, and when a server slides into the rack, the power connector on its back engages the bus bar. A separate 2 OU bay is reserved for switches.

The Open Rack specifications call for 48U-high racks, which improves airflow through the equipment and makes it easier for technicians to reach. The rack is 24 inches wide, with a 21-inch equipment bay, 2 inches wider than a standard 19-inch rack. This makes it possible to fit three motherboards or five 3.5-inch disks side by side.
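
A quick calculation shows why the extra two inches matter. This is a minimal sketch that assumes the approximate 6.5-inch node width the generations table gives for Winterfell and Leopard, and it treats width as the only constraint, ignoring rails and side walls. It also converts Open Rack units (48 mm) to standard 1.75-inch (44.45 mm) rack units.

```python
import math

OU_MM = 48.0   # Open Rack unit (OpenU), as stated in the text
U_MM = 44.45   # standard EIA rack unit: 1.75 in


def nodes_across(bay_width_in: float, node_width_in: float) -> int:
    """Nodes that fit side by side, ignoring rails, walls and tolerances."""
    return math.floor(bay_width_in / node_width_in)


def ou_in_u(ou: float) -> float:
    """Express a height in Open Rack units as standard rack units."""
    return ou * OU_MM / U_MM


if __name__ == "__main__":
    node = 6.5  # approximate Winterfell/Leopard node width, inches
    print("21-inch bay:", nodes_across(21, node), "nodes")
    print("19-inch bay:", nodes_across(19, node), "nodes")
    print("3 OU power shelf =", round(ou_in_u(3), 2), "U")
```

With 6.5-inch nodes, a 21-inch bay takes three across, while a 19-inch bay takes only two. A 3 OU power shelf is roughly 3.24 standard U tall.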

OCP Knox and others

Open Rack v1 required a new server design. A Freedom chassis without its PSU left a lot of empty space, and filling it with 3.5" HDDs would be wasteful, since most Facebook workloads did not need that many disks. The solution mirrored the one used for power: the disks were pooled and moved out of the server nodes. That is how the Knox storage system was born.

Generally speaking, OCP Knox is an ordinary JBOD disk shelf built for Open Rack. It connects to the HBAs of neighboring Winterfell server nodes. Unlike standard 19-inch enclosures, it holds 30 3.5-inch drives and is very easy to service: to replace a disk, the tray slides forward, the relevant compartment opens, the disk is swapped, and everything slides back.

Seagate developed its own “Ethernet storage device” specification, known as Seagate Kinetic. These drives act as object storage connected directly to the data network. A new chassis, BigFoot Storage Object Open, was also developed for these disks, with twelve 10GbE ports in a 2 OU enclosure.

At Facebook, a similar idea led to Honey Badger, a Knox variant for storing images. It uses Panther+ compute nodes based on the Intel Avoton SoC (C2350 and C2750), with four DDR3 SODIMM slots and mSATA/M.2 SATA3 interfaces.

Such a system can work without a head node, which is usually a Winterfell server (Facebook does not plan to use Leopard servers as Knox head nodes). A slightly modified Knox was also used for archival storage: its disks and fans start only when needed.

Another Facebook archival system built on Open Rack holds 24 magazines of 36 cartridges, with 12 Blu-ray discs in each cartridge, for a total capacity of 1.26 PB. Blu-ray discs retain data for 50 years or more. The system works like a jukebox.
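
The 1.26 PB figure can be cross-checked against the disc count. The text does not state the capacity of each disc. Assuming 128 GB quad-layer BDXL discs counted in binary units (an assumption the source does not confirm), the numbers line up:

```python
def disc_count(magazines: int, cartridges_per_magazine: int, discs_per_cartridge: int) -> int:
    """Total number of discs in the archive."""
    return magazines * cartridges_per_magazine * discs_per_cartridge


def capacity_pib(discs: int, disc_gib: float) -> float:
    """Total capacity in binary petabytes (PiB) for discs sized in GiB."""
    return discs * disc_gib / 1024 ** 2


if __name__ == "__main__":
    discs = disc_count(24, 36, 12)
    # 128 GiB per disc is an assumption, not a figure from the text.
    print(discs, "discs,", capacity_pib(discs, 128), "PiB")
```

24 x 36 x 12 gives 10,368 discs, and 10,368 x 128 GiB is exactly 1.265625 PiB, consistent with the quoted 1.26 PB.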

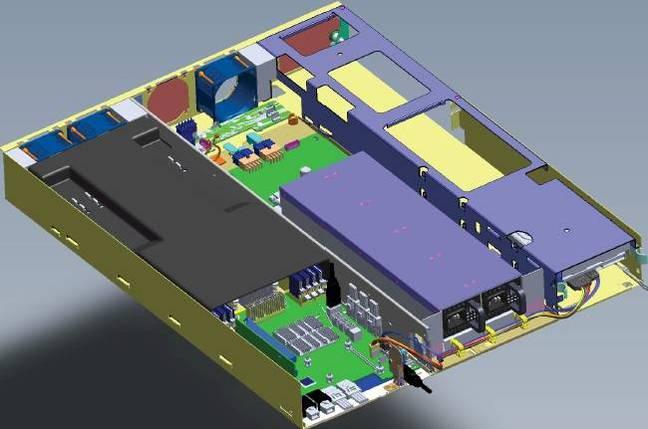

Winterfell Servers

Without its PSU, the Freedom chassis is essentially just a motherboard, a fan and a boot disk, so Facebook engineers created a more compact form factor, Winterfell. It resembles a Supermicro twin-node server, but three nodes fit on an ORv1 shelf. Each 2 OU Winterfell node contains a modified Windmill motherboard, a bus bar power connector and a backplane that carries power and fan connections to the motherboard. The motherboard accepts a full-size PCIe x16 card and a half-size x8 card, plus a PCIe x8 network interface card. The boot disk connects via SATA or mSATA.

Open Rack v2

Deploying ORv1 showed that three power zones with three bus bars each were overkill, because that much power simply was not needed. The new Open Rack v2 has two power zones instead of three, with one bus bar per zone, and the switch bay has grown to 3 OU.

The changes to the power architecture made ORv2 incompatible with Winterfell, which led to a new design, Project Cubby. Cubby is essentially a Supermicro TwinServer-style chassis, but instead of two PSUs built into each server module, it draws power from the bus bar. Each power zone uses three 3.3 kW PSUs (2+1) instead of six and provides 6.3 kW. The bottom of the rack can hold three Battery Backup Units (BBUs) for use during power failures.
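
The 2+1 arrangement can be sanity-checked with a simple N+1 test. This is a minimal sketch that reads the figures above as three 3.3 kW PSUs feeding a 6.3 kW zone, which is our interpretation of the text. If one unit fails, the two survivors still provide 6.6 kW.

```python
def survives_one_failure(psu_kw: float, installed: int, zone_load_kw: float) -> bool:
    """N+1 check: can the remaining PSUs still carry the zone if one unit fails?"""
    if installed < 2:
        return False  # a single PSU offers no redundancy
    return (installed - 1) * psu_kw >= zone_load_kw


if __name__ == "__main__":
    # Open Rack v2 zone as read from the text: three 3.3 kW PSUs (2+1), 6.3 kW load.
    print(survives_one_failure(3.3, 3, 6.3))
```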

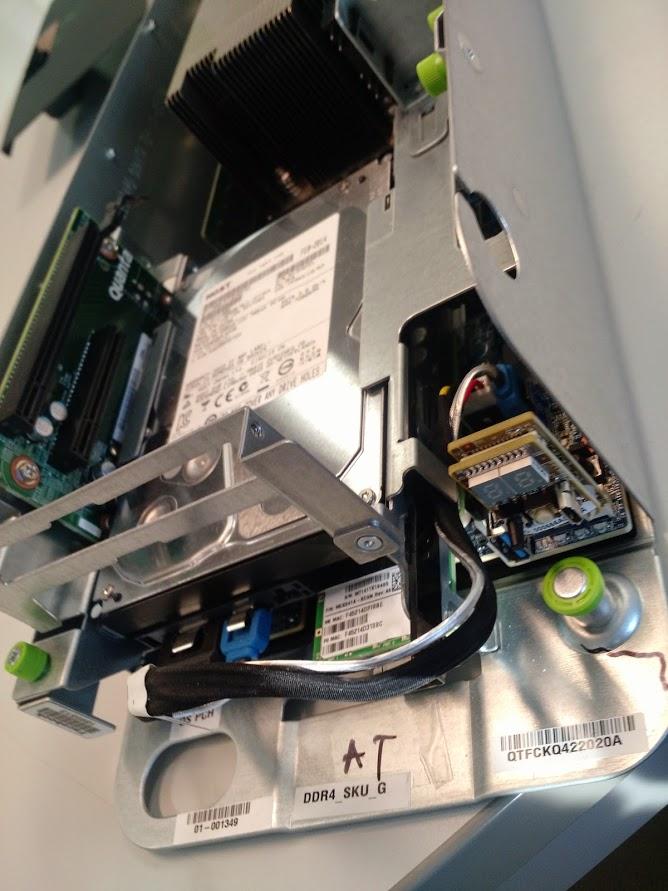

Leopard Servers

So, Leopard. It is the latest evolution of Windmill, with the Intel C226 chipset and support for up to two Haswell-generation Xeon E5-2600 v3 processors.

Enlarged CPU heat sinks and good airflow allow processors with a TDP of up to 145 W, which covers the entire Xeon E5-2600 v3 family except the 160 W E5-2687W v3. Each processor has 8 DIMM slots, and DDR4 will eventually allow 128 GB modules, for up to 2 TB of RAM, which is more than enough for Facebook. NVDIMM modules (DIMMs backed by flash memory) can also be used, and Facebook is testing this option.
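
The 2 TB figure is straightforward arithmetic: two sockets, eight DIMM slots per socket (as in the generations table) and 128 GB modules. This is a minimal sketch; the 128 GB module size is the future option mentioned above.

```python
def max_ram_gb(sockets: int, dimm_slots_per_socket: int, dimm_gb: int) -> int:
    """Maximum memory when every slot holds the largest module."""
    return sockets * dimm_slots_per_socket * dimm_gb


if __name__ == "__main__":
    total = max_ram_gb(sockets=2, dimm_slots_per_socket=8, dimm_gb=128)
    print(total, "GB =", total // 1024, "TB")  # binary terabytes
```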

Other changes include the removal of the external PCIe connector, support for a mezzanine card with two QSFP+ ports, an mSATA/M.2 slot for SATA or NVMe drives, and eight more PCIe lanes for an add-in card, for 24 lanes in total. There is no SAS connector, since Leopard is not used as a Knox head node.

An important addition is the Baseboard Management Controller (BMC), an Aspeed AST1250 with IPMI and Serial over LAN access. The BMC can remotely update CPLD, VR, BMC and UEFI firmware, and it also provides power management for controlling PSU load.
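
Because the BMC speaks standard IPMI, generic tools such as the open-source ipmitool can talk to it. This is a minimal sketch that only builds command lines for a power-status query and a Serial over LAN session; it does not run them. The address and credentials are hypothetical, and the sketch assumes the BMC firmware has IPMI over LAN enabled. In practice, avoid passing passwords on the command line.

```python
import shlex


def ipmitool_cmd(host: str, user: str, password: str, *action: str) -> list[str]:
    """Build (but do not run) an ipmitool command using the IPMI v2.0 lanplus interface."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password, *action]


if __name__ == "__main__":
    bmc = "192.0.2.10"  # hypothetical BMC address (documentation range)
    # Query the power state, then open a Serial over LAN console.
    print(shlex.join(ipmitool_cmd(bmc, "admin", "secret", "chassis", "power", "status")))
    print(shlex.join(ipmitool_cmd(bmc, "admin", "secret", "sol", "activate")))
```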

Certified Solutions

OCP equipment is usually built to order, but there are also “retail” versions of Leopard, and manufacturers offer their own modifications. Examples include Quanta QCT cloud servers and WiWynn systems with more disk bays. HP, Microsoft and Dell have also joined in.

As the number of Open Compute equipment suppliers grew, it became necessary to ensure that they follow the accepted specifications. There are two certifications: OCP Ready and OCP Certified. The first means that the equipment meets the specifications and can operate in an OCP environment. The second is granted by dedicated testing organizations, of which there are just two: one at the University of Texas at San Antonio (UTSA) in the US and one at the Industrial Technology Research Institute (ITRI) in Taiwan. WiWynn and Quanta QCT were the first vendors to certify their equipment.

OCP innovations are gradually being introduced in data centers and standardized, becoming available to a wide range of customers.

Open technologies let customers combine any compute nodes, storage systems and switches in a rack, using off-the-shelf or their own components. There is no lock-in to a particular switching platform: switches from any vendor can be used.

Microsoft Innovation

Microsoft published detailed specifications of its Open Compute servers and even released the source code of its infrastructure management software, which includes server diagnostics, cooling monitoring and power management functions. Its contribution to OCP is the Open Cloud Server architecture. These servers are optimized for Windows Server and built to meet the high availability, scalability and efficiency requirements of the Windows Azure cloud platform.

According to Microsoft, server costs dropped by almost 40%, energy efficiency improved by 15%, and infrastructure can be deployed 50% faster.

At the Open Compute Project (OCP) Summit in the US, Microsoft showed another interesting development: a distributed UPS technology called Local Energy Storage (LES). It combines a power module and a battery and is compatible with the Open CloudServer (OCS) v2 chassis. The new LES modules are interchangeable with the previous PSUs, so the PSU type can be chosen to suit the data center topology and backup power requirements. Traditionally, UPSs sit in a separate room and use lead-acid batteries to back up the IT equipment. This approach is inefficient for several reasons. It takes up a lot of floor space, and energy is lost in AC/DC and DC/AC conversions. Double conversion and battery charging increase the data center PUE by up to 17%, reliability goes down, and operating costs go up.

Switching to adiabatic cooling can reduce costs and simplify operations.

But how can the power distribution system and the UPS be improved? Can they be radically simplified? Microsoft decided to abandon the separate UPS room and move the power modules closer to the IT load, while integrating the battery system with IT management. That is how LES came about.

In the LES topology, the PSU design was changed: components such as batteries, a battery management controller and a low-voltage charger were added.
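To make the roles of these added components concrete, here is a toy model in Python. It is a hypothetical sketch, not Microsoft's design or firmware: the class name `LesPsu`, the capacity and charger figures are invented for illustration, and conversion and charging losses are ignored. While AC is present the PSU feeds the load and the charger tops up the battery; when AC is lost, the battery management controller switches the load to the battery until it is depleted.

```python
from dataclasses import dataclass


@dataclass
class LesPsu:
    """Toy LES-style PSU: a regular PSU plus a battery, a battery management
    controller and a low-voltage charger. All figures are hypothetical,
    and conversion/charging losses are ignored."""
    battery_capacity_wh: float
    charge_wh: float
    charger_w: float  # maximum charger power (hypothetical)

    def step(self, ac_present: bool, load_w: float, hours: float) -> str:
        """Advance the model by `hours`; return which source fed the load."""
        if ac_present:
            # Normal operation: the PSU converts AC and feeds the load,
            # while the charger tops up the battery within its power limit.
            room_wh = self.battery_capacity_wh - self.charge_wh
            self.charge_wh += min(room_wh, self.charger_w * hours)
            return "ac"
        # AC lost: the battery management controller moves the load
        # onto the battery for as long as the stored energy lasts.
        needed_wh = load_w * hours
        if self.charge_wh >= needed_wh:
            self.charge_wh -= needed_wh
            return "battery"
        # Battery depleted before the interval ended: the load loses power.
        self.charge_wh = 0.0
        return "off"


psu = LesPsu(battery_capacity_wh=100.0, charge_wh=50.0, charger_w=30.0)
print(psu.step(True, 300.0, 1.0))    # charger adds 30 Wh -> 80 Wh stored
print(psu.step(False, 200.0, 0.25))  # 50 Wh drawn from the battery
print(psu.step(False, 200.0, 0.25))  # only 30 Wh left, not enough
```

The point of the sketch is locality: each module carries its own backup, so a battery failure affects only the server it sits in rather than a whole room's UPS.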

The batteries are lithium-ion, like those used in electric vehicles. In other words, the LES developers took standard PSU components and ordinary off-the-shelf batteries and combined them in a single module. What does this achieve? According to Microsoft:

- Costs are up to five times lower than with a traditional UPS, the data center power system is greatly simplified, and energy storage is handled by commercially available batteries.

- Moving the battery into the server eliminates the 9% loss typical of conventional UPS systems. Lithium-ion batteries lose only 2% in charging, whereas lead-acid batteries lose up to 8%, plus another 1% in the power supply. As a result, PUE decreases.

- Data center floor space is reduced by 25%, which is a substantial saving in capital costs.

- Maintenance is greatly simplified: LES modules are easy to replace and contain no acid. The consequences of a failure are minimized and localized.
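To see how these loss figures feed into PUE (total facility power divided by IT equipment power), here is a minimal Python sketch. The 1000 kW IT load and 200 kW of other overhead (cooling, lighting and so on) are hypothetical placeholders, and the quoted loss percentages are treated as a continuous loss in the power path, which is a simplifying assumption.

```python
def pue(it_load_kw: float, path_loss: float, other_overhead_kw: float) -> float:
    """PUE = total facility power / IT equipment power.

    path_loss: fraction of upstream power lost in the UPS/battery path,
    treated as a continuous loss (a simplification).
    other_overhead_kw: cooling, lighting, etc. lumped together.
    """
    # Power that must enter the path so that it_load_kw reaches the IT gear.
    upstream_kw = it_load_kw / (1.0 - path_loss)
    return (upstream_kw + other_overhead_kw) / it_load_kw


# Hypothetical facility: 1000 kW of IT load, 200 kW of other overhead.
central_ups = pue(1000.0, 0.09, 200.0)  # 9% loss quoted for a conventional UPS
les = pue(1000.0, 0.02, 200.0)          # 2% charging loss quoted for Li-ion
print(f"{central_ups:.3f} -> {les:.3f}")
```

With these placeholder numbers it prints `1.299 -> 1.220`; the absolute values depend entirely on the assumed overhead, but the direction of the change is what the bullet points describe.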

Open Compute Project innovations are changing the data center and cloud services market by standardizing server designs and making them cheaper to develop. This is just one of many examples. In the next articles in this series you will learn about other interesting solutions.

We at HOSTKEY also build our own servers to rent out as dedicated servers, because traditional solutions make it technically impossible to offer low-budget machines to customers. After many attempts, the 4U platform codenamed Aero10 has entered pilot production: solutions on this platform fully meet the need for microservers with 2- to 4-core processors.

We use standard mini-ITX motherboards; everything else is our own design, from the chassis to the power distribution electronics and control circuitry, and all of it is made locally in Moscow. For now we still use Chinese power supplies: 12 V Mean Well RSP-1000-12 units with load sharing and hot swapping.
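Load sharing and hot swapping together imply a simple sizing rule: paralleled supplies split the load between them, and there must be enough spare capacity to pull one unit without dropping the chassis. The Python sketch below illustrates that N+1 arithmetic under stated assumptions: ideal current sharing, a hypothetical 16 boards at 40 W each, and a placeholder 700 W rating per supply (the real rating and derating rules must come from the Mean Well datasheet). The function names are illustrative, not part of any real tool.

```python
import math


def supplies_needed(total_load_w: float, rated_w_per_supply: float,
                    redundant: int = 1) -> int:
    """Paralleled supplies so the load still fits with `redundant` units
    pulled out (e.g. during a hot swap): N + redundant."""
    n = math.ceil(total_load_w / rated_w_per_supply)
    return n + redundant


def load_per_supply_w(total_load_w: float, active_supplies: int) -> float:
    """Ideal current sharing: every active supply carries an equal share."""
    return total_load_w / active_supplies


# Hypothetical figures: 16 boards at 40 W each, placeholder 700 W rating.
load_w = 16 * 40.0
count = supplies_needed(load_w, 700.0)
print(count, load_per_supply_w(load_w, count))
```

Here it prints `2 320.0`: one supply could carry the 640 W alone, so the second is pure redundancy, and while both run each carries roughly half the load. Real supplies share current only approximately, so a practical design would leave extra margin.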

The platform hosts dedicated microservers on Celeron J1800 (2 × 2.4 GHz), Celeron J1900 (4 × 2.0 GHz), Core i3-4360 (2 × 3.7 GHz) and the flagship Core i7-4790 (4 × 3.6 GHz) processors. For the mid-range segment, we build servers on the same platform based on the Xeon E3-1230 v3 with a remote management module, using ASUS P9D-I motherboards.

This solution lets us cut capital expenditures by up to 50% and electricity consumption by 20-30%, while leaving room for further development. All this allows us to offer our clients competitive prices on the Russian market, even without the credit, leasing, three-year installment plans and other financing instruments available to us in the Netherlands.

The project already includes a design that fits 19 mini-ITX blades into the same 4U chassis, and we are constantly working to improve the power and cooling systems. In the future, such servers will not need a traditional data center to operate.
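A quick way to put "19 blades in 4U" in rack terms is to count how many whole chassis fit in a rack. The Python sketch below does that arithmetic; the 42U rack height and the 2U reserved for switches and cabling are hypothetical assumptions, not figures from this article.

```python
def blades_per_rack(rack_units: int, reserved_units: int,
                    chassis_units: int = 4, blades_per_chassis: int = 19) -> int:
    """Blades that fit in one rack filled with identical chassis."""
    usable_units = rack_units - reserved_units
    chassis_count = usable_units // chassis_units  # only whole chassis fit
    return chassis_count * blades_per_chassis


# Hypothetical rack: 42U with 2U reserved for switches and cabling.
print(blades_per_rack(42, 2))  # 10 chassis x 19 blades
```

This prints `190`. Note that in a 42U rack the reserved 2U costs nothing here, since 42U holds only ten whole 4U chassis either way.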

Soon we will describe this platform and our other developments in detail; subscribe to stay up to date.

Source: https://habr.com/ru/post/266835/
