About a Data Science Problem

Hi, Habr!

As promised, I continue to publish articles in which I describe my experience after completing training in Data Science from the guys from MLClass.ru (by the way, who haven't had time yet - I recommend registering ). This time, using the example of the Digit Recognizer task, we will study the effect of the size of the training sample on the quality of the machine learning algorithm. This is one of the very first and most basic questions that arise when building a predictive model.

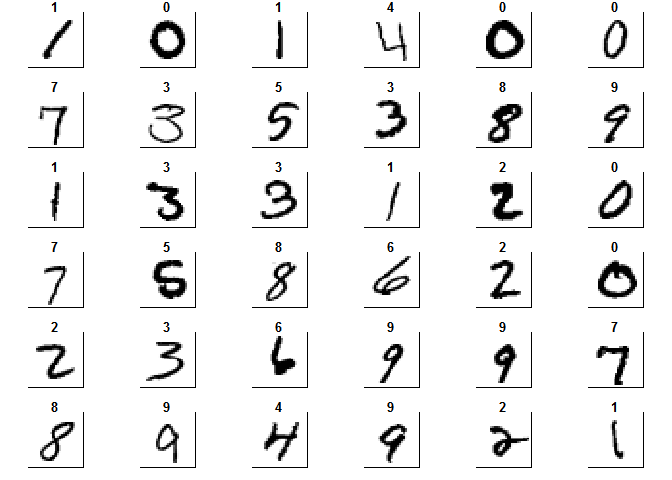

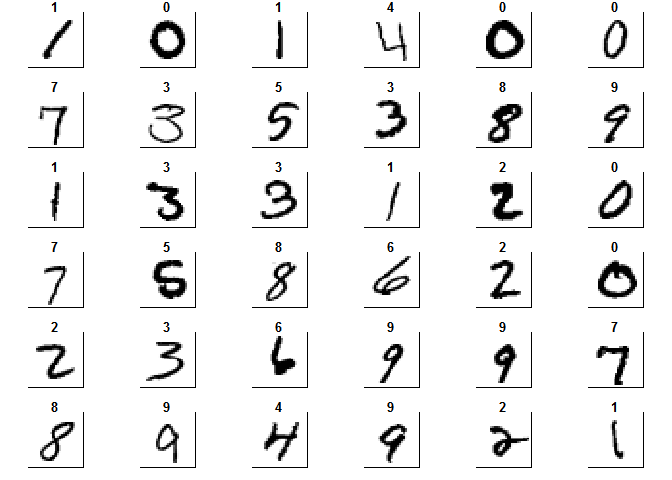

In the process of working on data analysis, there are situations when the size of the sample available for research is an obstacle. I met this example while participating in a Digit Recognizer contest held on the Kaggle website. The object of the competition is the database of images of hand-written numbers - The MNIST database of handwritten digits . Images were centered and brought to the same size. A sample of 42,000 such numbers is proposed as a training sample. Each digit is decomposed into a line of 784 signs, the value in each is its brightness.

')

First, load the full training sample into R

Now, to get an idea of the data provided, let's draw the numbers in the usual form for the human eye.

Then one could proceed to the construction of various models, the choice of parameters, etc. But let's look at the data. 42000 objects and 784 signs. When I tried to build more complex models, such as Random Forest or Support Vector Machine, I received an error about the lack of memory, and not even a minute of learning from a full sample takes place. One of the options for dealing with this is to use a significantly more powerful machine for computing, or creating clusters of several computers. But in this paper I decided to investigate how the use of all the data provided for training part affects the quality of the model.

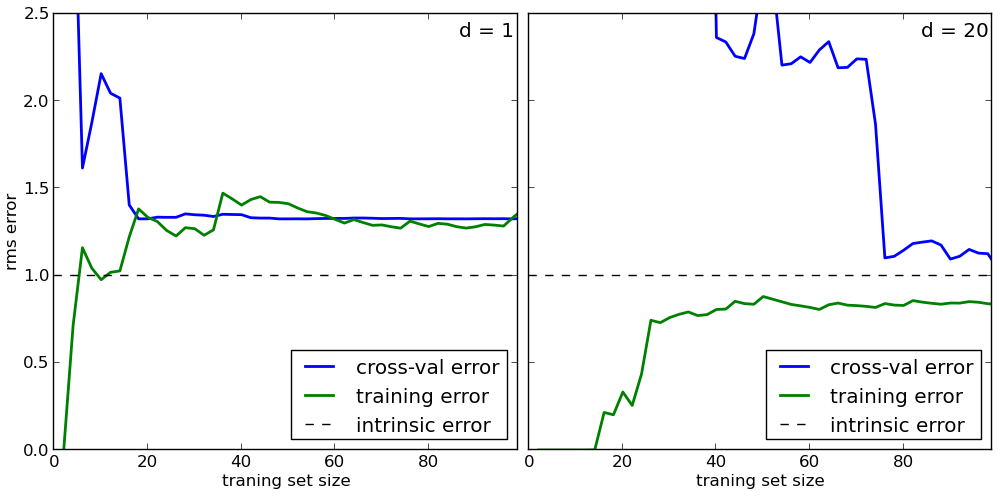

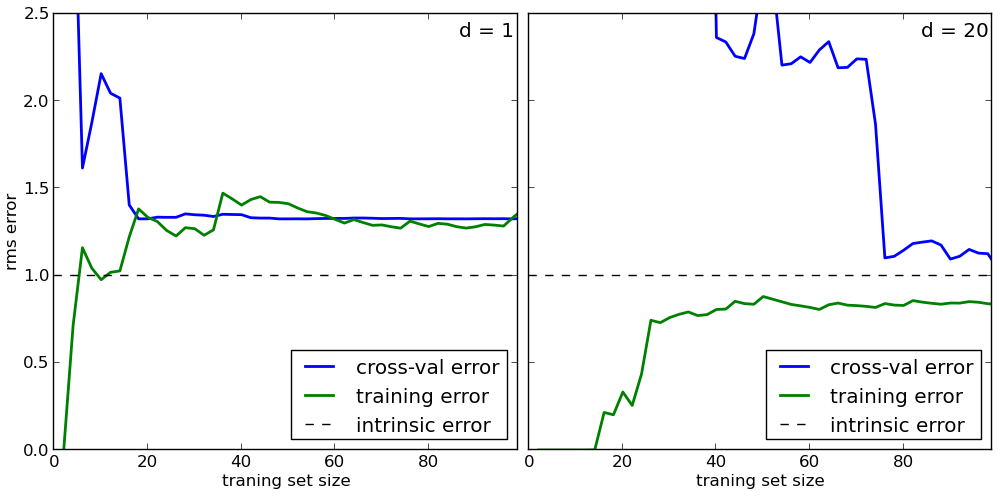

As a research tool, I use the Learning Curve or learning curve, which is a graph consisting of the dependence of the average model error on the data used for training and the dependence of the average error on the test data. In theory, there are two main options that are obtained when building this graph.

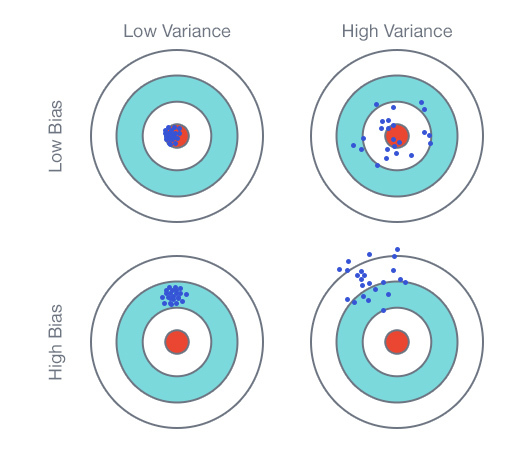

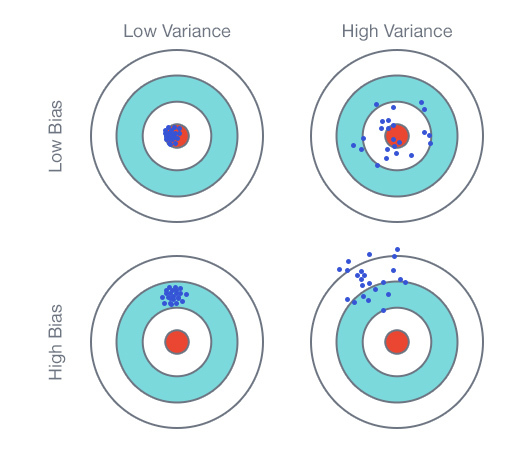

The first option is when the model is under-trained or has a high bias . The main symptom of this situation is a high average error for both training and test data. In this case, attracting additional data will not improve the quality of the model. The second option is when the model is retrained or has a large variability ( High variance ). Visually, you can determine the presence of a large gap between the test and training curves and low training error. Here, on the contrary, more data may lead to an improvement in the test error and, accordingly, to an improvement in the model.

Divide the sample into training and test in the ratio of 60/40

If you look at the images of the figures given above, you can see that, because they are centered, then at the edges there is a lot of space on which the figure itself never happens. That is, in the data this feature will be expressed in features that have a constant value for all objects. Firstly, such signs do not carry any information for the model and, secondly, for many models, with the exception of those based on trees, can lead to errors in learning. Therefore, you can remove these attributes from the data.

Such signs were 532 of 784 . To check how this significant change affected the quality of the models, we will train a simple CART model (which should not be adversely affected by the presence of permanent features) on the data before and after the change. As an estimate, the average percentage of error on the test data is given.

Since changes have affected a hundredth of a percent, then you can further use data with remote signs

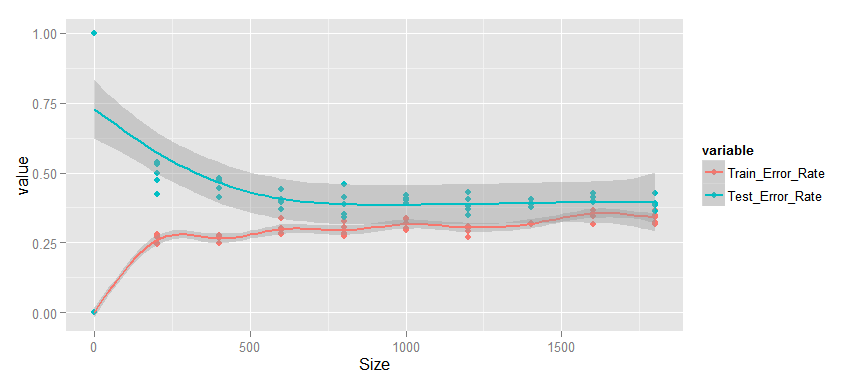

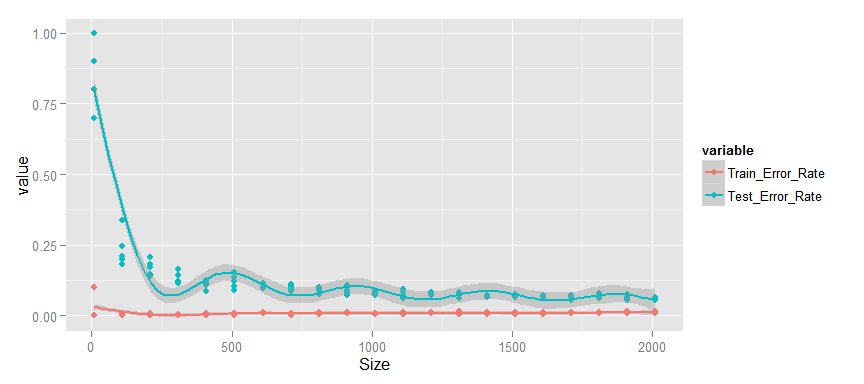

Finally, we construct the learning curve itself. A simple CART model was applied without changing the default parameters. To obtain statistically significant results, each assessment was performed on each sample size value five times.

Averaging was performed using the GAM model.

What do we see?

To summarize, the CART model is clearly under-trained, i.e. has a constant high offset. An increase in the sample for training will not lead to an improvement in the quality of the prediction on the test data. In order to improve the results of this model, it is necessary to improve the model itself, for example, by introducing additional significant features.

Now, we will evaluate the Random Forest model. Again, the model was applied "as is", no parameters were changed. The initial sample size is changed to 100, since A model cannot be built if there are substantially more features than objects.

Here we see a different situation.

I believe that this graph shows a possible third option, i.e. there is no retraining, because there is no gap between the curves, but there is no obvious under-training. I would say that with an increase in the sample, there will be a gradual decrease in the test and training errors until they reach the limit of internally inherent in the model and the improvement does not stop. In this case, the schedule will be similar to the untrained. Therefore, I think that increasing the sample size should lead, albeit to a small, but improving the quality of the model and, accordingly, it makes sense.

Before proceeding with the study of the third model - Support Vector Machine , it is necessary to once again process the data. We will carry out their standardization, since This is necessary for the “convergence” of the algorithm.

Now we will build a graph.

I think that we are confronted with the second version of the theory, i.e. the model is retrained or has high variability. Based on this conclusion, we can confidently say that increasing the size of the training sample will lead to a significant improvement in the quality of the model.

This work showed that the Learning Curve is a good tool in the data explorer’s arsenal both for evaluating the models used and for assessing the need to increase the sample of the data used.

Next time I will talk about the application of the principal component method (PCA) to this problem.

Stay in touch!)

As promised, I am continuing the series of articles about my experience after completing the Data Science course from the folks at MLClass.ru (by the way, if you haven't signed up yet, I recommend registering). This time, using the Digit Recognizer task as an example, we will study how the size of the training sample affects the quality of a machine learning algorithm. This is one of the first and most basic questions that comes up when building a predictive model.

Introduction

During data analysis there are situations where the size of the available sample becomes an obstacle. I ran into such a case while taking part in the Digit Recognizer competition on Kaggle. The competition is based on a database of images of handwritten digits, the MNIST database of handwritten digits. The images were centered and scaled to the same size. A sample of 42,000 such digits is offered as the training set. Each digit is unrolled into a row of 784 features, and the value of each feature is the brightness of one pixel.

First, load the full training sample into R:

```r
library(readr)
library(magrittr)
library(dplyr)
library(caret)

# Kaggle Digit Recognizer training set: a label column plus 784 pixel columns
data_train <- read_csv("train.csv")
```

Now, to get an idea of the data, let's draw the digits the way the human eye is used to seeing them.

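```r
colors <- c('white', 'black')
cus_col <- colorRampPalette(colors = colors)

# Save only the writable graphical parameters so they can be restored cleanly
default_par <- par(no.readonly = TRUE)
par(mfrow = c(6, 6), pty = 's', mar = c(1, 1, 1, 1), xaxt = 'n', yaxt = 'n')

# Convert the pixel columns to a matrix once, outside the loop
pixels <- as.matrix(data_train[, -1])
for (i in 1:36) {
  z <- array(pixels[i, ], dim = c(28, 28))
  z <- z[, 28:1]  # flip vertically so the digit is drawn upright
  image(1:28, 1:28, z, main = data_train$label[i], col = cus_col(256))
}
par(default_par)
```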

At this point one could move on to building various models, tuning parameters and so on. But let's look at the data first: 42,000 objects and 784 features. When I tried to build more complex models, such as Random Forest or Support Vector Machine, I got an out-of-memory error, and training on the full sample takes far longer than a minute. One way to deal with this is to use a significantly more powerful machine or a cluster of several computers. In this article I decided instead to investigate how much using all of the data provided for training actually affects the quality of the model.

Learning curve theory

As a research tool I use the learning curve: a graph that shows how the average model error on the training data and the average error on the test data depend on the size of the training sample. In theory, there are two main shapes this graph can take.

The first case is when the model is underfit, i.e. has high bias. The main symptom is a high average error on both the training and the test data. In this case, adding more data will not improve the quality of the model. The second case is when the model is overfit, i.e. has high variance. Visually, this shows up as a large gap between the test and training curves together with a low training error. Here, on the contrary, more data may reduce the test error and so improve the model.
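The two cases can be written down as a rough rule of thumb. The sketch below is only an illustration: the function name `diagnose_curve` and both thresholds are made up for this example and do not come from any library.

```r
# Rough rule of thumb for reading the right-hand end of a learning curve.
# `high_error` and `big_gap` are hypothetical thresholds chosen for illustration.
diagnose_curve <- function(train_error, test_error,
                           high_error = 0.2, big_gap = 0.1) {
  gap <- test_error - train_error
  if (gap > big_gap && train_error < high_error) {
    "high variance"     # low training error, large gap: more data may help
  } else if (train_error >= high_error && test_error >= high_error) {
    "high bias"         # both errors are high: more data will not help
  } else {
    "no clear problem"
  }
}

diagnose_curve(train_error = 0.37, test_error = 0.38)  # "high bias"
diagnose_curve(train_error = 0.01, test_error = 0.15)  # "high variance"
```

In practice the whole curve should be looked at, not just two numbers, so this is a reading aid rather than a test.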

Data processing

Split the sample into training and test sets in a 60/40 ratio:

```r
data_train$label <- as.factor(data_train$label)
set.seed(111)
split <- createDataPartition(data_train$label, p = 0.6, list = FALSE)
# createDataPartition returns a one-column matrix; slice() expects a plain vector
train <- slice(data_train, as.vector(split))
test <- slice(data_train, -as.vector(split))
```

If you look at the images of the digits above, you can see that, because they are centered, there is a lot of space at the edges where the digit itself never appears. In the data, this shows up as features that have the same value for all objects. Firstly, such features carry no information for the model. Secondly, for many models, except tree-based ones, they can cause errors during training. Therefore, these features can be removed from the data.
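To make the idea concrete, here is a minimal base-R sketch on a made-up toy table (the `toy` data and the `is_constant` helper are illustrative only). It drops strictly constant columns; caret's `nearZeroVar()`, used in this article, is broader and also flags columns that are almost constant.

```r
# Toy data, made up for illustration: two pixels are always 0, one varies.
toy <- data.frame(
  label  = factor(c(1, 7, 3, 7)),
  pixel0 = c(0, 0, 0, 0),
  pixel1 = c(0, 255, 12, 0),
  pixel2 = c(0, 0, 0, 0)
)

# A column is constant when it contains a single distinct value.
is_constant <- vapply(toy, function(col) length(unique(col)) == 1, logical(1))
toy_clean <- toy[, !is_constant, drop = FALSE]

names(toy_clean)  # "label" "pixel1"
```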

```r
# Flag features whose variance is zero or close to zero
zero_var_col <- nearZeroVar(train, saveMetrics = TRUE)
sum(zero_var_col$nzv)
## [1] 532
train_nzv <- train[, !zero_var_col$nzv]
test_nzv <- test[, !zero_var_col$nzv]
```

This flagged 532 of the 784 features. To check how such a substantial change affects model quality, we train a simple CART model (which should not be hurt by constant features) on the data before and after the change. The share of misclassified test objects is used as the estimate.

```r
library(rpart)

# CART on the original features
model_tree <- rpart(label ~ ., data = train, method = "class")
predict_data_test <- predict(model_tree, newdata = test, type = "class")
sum(test$label != predict_data_test) / nrow(test)
## [1] 0.383507

# CART after removing the near-zero-variance features
model_tree_nzv <- rpart(label ~ ., data = train_nzv, method = "class")
predict_data_test_nzv <- predict(model_tree_nzv, newdata = test_nzv, type = "class")
sum(test_nzv$label != predict_data_test_nzv) / nrow(test_nzv)
## [1] 0.3838642
```

Since the error changed by only a few hundredths of a percentage point, we can continue with the data that has these features removed.

```r
train <- train[, !zero_var_col$nzv]
test <- test[, !zero_var_col$nzv]
```

CART

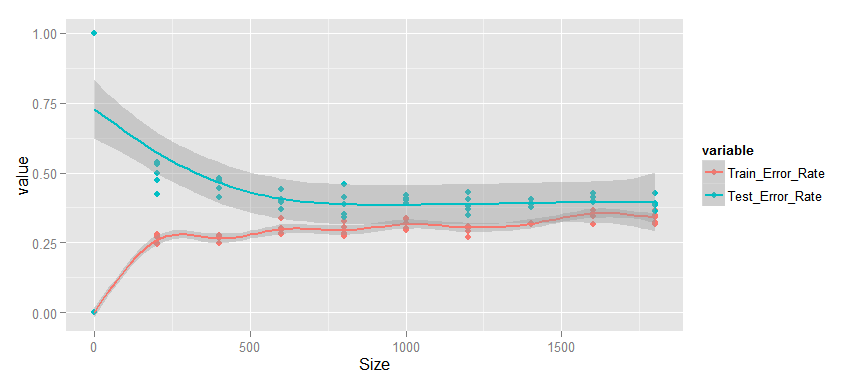

Finally, we construct the learning curve itself. A simple CART model was applied with the default parameters. To make the estimates more reliable, each sample size was evaluated five times.

```r
learn_curve_data <- data.frame(integer(), double(), double())
for (n in 1:5) {
  for (i in seq(1, 2000, by = 200)) {
    # Draw random subsamples of size i from the training and test sets
    train_learn <- train[sample(nrow(train), size = i), ]
    test_learn <- test[sample(nrow(test), size = i), ]
    model_tree_learn <- rpart(label ~ ., data = train_learn, method = "class")
    # Error rate on the data the tree was trained on
    predict_train_learn <- predict(model_tree_learn, type = "class")
    error_rate_train_rpart <- sum(train_learn$label != predict_train_learn) / i
    # Error rate on the test subsample
    predict_test_learn <- predict(model_tree_learn, newdata = test_learn, type = "class")
    error_rate_test_rpart <- sum(test_learn$label != predict_test_learn) / i
    learn_curve_data <- rbind(learn_curve_data,
                              c(i, error_rate_train_rpart, error_rate_test_rpart))
  }
}
```

The points were averaged with a GAM smoother.

```r
colnames(learn_curve_data) <- c("Size", "Train_Error_Rate", "Test_Error_Rate")
library(reshape2)
library(ggplot2)
learn_curve_data_long <- melt(learn_curve_data, id = "Size")
ggplot(data = learn_curve_data_long, aes(x = Size, y = value, colour = variable)) +
  geom_point() +
  stat_smooth(method = "gam", formula = y ~ s(x), size = 1)
```

What do we see?

- Starting from about 500 objects in the sample, the average error rate changes monotonically.

- The error for both training and test data is quite high.

- The gap between test and training data is small.

- The test error does not decrease.

To summarize, the CART model is clearly underfit, i.e. it has a consistently high bias. Increasing the training sample will not improve the quality of predictions on the test data. To get better results from this model, the model itself has to be improved, for example by introducing additional informative features.
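The learning-curve loop is repeated almost unchanged for every model in this article, so it can be wrapped in a helper. This is a minimal base-R sketch, not the code that produced the graphs: the name `learning_curve` and the `fit_fn` / `predict_fn` arguments are hypothetical, and the toy usage relies on a made-up majority-class "model" so that it runs without any packages.

```r
# Generic learning-curve loop. `fit_fn(data)` returns a model and
# `predict_fn(model, data)` returns predicted labels; both are
# placeholders supplied by the caller.
learning_curve <- function(train, test, sizes, fit_fn, predict_fn,
                           repeats = 5, label = "label") {
  rows <- list()
  for (n in seq_len(repeats)) {
    for (size in sizes) {
      train_s <- train[sample(nrow(train), size = size), , drop = FALSE]
      test_s  <- test[sample(nrow(test), size = size), , drop = FALSE]
      model   <- fit_fn(train_s)
      rows[[length(rows) + 1]] <- data.frame(
        Size             = size,
        Train_Error_Rate = mean(predict_fn(model, train_s) != train_s[[label]]),
        Test_Error_Rate  = mean(predict_fn(model, test_s) != test_s[[label]])
      )
    }
  }
  do.call(rbind, rows)
}

# Toy usage on made-up data: always predict the most frequent class.
set.seed(1)
toy <- data.frame(label = factor(sample(c("a", "b"), 200, replace = TRUE)),
                  x = rnorm(200))
majority_fit  <- function(d) names(which.max(table(d$label)))
majority_pred <- function(m, d) rep(m, nrow(d))
curve <- learning_curve(toy[1:100, ], toy[101:200, ], sizes = c(10, 50),
                        fit_fn = majority_fit, predict_fn = majority_pred,
                        repeats = 2)
```

For CART, `fit_fn` could be `function(d) rpart(label ~ ., data = d, method = "class")` and `predict_fn` could be `function(m, d) predict(m, newdata = d, type = "class")`.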

Random forest

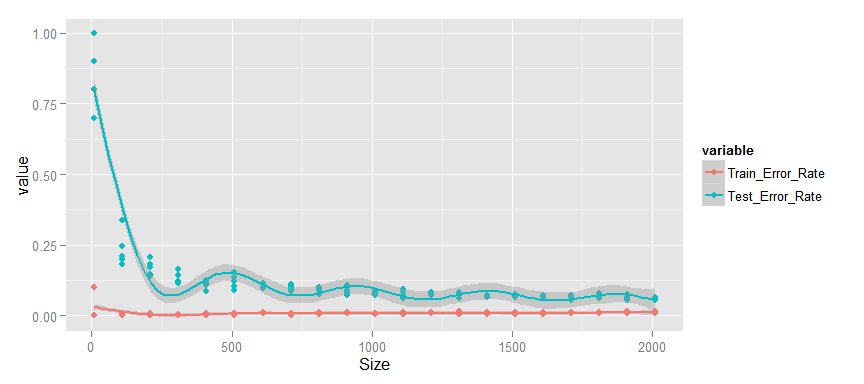

Now let's evaluate the Random Forest model. Again, the model was applied "as is", with no parameters changed. The initial sample size was raised to 100, since the model failed to build when there were substantially more features than objects.

```r
library(randomForest)
learn_curve_data <- data.frame(integer(), double(), double())
for (n in 1:5) {
  for (i in seq(100, 5100, by = 1000)) {
    train_learn <- train[sample(nrow(train), size = i), ]
    test_learn <- test[sample(nrow(test), size = i), ]
    model_learn <- randomForest(label ~ ., data = train_learn)
    # Note: without newdata, predict() returns out-of-bag predictions
    predict_train_learn <- predict(model_learn)
    error_rate_train <- sum(train_learn$label != predict_train_learn) / i
    predict_test_learn <- predict(model_learn, newdata = test_learn)
    error_rate_test <- sum(test_learn$label != predict_test_learn) / i
    learn_curve_data <- rbind(learn_curve_data,
                              c(i, error_rate_train, error_rate_test))
  }
}
colnames(learn_curve_data) <- c("Size", "Train_Error_Rate", "Test_Error_Rate")
learn_curve_data_long <- melt(learn_curve_data, id = "Size")
ggplot(data = learn_curve_data_long, aes(x = Size, y = value, colour = variable)) +
  geom_point() +
  stat_smooth()
```

Here we see a different situation.

- The average error rate also changes monotonically.

- Test and training errors are small and continue to decrease.

- The gap between test and training data is small.

I believe this graph shows a possible third case: there is no overfitting, because there is no gap between the curves, but there is no obvious underfitting either. I would expect that as the sample grows, the test and training errors will keep decreasing gradually until they reach a limit inherent in the model, at which point the improvement will stop. The graph will then look like the underfit case. So I think that increasing the sample size should lead to a small but real improvement in model quality, and therefore makes sense.

Support Vector Machine

Before moving on to the third model, the Support Vector Machine, the data needs one more processing step. We will standardize it, since this is needed for the algorithm to “converge”.
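As a minimal base-R sketch of what standardization does, here is the same idea on two tiny made-up matrices (`train_x` and `test_x` are illustrative, not the competition data). The centre and spread are estimated on the training part only and then applied to both parts.

```r
# Toy matrices, made up for illustration.
train_x <- matrix(c(0, 10, 20, 1, 3, 5), ncol = 2)
test_x  <- matrix(c(10, 30, 3, 7), ncol = 2)

# Learn the centre and spread on the training data only...
mu    <- colMeans(train_x)
sigma <- apply(train_x, 2, sd)

# ...and apply the same transformation to both sets.
train_std <- scale(train_x, center = mu, scale = sigma)
test_std  <- scale(test_x,  center = mu, scale = sigma)

colMeans(train_std)      # each training column now has mean 0
apply(train_std, 2, sd)  # and standard deviation 1
```

The test columns are not expected to end up with mean 0 and standard deviation 1, because they are shifted and scaled with the training statistics.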

```r
library("e1071")
# Learn centering and scaling parameters on the training features only
scale_model <- preProcess(train[, -1], method = c("center", "scale"))
train_scale <- predict(scale_model, train[, -1])
train_scale <- cbind(train[, 1], train_scale)
test_scale <- predict(scale_model, test[, -1])
test_scale <- cbind(test[, 1], test_scale)
```

Now let's build the graph.

```r
learn_curve_data <- data.frame(integer(), double(), double())
for (n in 1:5) {
  for (i in seq(10, 2010, by = 100)) {
    train_learn <- train_scale[sample(nrow(train_scale), size = i), ]
    test_learn <- test_scale[sample(nrow(test_scale), size = i), ]
    # The data is already standardized, so svm() must not rescale it
    model_learn <- svm(label ~ ., data = train_learn, kernel = "radial", scale = FALSE)
    predict_train_learn <- predict(model_learn)
    error_rate_train <- sum(train_learn$label != predict_train_learn) / i
    predict_test_learn <- predict(model_learn, newdata = test_learn)
    error_rate_test <- sum(test_learn$label != predict_test_learn) / i
    learn_curve_data <- rbind(learn_curve_data,
                              c(i, error_rate_train, error_rate_test))
  }
}
colnames(learn_curve_data) <- c("Size", "Train_Error_Rate", "Test_Error_Rate")
learn_curve_data_long <- melt(learn_curve_data, id = "Size")
ggplot(data = learn_curve_data_long, aes(x = Size, y = value, colour = variable)) +
  geom_point() +
  stat_smooth(method = "gam", formula = y ~ s(x), size = 1)
```

- The training error is very small.

- There is a significant gap between the test and training curves, and it decreases monotonically.

- The test error is quite small and continues to decrease.

I think we are looking at the second case from the theory: the model is overfit, i.e. has high variance. Given this, we can be confident that increasing the size of the training sample will noticeably improve the quality of the model.

Conclusions

This work showed that the learning curve is a good tool in a data analyst's arsenal, both for evaluating the models being used and for deciding whether the data sample needs to be enlarged.

Next time I will talk about applying principal component analysis (PCA) to this problem.

Stay in touch!)

Source: https://habr.com/ru/post/266727/
