Handling cloud traffic. Who needs network function virtualization (NFV)?

Today I want to talk about a concept that in the next few years will fundamentally change the design of communication networks and telecommunication services: Network Functions Virtualization (NFV). Unlike applications, whose virtualization is now pervasive, network functions are much harder to move to the cloud, and for some of them it is impossible altogether. I will cover the goals and principles of NFV, the history of the initiative and its current status, and the limitations and shortcomings of the approach, and I will share my thoughts on which problems it can solve and which it fundamentally cannot.

From Application Virtualization to Network Function Virtualization

If we decouple an application from the hardware and run it in an isolated software container instead of on dedicated hardware, we can call that application virtualized. Virtualization ideas have accompanied the IT industry since the first computers: as far back as the 1960s, engineers were solving the problem of dividing one large mainframe among several isolated projects. In the 2000s the industry faced a similar task again, this time to share server resources efficiently among many customers. Beyond the ability to "slice" a hardware resource, virtualization brings tremendous benefits.

Running several applications on shared hardware is more economical than running them on dedicated servers: a virtualized solution needs fewer servers for the same performance, is more compact, consumes less power, and requires fewer network ports and connections. Delivering an application as a virtual machine template lets the buyer do without the hardware server that used to be bundled with it while keeping all the advantages of a “boxed” solution. Modern hypervisors and management systems have brought many new capabilities in scaling, fault tolerance, and various optimizations. Virtualization has reshaped the IT market: the concept of “cloud” services in various models has emerged (SaaS, IaaS, PaaS). However, the laws of the software and application world do not fully apply to networking.

The problem NFV must solve

Modern telecommunication networks contain a great deal of proprietary equipment, usually highly specialized and tailored to a specific operation: one device provides NAT, another limits access speed and counts traffic, a third performs parental control and content filtering, and yet another handles firewall functions. There are several reasons for this established division of functions among network devices. First, each network vendor has historically specialized in a few functions in which it has its own know-how. Another likely reason is the very specific internals of such devices, which have little in common with general-purpose servers. As a result, an operator running such solutions is forced to maintain the existing "diversity" at any cost. Launching a new service requires installing a new set of equipment that supports the necessary function. That, in turn, requires additional space, additional power, hardware logistics, installation, and commissioning.

It is now obvious that a network of physical “boxes”, each performing a single function, is not the best model for growth. In addition to high capital and operating costs, such a design is very inert and does not let the operator expand its service portfolio as quickly as shareholders demand. The network should be much more flexible and dynamic: it should make it easy to introduce any new service, activate it quickly at the subscriber's request, and release the occupied resources when the service is deactivated.

Examples of network functions: which can be virtualized and which are poor candidates

The functions performed by network devices differ considerably in their characteristics and in how well they lend themselves to virtualization. Some are worth moving to the cloud, while others cannot be separated from network equipment at all. Data transfer always involves moving network packets from one geographical point to another, and at each of these points, at each communication node, there must be at least one real network device with the required number of network ports: a switch or a router.

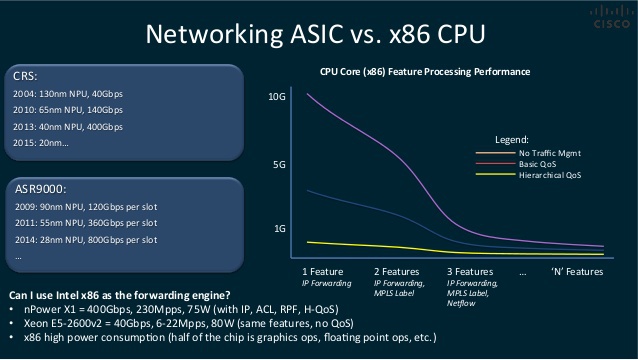

Forwarding (routing or switching) is the most primitive operation a network device performs on a packet. In essence it is copying from one port to another, sometimes with certain fields of the frame or packet rewritten. On most platforms this operation is performed by specialized hardware built for fast match lookups in a special ternary memory (TCAM) and for rewriting the required packet fields on the fly. The specialized ASIC used in routers and switches (also called a Network Processor or Network Processing Unit) is better suited to such primitive tasks: it works faster, consumes less energy, and is simple, compact, and cheap.
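To make the ternary lookup more concrete, here is a minimal Python sketch of how a TCAM-style table matches a key against value/mask entries in priority order. Real TCAM compares all entries in parallel in hardware; the loop below only models the result, not the speed. The names `TcamEntry`, `prefix_entry`, and `lookup`, as well as the port labels, are assumptions made for illustration.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TcamEntry:
    value: int   # bits to compare against the key
    mask: int    # 1 = bit must match, 0 = "don't care" (the third state)
    action: str  # hypothetical label, e.g. an egress port


def prefix_entry(prefix: str, action: str) -> TcamEntry:
    """Build an entry from an IPv4 prefix such as '10.0.0.0/8'."""
    net = ipaddress.ip_network(prefix)
    return TcamEntry(int(net.network_address), int(net.netmask), action)


def lookup(table: List[TcamEntry], dst_ip: str) -> Optional[str]:
    """Return the action of the first (highest-priority) matching entry."""
    key = int(ipaddress.ip_address(dst_ip))
    for entry in table:
        if key & entry.mask == entry.value & entry.mask:
            return entry.action
    return None


# Entries are ordered longest prefix first, so the first hit is the best match.
table = [
    prefix_entry("10.1.2.0/24", "port-3"),
    prefix_entry("10.0.0.0/8", "port-1"),
    prefix_entry("0.0.0.0/0", "port-0"),  # default route
]
```

Calling `lookup(table, "10.1.2.7")` walks the entries in order and stops at the /24 entry, while an address outside 10.0.0.0/8 falls through to the default route.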

For comparison, implementing the same functionality in software on a general-purpose CPU, with access to external data through a complex I/O stack (through the kernel and driver), would reduce performance dramatically, and a server form factor cannot provide the necessary port density.

A Cisco slide on the benefits of network processors and the impact of features on forwarding performance

Data transfer, together with all the low-level logic that processes each packet, is conventionally referred to as the Data Plane. Almost all signaling belongs to the Control Plane: neighbor discovery protocols, routing and traffic control protocols, various mechanisms for load balancing and loop prevention, telemetry, service and bandwidth management, and authentication, authorization, and accounting. The heavier the Control Plane and the “thinner” the Data Plane, the more it makes sense to virtualize a network function and move it from network hardware to a server. Conversely, the “thicker” the Data Plane and the more primitive the Control Plane, the harder the function is to virtualize and the better it is to leave it inside the network device.

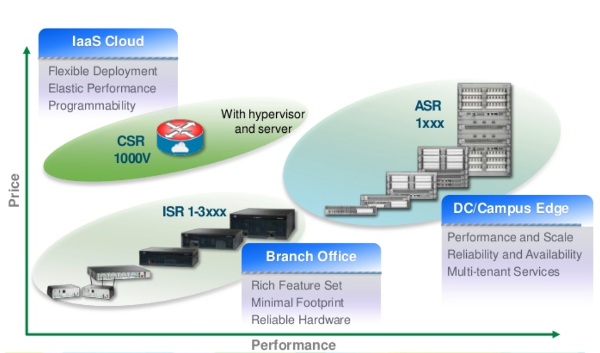

CE (Customer Edge) routers have very broad Data Plane functionality: from simple routing to special routing methods such as PBR / ABF, and from primitive ACLs to complex zone-based firewalls. Such routers support various encapsulation and tunneling standards (GRE / IPIP / MPLS / OTV), network address translation (NAT, PAT), and complex QoS policies (policing, shaping, marking, scheduling). The Control Plane functionality is also very broad, from primitive ARP / ND to the most complex features such as MPLS Traffic Engineering. A branch router of this kind, carrying a load of several tens or hundreds of megabits per second, virtualizes perfectly well: a dozen or two such instances fit on a single commodity server.

The niche for Cisco's CSR1000v virtual router relative to hardware platforms

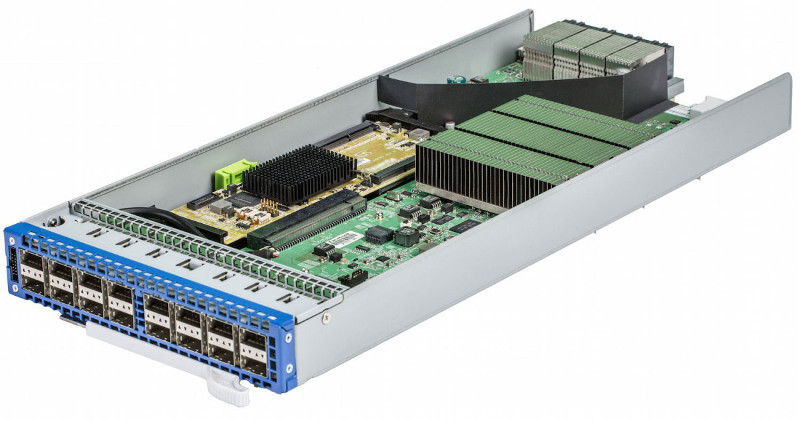

P / PE routers, with similar functionality, are designed for building an operator's network, and their Data Plane handles an enormous amount of traffic: from hundreds of gigabits to tens of terabits per second. They must also offer maximum port density. Needless to say, a server of traditional architecture is not designed for the volumes of data that provider-class routers handle. Their Data Plane is too “thick” and is not an interesting target for virtualization. The same goes for the high-performance switches widely used in data centers: virtualizing them is practically impossible.

A 16x40GE port module of an unpretentious switch. Under the large heatsink sits a Broadcom Trident II NPU, delivering an honest 640 Gbps.

The only thing that can, and sometimes should, be virtualized for such giants is the control plane, in whole or in part. In this case the Data Plane remains entirely on the device, and packets are forwarded according to tables programmed by an external system. That system need not be a traditional SDN controller in the narrow sense (one working over OpenFlow): it can be any orchestrator, mediator, or network element driver that supports any available interface (CLI, NETCONF, SNMP).
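The split described here can be sketched in a few lines of Python: the device keeps only a forwarding table and a lookup, while an external controller decides what goes into that table. This is a toy model under stated assumptions, not an implementation of OpenFlow, NETCONF, or any vendor API; the class names, method names, and interface labels are all hypothetical.

```python
from typing import Dict, Optional


class Device:
    """Data plane only: forwards using whatever table it was given."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.fib: Dict[str, str] = {}  # destination prefix -> egress port

    def install(self, destination: str, port: str) -> None:
        self.fib[destination] = port

    def forward(self, destination: str) -> Optional[str]:
        # Exact-match lookup keeps the sketch short; real devices do
        # longest-prefix matching in hardware.
        return self.fib.get(destination)


class Controller:
    """Externalized control plane: programs every registered device."""

    def __init__(self) -> None:
        self.devices: Dict[str, Device] = {}

    def register(self, device: Device) -> None:
        self.devices[device.name] = device

    def push_route(self, destination: str, ports: Dict[str, str]) -> None:
        # ports maps device name -> egress port toward the destination
        for name, port in ports.items():
            self.devices[name].install(destination, port)


controller = Controller()
pe1, pe2 = Device("pe1"), Device("pe2")
controller.register(pe1)
controller.register(pe2)
controller.push_route("192.0.2.0/24", {"pe1": "ge-0/0/1", "pe2": "ge-0/0/7"})
```

The devices never compute a route themselves: after `push_route`, `pe1.forward("192.0.2.0/24")` simply returns what the controller installed.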

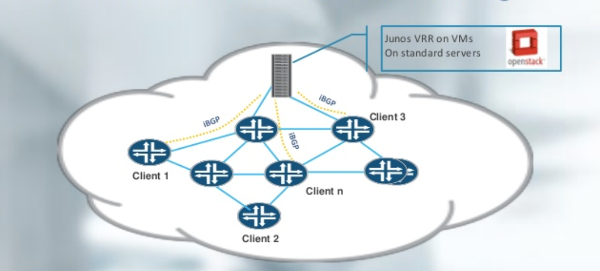

This category includes such a traditional element as the BGP Route-Reflector, which distributes BGP routing tables around the network. Such elements are well suited for virtualization, but there are only a few of them in an operator's network. The NFV concept is concerned more with dynamic, mass-market subscriber services than with infrastructure services.

Looking ahead, I will say that an externalized Control Plane, and SDN in general, relate to NFV in a slightly different way: as part of the network infrastructure for NFV itself rather than as a virtualized function.

A virtualized Juniper BGP Route-Reflector on a standard server

CPE (Customer Premises Equipment, or Residential Gateway) is the familiar box installed in the subscriber's apartment, where the operator's cable arrives. It distributes Internet access to all devices in the apartment and is the central point of the home network: it runs a DHCP server, a caching DNS server, and a client for the protocol used to communicate with the provider (PPPoE / L2TP / PPTP / DHCP / 802.1x). Among Data Plane features, NAT and firewall are the defining ones. In any case, there is no getting away from a box with a port to plug the cable into, and the requirements for the device stay the same: small, attractive, trouble-free, and cheap. Electronics manufacturers caught on to these wishes long ago, and such devices are available on our market for every taste and budget. The vendors of the most common chipsets for these “soap dishes” deserve credit: their computing power can be very high, and resource-intensive functions such as NAT and switching can be implemented in hardware.

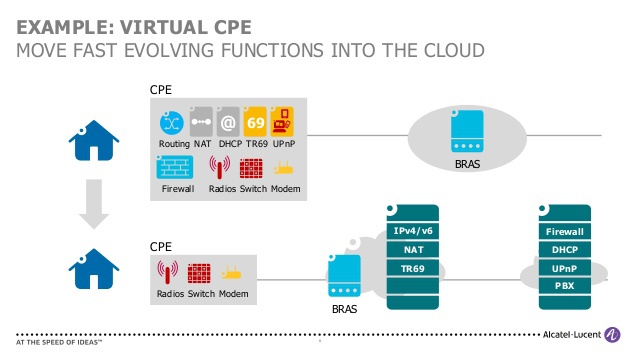

Today such devices fail to meet operators' requirements mostly because of missing functionality. The provider often cannot assess their state and load, and they are often a bottleneck in service delivery. The operator does not see the subscriber's local network and does not control the CPE settings, which complicates diagnostics. The lack of necessary features on this element makes it hard to launch new services, and software upgrades on these devices are usually not performed on time, which brings additional risk. I am sure that in the near future we will see a rethinking of the model for services such as parental control, online cinema, smart home management, backup and replication, storage and viewing of recordings from home surveillance cameras, services like a “student desktop”, and so on, and that a CPE virtualized in the operator's domain will play a significant role in this. An individual vCPE container per subscriber, through which the subscriber's traffic passes, is likely to change how service consumption is accounted for: at last the billable item can be not only traffic but also compute or disk resources. The “box with the antenna” will stay where it is in the subscriber's apartment, but with the most minimal set of functions.

An Alcatel-Lucent slide vividly illustrates the transfer of network functions from a CPE device to the cloud

BNG / BRAS are the gateway routers through which the operator's subscribers access the Internet. These routers enforce rate limits and meter consumed services according to each user's tariff plan. They carry a tremendous computational load: every subscriber must be authorized in the AAA system over RADIUS and receive rules and attributes for each session, so that each gets an individual service with its own classes and restrictions, accounting and addressing rules, QoS policies, and ACLs. This element is responsible for address assignment and handles ARP / ND / PPP / LCP / DHCP signaling with tens or hundreds of thousands of subscriber devices. For each session, the BNG has to know how much traffic has passed in each class, how long the session has lasted, who should be blocked from the Internet, who should be redirected to the portal, and whose speed should be raised. Need I say that this hardware must have a very powerful Control Plane? BNG network processors are even more complex than those on P or PE platforms! Besides forwarding packets, this smart piece of hardware does more interesting "exercises" with them: every subscriber session is programmed into the network processor, and each can have several counters, its own rules, its own classifiers, and its own access speeds.

Such "packet magic" is impossible without additional hardware: hundreds of thousands of counters and large amounts of special-purpose memory, such as TCAM for classifiers and access lists and packet memory for queuing. Some subscriber access technologies use tunneling (L2TP / PPPoE), in which case the BNG is also responsible for encapsulating and decapsulating data, and the more operations are performed on a packet, the greater the advantage of an ASIC over x86. Nevertheless, a virtualized BNG can be attractive when total traffic is no more than a few Gbps, services are very dynamic, and there are many sessions and bring-up / teardown events. A good example is Wi-Fi on public transport: little traffic, high dynamics, and additional services such as banner ads that can run on the same server cluster where the vBNG is deployed.
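The per-session state a BNG keeps (its own access speed plus its own counters) can be illustrated with a small Python sketch. It uses a token-bucket policer, which is one common way to enforce a rate; the text does not say which algorithm any particular BNG uses, so treat the choice, the `Session` class, and the field names as assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    rate_bps: int          # contracted rate from the tariff plan
    burst_bytes: int       # bucket depth
    tokens: float = field(init=False)
    last_ts: float = 0.0
    bytes_passed: int = 0  # accounting counters
    bytes_dropped: int = 0

    def __post_init__(self) -> None:
        self.tokens = float(self.burst_bytes)  # start with a full bucket

    def offer(self, size: int, now: float) -> bool:
        """Police one packet of `size` bytes arriving at time `now` (seconds)."""
        # Refill tokens for the elapsed time, capped at the bucket depth.
        elapsed = now - self.last_ts
        self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.rate_bps / 8)
        self.last_ts = now
        if size <= self.tokens:
            self.tokens -= size
            self.bytes_passed += size
            return True
        self.bytes_dropped += size
        return False


# One entry per subscriber session; a real BNG holds hundreds of thousands.
sessions = {"subscriber-1": Session(rate_bps=8_000, burst_bytes=1500)}
```

Every packet updates state that belongs to exactly one session, which is why the number of sessions, rather than raw throughput alone, drives the cost of this function.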

NAT (network address translation): because of the shortage of public addresses available to telecom operators, this function has in recent years come into demand not only in the Enterprise and SMB segments but also in the SP segment. NAT is one of the most resource-intensive Data Plane functions and requires significant computing resources. Its complexity comes from the huge number of connections tracked simultaneously. Opening this page alone (with all its objects and images) created, used, and closed hundreds of translations on the NAT device, provided, of course, that your operator uses NAT. Peer-to-peer file-sharing clients generate many thousands of translations per megabit of bandwidth.
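A toy port-translating NAT in Python shows where this state comes from: every new flow allocates an entry and a public port, and every closed flow releases them. This is a simplified sketch, not a Carrier-Grade NAT implementation; the `Napt` class, the port range, and the figure of 200 connections per page load are assumptions chosen for illustration.

```python
from typing import Dict, Tuple

Flow = Tuple[str, int, str]  # (private IP, private port, protocol)


class Napt:
    def __init__(self, public_ip: str, first_port: int = 1024, last_port: int = 65535) -> None:
        self.public_ip = public_ip
        # Stored in descending order so pop() hands out the lowest port first.
        self.free_ports = list(range(last_port, first_port - 1, -1))
        self.table: Dict[Flow, int] = {}

    def translate(self, flow: Flow) -> Tuple[str, int]:
        """Return the public (IP, port) for a flow, allocating state if new."""
        if flow not in self.table:
            if not self.free_ports:
                raise RuntimeError("port pool exhausted")
            self.table[flow] = self.free_ports.pop()
        return self.public_ip, self.table[flow]

    def close(self, flow: Flow) -> None:
        """Release the translation when the connection ends."""
        port = self.table.pop(flow, None)
        if port is not None:
            self.free_ports.append(port)


nat = Napt("203.0.113.1")
# One page load modeled as 200 short-lived TCP connections (illustrative number).
flows = [("10.0.0.5", 40000 + i, "tcp") for i in range(200)]
for flow in flows:
    nat.translate(flow)
peak = len(nat.table)  # translations held at the busiest moment
for flow in flows:
    nat.close(flow)
```

Multiply the peak by the number of active subscribers behind one NAT and the size of the table, and the rate at which it churns, becomes clear.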

The development of simplified NAT modes (the so-called Carrier-Grade modes) has improved performance, but NAT implemented on network processors is still the exception rather than the rule. Today, the large volumes of traffic that a NAT complex must handle are processed on high-performance servers installed in a slot of one of the network's central routers. This approach avoids external network connections: the complex connects directly to the router's backplane and looks to the router like just another line card.

Multiservice Huawei ME-60 router with an installed SFU module, which can run Carrier-Grade NAT

Another notable characteristic of NAT is that it is “fat”: it can be divided into smaller parts by splitting the address pool, but that makes it less efficient. The more centralized it is, the better the available address resource is used. Because it is hard to split, carries large volumes of traffic, and imposes a heavy computational load, NAT is an interesting case for distributed NFV (dNFV), in which network functions are logically centralized (a single pool of addresses and resources and a single management point) but geographically distributed across nodes and placed topologically close to the transit points of the traffic they serve.
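A few lines of arithmetic show why splitting the pool hurts. The sketch below compares one shared pool with three equal, independent pools under uneven demand. The demand figures and pool size are made up for illustration, and the model ignores port multiplexing: each session simply consumes one unit of the address resource.

```python
from typing import List


def served(demand: List[int], pools: List[int]) -> int:
    """Sessions served when each site can only draw from its own pool."""
    return sum(min(d, p) for d, p in zip(demand, pools))


demand = [90, 50, 10]   # hypothetical concurrent sessions per site
total_resource = 150    # units of address resource available overall

split = served(demand, [50, 50, 50])            # three independent pools
central = min(sum(demand), total_resource)      # one logically shared pool

print(f"split pools serve {split}, shared pool serves {central}")
```

With these numbers the busy site runs out while the quiet site sits on unused capacity, which is the inefficiency a logically centralized pool avoids.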

Security: network security functionality is close to NAT in its properties. It is likewise characterized by a huge amount of highly dynamic state (stateful FW, NGFW, IDS, IPS, antivirus, antispam) and by heavy memory and CPU consumption, which makes it interesting for virtualization. Unlike NAT, this service cannot be called “fat”: it can be divided all the way down to one container per subscriber, which is why it is considered one of the clearest use cases for NFV.

DPI (Deep Packet Inspection) is a function that lets a telecom operator restrict or set the level of service (quota, speed) for a specific type of application or content. One example is the common “parental control” service which, when activated, steers subscriber traffic through a filter based on the category of the visited resource. The Data Plane of this service is usually implemented in software, and the performance levels of interest to an operator start from fairly small values, so this is another of the clear use cases.

MMSC / IMS: the IP Multimedia Subsystem is a “border” element for providing voice services, TV broadcasting, video streaming for applications such as home cinema or video surveillance, various multimedia menus, and instant messaging services. It complements the operator's service portfolio well.

Infrastructure elements of GSM / UMTS / LTE / WiFi mobile networks: wireless controllers, EPC, IMS, CSN, SMSC, PCC, GWc, GWu, PGW, SGW, HSS, PCRF. These functions have high requirements for computing resources and rather moderate requirements for the volume of data transferred. Only a few of them have special requirements related to guaranteeing QoS parameters.

For completeness, I will also mention applications such as DHCP, DNS, and HTTP servers (with the widest choice of available web applications), HTTP proxies (including various optimizers, accelerators, and URL filters), SIP proxies, and so on. One can call them network functions, or one can argue that they belong to the application layer of the OSI model, but either way they are in demand, easy to virtualize, and can serve as building blocks for next-generation services.

Development and current status of the NFV standard

So, the functions themselves are more or less clear. It remains to understand how they can be combined with one another; how to ensure isolation of network connections between these virtual elements, security, scalability, and fault tolerance; how to make the system transparent and manageable; and, ultimately, which equipment to choose, both compute and network, and how to configure it. To answer these questions, seven of the largest foreign providers (AT&T, BT, Deutsche Telekom, Orange, Telecom Italia, Telefonica and Verizon) decided to join their research efforts and in 2013 created a working group under the auspices of the European Telecommunications Standards Institute (ETSI). Later, dozens of network equipment and software vendors joined their experiments, and the NFV ISG now has several hundred participants.

Several test zones were set up on the networks of the participating operators, and the research topics and the most interesting questions were divided among them. Practical results are recorded, summarized, and published for further comment and correction, and the work in turn raises new questions. A year ago NFV was short on specifics, but with each new publication the mosaic of the future standard looks more complete.

It is noteworthy that successful pilot experience is often adopted and replicated “as is”. Despite the proprietary nature of such solutions and the lack of a full-fledged open standard, a number of vendors eager to occupy this sought-after niche already offer not only the virtual elements themselves but also complete NFV solutions of different scales, different degrees of readiness and completeness, and different implementations.

In 2014 the open source community joined the vendor and provider initiatives: the Linux Foundation launched the open source platform project OPNFV, which should broaden the industry's interest in using public and open products in this niche. For example, Open vSwitch and OpenDaylight would serve the network, OpenStack the virtualization layer, Ceph storage, KVM the hypervisor role, and Linux the host and guest OS. The first release of this product, Arno, is available today. NFV standardization activity can also be seen in industry organizations such as MEF and TM Forum.

NFV Architecture and Components

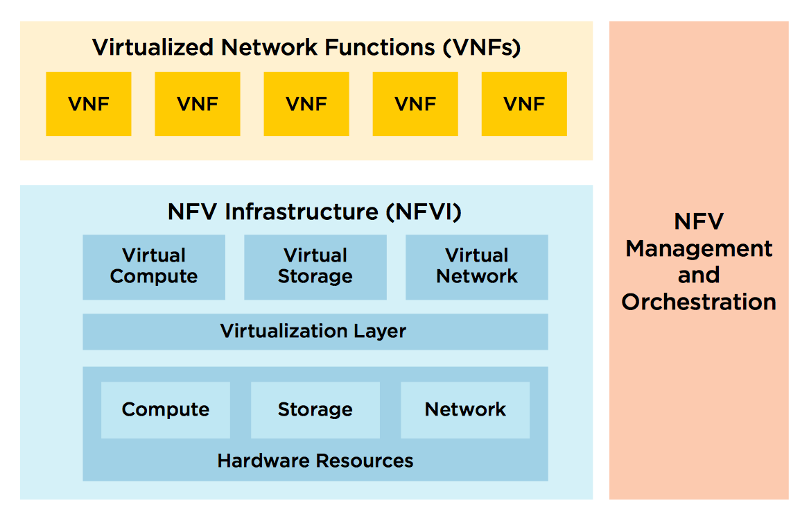

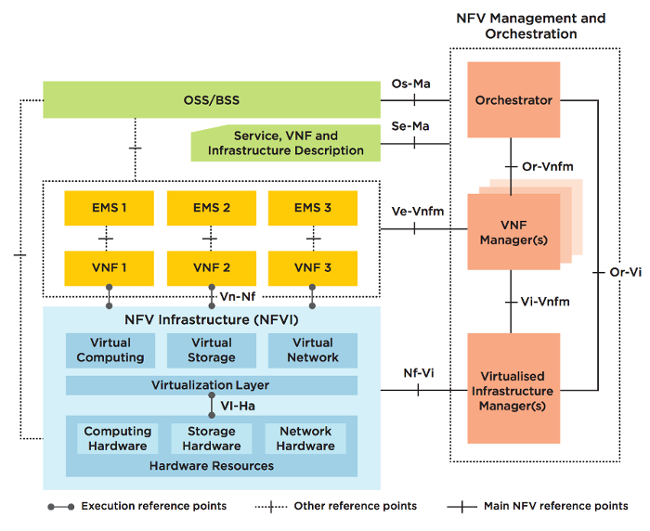

According to the ETSI specifications, the NFV domain consists of three notional components:

1. Virtualized Network Functions (VNF): the network functions discussed above. As a rule, a VNF element is a virtual machine or a set of virtual machines, and together they form the upper plane of the solution. Since a VNF element must be dynamically configured, it contains, or has above it, another sub-block: the Element Management System (EMS).

2. NFV Infrastructure (NFVI): the physical resources, their components and settings (network devices, storage, servers) and the virtual resources that complement them (hypervisors, the virtualization system, virtual switches). Sitting at the lower level, they form the lower plane of the "pie".

3. NFV Management and Orchestration (MANO): binding together and managing the lifecycle of the virtual elements and the underlying infrastructure. It is drawn as a vertical rectangle connected to both planes of the solution through components (Managers), each responsible for managing a particular plane.

Operations/Business support systems (OSS/BSS) : , , , , , NFV ( MANO), NFVI- VNF- .

Conclusion

NFV , ETSI , , . , . , , service-chaining . NFV-.

Source: https://habr.com/ru/post/266343/
