Performance Comparison of C++ and C#

Opinions differ on the relative performance of C++ and C#.

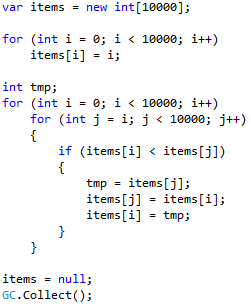

For example, it is hard to argue with the claim that C# code can run faster thanks to platform-specific optimization during JIT compilation, or with the fact that the core of the .NET Framework itself is very well optimized.

On the other hand, a weighty argument is that C++ is compiled directly to machine code and runs with the minimum possible number of helpers and intermediate layers.


Some also hold that measuring code performance this way is misguided, because micro-level results do not characterize performance at the macro level. (I certainly agree that performance can be ruined at the macro level, but it is hard to agree that macro-level performance is not made up of micro-level performance.)

There have also been claims that C++ code is about ten times faster than C# code.

This variety of conflicting opinions suggests writing the simplest, most nearly identical code in both languages and comparing the execution times. That is what I did.

The test performed in this article

I wanted the most primitive test that would show the difference between the languages at the micro level. The test goes through a full cycle of data operations: creating a container, filling it, processing it, and deleting it, as typically happens in applications.

We work with int data to make the processing as identical as possible. We compare only release builds in the default configuration of Visual Studio 2010.

The code will perform the following actions:

1. Allocating an array / container

2. Filling the array / container with numbers in ascending order

3. Sorting the array / container in descending order with bubble sort (the simplest method was chosen because we are comparing implementations, not sorting algorithms)

4. Deleting the array / container

The code was written in several alternative ways that differ in container type and allocation method. In the article itself I show only the examples that, as a rule, ran fastest for each language. The remaining examples, with the timing instrumentation included, can be seen in full here.

Test code

| C++ HeapArray | C# HeapArray fixed tmp |

|  |

As you can see, the code is fairly simple and almost identical. Since C# has no explicit delete whose execution time we could measure, we use items = null; GC.Collect(); instead. Provided that nothing other than the container was created (as in our example), GC.Collect should delete only the container, so I consider this a reasonably adequate replacement for delete[] items.

Declaring int tmp; outside the loop saves time in C#, so this variation of the test was included for C#.
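The C++ HeapArray variant described above might look roughly like the following. This is a sketch rather than the author's exact listing: the function names are hypothetical, and the sort body follows the source lines that are visible in the disassembly later in the article.

```cpp
// Hypothetical reconstruction of the C++ "HeapArray" test (names are assumptions).

// Steps 1 and 2: allocate the array on the heap and fill it in ascending order.
int* create_and_fill(int count) {
    int* items = new int[count];
    for (int i = 0; i < count; i++) {
        items[i] = i;
    }
    return items;
}

// Step 3: sort in descending order with the simple exchange loop
// whose source lines appear in the disassembly listing.
void sort_descending(int* items, int count) {
    for (int i = 0; i < count; i++) {
        for (int j = i; j < count; j++) {
            if (items[i] < items[j]) {
                int tmp = items[j];
                items[j] = items[i];
                items[i] = tmp;
            }
        }
    }
}

// Step 4: delete the array; the C# test uses items = null; GC.Collect(); instead.
void destroy(int* items) {
    delete[] items;
}
```

Calling create_and_fill, sort_descending and destroy in sequence with a count of 10000 (the constant 2710h in the disassembly) would correspond to one run of the test.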

Different machines produced different results for this test (apparently because of architectural differences), but the measurements still make it possible to judge the difference in code performance.

Execution time was measured with QueryPerformanceCounter. The time taken to create, fill, sort, and delete the container was measured on each test platform:
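A per-stage timer along these lines can be sketched as follows. Note the substitution: the article used the Windows call QueryPerformanceCounter, while this sketch uses the portable std::chrono::steady_clock, and the helper name time_ms is hypothetical.

```cpp
#include <chrono>

// Runs `work` once and returns the elapsed time in milliseconds.
// Assumption: std::chrono::steady_clock stands in for QueryPerformanceCounter.
template <typename Work>
double time_ms(Work work) {
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

Each stage (creation, filling, sorting, deletion) would be wrapped in its own lambda and passed to time_ms, so that the stages are reported separately rather than as one total.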

From the tables it is clear that:

1. The fastest C# implementation is 30-60% slower than the fastest C++ implementation (depending on the platform)

2. The spread between the fastest and slowest C++ implementations is 1-65% (depending on the platform)

3. The slowest C# implementation considered is about 4 times slower than the slowest C++ implementation

4. The sorting stage takes the most time (so we examine it in more detail below)

It is also worth noting that std::vector is a slow container on older platforms but quite fast on modern ones, and that the "deletion" time in the first .NET test is somewhat higher, apparently because entities other than the test data are also collected.

The reason for the performance difference between C++ and C# code

Let's look at the code the processor actually executes in each case. We take the sorting code from the fastest examples and examine what it compiles to, using the Visual Studio 2010 debugger in disassembly mode. The result is the following code for the sort:

| C++ | C# |
|---|---|
| for (int i = 0; i < 10000; i++)<br>00F71051 xor ebx, ebx<br>00F71053 mov esi, edi | int tmp;<br>for (int i = 0; i < 10000; i++)<br>00000076 xor edx, edx<br>00000078 mov dword ptr [ebp-38h], edx |
| for (int j = i; j < 10000; j++)<br>00F71055 mov eax, ebx<br>00F71057 cmp ebx, 2710h<br>00F7105D jge HeapArray + 76h (0F71076h)<br>00F7105F nop | for (int j = i; j < 10000; j++)<br>0000007b mov ebx, dword ptr [ebp-38h]<br>0000007e cmp ebx, 2710h<br>00000084 jge 000000BB<br>00000086 mov esi, dword ptr [edi + 4] |
| { if (items[i] < items[j])<br>00F71060 mov ecx, dword ptr [edi + eax * 4]<br>00F71063 mov edx, dword ptr [esi]<br>00F71065 cmp edx, ecx<br>00F71067 jge HeapArray + 6Eh (0F7106Eh) | { if (items[i] < items[j])<br>00000089 mov eax, dword ptr [ebp-38h]<br>0000008c cmp eax, esi<br>0000008e jae 000001C2<br>00000094 mov edx, dword ptr [edi + eax * 4 + 8]<br>00000098 cmp ebx, esi<br>0000009a jae 000001C2<br>000000a0 mov ecx, dword ptr [edi + ebx * 4 + 8]<br>000000a4 cmp edx, ecx<br>000000a6 jge 000000B0 |
| { int tmp = items[j]; items[j] = items[i];<br>00F71069 mov dword ptr [edi + eax * 4], edx<br>items[i] = tmp;<br>00F7106C mov dword ptr [esi], ecx | 000000a8 mov dword ptr [edi + ebx * 4 + 8], edx<br>items[i] = tmp;<br>000000ac mov dword ptr [edi + eax * 4 + 8], ecx |
| for (int j = i; j < 10000; j++)<br>00F7106E inc eax<br>00F7106F cmp eax, 2710h<br>00F71074 jl HeapArray + 60h (0F71060h) | for (int j = i; j < 10000; j++)<br>000000b0 add ebx, 1<br>000000b3 cmp ebx, 2710h<br>000000b9 jl 00000089 |
| for (int i = 0; i < 10000; i++)<br>00F71076 inc ebx<br>00F71077 add esi, 4<br>00F7107A cmp ebx, 2710h<br>00F71080 jl HeapArray + 55h (0F71055h)<br>} } | for (int i = 0; i < 10000; i++)<br>000000bb inc dword ptr [ebp-38h]<br>000000be cmp dword ptr [ebp-38h], 2710h<br>000000c5 jl 0000007B<br>} } |

What can we see here?

C++ uses 19 instructions against 23 for C#. The difference is not large, but combined with other optimizations I think it can explain the longer execution time of the C# code.

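The C# implementation also raises some questions. It contains two instances of

jae 000001C2

each of which jumps to

000001c2 call 731661B1

Each jump follows a comparison of an index with the value loaded from [edi + 4], which looks like the array bounds check that the CLR performs on element access. These checks apparently also contribute to the run-time difference by introducing additional delays.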
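The cmp / jae pairs in the C# listing look like array bounds checks: the index is compared with the array length, and an out-of-range index branches to a call that raises an error. As an illustration only (the function names are hypothetical and this is not the CLR's actual code), the difference between the two listings resembles the difference between these two C++ functions:

```cpp
#include <cstddef>
#include <stdexcept>

// Unchecked access: a plain load, as in the C++ listing.
int read_unchecked(const int* items, std::size_t index) {
    return items[index];
}

// Checked access: an extra unsigned compare and branch before every load,
// analogous to the cmp / jae pairs in the C# listing.
int read_checked(const int* items, std::size_t length, std::size_t index) {
    if (index >= length) {
        throw std::out_of_range("index is outside the array");
    }
    return items[index];
}
```

In the inner loop of the sort both items[i] and items[j] are read on every iteration, which matches the two checks that appear per iteration in the C# listing.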

Other performance comparisons

Other articles have also measured the performance of C++ and C#. Of those I have come across, the most informative seemed to be Head-to-head benchmark: C++ vs .NET.

In some tests the author of that article favored C# by forbidding the use of SSE2 for C++, so some C++ floating-point results came out about twice as slow as they would be with SSE2 enabled. Other criticism of the author's methodology can be found in the article as well, including a very subjective choice of C++ container for one of the tests.

Nevertheless, setting aside the floating-point tests without SSE2 and allowing for several other features of the testing methodology, the results obtained in that article deserve consideration.

A number of interesting conclusions can be drawn from the measurements:

1. A C++ debug build is noticeably slower than the release build, while the difference between debug and release builds in C# is less significant

2. C# performance under the .NET Framework is noticeably (more than 2 times) higher than under Mono

3. In C++ it is quite possible to find a container that works slower than its C# counterpart, and no optimization will overcome this other than using another container

4. Some file operations in C++ are noticeably slower than their C# counterparts, but their alternatives are just as noticeably faster than the C# counterparts

To sum up for Windows, that article arrives at similar results: C# code is about 10-80% slower than C++ code.

Is -10..-80% a lot?

Suppose that when developing in C# we always use the most optimal solution, which demands very good skills. And suppose we stay within an overall performance loss of 10..80%. What does this cost us? Let's compare these percentages with other indicators of performance.

For example, in 1990-2000 single-threaded processor performance grew by about 50% per year. Since 2004 the growth rate has fallen to only 21% per year, at least until 2011.

A Look Back at Single-Threaded CPU Performance

Projected performance growth figures are very vague. It is unlikely that growth above 21% was shown in 2013 and 2014; moreover, growth is quite likely to be even lower in the future. At least, Intel's plans for mastering new technologies become more modest every year ...

Another angle to consider is the energy efficiency and cost of hardware. For example, here you can see that for top-end hardware, +50% single-thread performance can increase the cost of the processor by 2-3 times.

From the standpoint of energy efficiency and noise, it is quite possible to build an economical, passively cooled PC, but performance has to be sacrificed, and this sacrifice may well amount to 50% or more of the performance of relatively power-hungry and hot, but fast, hardware.

How processor performance will grow is not known exactly, but by a rough estimate, with 21% growth per year a C# application may lag a C++ application in performance by 0.5-4 years. With, for example, 10% growth, the lag would be 1-8 years. However, a real application may lag far less; below we consider why.
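The article does not say how these ranges were computed. They appear consistent with simply dividing the slowdown by the yearly growth rate; that reading is an assumption. The sketch below shows this linear estimate next to a compounded one, which assumes that "80% slower" means a run time 1.8 times longer and that hardware performance compounds yearly. Both function names are hypothetical.

```cpp
#include <cmath>

// slowdown: fractional slowdown of the C# code (0.10 for 10%).
// growth: yearly growth of hardware performance (0.21 for 21%).

// Linear estimate (assumed reading of the article's figures).
double lag_years_linear(double slowdown, double growth) {
    return slowdown / growth;
}

// Compounded estimate: years until (1 + growth)^years reaches (1 + slowdown).
double lag_years_compound(double slowdown, double growth) {
    return std::log(1.0 + slowdown) / std::log(1.0 + growth);
}
```

Under these assumptions the linear estimate gives roughly 0.5 to 3.8 years at 21% growth and 1 to 8 years at 10% growth, in line with the ranges quoted above. The compounded estimate is somewhat lower at the upper end: roughly 3.1 years at 21% growth and 6.2 years at 10% growth.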

I do not presume to assess whether sacrificing 10..80% of performance is worth the savings in development cost. Obviously, that depends on the cost of obtaining those 10..80% in other ways (that is, through hardware). However, the emerging trend suggests that each additional percent of hardware performance will cost more than the previous one, which will probably, sooner or later, lead to a situation where it is cheaper to gain performance by optimizing the code.

What is the real score?

On the one hand, you are unlikely to write code so optimal that it always shows maximum performance.

On the other hand, something else matters more: how much of your program's run time is spent in your code, and how much in system code?

For example, if your code accounts for 1% of the execution time of an application or service, even a 10-fold drop in that code's performance would not greatly affect the application's speed: the performance hit would be only about 10%.

It is quite another matter when about 100% of an application's execution time is spent in your code rather than in OS code. In that case you can easily get a performance loss of 80% or even more.

Conclusions

Of course, none of the above means that you need to urgently switch from C# to C++. First, development in C# is cheaper; second, for a number of tasks the performance of modern processors is more than sufficient, and even optimization within C# is unnecessary. But it seems important to me to pay attention to overhead, that is, the price of using a managed environment, and to estimate that price. Obviously, depending on market conditions and the tasks that arise, this price may be significant. Other aspects of comparing C# and C++ can be found in my previous article, Choosing Between C++ and C#.

Source: https://habr.com/ru/post/266163/
