Fun with 40 Gigabit

My very first post on Habr, written three years ago, was devoted to 10G Ethernet and Intel 10G network cards. That was no accident: networking is my profession. Since then, posts on this topic have hardly appeared in the Intel blog; as they say, there was no occasion. Now there is one, and quite a weighty one. The new generation of Intel network cards based on the X/XL710 controller brought not only improved performance and new features, but also a fundamentally new product for the company: 40G Ethernet network cards for servers. It's time to talk about the current state of affairs in 40 gigabit.

"4 times better than 10G"

The IEEE 802.3ba standard describing 40/100G Ethernet was published more than 5 years ago. The forecasts back then were highly optimistic: by 2015 (that is, now), 40/100G ports were predicted to make up at least a quarter of all Ethernet ports. Living in that future, we can say definitively that this did not happen. Why? Over the past three years nothing has fundamentally changed, so let me quote myself: “... the main reason, in my opinion, is that in most cases such speeds are simply not needed; except for very large data centers or giant cloud platforms, one or several 10G links cope perfectly well with the traffic assigned to them.”

Back in those optimistic times I attended a presentation by a well-known vendor. Asked “Why are you promoting 40G?”, the speaker answered concisely: “Because it is 4 times better than 10G.” The market, however, once again proved pragmatic. An operator is unlikely to take its partners on a tour of the machine room just to proudly show off a 40G module in the router! What the end user needs is the required performance at an optimal cost, even if that means using older technology.


In my observation, the situation began to change slowly about two years ago, yet, typically, 40G ports are still often broken out into 4x10G (which they do easily, by design), once again confirming the thesis above. Overall the process is very slow: telecom operators, even the largest ones, still prefer to get by with aggregating n x 10G links, and in the data center Ethernet has to compete with InfiniBand QDR/FDR, which uses channel capacity more economically and offers lower latency. For those who like numbers, here are the results of Ethernet vs InfiniBand testing with the Intel MPI Benchmark.

| | 40G Ethernet | InfiniBand QDR | InfiniBand FDR |
|---|---|---|---|
| Latency, µs | 7.8 | 1.5 | 0.86-1.4 |
| Throughput, MB/s | 1126 | 3400 | 5300-6000 |

40G inside

The IEEE 802.3ba standard offered several options for data transmission at 40 Gbit/s.

| Specification | Medium | Max. distance |
|---|---|---|
| 40GBase-KR4 | Backplane | 1 m |
| 40GBase-CR4 | Copper twinax cable | 7 m |
| 40GBase-SR4 | Multimode fiber, 850 nm | 100 m (OM3), 150 m (OM4) |
| 40GBase-LR4 | Single-mode fiber, 1310 nm | 10 km |

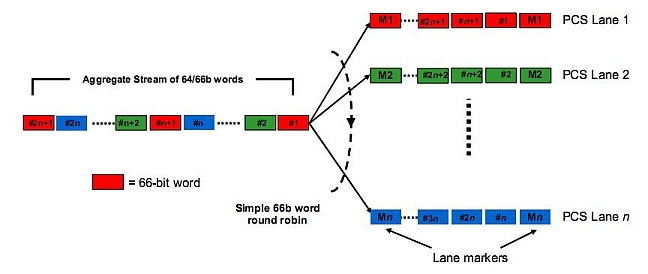

All 40G specifications whose names end in 4 share one feature: data is transmitted over 4 lanes simultaneously. Distribution across the lanes is performed by a function the standard calls MLD (Multilane Distribution). Its input is a data stream encoded with 64b/66b; MLD distributes the 66-bit blocks round-robin across the parallel lanes, which can be physically separate fibers (40GBase-SR4) or different wavelengths (λ) within a WDM (Wavelength Division Multiplexing) system, as in 40GBase-LR4.
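The round-robin idea fits in a few lines of Python. This is a toy model, not the 802.3ba PCS itself: each 66-bit block is represented by an arbitrary integer, and the helper names `distribute` and `merge` are hypothetical. Block i goes to lane i mod 4, and the receiver rebuilds the stream by taking one block from each lane in turn.

```python
from typing import List

NUM_LANES = 4  # the "4" in 40GBase-SR4/LR4


def distribute(blocks: List[int], num_lanes: int = NUM_LANES) -> List[List[int]]:
    """Round-robin: block i is sent on lane i % num_lanes."""
    lanes: List[List[int]] = [[] for _ in range(num_lanes)]
    for i, block in enumerate(blocks):
        lanes[i % num_lanes].append(block)
    return lanes


def merge(lanes: List[List[int]]) -> List[int]:
    """Inverse of distribute(): take one block from each lane in turn."""
    out: List[int] = []
    depth = max((len(lane) for lane in lanes), default=0)
    for j in range(depth):
        for lane in lanes:
            if j < len(lane):
                out.append(lane[j])
    return out


blocks = list(range(10))          # stand-ins for ten 66-bit blocks
lanes = distribute(blocks)
print(lanes)                      # [[0, 4, 8], [1, 5, 9], [2, 6], [3, 7]]
print(merge(lanes) == blocks)     # True
```

With ten blocks, lanes 0 and 1 carry three blocks each and lanes 2 and 3 carry two; as long as the lanes arrive intact and in order, `merge` restores the original sequence.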

So that the receiver can reassemble the traffic correctly when the lanes are recombined (the lanes may arrive with different delays, or skew), alignment markers are periodically inserted into the stream of 66-bit blocks on each lane.
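Why the markers matter becomes clear once lanes arrive with different delays or even in a different physical order. The sketch below is a toy model under stated assumptions: markers are modeled as `("AM", lane_id)` tuples rather than the real fixed-format 66-bit marker blocks, idle filler is modeled as `-1`, and all function names are hypothetical. The simplified receiver discards whatever precedes the first marker on each lane (a real receiver instead buffers the early lanes until the markers line up), restores the logical lane order from the lane number carried in the marker, and strips the markers.

```python
from typing import Dict, List, Tuple, Union

# A lane carries data blocks (ints) or alignment markers ("AM", lane_id).
Block = Union[int, Tuple[str, int]]


def is_marker(block: Block) -> bool:
    return isinstance(block, tuple) and block[0] == "AM"


def insert_markers(lanes: List[List[int]], period: int) -> List[List[Block]]:
    """Put a marker carrying the lane number before every `period` data blocks."""
    marked: List[List[Block]] = []
    for lane_id, lane in enumerate(lanes):
        out: List[Block] = []
        for i, block in enumerate(lane):
            if i % period == 0:
                out.append(("AM", lane_id))
            out.append(block)
        marked.append(out)
    return marked


def deskew_and_reorder(rx_lanes: List[List[Block]]) -> List[List[int]]:
    """Align each lane on its first marker, sort lanes by marker lane number, drop markers."""
    aligned: Dict[int, List[int]] = {}
    for lane in rx_lanes:
        start = next(i for i, b in enumerate(lane) if is_marker(b))
        lane_id = lane[start][1]
        aligned[lane_id] = [b for b in lane[start:] if not is_marker(b)]
    return [aligned[k] for k in sorted(aligned)]


blocks = list(range(12))
lanes = [blocks[k::4] for k in range(4)]   # round-robin over 4 lanes
marked = insert_markers(lanes, period=2)
# Simulate skew (lane k arrives k idle blocks late) and a reversed lane order.
rx = [[-1] * k + lane for k, lane in enumerate(marked)][::-1]
print(deskew_and_reorder(rx) == lanes)     # True
```

Without the markers, the receiver would have no way to tell which physical lane is logical lane 0 or where each lane's data actually starts.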

Wavelength multiplexing, long established in Ethernet, proved very useful here, although it is a bit of a stretch: WDM equipment has long been used on MAN/WAN trunk lines, so why do we need yet another CWDM under a different name? The multi-fiber variant, on the other hand, certainly has prospects.
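To make the contrast between the two approaches concrete, here is a small Python sketch (not from the original post; function names are hypothetical). 40GBase-LR4 places its four lanes on the CWDM grid, 20 nm apart starting at 1271 nm, so a duplex link needs only two fibers, while 40GBase-SR4 uses a separate fiber for each lane in each direction.

```python
from typing import Dict, List

# 40GBase-LR4 places its four lanes on the CWDM grid: 20 nm spacing from 1271 nm.
LR4_FIRST_NM = 1271
CWDM_SPACING_NM = 20


def lr4_wavelengths(num_lanes: int = 4) -> List[int]:
    """Center wavelength of each LR4 lane, in nm."""
    return [LR4_FIRST_NM + CWDM_SPACING_NM * lane for lane in range(num_lanes)]


def fibers_per_link(num_lanes: int = 4) -> Dict[str, int]:
    """Fibers needed for a duplex link: WDM shares one fiber per direction,
    the multi-fiber variant uses one fiber per lane per direction."""
    return {"40GBase-LR4": 2, "40GBase-SR4": 2 * num_lanes}


print(lr4_wavelengths())   # [1271, 1291, 1311, 1331]
print(fibers_per_link())   # {'40GBase-LR4': 2, '40GBase-SR4': 8}
```

The trade-off is visible at a glance: LR4 saves fiber at the cost of WDM optics, while SR4 spends fiber to keep the optics simple and cheap.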

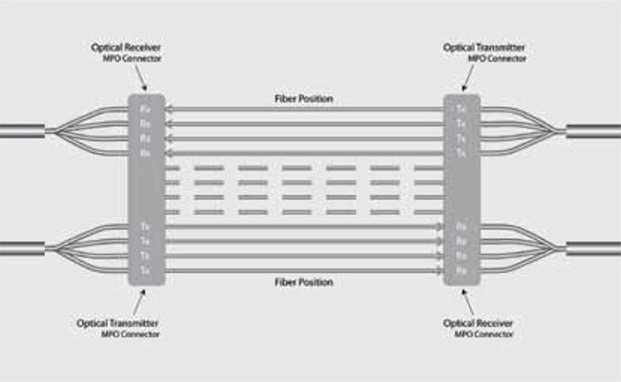

The strength of the 40GBase-SR4 specification (the one we are focusing on) is its economy. It is currently the most affordable 40G solution for LAN distances and, in practice, effectively the only one in the 40G family that is in active use. Physically, 40GBase-SR4 connects through a 12-fiber MPO (Multi-fiber Push-On) connector.

In a 12-fiber MPO the fibers sit in a single row between the guide pins; 40GBase-SR4 uses 4 pairs of them (four to transmit, four to receive). For 100G Ethernet (100GBase-SR10), the MPO connector has 24 fibers arranged in two rows.
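The fiber layout and the lane arithmetic can be summarized in a short Python sketch (names are hypothetical). It assumes the usual SR4 assignment of transmit lanes to positions 1-4 and receive lanes to positions 9-12, with the middle four unused, and a per-lane line rate of 10.3125 GBd; stripping the 64b/66b overhead from four such lanes gives exactly 40 Gbit/s.

```python
from typing import Dict

LANE_RATE_GBD = 10.3125   # line rate of each 40GBase-SR4 lane, GBd
NUM_LANES = 4


def mpo12_sr4_roles() -> Dict[int, str]:
    """Role of each fiber position in a 12-fiber MPO used for 40GBase-SR4."""
    roles: Dict[int, str] = {}
    for pos in range(1, 13):
        if pos <= 4:
            roles[pos] = "Tx"
        elif pos >= 9:
            roles[pos] = "Rx"
        else:
            roles[pos] = "unused"
    return roles


def payload_rate_gbps(lanes: int = NUM_LANES, lane_rate_gbd: float = LANE_RATE_GBD) -> float:
    """Aggregate data rate after removing 64b/66b overhead (2 sync bits per 66)."""
    return lanes * lane_rate_gbd * 64 / 66


roles = mpo12_sr4_roles()
print([p for p, r in roles.items() if r == "Tx"])   # [1, 2, 3, 4]
print([p for p, r in roles.items() if r == "Rx"])   # [9, 10, 11, 12]
print(payload_rate_gbps())                           # 40.0
```

The four unused positions in the middle are exactly why 12-fiber trunks are sometimes considered wasteful for SR4.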

On the equipment side, the 40GBase-SR4 optical port takes the form of a QSFP+ slot (the counterpart of SFP for 1G and SFP+ for 10G), so a 40GBase-SR4 transceiver has an MPO receptacle on one side and a QSFP+ connector on the other.

What does the 710 give us?

Now let's turn to Intel's networking products. First, a bit of history. Network cards are not marketing-driven products, so new generations appear solely for technical reasons and, as noted in the introduction, infrequently.

Intel's breakthrough in high-speed Ethernet was the Intel 82598EB network chip, created 10 years ago, which for many years became the de facto benchmark for 10G Ethernet. A few years later the line gained the Intel 82599EN chip, which brought a number of improvements: a smaller process node, a higher data transfer rate on the bus, and more. Incidentally, few people know that Intel network chips, like processors, have code names: for example, the first was Bellefontaine and the second Spring Fountain. When the next update of the network controller line came out, Intel decided there was no point in racking brains over long model numbers and gave it a shorter, clearer name, X540; the cards based on it got the same name. With this background we arrived at the new generation of X/XL710 chips. For the curious, the new chip's code name is Fortville. Four card models are currently available, and that number is unlikely to grow.

| Card | Speed | Ports | Module options |
|---|---|---|---|
| Intel X710-DA2 | 10G | 2 x SFP+ | 10GBase-LR, 10GBase-SR, DA |
| Intel X710-DA4 | 10G | 4 x SFP+ | 10GBase-LR, 10GBase-SR, DA |
| Intel XL710-QDA1 | 40G | 1 x QSFP+ | 40GBase-SR4, 40GBase-LR4, DA |
| Intel XL710-QDA2 | 40G | 2 x QSFP+ | 40GBase-SR4, 40GBase-LR4, DA |

Part numbers for Intel optical modules and copper cables can be found via the links on each card's page.

Why greet a new line of network cards with such loud words? Because the X/XL710 is new across the board, both technologically and ideologically. Let's start with ideology. Previously, Intel preferred to ship 10G cards with fixed ports, fearing (sometimes rightly) that a poor-quality transceiver installed in the card, and the resulting malfunction of the whole system, would damage its reputation. However, this approach was inconvenient and inflexible for the end user. So Intel decided that the entire X710 family would come exclusively with SFP+ slots (QSFP+ on the 40G cards based on the XL710 chip).

Now about technology. The 710 series was the first to support PCIe 3.0, at 8 GT/s per lane. Eight lanes therefore provide enough headroom for four 10G ports or one 40G port on a single card (two 40G ports mean oversubscription); naturally, these configurations also appeared for the first time. On top of that, the process node shrank again, now to 28 nm.
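The headroom claim is easy to check with back-of-the-envelope arithmetic. This Python sketch (function names hypothetical) counts only the 128b/130b line coding of PCIe 3.0 and deliberately ignores TLP/DLLP headers and flow control, so real usable bandwidth is somewhat lower than the figure it prints.

```python
PCIE3_GT_PER_LANE = 8.0      # PCIe 3.0 signaling rate, GT/s per lane
PCIE3_CODING = 128 / 130     # 128b/130b line coding efficiency


def pcie3_gbps(lanes: int) -> float:
    """Per-direction link bandwidth after line coding only.
    Ignores TLP/DLLP headers, flow control and other protocol overhead."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_CODING


def load_ratio(ports: int, port_gbps: float, lanes: int = 8) -> float:
    """Port capacity divided by bus capacity; above 1.0 means oversubscription."""
    return ports * port_gbps / pcie3_gbps(lanes)


bus = pcie3_gbps(8)
print(f"PCIe 3.0 x8: {bus:.1f} Gbit/s per direction")
for ports, speed in [(4, 10), (1, 40), (2, 40)]:
    ratio = load_ratio(ports, speed)
    print(f"{ports} x {speed}G: {ratio:.2f}", "oversubscribed" if ratio > 1 else "fits")
```

An x8 link comes out at roughly 63 Gbit/s per direction: four 10G ports or one 40G port use about 63% of it, while two 40G ports would need about 127%, which is why the dual-port XL710 card cannot run both ports at full line rate simultaneously.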

So a server now has 40G at its disposal, either as a single 40G port or as four 10G ports on one card. In light of the second section of this post, the question arises: does it really need that? In my opinion, this is exactly a case where such an adapter is justified. Here are my arguments.

- Servers with several 10G interfaces are no longer rare, for a variety of reasons: network topology, routing specifics, application requirements, and so on. A 4-port card saves space in the server, which is welcome given the chronic shortage of it. Besides, 4 ports on one card will cost you substantially less than separate cards.

- Higher port density on servers lets inquisitive minds and skillful hands build interesting hardware/software solutions for juggling traffic. The range of options is very wide: monitoring, filtering at any layer, aggregation, load balancing and so on; as they say, DPDK is in your hands.

- Powerful virtualization platforms are also a very real use case, and they too want a fatter pipe. For some application servers, I think, 40G will not be superfluous either.

Judging by the pace of things, my next post on this topic will be about an Intel 100G card. That will surely happen too, sooner or later. Or perhaps something else interesting will come along first.

Source: https://habr.com/ru/post/265975/
