The Yandex validator API, and why do markup validators give different answers?

Some time ago we released an API for our structured data validator. Today I want to talk both about the API and about validators in general, for example to explain why different validators return different results.

Validators are of different types and are developed for different purposes. In general, they can be divided into two types: universal and specialized. Universal - our validator , Google's Structured data testing tool , Validator.nu , Structured Data Linter , Markup Validator from Bing - check several standards of marking at once. At the same time, validators from search engines also check markup for compliance with the documentation for their products based on it. Specialized validators, such as the JSON-LD Playground , Open Graph Object Debugger , are tools from the developers of the standards themselves. Using the Open Graph Object Debugger, you can check the correctness of the Open Graph markup, and the JSON-LD Playground shows how the JSON-LD markup will be handled by robots.

')

We took various markup examples and compared the responses of these validators to find the best one.

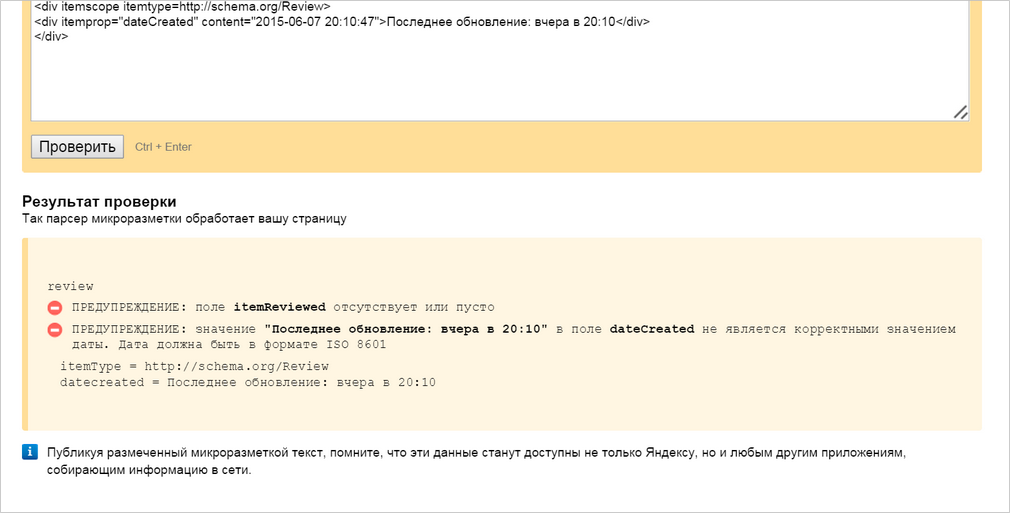

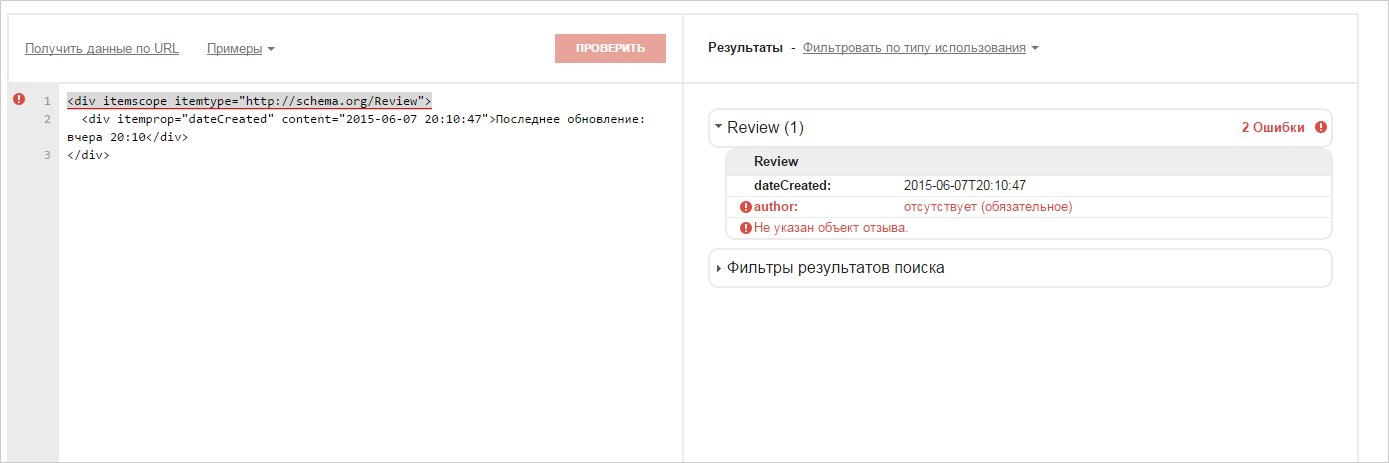

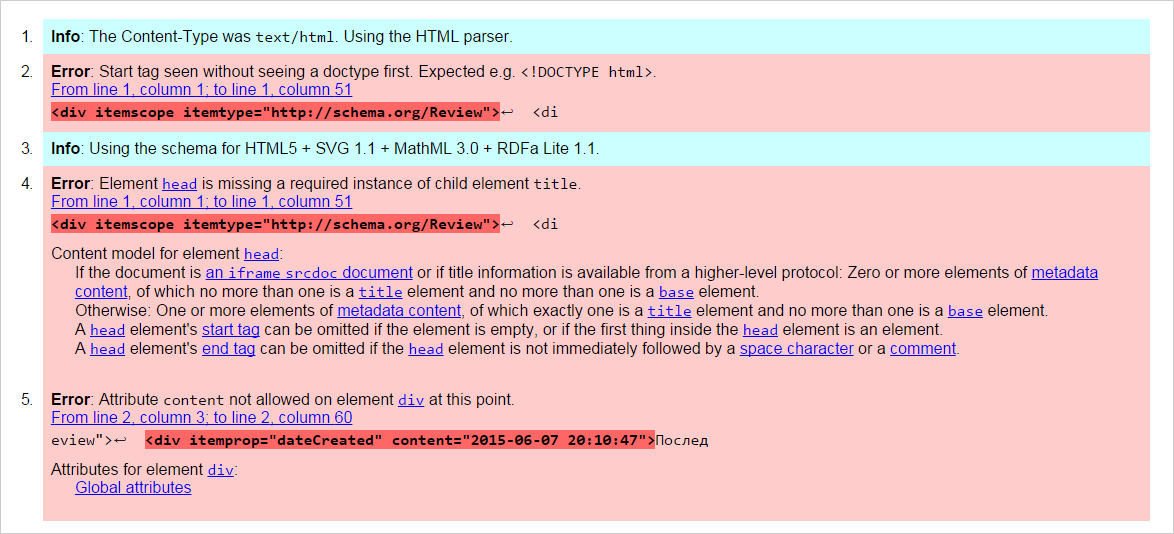

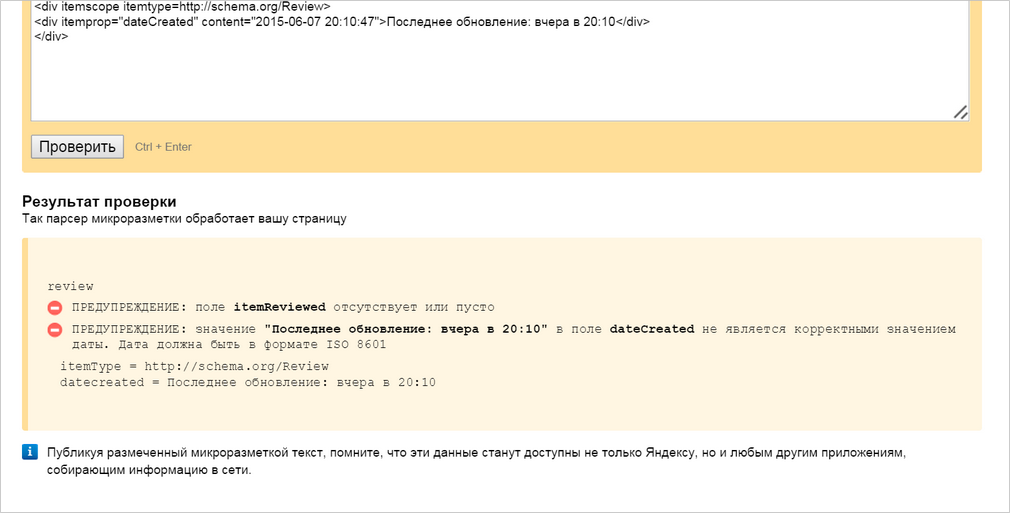

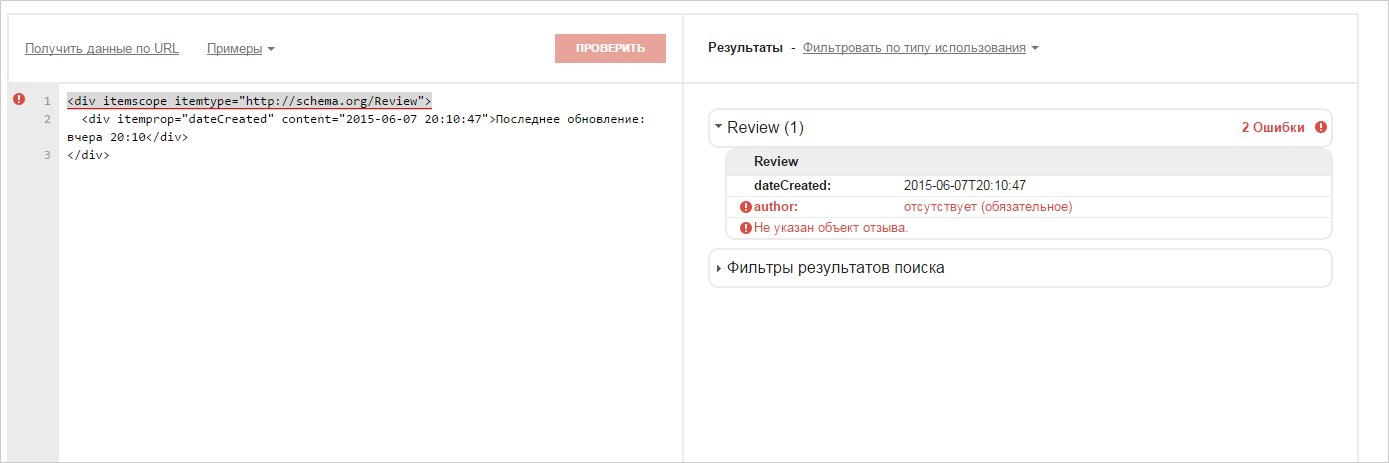

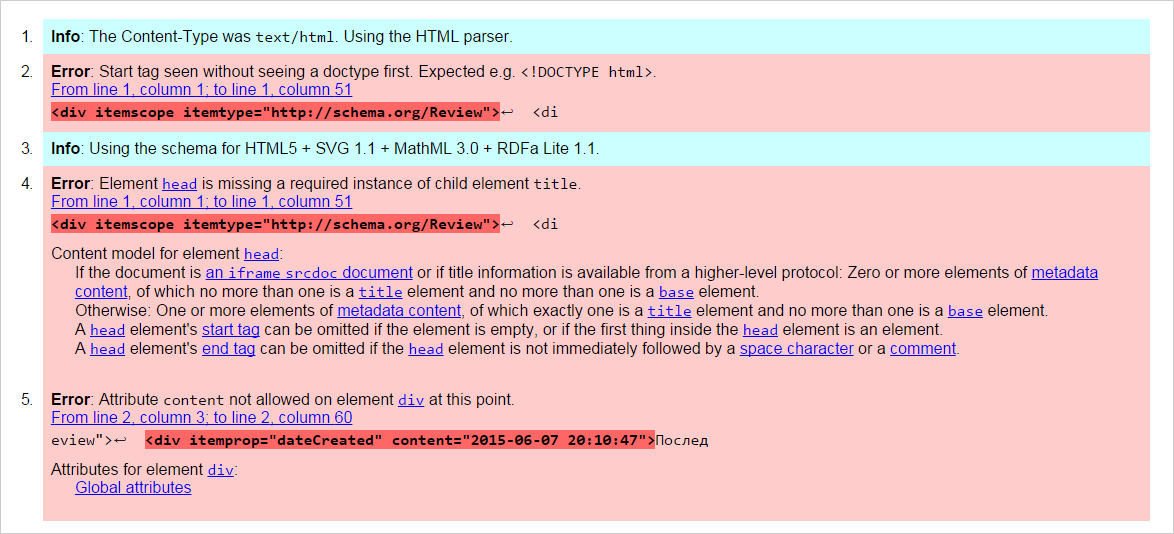

1. Use content in tags other than meta.

The microdata specification does not provide for the use of content in attributes other than meta tags. But lately, such use is becoming more common, so it is interesting to see how different validators parse such an example.

Markup example:

The Yandex Validator ignores content in tags other than meta (we are now adding the ability to use such a construct both in the parser and in the validator due to the fact that this is becoming a common practice):

The Google Validator is producing product errors:

Validator.nu warns you that you can't use the content attribute with a tag, and offers to see which attributes you can use:

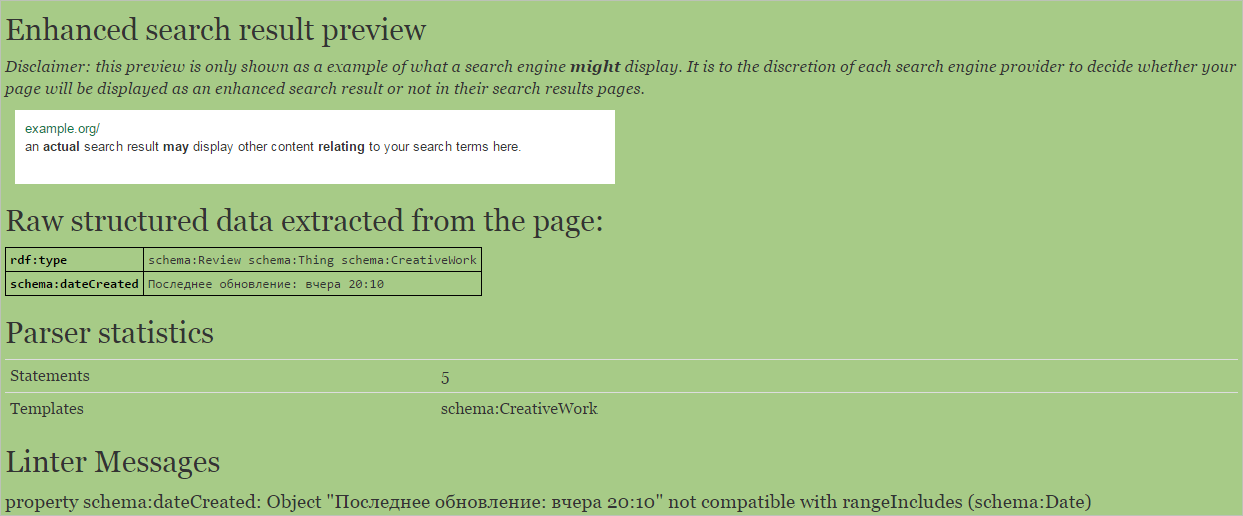

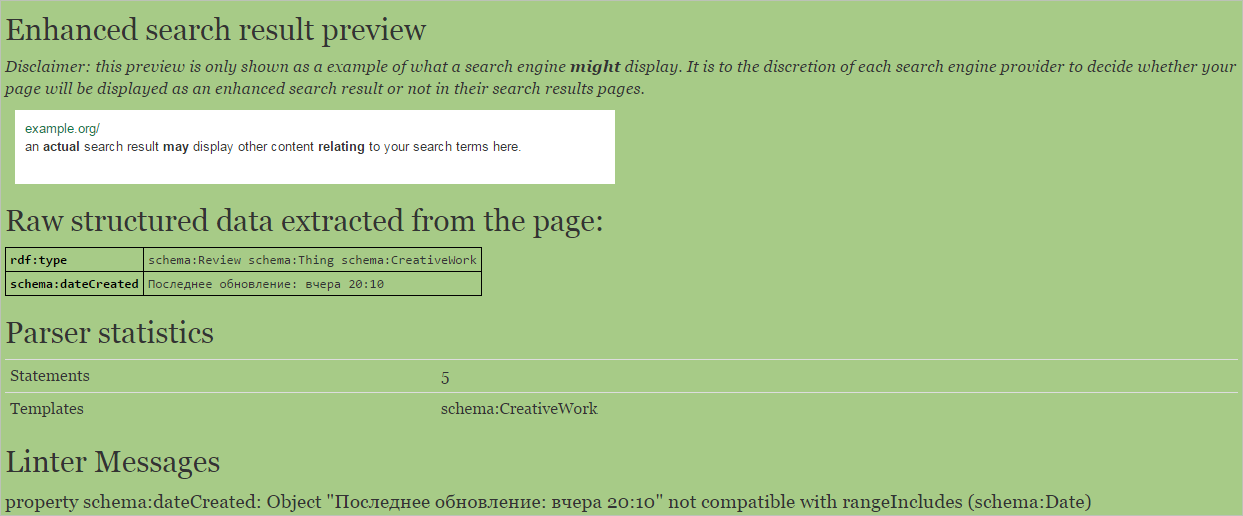

Structured Data Linter, like the Yandex validator, ignores content and warns about the wrong time format:

How correct? We also ask this question. Until recently, we believed that we should not ignore the requirements of the microdata specification. But due to the fact that in the examples of the documentation Schema.org this behavior is often found, we are going to more gently check such cases in the validator and use the data from the content attribute in the search.

2. Article with markup Open Graph and Applinks.

Open Graph is a long-known markup that helps customize the display of links on social networks, and Applinks is the increasingly popular standard for cross-platform application communications. Let's see how well the validators are familiar with it.

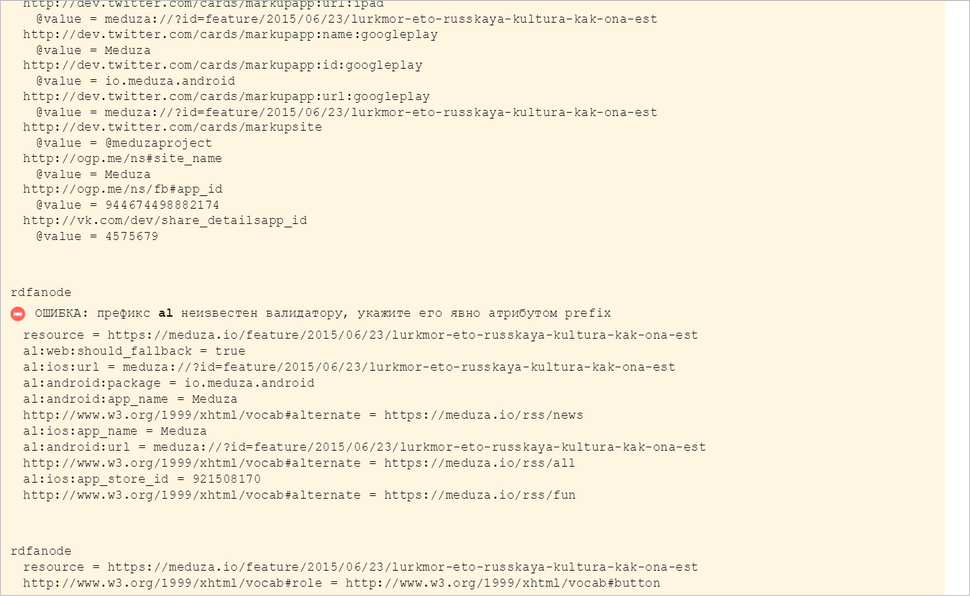

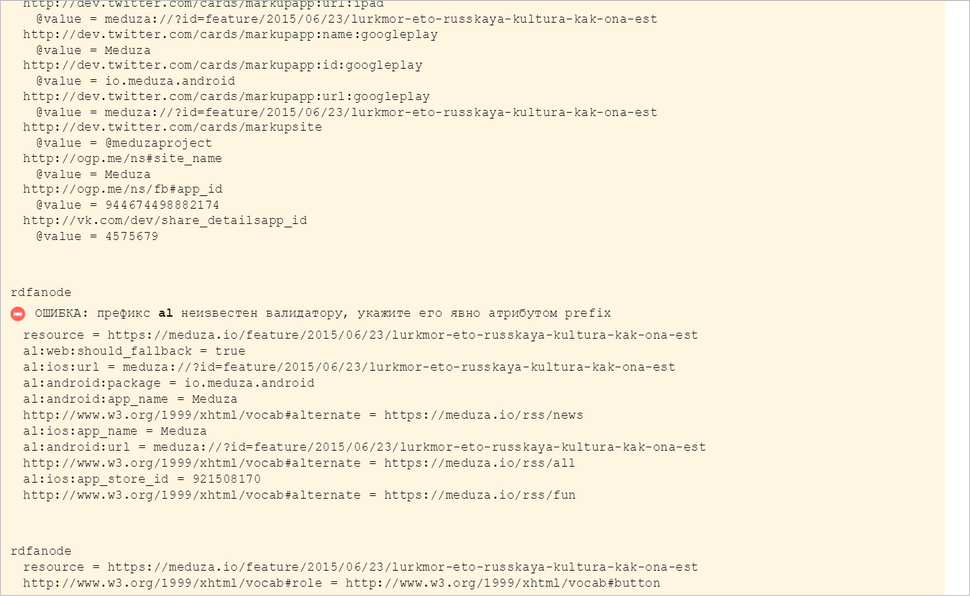

Yandex.Validator shows all the results that are found. At the same time, it warns about the undefined prefix al:

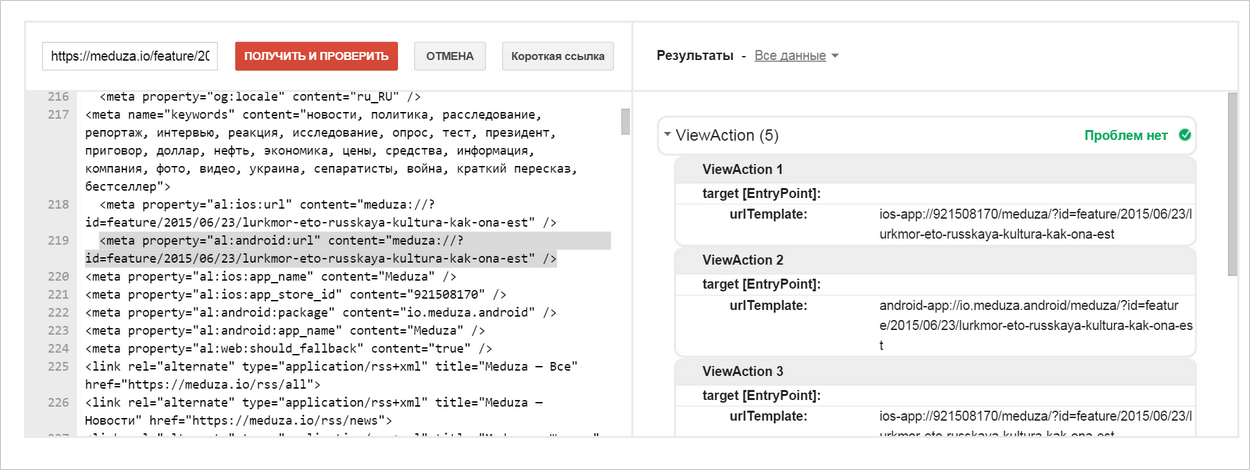

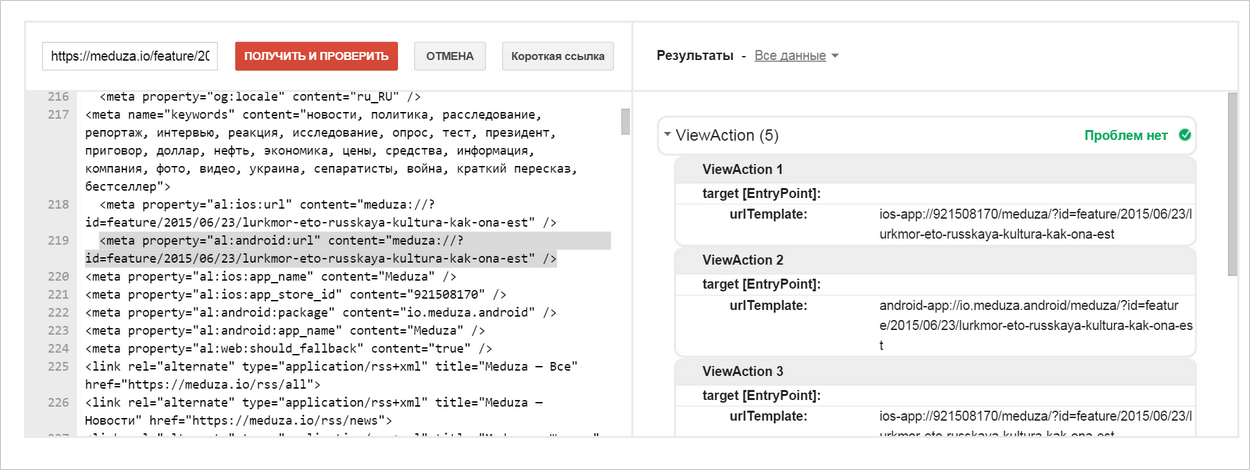

The Google Validator only shows Applinks markup, without Open Graph and other markup. There are no errors and the al prefix is recognized as the default prefix:

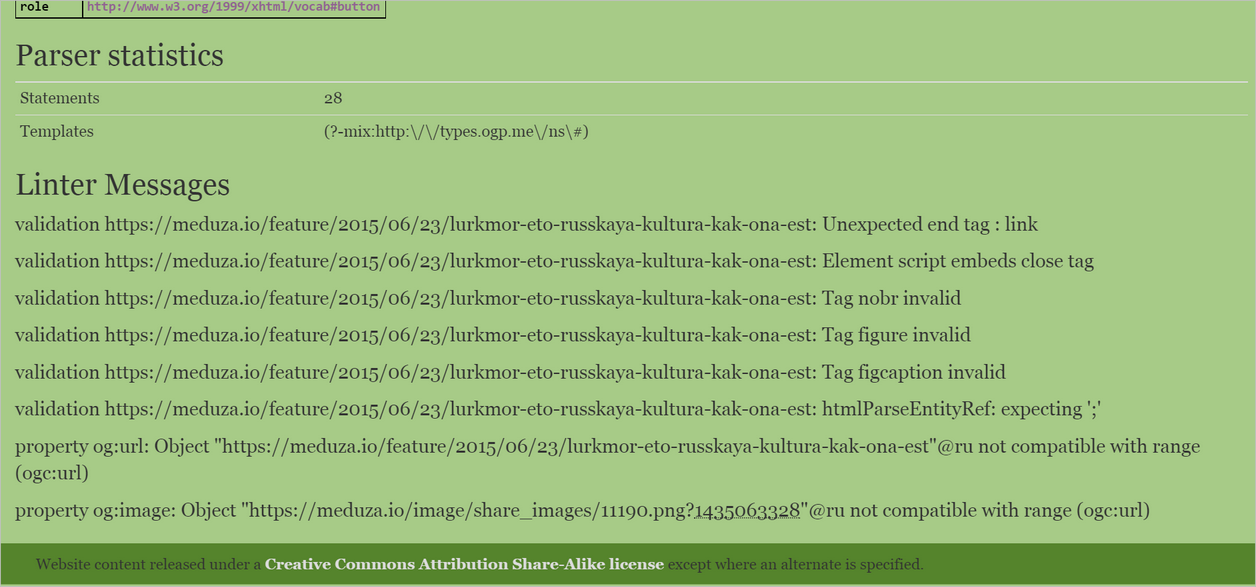

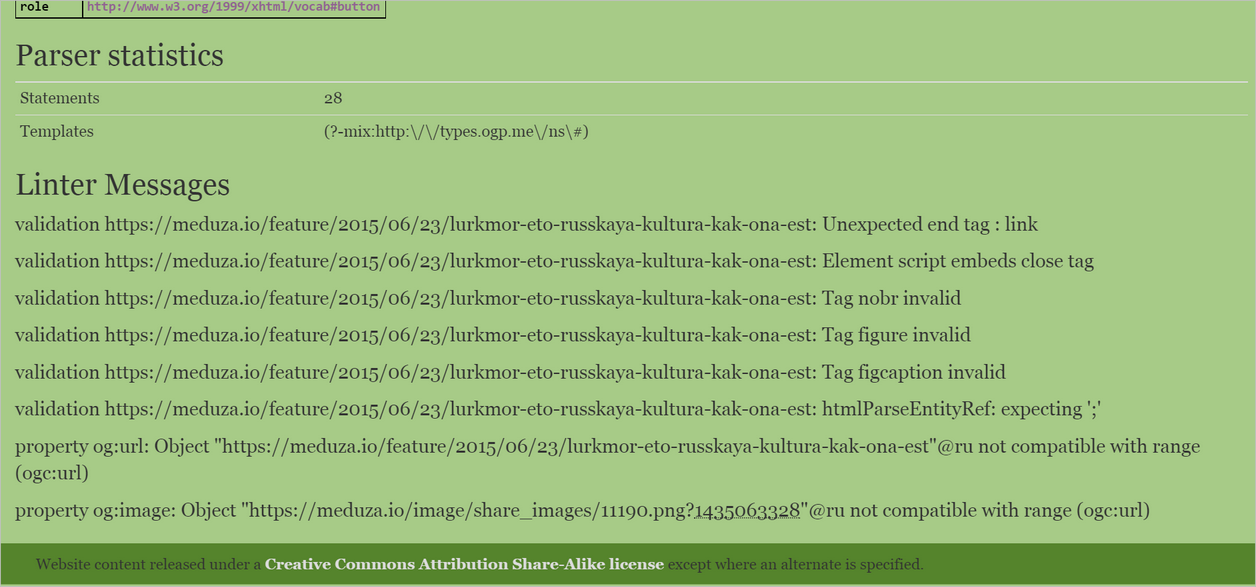

Structured Data Linter does not expand RDFa, warns about HTML errors and incorrect data type for og: url:

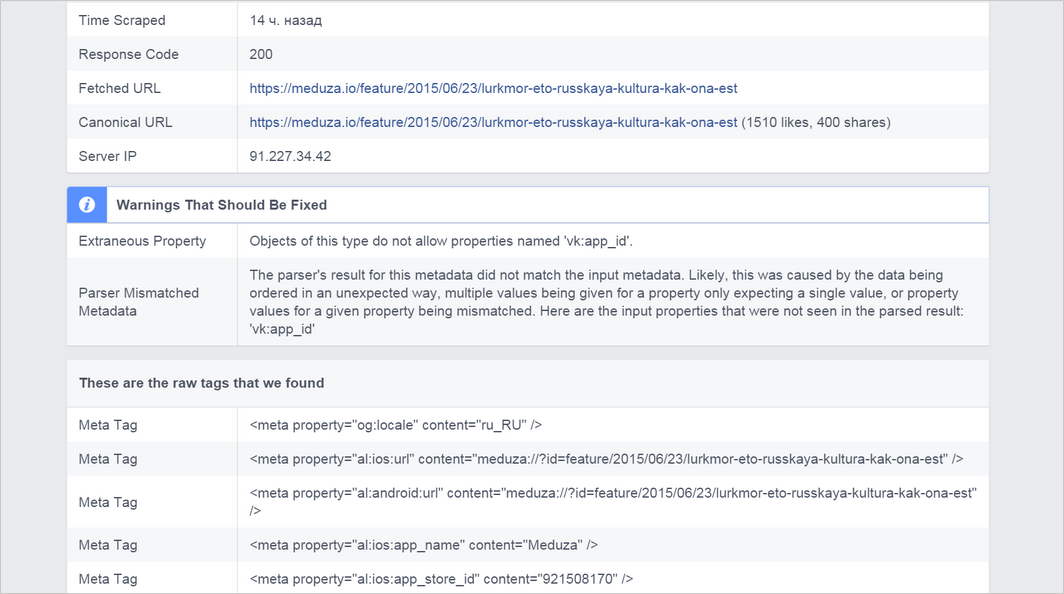

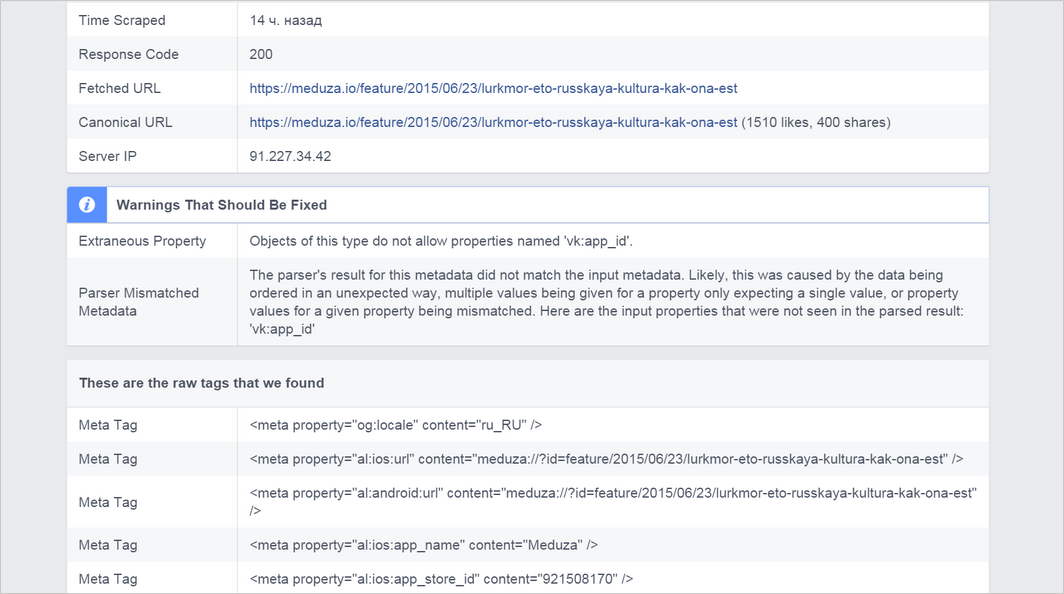

Open Graph Object Debugger warns about vk: app_id. Apparently, he does not know about him because of his foreign nature:

And also recognizes Applinks:

Validator.nu when checking this page does not produce any results.

How correct? According to the rules, all prefixes that are not defined in the RDFa specification must be explicitly indicated by the prefix attribute. But, as in the case of the content attribute, practice is not always consistent with theory. And many markup consumers, such as Vk.com, do not require explicit reference to the prefix. Therefore, recently we began to consider some of the most popular prefixes (vk, fb, twitter, and some others) as default prefixes and stopped warning about them. Others (for example, al) we have not yet added to the list of prefixes by default and expect them to be explicitly defined by webmasters. In any case, we recommend explicitly specifying all the prefixes that you use on your page.

3. JSON-LD markup with a context other than Schema.org.

Markup example:

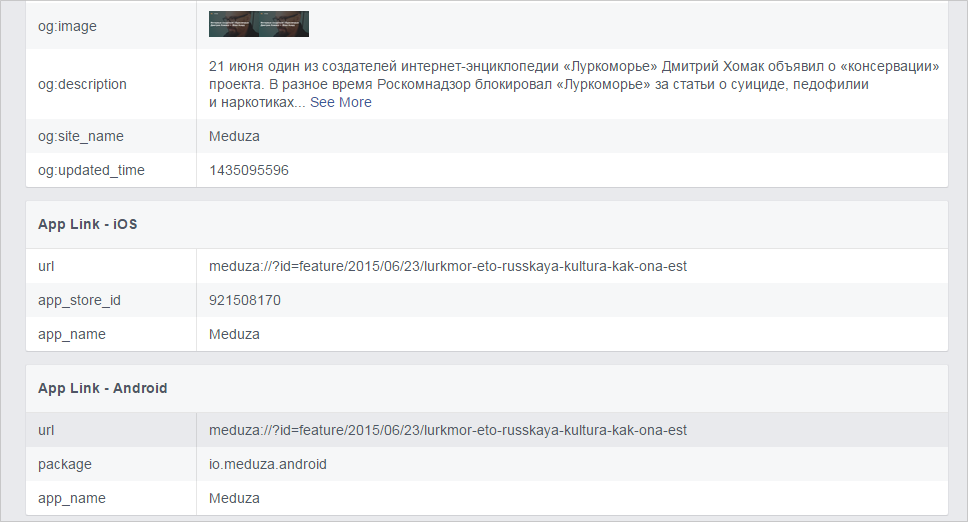

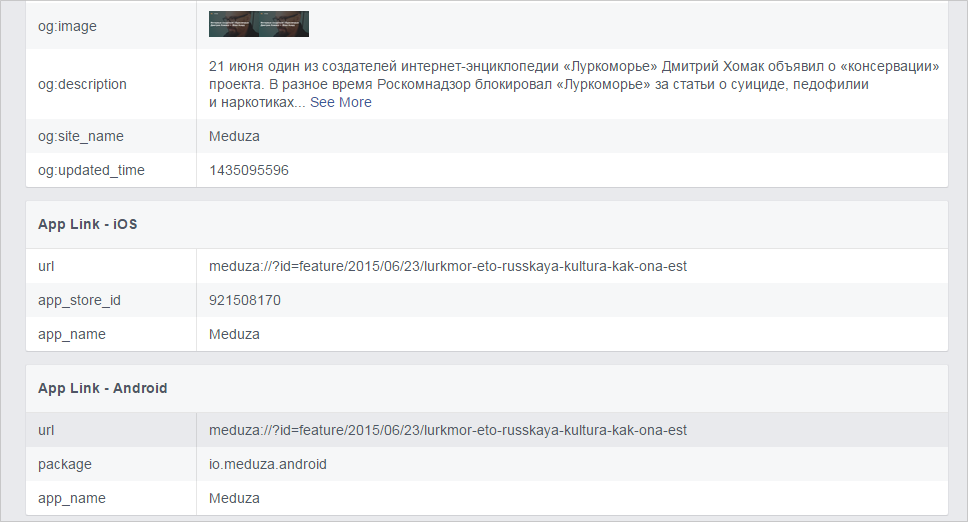

Yandex.Validator expands context:

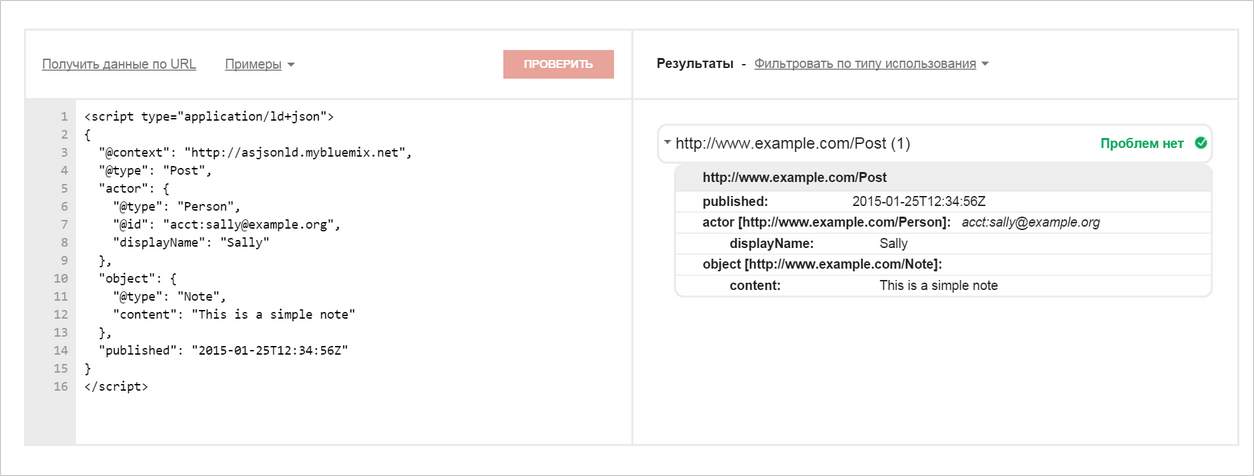

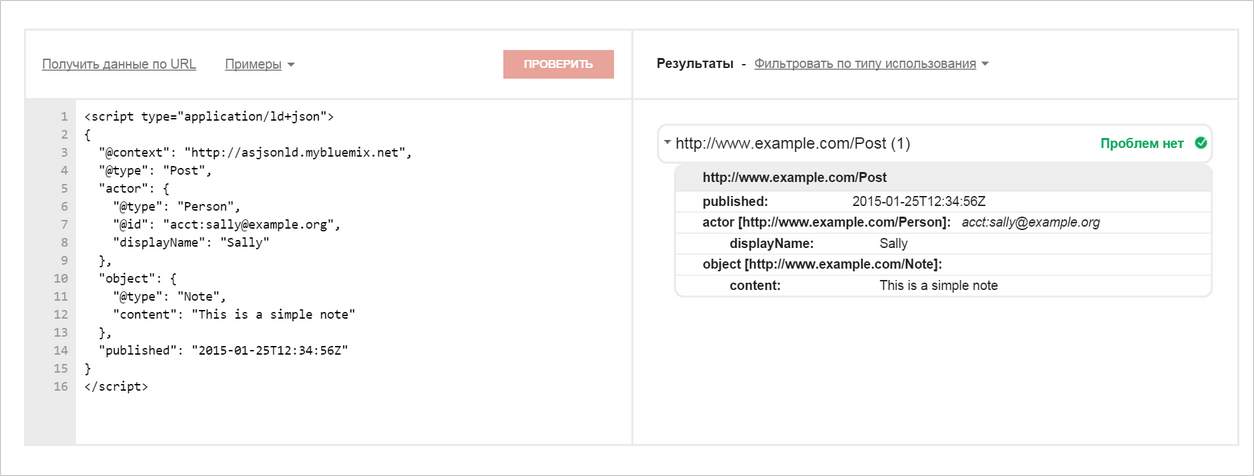

The Google Validator substitutes example.com instead of context:

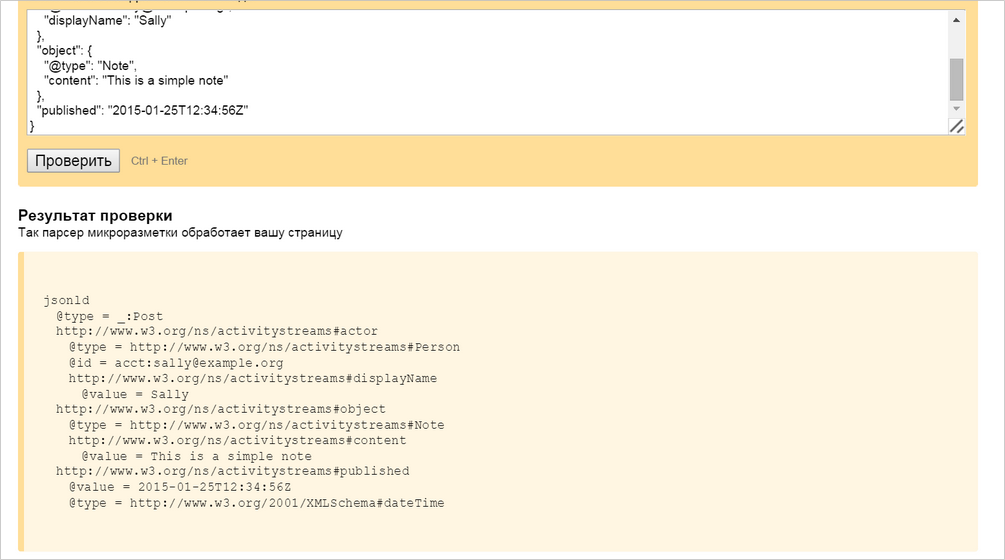

JSON-LD Playground parses the same way as Yandex.Validator:

The rest validators do not understand such an example. It seems that the best test result is obvious here.

4. An example with JSON-LD and an empty context.

Markup example:

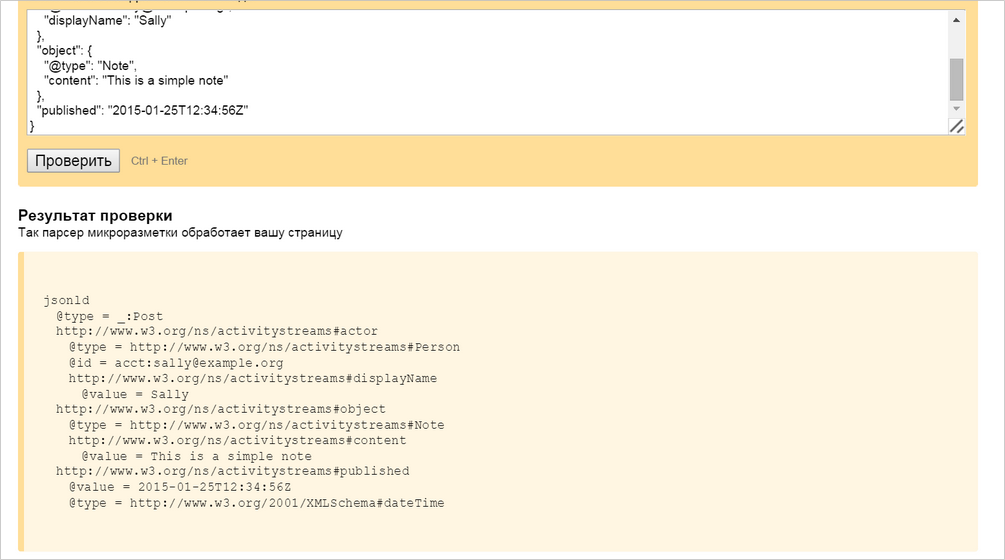

JSON-LD Playground warns about empty context:

Yandex Validator also warns of the impossibility of expanding such a context:

The validator from Google replaces the context with www.example.com and does not see any errors:

How correct? It seems more honest here JSON-LD Playground. We looked at them in the process of writing this article and also began to show a similar warning.

Comparing different validators turned out to be very interesting and useful, we ourselves were surprised by the results. And after some examples, they ran to improve their validator :) In general, it’s hard to say which service is the best: each validator solves the same problem in his own way. Therefore, when choosing a validator, we advise you to keep this in mind and focus on the goals for which you added markup.

And if you need a validation automation, welcome to the ranks of API users. Already, the validator API is used by different projects. First of all, these are large sites with a large number of pages that use the API to automatically check the markup (most of which is commodity). In addition, a service that monitors the state of the site from an optimization point of view, using the API, displays information about the presence and correctness of the micromarking.

In general, using the micromarking validator API you can:

The API has the same buns that are in our usual validator:

Speaking of specifications: when developing the API, we found an interesting fact that showed that the standards themselves do not always correspond to them.

Speech about the most popular dictionary today, which is used in the syntax of RDFa - Open Graph. He gained enormous popularity for two main reasons: the obvious benefits of using to control the appearance of links in social networks and ease of implementation. Ease of implementation is achieved largely due to the fact that Open Graph is recommended to place the markup in the head block with a set of meta tags. But this imposes certain restrictions. One of which is the implementation of nesting and arrays. In classic RDFa, nesting is determined based on a hierarchy of HTML tags. In this case, the order of the properties in RDFa is ignored. HTML tags cannot be embedded in nesting, so nesting in Open Graph is implemented in an unusual way. If you need to specify nested properties, they are indicated with a colon after the parent. For example, the url for an image (og: image) is set using og: image: url. Such properties in the specification are called structured. If you want to set an array of structured properties, you just need to specify them in the correct order. For example, in the example below, three images are shown. The first has a width of 300 and a height of 300, nothing is said about the dimensions of the second, and the third has a height of 1000.

For API version 1.0, we modified the RDFa parser to fully comply with the official specification (previously, some complex cases were not handled correctly). During testing, we found that structured properties are processed in a very strange way. It turned out that the point is that the position in the HTML code in RDFa is absolutely not important. The first reaction of our developer was to make a strike against the incorrect syntax in the Open Graph and to call them to order. But then we, of course, fixed everything.

This example once again shows how complex and contradictory the world of micromarking is :) We hope that our products will improve the lives of those who work with it.

If you have questions about the validator API, ask them in the comments or use the feedback form . The magic key to the API can be obtained here .

Mark your sites, check the markup, get beautiful snippets - and let everything be fine :)

Validators come in different types and are built for different purposes. Broadly, they fall into two groups: universal and specialized. Universal validators (our validator, Google's Structured Data Testing Tool, Validator.nu, Structured Data Linter, and Bing's Markup Validator) check several markup standards at once. Validators from search engines additionally check markup against the documentation of the products built on it. Specialized validators, such as the JSON-LD Playground and the Open Graph Object Debugger, are tools from the developers of the standards themselves. With the Open Graph Object Debugger you can check whether Open Graph markup is correct, and the JSON-LD Playground shows how robots will process JSON-LD markup.

We took various markup examples and compared the responses of these validators to find the best one.

1. Using the content attribute on tags other than meta.

The microdata specification does not provide for the content attribute on any tag other than meta. Lately, though, such use has become more common, so it is interesting to see how different validators parse an example like this.

Markup example:

```html
<div itemscope itemtype="http://schema.org/Review">
  <div itemprop="dateCreated" content="2015-06-07 20:10:47"> : 20:10</div>
</div>
```

The Yandex validator ignores content on tags other than meta (because this construct is becoming common practice, we are now adding support for it in both the parser and the validator):

The Google validator reports product errors:

Validator.nu warns that the content attribute is not allowed on this tag and offers a list of the attributes that are:

Structured Data Linter, like the Yandex validator, ignores content and warns about an incorrect time format:

Which behavior is correct? We ask ourselves the same question. Until recently we believed that the requirements of the microdata specification should not be ignored. But because the examples in the Schema.org documentation often use this construct, we are going to check such cases more leniently in the validator and use the data from the content attribute in search.
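The difference between the strict and the lenient reading can be illustrated with a small sketch. This is not how any of the validators above is implemented; the class `ItempropCollector`, the function `extract`, and the `lenient` flag are hypothetical names, and the parser only handles flat `itemprop` elements like the one in the example.

```python
from html.parser import HTMLParser


class ItempropCollector(HTMLParser):
    """Collect (property, value) pairs from itemprop elements.

    lenient=False: `content` is honored only on <meta>, as in the microdata spec.
    lenient=True:  `content` is honored on any tag.
    Simplification: nested elements inside an itemprop element are not tracked.
    """

    def __init__(self, lenient):
        super().__init__()
        self.lenient = lenient
        self.props = []
        self._open_name = None  # property whose text content is being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = attrs.get("itemprop")
        if name is None:
            return
        if "content" in attrs and (tag == "meta" or self.lenient):
            self.props.append((name, attrs["content"] or ""))
        elif tag != "meta":
            # Fall back to the element's text content.
            self._open_name = name
            self._text = []

    def handle_data(self, data):
        if self._open_name is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if self._open_name is not None:
            self.props.append((self._open_name, "".join(self._text).strip()))
            self._open_name = None


def extract(html, lenient):
    parser = ItempropCollector(lenient)
    parser.feed(html)
    parser.close()
    return parser.props


SAMPLE = (
    '<div itemscope itemtype="http://schema.org/Review">'
    '<div itemprop="dateCreated" content="2015-06-07 20:10:47"> : 20:10</div>'
    "</div>"
)

print(extract(SAMPLE, lenient=False))
print(extract(SAMPLE, lenient=True))
```

In strict mode the value of `dateCreated` is the visible text `: 20:10`, which is not a valid date and would explain a time-format warning; in lenient mode it is the machine-readable `2015-06-07 20:10:47`.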

2. An article with Open Graph and Applinks markup.

Open Graph is a long-established markup that helps customize how links are displayed on social networks, and Applinks is an increasingly popular standard for cross-platform linking between applications. Let's see how familiar the validators are with them.

Yandex.Validator shows all the results that are found. At the same time, it warns about the undefined prefix al:

The Google validator shows only the Applinks markup, without Open Graph or anything else. It reports no errors and treats al as a default prefix:

Structured Data Linter does not expand RDFa, and warns about HTML errors and an incorrect data type for og:url:

Open Graph Object Debugger warns about vk:app_id. Apparently it does not recognize this property because of its foreign origin:

And also recognizes Applinks:

Validator.nu when checking this page does not produce any results.

Which behavior is correct? By the rules, every prefix that is not defined in the RDFa specification must be declared explicitly with the prefix attribute. But, as with the content attribute, practice does not always match theory, and many markup consumers, such as Vk.com, do not require an explicit prefix declaration. So we recently began to treat some of the most popular prefixes (vk, fb, twitter, and a few others) as default prefixes and stopped warning about them. Others (al, for example) are not yet on our list of default prefixes, and we expect webmasters to declare them explicitly. In any case, we recommend explicitly declaring every prefix you use on your page.
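A rough sketch of the prefix check described above follows. The `DEFAULT_PREFIXES` set is an illustrative assumption, not the validator's actual list, and `PrefixAudit` and `undefined_prefixes` are hypothetical names. The sketch also treats every `prefix` declaration as document-wide, whereas in RDFa a declaration applies only to the element and its descendants.

```python
from html.parser import HTMLParser

# Assumption: an illustrative list of prefixes accepted without declaration.
DEFAULT_PREFIXES = {"og", "fb", "vk", "twitter"}


class PrefixAudit(HTMLParser):
    """Record prefixes declared via `prefix` and prefixes used in `property`."""

    def __init__(self):
        super().__init__()
        self.declared = set()
        self.used = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # prefix="og: http://ogp.me/ns# al: http://applinks.org/ns#"
        for token in (attrs.get("prefix") or "").split():
            if token.endswith(":"):
                self.declared.add(token[:-1])
        # property="og:title" (may hold several space-separated names)
        for token in (attrs.get("property") or "").split():
            if ":" not in token:
                continue
            prefix, rest = token.split(":", 1)
            if rest.startswith("//"):
                continue  # a full IRI such as http://..., not a prefixed name
            self.used.add(prefix)


def undefined_prefixes(html):
    """Return used prefixes that are neither declared nor in the default list."""
    audit = PrefixAudit()
    audit.feed(html)
    audit.close()
    return sorted(audit.used - audit.declared - DEFAULT_PREFIXES)


PAGE = """
<html prefix="og: http://ogp.me/ns#">
<head>
  <meta property="og:title" content="Example" />
  <meta property="vk:app_id" content="1" />
  <meta property="al:ios:url" content="example://article" />
</head>
</html>
"""

print(undefined_prefixes(PAGE))
```

With this default list the only prefix reported for the sample page is `al`. Adding `al: http://applinks.org/ns#` to the `prefix` attribute makes the report empty.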

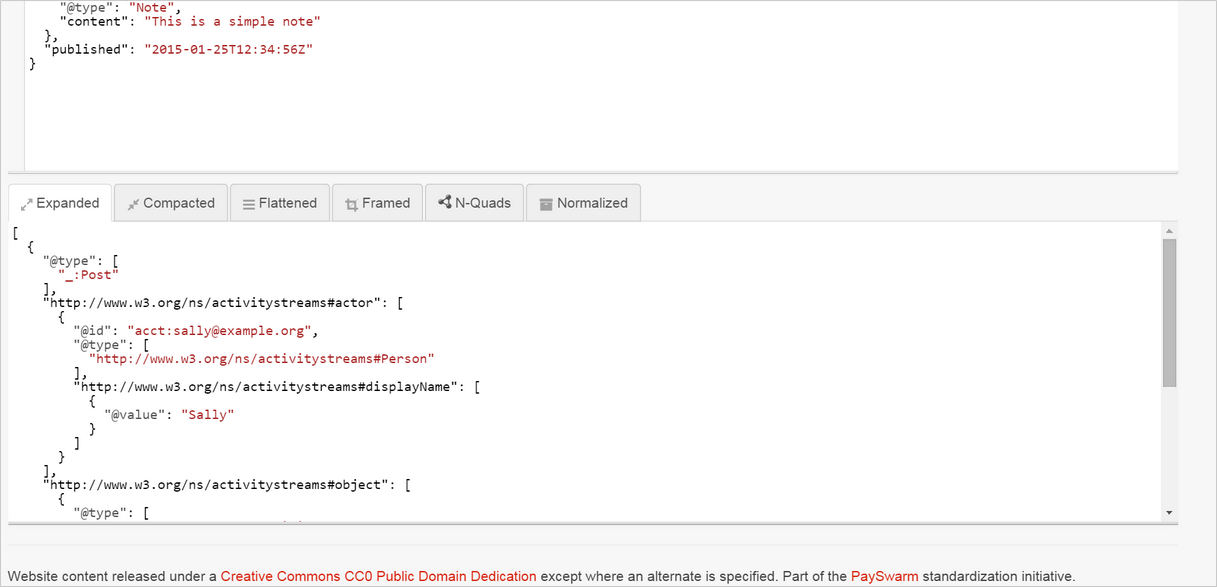

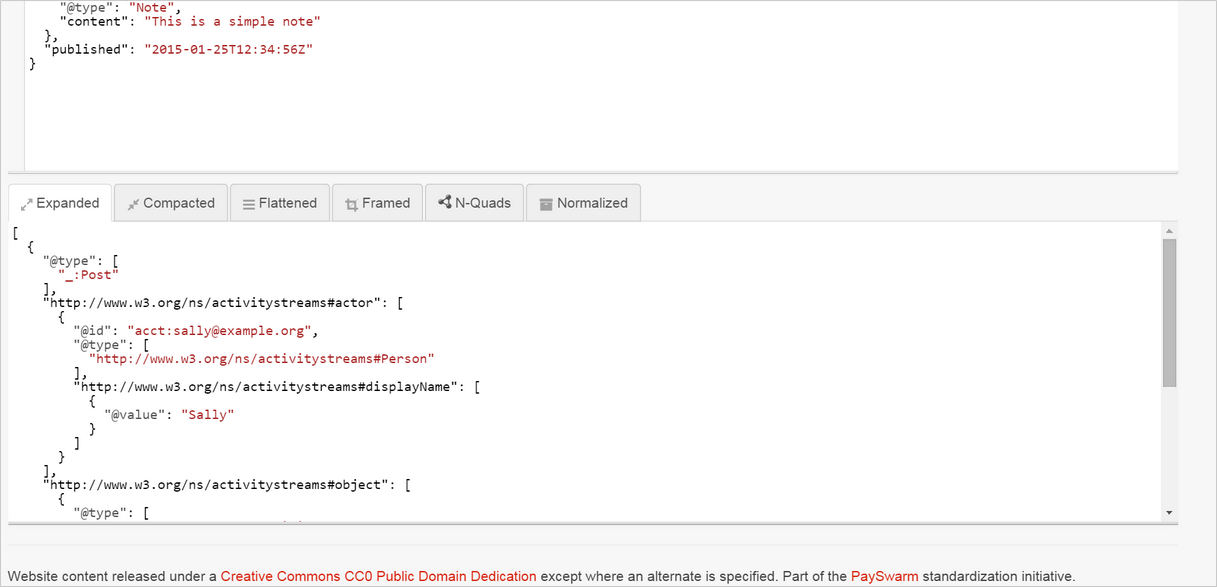

3. JSON-LD markup with a context other than Schema.org.

Markup example:

```json
{
  "@context": "http://asjsonld.mybluemix.net",
  "@type": "Post",
  "actor": {
    "@type": "Person",
    "@id": "acct:sally@example.org",
    "displayName": "Sally"
  },
  "object": {
    "@type": "Note",
    "content": "This is a simple note"
  },
  "published": "2015-01-25T12:34:56Z"
}
```

Yandex.Validator expands context:

The Google validator substitutes example.com for the context:

JSON-LD Playground parses the example the same way as Yandex.Validator:

The remaining validators do not understand this example. The best result in this test seems obvious.

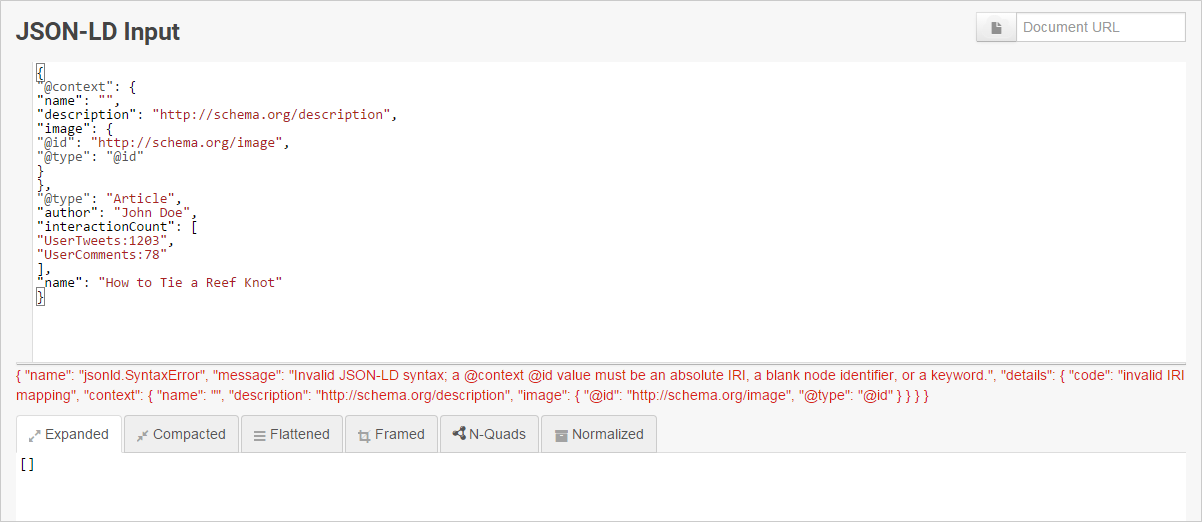

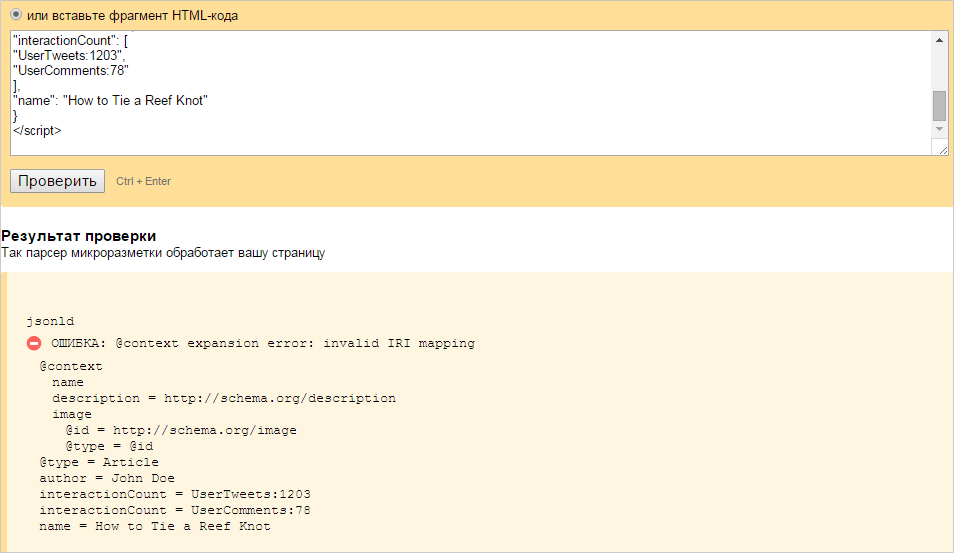

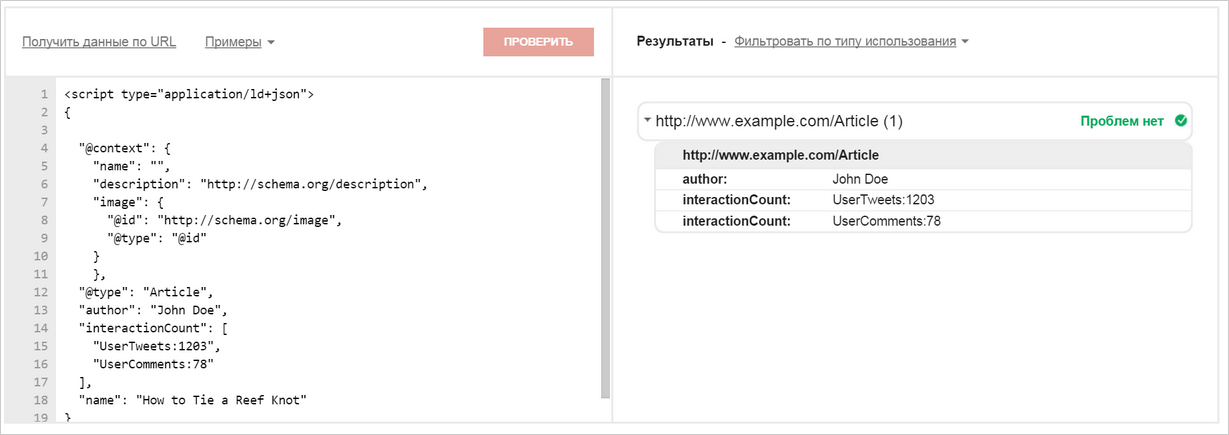

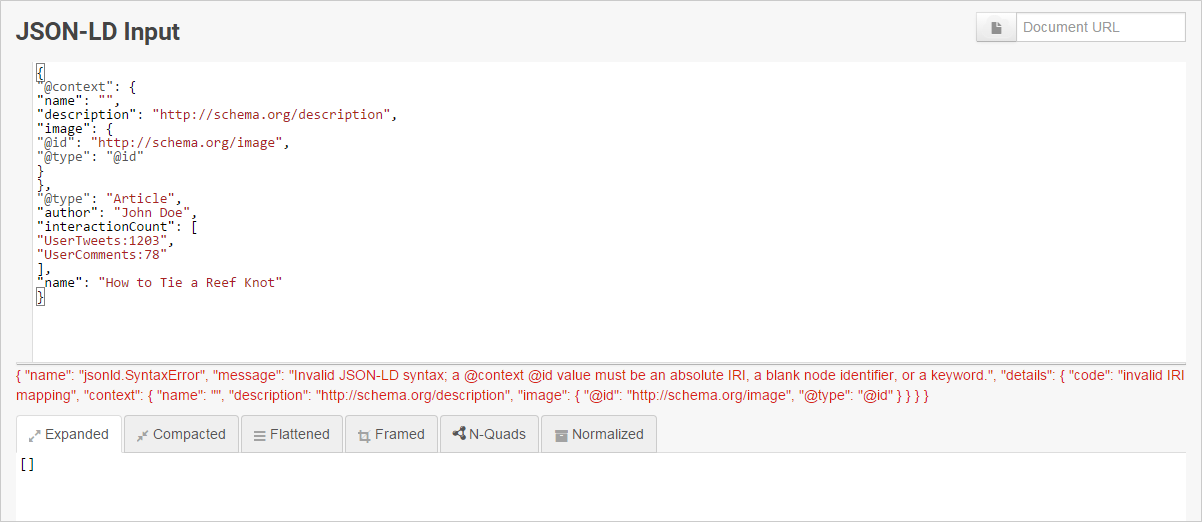

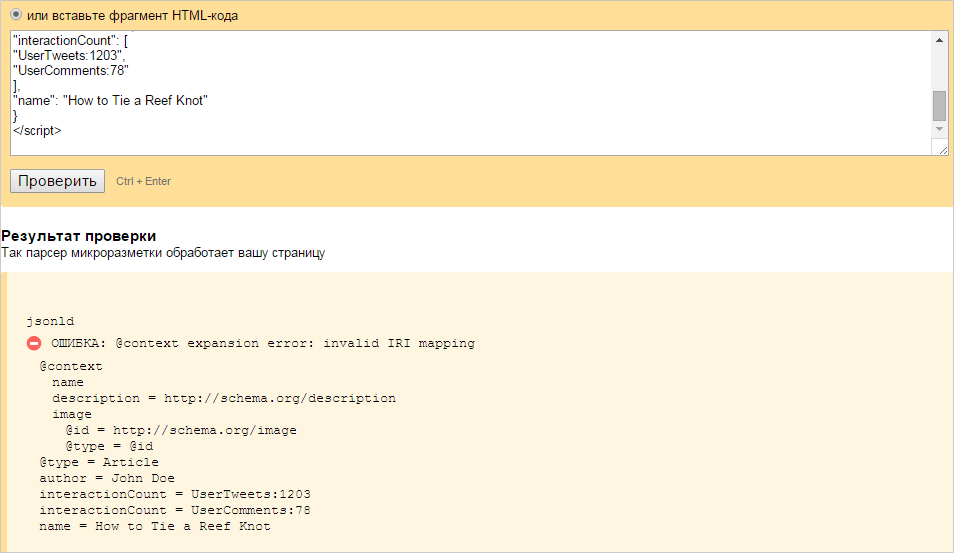

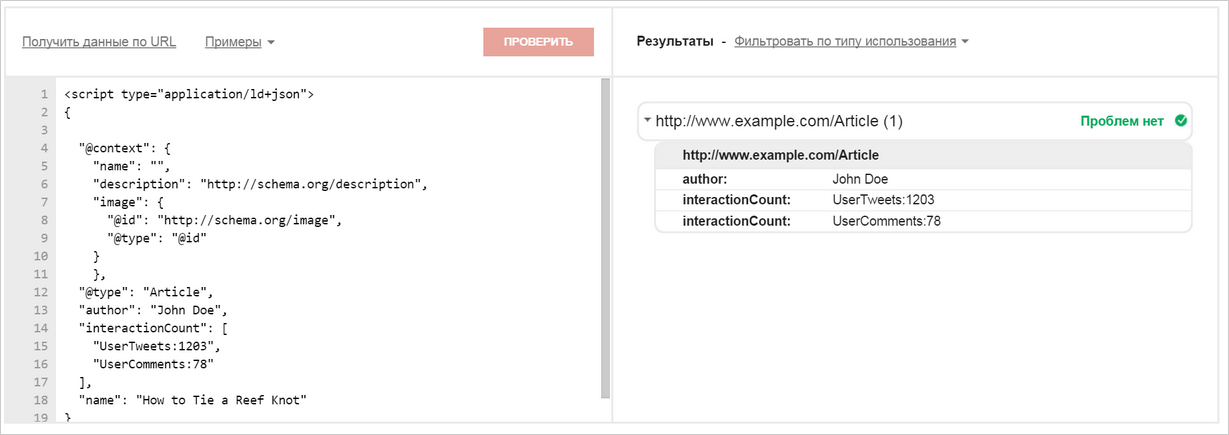

4. An example with JSON-LD and an empty context.

Markup example:

```html
<script type="application/ld+json">
{
  "@context": {
    "name": "",
    "description": "http://schema.org/description",
    "image": {
      "@id": "http://schema.org/image",
      "@type": "@id"
    }
  },
  "@type": "Article",
  "author": "John Doe",
  "interactionCount": [
    "UserTweets:1203",
    "UserComments:78"
  ],
  "name": "How to Tie a Reef Knot"
}
</script>
```

JSON-LD Playground warns about empty context:

The Yandex validator also warns that such a context cannot be expanded:

The Google validator replaces the context with www.example.com and sees no errors:

Which behavior is correct? JSON-LD Playground seems the most honest here. We looked at its output while writing this article and started showing a similar warning ourselves.
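The kind of check involved can be sketched in a few lines. This is only an illustration of detecting an empty term definition in an inline context, not a JSON-LD expansion algorithm and not the code of any validator mentioned here; `empty_context_terms` is a hypothetical helper.

```python
import json


def empty_context_terms(doc):
    """Return context terms mapped to an empty string, which cannot be expanded.

    Only inline (object) contexts are inspected; a context given as a URL
    would have to be fetched first, which this sketch does not do.
    """
    context = doc.get("@context")
    if not isinstance(context, dict):
        return []
    bad = []
    for term, definition in context.items():
        # A term is defined either by a string IRI or by an object with "@id".
        iri = definition.get("@id") if isinstance(definition, dict) else definition
        if iri == "":
            bad.append(term)
    return bad


MARKUP = json.loads("""
{
  "@context": {
    "name": "",
    "description": "http://schema.org/description",
    "image": {"@id": "http://schema.org/image", "@type": "@id"}
  },
  "@type": "Article",
  "author": "John Doe",
  "name": "How to Tie a Reef Knot"
}
""")

print(empty_context_terms(MARKUP))
```

For the example above the function reports the term `name`, the one a warning should point at.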

Comparing the validators turned out to be very interesting and useful, and the results surprised even us. After some of the examples we rushed off to improve our own validator :) On the whole, it is hard to say which service is best: each validator solves the same problem in its own way. So when choosing a validator, keep this in mind and focus on the goals for which you added the markup.

And if you need to automate validation, welcome to the ranks of API users. The validator API is already used by a variety of projects. First of all, these are large sites with many pages that use the API to check their markup automatically (most of it product markup). In addition, a service that monitors the state of a site from an optimization point of view uses the API to show whether markup is present and correct.

In general, with the validator API you can:

- automate microdata checking on a large number of pages;

- develop plug-ins for various CMS;

- extract structured data from sites for use in your own projects.

The API has the same perks as our regular validator:

- The validator checks for all errors defined in the specifications of the base standards: Microdata, RDFa, and JSON-LD. We started checking JSON-LD in May 2014; before that, none of the validators from search engines supported this standard.

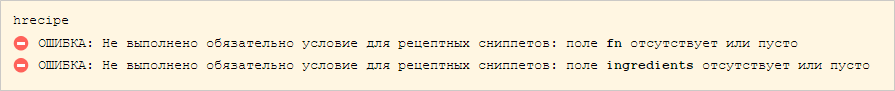

- The validator reports not only standard errors but also product errors. Most often webmasters add markup in order to take part in some affiliate program, so our validator reports when the markup does not match the documentation of such a program:

- The validator checks compliance with the specifications very strictly, but when a deviation becomes widespread we relax the strictness to accommodate users. For example, we recently decided to stop flagging the content attribute on tags other than meta, since it is now widely used in Schema.org. In Open Graph we also began to accept the most commonly used prefixes (fb, og, and vk) without an explicit declaration. We went through such examples above.

Speaking of specifications: while developing the API we ran into an interesting fact showing that the standards themselves do not always comply with them.

It concerns the most popular vocabulary used with RDFa syntax today: Open Graph. It became hugely popular for two main reasons: the obvious benefit of controlling how links look on social networks, and ease of implementation. The ease of implementation comes largely from the fact that Open Graph recommends placing the markup in the head block as a set of meta tags. But this imposes certain restrictions, one of which concerns nesting and arrays. In classic RDFa, nesting is determined by the hierarchy of HTML tags, and the order of properties is ignored. Meta tags cannot be nested inside one another, so Open Graph implements nesting in an unusual way. Nested properties are written with a colon after the parent: for example, the URL of an image (og:image) is set with og:image:url. The specification calls such properties structured. To define an array of structured properties, you simply list them in the right order. The example below describes three images: the first has a width of 300 and a height of 300, nothing is said about the dimensions of the second, and the third has a height of 1000.

```html
<meta property="og:image" content="http://example.com/rock.jpg" />
<meta property="og:image:width" content="300" />
<meta property="og:image:height" content="300" />
<meta property="og:image" content="http://example.com/rock2.jpg" />
<meta property="og:image" content="http://example.com/rock3.jpg" />
<meta property="og:image:height" content="1000" />
```

For API version 1.0, we reworked the RDFa parser to comply fully with the official specification (previously, some complex cases were not handled correctly). During testing we found that structured properties were being processed in a very strange way. The cause turned out to be that in RDFa the position of a property in the HTML code does not matter at all, while Open Graph relies on it. Our developer's first reaction was to campaign against the incorrect syntax in Open Graph and call its authors to order. But then, of course, we fixed everything.
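The order-dependent grouping that Open Graph expects can be sketched as follows. This is a minimal illustration limited to `og:image`, not the parser used in the validator; `OpenGraphImages` and `parse_images` are hypothetical names.

```python
from html.parser import HTMLParser


class OpenGraphImages(HTMLParser):
    """Group og:image:* properties with the og:image that precedes them."""

    def __init__(self):
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        prop = attrs.get("property") or ""
        value = attrs.get("content") or ""
        if prop == "og:image":
            # Each root property starts a new array element.
            self.images.append({"url": value})
        elif prop.startswith("og:image:") and self.images:
            # A structured property attaches to the most recent root property,
            # so document order carries meaning here, unlike in plain RDFa.
            self.images[-1][prop[len("og:image:"):]] = value


def parse_images(html):
    parser = OpenGraphImages()
    parser.feed(html)
    parser.close()
    return parser.images


HEAD = """
<meta property="og:image" content="http://example.com/rock.jpg" />
<meta property="og:image:width" content="300" />
<meta property="og:image:height" content="300" />
<meta property="og:image" content="http://example.com/rock2.jpg" />
<meta property="og:image" content="http://example.com/rock3.jpg" />
<meta property="og:image:height" content="1000" />
"""

for image in parse_images(HEAD):
    print(image)
```

A parser that discards document order would see three `og:image` values and three dimension values with no way to tell which image each dimension belongs to, which is the strange behavior described above.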

This example shows once again how complex and contradictory the world of structured markup is :) We hope our products will make life easier for those who work with it.

If you have questions about the validator API, ask them in the comments or use the feedback form. The magic key to the API can be obtained here.

Mark up your sites, check the markup, get beautiful snippets, and may everything go well :)

Source: https://habr.com/ru/post/265965/
