gRPC: a framework for remote procedure calls from Google

When it comes to remote procedure calls, things have long looked exactly like the famous “14 standards” comic: take your pick. There are ancient DCOM and CORBA, odd SOAP and .NET Remoting, modern REST and AMQP. (Yes, I know that some of these are formally not RPC; a separate thread was even started here recently to debate the terminology. Still, all of them are used as RPC, and if something looks like a duck and swims like a duck, well, you know.)

And of course, exactly as the comic's script goes, Google entered the market and announced that it had finally created yet another, final, and most correct RPC standard. Google's reasoning is understandable: in the 21st century, continuing to push petabytes of data through old and inefficient HTTP + REST, losing money on every byte, is simply foolish. At the same time, adopting someone else's standard and saying “we couldn't come up with anything better” is not their style at all.

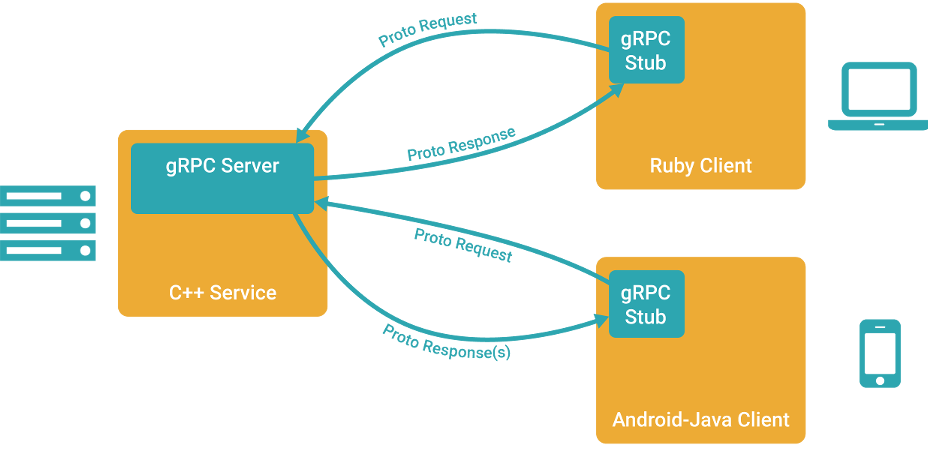

So meet gRPC, which stands for “gRPC Remote Procedure Calls”: a new framework for remote procedure calls from Google. In this article we will discuss why, unlike the previous 14 standards, it will actually take over the world (or at least part of it). We will build gRPC on Windows with Visual Studio (and don't tell me these instructions are unnecessary: the official documentation skips five important steps, without which nothing builds). Finally, we will write a simple service and client that exchange requests and responses.

Why do we need another standard?

First of all, let's look around. What do we see? We see REST + HTTP/1.1. Other options exist, of course, but this one cloud covers a good three quarters of the client-server communication sky. Looking a little closer, we see that in 95% of cases REST degenerates into CRUD.


As a result, we have:

- The inefficiency of HTTP/1.1: uncompressed headers, no true two-way communication, wasteful use of OS resources, excess traffic, unnecessary latency.

- The need to stretch our data model and events over REST + CRUD, which often fits about as well as a balloon pulled over a globe. This is what forces Yandex to write these, no doubt very good, articles, which would not be needed if people did not have to ask themselves: “Which incantation summons the elemental, PUT or POST? And which HTTP code should I return so that it means 'Move forward 3 squares and draw a new card'?”

This is where gRPC comes in. Out of the box we get:

- Protobuf as the tool for describing data types and serialization. Very good stuff, well proven in practice. In fact, those who needed performance were already using Protobuf and dealt with the transport separately. Now everything comes in one package.

- HTTP/2 as the transport. This is an incredibly powerful move! Header and data compression, flow control, server-initiated messages, and reuse of a single socket for many parallel requests: all of this is great.

- Static paths: no more “service/collection/resource/query?parameter=value”. Now there is only the service, and what goes inside it you describe in terms of your own model and its events.

- Methods are not tied to HTTP verbs, and return values are not tied to HTTP status codes. Write whatever you want.

- SSL/TLS, OAuth 2.0, authentication via Google services, plus you can plug in your own (for example, two-factor).

- Support for 10 languages: C, C++, Java, Go, Node.js, Python, Ruby, Objective-C, PHP, C#. And of course, nobody stops you from implementing your own version, even for Brainfuck.

- gRPC support in Google's public APIs. It already works for some services. Of course, the REST versions will remain too. But judge for yourself: if, say, in a mobile app, you could choose between a REST version that returns data in 1 second and, for the same development cost, a gRPC version that does it in 0.5 seconds, which would you choose? And which will your competitor choose?
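To make the "static paths" and "HTTP/2 as transport" points more concrete: in the gRPC-over-HTTP/2 protocol, a unary call is an HTTP/2 POST whose :path is /<package>.<Service>/<Method>. Each protobuf message in the body is prefixed with a 1-byte compressed flag and a 4-byte big-endian length. The outcome of the call comes back in a grpc-status trailer rather than in the HTTP status code. The C++ sketch below builds the first two pieces by hand with only the standard library, using the service names from the example later in this article. GrpcPath and FrameMessage are hypothetical helpers for illustration; in real code the gRPC runtime does all of this for you.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical helper: builds the HTTP/2 :path that gRPC uses for a call,
// "/<package>.<Service>/<Method>". There is no REST-style resource path.
std::string GrpcPath(const std::string& package, const std::string& service,
                     const std::string& method) {
  std::string path = "/";
  if (!package.empty()) path += package + ".";
  path += service + "/" + method;
  return path;
}

// Hypothetical helper: wraps an already-serialized protobuf message in the
// gRPC length-prefixed frame carried inside HTTP/2 DATA frames:
// 1 byte "compressed" flag + 4-byte big-endian payload length + payload.
std::vector<std::uint8_t> FrameMessage(const std::string& payload,
                                       bool compressed = false) {
  const auto len = static_cast<std::uint32_t>(payload.size());
  std::vector<std::uint8_t> frame;
  frame.reserve(5 + payload.size());
  frame.push_back(static_cast<std::uint8_t>(compressed ? 1 : 0));
  frame.push_back(static_cast<std::uint8_t>(len >> 24));
  frame.push_back(static_cast<std::uint8_t>(len >> 16));
  frame.push_back(static_cast<std::uint8_t>(len >> 8));
  frame.push_back(static_cast<std::uint8_t>(len));
  frame.insert(frame.end(), payload.begin(), payload.end());
  return frame;
}
```

For example, GrpcPath("HabrahabrApi", "HabrApi", "GetKarma") yields "/HabrahabrApi.HabrApi/GetKarma". Framing a 3-byte payload produces 8 bytes: 00 00 00 00 03 followed by the payload itself.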

Building gRPC

We need:

- Git

- Visual Studio 2013 + NuGet

- CMake

Getting the code

- Clone the gRPC repository from GitHub.

- Run the command

git submodule update --init

This downloads the dependencies (protobuf, openssl, etc.).

Building Protobuf

- Go to the grpc\third_party\protobuf\cmake folder, create a build folder there, and change into it.

- Run the command

cmake -G "Visual Studio 12 2013" -DBUILD_TESTING=OFF ..

- Open the protobuf.sln file generated in the previous step in Visual Studio and build it (F7).

At this stage we get valuable artifacts: the protoc.exe utility, which generates the data serialization/deserialization code, and the lib files that will be needed when linking gRPC.

- Copy the grpc\third_party\protobuf\cmake\build\Debug folder to grpc\third_party\protobuf\cmake.

Once again: the Debug folder must be copied one level up. This is an inconsistency between the gRPC and Protobuf documentation. Protobuf says to build everything in the build folder, but the gRPC project files know nothing about that folder and look for the Protobuf libraries directly in grpc\third_party\protobuf\cmake\Debug.

Building gRPC itself

- Open grpc\vsprojects\grpc_protoc_plugins.sln and build it.

If you built Protobuf correctly in the previous step, everything should go smoothly. You now have plugins for protoc.exe that let it generate not only the serialization/deserialization code but also the gRPC layer on top of it (the remote procedure calls themselves). Put the plugins and protoc.exe in the same folder, for example grpc\vsprojects\Debug.

- Open grpc\vsprojects\grpc.sln and build it.

During the build, NuGet should start and download the required dependencies (openssl, zlib). If you don't have NuGet, or it fails to download the dependencies for some reason, you will run into problems.

At the end of the build, we will have all the necessary libraries that we can use in our project for communication via gRPC.

Our project

Let's use gRPC to write a small API for Habrahabr.

We will have the following methods:

- GetKarma takes a string with the username and returns a floating-point number with that user's karma.

- PostArticle takes a request to create a new article with all its metadata and returns the publication result: a structure with a link to the article, the publication time, and an error message if publication failed.

We need to describe all of this in gRPC terms, that is, in a .proto file. It will look something like this (the available types are described in the protobuf documentation):

```protobuf
syntax = "proto3";

package HabrahabrApi;

message KarmaRequest {
  string username = 1;
}

message KarmaResponse {
  string username = 1;
  float karma = 2;
}

message PostArticleRequest {
  string title = 1;
  string body = 2;
  repeated string tag = 3;
  repeated string hub = 4;
}

message PostArticleResponse {
  bool posted = 1;
  string url = 2;
  string time = 3;
  string error_code = 4;
}

service HabrApi {
  rpc GetKarma(KarmaRequest) returns (KarmaResponse) {}
  rpc PostArticle(PostArticleRequest) returns (PostArticleResponse) {}
}
```

Save this as habr.proto, go to the grpc\vsprojects\Debug folder, and run two commands there (note, by the way, that the official documentation gets the arguments wrong here):

protoc --grpc_out=. --plugin=protoc-gen-grpc=grpc_cpp_plugin.exe habr.proto

protoc --cpp_out=. habr.proto

As output we get 4 files:

- habr.pb.h

- habr.pb.cc

- habr.grpc.pb.h

- habr.grpc.pb.cc

As you might guess, these are the skeletons of our future client and service, which will be able to exchange messages using the protocol described above.
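The habr.pb.* files contain the Protobuf serialization code. To get a feel for what it produces on the wire, here is a hand-written sketch that encodes KarmaRequest and KarmaResponse following the Protobuf wire format. Each field is written as a key, (field number << 3) | wire type, encoded as a varint, followed by the value. Strings use wire type 2 (a varint length, then the bytes). A float uses wire type 5 (4 bytes, little-endian). Proto3 fields at their default value are omitted. All function names here are hypothetical; in a real program you would simply call SerializeToString() on the generated message.

```cpp
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Base-128 varint: 7 bits per byte, high bit set on every byte but the last.
void AppendVarint(Bytes& out, std::uint64_t value) {
  while (value >= 0x80) {
    out.push_back(static_cast<std::uint8_t>(value | 0x80));
    value >>= 7;
  }
  out.push_back(static_cast<std::uint8_t>(value));
}

// Field key = (field_number << 3) | wire_type, itself written as a varint.
void AppendKey(Bytes& out, std::uint32_t field, std::uint32_t wire_type) {
  AppendVarint(out, (static_cast<std::uint64_t>(field) << 3) | wire_type);
}

// Wire type 2 (length-delimited). Proto3 omits empty strings.
void AppendString(Bytes& out, std::uint32_t field, const std::string& s) {
  if (s.empty()) return;
  AppendKey(out, field, 2);
  AppendVarint(out, s.size());
  out.insert(out.end(), s.begin(), s.end());
}

// Wire type 5 (fixed 32-bit, little-endian). Proto3 omits +0.0f (all bits
// zero). Assumes IEEE-754 floats.
void AppendFloat(Bytes& out, std::uint32_t field, float f) {
  std::uint32_t bits;
  std::memcpy(&bits, &f, sizeof bits);
  if (bits == 0) return;
  AppendKey(out, field, 5);
  for (int i = 0; i < 4; ++i)
    out.push_back(static_cast<std::uint8_t>(bits >> (8 * i)));
}

// Hypothetical hand-encoders mirroring the messages in habr.proto.
Bytes EncodeKarmaRequest(const std::string& username) {
  Bytes out;
  AppendString(out, 1, username);  // string username = 1;
  return out;
}

Bytes EncodeKarmaResponse(const std::string& username, float karma) {
  Bytes out;
  AppendString(out, 1, username);  // string username = 1;
  AppendFloat(out, 2, karma);      // float karma = 2;
  return out;
}
```

With these helpers, EncodeKarmaRequest("tangro") gives 0A 06 74 61 6E 67 72 6F: key 0x0A (field 1, wire type 2), length 6, then the string bytes. This is eight bytes for the whole request, which illustrates why Protobuf is so much more compact than a typical JSON body.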

Let's create a project already!

- Create a new solution in Visual Studio, let's call it HabrAPI.

- Add to it two console applications - HabrServer and HabrClient.

- Add the .h and .cc files generated in the previous step to both projects. The server and the client each need all 4 files: the client uses the HabrApi::Stub from habr.grpc.pb.h in addition to the message classes from habr.pb.h.

- Add grpc\third_party\protobuf\src and grpc\include to Additional Include Directories in the project settings.

- Add grpc\third_party\protobuf\cmake\Debug to Additional Library Directories in the project settings.

- Add libprotobuf.lib to Additional Dependencies in the project settings.

- Set the same runtime library that Protobuf was built with (the Runtime Library property on the Code Generation tab). At this point you may find that Protobuf was not built in the configuration you need, and you will have to go back and rebuild it. I chose /MTd in both places.

- Add the zlib and openssl dependencies via NuGet.

Now everything builds. True, nothing works yet.
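Before writing the real client and server, it may help to see the shape of what the generated code provides. The following is a toy, standard-library-only model, not the gRPC runtime. ToyHabrApiService stands in for the generated HabrApi::Service, which has one virtual method per rpc that we override. ToyServer stands in for the server runtime, which dispatches each incoming call by its static method path. All Toy* names are hypothetical, and only GetKarma is modeled.

```cpp
#include <functional>
#include <map>
#include <string>

// Toy model only, NOT the gRPC runtime: plain structs stand in for the
// generated KarmaRequest / KarmaResponse messages.
struct ToyKarmaRequest { std::string username; };
struct ToyKarmaResponse { std::string username; float karma = 0; };

// Stand-in for the generated HabrApi::Service: one virtual method per rpc.
class ToyHabrApiService {
 public:
  virtual ~ToyHabrApiService() = default;
  virtual bool GetKarma(const ToyKarmaRequest& req, ToyKarmaResponse* reply) = 0;
};

// Stand-in for the server runtime: routes each call by its static path.
class ToyServer {
 public:
  void RegisterService(ToyHabrApiService* service) {
    routes_["/HabrahabrApi.HabrApi/GetKarma"] =
        [service](const ToyKarmaRequest& req, ToyKarmaResponse* reply) {
          return service->GetKarma(req, reply);
        };
  }

  bool Call(const std::string& path, const ToyKarmaRequest& req,
            ToyKarmaResponse* reply) {
    auto it = routes_.find(path);
    if (it == routes_.end()) return false;  // unknown method path
    return it->second(req, reply);
  }

 private:
  std::map<std::string,
           std::function<bool(const ToyKarmaRequest&, ToyKarmaResponse*)>>
      routes_;
};

// Our implementation, analogous to a class derived from HabrApi::Service.
class ToyHabrahabrServiceImpl final : public ToyHabrApiService {
 public:
  bool GetKarma(const ToyKarmaRequest& req, ToyKarmaResponse* reply) override {
    reply->username = req.username;
    reply->karma = 42;
    return true;
  }
};
```

A call to ToyServer::Call("/HabrahabrApi.HabrApi/GetKarma", ...) lands in our override, while an unknown path is rejected; that is roughly the point where real gRPC answers with the UNIMPLEMENTED status.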

Client

Everything is simple here. First, we create a client class that wraps the stub generated in habr.grpc.pb.h. Second, we write GetKarma and PostArticle methods that fill in the request messages and call the stub. Third, we call them and, for example, print the results to the console. The result looks something like this:

```cpp
#include <iostream>
#include <memory>
#include <string>

// Current gRPC releases use <grpcpp/...>; 2015-era releases used <grpc++/...>.
#include <grpcpp/grpcpp.h>

#include "habr.grpc.pb.h"  // generated stub; also includes habr.pb.h

using grpc::Channel;
using grpc::ClientContext;
using grpc::Status;
using HabrahabrApi::HabrApi;
using HabrahabrApi::KarmaRequest;
using HabrahabrApi::KarmaResponse;
using HabrahabrApi::PostArticleRequest;
using HabrahabrApi::PostArticleResponse;

// Thin wrapper around the generated HabrApi::Stub.
class HabrahabrClient {
 public:
  explicit HabrahabrClient(std::shared_ptr<Channel> channel)
      : stub_(HabrApi::NewStub(channel)) {}

  // Returns the user's karma, or 0 if the call failed.
  float GetKarma(const std::string& username) {
    KarmaRequest request;
    request.set_username(username);
    KarmaResponse reply;
    ClientContext context;  // a fresh context for every call
    Status status = stub_->GetKarma(&context, request, &reply);
    if (!status.ok()) {
      std::cerr << "GetKarma failed: " << status.error_message() << std::endl;
      return 0;
    }
    return reply.karma();
  }

  // Publishes a test article; true if the RPC succeeded and the server posted it.
  bool PostArticle() {
    PostArticleRequest request;
    request.set_title("Article about gRPC");
    request.set_body("bla-bla-bla");
    request.add_tag("UFO");        // repeated fields are filled with add_*()
    request.add_hub("Infopulse");
    PostArticleResponse reply;
    ClientContext context;
    Status status = stub_->PostArticle(&context, request, &reply);
    return status.ok() && reply.posted();
  }

 private:
  std::unique_ptr<HabrApi::Stub> stub_;
};

int main() {
  // No TLS: fine for a local test only. Early releases called this
  // grpc::InsecureCredentials() and also took a ChannelArguments argument.
  HabrahabrClient client(grpc::CreateChannel(
      "localhost:50051", grpc::InsecureChannelCredentials()));
  float karma = client.GetKarma("tangro");
  std::cout << "Karma received: " << karma << std::endl;
  std::cout << "Article posted: " << std::boolalpha << client.PostArticle()
            << std::endl;
  return 0;
}
```

Server

The server is similar: we inherit from the service class generated in habr.grpc.pb.h and implement its methods. Then we start a listener on a port and wait for clients. Something like this:

```cpp
#include <iostream>
#include <memory>
#include <string>

// Current gRPC releases use <grpcpp/...>; 2015-era releases used <grpc++/...>.
#include <grpcpp/grpcpp.h>

#include "habr.grpc.pb.h"

using grpc::Server;
using grpc::ServerBuilder;
using grpc::ServerContext;
using grpc::Status;
using HabrahabrApi::HabrApi;
using HabrahabrApi::KarmaRequest;
using HabrahabrApi::KarmaResponse;
using HabrahabrApi::PostArticleRequest;
using HabrahabrApi::PostArticleResponse;

// Implements the rpc methods declared in the generated HabrApi::Service.
class HabrahabrServiceImpl final : public HabrApi::Service {
  Status GetKarma(ServerContext* context, const KarmaRequest* request,
                  KarmaResponse* reply) override {
    reply->set_username(request->username());
    reply->set_karma(42);  // stub value
    return Status::OK;
  }

  Status PostArticle(ServerContext* context, const PostArticleRequest* request,
                     PostArticleResponse* reply) override {
    reply->set_posted(true);
    reply->set_url("some_url");  // stub value
    return Status::OK;
  }
};

void RunServer() {
  std::string server_address("0.0.0.0:50051");
  HabrahabrServiceImpl service;

  ServerBuilder builder;
  // No TLS: fine for a local test only.
  builder.AddListeningPort(server_address, grpc::InsecureServerCredentials());
  builder.RegisterService(&service);
  std::unique_ptr<Server> server(builder.BuildAndStart());
  std::cout << "Server listening on " << server_address << std::endl;
  server->Wait();  // blocks until the server is shut down
}

int main() {
  RunServer();
  return 0;
}
```

Good luck with gRPC.

Source: https://habr.com/ru/post/265805/
