Creating artificial intelligence for games - from design to optimization

Today is the first of September. So, many readers of Habra are beginning to undergo a new level of one ancient well-known game - the one in which they need to pump their intellect, and, as a result, get a magic artifact - a certificate or diploma confirming your education. To this day, we have done the abstract translation of an article about the implementation of artificial intelligence (AI) for games - from its design to optimize performance. We hope that it will be useful for both beginners and advanced game developers.

Creating artificial intelligence for games

In traditional AI research, the goal is to create true intelligence, albeit with artificial means. In projects such as the Kismet of the Massachusetts Institute of Technology (MIT), an attempt is made to create an AI capable of learning and social interaction, the manifestation of emotions. At the time of this writing, MIT is working on creating an AI that has the level of abilities of a small child, and the results of this work are very promising.

In terms of games, genuine AI goes far beyond the requirements of an entertainment software project. In games such power is not needed. Game AI should not be endowed with feelings and self-awareness (to be honest, it is very good that this is so!), It does not need to learn anything beyond the bounds of the gameplay. The real goal of AI in games is to simulate intelligent behavior and to provide the player with a compelling, plausible task.

Making decisions

The basic principle underlying the work of AI is decision making. In order to make decisions, the system must influence objects using AI. At the same time, such an impact can be organized in the form of “AI broadcasting” or “object hits”.

')

In systems with "broadcasting AI", the AI system is usually isolated as a separate element of the game architecture. Such a strategy often takes the form of a separate stream or several streams in which the AI calculates the best solution for the given game parameters. When an AI makes a decision, it is transmitted to all participating objects. This approach works best in real-time strategies, where the AI analyzes the overall course of events throughout the game.

Systems with “object references” are better suited for games with simple objects. In such games, objects access the AI system every time an object “thinks” or updates itself. This approach is great for systems with a large number of objects that do not need to “think” too often, for example in shooters. Such a system can also take advantage of a multi-threaded architecture, but it requires more complex planning (see the Orion Granatyr Multi-threaded AI article for details).

Basic perception

In order for artificial intelligence to make intelligent decisions, it needs to somehow perceive the environment in which it is located. In simple systems, this perception can be limited to simply checking the position of the player’s object. In more complex systems, it is required to determine the main characteristics and properties of the game world, for example, possible routes for movement, the presence of natural shelters on the ground, areas of conflict.

In this case, developers need to invent a way to identify and identify the basic properties of the game world, important for the AI system. For example, shelters on the ground can be predefined by level designers or pre-calculated when loading or compiling a level map. Some items need to be calculated on the fly, such as conflict maps and immediate threats.

Rule based systems

The simplest form of artificial intelligence is a rule-based system. Such a system is the furthest from this artificial intelligence. A set of predefined algorithms determines the behavior of game objects. Given the variety of actions, the final result may be an implicit behavioral system, although such a system will in fact not be “intellectual” at all.

A classic gaming application that uses such a system is Pac-Man. Player haunted by four ghosts. Each ghost acts in obedience to a simple set of rules. One ghost always turns to the left, another always turns to the right, the third turns in an arbitrary direction, and the fourth always turns in the direction of the player. If ghosts appeared on the screen one at a time, their behavior would be very easy to identify and the player could easily escape from them. But since a group of four ghosts appears at once, their movements seem complicated and coordinated in tracking down the player. In fact, only the last of the four ghosts takes into account the location of the player.

A pictorial representation of a set of rules that govern ghosts in Pac-Man, where the arrows represent the “decisions” being made.

From this example, it follows that the rules do not have to be rigidly defined. They can be based on the perceived state (as in the last ghost) or on the editable parameters of objects. Such variables as the level of aggression, the level of courage, range of vision and speed of thinking, allow us to obtain a more diverse behavior of objects even when using rules-based systems.

In more complex and rational systems, sequences of conditional rules are used as the basis. In tactical games, the rules control the choice of tactics used. In strategic games, the rules govern the sequence of objects under construction and the reaction to conflicts. Rule based systems are the foundation of AI.

State machines as AI

A finite state machine (a machine with a finite number of states) is a way of modeling and realizing an object that has different states during its life. Each "state" can represent the physical conditions in which the object is located, or, for example, a set of emotions expressed by the object. Here, the emotional states have nothing to do with the emotions of AI, they relate to predetermined behavioral models that fit into the context of the game.

The state map in a typical finite automaton, the arrows represent possible state changes

There are at least two simple ways to implement a finite state machine with a system of objects. The first way: each state is a variable that can be checked (often this is done using large switching instructions). The second way: use function pointers (in C language) or virtual functions (C ++ and other object-oriented programming languages).

Adaptive AI

If the game requires a greater variety, if the player must have a stronger and more dynamic opponent, then the AI must have the ability to develop, adapt and adapt.

Adaptive AI is often used in combat and strategic games with complex mechanics and a huge variety of possibilities in the gameplay.

Prediction

The ability to accurately predict the next move of the enemy is extremely important for an adaptive system. To select the next action, you can use various methods, such as the recognition of patterns of past moves or random guesses.

One of the easiest ways to adapt is to track decisions made earlier, and assess their success. The AI system records the choice made by the player in the past. All decisions taken in the past must be somehow evaluated (for example, in combat games, you can use a received or lost advantage, lost health or a time advantage as a measure of success). You can collect additional information about the situation to form a context for decisions, such as relative health, past actions, and level position (people play differently when they have nowhere to retreat).

You can evaluate the history to determine the success of previous actions and decide whether to change tactics. Before creating a list of previous actions, an object can use standard tactics or act arbitrarily. This system can be linked to rule-based systems and various states.

In a tactical game, the history of past battles will help you choose the best tactics to use against a player’s team, for example, the AI can play defensively, choose offensive tactics, attack with all forces despite losses, or take a balanced approach. In a strategy game, it is possible for each player to select the optimal set of different combat units in the army. In games where the AI controls the characters supporting the player, adaptive AI can better adapt to the player’s natural style by learning his actions.

Perception and the search for ways

So far, the talk was about the simplest solutions made by intelligent agents; so in research in the field of artificial intelligence are called objects that use AI. Next, I give our hero (or monster, or any other type of game object) context for making decisions. Intellectual agents should identify areas of interest in the game world, and then think about how to get there.

Here we are getting close enough to this artificial intelligence. All intelligent agents require a basic ability to perceive their environment and any means of navigation and movement in the world around them (real or otherwise). Our game objects require the same thing, although the approach is very different. In addition, in relation to the game world, you can cheat, and you have to do it to make everything work quickly and smoothly.

How does AI perceive the world

Vision

If your agent is going to make well-considered decisions, he needs to know what is going on around him. In AI systems used in robotics, a considerable amount of research is devoted to computer vision: robots acquire the ability to perceive the world around them with the help of three-dimensional three-dimensional vision in the same way as humans. But for our purposes this level of perfection is, of course, superfluous.

Virtual worlds in which most games take place have a huge advantage over the real world in terms of AI and its perception. Unlike the real world, we know the amount of literally everything that is in the virtual world: somewhere among the game’s resources there is a list that lists everything that exists in the game. You can search this list by specifying the desired request and instantly receive information that your agent can use to make more informed decisions. In this case, you can either stop at the very first object that will be of interest for your agent, or get a list of all objects within a given range so that the agent can make the best decision regarding the world around.

This approach works well for simple games, but when the style of the game becomes more complicated, your agents should be more selective about what they "see." If you do not want agents to behave as if they have eyes on the back of the head, you can sample the list of potential objects that are within the radius of view of the agent. This can be done quite quickly with the help of simple mathematics.

- Calculate the vector between the agent and the desired object by subtracting the position of the target from the position of the agent.

- Calculate the angle between this vector and the direction of the agent's gaze.

- If the absolute value of the angle exceeds the specified angle of view of the agent, then your agent does not see the object.

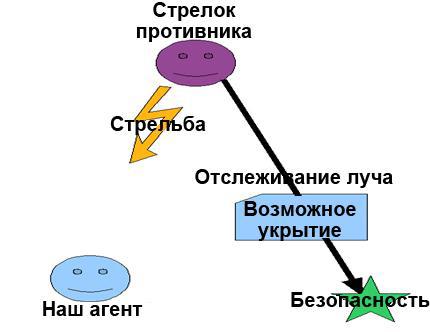

In more complex games, you need to consider that the player or other objects may be behind some kind of shelter. For such games, it may be necessary to build traveling rays (the so-called ray casting method) to find out if a possible target is not blocked. The construction of traveling rays is a mathematical way to check whether the beam intersects with any objects, starting from one point and moving in a given direction. If you want to know exactly how this is done with a specific example, read the article One head is good and two is better .

The method described above lets you know if something is blocking the center of the target, but this may not be enough to hide your agent. After all, it may be that the center of the agent is hidden, but his head is most convenient (for the enemy) sticks out above the shelter. The use of several traveling beams directed at specific points of the target will help not only to determine whether it is possible to hit the target, but also where exactly the target can be hit.

Hearing

It would seem that there is not much difference between hearing and vision. If you can see an object, then surely you can hear it. It is true that if your agent spotted an object, the agent can actively detect all the actions of the object until the object is out of sight. However, if you add an extra level of hearing to agents, then your vision will work more efficiently. Tracking object noise is the most important level of perception for any sneaky game.

As in the case of vision, you first need to get a list of nearby objects. To do this, you can again just check the distance, but the selection of the necessary objects from this list is already quite different.

A specific sound level is associated with every action an object can perform. You can preset sound levels (to optimize the game balance) or calculate them based on the actual energy of sound effects associated with certain actions (this allows you to achieve a high level of realism, but hardly necessary). If the sound produced is louder than a predetermined threshold, then your agent will notice the object making the sound.

If you need to take into account the obstacles, you can again reduce the list of objects and with the help of traveling rays to determine if there are any obstacles in the path of sound. But only a few materials are completely soundproof, so you should be more creative in reducing the list.

The basic functionality needed to give your agents a sense of hearing and hearing can also be used to simulate other senses. For example, the smell. (The ability to track players by intelligent agents by smell exists in modern games such as Call of Duty 4 *). Adding olfactory to the game does not cause any particular difficulties: it is enough to assign each game object a distinctive odor number and its intensity. The intensity of the smell determines two factors: the radius of the smell and the power of the smell of the wake, which remains behind. Players' active objects often track their previous positions for a number of reasons. One of these reasons may be the use of objects with a smell. Over time, the power of the smell of the trace decreases, the trace cools. When the agent's odor data changes, it should check for the presence of the smell just like the presence of sound (taking into account the radius and the obstacles). The success of the sense of smell is calculated based on the intensity of the smell and the smell force of the agent: these values are compared with the object and its trace.

Touching in games is supported initially, since in any game there is already a system for automatic handling of collisions of objects. Enough to ensure that intelligent agents respond to collision events and damage.

The ability to sense the world around us is wonderful, but what exactly should agents feel? It is necessary to indicate and identify accessible things in the agent settings. When you recognize what you see, the agents will be able to respond to it based on the rules that govern the object.

Temporary objects

They are sometimes called particles, sprites or special effects. Temporary objects are visual effects in the game world. Temporary objects are similar to ordinary objects in that one common class structure defines them all. The difference is that temporary objects do not think, do not react and do not interact with other objects of the game world, or with each other. Their only goal is to look beautiful, to improve the detail of the world for some time, and then to disappear. Temporary objects are used for such effects as bullet marks, smoke, sparks, blood splashes and even footprints on the ground.

Due to the nature of temporary objects, they do not require a significant amount of computation and collision detection (with the exception of very simple collisions with the outside world). The problem is that some temporary objects provide the player with visual clues about recent events. For example, bullet holes and marks of burning may indicate that there has been a battle here recently; footprints in the snow can lead to potential targets. Why shouldn't intelligent agents use such hints?

This problem can be solved in two ways. You can either expand the system of temporary objects by adding support for traveling rays (but the whole sense of the system of temporary objects will be distorted), or cheat: place an empty object close to the temporary objects. This empty object will not be able to think, no graphic elements will be associated with it, but your agents will be able to detect it, and the temporary object will have associated information that your agent can receive. So, when you draw a temporary pool of blood on the floor, you can also place there an invisible object, according to which your agents will find out that something has happened here. As for prints: this question is already solved with the help of a trace.

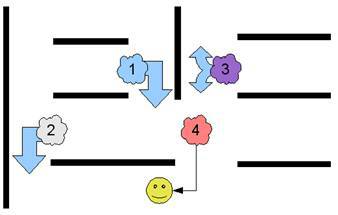

Shelter

In many shooting games, it would be great if agents knew how to hide behind cover, if they were nearby, and not just stand under enemy fire in an open area. But this problem is somewhat more complicated than all the problems listed earlier. How can agents be able to determine if there are any suitable shelters nearby?

The artists from Penny Arcade * satirically describe the problem of enemy AI and shelters

This problem actually consists of two tasks: first, you need to correctly recognize the shelter on the basis of the geometry of the surrounding world; secondly, you need to correctly recognize the shelter on the basis of objects of the surrounding world (as shown in the above comic). To determine whether a shelter is able to protect against attacks, you can simply once compare the size of the agent's border with the size of the possible shelter. Then you should check if your object will fit behind this cover. To do this, you need to draw rays from the differences in the positions of your shooter and cover. Using this beam, it is possible to determine whether the place behind the shelter is free (as viewed from the shooter’s side), and then mark this place as the next target of the agent.

In this scheme, our agent has determined that in a place marked with a green asterisk, it will be possible to hide in safety.

AI Navigation

So far, we have been talking about how the AI makes decisions and how the AI learns what is happening in the outside world (to make more informed decisions). Now let's see how the AI implements the decisions made. Having made a decision, an intelligent agent needs to understand how to move from point A to point B. To do this, you can use different approaches, choosing the best one depending on the nature of the game and the desired level of performance.

The algorithm, conventionally called Face and Turn , is one of the simplest ways to form an object’s route. Here is how it works.

- Move towards the goal.

- If you run into a wall, turn in the direction you are closest to the target. If none of the options available for selection has obvious advantages, the choice is made arbitrarily.

This approach works well for simple games. Perhaps, I can not even count how many monster games use this algorithm to track down the player. But using the “Encounter and Rotate” algorithm, objects that hunt a player are trapped behind concave walls or corners. Therefore, such an algorithm is ideal except for games with zombies or for games without walls and other obstacles.

If the agents in the game should act not so stupidly, you can extend the simple collision event and provide the agents with a memory. If agents are able to memorize where they have already been, they will be able to make more intelligent decisions as to where to turn further. If turns in all possible directions did not lead to luck, agents will be able to go back and choose a different route. In this way, agents will systematically search for a path to a goal. Here is how it works.

- Move towards the goal.

- If the path forks, choose one of the possible directions.

- If the path leads to a dead end, go back to the last branch and select another direction.

- If all possible paths are passed to no avail, discard the further search.

The advantage of this method is the low load on computing resources. This means that you can support a large number of moving agents without slowing down the game. This method can also take advantage of a multi-threaded architecture. The disadvantage is the waste of huge amounts of memory in vain, since each agent can track the whole map of possible paths.

However, poor memory usage can be avoided if agents keep trackable paths in shared memory. In this case, problems may arise in connection with a thread conflict, so we recommend storing the paths of objects in a separate module, to which all agents will send requests (as they move) and updated data (when new paths are found). The module of the map of paths can analyze the obtained information in order to avoid conflicts.

Search for ways

Maps on which paths are drawn using the “Collide and Rotate” algorithm allow you to adjust to changing maps. But in strategic games, players cannot wait for their troops to figure out how to route them. In addition, path maps can be very large, and a lot of resources will be spent on choosing the right path on such maps. In such situations, the search path algorithm comes to the rescue.

The search for ways can be considered a long ago successfully solved problem in the development of games. Even in such old games as the first version of the legendary game Starcraft * (Blizzard Entertainment *), huge amounts of game objects could determine the paths of movement on large and complex maps.

An algorithm called A * (pronounced e-star) is used to determine the paths of movement. With it, you can find the best path between any two points in the graph (in this case, on the map). A simple search on the Internet produces a pure algorithm that uses extremely “understandable” descriptive terms, such as F, G and H. Now I will try to describe this algorithm in a more comprehensible way.

First you need to create two lists: a list of nodes that are not yet checked (Unchecked), and a list of already checked nodes (Checked). Each list includes a location node, an estimated distance to the target, and a link to the parent object (the node that placed the node in the list). Initially lists are empty.

Now we add the initial location to the list of unchecked ones, without specifying anything as a parent object. Then we enter the algorithm.

- Select the most suitable node in the list.

- If this node is the goal, then everything is ready.

- If this node is not the target, add it to the list of checked.

- For each node adjacent to this node.

- If this node is not passable, ignore it.

- If this node is already in any of the lists (checked or unchecked), ignore it.

- Otherwise, add it to the list of unchecked, specify the current node as the parent and calculate the length of the path to the target (simply calculate the distance).

When an object reaches the target field, you can build a path by tracing the parent nodes down to the node that does not have a parent element (this is the starting node). At the same time, we get the optimal path along which the object can move.

This process works only when the agent receives an order or independently makes a decision about movement, therefore, here you can use multithreading with great benefit. The agent can send a request to the path search stream in order to get the detected path without affecting the AI performance. In most cases, the system can quickly get results. When loading a large number of requests for paths, the agent can either wait, or, without waiting for the paths to be issued, simply start moving in the right direction (for example, using the “Collide and Rotate” algorithm). On very large maps, you can divide the system into areas and calculate in advance all possible paths between areas (or route points).

In this case, the path finder simply finds the best path and returns the results immediately. The flow of the path map can simply track changes on the map (for example, when a player builds a wall), and then reruns the path verification as needed. Since this algorithm works in its own stream, it can adapt without affecting the performance of the rest of the game.

Multithreading can improve performance even within the path finding subsystem. This approach is widely used in all real-time strategy (RTS) and in systems with a large number of objects, each of which is trying to discover a potentially unique way. In different streams, you can simultaneously find many ways. Of course, the system must keep track of which paths are detected. Each path is enough to find only once.

Code example

Here is an example of the A * algorithm implemented in the C language. For the sake of simplicity, I have removed the supporting functions from this example.

This example is based on a game map in the form of a rectangular grid, each field of which can be passable or impassable. The above algorithm only supports moving to a neighboring field, but with minor changes it can be used to move diagonally, and even in games where level maps consist of hexagonal fields.

/*Get Path will return -1 on failure or a number on distance to path if a path is found, the array pointed to by path will be set with the path in Points*/ int GetPath(int sx,int sy,int gx,int gy,int team,Point *path,int pathlen) { int u,i,p; memset(&Checked,0,sizeof(Checked)); memset(&Unchecked,0,sizeof(Unchecked)); Unchecked[0].sx = sx; Unchecked[0].sy = sy; Unchecked[0].d = abs(sx - gx) + abs(sy - gy); Unchecked[0].px = -1; Unchecked[0].py = -1; Unchecked[0].used = 1; Unchecked[0].steps = 0; In the above code snippet, the initialization of the list of checked and untested nodes is processed, and the initial node is placed on the list of unchecked. After that, the rest of the algorithm runs as a loop.

do { u = GetBestUnchecked(); /*add */ AddtoList(Checked,Unchecked[u]); if((Unchecked[u].sx == gx)&&(Unchecked[u].sy == gy)) { break; } The above code snippet analyzes the node from the list of untested, closest to the target. The GetBestUnchecked () function checks the estimated distance of each node to the target. If this field is the target, the loop is broken, the process is complete.

Below you can see how the distance is calculated: take the estimated values of the distance to the target in the X and Y directions and add them. There may be a desire to use the Pythagorean theorem (the sum of the squares of the legs is equal to the square of the hypotenuse), but this is unnecessary. We need to get only the relative value of the distance, and not its exact value. Processors handle addition and subtraction many times faster than multiplication, which, in turn, works much faster than division. This code fragment is launched many times in each frame, therefore, we put optimization at the forefront.

/*tile to the left*/ if((Unchecked[u].sx - 1) >= 0)/*first, make sure we're on the map*/ { if((IsInList(Unchecked,Unchecked[u].sx - 1,Unchecked[u].sy,NULL) == 0)&&(IsInList(Checked,Unchecked[u].sx - 1,Unchecked[u].sy,NULL) == 0)) /*make sure we don't repeat a search*/ { if(TileValid(Unchecked[u].sx - 1,Unchecked[u].sy,team)) NewtoList(Unchecked,Unchecked[u].sx - 1,Unchecked[u].sy, Unchecked[u].sx, Unchecked[u].sy, abs((Unchecked[u].sx - 1) - gx) + abs(Unchecked[u].sy - gy), Unchecked[u].steps + 1); } } In the section above, the function analyzes the field to the left of the current node. If this field is not yet in the “Verified” or “Unchecked” lists, the function will try to add it to the list. TileValid () is another function that needs to be adapted for the game. If it passes the TileValid () check, then it will call NewToList () and the new location will be added to the unchecked list. In the following code fragments, the same process is repeated, but in other directions: on the right, above and below.

/*tile to the right*/ if((Unchecked[u].sx + 1) < WIDTH)/*first, make sure we're on the map*/ { if((IsInList(Unchecked,Unchecked[u].sx + 1,Unchecked[u].sy,NULL) == 0)&&(IsInList(Checked,Unchecked[u].sx + 1,Unchecked[u].sy,NULL) == 0)) /*make sure we don't repeat a search*/ { if(TileValid(Unchecked[u].sx + 1,Unchecked[u].sy,team)) NewtoList(Unchecked,Unchecked[u].sx + 1,Unchecked[u].sy, Unchecked[u].sx, Unchecked[u].sy, abs((Unchecked[u].sx + 1) - gx) + abs(Unchecked[u].sy - gy), Unchecked[u].steps + 1); } } /*tile below*/ if((Unchecked[u].sy + 1) < HEIGHT)/*first, make sure we're on the map*/ { if((IsInList(Unchecked,Unchecked[u].sx ,Unchecked[u].sy + 1,NULL) == 0)&&(IsInList(Checked,Unchecked[u].sx,Unchecked[u].sy + 1,NULL) == 0)) /*make sure we don't repeat a search*/ { if(TileValid(Unchecked[u].sx,Unchecked[u].sy + 1,team)) NewtoList(Unchecked,Unchecked[u].sx,Unchecked[u].sy + 1, Unchecked[u].sx, Unchecked[u].sy, abs(Unchecked[u].sx - gx) + abs((Unchecked[u].sy + 1) - gy), Unchecked[u].steps + 1); } } /*tile above*/ if((Unchecked[u].sy - 1) >= 0)/*first, make sure we're on the map*/ { if((IsInList(Unchecked,Unchecked[u].sx ,Unchecked[u].sy - 1,NULL) == 0)&&(IsInList(Checked,Unchecked[u].sx,Unchecked[u].sy - 1,NULL) == 0)) /*make sure we don't repeat a search*/ { if(TileValid(Unchecked[u].sx,Unchecked[u].sy - 1,team)) NewtoList(Unchecked,Unchecked[u].sx,Unchecked[u].sy - 1, Unchecked[u].sx, Unchecked[u].sy, abs(Unchecked[u].sx - gx) + abs((Unchecked[u].sy - 1) - gy), Unchecked[u].steps + 1); } } memset(&Unchecked[u],0,sizeof(PNode)); The last thing to do in this iteration is to remove the current node from the unchecked list. There is no need to analyze this field again.

} while(1) ; The final code snippet builds the path from the checked list by returning to the initial position. The path to the original location can always be found, since each node in the path keeps track of its parent node. Then the final path is returned (using the link). The function returns the length of the new path.

IsInList(Checked,Unchecked[u].sx,Unchecked[u].sy,&u); p = Checked[u].steps; if(path != NULL) { for(i = (p - 1);i >= 0;i--) { path[i].x = Checked[u].sx; path[i].y = Checked[u].sy; IsInList(Checked,Checked[u].px,Checked[u].py,&u); } } return p; } Tactical and strategic AI

Now it's time to talk about how to give agents more complex orders. Agents must learn to cope with the situation in which they find themselves. That is, let us move on to artificial intelligence, capable of working with broader goals and perceiving the situation on a larger scale.

Tactical AI

The role of tactical AI is to coordinate the efforts of groups of agents in the game. Groups are more effective because members of the group can support each other, can act as a single unit, exchange information and distribute actions to obtain information.

The principle of tactical AI is built around group dynamics. The game should track different groups of objects. Each group needs to be updated separately from individual objects. To do this, you can use a dedicated update module that tracks different groups, their goals and their composition. The disadvantage of this method is that it requires the development of a separate system for the game engine. Therefore, I prefer to use the group commander method.

One unit within the group can be assigned the role of group commander. All other members of the group are associated with their commander, their behavior is determined by the information obtained from the orders of the commander. The group commander handles all tactical AI calculations for the entire group.

Group movement: search for ways

The movement of objects can be improved with the help of group dynamics. When several agents act as a single unit, their movement can be made more efficient and realistic.

Pathfinding can take a lot of time even during acceleration using pre-computed path maps and multi-threaded AI. The group dynamics can significantly reduce the load generated by the path finding system.

When a group of units is given an order to move (by a player or artificial intelligence), the unit closest to the target is appointed as the commander of the group, and all other group members follow the commander. When updating the group commander, he asks for a path system. If there is a way, the group commander begins to move to the goal. All other units in the group simply follow their commander.

For more orderly movement applied system. When using the system, the group moves in an orderly manner, for example, by forming a phalanx or triangle.

In the game Overlord, private soldiers (red) act as one team and move in formations at the command of a player (warrior in armor)

It is very simple to manage the formation, for this it is enough to somewhat expand the scope of actions of the group commander. Each unit in the ranks performs a specific role. When building, each member of the group is assigned a place in the line in the same way as one of the units is assigned the role of group commander. The goal of each unit is to maintain its place at a relative distance from the other members of the group.

For example, take the rank and file in the game Overlord. They move in a triangular order. In the figure below, only the group commander (indicated by the letter “C”) should move along the route. Unit 1 follows unit "C" at the same speed behind and slightly to the left. Unit 2 monitors and follows unit 1, moving slightly to the side. Unit 3 does the same thing as unit 1, but follows unit 1, not the commander. All group members comply with this order.

Triangular order

Group tactics

Tactics, of course, is not limited to walking systems, but also includes support and combat as a team as a group. The commander assumes responsibility for planning and coordinating the work of the team. In the end, because it is the commander who is responsible for the lives of all subordinates in his unit.

For the implementation of group tactics, previously described systems can be used, for example, rule-based systems or finite automata. Typical examples of group behavior in games: treatment support (doctors remain close to the units that are most likely to be attacked), intelligence, fire cover, sacrifice (barrier of valuable units less valuable)

In the Enemy Territory Quake Wars game of the companies Id Software * and Splash Damage, Ltd. * there are five classes that play different roles in the dynamics of the group

In addition, another level of analysis can be useful in a group — an analysis of the capabilities of each group member. It is important for the commander to know in which situations the group can be effective, when the group gains an advantage and when the group should retreat.

For example, in the Blizzard Starcraft * real-time strategy, there are ground forces and flying troops. However, not all types of ground troops can shoot at flying troops. It is important for the group to be aware of this possibility. If there is not a single unit in the group capable of firing at flying units, then when a flying unit is detected, it is best to take flight. But if there are units in the group capable of hitting a flying enemy, even if there are few such units, it is better not to retreat, but to stop and defend (if this group has auxiliary units capable of treating those who fire at air targets).

Depending on the availability of various capabilities and on the number of units with such capabilities, it is possible to evaluate the combat effectiveness of a group in various situations. Groups in which these factors are taken into account will fight much more effectively.

Strategic AI

Strategic AI is a higher order AI, it manages a whole army and develops optimal strategies.

Strategic AI is usually used in real-time strategy, but lately it has been increasingly implemented in tactical first-person shooters. A player-controlled commander may be a separate system or may be configured as an empty object, having no place or graphic image, but being updated and reflecting.

Commanders obey hierarchical systems of rules and state machines that control such activities as collecting resources, studying tree technologies, creating an army, etc. As a rule, basic support for game elements does not require particularly complex reflections. Intellect is needed mainly when interacting with other players.

Managing this interaction (or battle) is where AI primarily works. The commander must examine the game map in order to locate the player, identify the main areas of interest, such as narrow passages, build defenses and analyze the defenses of another player. How exactly do this? There is no obvious answer to this question, but for simplicity you can use decision maps.

Solution maps

Decision maps are two-dimensional arrays, approximately corresponding to the game map. Each cell in the array corresponds to a specific area in the game and contains important information about that area. These cards help strategic AIs make intelligent decisions about the game as a whole.

Resource Cards

Resource maps contain information about the location of resources in a strategic game. The data on the places where resources are concentrated on the map can affect many decisions of the commander. Where to expand the base, where to deploy additional bases (resources near the base of the commander), where the enemy is most likely to expand its territory (resources near the base of the enemy), where are the most likely clashes for mastering the resources (resources midway between its base and base adversary).

Getting information about the number of possible available resources also influences decisions about which units to support and how to deploy an army. If there is a shortage of resources, you should be careful to use each unit, since there is less chance of replenishment. With an excess of resources, you can use the strategy of mass creation of cheap units or the creation of expensive powerful units.

Target maps

These cards contain information about the goals of the commander, for example, the location of the enemy bases, the location of the targets on the map (blow up an object of such and such, protect the object of such and such, hack the computer there, etc.) and the most important units in the army of our commander (main base, hero units, etc.). Keeping track of this information helps the commander more effectively manage his army. Places that need protection should be surrounded by fortifications and troops should always be located next to them. Targets to be attacked should be scouted and explored how they are protected. An analysis of the defense arranged around the targets is required to work out the optimal way to overcome this defense. On the basis of this data, the cornerstone of all war games is formed - conflict maps.

Conflict maps

Conflict maps are used and updated much more frequently than all the maps listed above. Conflict maps track all battles at this level of the game. Whenever one of the commander’s units engages with an adversary, this unit updates the conflict map, passing such data as the type of conflict, its strength, capabilities, and number of units.

Analysis of this information will help to draw the necessary conclusions about the effectiveness of deployed defense and attack, as well as the necessary countermeasures (involving additional units)

An example of a conflict map when applying to a terrain map. The more red, the more conflicts

Creating and using maps

Earlier, I said that the cards are compiled by units from the composition of the army commander. The rules governing artificial intelligence should include sending scouts as early as possible, as this will allow you to start creating maps. Sophisticated AI periodically checks the relevance of the cards. In the early stages of the game, when only a few units are engaged in supporting cards, the update should not form a significant load on the game engine. In the later stages of the game, when information is simultaneously issued by tens or hundreds of units, performance may be degraded.

However, it is not so difficult to achieve a quick update of the solution maps It is enough to place the solution map system in a separate stream. Ideally, each player controlled by artificial intelligence should have his own stream for processing his own set of decision cards. A significant performance increase will be achieved if all objects are already divided into several streams. Decision map streams will only process requests from messages that update parallelized objects.

The most efficient AI: thread processing

No matter how beautiful your AI system is, it is useless if it slows down the game. Effective programming and various optimization techniques provide some acceleration, but these measures alone are not enough.

Blizzard Entertainment’s Starcraft * II at the same time runs an AI of a huge number of units. It is best to use multi-threaded architecture for this.

When working with a system where several processors are installed (a processor with several cores), it is possible to divide the work between them. There are two ways to do this: task parallelization (functional parallelization) and data parallelization.

Task parallelization

The simplest way to adapt an application to a multi-threaded architecture is to divide it into separate tasks.

Functional parallelization allows each subsystem to use its own thread and core

A typical example is the sound system of the game engine. Sound does not need to interact with other systems: this system deals only with one thing - it reproduces sounds and their combinations upon request. Data exchange functions are calls for playing sounds and stopping playback. Due to this, the sound system is autonomous and is ideally suited for functional parallelization.

Depending on the needs of the game there can be many different tasks, each of which can be provided with a separate stream. Here we consider three such tasks: search for paths, strategic AI, and the actual system of objects.

Search for ways

The path finding system can be implemented in such a way that each object trying to find a path calls its own pathfinding algorithm every time a need arises. This method will work, but in this case the engine will wait for the pathfinding algorithm to work at each path request. If you select search paths in a separate subsystem, you can put it in a separate stream. Now the path finding system will work as a resource manager, in which paths are resources.

Any object that needs to find a path sends a search request and immediately receives a “receipt” from the path finding system. This receipt is simply a unique descriptor that the path finding system can use to operate. After that, the object continues to go about its business until the next frame in the game cycle. An object can check whether its receipt has been processed. If so, the object receives the calculated path; otherwise, the object continues to go about its business while waiting for the path to be processed.

In the path finding system, a receipt is used to track the path requests while the system is working on them, without affecting the performance of the other components. This approach has an interesting advantage - automatic tracking of all detected paths. Therefore, when a request is received for a path found earlier, the path finding system can simply issue a receipt for an already existing path. This method is great for systems where many objects request a path, since all found paths are likely to be requested multiple times.

Strategic AI

, , , , . , .

. . , . , ( ) : . ( 1/60 , , , .)

. , , , ,

. . . 8 ? Fine! 64 ? ! , . . , ( ), . «» , .

. , , .

Implementation

. , . , . , . .

. , , . . , .

( ) . , , , .

. (). « », . ( ). . , . , (, , ), .

.

- RequestPath(start, goal) . . :

- , ( ), ;

- ( ) ; , ;

- ( ).

- CheckPath(«ticket») . , , . , .

- UpdatePathFinder() . , . .

- . . ( ) .

- . . . :

- ;

- ;

- .

, , . ; . , - , - . « ».

, . . , ( ), . , , , , . . , , .

, . , ?

. . , . , , , . , .

: . . , , , . .

( ) , . «» : , «» , . , , .

. , . . ( , ) .

, . , : , . , , . ., , . .

Conclusion

, . , — , . , , . , . , .

( 1)

( 2)

( 3)

( 4)

Source: https://habr.com/ru/post/265679/

All Articles