Broadcasting live video online with minimal latency

Not long ago, we were approached by a client who broadcasts live video of auctions and horse races. The events take place in Australia, but the bets on them are placed by players in Macau, the gambling capital of Southeast Asia. Naturally, he ran into signal delay, which is unavoidable. The delay is the time between capturing a frame and its appearance on the screen of the end device. A delay of 5 or even 10 seconds is not critical for an ordinary viewer, but for those betting on the tote, such a difference can cost a lot of money. Hence the task: minimize the time it takes for the video to travel from the source to the viewer.

In the end, the problem was solved: we reduced the delay across the entire chain to 500 ms. This reminded me of another case, in which a client using our software reduced the delay of a video broadcast from an Android device to a computer screen to 1-2 seconds, the best result among all the options he had tried.

We thought that some of the techniques we used might be of interest to others as well.

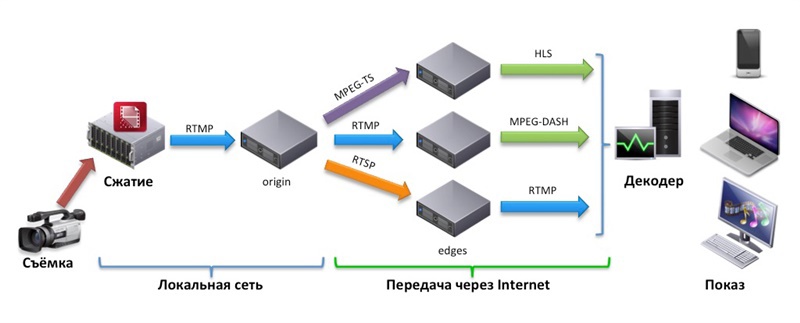

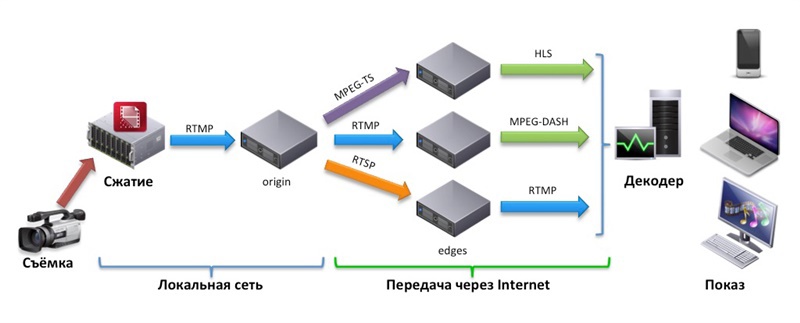

The video delivery chain can be divided schematically into 6 stages: shooting, compression (encoding), transmission over the local network from the encoder to the media server, transmission over the Internet, decoding, and display on the user's device.

Let's look at what determines the delay at each stage and how it can be reduced.

1. Shooting video

Everything here is simple: it all depends on the camera used for shooting. The delay at this stage is under 1 ms, so when choosing a device you can focus on its consumer qualities: supported codecs and protocols, picture quality, price, etc.

2. Compression (encoding)

Video compression (encoding) processes the original video to reduce the amount of data to be transmitted. The goal is to compress the stream with a codec suited to the task. Currently, the de facto standard is H.264 video with AAC audio.

Decisions made at this stage affect the entire chain downstream.

Hardware encoders are preferable, because software encoders add time spent on resource handling and operating system overhead. A properly configured encoder does not add noticeable delay, but it determines the bitrate of the resulting stream and its type: variable bitrate (VBR) or constant bitrate (CBR).

The main advantage of VBR is that it produces a stream with the best ratio of image quality to data size. However, it requires more computing power. In addition, if the bitrate at some moment exceeds the channel capacity, the excess has to be buffered at the decoding stage. Therefore, CBR is recommended for low-latency real-time video transmission.
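To make the channel-capacity argument concrete, here is a toy leaky-bucket model (our own illustration, not a model of any particular encoder or network). Frames enter a queue once per frame interval and the channel drains a fixed number of bits per second; the function reports the worst wait for the last bit of a frame. The frame sizes and the 4 Mbit/s channel are made-up numbers.

```python
from typing import Sequence


def worst_queue_delay_ms(frame_bits: Sequence[int], fps: float, channel_bps: float) -> float:
    """Worst-case wait (ms) for the last bit of a frame to leave a fixed-capacity channel.

    Toy leaky-bucket model: one frame enters the queue every 1/fps seconds and
    the channel drains channel_bps bits per second. Unused capacity is not
    saved for later, so the queue never goes below zero.
    """
    drained_per_interval = channel_bps / fps
    queue_bits = 0.0
    worst_bits = 0.0
    for bits in frame_bits:
        queue_bits += bits  # the new frame joins the queue
        worst_bits = max(worst_bits, queue_bits)
        queue_bits = max(0.0, queue_bits - drained_per_interval)  # one frame interval of sending
    return worst_bits * 1000.0 / channel_bps


# Hypothetical numbers: a 4 Mbit/s channel at 30 fps carries ~133 kbit per frame interval.
cbr = worst_queue_delay_ms([120_000] * 30, fps=30, channel_bps=4_000_000)
vbr = worst_queue_delay_ms([400_000] * 5 + [120_000] * 25, fps=30, channel_bps=4_000_000)
print(f"CBR: {cbr:.0f} ms, VBR burst: {vbr:.0f} ms")  # prints: CBR: 30 ms, VBR burst: 367 ms
```

A short burst of oversized frames is enough to add hundreds of milliseconds that some buffer downstream must absorb, while a stream that never exceeds the channel only pays the transmission time of one frame.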

CBR is not that simple either. In practice, a constant bitrate is not constant at every instant, because an H.264 stream contains frames of different sizes. The encoder therefore controls the bitrate by averaging it over separate time windows, so that the amount of data per window stays the same throughout the broadcast. This averaging naturally comes at the expense of quality: the shorter the averaging window, the smaller the buffer needed at the decoding stage, and the worse the quality of the transmitted video.
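As an illustration of the window/buffer trade-off, here is a sketch that builds output options for FFmpeg's software libx264 encoder. This is an assumption for demonstration only: the article recommends hardware encoders, whose settings differ, but the idea is the same. The averaging window is expressed through the VBV buffer (`-bufsize`, in bits), sized roughly as bitrate × window; the function name and defaults are hypothetical.

```python
from typing import List


def x264_cbr_args(bitrate_kbps: int, window_s: float, fps: int, gop_s: float = 1.0) -> List[str]:
    """ffmpeg/libx264 output options for CBR with a rate-averaging window of window_s seconds.

    The VBV buffer (-bufsize, in bits) is sized as bitrate * window: a shorter
    window means a smaller buffer on the decoder side, at some cost in quality.
    """
    bufsize_kbit = round(bitrate_kbps * window_s)
    rate = f"{bitrate_kbps}k"
    return [
        "-c:v", "libx264",
        "-tune", "zerolatency",         # x264 tuning: no B-frames, no lookahead
        "-b:v", rate,
        "-minrate", rate,
        "-maxrate", rate,
        "-bufsize", f"{bufsize_kbit}k",
        "-x264-params", "nal-hrd=cbr",  # strict CBR signalling in the H.264 stream
        "-g", str(round(fps * gop_s)),  # keyframe interval (GOP length) in frames
        "-c:a", "aac",
    ]


print(" ".join(x264_cbr_args(4000, window_s=0.5, fps=30)))
```

Halving the window halves `-bufsize`, and with it the amount of data a decoder must be prepared to hold.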

Professional encoders provide a variety of rate-control options designed to minimize the impact of CBR on quality. Describing them is beyond the scope of this article; we will only mention parameters such as rate control granularity (Rate Control Granularity) and content-adaptive rate control (Content-Adaptive Rate Control).

3. Transfer from encoder to media server

The delay at this stage is determined mostly by the network between the encoder and the media server. The encoder's buffer settings and the overhead of the chosen media protocol also play a role. Therefore, the encoder buffer should be set to the minimum number of frames, and the encoder should be placed as close as possible to the media server.
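A rough way to see why the encoder buffer should hold as few frames as possible: every buffered frame waits about one frame interval before it is sent. This sketch adds that to a one-way LAN latency; the 1 ms LAN figure is a hypothetical placeholder.

```python
def encoder_hop_delay_ms(buffered_frames: int, fps: float, lan_one_way_ms: float) -> float:
    """Rough delay of the encoder -> media server hop.

    Each frame held in the encoder's output buffer waits one frame interval
    (1000 / fps ms); on top of that comes the one-way LAN latency.
    """
    return buffered_frames * 1000.0 / fps + lan_one_way_ms


for frames in (1, 3, 30):
    delay = encoder_hop_delay_ms(frames, fps=30, lan_one_way_ms=1.0)
    print(frames, "frames:", round(delay, 1), "ms")
```

At 30 fps, a one-second (30-frame) buffer alone would already exceed the whole 500 ms budget of the client example.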

The transfer protocol should be chosen based on the capabilities of the encoder and of the media server that will distribute the stream to end users. RTSP, RTMP, or MPEG2-TS are best suited for live video.
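For testing such a hop without a hardware encoder, a stream that is already H.264/AAC can be pushed with the FFmpeg command-line tool over each of the three protocols. The sketch below only builds the command line; the host name, the `live` application path, and UDP port 1234 are hypothetical placeholders that depend on how your media server is configured.

```python
from typing import List


def push_command(src: str, protocol: str, host: str, stream: str) -> List[str]:
    """Build an ffmpeg command that pushes an already-encoded H.264/AAC source to a media server.

    host, the "live" application name and UDP port 1234 are placeholders;
    use whatever your media server is configured to accept.
    """
    # -re: read a file at its native frame rate (not needed for a live capture device)
    base = ["ffmpeg", "-re", "-i", src, "-c", "copy"]  # no re-encoding on this hop
    if protocol == "rtmp":
        return base + ["-f", "flv", f"rtmp://{host}/live/{stream}"]
    if protocol == "rtsp":
        return base + ["-f", "rtsp", "-rtsp_transport", "tcp", f"rtsp://{host}/live/{stream}"]
    if protocol == "mpegts":
        return base + ["-f", "mpegts", f"udp://{host}:1234"]
    raise ValueError(f"unsupported protocol: {protocol}")


print(" ".join(push_command("input.mp4", "rtmp", "media.example.com", "cam1")))
```

The list form can be passed directly to `subprocess.run` if you want to launch the push from a script.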

4. Transfer via the Internet to the user's device

This is the most interesting stage, and in most cases it contributes the largest delay. However, an efficient media server, a suitable protocol, and a reliable Internet connection will help minimize it.

The first factor here is buffering inside the media server during transmuxing (repackaging) of a stream from one protocol to another.

The second factor is the specifics of each protocol.

If you plan to use HTTP-based protocols such as HLS or MPEG-DASH, be prepared for a significant increase in latency. This follows from how these protocols work: content is split into segments, or chunks, which are delivered sequentially. Segment size depends on the protocol and transmission parameters, but segments shorter than 2 seconds are not recommended, and Apple recommends 10-second chunks. Therefore, when using HLS, you must either reduce the segment size or accept the added delay.
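A back-of-the-envelope estimate of what segment size means for latency, under two assumptions: the player starts three segments before the end of the playlist (the HLS specification, RFC 8216, advises not starting closer than three target durations), and the newest listed segment lags real time by up to one segment because it can only be published once complete. Encoding and network time are ignored.

```python
from typing import Tuple


def hls_latency_range_s(segment_s: float, segments_behind: int = 3) -> Tuple[float, float]:
    """Rough live latency of an HLS player, ignoring encoding and network time.

    Assumes the player starts `segments_behind` segments before the end of the
    playlist, and that the newest listed segment is itself up to one segment
    behind real time because it is published only when complete.
    """
    low = segments_behind * segment_s
    high = (segments_behind + 1) * segment_s
    return low, high


for seg in (10.0, 2.0):
    print(f"{seg} s segments -> roughly {hls_latency_range_s(seg)} s behind live")
```

With 10-second chunks this gives tens of seconds; even 2-second chunks leave several seconds, which is why HLS does not fit the sub-second target.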

One might assume that delivery can be built on HLS or DASH by minimizing the segment size and all other time losses, and in most cases this will work. However, for true real-time transmission (our example involves auctions, after all), you need RTMP or RTSP.

In addition, we have released our own low-latency live streaming protocol, SLDP. It uses WebSockets, so it works in most modern browsers, and there is also an SDK for mobile platforms.

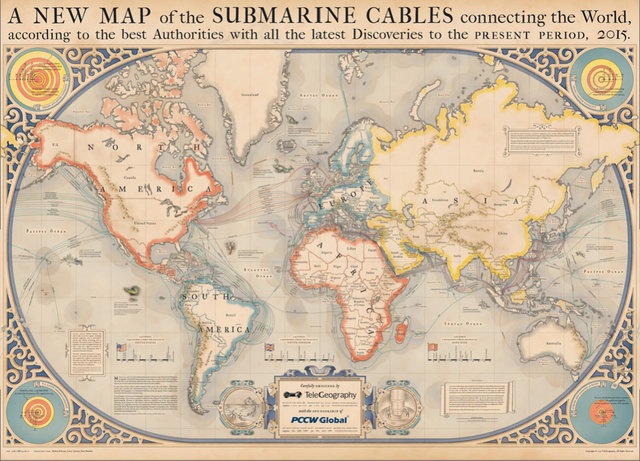

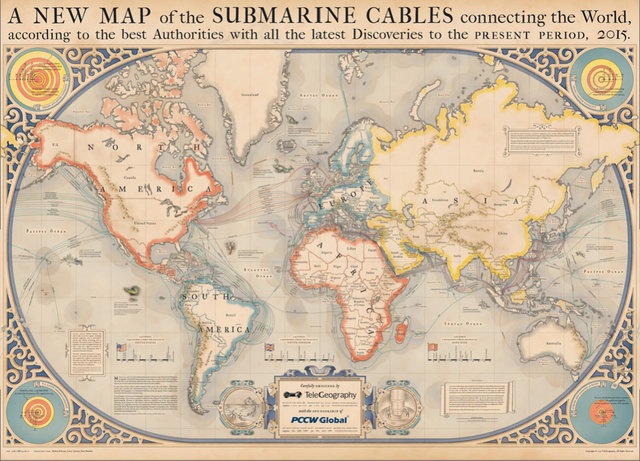

You can hardly influence the last factor: it depends on the throughput and latency of the Internet channels themselves. The transfer time simply has to be added to the total latency, and the decoder will compensate for possible lags by buffering.
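The transfer time can be approximated as propagation plus serialization. This sketch assumes a symmetric path (one-way latency about half the ping round trip) and adds the time to push one frame through the slowest link; the 5 Mbit/s stream and 20 Mbit/s bottleneck are hypothetical numbers, while the 140 ms ping is the Australia-to-Macau figure from the client example.

```python
def network_delay_ms(rtt_ms: float, frame_bits: float, bottleneck_bps: float) -> float:
    """Rough one-way delivery time for one frame over the Internet.

    Assumes a symmetric path (one-way latency ~ half the ping RTT) plus the
    time to push one frame through the slowest link on the path.
    """
    propagation_ms = rtt_ms / 2.0
    serialization_ms = frame_bits * 1000.0 / bottleneck_bps
    return propagation_ms + serialization_ms


# Hypothetical: 5 Mbit/s 1080p30 stream (~167 kbit per frame), 20 Mbit/s bottleneck, 140 ms ping.
print(round(network_delay_ms(140.0, 5_000_000 / 30, 20_000_000), 1))  # prints 78.3
```

Jitter on top of this average is what the decoder's buffer has to absorb.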

Between the origin media server and the viewer, you may decide to place a so-called edge: an intermediate caching media server that reduces the load on the system when there are many simultaneous connections. If so, do not forget to account for the extra delay the edge adds. Of course, for maximum optimization you will use edges only for ordinary viewers, for whom delay is not critical, but this delay still has to be kept in mind.

5. Decoding

This stage can greatly affect the overall delay. To compensate for possible gaps in data delivery (caused by the previous stages), the playback buffer must hold at least one complete averaging period of data, plus a margin for network delays. Therefore, the buffer can range from several GOPs (GOP = group of pictures) down to a few frames, depending on the encoder parameters and network conditions. Many players set the minimum playback buffer to 1 second and adjust it during playback. The smallest possible buffer is achieved with hardware decoders (players), for example ones based on the Raspberry Pi.
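One way to reason about the minimum playback buffer is to look at frame arrival times. In this sketch (a simplified model that ignores decoding time and assumes a fixed frame rate), frame i is scheduled for display at the first arrival plus the buffer plus i frame intervals; the smallest buffer that avoids stalls is the worst lateness in the trace. The trace itself is made up.

```python
from typing import Sequence


def min_playout_buffer_ms(arrivals_ms: Sequence[float], fps: float) -> float:
    """Smallest start-up delay that lets every frame be shown on schedule.

    Playback of frame i is scheduled at arrivals_ms[0] + buffer + i * (1000 / fps).
    A frame that arrives later than that causes a stall, so the buffer must
    cover the worst lateness observed. Decoding time is ignored.
    """
    interval = 1000.0 / fps
    first = arrivals_ms[0]
    lateness = (a - first - i * interval for i, a in enumerate(arrivals_ms))
    return max(0.0, max(lateness))


# Hypothetical trace at 25 fps (40 ms per frame): frame 2 is held up ~120 ms by the network.
trace = [0, 40, 200, 210, 220, 240]
print(min_playout_buffer_ms(trace, fps=25))  # prints 120.0
```

On a steady network the required buffer shrinks toward a few frames; on a jittery one it grows, which is why players adapt it during playback.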

6. Display

Here, as in the first stage, the delay is negligible: it is entirely determined by the capabilities of the hardware.

Returning to our client's example, the delivery chain was built as follows. The video was captured by a camera and fed to a Beneston VMI-EN001-HD hardware encoder. From there, the RTMP stream went to Nimble Streamer, tuned for maximum performance. The data was then sent via RTMP to Macau, where a Raspberry Pi in the betting hall decoded it and displayed it on large monitors. The ping from Australia to Macau was 140 ms, and the buffer in the RTMP player on the Raspberry Pi was set to 300 ms. The resulting signal delay for the 1080p30 stream ranged from 500 to 600 milliseconds, which fully met the customer's requirements. Ordinary viewers around the world see the picture with a delay of 3-4 seconds, largely because they prefer to watch via HLS on mobile devices. In this case, that delay is also acceptable.
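Using only the figures above, a quick budget check: the ping and the player buffer account for 440 ms, leaving 60-160 ms for the camera, encoding, transmuxing in Nimble Streamer, decoding, and display. The article does not break that remainder down, so the split between those stages is unknown. Note that ping is a round-trip time; if the one-way network share is closer to half of it, the leftover is correspondingly larger.

```python
from typing import Dict, Tuple


def unaccounted_ms(measured_ms: Tuple[float, float], known_ms: Dict[str, float]) -> Tuple[float, float]:
    """Delay range left over for the stages that were not measured separately."""
    known_total = sum(known_ms.values())
    low, high = measured_ms
    return low - known_total, high - known_total


# Figures from the client example above.
client_known = {
    "ping Australia -> Macau": 140.0,
    "RTMP player buffer on the Raspberry Pi": 300.0,
}
print(unaccounted_ms((500.0, 600.0), client_known))  # prints (60.0, 160.0)
```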

In general, live broadcasting is not an easy task in any sense. Achieving high performance is serious work, and reducing end-to-end latency requires choosing the right components and tuning them painstakingly.

Source: https://habr.com/ru/post/265675/
