Node.js and Express as they are

Hello, fans of our Habr blog and other readers!

We plan to make our mark once again in the evergreen field of Node.js and are considering publishing this book:


Since the reader will naturally ask "how did he fit all of this into two hundred pages, and why do I need it?", below the cut we offer a translation of a thorough article by Tomislav Capan on why Node.js is really needed.

Introduction

Thanks to its growing popularity, JavaScript is developing rapidly, and modern web development has changed dramatically from the recent past. The things we can do on the Web today with JavaScript running on the server as well as in the browser were hard to imagine just a few years ago; at best, such capabilities existed only inside sandboxes such as Flash or Java applets.

Before going into Node.js in detail, you may want to read about the benefits of full-stack JavaScript, which unifies the language and the data format (JSON) and so allows optimal reuse of development resources. Since this advantage belongs to JavaScript as a whole rather than to Node.js specifically, we will not dwell on it here. Still, it is a key advantage you gain by incorporating Node into your stack.

Wikipedia says: "Node.js is a packaged compilation of Google's V8 JavaScript engine, the libuv platform abstraction layer, and a core library, which is itself primarily written in JavaScript." It is also worth noting that Ryan Dahl, the creator of Node.js, set out to build real-time websites with push capability, "inspired by applications like Gmail". With Node.js, he gave programmers a tool for working in the non-blocking, event-driven I/O paradigm.

After 20 years of stateless web applications built on stateless request-response communication, we finally have web applications with real-time, two-way connections.

In short, Node.js shines in real-time applications that use push technology over websockets. What is so revolutionary about that? As already mentioned, after 20 years of the request-response paradigm, we now have two-way applications in which both the client and the server can initiate a connection and then exchange data freely. This contrasts sharply with the typical web paradigm, where the client always initiates communication. Moreover, all of this runs on the open web stack (HTML, CSS, and JS) over the standard port 80.

A reader may object that we have had all this for years in the form of Flash and Java applets. In reality, those were just sandboxes that used the Web as a transport to deliver themselves to the client. They also ran in isolation and often operated over non-standard ports, which could require extra permissions and so on.

Thanks to these advantages, Node.js now plays a key role in the technology stacks of many prominent companies that depend directly on its unique properties.

In this article, we will discuss not only how these advantages are achieved, but also why you might choose Node.js, or decide against it, using several classic web application models as examples.

How does it work?

The main idea of Node.js is to use non-blocking, event-driven I/O to remain lightweight and efficient in data-intensive real-time applications that run across distributed devices.

That's a mouthful.

In essence, this means that Node.js is not a silver-bullet platform that will take over the world of web development. Instead, it is a platform that fills a specific need, and understanding this is absolutely essential. You certainly should not use Node.js for CPU-intensive operations; in fact, using it for heavy computation nullifies nearly all of its advantages. Where Node.js really shines is in building fast, scalable network applications, because it can handle a huge number of simultaneous connections with high throughput, which translates into high scalability.

How Node.js works under the hood is quite interesting. In traditional web servers, each connection (request) spawns a new thread, which takes up system RAM and eventually runs into the limit of available memory. Node.js is far more economical: it runs on a single thread and uses non-blocking I/O calls, which lets it support tens of thousands of concurrent connections (held in the event loop).
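To make the single-thread model concrete, here is a minimal sketch in plain Node.js with no third-party packages. It assumes that `setTimeout` is an acceptable stand-in for a slow I/O call such as a database query; `fakeIo` and `runConcurrently` are hypothetical helpers. Ten 100 ms waits are started on the one JavaScript thread, and because none of them blocks, they overlap instead of adding up.

```javascript
// Hypothetical stand-in for a non-blocking I/O call (e.g. a DB query):
// it returns immediately and resolves later via the event loop.
function fakeIo(id, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(id), ms));
}

// Start `count` simulated I/O operations at once on the single JS thread
// and report how long it took for all of them to complete.
async function runConcurrently(count, ms) {
  const started = Date.now();
  const ids = await Promise.all(
    Array.from({ length: count }, (_, i) => fakeIo(i, ms))
  );
  return { completed: ids.length, elapsedMs: Date.now() - started };
}

runConcurrently(10, 100).then(({ completed, elapsedMs }) => {
  // Typically close to 100 ms, not 10 x 100 ms.
  console.log(`${completed} operations finished in ~${elapsedMs} ms`);
});
```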

A simple calculation: suppose each thread may need 2 MB of memory and the system has 8 GB of RAM. That gives a theoretical maximum of about 4,000 concurrent connections, plus the cost of context switching between threads. This is the scenario you typically face with traditional web servers. By avoiding all of this, Node.js can scale to more than a million concurrent connections (as shown in a proof-of-concept experiment).
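The estimate above is simple division. The sketch below spells it out, assuming binary units (1 GB = 1024 MB) and that all of the RAM is available for per-thread memory, which overstates the real ceiling; `maxThreadsByMemory` is a hypothetical helper.

```javascript
// Back-of-the-envelope estimate: how many threads fit in RAM
// if each one reserves a fixed amount of memory.
const MB_PER_GB = 1024;

function maxThreadsByMemory(ramGb, mbPerThread) {
  const ramMb = ramGb * MB_PER_GB;
  return Math.floor(ramMb / mbPerThread);
}

// 8 GB of RAM, 2 MB per thread: 8192 MB / 2 MB = 4096 threads, i.e. about
// 4,000 concurrent connections for a thread-per-connection server, before
// context-switching overhead or memory used by anything else.
console.log(maxThreadsByMemory(8, 2)); // 4096
```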

There is, of course, the question of sharing a single thread among all client requests, and this is the main pitfall when writing Node.js applications. First, heavy computation can choke the single Node.js thread and cause problems for all clients (more on this below), because incoming requests are blocked until the computation completes. Second, developers must be very careful not to let an exception bubble up to the core (topmost) Node.js event loop, because that terminates the Node.js instance (effectively crashing the whole program).
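A minimal sketch of the first pitfall, using only built-in timers: a callback scheduled with a 0 ms delay represents another client's pending request, and a busy loop represents a heavy calculation. The function name `measureTimerDelay` and the 200 ms figure are illustrative.

```javascript
// A timer scheduled for "as soon as possible" (0 ms) stands in for
// another client's request waiting in the event loop.
function measureTimerDelay(blockForMs) {
  return new Promise((resolve) => {
    const scheduledAt = Date.now();
    setTimeout(() => resolve(Date.now() - scheduledAt), 0);

    // CPU-bound work on the main thread: a busy loop that never yields.
    // Nothing else, including the timer above, can run until it finishes.
    const until = Date.now() + blockForMs;
    while (Date.now() < until) {
      // spin
    }
  });
}

measureTimerDelay(200).then((delayMs) => {
  // The "0 ms" callback had to wait for the whole busy loop (at least 200 ms).
  console.log(`timer fired after ${delayMs} ms`);
});
```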

The usual technique for keeping exceptions from bubbling up is to pass errors back to the caller as callback parameters instead of throwing them, as other environments do. If an unhandled exception does slip through, there are tools for monitoring the Node process and restarting a crashed instance (although the state of user sessions usually cannot be recovered). The most common are the Forever module and external system tools such as upstart and monit.
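The error-passing convention described above (the same `(err, result)` shape used by core APIs such as `fs.readFile`) can be sketched as follows. `parseSettings` is a hypothetical function; the point is that a failure reaches the caller as a value instead of an exception that could bubble up to the event loop.

```javascript
// Error-first callback convention: the first argument is an Error (or null),
// the second is the result. Failures are handed to the caller, not thrown.
function parseSettings(text, callback) {
  let settings;
  try {
    settings = JSON.parse(text);
  } catch (err) {
    // Report asynchronously so the callback is always invoked the same way.
    process.nextTick(callback, err);
    return;
  }
  // Called outside the try block, so an exception thrown by the callback
  // itself is not mistaken for a parsing error.
  process.nextTick(callback, null, settings);
}

parseSettings('{"port": 8080}', (err, settings) => {
  if (err) return console.error("bad settings:", err.message);
  console.log("port =", settings.port); // port = 8080
});

parseSettings("{not json", (err) => {
  // The error arrives here as a value; the process keeps running.
  if (err) console.error("bad settings:", err.message);
});
```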

NPM: the Node Package Manager

No discussion of Node.js would be complete without its built-in support for package management through NPM, a tool that ships with every Node.js installation by default. The idea behind NPM modules is much like that of Ruby Gems: a set of reusable, publicly available components that are easy to install from an online repository, with version and dependency management.

The complete list of packaged modules is available on the NPM website, npmjs.org, and through the NPM CLI tool, which is installed automatically along with Node.js. The module ecosystem is completely open: anyone can publish a module, and it will appear in the NPM registry. A brief (somewhat dated, but still relevant) introduction to NPM is available at howtonode.org/introduction-to-npm.

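Some of the most popular NPM modules today:

- express: Express.js, a Sinatra-inspired web development framework for Node.js and the de facto standard for most Node.js applications in existence today.

- connect: Connect is an extensible HTTP server framework for Node.js that provides a collection of high-performance "plugins" known as middleware; it serves as the foundation for Express.

- socket.io and sockjs: the server-side components of the two most common websocket libraries today.

- Jade: one of the most popular template engines, inspired by HAML; it is the default in Express.js.

- mongo and mongojs: MongoDB wrappers that provide an API for MongoDB object databases in Node.js.

- redis: the Redis client library.

- coffee-script: the CoffeeScript compiler, which lets developers write Node.js programs in CoffeeScript.

- underscore (lodash, lazy): the most popular JavaScript utility library, packaged for use with Node.js, plus two similar libraries that offer better performance thanks to a somewhat different implementation.

- forever: probably the most common utility for keeping a given Node script running continuously. It keeps your Node.js process alive in the face of unexpected failures.

The list goes on. There are far too many useful public packages to list them all here.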

When should Node.js be used?

CHAT

Chat is the most typical multi-user real-time application. We have come a long way from IRC (back in the day), with its many proprietary and open protocols running on non-standard ports, to today, when everything can be implemented in Node.js with websockets over the standard port 80.

A chat application is really the ideal use case for Node.js: it is lightweight, high-traffic, and data-intensive (but needs little processing power), and it runs across many distributed devices. It is also a great learning project: simple as it is, it covers most of the paradigms you will ever need in a typical Node.js application.

Let's try to depict how it works.

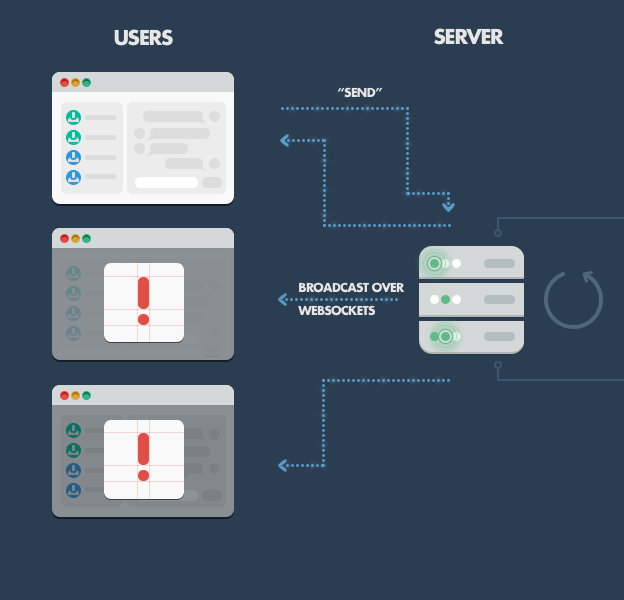

In the simplest case, the site has a single chat room where users come and exchange messages in one-to-many (in fact, one-to-all) fashion. Suppose we have three visitors on the site, all connected to our message board.

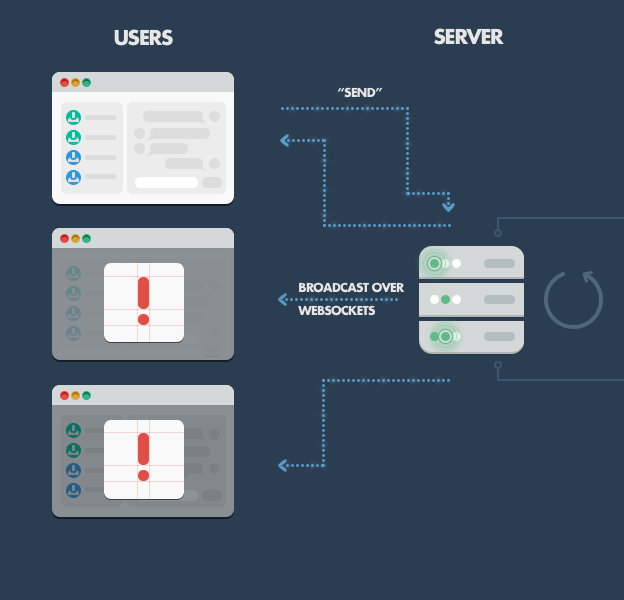

On the server side, we have a simple Express.js application that implements two things: 1) a GET '/' request handler that serves the web page containing both the message board and a 'Send' button for submitting a new message, and 2) a websocket server that listens for new messages emitted by websocket clients.

On the client side, we have an HTML page with two handlers: one listens for click events on the 'Send' button, picks up the entered message, and sends it down the websocket; the other listens for new incoming messages on the websocket client (that is, messages sent by other users, which the server now wants this client to display).

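This is what happens when one of the clients sends a message:

- The browser catches the click on the 'Send' button with a JavaScript handler, reads the value of the input field (that is, the message text), and emits a websocket message through the websocket client connected to our server (this client is initialized when the page loads).

- The server-side component of the websocket connection receives the message and forwards it to all other connected clients using the broadcast method.

- All clients receive the new message as a push message through the websocket component running on the page. They then pick up the message content and update the page in place, appending the new entry to the board.

This is the simplest example. For a more robust solution, you could use a simple cache based on Redis. An even more advanced solution is a message queue that handles routing messages to clients and provides a more reliable delivery mechanism, one that can cover temporary connection losses or store messages for registered clients while they are offline. But whatever improvements you make, Node.js will still operate on the same basic principles: reacting to events, handling many concurrent connections, and keeping the user experience smooth.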
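The server-side role in this chat can be sketched in memory, without any network code. This is a sketch under explicit assumptions: Node's built-in `EventEmitter` stands in for a websocket connection, and the hypothetical `ChatHub` class stands in for the websocket server. A real application would use a library such as socket.io or sockjs, whose APIs differ from this sketch.

```javascript
const { EventEmitter } = require("node:events");

// In-memory stand-in for the websocket server: each connected client is an
// EventEmitter, and the hub forwards every incoming message to the others.
class ChatHub {
  constructor() {
    this.clients = new Set();
  }

  connect(name) {
    const client = new EventEmitter();
    client.name = name;
    this.clients.add(client);
    // Plays the role of the page's 'Send' handler writing to the socket.
    client.send = (text) => this.broadcast(client, { from: name, text });
    return client;
  }

  disconnect(client) {
    this.clients.delete(client);
  }

  // Push the message to every connected client except the sender.
  broadcast(sender, message) {
    for (const client of this.clients) {
      if (client !== sender) client.emit("message", message);
    }
  }
}

// Three visitors in the single chat room.
const hub = new ChatHub();
const [alice, bob, carol] = ["alice", "bob", "carol"].map((n) => hub.connect(n));
for (const c of [bob, carol]) {
  c.on("message", (m) => console.log(`${c.name} sees ${m.from}: ${m.text}`));
}
alice.send("Hello, everyone!");
// bob sees alice: Hello, everyone!
// carol sees alice: Hello, everyone!
```

Keeping the connected clients in a `Set` makes the broadcast a single loop. Replacing the in-memory hub with a Redis-backed cache or a message queue would change where messages live, not this event-driven shape.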

API ON TOP OF AN OBJECT DATABASE

Although Node.js is especially strong in real-time applications, it is also a natural fit for exposing data from object databases (for example, MongoDB). Data stored as JSON lets Node.js work without impedance mismatch and without data conversion.

For example, with Rails you would convert JSON into binary models and then expose them again as JSON over HTTP when the data is consumed by Backbone.js, Angular.js, or even plain jQuery AJAX calls. With Node.js, you can simply expose your JSON objects through a REST API for the client to consume. You also do not have to worry about converting between JSON and anything else when reading from and writing to the database (if you use MongoDB). In short, a uniform data serialization format on the client, on the server, and in the database saves you many conversions.

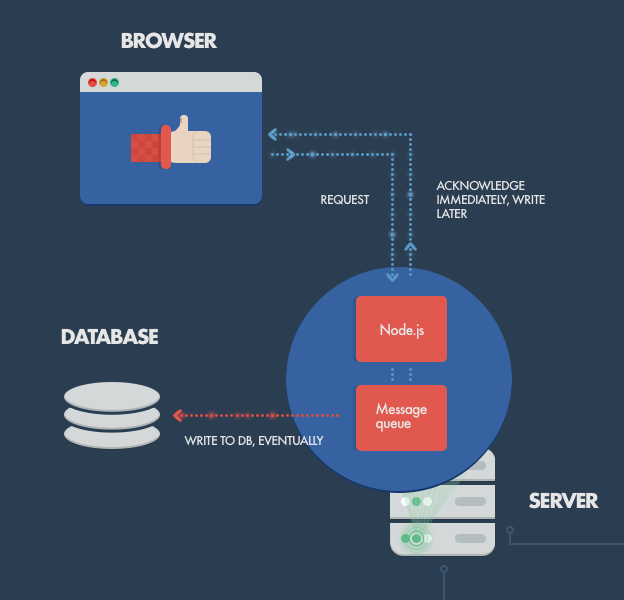
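The REST point above can be sketched with nothing but Node's built-in `node:http` module. Neither Express nor the MongoDB driver is used here: an in-memory array of plain objects stands in for database documents, and `products`, `createApiServer`, and the `/api/products` path are hypothetical.

```javascript
const http = require("node:http");

// Hypothetical in-memory collection standing in for MongoDB documents,
// which are already plain JSON-like objects.
const products = [
  { _id: "1", name: "Keyboard", price: 49 },
  { _id: "2", name: "Mouse", price: 19 },
];

// Minimal REST endpoint: documents are sent as JSON with no model mapping.
function createApiServer(collection) {
  return http.createServer((req, res) => {
    if (req.method === "GET" && req.url === "/api/products") {
      res.writeHead(200, { "Content-Type": "application/json" });
      res.end(JSON.stringify(collection));
      return;
    }
    res.writeHead(404, { "Content-Type": "application/json" });
    res.end(JSON.stringify({ error: "not found" }));
  });
}

// Usage: listen on a free local port, fetch the list once, then shut down.
const server = createApiServer(products);
server.listen(0, "127.0.0.1", () => {
  const { port } = server.address();
  const options = { host: "127.0.0.1", port, path: "/api/products", agent: false };
  http.get(options, (res) => {
    let body = "";
    res.setEncoding("utf8");
    res.on("data", (chunk) => (body += chunk));
    res.on("end", () => {
      console.log(JSON.parse(body)); // the same objects the "database" holds
      server.close();
    });
  });
});
```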

QUEUED INPUTS

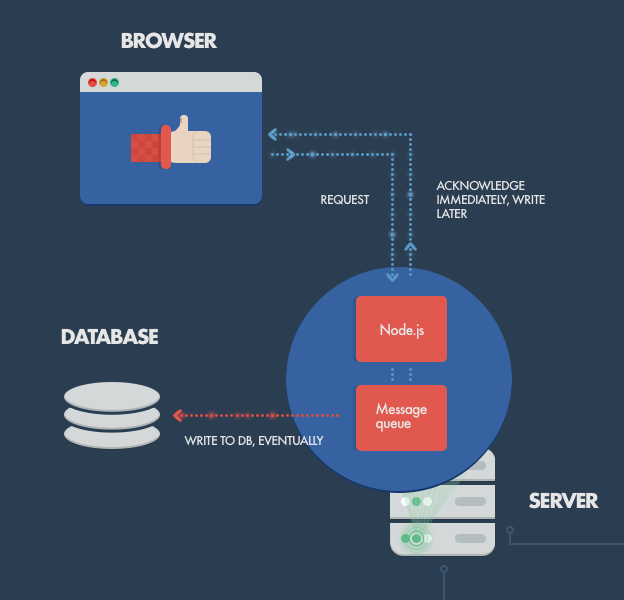

If you receive large amounts of concurrent data, the database can become a bottleneck. As shown above, Node.js easily handles the concurrent connections themselves. But because database access is a blocking operation (in this case), we run into trouble. The solution is to acknowledge the client's request before the data is actually written to the database.

With this approach, the system stays responsive under heavy load, which is especially useful when the client does not need firm confirmation that the write succeeded. Typical examples include logging or recording user-tracking data, which is processed in batches and not used until later, and operations whose results do not need to be reflected instantly (for example, updating the number of likes on Facebook), where eventual consistency, so common in the NoSQL world, is acceptable.

The data is queued through some caching or message-queuing infrastructure (for example, RabbitMQ or ZeroMQ) and consumed by a separate database batch-write process or by back-end services for compute-intensive processing, written on a platform better suited to such tasks. Similar behavior can be implemented with other languages and frameworks, but not on the same hardware with the same high, sustained throughput.

In short, with Node you can push database writes off to the side and deal with them later, proceeding as if they had already succeeded.
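A minimal in-process sketch of this write-behind idea follows. It assumes an in-memory array is acceptable as a queue for illustration only; in the setup described above the queue would live in RabbitMQ or ZeroMQ and the flush would run in a separate process, since an in-memory queue loses its contents if Node crashes. `WriteBehindQueue`, `enqueue`, and `flush` are hypothetical names.

```javascript
// Hypothetical write-behind queue: requests are acknowledged immediately,
// and the actual database writes happen later, in batches.
class WriteBehindQueue {
  constructor(writeBatch, batchSize = 100) {
    this.writeBatch = writeBatch; // async function that persists an array
    this.batchSize = batchSize;
    this.pending = [];
  }

  // Called from the request handler: cheap, never waits for the database.
  enqueue(record) {
    this.pending.push(record);
    return { accepted: true, queued: this.pending.length };
  }

  // Called periodically (e.g. from a timer) or by a separate worker.
  async flush() {
    let written = 0;
    while (this.pending.length > 0) {
      const batch = this.pending.splice(0, this.batchSize);
      await this.writeBatch(batch);
      written += batch.length;
    }
    return written;
  }
}

// Usage with a stand-in "database" and batches of 2.
const db = [];
const queue = new WriteBehindQueue(async (batch) => {
  db.push(...batch); // a real writer would do one bulk insert here
}, 2);

for (let i = 1; i <= 5; i++) queue.enqueue({ event: "like", postId: i });
console.log(db.length); // 0: clients were answered, nothing written yet
queue.flush().then((n) => console.log(n, db.length)); // 5 5
```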

DATA STREAMING

On more traditional web platforms, HTTP requests and responses are treated as isolated events, but in fact they are streams. Node.js can exploit this to build some interesting features. For example, you can process files while they are still being uploaded, since the data arrives as a stream and can be processed on the fly. This is useful for real-time audio or video encoding, and for proxying between different data sources (see the next section).
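Chunk-by-chunk processing can be sketched with the built-in `node:stream` module. `processUpload` and `createUppercaseCounter` are hypothetical names, the uppercasing is a placeholder for real work such as encoding, and an in-memory `Readable` simulates the upload; in a Node.js HTTP server the request object is itself a readable stream that could be passed in instead.

```javascript
const { Readable, Transform, Writable } = require("node:stream");
const { pipeline } = require("node:stream/promises");

// Processes data chunk by chunk as it arrives, instead of waiting for the
// whole upload. Here the "processing" is counting bytes and uppercasing.
function createUppercaseCounter(stats) {
  return new Transform({
    transform(chunk, _encoding, callback) {
      stats.bytes += chunk.length;
      stats.chunks += 1;
      // Assumes no chunk splits a multi-byte UTF-8 character (true here).
      callback(null, chunk.toString("utf8").toUpperCase());
    },
  });
}

async function processUpload(source) {
  const stats = { bytes: 0, chunks: 0 };
  const output = [];
  await pipeline(
    source,
    createUppercaseCounter(stats),
    new Writable({
      write(chunk, _encoding, callback) {
        output.push(chunk.toString("utf8"));
        callback();
      },
    })
  );
  return { stats, text: output.join("") };
}

// Simulated upload arriving in three pieces.
const upload = Readable.from(["first part, ", "second part, ", "last part"]);
processUpload(upload).then(({ stats, text }) => console.log(stats, text));
```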

PROXY

Node.js is easily used as a server-side proxy, where it can handle a large number of simultaneous connections in a non-blocking manner. This is especially useful when proxying services with different response times, or when collecting data from multiple sources.

For example, consider a server application that communicates with third-party resources, pulls in data from various sources, or stores assets such as images and video in third-party cloud services.

Although dedicated proxy servers exist, using Node instead can be convenient if you have no proxy infrastructure or need a solution for local development. By this I mean that you could build a client application with a Node.js development server that serves assets and proxies or stubs API requests, while in production such interactions would be handled by a dedicated proxy service (nginx, HAProxy, etc.).
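For the local-development case, a forwarding proxy fits in a few lines of built-in `node:http`. This is a sketch, not a replacement for nginx or HAProxy: `createProxy` is a hypothetical helper that forwards everything to a single upstream and ignores hop-by-hop headers, timeouts, and TLS.

```javascript
const http = require("node:http");

// Forwards every incoming request to one upstream service and streams the
// upstream response back, without buffering either body in memory.
function createProxy(upstreamHost, upstreamPort) {
  return http.createServer((clientReq, clientRes) => {
    const upstreamReq = http.request(
      {
        host: upstreamHost,
        port: upstreamPort,
        method: clientReq.method,
        path: clientReq.url,
        headers: clientReq.headers,
        agent: false,
      },
      (upstreamRes) => {
        clientRes.writeHead(upstreamRes.statusCode, upstreamRes.headers);
        upstreamRes.pipe(clientRes);
      }
    );
    upstreamReq.on("error", () => {
      if (!clientRes.headersSent) clientRes.writeHead(502);
      clientRes.end("bad gateway");
    });
    // Stream the request body (empty for GET) to the upstream service.
    clientReq.pipe(upstreamReq);
  });
}

// Usage: a toy upstream service and a proxy in front of it, on random ports.
const upstream = http.createServer((req, res) => res.end(`upstream saw ${req.url}`));
upstream.listen(0, "127.0.0.1", () => {
  const proxy = createProxy("127.0.0.1", upstream.address().port);
  proxy.listen(0, "127.0.0.1", () => {
    const url = `http://127.0.0.1:${proxy.address().port}/api/items`;
    http.get(url, { agent: false }, (res) => {
      let body = "";
      res.on("data", (c) => (body += c));
      res.on("end", () => {
        console.log(res.statusCode, body); // 200 upstream saw /api/items
        proxy.close();
        upstream.close();
      });
    });
  });
});
```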

STOCK TRADER'S DASHBOARD

Let's go back to the application level. Another area where desktop software dominates, but could easily be replaced by a real-time web solution, is brokers' trading software, used to track stock prices, perform calculations and technical analysis, and draw graphs and charts.

Switching to a real-time web solution would let brokers easily change workstations or workplaces. Soon we may start seeing them on the beaches of Florida... Ibiza... Bali.

APPLICATION MONITORING DASHBOARD

This is another common case for which the "Node + websockets" model is ideal: tracking site visitors and visualizing their interactions in real time (if this idea interests you, it is already being productized by Hummingbird).

You can collect real-time statistics about your users, or go a step further by introducing targeted interactions with visitors, opening a communication channel as soon as they reach a specific point in your funnel (if this idea interests you, it is already being productized by CANDDi).

Imagine how you could improve your business if you knew in real time what your users were doing and could visualize their interactions. Two-way, real-time Node.js sockets make this possible.

SYSTEM MONITORING DASHBOARD

Now let's turn to the infrastructure side. Suppose a SaaS provider wants to offer its users a service-monitoring page (say, like the GitHub status page). With the Node.js event loop, you can build a powerful web-based dashboard that checks service statuses asynchronously and pushes the data to clients over websockets.
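As a hedged sketch of the asynchronous status checks, the hypothetical `StatusMonitor` class below runs every check concurrently and emits one snapshot per round; `withTimeout` is likewise a hypothetical helper. The check functions are placeholders (a real one might issue an HTTP request to the service), and forwarding the `status` event to browsers over websockets is left out.

```javascript
const { EventEmitter } = require("node:events");

// Hypothetical status monitor: runs all service checks concurrently and
// emits one "status" event per round, which a websocket layer could push
// to every connected dashboard.
class StatusMonitor extends EventEmitter {
  constructor(checks, timeoutMs = 1000) {
    super();
    this.checks = checks; // { serviceName: async () => void } (rejects = down)
    this.timeoutMs = timeoutMs;
  }

  async checkOnce() {
    const names = Object.keys(this.checks);
    const results = await Promise.allSettled(
      names.map((name) => withTimeout(this.checks[name](), this.timeoutMs))
    );
    const snapshot = {};
    names.forEach((name, i) => {
      snapshot[name] = results[i].status === "fulfilled" ? "up" : "down";
    });
    this.emit("status", snapshot);
    return snapshot;
  }
}

// Rejects if the check takes longer than `ms` (a hung service counts as down).
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error("timeout")), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

const monitor = new StatusMonitor({
  api: async () => {},                                        // healthy
  db: async () => { throw new Error("connection refused"); }, // failing
  search: () => new Promise(() => {}),                        // never answers
}, 200);
monitor.on("status", (s) => console.log(s)); // { api: 'up', db: 'down', search: 'down' }
monitor.checkOnce();
```

In a real dashboard, `checkOnce` would run on a timer (for example with `setInterval`) and each `status` event would be forwarded to connected browsers; the sketch runs a single round so that it exits on its own.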

With this technology, the status of both internal (intra-company) and public services can be reported live, in real time. Take the idea a little further and imagine a network operations center (NOC) monitoring the applications of a telecom operator, a cloud or hosting provider, or a financial institution, all running on the open web stack backed by Node.js and websockets rather than Java and/or Java applets.

Note: Do not try to build hard real-time systems in Node (that is, systems requiring consistent, well-defined response times). Erlang is probably a better choice for that class of application.

Where Node.js can be used

SERVER-SIDE WEB APPLICATIONS

Node.js with Express.js can also be used to build classic server-side web applications. However, although this is possible, the request/response paradigm in which Node.js serves rendered HTML is not the most typical use case for this technology. There are arguments both for and against this approach. Consider the following:

Pros:

[*] As an alternative for CPU-intensive computations, you can build a highly scalable MQ-backed environment with back-end processing, so that Node stays at the front and handles client requests asynchronously.

When should Node.js not be used?

SERVER-SIDE WEB APPLICATION WITH A RELATIONAL DATABASE BEHIND IT

Comparing Node.js with Express.js against Ruby on Rails, we confidently choose the latter when it comes to relational data access.

Relational database tools for Node.js are still in their infancy and rather unpleasant to work with. Rails, on the other hand, automagically sets up data access right out of the box, along with tools for database schema migrations and other Gems. Rails and similar frameworks have mature, proven data access layer implementations (Active Record or Data Mapper), which you will sorely miss if you try to reproduce them in pure JavaScript. [*]

Still, if you are firmly committed to doing everything in JavaScript, keep an eye on Sequelize and Node ORM2. Both are still rough around the edges, but they may mature over time.

[*] It is possible, and not uncommon, to use Node solely as the front end while keeping a Rails back end with its easy access to the relational database.

HEAVY SERVER-SIDE COMPUTATION AND PROCESSING

When it comes to heavy computation, Node.js leaves much to be desired. You certainly do not want to build a Fibonacci computation server in Node. In general, any CPU-intensive operation cancels out the throughput gains that Node achieves with event-driven, non-blocking I/O, because all incoming requests are blocked while the single thread is busy crunching numbers.

As mentioned above, Node.js is single-threaded and uses only one CPU core. For adding concurrency on a multi-core server, the Node core team has been working on a cluster module. You can also quite easily run several Node.js server instances behind a reverse proxy such as nginx.

Even with clustering, you should still offload all heavy computation to background processes running in a more suitable environment, and have them communicate through a message queue server such as RabbitMQ.
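A separate process fed by RabbitMQ is beyond a short example, so the sketch below swaps in Node's built-in `worker_threads` module as a simpler, in-process stand-in for a background process: the Fibonacci calculation, the text's own example of CPU-heavy work, runs on a background thread while the main event loop stays free. `computeInBackground` and `workerSource` are hypothetical names.

```javascript
const { Worker } = require("node:worker_threads");

// Code for the background worker. It runs on its own thread, so the
// CPU-bound recursion below does not block the main event loop.
const workerSource = `
  const { parentPort, workerData } = require("node:worker_threads");
  function fib(n) { return n < 2 ? n : fib(n - 1) + fib(n - 2); }
  parentPort.postMessage(fib(workerData));
`;

// Hands one job to a background worker and resolves with its result.
function computeInBackground(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: n });
    worker.once("message", resolve);
    worker.once("error", reject);
    worker.once("exit", (code) => {
      if (code !== 0) reject(new Error(`worker exited with code ${code}`));
    });
  });
}

computeInBackground(30).then((result) => {
  console.log("fib(30) =", result); // fib(30) = 832040
});
// Printed first: the main thread did not wait for the calculation.
console.log("main thread is free to handle other requests");
```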

Although your background processing may initially run on the same server, this approach has the potential for very high scalability. The background processing services can easily be moved to separate worker servers without having to reconfigure the load on the front-facing web servers.

Of course, the same approach works on other platforms as well, but with Node.js you also get the high request throughput mentioned above, since each request is a small task handled very quickly and efficiently.

Conclusion

We have discussed Node.js from theory to practice, starting with its goals and purpose and ending with its sweet spots and pitfalls. When people run into problems with Node.js, the cause almost always comes down to one fact: blocking operations are the root of all evil. In 99% of cases, problems stem from misusing Node.

Remember: Node.js was never meant to solve the problem of scaling computation. It was created to scale I/O, which it does very well.

Why use Node.js? If your use case involves neither CPU-intensive operations nor access to blocking resources, you can take full advantage of Node.js and enjoy fast, easily scalable web applications.

We plan to once again be noted on the field of unfading Node.js and are considering the possibility of publishing this book:

')

Since the reader's interest is quite understandable, “how did he shove it all into two hundred pages, and why do I need it?” under the cut, we offer the translation of a thorough article by Tomislav Kapan on why Node.js is really needed.

Introduction

Thanks to the growing popularity, the JavaScript language is actively developing nowadays, and modern web development has dramatically changed from the recent past. Those things that we can do today on the Web with JavaScript, running on the server, as well as in the browser, it was difficult to imagine a few years ago - at best, such opportunities existed only in sandboxes like Flash or Java Applets.

Before talking in detail about Node.js, you can read about the benefits of using full-window JavaScript . At the same time, the language and data format (JSON) are closely intertwined, which allows for optimal reuse of development resources. Since this advantage is inherent not so much in Node.js, as JavaScript as a whole, we will not dwell on this topic in detail. But therein lies the key advantage that you gain by incorporating the Node into your stack.

Wikipedia reads: “Node.js is a package that includes Google’s JavaScript V8 engine, the platform’s level of abstraction is the libuv library, and the core library, which is itself written in JavaScript.” In addition, it should be noted that Ryan Dahl, the author of Node .js, wanted to create real-time, push-enabled sites, inspired by apps like Gmail. ” In Node.js, he provided programmers with a tool for working with the non-blocking, event-oriented I / O paradigm.

After 20 years of the domination of the paradigm of “stateless stateless web applications based on request-response stateless communication”, we finally have real-time bidirectional communication applications.

In short: Node.js shines in real-time applications, as it uses push technology via web sockets . What is so revolutionary about this? Well, as already mentioned, after 20 years of using the aforementioned paradigm, such bidirectional applications have appeared, where a connection can initiate both a client and a server, and then proceed to the free exchange of data. Such technology contrasts sharply with the typical paradigm of web responses, where the client always initiates communication. In addition, the whole technology is based on an open web stack (HTML, CSS and JS), the work goes through the standard port 80.

A reader may argue that we have had all this for more than one year - in the form of Flash and Java applets - but in fact they were just sandboxes that used the Web as a transport protocol for delivering data to the client. In addition, they worked in isolation and often operated through non-standard ports, which could require additional access rights, etc.

For all its merits, Node.js currently plays a key role in the technological stack of many prominent companies that directly depend on the unique properties of Node.

In this article, we’ll talk not only about how these benefits are achieved, but also why you might prefer Node.js - or opt out - taking as an example a few classic models of web applications.

How it works?

The main idea of Node.js is to use non-blocking event-oriented I / O to remain lightweight and efficient when handling applications that process large amounts of real-time data and operate on distributed devices.

Spacious.

In essence, this means that Node.js is not a platform for all occasions that will dominate the world of web development. On the contrary, it is a platform for solving strictly defined tasks . To understand this is absolutely necessary. Of course, you should not use Node.js for operations that intensively load the processor, moreover, the use of Node.js in heavy computations virtually nullifies all its advantages. Node.js is really good for creating fast, scalable network applications, because it allows you to simultaneously handle a huge number of high bandwidth connections, which is equivalent to high scalability.

The subtleties of the work of Node.js "under the hood" are quite interesting. Compared to traditional web services, where each connection (request) generates a new thread, loading the system’s RAM and, finally, sorting out this memory without a trace, Node.js is much more economical: it works in a single stream, it uses non-blocking I / O allows you to support tens of thousands of competitive connections (which exist in the event loop ).

Simple calculation: let's say each stream can potentially request 2 MB of memory and works in a system with 8 GB of RAM. In this case, we can theoretically expect a maximum of 4,000 concurrent connections, plus the cost of context switching between threads . It is with this scenario that one has to deal with using traditional web services. By avoiding all of this, Node.js can scale to more than a million competitive connections (as an experiment to validate the concept ).

Of course, the question arises about the separation of a single stream between all client requests, this is the main "trap" when writing applications using Node.js. First, complex calculations can clog a single Node.js thread, which is fraught with problems for all clients (more on this below), since incoming requests will be blocked until the requested calculation is completed. Secondly, developers should be very careful and not allow exceptions to pop up to the base (topmost) Node.js event cycle, because otherwise the Node.js instance will end (in fact, the entire program will crash).

To avoid exceptions that pop up to the surface itself, the following technique is used: errors are sent back to the caller as callback parameters (and not thrown away, as in other environments). In case any unhandled exception skips and pops up, there are many paradigms and tools that allow you to monitor the Node process and perform the necessary restoration of an abnormally terminated instance (although user sessions cannot be restored at the same time). The most common of these are the Forever module , or work using external system tools upstart and monit .

NPM: Package Manager Node

When discussing Node.js, you just need to mention the built-in support for package management that exists in it, for which the NPM tool is used, which by default is present in any Node.js installation. The idea behind NPM modules is in many ways similar to Ruby Gems: it’s a set of reusable, publicly available components that are easy to install via an online repository; they support version and dependency management.

A complete list of packaged modules is available on the NPM site npmjs.org , and is also available using the NPM CLI tool, which is automatically installed along with Node.js. The module ecosystem is completely open, anyone can publish their own module in it, which will appear in the list of NPM repository. A brief introduction to NPM (a bit oldish, but still relevant) is on howtonode.org/introduction-to-npm .

Some of the most popular modern NPM modules:

- express : Express.js, a web development framework for Node.js, written in the spirit of Sinatra, is the de facto standard for most Node.js applications that exist today.

- connect : Connect is an extensible framework that works with Node.js as an HTTP server, providing a collection of high-performance "plug-ins", collectively known as middleware; serves as the basis for express.

- socket.io and sockjs are the back end of the two most common web socket components today.

- Jade is one of the most popular template engines written in the spirit of HAML, the default is used in Express.js.

- mongo and mongojs are MongoDB wrappers providing APIs for MongoDB object databases in Node.js.

- redis is the Redis client library.

- coffee-script is a CoffeeScript compiler that allows developers to write programs with Node.js using Coffee.

- underscore ( lodash , lazy ) - The most popular JavaScript helper library, packaged for use with Node.js, as well as two similar libraries that provide enhanced performance , because they are implemented a little differently.

- forever - Probably the most common utility that provides uninterrupted script execution on a given node. Maintains the performance of your Node.js process in case of any unexpected failures.

The list goes on. There are many generally available useful packages, it is simply impossible to list them all here.

When should Node.js be used?

CHAT

Chat is the most typical multi-user real-time application. From IRC (there were times) using a variety of open and open protocols that function through non-standard ports, we have come to modern times when everything can be implemented on Node.js using web sockets operating through standard port 80.

Chat software is really the ideal product for using Node.js: it is a lightweight, high-traffic application that intensively processes data (but does not consume any computing power) and runs on many distributed devices. In addition, it is very convenient to learn, as with all its simplicity it covers most of the paradigms that you might need to use in a typical Node.js application.

Let's try to depict how it works.

In the simplest case, we have the only chat room on the site where users come and exchange messages in the “one to many” mode (in fact, “to all”). Suppose we have three visitors on our site, and they all can write messages on our forum.

On the server side, we have a simple Express.js application that implements two things: 1) the GET '/' request handler, which serves the web page that hosts both the message forum and the 'Send' button, which initializes the new message entered and 2) a web socket server listening to new messages issued by web socket clients.

On the client side, we have an HTML page that has two handlers configured: one of them listens for click events on the 'Send' button, which picks up the entered message and drops it onto the web socket, and the other listens for new incoming messages on the client for maintenance Web sockets (i.e., messages sent by other clients that the server is about to display using this special client).

This is what happens when one of the clients sends a message:

- The browser picks up a click on the 'Send' button using a JavaScript handler, retrieves the value from the input field (ie, the message text) and issues a web socket message using the web socket client connected to our server (this client is initialized together with a web page).

- The server component of the web socket connection receives the message and forwards it to all other connected clients by the broadcast method.

- All clients receive a push message using a web socket component running on a web page. Then they pick up the content of the message and update the web page, adding a new entry to the forum.

This is the simplest example . For a more reliable solution, you can use a simple cache based on Redis storage. An even more advanced solution is a message queue that allows you to process message routing to clients and provide a more reliable delivery mechanism that can compensate for temporary connection breaks or store messages for registered clients while they are offline. But no matter what optimizations you perform, Node.js will still act according to the same basic principles: react to events, handle many competitive connections, and maintain smooth user interactions.

API OVER THE OBJECT DATABASE

Although Node.js is especially good in the context of real-time applications, it is also quite suitable for providing information from object databases (for example, MongoDB). Data stored in the JSON format allows Node.js to function without loss of compliance and without data conversion.

For example, with Rails you would have to convert JSON into binary models and then expose them again as JSON over HTTP when the data is consumed by Backbone.js, Angular.js, or even plain jQuery AJAX calls. With Node.js, you can simply expose your JSON objects through a REST API for the client to consume. In addition, if you use MongoDB, you do not have to convert between JSON and anything else when reading from and writing to the database. In short, you avoid multiple conversions by using one uniform data serialization format on the client, on the server, and in the database.
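To make the "no conversion layer" point concrete, here is a minimal sketch using only Node's built-in `http` module (Express would shorten the routing). An in-memory array stands in for a MongoDB collection, which is an assumption for illustration; the `/api/posts` route and the `handleRequest` helper are hypothetical.

```javascript
const http = require("node:http");

// Stand-in for documents read from an object database such as MongoDB (assumed shape).
const posts = [
  { id: 1, title: "Hello", tags: ["intro"] },
  { id: 2, title: "Streams", tags: ["node", "io"] },
];

// Pure routing function returning { status, body }, so it can be tested without a socket.
function handleRequest(method, url) {
  const { pathname } = new URL(url, "http://localhost");
  if (method === "GET" && pathname === "/api/posts") {
    return { status: 200, body: posts }; // documents go out as-is, no model mapping
  }
  const match = pathname.match(/^\/api\/posts\/(\d+)$/);
  if (method === "GET" && match) {
    const post = posts.find((p) => p.id === Number(match[1]));
    return post ? { status: 200, body: post } : { status: 404, body: { error: "not found" } };
  }
  return { status: 404, body: { error: "not found" } };
}

// Wire the function into an HTTP server; JSON.stringify is the only "conversion" step.
const server = http.createServer((req, res) => {
  const { status, body } = handleRequest(req.method, req.url);
  res.writeHead(status, { "content-type": "application/json" });
  res.end(JSON.stringify(body));
});

// server.listen(3000); // uncomment to serve on a port of your choice
```

Separating the routing function from the server wiring is a design choice for testability; the point of the example is that the stored objects and the HTTP response share one format.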

QUEUED INPUT

If you receive large amounts of concurrent data, the database can become a bottleneck. As shown above, Node.js easily handles the concurrent connections themselves. But because database access is a blocking operation (in this case), we run into trouble. The solution is to acknowledge the client's request before the data is actually written to the database.

With this approach, the system stays responsive under heavy load, which is especially useful when the client does not need a firm confirmation that the write succeeded. Typical examples: logging or writing user-tracking data, which is processed in batches and not used until later; and operations whose result does not need to be reflected instantly (for example, updating the number of likes on Facebook), where eventual consistency, so common in the NoSQL world, is acceptable.

The data is queued through some kind of caching or message-queuing infrastructure (for example, RabbitMQ or ZeroMQ) and digested by a separate database batch-write process, or by compute-intensive backend services written on a platform better suited to such tasks. Similar behavior can be implemented with other languages/frameworks, but not on the same hardware with the same high, sustained throughput.

In short, with Node you can push database writes off to the side and deal with them later, proceeding as if they had succeeded.
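The "acknowledge now, write later" pattern can be sketched in a few lines. This is a minimal in-process version, assuming a hypothetical `writeBatch` function that performs one bulk insert; the in-memory buffer stands in for RabbitMQ or ZeroMQ, so unlike a real broker it loses queued items if the process crashes. `WriteBehindQueue` is a hypothetical name.

```javascript
// Hypothetical write-behind buffer: accept writes instantly, persist them in batches.
class WriteBehindQueue {
  constructor(writeBatch, { maxBatch = 100, onError = console.error } = {}) {
    this.writeBatch = writeBatch; // async (items[]) => void, e.g. one bulk DB insert
    this.maxBatch = maxBatch;
    this.onError = onError;
    this.buffer = [];
    this.flushing = null; // promise of the flush in progress, if any
  }

  // Called from the request handler: never waits on the database.
  push(item) {
    this.buffer.push(item);
    if (this.buffer.length >= this.maxBatch) {
      // A failed batch is reported, not retried, in this sketch.
      this.flush().catch(this.onError);
    }
    return true; // the client can be acknowledged immediately
  }

  // Drain the buffer in chunks of maxBatch; concurrent callers share one flush.
  flush() {
    if (!this.flushing) {
      this.flushing = (async () => {
        while (this.buffer.length > 0) {
          const batch = this.buffer.splice(0, this.maxBatch);
          await this.writeBatch(batch);
        }
      })().finally(() => {
        this.flushing = null;
      });
    }
    return this.flushing;
  }
}

// Usage: seven "like" events with a batch size of three.
const written = [];
const queue = new WriteBehindQueue(async (batch) => {
  written.push(batch.length);
}, { maxBatch: 3 });
for (let i = 0; i < 7; i++) queue.push({ event: "like", postId: i });
// Call queue.flush() on a timer or at shutdown to drain whatever remains.
```

Items pushed while a flush is already running are picked up by the same loop, so the request path only ever appends to an array.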

DATA STREAMING

On more traditional web platforms, HTTP requests and responses are treated as isolated events, but in fact they are streams. Node.js can take advantage of this to build some cool features. For example, you can process files while they are still being uploaded, because the data arrives as a stream and can be handled on the fly. This is useful for real-time audio or video encoding, and for proxying between different data sources (see the next section).
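Treating a request as a stream means you can act on each chunk as it arrives instead of buffering the whole upload. This is a minimal sketch with Node's built-in `stream` and `crypto` modules: `hashingCounter` and `processUpload` are hypothetical helpers that compute a SHA-256 digest and a byte count on the fly. In a real server the source would be the incoming `req` and the destination a file or an encoder, rather than the in-memory stand-ins used here.

```javascript
const { Readable, Transform, Writable } = require("node:stream");
const { pipeline } = require("node:stream/promises");
const crypto = require("node:crypto");

// Pass-through stage that inspects each chunk as it flows by, never holding the whole body.
function hashingCounter() {
  const hash = crypto.createHash("sha256");
  const stats = { bytes: 0, digest: null };
  const stage = new Transform({
    transform(chunk, _encoding, callback) {
      stats.bytes += chunk.length;
      hash.update(chunk);
      callback(null, chunk); // forward the chunk unchanged to the next stage
    },
    flush(callback) {
      stats.digest = hash.digest("hex"); // available once the upload has ended
      callback();
    },
  });
  return { stage, stats };
}

// In an HTTP handler `source` would be `req`; `sink` could be a file write stream.
async function processUpload(source, sink) {
  const { stage, stats } = hashingCounter();
  await pipeline(source, stage, sink);
  return stats;
}

// Usage with in-memory stand-ins for the upload and the destination.
const chunksSeen = [];
const sink = new Writable({
  write(chunk, _encoding, callback) {
    chunksSeen.push(chunk.toString());
    callback();
  },
});
const done = processUpload(Readable.from([Buffer.from("hello "), Buffer.from("world")]), sink);
```

`pipeline` also propagates errors and destroys all stages if any of them fails, which is why it is preferred over chaining `.pipe()` calls by hand.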

PROXY

Node.js can be used as a server proxy, and in this case it can handle a large number of simultaneous connections in non-blocking mode. This is especially useful when mediating between different services, which have different response times, or when collecting data from multiple sources.

For example, consider a server application that communicates with third-party resources, pulls in data from various sources, or stores assets such as images and video in third-party cloud services.
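When one request fans out to several sources with different response times, Node can wait on all of them concurrently and still answer on time. This is a minimal sketch, assuming each source is an async function; `withTimeout` and `gatherFromSources` are hypothetical helpers, and the fake sources simulate latency with timers instead of real network calls.

```javascript
// Reject if `promise` has not settled within `ms` milliseconds.
function withTimeout(promise, ms, label) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`${label} timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Query every source concurrently; a slow or failing source only costs its own slot.
async function gatherFromSources(sources, ms) {
  const names = Object.keys(sources);
  const results = await Promise.allSettled(
    names.map((name) => withTimeout(sources[name](), ms, name))
  );
  const combined = {};
  results.forEach((result, i) => {
    combined[names[i]] =
      result.status === "fulfilled"
        ? { ok: true, data: result.value }
        : { ok: false, error: result.reason.message };
  });
  return combined;
}

// Simulated upstreams with different latencies (hypothetical, for illustration only).
const delay = (ms, value) => new Promise((resolve) => setTimeout(() => resolve(value), ms));
const sources = {
  weather: () => delay(20, { tempC: 21 }),
  news: () => delay(500, ["slow headline"]),
  prices: () => Promise.reject(new Error("upstream returned 503")),
};
```

The total wait is bounded by the timeout rather than by the slowest source, and the caller gets a partial result instead of a failed request.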

Although dedicated proxy servers exist, Node can be a convenient substitute, especially if no proxy infrastructure is in place or you need a solution for local development. By this I mean you might build a client application with a Node.js development server that serves assets and proxies or stubs API requests, while in production those interactions would be handled by a dedicated proxy service (nginx, HAProxy, etc.).
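For local development, such a proxy can be a few lines on top of Node's built-in `http` module. This is a minimal sketch, assuming the real API runs at an upstream URL you pass in and that stubbed endpoints are keyed by exact path. `createDevProxy` and the stub map are hypothetical, and the sketch has no TLS, header rewriting, or load balancing, which is what nginx or HAProxy would add in production.

```javascript
const http = require("node:http");

// Dev-only proxy: serve canned JSON for stubbed paths, stream everything else upstream.
function createDevProxy(target, stubs = {}) {
  const upstream = new URL(target);
  return http.createServer((req, res) => {
    if (Object.prototype.hasOwnProperty.call(stubs, req.url)) {
      res.writeHead(200, { "content-type": "application/json" });
      res.end(JSON.stringify(stubs[req.url]));
      return;
    }
    const proxyReq = http.request(
      {
        hostname: upstream.hostname,
        port: upstream.port,
        path: req.url,
        method: req.method,
        headers: { ...req.headers, host: upstream.host },
      },
      (proxyRes) => {
        // Relay status and headers, then stream the body back without buffering it.
        res.writeHead(proxyRes.statusCode, proxyRes.headers);
        proxyRes.pipe(res);
      }
    );
    proxyReq.on("error", (err) => {
      if (res.headersSent) return res.destroy(err);
      res.writeHead(502, { "content-type": "text/plain" });
      res.end(`Bad gateway: ${err.message}`);
    });
    req.pipe(proxyReq); // forward the request body (if any) as it arrives
  });
}

// Example: createDevProxy("http://127.0.0.1:4000", { "/api/me": { id: 1 } }).listen(8080);
```

Because both directions are piped, large responses pass through without being held in memory, and one Node process can keep many such slow upstream calls open at once.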

STOCK TRADER'S DASHBOARD

Let's go back to the application level. Another area where desktop software dominates, but could easily be replaced by a real-time web solution, is brokers' trading software, which tracks stock quotes, performs calculations and technical analysis, and draws graphs and charts.
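The calculations and technical analysis fit the same event-driven model: each incoming quote updates an indicator incrementally, and the result is pushed to subscribers. This is a minimal sketch using Node's built-in `EventEmitter`; the simple moving average, the `QuoteFeed` class, and its `sma` event are illustrative assumptions, not a specific trading API.

```javascript
const { EventEmitter } = require("node:events");

// Hypothetical feed: keeps a rolling window per symbol and emits an updated
// simple moving average (SMA) each time a new quote arrives.
class QuoteFeed extends EventEmitter {
  constructor(windowSize) {
    super();
    this.windowSize = windowSize;
    this.windows = new Map(); // symbol -> { prices: number[], sum: number }
  }

  onQuote(symbol, price) {
    let w = this.windows.get(symbol);
    if (!w) {
      w = { prices: [], sum: 0 };
      this.windows.set(symbol, w);
    }
    w.prices.push(price);
    w.sum += price; // running sum avoids re-adding the whole window on every quote
    if (w.prices.length > this.windowSize) w.sum -= w.prices.shift();
    // Emit only once the window is full.
    if (w.prices.length === this.windowSize) {
      this.emit("sma", { symbol, price, sma: w.sum / this.windowSize });
    }
  }
}

// Usage: a 3-quote window; in a real app the listener would push to browsers over WebSockets.
const feed = new QuoteFeed(3);
const updates = [];
feed.on("sma", (u) => updates.push(u));
[10, 11, 12, 13].forEach((p) => feed.onQuote("ACME", p));
```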

Switching to a real-time web-based solution would let brokers easily move between workstations or workplaces. Soon we may start seeing them on the beaches of Florida ... Ibiza ... Bali.

APPLICATION MONITORING DASHBOARD

This is another use case for which the “Node + WebSockets” model is ideal: tracking site visitors and visualizing their interactions in real time (if this idea interests you, Hummingbird already implements it).

You can collect visitor statistics in real time and even go a step further by introducing targeted interactions with your visitors, opening a communication channel as soon as a visitor reaches a specific point in your funnel (if this idea interests you, CANDDi already implements it).
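Opening a channel when a visitor reaches a certain point in the funnel can be sketched as a small per-visitor state machine. Everything here is a hypothetical illustration: the step names, the `FunnelTracker` class, and its `reached` event are assumptions, not the API of Hummingbird, CANDDi, or any analytics product.

```javascript
const { EventEmitter } = require("node:events");

// Tracks each visitor's progress through an ordered funnel and emits "reached"
// the first time a visitor arrives at the target step.
class FunnelTracker extends EventEmitter {
  constructor(steps, targetStep) {
    super();
    this.steps = steps;
    this.targetIndex = steps.indexOf(targetStep);
    this.progress = new Map(); // visitorId -> index of the furthest step reached
  }

  track(visitorId, step) {
    const index = this.steps.indexOf(step);
    if (index === -1) return; // page view outside the funnel
    const previous = this.progress.has(visitorId) ? this.progress.get(visitorId) : -1;
    // Only advance when the visitor hits the next step in order; repeats and skips are ignored.
    if (index !== previous + 1) return;
    this.progress.set(visitorId, index);
    if (index === this.targetIndex) {
      this.emit("reached", { visitorId, step });
    }
  }
}

// Usage: open a chat (e.g. over the visitor's WebSocket) when they reach "pricing".
const funnel = new FunnelTracker(["landing", "pricing", "signup"], "pricing");
const opened = [];
funnel.on("reached", ({ visitorId }) => opened.push(visitorId));
funnel.track("v1", "landing");
funnel.track("v2", "pricing"); // v2 skipped "landing", so this is not counted
funnel.track("v1", "pricing"); // v1 reaches the target step
```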

Imagine how you could improve your business if you knew in real time what your visitors are doing and could visualize their interactions. Node.js with two-way WebSocket connections gives you exactly this opportunity.

SYSTEM MONITORING DASHBOARD

Now let's look at the infrastructure side. Suppose a SaaS provider wants to offer users a service-status page (say, the GitHub status page). With the Node.js event loop, you can build a powerful web-based dashboard that checks service states asynchronously in real time and pushes the data to clients over WebSockets.
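Such a status page boils down to running health checks concurrently on a timer and pushing only the changes to connected browsers. This is a minimal sketch under stated assumptions: each check is an async function that resolves when the service is healthy and rejects otherwise, `pollOnce`, `startMonitor`, and `publish` are hypothetical names, and `publish` is where a WebSocket broadcast would go.

```javascript
// Run every health check concurrently and publish only the services whose state changed.
async function pollOnce(checks, lastState, publish) {
  const names = Object.keys(checks);
  const results = await Promise.allSettled(names.map((name) => checks[name]()));
  results.forEach((result, i) => {
    const state = result.status === "fulfilled" ? "up" : "down";
    if (lastState.get(names[i]) !== state) {
      lastState.set(names[i], state);
      publish({ service: names[i], state }); // e.g. broadcast to browsers over WebSockets
    }
  });
}

// Poll on a fixed interval; waiting on checks never blocks other requests.
// Overlapping polls (checks slower than the interval) are not prevented in this sketch.
function startMonitor(checks, publish, intervalMs = 5000) {
  const lastState = new Map();
  const timer = setInterval(() => {
    pollOnce(checks, lastState, publish).catch((err) => console.error("poll failed:", err));
  }, intervalMs);
  return () => clearInterval(timer); // call to stop monitoring
}
```

Publishing only state transitions keeps WebSocket traffic proportional to incidents rather than to the polling frequency.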

This approach lets you report the status of both internal (intra-corporate) and public services in real time. Push the idea a little further and imagine a network operations center (NOC) that monitors the applications of a telecom operator, a cloud or hosting provider, or a financial institution, all running on an open web stack based on Node.js and WebSockets instead of Java and/or Java applets.

Note: Do not try to build hard real-time systems in Node (i.e., systems that require guaranteed response times). Erlang is probably a better choice for such applications.

Where Node.js can be used

SERVER-SIDE WEB APPLICATIONS

Node.js with Express.js can also be used to build classic server-side web applications. However, while it is possible, a request/response paradigm in which Node.js serves rendered HTML is not the most typical use of this technology. There are arguments both for and against this approach. Consider the following:

Pros:

- If your application does not perform CPU-intensive computations, you can write it in JavaScript from top to bottom, even at the database level if you use an object database that stores JSON (for example, MongoDB). This significantly simplifies not only development but also hiring.

- Search engine crawlers receive fully rendered HTML, which is much better for search engine optimization than, for example, a single-page application or a WebSocket application running on Node.js.

Cons:

- Any CPU-intensive computation will block Node.js responsiveness, so a multithreaded platform is a better choice in that case. Alternatively, you can try scaling the computation out [*].

- Using Node.js with a relational database is still rather inconvenient (see below for details). Do yourself a favor and pick another environment, such as Rails, Django, or ASP.NET MVC, if you need relational operations.

[*] An alternative for such CPU-intensive computations is to build a highly scalable MQ-backed environment with back-end processing, so that Node stays in front as a "clerk" that handles client requests asynchronously.

Where Node.js should not be used

SERVER-SIDE WEB APPLICATION BACKED BY A RELATIONAL DATABASE

Comparing Node.js plus Express.js with Ruby on Rails, we confidently choose the second option when it comes to accessing relational data.

Relational database tools for Node.js are still in their infancy, and working with them is rather unpleasant. Rails, on the other hand, sets up data access right out of the box and provides tools for database schema migrations, along with other Gems. Rails and similar frameworks have mature, proven implementations of the data access layer (Active Record or Data Mapper), which you will sorely miss if you try to replicate them in pure JavaScript. [*]

Still, if you are firmly committed to doing everything in JavaScript, take a look at Sequelize and Node ORM2. Both are still somewhat rough around the edges, but they may mature over time.

[*] It is possible, and not uncommon, to use Node solely as the front end while keeping a Rails back end with its easy access to a relational database.

COMPLEX SERVER CALCULATIONS AND PROCESSING

When it comes to heavy computation, Node.js leaves much to be desired. You certainly would not want to build a Node server for Fibonacci calculations. In general, any CPU-intensive operation negates the throughput gains that Node achieves with its event-driven, non-blocking I/O, because all incoming requests are blocked while the single thread is busy crunching numbers.
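The blocking problem, and one way around it inside a single Node process, can be shown with the built-in `worker_threads` module: the CPU-heavy function runs on a separate thread while the main event loop stays free to serve other requests. This is a swapped-in technique for illustration (the article itself recommends clustering and background processes behind a message queue); the naive Fibonacci code and the `fibInWorker` helper are hypothetical examples.

```javascript
const { Worker } = require("node:worker_threads");

// Deliberately slow, CPU-bound code: run on the main thread, it would stall
// every other request until it returned.
const fibSource = `
  const { parentPort, workerData } = require("node:worker_threads");
  function fib(n) { return n < 2 ? n : fib(n - 1) + fib(n - 2); }
  parentPort.postMessage(fib(workerData));
`;

// Run fib(n) on a separate thread and resolve with the result.
function fibInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(fibSource, { eval: true, workerData: n });
    worker.once("message", resolve);
    worker.once("error", reject);
    worker.once("exit", (code) => {
      if (code !== 0) reject(new Error(`worker exited with code ${code}`));
    });
  });
}
```

In a request handler you would `await fibInWorker(n)`. Spawning a thread per request is costly, so under sustained load a worker pool, or the cluster and message-queue approach discussed in this section, is the more realistic design.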

As mentioned above, Node.js is single-threaded and uses only one CPU core. To add concurrency on a multi-core server, you can use the cluster module that the Node core team has already provided for this purpose. You can also easily run several Node.js server instances behind a reverse proxy such as nginx.

Even with clustering, you should still offload all heavy computation to background processes running in an environment better suited to it, and have them communicate through a message queue server such as RabbitMQ.

Even if your background processing initially runs on the same server, this approach scales very well. Such background services can easily be moved to separate worker servers without having to reconfigure the load on the front-facing web servers.

Of course, this approach works on other platforms too, but with Node.js you also get the high request throughput discussed earlier, because each request is a small task that is handled quickly and efficiently.

Conclusion

We have looked at Node.js from both a theoretical and a practical point of view, from its goals and purpose to its various strengths and pitfalls. If you run into problems with Node.js, remember that they almost always come down to one fact: blocking operations are the root of all evil. In 99% of cases, problems arise from misusing Node.

Remember: Node.js was never intended to solve the problem of scaling computation. It was created to scale I/O, which it does very well.

Why use Node.js? If your use case does not involve CPU-intensive operations or access to blocking resources, you can take full advantage of Node.js and enjoy fast, easily scalable web applications.

Source: https://habr.com/ru/post/265649/
