Unstructured data analysis and storage optimization

Unstructured data analysis is not a new topic. In the era of “big data”, however, organizations face it far more acutely. The multifold growth in the volume of stored data in recent years, its accelerating pace, and the increasing diversity of stored and processed information greatly complicate corporate data management. On the one hand, the problem is infrastructural. According to IDC, up to 60% of corporate storage is occupied by information that brings the organization no benefit: numerous copies of the same data scattered across different parts of the storage infrastructure, information that no one has accessed for several years and is unlikely ever to access again, and other corporate “trash”.

On the other hand, inefficient information management leads to increased business risks: storing personal data and other confidential information on public information resources, the appearance of suspicious user encrypted archives, violation of access policies to important information, etc.

In these circumstances, the ability to qualitatively analyze corporate information and promptly respond to any inconsistencies of its storage with the policies and requirements of the business is a key indicator of the maturity of the organization’s information strategy.

A separate Gartner document, published in September 2014 under the name Market Guide for File Analysis Software, is devoted to the topic of file data analytics. This document provides the following typical use cases for analytical software:

')

Taking this trend into account, Hewlett-Packard has launched two software solutions for advanced analysis of unstructured information: HP Storage Optimizer and HP Control Point. The first solution is mainly intended for data storage professionals. The second solution is suitable not only for IT specialists, but will also be of interest to employees of information security departments, Compliance-services, as well as management, who determine the strategy for storing and using information in the organization.

This article will provide a technical overview of both products.

HP Storage Optimizer combines the ability to analyze the metadata of objects in the repositories of unstructured information and the appointment of policies for their hierarchical storage.

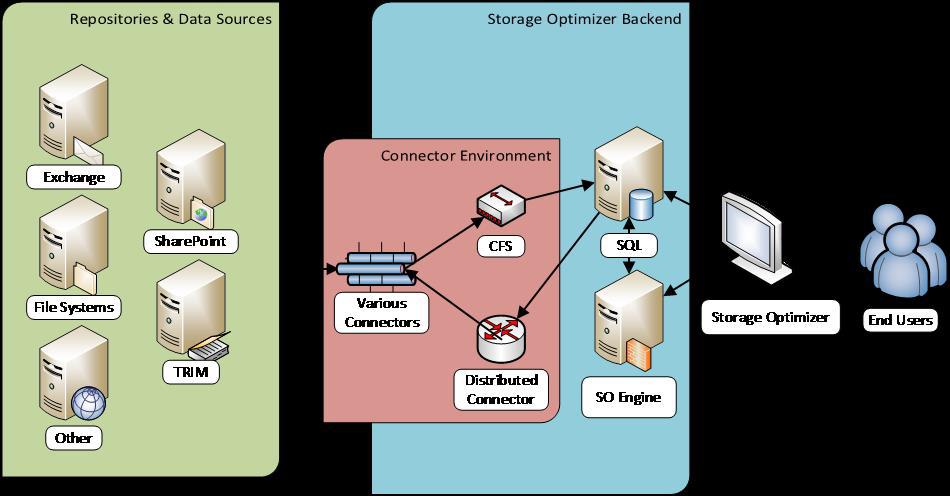

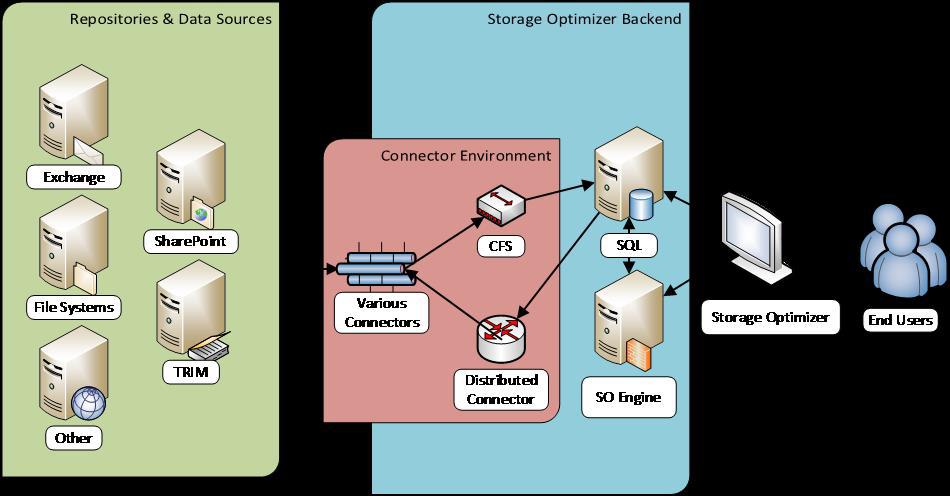

HP Storage Optimizer Architecture

The sources of information analyzed in the terminology of HP Storage Optimizer are called repositories. Various file systems are supported as repositories, as well as MS Exchange, MS SharePoint, Hadoop, Lotus Notes, Documentum and many others. There is also an opportunity to order the development of a connector to the repository, which is currently not supported by the product.

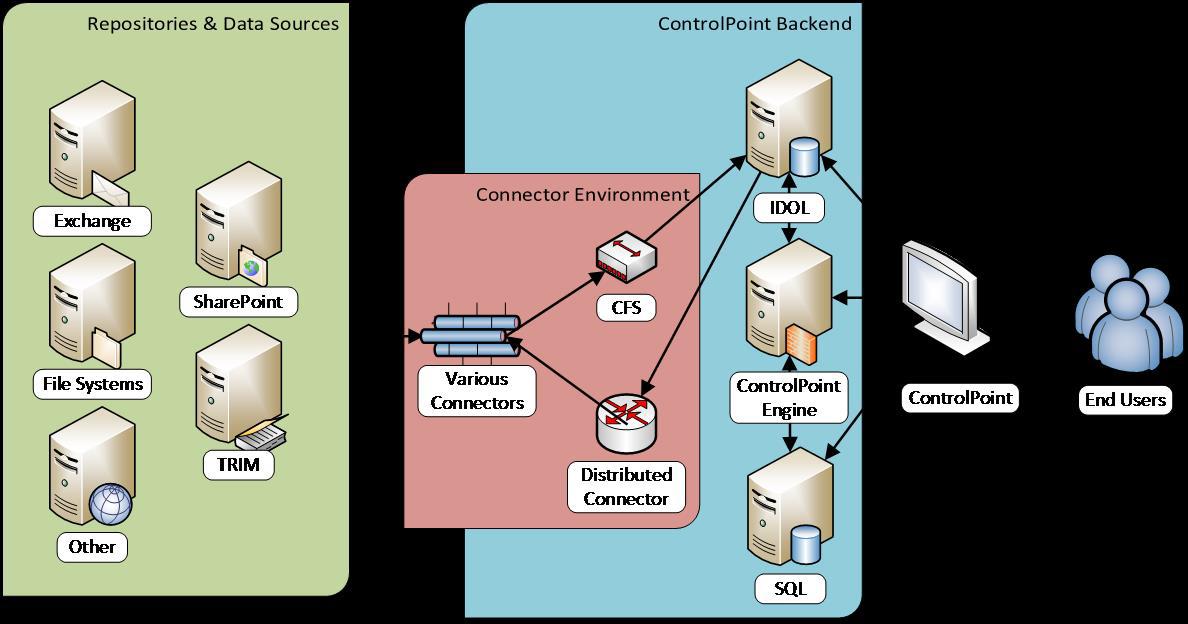

HP Storage Optimizer uses its own appropriate connectors to access the repositories being analyzed. Information from the connectors goes to a component called Connector Framework Server (designated as “CFS” in the picture), which, in turn, enriches it with additional metadata and sends the resulting data to indexing. To increase fault tolerance and load balancing, the application interacts with connectors using the Distributed Connector component.

Metadata is indexed by the “HP Storage Optimizer Engine” engine (“SO Engine” in the first picture) and placed in an MS SQL database. To access the results of the analysis and assignment of management policies, use the HP Storage Optimizer web application.

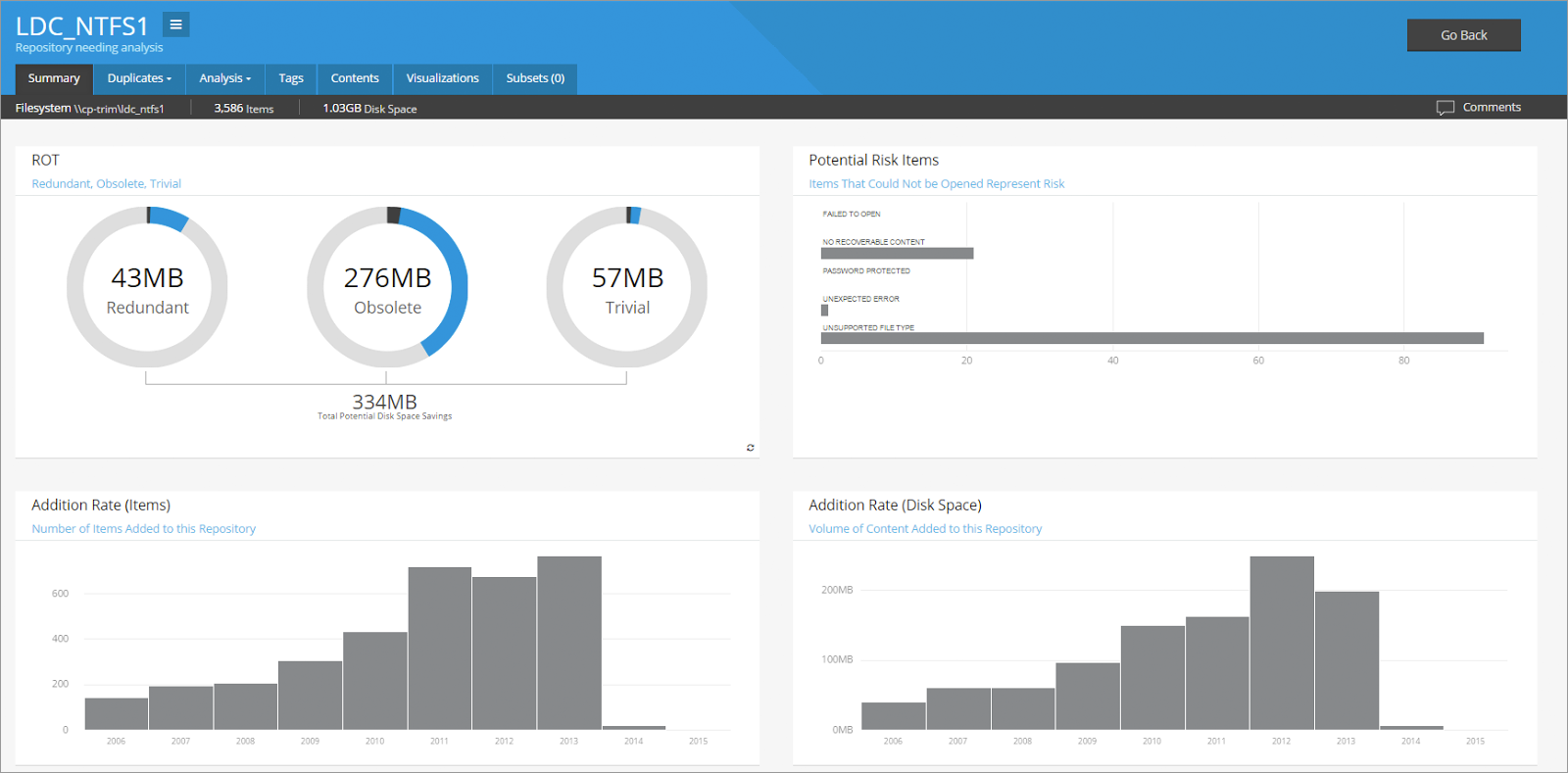

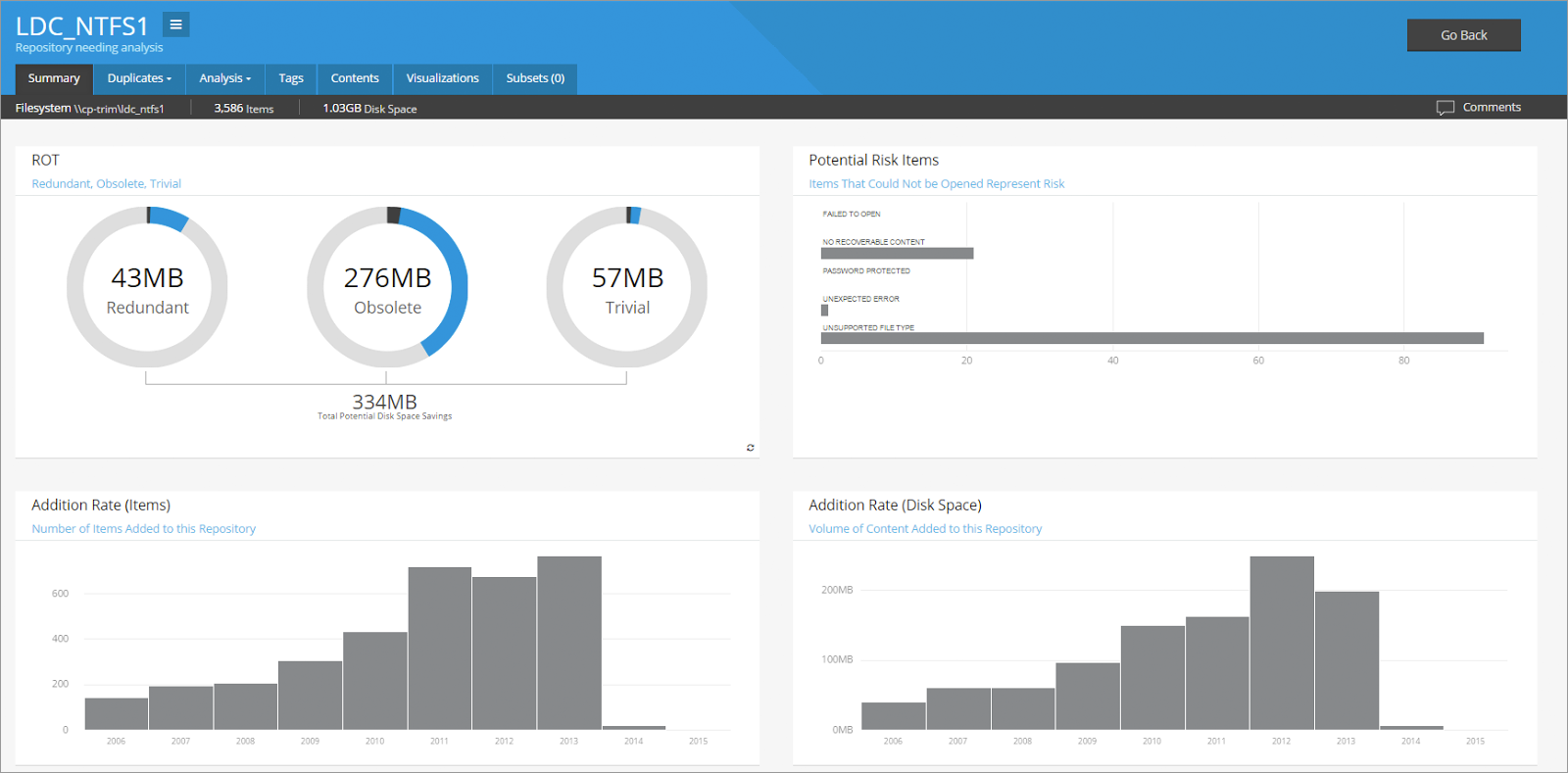

For visual display of information that may be optimized, HP Storage Optimizer uses pie charts (below) showing duplicate data, rarely needed and “unnecessary” data (ROT analysis: Redundant, Obsolete, Trivial). Criteria for "redkovosbovannosti" and "uselessness" can be flexibly configured, including individually for each repository. In addition to pie charts, graphs are available that illustrate the breakdown of data by type, time and frequency of addition, etc. All visualization elements are interactive, i.e. allow you to go into any category of the diagram (or column) and access the relevant data.

Graphical analysis of data in HP Storage Optimizer

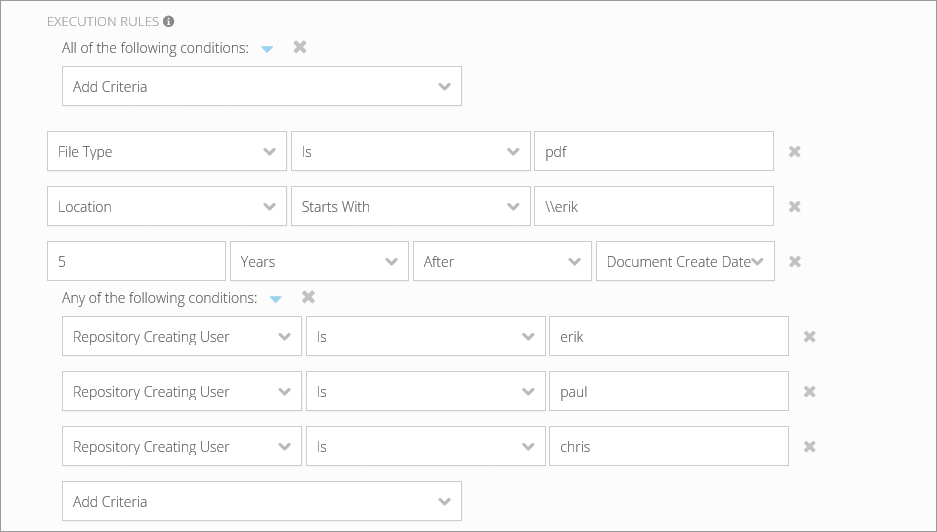

The list of metadata, which can be analyzed, is unusually wide and makes it possible to carry out highly accurate thematic samples.

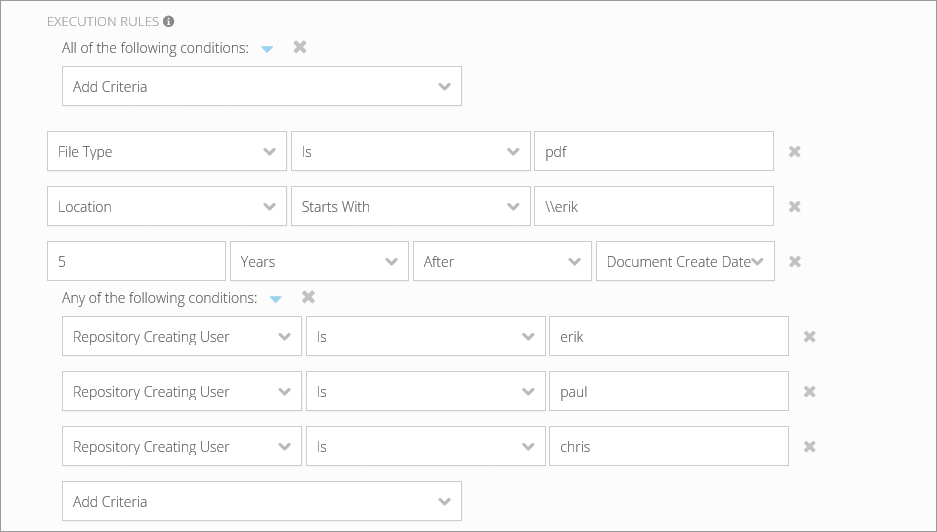

Example of working with metadata in HP Storage Optimizer

I would like to note that the HP Storage Optimizer and HP Control Point products include an indexing and visualization engine that allows you to view more than 400 different data formats without installing appropriate preview applications on the server. This greatly simplifies and speeds up the process of analyzing a large amount of diverse information.

After the data analysis is done, the system administrator is given the opportunity to assign data deletion or movement policies. It is possible to assign policies to certain data samples either manually or automatically. The powerful role-based management model, implemented in the HP Storage Optimizer and the HP Control Point, makes it possible to issue permissions to work with repositories, analyze data in them, and also by the purpose of policies, as flexibly as possible.

HP Control Point is essentially an extended version of HP Storage Optimizer and provides tools not only for solving storage optimization problems, but also for implementing storage policies and managing the life cycle of corporate information.

The product allows for the analysis of information not only by metadata, but also by its content. In addition, it implements additional mechanisms for analyzing data and assigning policies to work with them.

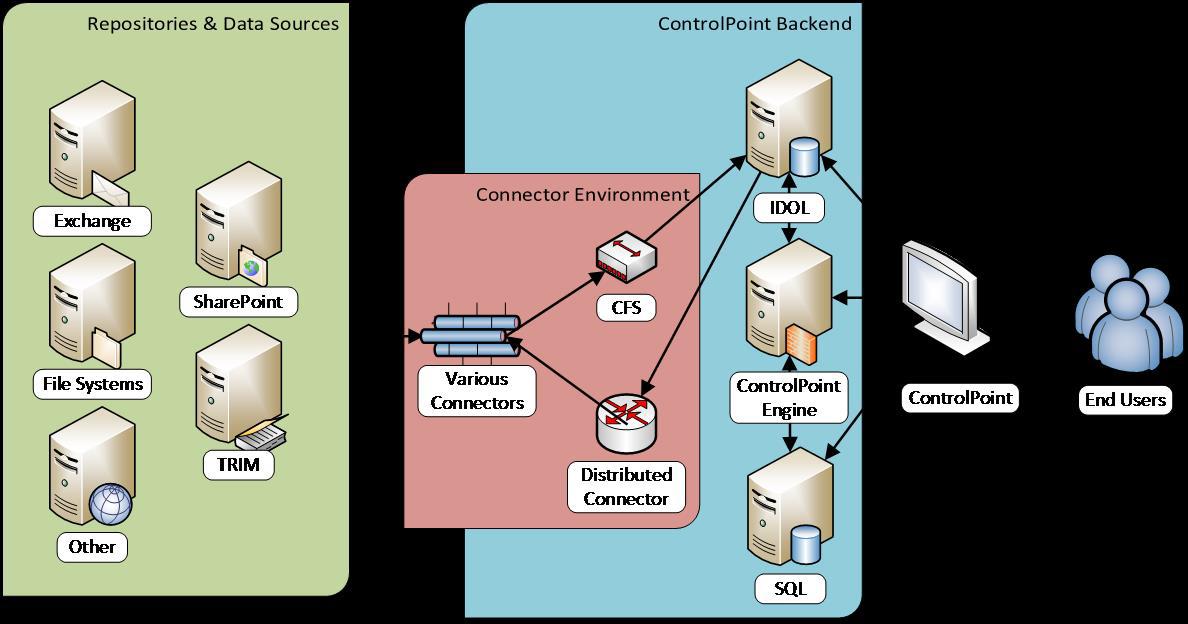

HP Control Point Architecture

Unlike the HP Storage Optimizer, HP Control Point makes extensive use of the indexing and semantic categorization of the HP IDOL (Intelligent Data Operating Layer) engine information: visualization, categorization, tagging, etc. It is based on the ability to determine the “meaning” of the set analyzed information regardless of its format, language, etc.

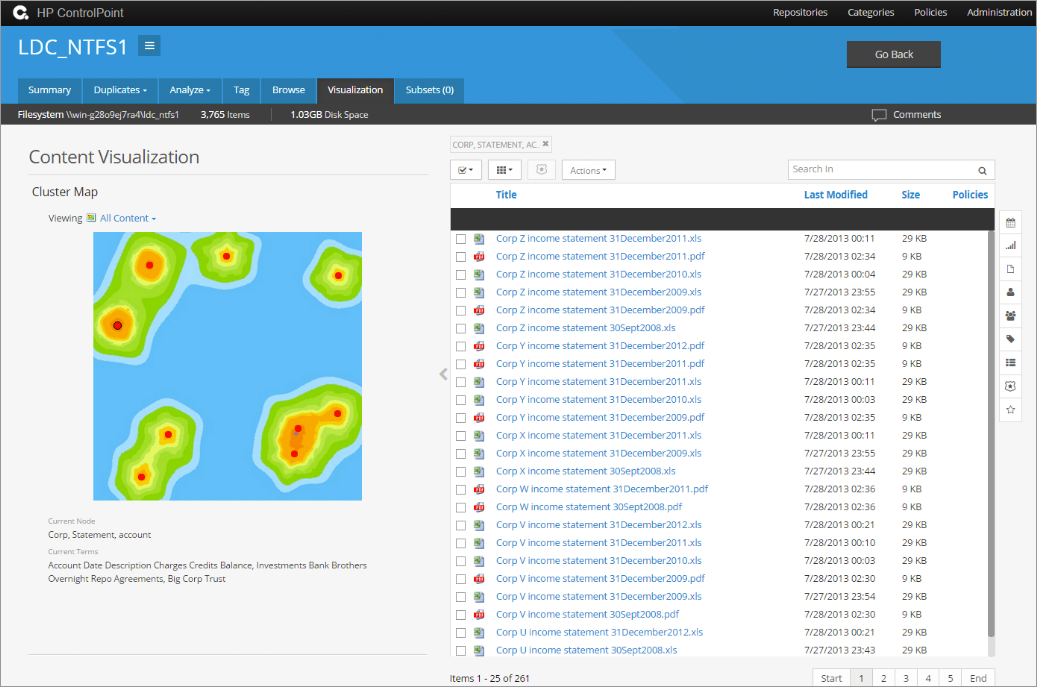

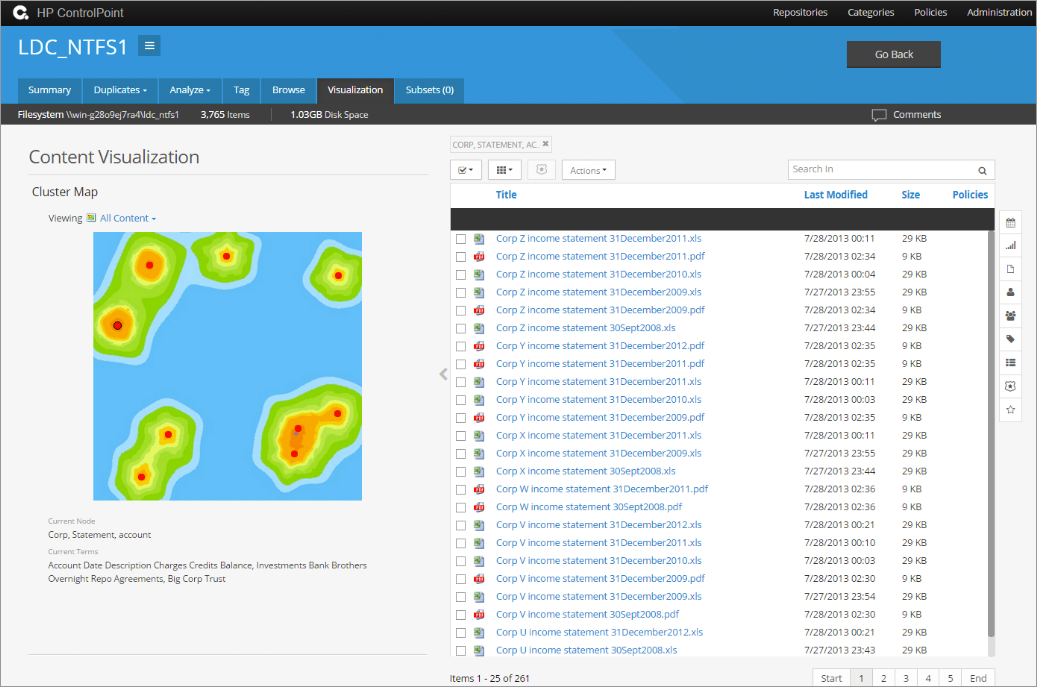

In particular, two types of information visualization are additionally available in HP Control Point: cluster map and spectrograph. A cluster map is a two-dimensional image of information "clusters". One cluster combines information that has a similar meaning. Thus, looking at the cluster map, you can quickly get an understanding of the main semantic groups of this information. Cluster maps are interactive, i.e. they allow you to access the information contained in them by clicking on certain clusters.

The appearance of the cluster map in HP Control Point

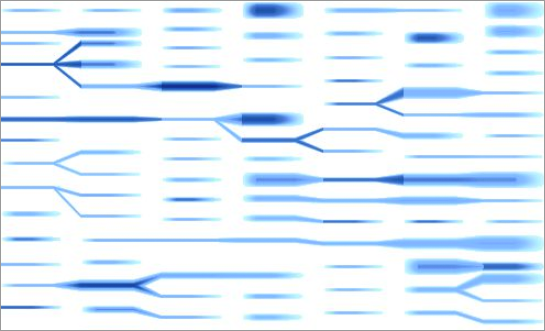

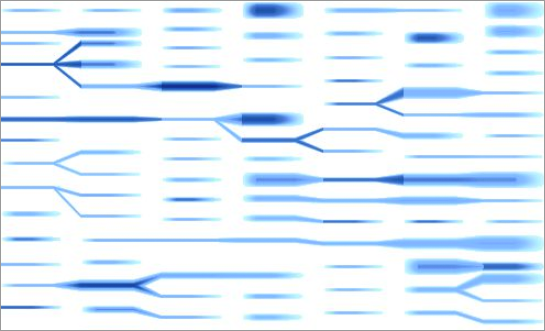

A spectrograph is a collection of information clusters taken at different points in time and makes it possible to graphically track how the meaning of information has changed in the analyzed repositories over time.

Appearance spectrogram in HP Control Point

In addition to the advanced visualization of information, the ability to categorize the analyzed information is available in the HP Control Point. Initially, information is categorized automatically by means of HP IDOL, giving the system user an array of data, divided into semantic parts. After receiving the primary partition, the analyst can further make a more verified categorization. For example, use any set of files, obviously for an analyst relevant to a particular category, to "train" a category for this set of files in order to subsequently obtain more accurate categorization results. For even more fine-tuning, you can use individual weights of files and even phrases and individual words within files, reflecting the degree of compliance of certain information units of the “trained” category. Such detailing can be used, for example, to create detailed rules for classifying the analyzed information as confidential.

As for the policies for working with the analyzed information, in HP Control Point, in addition to copying, transferring and deleting, the following options are also available:

- "Freeze" objects. Allows you to block access to individual objects, preventing their unauthorized change or deletion.

- Creating a workflow (workflow). For example, informing or requesting approval of an authorized employee or owner of the analyzed objects before they are transferred or deleted.

- Secure transfer to the HP Records Manager corporate records management system (for example, in the event of unauthorized presence of confidential documents on a public file server). At the same time, the transferred data is accompanied by metadata that will be used for further document management in the HP Records Manager system with the necessary access settings, privacy levels, etc.

As can be seen from the current review, the range of applications of HP Storage Optimizer and HP Control Point for solving problems of analyzing and managing corporate data is very wide. In addition, the ability to analyze documents in different languages (including Russian), as well as the scalable architecture of the components of both products, effectively solves the problem of analyzing the entire volume of unstructured data in organizations of any size and complexity.

The author of the article is Maxim Lugansky, Technical Consultant, Data Protection & Archiving, HP Big Data

On the other hand, inefficient information management increases business risk: personal data and other confidential information stored on publicly accessible resources, suspicious user-encrypted archives, violations of access policies for important information, and so on.

In these circumstances, the ability to analyze corporate information thoroughly and to respond promptly whenever its storage conflicts with business policies and requirements is a key indicator of the maturity of an organization’s information strategy.

Gartner devoted a separate document to file data analytics: Market Guide for File Analysis Software, published in September 2014. It lists the following typical use cases for file analysis software:

- Storage optimization. The most typical scenario: file analysis is introduced to reduce the volume of stored data and thereby store it more efficiently.

- Identifying and discarding unnecessary data during IT infrastructure migration. Often initiated by projects that migrate data to the cloud. The content is scanned and, based on the results, data that is important and valuable to the business moves to the cloud while the rest is deleted.

- Classification. These projects group objects by various criteria in order to assign common policies to them and to understand the value and potential risk of the stored information.

- Compliance with standards and regulations. Specialists in the relevant departments can develop and implement access policies for important data and, using the classification built into the analysis software, monitor compliance effectively.

- Access management. Knowing the level and type of user access to files and directories makes it possible to manage information so that personal data and other confidential information are protected from unauthorized access.

- Automation of investigations. Analysis software makes it possible to quickly find objects related to investigations conducted in the company and to copy or move them, automatically and securely, to dedicated repositories.

In response to this trend, Hewlett-Packard released two software products for advanced analysis of unstructured information: HP Storage Optimizer and HP Control Point. The first is intended mainly for storage professionals. The second suits not only IT specialists but also information security staff, compliance teams, and the managers who define the organization’s strategy for storing and using information.

This article will provide a technical overview of both products.

HP Storage Optimizer: analyze data to optimize storage

HP Storage Optimizer combines analysis of object metadata in unstructured data repositories with the assignment of policies for hierarchical storage of those objects.

HP Storage Optimizer Architecture

In HP Storage Optimizer terminology, the analyzed information sources are called repositories. Supported repositories include various file systems as well as MS Exchange, MS SharePoint, Hadoop, Lotus Notes, Documentum, and many others. A connector for a repository that the product does not currently support can also be developed on request.

HP Storage Optimizer accesses each analyzed repository through a dedicated connector. Information from the connectors goes to a component called Connector Framework Server (labeled “CFS” in the architecture diagram), which enriches it with additional metadata and sends the result for indexing. For fault tolerance and load balancing, the application communicates with the connectors through the Distributed Connector component.

Metadata is indexed by the HP Storage Optimizer Engine (“SO Engine” in the architecture diagram) and stored in an MS SQL database. The analysis results are viewed and management policies are assigned through the HP Storage Optimizer web application.
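The flow described above (connector, enrichment, indexing) can be illustrated with a minimal Python sketch. It does not use any HP API: the `Item` class, the `file_share_connector` function, and the `enrich` step are hypothetical stand-ins chosen only to show the shape of such a pipeline, and the enrichment here merely derives a file extension.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List


@dataclass
class Item:
    """One object reported by a connector (metadata only). Hypothetical type."""
    repository: str
    path: str
    metadata: Dict[str, str] = field(default_factory=dict)


# In this sketch a "connector" is just a callable that yields items
# from one repository. Real connectors are product components.
Connector = Callable[[], Iterable[Item]]


def file_share_connector() -> Iterable[Item]:
    """Hypothetical connector returning two hard-coded items."""
    yield Item("fileshare", "/docs/report.docx", {"size": "1024"})
    yield Item("fileshare", "/docs/old.zip", {"size": "2048"})


def enrich(item: Item) -> Item:
    """Stand-in for the enrichment step: derive an extra metadata field."""
    name = item.path.rsplit("/", 1)[-1]
    extension = name.rsplit(".", 1)[-1].lower() if "." in name else ""
    item.metadata["extension"] = extension
    return item


def run_pipeline(connectors: List[Connector]) -> List[Item]:
    """Pull items from every connector, enrich them, collect them for indexing."""
    index: List[Item] = []
    for connector in connectors:
        for item in connector():
            index.append(enrich(item))
    return index


indexed = run_pipeline([file_share_connector])
for item in indexed:
    print(item.repository, item.path, item.metadata)
```

Keeping connectors as independent callables mirrors the idea that each repository type gets its own access component while enrichment and indexing stay shared.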

To visualize information that can be optimized, HP Storage Optimizer uses pie charts showing duplicate, rarely used, and “unnecessary” data (ROT analysis: Redundant, Obsolete, Trivial). The criteria for “rarely used” and “useless” data can be configured flexibly, including separately for each repository. In addition to pie charts, graphs show the breakdown of data by type, by time and frequency of addition, and so on. All visualization elements are interactive: you can drill into any chart segment (or column) and access the corresponding data.

Graphical analysis of data in HP Storage Optimizer
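To make the ROT criteria more concrete, here is a minimal Python sketch of a metadata-only classification. It is an illustration under assumptions, not the product's logic: the `FileMeta` fields, the three-year obsolescence threshold, and the list of "trivial" extensions are all hypothetical and would correspond to the per-repository settings mentioned above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, FrozenSet, List


@dataclass
class FileMeta:
    """Hypothetical metadata record for one file."""
    path: str
    content_hash: str
    last_accessed: datetime
    extension: str


def classify_rot(
    files: List[FileMeta],
    now: datetime,
    obsolete_after: timedelta = timedelta(days=3 * 365),  # assumed threshold
    trivial_extensions: FrozenSet[str] = frozenset({"tmp", "bak", "log"}),  # assumed list
) -> Dict[str, str]:
    """Label each file as redundant, trivial, obsolete, or keep."""
    seen_hashes = set()
    labels: Dict[str, str] = {}
    for f in files:
        if f.content_hash in seen_hashes:
            labels[f.path] = "redundant"   # same content already seen elsewhere
        elif f.extension in trivial_extensions:
            labels[f.path] = "trivial"     # file type with no business value
        elif now - f.last_accessed > obsolete_after:
            labels[f.path] = "obsolete"    # not accessed for too long
        else:
            labels[f.path] = "keep"
        seen_hashes.add(f.content_hash)
    return labels


now = datetime(2015, 9, 1)
files = [
    FileMeta("/a/report.docx", "h1", datetime(2015, 8, 1), "docx"),
    FileMeta("/b/report-copy.docx", "h1", datetime(2015, 8, 2), "docx"),
    FileMeta("/a/old-plan.xls", "h2", datetime(2010, 1, 1), "xls"),
    FileMeta("/tmp/cache.tmp", "h3", datetime(2015, 8, 30), "tmp"),
]
labels = classify_rot(files, now)
print(labels)
```

The first file seen with a given hash is treated as the original and later ones as redundant; a real tool would need a rule for choosing which copy to keep.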

The list of metadata available for analysis is unusually broad, which makes highly precise thematic selections possible.

Example of working with metadata in HP Storage Optimizer

Note that HP Storage Optimizer and HP Control Point include an indexing and viewing engine that lets you view more than 400 different data formats without installing the corresponding viewer applications on the server. This greatly simplifies and speeds up the analysis of large volumes of diverse information.

Once the analysis is complete, the system administrator can assign policies for deleting or moving data. Policies can be assigned to particular data selections either manually or automatically. The powerful role-based management model implemented in HP Storage Optimizer and HP Control Point makes it possible to grant permissions for working with repositories, analyzing the data in them, and assigning policies as flexibly as possible.
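Automatic policy assignment of the kind described above can be pictured as a set of rules evaluated against item metadata. The following Python sketch is only an illustration: the `Policy` class, the policy names, and the metadata keys `is_duplicate` and `days_since_access` are hypothetical and do not come from the product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy: a name, an action, and a matching rule."""
    name: str
    action: str  # "delete" or "move"
    matches: Callable[[Dict[str, object]], bool]


def plan_actions(
    items: List[Dict[str, object]], policies: List[Policy]
) -> List[Tuple[str, str, str]]:
    """Return (path, policy name, action) for the first policy matching each item."""
    plan = []
    for item in items:
        for policy in policies:
            if policy.matches(item):
                plan.append((str(item["path"]), policy.name, policy.action))
                break  # at most one policy per item in this sketch
    return plan


policies = [
    Policy("delete-duplicates", "delete", lambda i: bool(i["is_duplicate"])),
    Policy("archive-stale", "move", lambda i: int(i["days_since_access"]) > 1095),
]
items = [
    {"path": "/share/a.docx", "is_duplicate": False, "days_since_access": 10},
    {"path": "/share/a-copy.docx", "is_duplicate": True, "days_since_access": 10},
    {"path": "/share/2009-plan.xls", "is_duplicate": False, "days_since_access": 2000},
]
plan = plan_actions(items, policies)
for entry in plan:
    print(entry)
```

The sketch only produces a plan and performs no deletion or move, which leaves room for a review or approval step before anything is executed.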

HP Control Point: Comprehensive Analysis to Reduce Storage Risk

HP Control Point is essentially an extended version of HP Storage Optimizer. It provides tools not only for storage optimization but also for implementing storage policies and managing the life cycle of corporate information.

The product analyzes information not only by metadata but also by content. It also implements additional mechanisms for analyzing data and assigning policies to it.

HP Control Point Architecture

Unlike HP Storage Optimizer, HP Control Point makes extensive use of the indexing and semantic categorization capabilities of the HP IDOL (Intelligent Data Operating Layer) engine: visualization, categorization, tagging, and so on. These capabilities rest on the engine’s ability to determine the “meaning” of the analyzed information regardless of its format, language, and so on.

In particular, HP Control Point adds two types of visualization: the cluster map and the spectrograph. A cluster map is a two-dimensional image of information “clusters”, where each cluster groups information with similar meaning. A glance at the cluster map therefore gives a quick understanding of the main semantic groups in the data. Cluster maps are interactive: clicking a cluster gives access to the information it contains.

Cluster map in HP Control Point
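The idea of grouping documents with similar meaning can be illustrated with a deliberately simple Python sketch. It is not the HP IDOL algorithm, which the article does not describe: here similarity is approximated by Jaccard overlap of word sets, clusters are formed greedily, and the 0.25 threshold is an arbitrary assumption.

```python
from typing import Dict, List, Set, Tuple


def tokens(text: str) -> Set[str]:
    """Lowercased words longer than three characters, as a crude term set."""
    return {w for w in text.lower().split() if len(w) > 3}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of common terms between two term sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def cluster(docs: Dict[str, str], threshold: float = 0.25) -> List[List[str]]:
    """Greedy clustering: join the first cluster whose seed is similar enough."""
    clusters: List[Tuple[Set[str], List[str]]] = []
    for name, text in docs.items():
        doc_tokens = tokens(text)
        for seed, members in clusters:
            if jaccard(seed, doc_tokens) >= threshold:
                members.append(name)
                break
        else:
            # No similar cluster found: this document seeds a new one.
            clusters.append((doc_tokens, [name]))
    return [members for _, members in clusters]


docs = {
    "invoice-1": "invoice payment amount supplier contract",
    "invoice-2": "invoice payment supplier overdue amount",
    "hr-1": "employee vacation request approval manager",
}
groups = cluster(docs)
print(groups)
```

Word overlap is only a rough proxy for meaning; it is used here because it keeps the example self-contained.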

A spectrograph is a set of information clusters captured at different points in time. It makes it possible to track graphically how the meaning of the information in the analyzed repositories has changed over time.

Spectrograph in HP Control Point

In addition to advanced visualization, HP Control Point can categorize the analyzed information. Initially, HP IDOL categorizes information automatically, presenting the user with the data divided into semantic groups. Starting from this initial partition, the analyst can refine the categorization. For example, a set of files that the analyst knows to be relevant to a particular category can be used to “train” that category, so that subsequent categorization results are more accurate. For even finer tuning, individual weights can be assigned to files, and even to phrases and individual words within files, reflecting how strongly each unit of information corresponds to the “trained” category. This level of detail can be used, for example, to create detailed rules for classifying the analyzed information as confidential.
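The training and weighting steps described above can be sketched in Python as a toy term-weight model. This is an assumption-laden illustration rather than the HP IDOL mechanism: the functions `train_category` and `score`, the frequency-based weights, and the manual override for the word "passport" are all hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Optional


def train_category(
    examples: Iterable[str], overrides: Optional[Dict[str, float]] = None
) -> Dict[str, float]:
    """Derive term weights from example texts, then apply manual overrides."""
    counts: Counter = Counter()
    for text in examples:
        counts.update(text.lower().split())
    total = sum(counts.values())
    # Weight of a term is its relative frequency in the training examples.
    weights = {term: n / total for term, n in counts.items()}
    # Analyst-supplied weights replace the learned ones for specific terms.
    weights.update(overrides or {})
    return weights


def score(text: str, weights: Dict[str, float]) -> float:
    """Sum the weights of the distinct terms that occur in the text."""
    return sum(weights.get(term, 0.0) for term in set(text.lower().split()))


confidential = train_category(
    ["salary passport number", "passport scan salary statement"],
    overrides={"passport": 1.0},
)
print(score("passport copy attached", confidential))
print(score("lunch menu for friday", confidential))
```

A document would then be assigned to the category when its score exceeds a threshold chosen by the analyst; that threshold is left out here because the article does not specify one.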

As for policies, in addition to copying, moving, and deleting, HP Control Point offers the following options:

- “Freezing” objects. Blocks access to individual objects, preventing unauthorized modification or deletion.

- Creating a workflow. For example, notifying an authorized employee or the owner of the analyzed objects, or requesting their approval, before the objects are moved or deleted.

- Secure transfer to the HP Records Manager corporate records management system (for example, when confidential documents are found on a public file server without authorization). The transferred data is accompanied by metadata that is then used for document management in HP Records Manager, with the necessary access settings, confidentiality levels, and so on.

Conclusion

As this overview shows, HP Storage Optimizer and HP Control Point cover a very wide range of tasks in analyzing and managing corporate data. In addition, the ability to analyze documents in different languages (including Russian) and the scalable architecture of both products’ components make it possible to analyze the entire volume of unstructured data in organizations of any size and complexity.

The author of the article is Maxim Lugansky, Technical Consultant, Data Protection & Archiving, HP Big Data

Source: https://habr.com/ru/post/265499/