Helping the analyst: automating Yandex.Metrica report exports with AWS, for free

In my work as an analyst, I often see colleagues spend a lot of time every day on tedious manual data exports for regular reporting. While there are plenty of plugins, services and Excel add-ins for Google Analytics and other Google products, for Yandex.Metrica and other analytics systems I managed to find only a couple of libraries dating from 2013.

With advertising systems such as Adfox, the situation is no easier. Endless manual exports can wear anyone down and leave no time for the useful part of the job: analysing the data and drawing conclusions from it. Sometimes the solution is to ask developers to set up the export of the data you need. In practice, however, reporting requirements change often, and you cannot keep pulling developers away from their work every time.

In this article and the next one, I will show how to set up automated data export using Amazon Web Services. AWS offers a free account for 12 months, and the algorithm described here fits within its limits. You can rent computing power from any other provider, but I have not come across one that is completely free. In addition, AWS instances come with Python and the necessary libraries preinstalled.

The examples use data from a site's Yandex.Metrica counter. The result of this article will be a small script that, for a set of counters, exports visits by day for the given dates. You can use it as a starting point to quickly build the export you need. The drawback of this approach is that the first time you need to spend about an hour setting up an account and getting used to the interface. On later runs this is not needed: you just start the prepared instance and get the data you need within a couple of minutes.

So, let's begin. Register an account with Amazon Web Services at console.aws.amazon.com and set up access to the management console. Unfortunately, after creating an account you have to wait about a day for your card to be verified before you can use AWS fully. Nothing will be charged to it for the purposes of this article anyway (we will stay within the free usage limits). The registration process is well described in the AWS documentation (up to the Getting started item): docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-ug.pdf

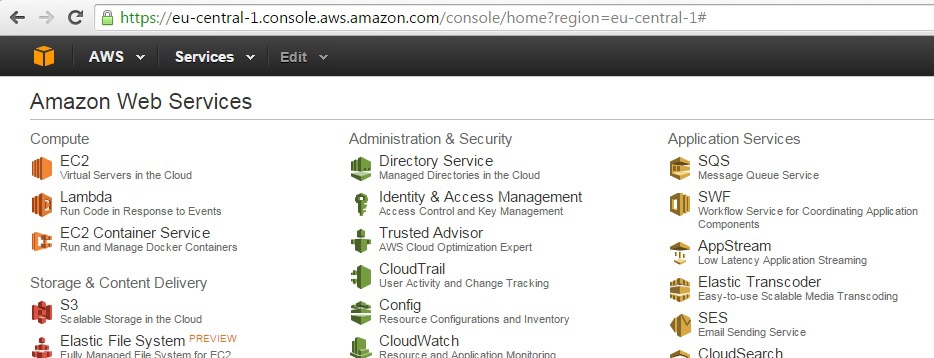

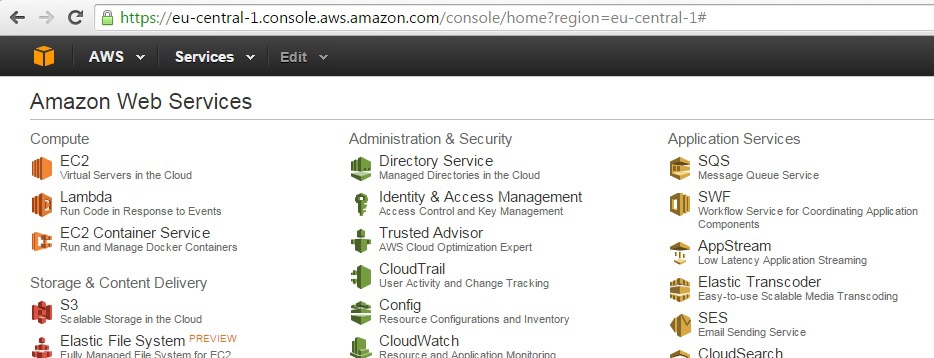

After the card has been verified (you will receive a notification when the process is complete), the console at console.aws.amazon.com should look like this:

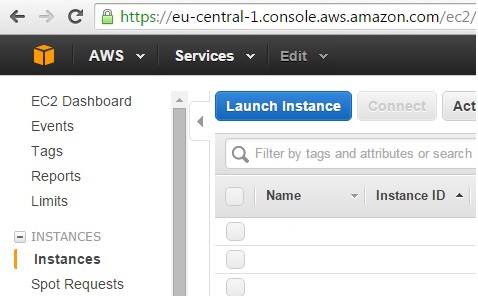

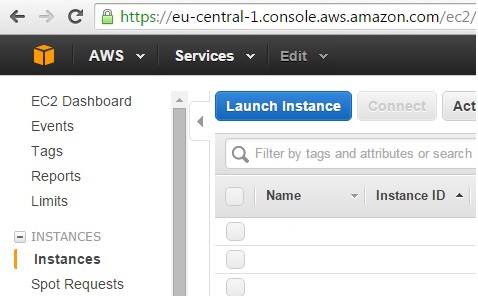

Go to EC2 and select Instances in the left pane. Click Launch Instance.

You will be offered a choice of operating systems. For this example the choice does not matter much. I am used to Ubuntu (the fourth line in the list). You can also pick Amazon Linux, the first line. The only difference from the example is the default login name: on Amazon Linux it is ec2-user, on Ubuntu it is ubuntu.

In the next step, choose the instance type. For free use, pick the first option, t2.micro. Prices for other options are listed at aws.amazon.com/ru/ec2/pricing.

In the last, seventh step, check the security group in the Security Groups field (SSH access on port 22 must be allowed at least for your IP address) and click Launch. Go back to the list of instances and wait until the Instance State of the new instance becomes running (this takes a couple of minutes). Select the row with the instance and find its Public DNS. Copy the Public DNS and connect to the machine. On Windows it is easiest to use PuTTY, on a Mac the Terminal. The account setup documentation describes this process for both Windows and Mac. Just in case, here are the PuTTY settings once more: login ubuntu, port 22.

Do not forget to specify the path to the private key (a file with the .ppk extension) under Connection -> SSH -> Auth.

Connect to the machine. When PuTTY warns that "The server's host key is not cached in the registry", click "Yes". If everything went well, you will land at the instance's command prompt.

Check that Python is working (it is installed on AWS by default): type python in the console and press Enter. Once in the Python console, type 1 + 1 and press Enter again. To exit the Python console, press Ctrl + D.

The final step before exporting data from Yandex.Metrica is to obtain an authorization token:

1. Go to the Yandex application creation page oauth.yandex.ru/client/new

2. Name the application and, in the Rights field for Yandex.Metrica, select “Obtaining statistics, reading the parameters of our own and trusted counters”.

The list of created applications and their IDs is available on the registered applications page oauth.yandex.ru (in the example the application is named “Weekly Report”).

To get a token, you need the application ID. Substitute it for the last parameter, client_id, in the debug-token link and open the link in a browser: oauth.yandex.ru/authorize?response_type=token&client_id=04d488...87aacb

As a result, you get an authorization token 11daf5 ... 516d, which we will use in every request to Yandex.Metrica.
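If you create several applications, the debug-token link can be assembled in code rather than by hand. This is only a sketch: the helper name `build_authorize_url` is made up for illustration, the `https://` scheme is an assumption (the article gives the address without one), and `YOUR_APP_ID` is a placeholder for the real application ID.

```python
from urllib.parse import urlencode


def build_authorize_url(client_id):
    """Return the debug-token link for the given application ID (hypothetical helper)."""
    # Same two parameters as in the link from the article
    query = urlencode([('response_type', 'token'), ('client_id', client_id)])
    # The https:// scheme is assumed; the article shows the address without it
    return 'https://oauth.yandex.ru/authorize?' + query


# YOUR_APP_ID is a placeholder, not a real ID
print(build_authorize_url('YOUR_APP_ID'))
```

Open the printed link in a browser where you are logged in to Yandex, as described above.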

That completes the lengthy technical preparation. You will not have to go through it again. Now let's write a first “Hello world” against our Yandex.Metrica data. To edit scripts on the instance you can use all sorts of connections and editors, or simply work in the console. On Windows, for simple scripts, WinSCP (https://winscp.net/eng/docs/lang:ru) with its built-in editor is enough.

After connecting to the instance (the same way as with PuTTY) you end up in the /home/ubuntu folder. Create a separate folder 'yam' (F7) and a file 'test.py' in it (Shift + F4). Now let's check that everything works by exporting yesterday's traffic report data, following the Yandex.Metrica API documentation: tech.yandex.ru/metrika/doc/api2/api_v1/intro-docpage

We import the 'requests' and 'json' libraries to send requests to the API and to work with the JSON format. Put the authorization token obtained earlier into the variable atoken, in quotes. Copy this code into the file 'test.py' and save it:

```python
import requests
import json

# Authorization token obtained in the previous step
atoken = 'insert token here'

# Request yesterday's visits for counter 21075004
r = requests.get('https://api-metrika.yandex.ru/stat/v1/data?id=21075004&accuracy=full&date1=yesterday&date2=yesterday&metrics=ym:s:visits&oauth_token=' + atoken)

# Convert the JSON response into Python objects and pretty-print it
parsed = json.loads(r.text)
print(json.dumps(parsed, indent=4, sort_keys=True))
```

In the id parameter of the requests.get(...) call, specify the number of the counter to take data from. You can find it in the list of counters (https://metrika.yandex.ru/list) next to the counter name. The accuracy parameter corresponds to the Accuracy slider in the web interface, date1 and date2 define the export period, and metrics lists the metrics (columns). There are also dimensions (groupings), which will come in handy later. Yandex.Metrica also provides ready-made templates that you can substitute into the query string: tech.yandex.ru/metrika/doc/api2/api_v1/presets/preset_traffic-docpage
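A long query string is easy to mistype, so one option is to assemble it from named parameters. The sketch below is not part of the original script: the helper `build_stat_url` and its default values are assumptions made for illustration, while the endpoint and parameter names are the ones used in the request above.

```python
from urllib.parse import urlencode

# Endpoint as used in the article's script
API_URL = 'https://api-metrika.yandex.ru/stat/v1/data'


def build_stat_url(counter_id, token, date1='yesterday', date2='yesterday',
                   metrics='ym:s:visits', accuracy='full'):
    """Assemble the request URL from named parameters (hypothetical helper)."""
    params = [
        ('id', counter_id),
        ('accuracy', accuracy),
        ('date1', date1),
        ('date2', date2),
        ('metrics', metrics),
        ('oauth_token', token),
    ]
    # safe=':' leaves the colons in names such as ym:s:visits unescaped
    return API_URL + '?' + urlencode(params, safe=':')


# TOKEN is a placeholder for the real authorization token
print(build_stat_url('21075004', 'TOKEN'))
```

The resulting string can be passed to requests.get in place of the hand-written URL.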

json.loads converts the JSON response from Yandex.Metrica into Python objects (nested dictionaries and lists), from which it is easy to pick out the items we need (in this case, the number of visits for yesterday). The json.dumps(...) line prints the API response in a more readable form.
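To see what these two calls do without touching the API, you can try them on a small hand-written string. The JSON text below is an illustrative stand-in shaped like the part of the response used in this article, not actual API output.

```python
import json

# Hand-written sample, not a real API response
raw = '{"data": [{"dimensions": [], "metrics": [489.0]}]}'

# json.loads turns JSON text into nested dictionaries and lists
parsed = json.loads(raw)
print(type(parsed).__name__)           # the top level is a dictionary
print(type(parsed['data']).__name__)   # 'data' holds a list

# json.dumps turns it back into text, here indented for reading
pretty = json.dumps(parsed, indent=4, sort_keys=True)
print(pretty)
```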

Run the script: in PuTTY or the terminal, type 'python test.py'. If all goes well, the script prints the formatted API response; in our example it reports 489 visits.

To get the number 489 out of this structure (in Python terms it is a “dictionary”), let's walk its hierarchy from top to bottom:

1. The first level of the hierarchy is the element 'data', so it comes first in the lookup: ['data']

2. Next comes a square bracket, which means a list begins. In our case the list has one element, so we add [0]

3. Next come two elements, 'dimensions' and 'metrics'. Select ['metrics']

4. Finally, the 'metrics' field again holds a list of one element, so we add [0]

Thus, the address of yesterday's 489.0 visits is ['data'][0]['metrics'][0]. Add a line to the script:

```python
print(parsed['data'][0]['metrics'][0])
```

After rerunning the script, the number of visits for yesterday is printed at the end of the output.
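The lookup above assumes that 'data' contains at least one element. A slightly more defensive variant is sketched below; `first_metric` is a hypothetical helper, and the sample dictionaries are hand-written to match the structure described in the steps, not copied from the API.

```python
def first_metric(parsed):
    """Return the first metric of the first row, or None if there are no rows."""
    rows = parsed.get('data', [])
    if not rows:
        return None
    # Same path as in the article: ['data'][0]['metrics'][0]
    return rows[0]['metrics'][0]


# Hand-written samples shaped like the response described above
sample = {'data': [{'dimensions': [], 'metrics': [489.0]}]}
empty = {'data': []}

print(first_metric(sample))
print(first_metric(empty))
```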

OK, 'hello world' is fun, but let's export something more practical: for example, visits by day over the past week for a list of sites, written to a file. To begin with, request last week's visits by day for a single site:

```python
import requests
import json

atoken = ''  # paste your authorization token here

# Visits from 2015-08-10 to 2015-08-16, grouped and sorted by date
r = requests.get('https://api-metrika.yandex.ru/stat/v1/data?id=21075004&accuracy=full&date1=2015-08-10&date2=2015-08-16&group=day&dimensions=ym:s:date&metrics=ym:s:visits&sort=ym:s:date&oauth_token=' + atoken)

parsed = json.loads(r.text)
print(json.dumps(parsed, indent=4, sort_keys=True))
```

The response now contains a separate element in 'data' for each day of the period.
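The dates in this request are hard-coded. For a weekly report they could be computed instead; the sketch below does that with the standard datetime module. The helper name `last_week_range` is an assumption, as is the choice of Monday to Sunday as "last week" (it matches the 2015-08-10 to 2015-08-16 period used above).

```python
from datetime import date, timedelta


def last_week_range(today=None):
    """Return (date1, date2) as YYYY-MM-DD strings for Monday..Sunday of last week."""
    if today is None:
        today = date.today()
    # weekday() is 0 for Monday, so this steps back to the current week's Monday
    this_monday = today - timedelta(days=today.weekday())
    last_monday = this_monday - timedelta(days=7)
    last_sunday = this_monday - timedelta(days=1)
    return last_monday.isoformat(), last_sunday.isoformat()


# For Tuesday 2015-08-18 this gives the period used in the article
date1, date2 = last_week_range(date(2015, 8, 18))
print(date1, date2)
```

The two strings can then be substituted for the date1 and date2 values in the query string.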

Now we need to loop over all the dates and get the number of visits for each day. From the structure it is clear that we need to iterate over the list in parsed['data']. Let's see what each element of this list looks like. To do this, add a loop to the end of the script:

```python
for day in parsed['data']:
    print(day)
```

If you are not familiar with Python, note the indentation before print (a tab or several spaces). The indentation marks where the body of the loop begins and ends. Run the script.

Now it is clearer how to get the date and the number of visits. Replace the print line inside the loop with:

```python
    print(str(day['dimensions'][0]['name']) + '\t' + str(day['metrics'][0]))
```
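The same expression can be wrapped in a small function and tried on a single element without calling the API. The element below is a hand-written illustration of what the loop receives, built only from the keys the article's code reads; `format_row` is a hypothetical name.

```python
def format_row(day):
    """Return 'date<TAB>visits' for one element of parsed['data']."""
    return str(day['dimensions'][0]['name']) + '\t' + str(day['metrics'][0])


# Hand-written sample element, not real API output
sample_day = {'dimensions': [{'name': '2015-08-10'}], 'metrics': [489.0]}

print(format_row(sample_day))
```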

Now let's add the ability to export data for several projects and write everything to a file, replacing the decimal point in the visit counts with a comma so that you do not have to do it when importing into Excel. In the example, you can put any number of project counters into the listOfSites list; here two identical ones are used purely for illustration. The file 'weekly_data.txt' is overwritten every time the script runs:

```python
import requests
import json

listOfSites = ['21075004', '21075004']
atoken = ''  # paste your authorization token here

f = open('weekly_data.txt', 'w')

for site in listOfSites:
    r = requests.get('https://api-metrika.yandex.ru/stat/v1/data?id=' + site + '&accuracy=full&date1=2015-08-10&date2=2015-08-16&group=day&dimensions=ym:s:date&metrics=ym:s:visits&sort=ym:s:date&oauth_token=' + atoken)
    parsed = json.loads(r.text)
    print(json.dumps(parsed, indent=4, sort_keys=True))

    for day in parsed['data']:
        # Show the row on screen and write it to the file with a decimal comma
        print(str(day['dimensions'][0]['name']) + '\t' + str(day['metrics'][0]))
        f.write(str(day['dimensions'][0]['name']) + '\t' + str(day['metrics'][0]).replace('.', ',') + '\n')

f.close()
```

After the run, you should have the file 'weekly_data.txt' with visits by day for the sites listed in listOfSites (in our example the numbers are simply repeated twice). It can easily be copied into Excel or any other tool you use for reporting.
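With several counters in one file, it can be hard to tell which rows belong to which site. One possible variation, sketched below, adds the counter number as a first column. It is not the article's script: `collect_rows` and `fake_fetch` are hypothetical names, and `fake_fetch` returns made-up numbers in place of a real request so that the example runs without a token.

```python
def collect_rows(sites, fetch):
    """Build tab-separated lines: counter, date, visits (with a decimal comma)."""
    rows = []
    for site in sites:
        parsed = fetch(site)  # parsed API response for one counter
        for day in parsed['data']:
            name = str(day['dimensions'][0]['name'])
            visits = str(day['metrics'][0]).replace('.', ',')
            rows.append('\t'.join([site, name, visits]))
    return rows


def fake_fetch(site):
    # Stand-in for the real request; the visit numbers are made up
    return {'data': [
        {'dimensions': [{'name': '2015-08-10'}], 'metrics': [489.0]},
        {'dimensions': [{'name': '2015-08-11'}], 'metrics': [512.0]},
    ]}


rows = collect_rows(['21075004'], fake_fetch)
print('\n'.join(rows))
```

In a real run, fetch would be a function that performs the requests.get call from the script above and returns json.loads(r.text).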

When you have finished, go to the AWS management console, right-click the instance and choose Instance state - Stop. Next time you will not have to go through the long setup: just choose Instance state - Start, and within a couple of minutes you can get straight back to the code.

With this simple approach and the Yandex.Metrica documentation, you can set up almost any export. I hope this guide saves you a lot of time and effort when exporting data.

Source: https://habr.com/ru/post/265383/
