C# - Modeling "reasonable" life based on neural networks

This article explores what neural networks can do when they serve as the individual mind of a modeled object.

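Objective: to find out whether a neural network (or this particular implementation of one) can perceive its "surrounding" world, learn on its own, and make decisions based on its own experience that can be considered relatively reasonable.

Tasks

- Describe and build an information model.

- Implement the model and its objects in a programming language.

- Implement the basic properties of rational creatures.

- Implement the thinking apparatus and the mechanisms by which the object "perceives" the model of the surrounding world.

- Implement the mechanism by which the model object interacts with the outside world and with other objects.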

1. Information model

To describe the information model, we first need to decide which basic characteristics we want the object to have. As the "main" characteristics of a rational being, I singled out the following:

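- The presence of mechanisms for perceiving the surrounding world;

- The presence of a mechanism for interacting with the outside world;

- The presence of memory;

- The presence of a thinking system (whatever it may be);

- The ability to make decisions about interacting with the outside world based on perceived information and experience.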

By the surrounding world we mean a plane with a Euclidean metric containing some finite number of simulated objects.

Perception mechanisms can vary. In this model each object has 4 sensors that provide information about the world around it. That information is a value proportional to the distance to the nearest other object. Because the 4 sensors are offset from the object's "center", comparing their readings gives the object (in theory) an idea of where the nearest object is.
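The sensor idea can be illustrated with a small C# sketch. It assumes the 4 sensors sit at a fixed `offset` to the right, left, top and bottom of the object's center, and that each reading is the distance from that sensor to the nearest other object multiplied by a `scale` factor. `Vec2`, `Sensors.Read`, `Sensors.Nearest`, `offset` and `scale` are hypothetical names chosen for illustration, not taken from the original source code.

```csharp
using System;
using System.Collections.Generic;

// A point (or vector) on the Euclidean plane where the objects live.
public readonly struct Vec2
{
    public readonly double X;
    public readonly double Y;

    public Vec2(double x, double y) { X = x; Y = y; }

    public static Vec2 operator +(Vec2 a, Vec2 b) => new Vec2(a.X + b.X, a.Y + b.Y);

    public static Vec2 operator *(Vec2 v, double k) => new Vec2(v.X * k, v.Y * k);

    // Euclidean distance between two points.
    public double DistanceTo(Vec2 other)
    {
        double dx = X - other.X, dy = Y - other.Y;
        return Math.Sqrt(dx * dx + dy * dy);
    }
}

public static class Sensors
{
    // Assumed sensor layout: right, left, top and bottom of the center.
    private static readonly Vec2[] Directions =
    {
        new Vec2(1, 0), new Vec2(-1, 0), new Vec2(0, 1), new Vec2(0, -1)
    };

    // One reading per sensor: scale * distance from that sensor to the nearest other object.
    public static double[] Read(Vec2 center, Vec2 nearest, double offset, double scale)
    {
        var readings = new double[Directions.Length];
        for (int i = 0; i < Directions.Length; i++)
        {
            Vec2 sensor = center + Directions[i] * offset;
            readings[i] = scale * sensor.DistanceTo(nearest);
        }
        return readings;
    }

    // Index of the object closest to positions[self], or -1 if there is no other object.
    public static int Nearest(IReadOnlyList<Vec2> positions, int self)
    {
        int best = -1;
        double bestDistance = double.PositiveInfinity;
        for (int i = 0; i < positions.Count; i++)
        {
            if (i == self) continue;
            double d = positions[self].DistanceTo(positions[i]);
            if (d < bestDistance) { bestDistance = d; best = i; }
        }
        return best;
    }
}
```

Because the sensors are offset, the one facing the nearest object reports the smallest value. For an object at the origin and a neighbor at (10, 0) with `offset = 1` and `scale = 1`, the right sensor reads 9 and the left one 11.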

Interaction works through 4 "movers", each of which sets how strongly the object tends to move in one direction. Four movers are enough to move freely within the plane, so in this model interaction means the ability to move through space.
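A minimal sketch of the movers follows. It assumes the movers are ordered right, left, up, down, that each value is clamped to [0, 1], and that the net velocity is the difference between opposing movers multiplied by a hypothetical `maxSpeed`. The article does not give the exact formula, so this is one possible reading rather than the author's code.

```csharp
using System;

public static class Movers
{
    // Keeps a mover value inside the assumed [0, 1] range.
    private static double Clamp01(double v) => Math.Max(0.0, Math.Min(1.0, v));

    // movers = { right, left, up, down }; opposing movers cancel each other out.
    public static (double Dx, double Dy) Velocity(double[] movers, double maxSpeed)
    {
        if (movers.Length != 4)
            throw new ArgumentException("Expected exactly 4 mover values.", nameof(movers));
        double dx = Clamp01(movers[0]) - Clamp01(movers[1]);
        double dy = Clamp01(movers[2]) - Clamp01(movers[3]);
        return (dx * maxSpeed, dy * maxSpeed);
    }

    // One movement step: advance the position by the velocity for a single tick.
    public static (double X, double Y) Step(double x, double y, double[] movers, double maxSpeed)
    {
        var (dx, dy) = Velocity(movers, maxSpeed);
        return (x + dx, y + dy);
    }
}
```

With this layout, `{ 1, 0, 0, 1 }` moves the object diagonally right and down, while equal right and left values leave the horizontal position unchanged.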

Memory is implemented as follows: the object stores its sensor readings and the current "accelerations" of its movers. From an array of such records one can reconstruct the full history of what happened to the object.
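Memory can be sketched as a bounded list of records, each holding a copy of the sensor readings and mover values at one moment. The `ObjectMemory` and `MemoryRecord` classes and the capacity limit are assumptions for illustration; the article only says that such records are stored.

```csharp
using System;
using System.Collections.Generic;

// One remembered moment: what the sensors saw and how the movers were set.
public sealed class MemoryRecord
{
    public double[] Sensors { get; }
    public double[] Movers { get; }

    public MemoryRecord(double[] sensors, double[] movers)
    {
        // Copy the arrays so later changes to the live buffers do not rewrite the past.
        Sensors = (double[])sensors.Clone();
        Movers = (double[])movers.Clone();
    }
}

// Bounded memory: once capacity is reached, the oldest record is dropped.
public sealed class ObjectMemory
{
    private readonly Queue<MemoryRecord> _records = new Queue<MemoryRecord>();

    public int Capacity { get; }
    public int Count => _records.Count;

    public ObjectMemory(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        Capacity = capacity;
    }

    public void Save(double[] sensors, double[] movers)
    {
        if (_records.Count == Capacity) _records.Dequeue();
        _records.Enqueue(new MemoryRecord(sensors, movers));
    }

    // History from oldest to newest, e.g. for building a training set.
    public IReadOnlyList<MemoryRecord> History() => _records.ToArray();
}
```

Bounding the memory keeps training time per step roughly constant; an unbounded list would also match the article's description.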

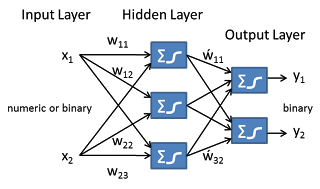

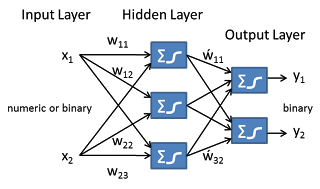

The thinking system is a neural network in one configuration or another.

The ability to make decisions comes from the neural network architecture, while experience and perceived information come from the memory and the sensors.

For the decisions to mean anything, we give the objects "aspirations":

1. "Eat" another object (in our case, an object is "eaten" by another one if the other comes close enough and is "strong enough").

2. "Do not be eaten": in our case, try not to let other objects get close, so that they have no chance to "eat" you.

To judge the "strength" of the nearest object, we add one more input channel that reports whether the nearest object is stronger.
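The two aspirations and the extra strength channel could look like the sketch below. It assumes that "strong enough" means strictly stronger, that eating happens within a hypothetical `eatRadius`, and that the network input is the 4 sensor readings followed by the strength channel; `Rules` and its members are illustrative names, not the original code.

```csharp
using System;

public static class Rules
{
    // An object is eaten when the hunter is within eatRadius and strictly stronger (assumed rule).
    public static bool CanEat(double hunterStrength, double preyStrength, double distance, double eatRadius) =>
        distance <= eatRadius && hunterStrength > preyStrength;

    // The extra input channel: 1.0 if the nearest object is stronger than me, otherwise 0.0.
    public static double StrongerChannel(double myStrength, double nearestStrength) =>
        nearestStrength > myStrength ? 1.0 : 0.0;

    // Network input: the 4 sensor readings followed by the strength channel (5 values).
    public static double[] BuildInput(double[] sensors, double myStrength, double nearestStrength)
    {
        if (sensors.Length != 4)
            throw new ArgumentException("Expected 4 sensor readings.", nameof(sensors));
        var input = new double[5];
        Array.Copy(sensors, input, 4);
        input[4] = StrongerChannel(myStrength, nearestStrength);
        return input;
    }
}
```

The strength channel is what lets the same sensor pattern mean "chase" or "flee"; without it (as in Example 1) the network cannot tell a prey from a predator.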

Thus, the described object model satisfies all the requirements.

2. Implementation of the model

The model is implemented in C#. The Encog Machine Learning Framework provides the neural networks: it is flexible and fast, not to mention easy to use.

The model code is quite simple. It is not the only possible implementation, and anyone can extend and change the model.

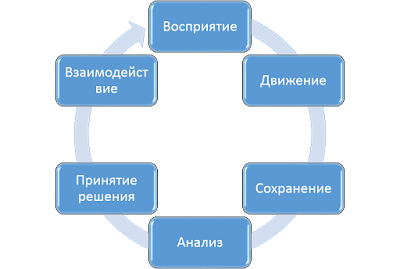

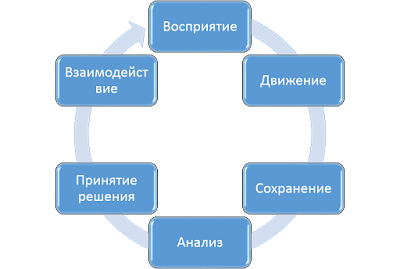

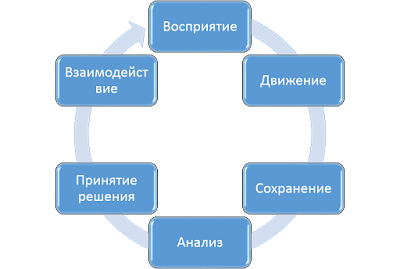

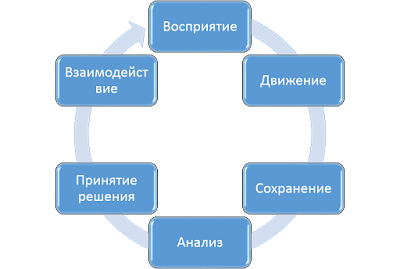

In this implementation, an object's behavior is built around the DoLive() function, in which the object goes through the following life stages in turn:

RefreshSense - update the sensor data (look around);

Move - take a step (move in space);

SaveToMemory - update the memory, remembering the current situation;

Train - "learn" by analyzing the memory;

Compute - decide on the mover settings based on experience and information about the nearest object;

Output - set the movers according to the decision.

Every object repeats this cycle throughout its entire existence.
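The DoLive() cycle can be sketched as follows. The stage order matches the list of life stages, but the neural network is replaced by two hypothetical delegates (`train` and `compute`) so that any network, Encog or otherwise, can be plugged in. The `sense` callback, `MemoryEntry` and the `trainEvery` parameter (an assumed way to model the "inertness" of thinking from Example 2) are also illustrative, not the author's code.

```csharp
using System;
using System.Collections.Generic;

// One remembered moment: the network input and the mover values at that tick.
public sealed class MemoryEntry
{
    public double[] Input { get; }
    public double[] Movers { get; }

    public MemoryEntry(double[] input, double[] movers)
    {
        Input = (double[])input.Clone();
        Movers = (double[])movers.Clone();
    }
}

public sealed class Creature
{
    private readonly Func<Creature, double[]> _sense;            // hypothetical: sensors + strength channel
    private readonly Action<IReadOnlyList<MemoryEntry>> _train;  // hypothetical: brain learns from memory
    private readonly Func<double[], double[]> _compute;          // hypothetical: input -> 4 mover values
    private readonly int _trainEvery;                            // assumed "inertness": train every N ticks
    private readonly List<MemoryEntry> _memory = new List<MemoryEntry>();
    private int _tick;

    public double X { get; private set; }
    public double Y { get; private set; }
    public double MaxSpeed { get; set; } = 1.0;
    public double[] Input { get; private set; } = Array.Empty<double>();
    public double[] Movers { get; private set; } = new double[4]; // right, left, up, down
    public IReadOnlyList<MemoryEntry> Memory => _memory;

    public Creature(Func<Creature, double[]> sense, Action<IReadOnlyList<MemoryEntry>> train,
                    Func<double[], double[]> compute, int trainEvery = 1)
    {
        if (trainEvery < 1) throw new ArgumentOutOfRangeException(nameof(trainEvery));
        _sense = sense;
        _train = train;
        _compute = compute;
        _trainEvery = trainEvery;
    }

    // One life cycle, in the order RefreshSense, Move, SaveToMemory, Train, Compute, Output.
    public void DoLive()
    {
        RefreshSense();
        Move();
        SaveToMemory();
        if (_tick % _trainEvery == 0) Train();
        double[] decision = Compute();
        Output(decision);
        _tick++;
    }

    private void RefreshSense() => Input = _sense(this);

    // Uses the mover values chosen by Output on the previous tick.
    private void Move()
    {
        X += (Movers[0] - Movers[1]) * MaxSpeed;
        Y += (Movers[2] - Movers[3]) * MaxSpeed;
    }

    private void SaveToMemory() => _memory.Add(new MemoryEntry(Input, Movers));

    private void Train() => _train(_memory);

    private double[] Compute() => _compute(Input);

    private void Output(double[] decision)
    {
        if (decision.Length != 4)
            throw new InvalidOperationException("The brain must return 4 mover values.");
        Movers = (double[])decision.Clone();
    }
}
```

In this ordering, Move uses the movers set by Output on the previous tick, so each decision takes effect one step after it is made.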

3. Model Verification

To see the simulation results, we need to capture the state of the model at every step. Since the model is dynamic, video is the most effective format. Output to a video file is implemented with the AForge.Video.FFMPEG library.

Example 1. Without information about the strength of the nearest object (the objects have no incentive to avoid being eaten).

Example 2. High "inertness" of thinking (the object stops less often to reassess the world around it and analyze its experience).

Example 3. The final implementation

Example 4. With "breeding" and "hunting" functions.

Example 5. Extended model: "genetic" inheritance of "successful" genes, gene mutations during inheritance, and greater genetic diversity (16 neuron activation types, different numbers and configurations of layers).

Example 6. Extended perception: objects perceive not only the nearest object but other objects as well.

Example 7. Partial inheritance of memory from the "parent".
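Examples 5 and 7 can be illustrated with a sketch of a genome and of partial memory inheritance. It assumes the genome consists of hidden-layer sizes plus an index into a table of 16 activation types, that each gene mutates with probability `mutationRate`, and that a child keeps each of the parent's memory records with probability `fraction`. `Genome`, `Heredity` and all parameter names are hypothetical, and turning a genome into an actual Encog network is left out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical genome: hidden-layer sizes plus an index into a table of 16 activation types.
public sealed class Genome
{
    public const int ActivationTypes = 16;

    public int[] HiddenLayers { get; }
    public int Activation { get; }

    public Genome(int[] hiddenLayers, int activation)
    {
        if (hiddenLayers.Length == 0 || hiddenLayers.Any(n => n < 1))
            throw new ArgumentException("Need at least one hidden layer with at least one neuron.", nameof(hiddenLayers));
        if (activation < 0 || activation >= ActivationTypes)
            throw new ArgumentOutOfRangeException(nameof(activation));
        HiddenLayers = (int[])hiddenLayers.Clone();
        Activation = activation;
    }

    // Child genome: copy the parent's genes, mutating each one with probability mutationRate.
    public Genome Inherit(Random rng, double mutationRate)
    {
        var layers = HiddenLayers.ToList();
        for (int i = 0; i < layers.Count; i++)
        {
            if (rng.NextDouble() < mutationRate)
                layers[i] = Math.Max(1, layers[i] + rng.Next(-2, 3)); // resize by -2..+2 neurons
        }

        if (rng.NextDouble() < mutationRate)
        {
            // Structural mutation: remove a layer (if more than one) or insert one with 1..10 neurons.
            if (layers.Count > 1 && rng.NextDouble() < 0.5)
                layers.RemoveAt(rng.Next(layers.Count));
            else
                layers.Insert(rng.Next(layers.Count + 1), rng.Next(1, 11));
        }

        int activation = rng.NextDouble() < mutationRate ? rng.Next(ActivationTypes) : Activation;
        return new Genome(layers.ToArray(), activation);
    }
}

public static class Heredity
{
    // Example 7 sketch: the child keeps each parent memory record with probability fraction, in order.
    public static List<T> InheritMemory<T>(IReadOnlyList<T> parentMemory, double fraction, Random rng) =>
        parentMemory.Where(_ => rng.NextDouble() < fraction).ToList();
}
```

With `mutationRate = 0` the child is an exact copy of the parent; higher rates trade stability of "successful" genes for diversity.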

4. Conclusions

The objects were given a minimum of information: there were no rules about movement or mover control, only information about the surrounding space and objects and feedback about their own state. This turned out to be enough for the objects to "learn" on their own to find and "eat" the "weak" and to "run away" from the "strong".

In general, neural networks can be used to model behavior that looks reasonably intelligent.

Neural networks in modeling may also have practical value. For example, to control game objects it is enough to describe their "capabilities", and the objects will "live" their lives, using those capabilities to their own benefit. The same principle could be applied in robotics: give the program access to the sensor and control systems and set a goal, and thanks to memory and a neural network the device can "learn" to manage the available systems on its own so that the goal is achieved.

All materials and source code are free and available to anyone.

I hope this article will help those who want to learn how to use neural networks.

P.S. Another interesting article on the same topic demonstrates that the idea works: a sufficiently complex neural network can learn to use whatever it has at its disposal.


Source: https://habr.com/ru/post/265201/
