Testing flash storage. Huawei Dorado 2100 G2

Our series of articles on testing flash storage systems would not be complete without a Huawei product. To be honest, a Dorado had already been in our lab once before, almost immediately after it became available in Russia. This time, following the "wishes of the workers", we collected more than enough data and are pleased to present it to you.

The test bench consists of an IBM x3750 M4 server connected directly to a Huawei OceanStor Dorado 2100 G2 storage system via four 8 Gb FC links.


Testing method

The testing addressed the following tasks:

- studying storage performance degradation under a sustained write load (write cliff);

- studying Huawei Dorado G2 storage performance under different load profiles in two configurations (R5 and R0).

Testbed Configuration

Figure 1. Block diagram of the test bench.

In addition, Symantec Storage Foundation 6.2 is installed on the test server, providing:

- logical volume management (Veritas Volume Manager);

- fault-tolerant multipath connections to the disk arrays (Dynamic Multi-Pathing).


The following settings were applied on the test server to reduce disk I/O latency:


- The I/O scheduler was changed from "cfq" to "noop" by setting the scheduler parameter of the Symantec VxVolume volume to noop;

- The parameter "vxvm.vxio.vol_use_rq = 0" was added to /etc/sysctl.conf. It minimizes the queue size at the level of the Symantec logical volume manager;

- The limit of simultaneous I/O requests to the device was raised to 1024 by setting the nr_requests parameter of the Symantec VxVolume volume to 1024;

- The check for merging I/O operations (iomerge) was disabled by setting the nomerges parameter of the Symantec VxVolume volume to 1;

- The queue depth on the FC adapters was increased by adding the option ql2xmaxqdepth=64 (options qla2xxx ql2xmaxqdepth=64) to the /etc/modprobe.d/modprobe.conf configuration file.
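The host-side changes above can be collected into a small script. This is a minimal sketch. It assumes the VxVM volume is visible as a regular block device under /sys/block/<dev>/queue, and the device name `VxVM0` and the helper names are hypothetical. The script only prints the commands and config lines and does not apply them.

```python
"""Print the host-side latency tuning described above (sketch, nothing is applied)."""

# Lines for the two configuration files mentioned in the text.
SYSCTL_LINE = "vxvm.vxio.vol_use_rq = 0"            # /etc/sysctl.conf
MODPROBE_LINE = "options qla2xxx ql2xmaxqdepth=64"  # /etc/modprobe.d/modprobe.conf


def tuning_plan(dev_name: str) -> dict[str, str]:
    """Map sysfs queue attributes of the volume device to the values used in the tests."""
    queue = f"/sys/block/{dev_name}/queue"
    return {
        f"{queue}/scheduler": "noop",    # replace the default cfq scheduler
        f"{queue}/nr_requests": "1024",  # allow up to 1024 queued requests
        f"{queue}/nomerges": "1",        # 1 = only simple one-hit merges are tried
    }


def print_plan(dev_name: str) -> None:
    """Print shell commands for the sysfs writes, plus the config-file lines."""
    for path, value in tuning_plan(dev_name).items():
        print(f"echo {value} > {path}")
    print(f"# add to /etc/sysctl.conf: {SYSCTL_LINE}")
    print(f"# add to /etc/modprobe.d/modprobe.conf: {MODPROBE_LINE}")


if __name__ == "__main__":
    print_plan("VxVM0")  # hypothetical device name
```

According to the Linux block-layer documentation, nomerges=0 enables all merges, 1 tries only simple one-hit merges, and 2 disables merging entirely. The value 1 used in the tests therefore reduces merge work rather than eliminating it.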

The following settings are applied on the storage system during the tests:

- the SSDs are configured as RAID 5 (for the R5 tests) or RAID 0 (for the R0 tests);

- 8 LUNs of equal size are created. Their total capacity covers either the entire usable capacity (for tests on a fully provisioned storage system) or 70% of it (for tests on a partially provisioned system). The LUN block size is 512 bytes. The LUNs are created so that half of them (four LUNs) are owned by one storage controller (A) and the other half by the other controller (B). The LUNs are presented to the test server;

- depending on the test group, write cache is either enabled on all LUNs (write back) or disabled (write through). The setting used is given in each test's description in the testing program (1.5).
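The LUN layout can be expressed as a small planning function. This sketch assumes a hypothetical usable capacity in GiB. It alternates ownership between the controllers, although the article only says that four LUNs belong to each controller and does not give the order. Integer arithmetic avoids floating-point rounding in the 70% case.

```python
from dataclasses import dataclass


@dataclass
class Lun:
    name: str
    size_gib: int
    owner: str  # owning controller: "A" or "B"


def plan_luns(usable_gib: int, fill_percent: int = 100, count: int = 8) -> list[Lun]:
    """Split fill_percent of the usable capacity into `count` equal LUNs.

    Owners alternate between controllers A and B, so each controller owns half.
    """
    if count % 2:
        raise ValueError("an even LUN count is needed to split ownership evenly")
    per_lun = usable_gib * fill_percent // 100 // count  # whole GiB, rounded down
    return [Lun(f"lun{i}", per_lun, "A" if i % 2 == 0 else "B") for i in range(count)]


if __name__ == "__main__":
    for lun in plan_luns(8000, fill_percent=70):  # hypothetical 8000 GiB usable
        print(lun)
```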

Software used in the testing process

Synthetic load on the storage system is generated with the Flexible IO Tester (fio) utility, version 2.1.10. All synthetic tests use the following fio parameters in the [global] section:

- thread = 0

- direct = 1

- group_reporting = 1

- norandommap = 1

- time_based = 1

- randrepeat = 0

- ramp_time = 3

The following utilities are used to collect performance metrics under synthetic load:

- iostat, part of the sysstat package version 9.0.4, with the txk options;

- vxstat, part of Symantec Storage Foundation 6.2, with the svd options;

- vxdmpadm, part of Symantec Storage Foundation 6.2, with the -q iostat options;

- fio version 2.1.10, to generate a summary report for each load profile.

During each test, iostat, vxstat and vxdmpadm collect metrics at 5-second intervals.
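For reference, the [global] parameters above can be rendered into a fio job file. This is a minimal sketch. The helper name is hypothetical, and the comments paraphrase the fio documentation for each option.

```python
"""Render the fio [global] section used by all synthetic tests (sketch)."""

GLOBAL_OPTIONS = {
    "thread": 0,           # 0: jobs run as forked processes, not threads
    "direct": 1,           # O_DIRECT: bypass the host page cache
    "group_reporting": 1,  # report each group as a whole rather than per job
    "norandommap": 1,      # pick random offsets without tracking blocks already hit
    "time_based": 1,       # keep running for the full runtime
    "randrepeat": 0,       # do not reuse the same random sequence on every run
    "ramp_time": 3,        # seconds of warm-up excluded from the statistics
}


def render_section(name: str, options: dict) -> str:
    """Format one fio job-file section as 'key=value' lines."""
    lines = [f"[{name}]"] + [f"{key}={value}" for key, value in options.items()]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_section("global", GLOBAL_OPTIONS), end="")
```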

Testing program

The tests are performed by using fio to create a synthetic load on a block device: a logical volume of type "stripe, 8 column, stripe unit size=1MiB", created with Veritas Volume Manager from the 8 LUNs presented by the system under test.
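Plain RAID-0 style address arithmetic shows how fio's I/O is spread over the LUNs. This sketch assumes that Veritas Volume Manager places consecutive stripe units round-robin across the columns, and the function names are illustrative. Any aligned request of 1 MiB or less falls into a single stripe unit and therefore a single LUN. A random small-block load is spread across all eight LUNs only statistically.

```python
"""Where a volume offset lands on an 8-column, 1 MiB stripe (sketch)."""

STRIPE_UNIT = 1024 * 1024  # 1 MiB
COLUMNS = 8                # one column per LUN


def locate(offset: int, stripe_unit: int = STRIPE_UNIT, columns: int = COLUMNS) -> tuple[int, int]:
    """Return (column index, byte offset within that column) for a volume offset."""
    unit = offset // stripe_unit  # which stripe unit overall
    column = unit % columns       # units rotate across the columns
    row = unit // columns         # full stripes before this unit
    return column, row * stripe_unit + offset % stripe_unit


def columns_touched(offset: int, length: int, stripe_unit: int = STRIPE_UNIT,
                    columns: int = COLUMNS) -> set[int]:
    """Set of columns (LUNs) touched by an I/O of `length` bytes starting at `offset`."""
    first = offset // stripe_unit
    last = (offset + length - 1) // stripe_unit
    return {unit % columns for unit in range(first, last + 1)}


if __name__ == "__main__":
    print(locate(8 * STRIPE_UNIT + 4096))         # wraps back to column 0, second row
    print(columns_touched(STRIPE_UNIT - 4096, 8192))  # straddles columns 0 and 1
```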


Group 1: Tests with a sustained write load and a sustained mixed load at varying I/O block sizes

When creating a test load, the following fio program parameters are used (in addition to those defined in section 1.4):

- rw = randwrite

- blocksize = [4K 8K 16K 32K 64K 1M] (to study how the storage system behaves under load at different I/O block sizes)

- numjobs = 64

- iodepth = 64
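The parameters above can be turned into one fio job per block size. This is a minimal sketch. The device path `/dev/vx/dsk/testdg/testvol` is hypothetical. The choice of `libaio` is also an assumption: an asynchronous engine is needed for iodepth=64 to have an effect. The [global] options are expected to come from a shared section. Only the write tests are generated; the mixed tests would use rw=randrw with the appropriate rwmixread.

```python
"""Generate the Group 1 fio jobs: one sustained random-write run per block size (sketch)."""

BLOCK_SIZES = ["4k", "8k", "16k", "32k", "64k", "1m"]
DEVICE = "/dev/vx/dsk/testdg/testvol"  # hypothetical VxVM volume path


def group1_job(bs: str, runtime_s: int = 40 * 60) -> dict:
    """Options for one Group 1 write test."""
    return {
        "filename": DEVICE,
        "ioengine": "libaio",  # assumption: async engine so that iodepth takes effect
        "rw": "randwrite",
        "blocksize": bs,
        "numjobs": 64,
        "iodepth": 64,
        "runtime": runtime_s,  # 40 minutes, as in the write tests
    }


def render_job(bs: str, **kwargs) -> str:
    """Format one job section as 'key=value' lines."""
    options = group1_job(bs, **kwargs)
    body = "\n".join(f"{key}={value}" for key, value in options.items())
    return f"[randwrite-{bs}]\n{body}\n"


if __name__ == "__main__":
    for bs in BLOCK_SIZES:
        print(render_job(bs))
```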

The group consists of tests that differ in the total capacity of the LUNs presented from the storage system under test, the RAID configuration, the use of write cache, and the load direction:

- Write tests on a fully provisioned storage system in the R5 configuration with variable block size (4, 8, 16, 32, 64, 1024K). Write cache is disabled on every LUN. Each test lasts 40 minutes, with a 1-hour interval between tests.

- The same, with write cache enabled.

- Write tests on a partially provisioned storage system (total LUN capacity is 70% of usable capacity) in the R5 configuration with variable block size (4, 8, 16, 32, 64, 1024K). Write cache is disabled on every LUN.

- Tests with a sustained mixed load (50% write, 50% read) on a fully provisioned storage system in the R5 configuration with variable block size (4, 8, 16, 32, 64, 1024K). Write cache is disabled on every LUN. Each test lasts 20 minutes, with a 40-minute interval between tests.

- Tests with a sustained mixed load (70% write, 30% read) on a fully provisioned storage system in the R5 configuration with variable block size (4, 8, 16, 32, 64, 1024K). Write cache is disabled on every LUN. Each test lasts 20 minutes, with a 40-minute interval between tests.

- Write tests on a fully provisioned storage system in the R0 configuration with variable block size (4, 8, 16, 32, 64, 1024K). Write cache is disabled on every LUN. Each test lasts 40 minutes, with a 1-hour interval between tests.

Whenever a test requires changing the RAID configuration of the storage system, the following steps are taken. After the RAID level is changed and the LUNs on the storage system and the volume on the server are created, the volume is prefilled with fio 2.1.10 using a 16K block. The amount of data written is twice the volume's capacity.

From the data reported by the vxstat command, graphs combining the test results are generated:

- IOPS as a function of time;

- bandwidth as a function of time.

The data are analyzed to draw conclusions about:

- whether performance degrades under a sustained write load and under a sustained mixed load;

- the throughput of the storage system's service processes (garbage collection), which limits the array's write performance during a sustained peak load, in different storage configurations (R5 and R0);

- the impact of the write cache on the throughput of the storage service processes (garbage collection);

- how strongly the I/O block size affects the throughput of the storage service processes;

- the amount of space the storage system reserves to smooth out the work of its service processes;

- the impact of storage fill level on the throughput of the service processes;

- the influence of the storage service processes (garbage collection) on read performance.
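Detecting degradation comes down to finding the point where the vxstat IOPS curve falls off and then averaging what follows. This is a minimal sketch. It assumes the per-interval IOPS values have already been parsed from vxstat output. The 50% drop threshold is an arbitrary assumption, not a criterion from the article, and the sample series is synthetic.

```python
"""Locate the write cliff in an IOPS time series sampled every 5 s (sketch)."""

from statistics import mean


def find_write_cliff(iops: list[float], interval_s: int = 5, drop_ratio: float = 0.5):
    """Return (seconds until the cliff, mean IOPS from the cliff onward), or None.

    The cliff is taken as the first sample below drop_ratio times the highest
    value seen so far. The post-cliff mean approximates sustained performance.
    """
    peak = 0.0
    for i, value in enumerate(iops):
        if peak and value < drop_ratio * peak:
            return i * interval_s, mean(iops[i:])
        peak = max(peak, value)
    return None


if __name__ == "__main__":
    # Synthetic series for illustration only: 30 samples at 100k IOPS, then 45k IOPS.
    samples = [100_000] * 30 + [45_000] * 50
    print(find_write_cliff(samples))
```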

Group 2: Disk array performance tests under different load types at the block device level, R5 storage configuration

The following load types are investigated:

- load profiles (varied fio parameters: rw, rwmixread):

- 100% random write;

- 30% random write, 70% random read;

- 100% random read.

- block sizes: 4KB, 8KB, 16KB, 32KB, 64KB, 1MB (varied fio parameter: blocksize);

- I/O processing methods: synchronous, asynchronous (varied fio parameter: ioengine);

- number of load-generating processes: 1, 2, 4, 8, 16, 32, 64, 128, 256 (varied fio parameter: numjobs);

- queue depth (for asynchronous I/O): 32, 64 (varied fio parameter: iodepth).

The test group consists of tests covering all combinations of the load types above. Each test lasts 1 minute. The storage system's service processes (garbage collection) could otherwise skew the results, so a pause is inserted between tests. The pause equals the amount of data written during the test divided by the throughput of the service processes, as determined from the results of the first group of tests.
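Written out, the pause rule is pause = (average write throughput × test duration) / GC throughput. This is a minimal sketch. The 400 MB/s write rate in the example is hypothetical. The 180 MB/s GC figure for 4K blocks in RAID 5 comes from the Group 1 results. For pure read tests, the written volume is zero, so the formula gives no pause.

```python
"""Pause between Group 2 tests: data written / GC throughput (sketch)."""


def pause_seconds(avg_write_mb_s: float, test_duration_s: float, gc_mb_s: float) -> float:
    """Seconds to wait so garbage collection can absorb what the test wrote."""
    written_mb = avg_write_mb_s * test_duration_s
    return written_mb / gc_mb_s


if __name__ == "__main__":
    # Hypothetical: a 1-minute 4K test averaging 400 MB/s of writes on RAID 5,
    # where Group 1 measured GC throughput of 180 MB/s for 4K blocks.
    print(round(pause_seconds(400, 60, 180)))
```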


All of the above tests are first performed with write cache disabled on all LUNs presented from the storage system.

From the fio output collected after each test, graphs are generated for each combination of load types (load profile, I/O processing method, queue depth). Each graph combines the tests at different I/O block sizes:

- IOPS as a function of the number of load-generating processes;

- bandwidth as a function of the number of load-generating processes;

- latency (clat) as a function of the number of load-generating processes.

The results are analyzed to draw conclusions about:

- the array's load characteristics at latencies of about 1 ms or less;

- its maximum performance;

- its performance under a single-threaded load;

- the effect of the cache on storage performance.
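The "maximum performance at latency below 1 ms" and "single-process" tables each reduce an IOPS-versus-processes curve to one point. This is a minimal sketch. It assumes the per-test IOPS and mean clat values have already been extracted from fio's reports. The `Point` type is hypothetical and the example data is synthetic.

```python
"""Pick the highest-IOPS point whose latency stays within a limit (sketch)."""

from typing import NamedTuple, Optional


class Point(NamedTuple):
    numjobs: int
    iops: float
    clat_ms: float  # mean completion latency reported by fio


def best_under_latency(points: list[Point], limit_ms: float = 1.0) -> Optional[Point]:
    """Return the point with the most IOPS among those with clat <= limit_ms."""
    eligible = [p for p in points if p.clat_ms <= limit_ms]
    return max(eligible, key=lambda p: p.iops, default=None)


def single_threaded(points: list[Point]) -> Optional[Point]:
    """Return the jobs = 1 point, used for the single-process tables."""
    return next((p for p in points if p.numjobs == 1), None)


if __name__ == "__main__":
    # Synthetic curve for illustration only.
    curve = [Point(1, 20_000, 0.6), Point(8, 90_000, 0.9), Point(32, 120_000, 2.1)]
    print(best_under_latency(curve), single_threaded(curve))
```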

Group 3: Disk array performance tests under different load types at the block device level, R0 storage configuration

The tests are conducted in the same way as the Group 2 tests, with the storage system in the R0 configuration. From the fio output collected after each test, graphs are generated for each combination of load types (load profile, I/O processing method, queue depth). Each graph combines the tests at different I/O block sizes:

- IOPS as a function of the number of load-generating processes;

- bandwidth as a function of the number of load-generating processes;

- latency (clat) as a function of the number of load-generating processes.

The results are analyzed to draw conclusions about the load characteristics of the array in R0 at latencies of about 1 ms or less, its maximum performance in R0, and its performance under a single-threaded load.

Test results

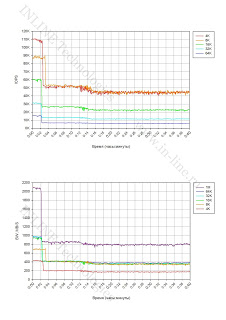

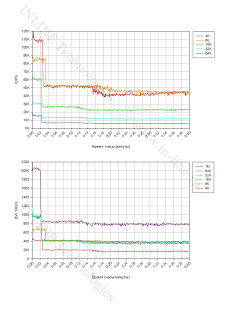

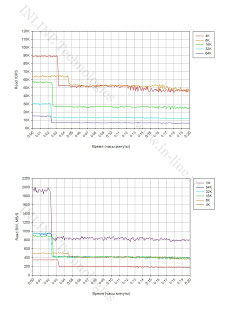

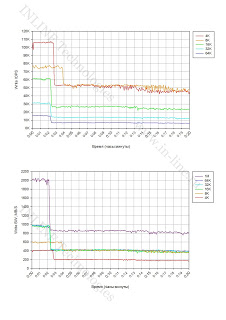

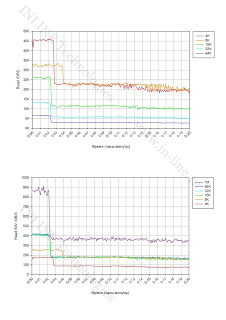

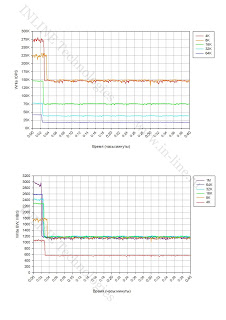

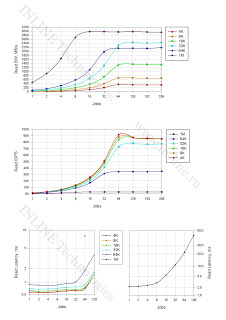

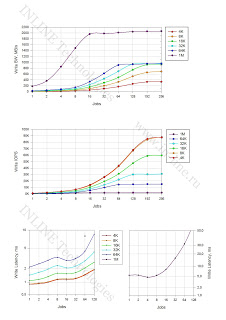

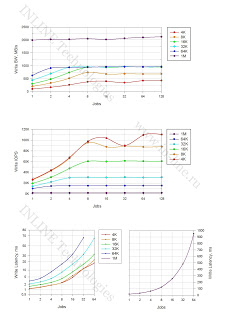

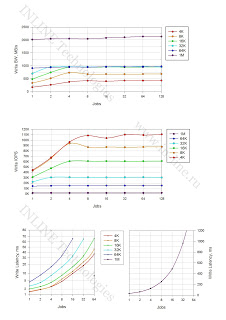

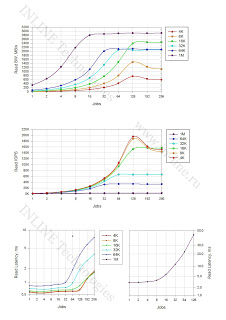

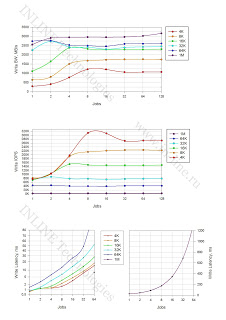

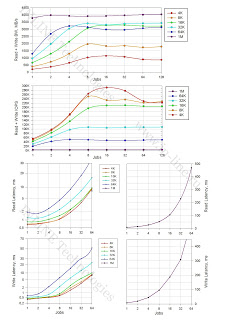

Group 1: Tests with a sustained write load and a sustained mixed load at varying I/O block sizes

Charts

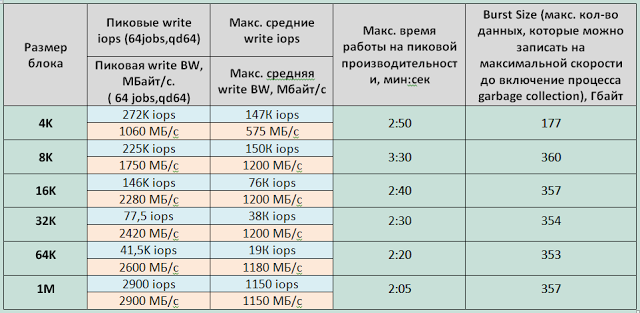

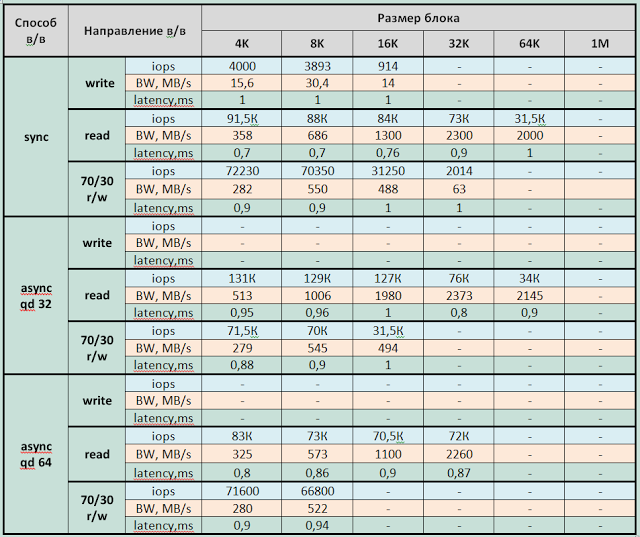

Tables 1-2.

Table 1. Dependence of storage performance on block size under a sustained write load, RAID 5 configuration.

Table 2. Dependence of storage performance on block size under a sustained write load, RAID 0 configuration.

Main conclusions:

1. While filling the array with data, we noticed that formatting (RAID initialization) happens only as data is written. As a result, the first write is slow.

2. Under a sustained write load, storage performance degrades significantly at a certain point. The drop is expected. It is characteristic of SSDs (the write cliff) and occurs when garbage collection (GC) processes kick in, because their throughput is limited. The array performance recorded after the write cliff (after the drop) can be regarded as the array's maximum sustained average performance. The drop occurs after 2-3 minutes of peak write load. This compares unfavorably with disk arrays from other vendors and indicates only a small reserve of space for absorbing peak write loads.

3. The I/O block size affects the throughput of the garbage collection (GC) process.

In the RAID 5 configuration:

- for small blocks (4K), GC throughput is 180 MB/s (45,000 IOPS);

- for medium blocks (8-64K), GC throughput is 350 MB/s;

- for large blocks (1M), GC throughput is 800 MB/s.

In the RAID 0 configuration:

- for small blocks (4K), GC throughput is 575 MB/s (143,750 IOPS);

- for medium and large blocks (8-64K), GC throughput is 1200 MB/s.

4. The controller cache does not affect the storage system's behavior under a sustained peak write load. The write cliff occurs at the same moment whether or not the array's write cache is used (Graph 2). Performance after the drop is also independent of the cache.

5. Storage fill level does not affect the GC processes (Graph 3).

6. For a load profile with frequent intensive writes lasting 2-3 minutes or more, an 8K block is the most efficient.

7. The sustained mixed-load tests show that when write performance drops, read performance drops proportionally. This is most likely because the fio processes generated the load in exactly the specified proportions (70/30 or 50/50). In practice, this behavior can be expected when write and read processes depend on each other.

8. The sustained mixed-load tests showed that write performance under a mixed load is the same as under a pure write load. We conclude that read operations do not affect garbage collection performance.

9. Write performance (both before and after the drop) is significantly higher in the RAID 0 configuration.
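The IOPS figures in parentheses in item 3 follow from dividing throughput by block size. This is a minimal sketch. It shows that 180 MB/s / 4 KB = 45,000 IOPS and 575 MB/s / 4 KB = 143,750 IOPS hold only if 1 MB is counted as 1000 KB. With binary units, the RAID 5 figure would be 46,080 IOPS.

```python
"""Convert throughput to IOPS for a given block size (sketch)."""


def mb_s_to_iops(mb_per_s: float, block_kb: float, kb_per_mb: int = 1000) -> float:
    """IOPS = throughput / block size; kb_per_mb=1000 matches the article's figures."""
    return mb_per_s * kb_per_mb / block_kb


if __name__ == "__main__":
    print(mb_s_to_iops(180, 4))                  # RAID 5, 4K GC throughput
    print(mb_s_to_iops(575, 4))                  # RAID 0, 4K GC throughput
    print(mb_s_to_iops(180, 4, kb_per_mb=1024))  # same RAID 5 figure, binary units
```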

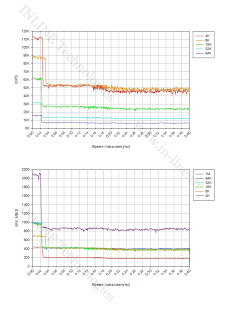

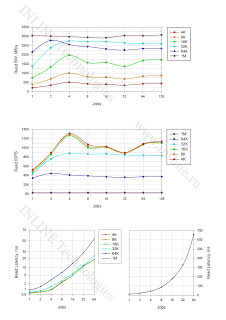

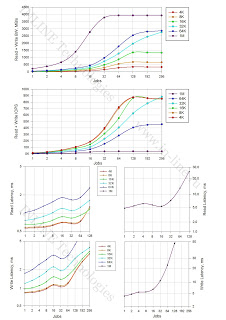

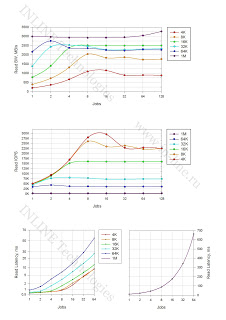

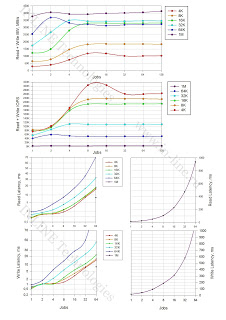

Group 2: Disk array performance tests under different load types at the block device level, R5 storage configuration

Charts

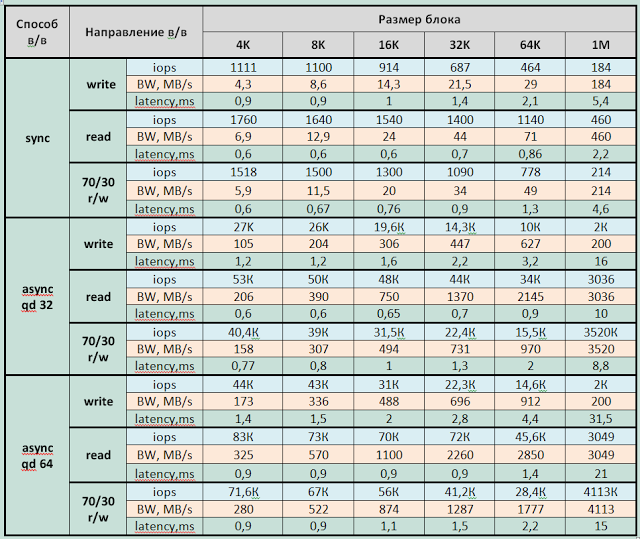

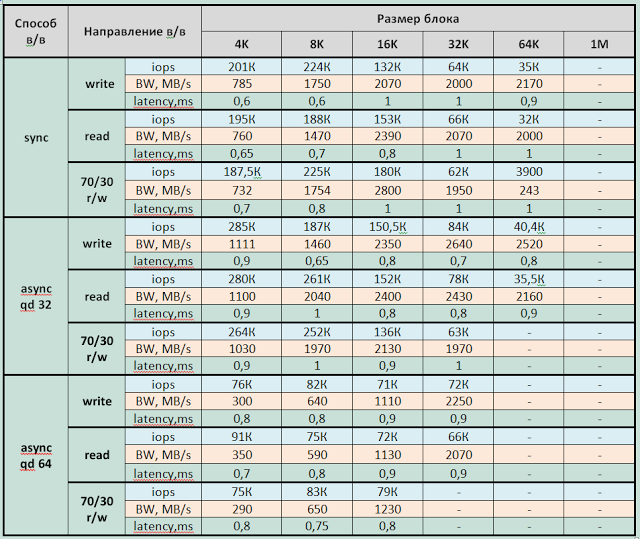

Tables 3-5.

Table 3. Storage performance in the RAID 5 configuration with one load-generating process (jobs = 1).

Table 4. Maximum storage performance in the RAID 5 configuration at latencies below 1 ms.

Table 5. Maximum storage performance in the RAID 5 configuration under different load profiles.

Main conclusions:

1. Maximum recorded performance of the storage system in the RAID 5 configuration (averaged over each 1-minute test):

Write:

- 110,000 IOPS at 4.7 ms latency (4K block, async, qd32 and qd64);

- with synchronous I/O: 88,000 IOPS at 2.5 ms latency (4K and 8K blocks);

- bandwidth: 2130 MB/s for large blocks (1MB), about 1000 MB/s for medium blocks (16-64K).

Read:

- 140,000 IOPS at 1.8 ms latency (4K and 8K blocks, async, qd64);

- with synchronous I/O: 91,000 IOPS at 0.7 ms latency (4K block);

- bandwidth: 3160 MB/s for large blocks.*

Mixed load (70/30 rw):

- 132,000 IOPS at 1.9 ms latency (4K and 8K blocks, async, qd64);

- with synchronous I/O: 87,000 IOPS at 1.4 ms latency (4K, 8K and 16K blocks);

- bandwidth: 4100 MB/s for large blocks (1MB) and about 3000 MB/s for 64K and 32K blocks.*

* The disk array is connected to the test server via four 8 Gb/s links, so these values are most likely not the array's limit. The limiting factor was the bandwidth of the links to the server.
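The footnote can be checked with simple arithmetic. 8 Gb Fibre Channel (8GFC) is nominally rated at about 800 MB/s per port in each direction, so four ports give roughly 3,200 MB/s per direction. The 3,160-3,260 MB/s read results are close to that ceiling. Fibre Channel is full duplex, so a mixed load can exceed the one-direction figure: reads and writes travel in opposite directions. This is a minimal sketch. It assumes the 70/30 profile means 70% reads, as in the testing program, and uses the nominal rather than the measured link rate.

```python
"""Nominal host-link bandwidth for four 8 Gb FC ports (sketch)."""

FC8_MB_S = 800  # nominal 8GFC throughput per port and per direction


def link_limit_mb_s(ports: int = 4, per_port_mb_s: int = FC8_MB_S) -> int:
    """Aggregate one-direction bandwidth of the host links."""
    return ports * per_port_mb_s


def split_by_direction(total_mb_s: float, read_fraction: float) -> tuple[float, float]:
    """Split a mixed load into (read MB/s toward the host, write MB/s toward the array)."""
    return total_mb_s * read_fraction, total_mb_s * (1 - read_fraction)


if __name__ == "__main__":
    limit = link_limit_mb_s()
    reads, writes = split_by_direction(4100, 0.7)  # RAID 5 mixed-load 1MB result
    print(f"per-direction limit {limit} MB/s; mixed load: {reads:.0f} read, {writes:.0f} write")
```

Under these assumptions, the 4,100 MB/s mixed result puts about 2,870 MB/s on the read direction and 1,230 MB/s on the write direction. Both are within the per-direction limit, which is consistent with the total exceeding the pure-read figure.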

Minimum latency recorded:

- write: 0.9 ms for the 4K block, jobs = 1, 2, sync;

- read: 0.6 ms for 4K and 8K blocks, jobs = 1-8, sync.

2. The storage system reaches saturation:

- with asynchronous I/O, at 2-4 jobs on 32K and 64K blocks and at 8 jobs on small and medium blocks (4-16K);

- with synchronous I/O, at 128-192 jobs.

3. On 1MB blocks, the maximum throughput is twice the maximum obtained on medium blocks (16-64K).

4. The array delivers the same number of I/O operations on 4K and 8K blocks and slightly fewer on 16K blocks. The optimal block sizes for this array are 8K and 16K.

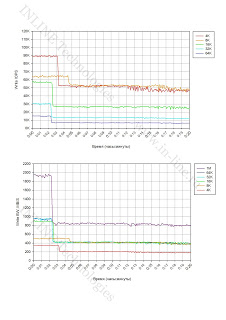

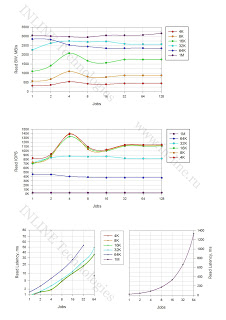

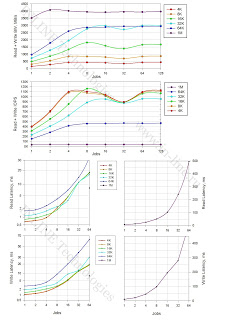

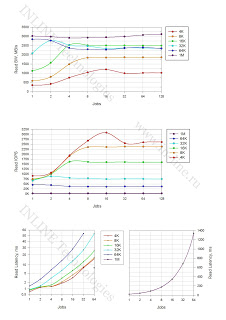

Group 3: Disk array performance tests under different load types at the block device level, R0 storage configuration

Charts

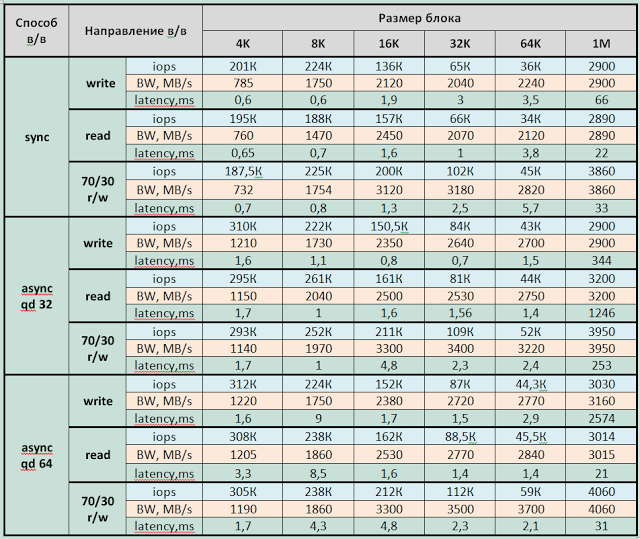

Tables 6-8.

Table 6. Storage performance in the RAID 0 configuration with one load-generating process (jobs = 1).

Table 7. Maximum storage performance in the RAID 0 configuration at latencies below 1 ms.

Table 8. Maximum storage performance in the RAID 0 configuration under different load profiles.

Main conclusions:

1. Maximum recorded performance of the storage system in the RAID 0 configuration (averaged over each 1-minute test run):

Write:

- 311,000 IOPS at 1.6 ms latency (4K block, async, qd32 and qd64);

- with synchronous I/O: 190,000 IOPS at 0.7 ms latency (4K and 8K blocks);

- bandwidth: 3160 MB/s for large blocks (1MB), about 2500 MB/s for medium blocks (16-64K).*

Read:

- 310,000 IOPS at 3.3 ms latency (4K block, async, qd64);

- with synchronous I/O: 91,000 IOPS at 0.7 ms latency (4K block);

- bandwidth: 3260 MB/s for large blocks.*

* The disk array is connected to the test server via four 8 Gb/s links, so these values are most likely not the array's limit. The limiting factor was the bandwidth of the links to the server.

Mixed load (70/30 rw):

- 305,000 IOPS at 1.2 ms latency (4K block, async, qd64);

- with synchronous I/O: 225,000 IOPS at 0.95 ms latency (4K blocks);

- bandwidth: 4140 MB/s for large blocks (1MB, 64K, 32K).

Minimum latency recorded:

- write: 0.35 ms for the 4K block, jobs = 1, 2, sync;

- read: 0.6 ms for 4K and 8K blocks, jobs = 1, sync.

2. The storage system reaches saturation:

- with asynchronous I/O, at 2-4 jobs on 32K and 64K blocks and at 8 jobs on small and medium blocks (4-16K);

- with synchronous I/O, at 64-128 jobs.

3. On 1MB blocks, the maximum throughput is twice the maximum obtained on medium blocks (16-64K).

4. In the RAID 0 configuration, the array delivers the same number of I/O operations on 4K and 8K blocks and slightly fewer on 16K blocks. The optimal block sizes for this array are 8K and 16K.

Findings

We came away from working with the array with pleasant impressions. Its performance was higher than expected. The array has a classic storage architecture: two controllers, standard RAID levels and ordinary eMLC SSDs. As a result, it costs less than other flash storage systems but has higher I/O processing latency.

Huawei Dorado G2 is well suited to multi-threaded workloads that are not very latency-sensitive.

Source: https://habr.com/ru/post/265143/
