Creating a tester for match-3 games

While working on a match-3 project, sooner or later the question arises: how do you assess the difficulty of the levels you have created and the overall balance of the game?

Even with a large number of testers in the team, it is simply unrealistic to collect detailed statistics for each level (and there are hundreds of them in modern games). Obviously, the testing process must somehow be automated.

Below is a story about how we did it in our indie match-3 game, on which we are now finishing work. (Caution - footwoman!)

To automate the testing of levels, we decided to program a “bot” that would meet the following requirements:

')

Binding to the game engine - the bot must “use” the same blocks of code that are used during the standard game. In this case, you can be sure that both the bot and the real player will play the same game (that is, they will deal with the same logic, mechanics, bugs, and so on).

Scalability - you need a bot to test not only existing levels, but also levels that can be created in the future (given that new types of chips, cells, enemies, etc. can be added to the game in the future) . This requirement largely overlaps with the previous one.

Performance - to collect statistics on one level, you need to “play” it at least 1000 times, and hundreds of levels - then the bot must play and collect statistics quickly enough so that the analysis of one level does not take all day.

Reliability - the moves of the bot should be more or less close to the moves of the average player.

The first three points speak for themselves, but how to make the bot play “humanly”?

Before starting to work on our project, we went through about 1000 levels of varying degrees of difficulty in different match3 games (two or three games even went completely). And having considered this experience, we decided to dwell on the next approach to creating levels.

At once I will make a reservation that this decision does not claim to be the ultimate truth, and is not without flaws, but it helped us build a bot that meets the requirements described above.

We decided to proceed from the fact that a good level is a kind of task for finding the optimal strategy - the level designer creating the level “guessing” the optimal sequence of actions, and the player must guess it. If he guesses correctly, then the level is passed relatively easily. If the player acts “out of order”, then he is more likely to fall into a stalemate.

For example, let them give a level in which there are “breeding” chips (if you don’t collect a group of chips near them, then they will capture one of the adjacent cells before the next player’s move). The goal of the level is to drop certain chips into the bottom row of the board. Our game is devoted to sea adventures (aha, again!) Therefore it is required to lower to the bottom of divers, and corals play the role of breeding chips:

If the player does not take the time to destroy the corals from the very beginning, and instead will immediately try to lower the divers, then the corals are likely to grow and make the level impassable. Therefore, in this case, we can say that the strategy that the Level Designer “made” is approximately like this: “Destroy the corals as soon as possible, and then lower the divers”.

Thus, the strategy of passing the level, both from the level of the designer, and from the player, is to prioritize between the various tactical actions (hereinafter I will just call them tactics). The set of these tactics is approximately the same for all games of the genre:

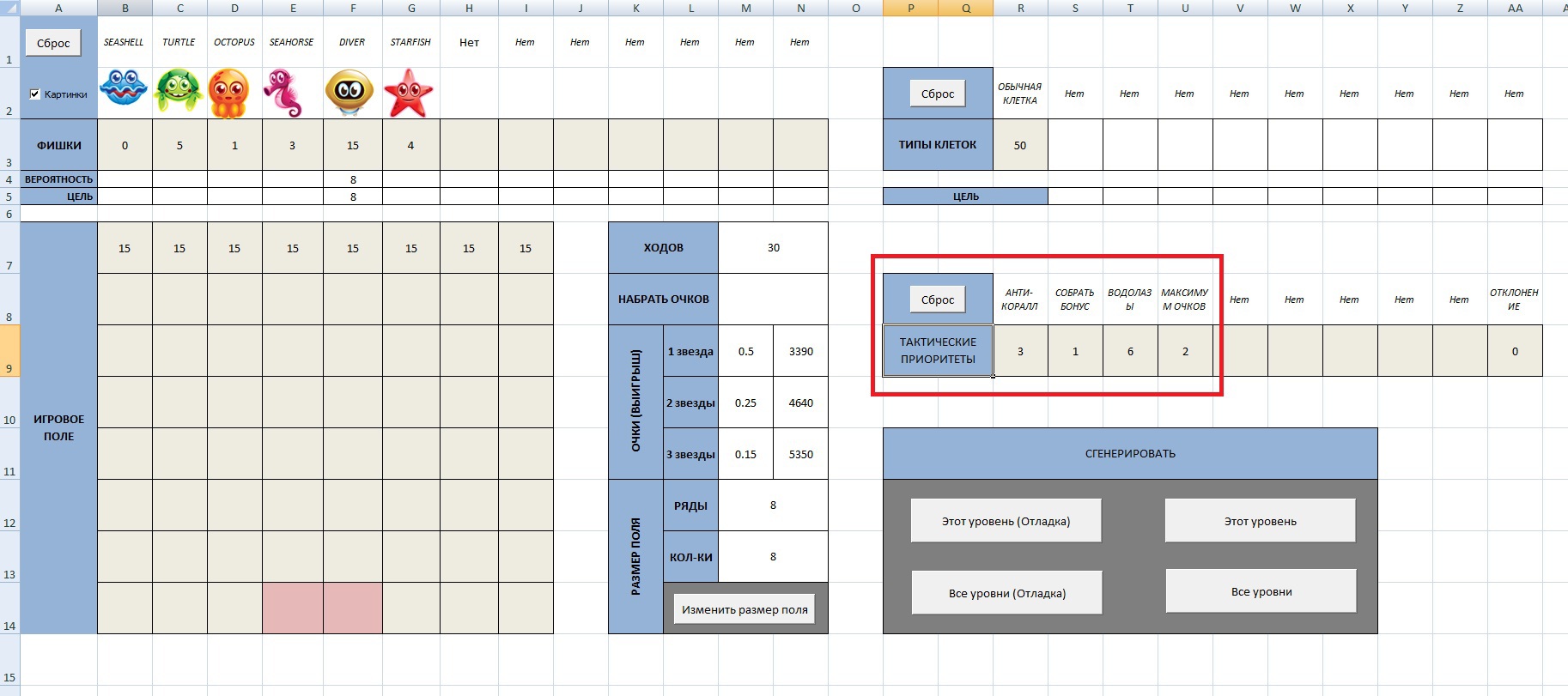

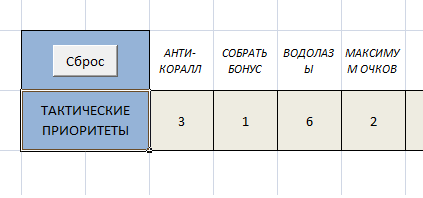

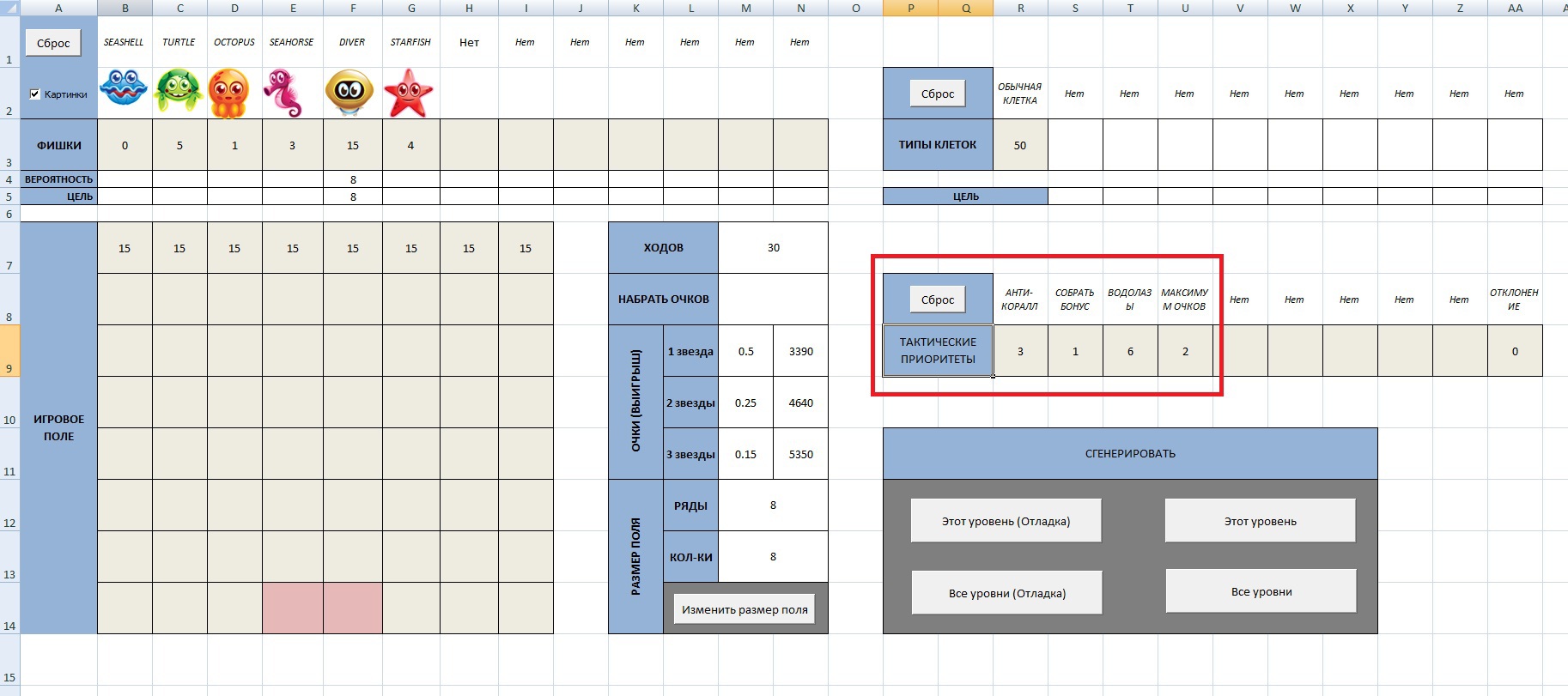

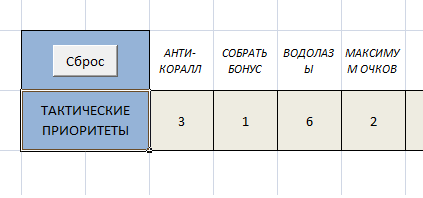

The possibility of setting these priorities for each level, we added to our level editor based on Excel:

Level-designer creates a level and sets tactical priorities for the bot - the generated XML file will contain all the necessary information about both the level and how to test it. As you can see in the picture, to solve the level, the following prioritization is assumed:

When testing the level, the bot must take these priorities into account and select the appropriate moves. Having decided on a strategy, it is already easy to program a bot.

The bot works according to the following simple algorithm:

The task of determining whether the course corresponds to a tactical priority will not be discussed in detail here. In short, when creating an array of possible moves, the parameters of each move are saved (how many chips were collected, what type of chips were there, whether a bonus chip was “created”, how many points were scored, etc.) and then these parameters are checked for compliance priorities.

After the bot "wins back" the level a specified number of times, it displays statistics on the results: how many times the level was won, how many moves it took on average, how many points were scored, etc. Based on this information, it will be possible to decide whether the level complexity corresponds to the desired balance. For example, part of the printout for the level shown in the pictures above:

It is seen that the level is lost in 63% of cases. This is a quantitative assessment of this level, on which you can already rely when balancing and determining the order of levels in the game.

Above, we assumed that the player, choosing the next move, analyzes the moves available in this position and from them chooses the one that best suits the most priority tactics at the moment. And based on this assumption, we asked the logic for the bot.

But there are two assumptions here:

It turns out that a bot built on the basis of these assumptions will not play as an average, but as an “optimal” player, who is well acquainted with all the subtleties of the game. And focus on the statistics collected by this bot can not. What to do?

Obviously, you need to introduce some kind of correction factor that would make the actions of the bot less “ideal”. We decided to dwell on a simple version - to make part of the bot moves just random.

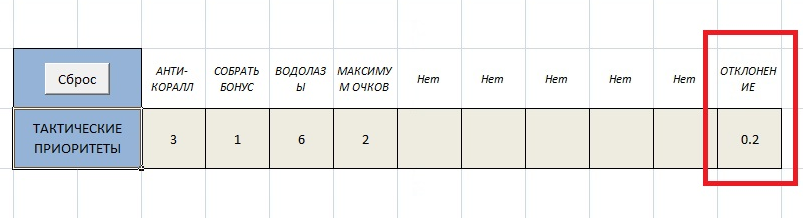

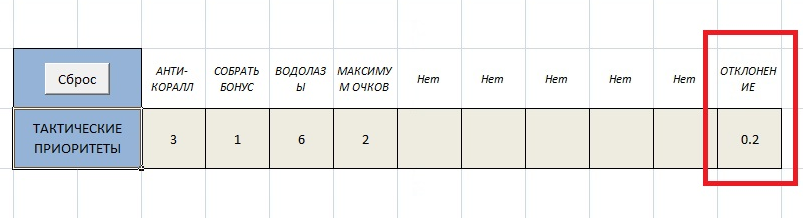

The coefficient determining the number of random moves again sets the level designer based on his goals. We called this coefficient “deviation from the strategy” - let's set it for this level equal to 0.2:

A deviation of 0.2 means that with a probability of 0.2 (or in other words in 20% of cases) the bot will simply choose a random move among those on the board. Let's see how the level statistics changed with this deviation (the previous statistics were calculated when the deviation equals zero - that is, the bot exactly followed the specified priorities):

The percentage of lost levels is expected to increase by 15% (from 63 to 78). The average completeness of the lost level (the percentage lowered to the bottom of divers) also expectedly fell. But the average number of moves when winning, curiously, has not changed.

Statistics show that this level is quite complicated: 24 out of 30 moves must be well thought out (30 * 0.2 = 6 moves can be made “for nothing”), and even in this case, the player will lose in 78% of cases.

The question remains - where did the coefficient of 0.2 for this level come from? What are the coefficients for other levels? We decided to leave this to the discretion of the level designer.

The meaning of this coefficient is very simple: “the number of rash moves a player can make at this level”. If you need a simple level for the initial stage of the game, which should be easily passed by any player, then you can balance the level with this coefficient equal to 0.9 or even 1. If you need a complex or very difficult level, for the passage of which the player must exert maximum efforts and skills , then balancing can be carried out with a small or even zero deviation from the optimal strategy.

And finally, a few words about the speed of work.

We have made the bot part of the game engine - depending on the flag set, the program either waits for a move from the player, or the moves are made by the bot.

The first version of the bot turned out to be quite slow - it took more than an hour for 1000 runs of a level of 30 moves, even though in the test mode all graphic effects were turned off and the chips moved between cells instantly.

Since all the game logic is tied to the rendering cycle, and it is limited to 60 frames per second, to speed up the tester's work, it was decided to disable vertical synchronization and release the FPS. We use the LibGDX framework in which it is done this way (it may be useful to someone):

After that, the bot quickly ran at a speed of almost 1000 frames per second! For most levels, this is enough to make 1000 level “runs” in less than 5 minutes. To be honest, I would like to be even faster, but you can already work with this.

Here you can see the video of the bot (unfortunately, when recording video, FPS drops to about 120):

As a result, we have a bot tied to the game engine - there is no need to maintain a separate code tester.

If in the future new mechanics are added to the game, the bot can be easily taught to test them - all that is needed is to add identifiers for new tactics to the level editor and enter additional parameters into the code when analyzing moves.

The hardest thing, of course, is to assess how the behavior of the bot corresponds to the behavior of the average player. But this will be the weak side of any approach to building an automatic tester, so no one, of course, has canceled testing on living people (especially from the target audience).

Even with a large team of testers, it is simply unrealistic to collect detailed statistics for every level (and modern games have hundreds of them). Clearly, the testing process has to be automated somehow.

Below is the story of how we did it in our indie match-3 game, which we are now finishing. (Warning: long read!)

Problem statement

To automate the testing of levels, we decided to program a “bot” that would meet the following requirements:

Engine integration: the bot must use the same code paths that run during a normal game. That way you can be sure the bot and a real player are playing the same game (that is, they deal with the same logic, mechanics, bugs, and so on).

Extensibility: the bot must be able to test not only existing levels but also levels created later (bearing in mind that new types of chips, cells, enemies, and so on may be added to the game). This requirement largely overlaps with the previous one.

Performance: collecting statistics for one level takes at least 1000 playthroughs, and there are hundreds of levels, so the bot must play and gather statistics fast enough that analyzing one level does not take all day.

Realism: the bot's moves should be reasonably close to those of an average player.

The first three points speak for themselves, but how do you make the bot play like a human?

Bot strategy. First approximation

Before starting work on our project, we played through about 1000 levels of varying difficulty in different match-3 games (two or three of those games we even completed). Reflecting on that experience, we settled on the following approach to creating levels.

Let me say up front that this approach does not claim to be the ultimate truth and is not without flaws, but it helped us build a bot that meets the requirements described above.

We started from the premise that a good level is a kind of puzzle about finding the optimal strategy: the level designer builds an optimal sequence of actions into the level, and the player has to work it out. If the player works it out, the level is passed relatively easily. If the player acts in the wrong order, they are likely to end up in a dead end.

For example, suppose we have a level with "breeding" chips (if you do not collect a group of chips next to them, they capture one of the adjacent cells before the player's next move). The goal of the level is to bring certain chips down to the bottom row of the board. Our game has a sea adventure theme (yes, again!), so the chips to bring down are divers, and corals play the role of the breeding chips.

If the player does not take the time to destroy the corals at the very start and instead immediately tries to lower the divers, the corals will probably spread and make the level impossible to complete. So in this case, the strategy the level designer had in mind is roughly: "Destroy the corals as quickly as possible, then lower the divers."

Thus, the strategy for passing a level, from both the level designer's and the player's point of view, comes down to prioritizing various tactical actions (from here on I will simply call them tactics). The set of tactics is roughly the same across all games in the genre:

- collect as many chips as possible (to score points)

- collect chips of a certain type

- destroy obstructing chips or cells

- create bonus chips

- attack the boss

- bring certain chips down to the bottom

- and so on

We added the ability to set these priorities for each level to our Excel-based level editor.

The level designer creates a level and sets the tactical priorities for the bot; the generated XML file then contains all the necessary information about both the level and how to test it. For the example level, the following priority order is assumed:

- Destroy the corals (ANTI-CORAL)

- Create bonuses (COLLECT BONUS)

- Lower the divers (VODOLAZY)

- Pick the highest-scoring moves (MAXIMUM POINTS)

When testing the level, the bot must take these priorities into account and choose its moves accordingly. Once the strategy is defined, programming the bot is straightforward.
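The article does not show the XML format, so the following is only a sketch of how a priority list might be read from a level file. The `<tactic>` element name and the `LevelPriorities` class are assumptions made for illustration; only the tactic identifiers come from the list above.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;

final class LevelPriorities {
    /**
     * Reads tactic identifiers from a level description, in document order.
     * The <tactic> element is a hypothetical format, not the game's real one.
     */
    static List<String> parse(String levelXml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(levelXml.getBytes(StandardCharsets.UTF_8)));
            // getElementsByTagName returns matches in document order,
            // so the order in the file is the priority order.
            NodeList nodes = doc.getElementsByTagName("tactic");
            List<String> priorities = new ArrayList<>();
            for (int i = 0; i < nodes.getLength(); i++) {
                priorities.add(nodes.item(i).getTextContent().trim());
            }
            return priorities;
        } catch (Exception e) {
            // Wrap checked parser exceptions so callers get one unchecked type
            throw new IllegalArgumentException("Malformed level XML", e);
        }
    }
}
```

Keeping the priorities in the same file as the level layout means a level and its test settings cannot drift apart, which matches the idea of a single generated XML file.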

Bot algorithm

The bot works according to the following simple algorithm:

1. Load the priority list for the level from the XML file.

2. Save all currently possible moves to an array.

3. Take the next priority from the list.

4. Check whether the array contains any moves that match the current priority.

5. If it does, choose the best of those moves.

6. If not, go to step 3.

7. After the move is made, go to step 2.

We will not go into detail here on how to determine whether a move matches a tactical priority. In short, when the array of possible moves is built, the parameters of each move are stored (how many chips it collects, which types of chips, whether a bonus chip is created, how many points it scores, and so on), and these parameters are then checked against the priorities.
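The selection loop and the parameter check described above might look roughly like the sketch below. The `Move` fields, the `Tactic` interface, and the scoring rules are hypothetical; the article does not show the real data structures, so treat this as one possible shape rather than the game's actual code.

```java
import java.util.List;

/** Hypothetical record of one candidate move and its predicted outcome. */
final class Move {
    final int coralsDestroyed;
    final boolean createsBonus;
    final int diversLowered;
    final int points;

    Move(int coralsDestroyed, boolean createsBonus, int diversLowered, int points) {
        this.coralsDestroyed = coralsDestroyed;
        this.createsBonus = createsBonus;
        this.diversLowered = diversLowered;
        this.points = points;
    }
}

/** A tactic scores a move; a score of 0 or less means the move does not serve it. */
interface Tactic {
    int score(Move move);
}

/** Assumed scoring rules for the four tactics of the example level. */
final class Tactics {
    static final Tactic ANTI_CORAL = m -> m.coralsDestroyed;
    static final Tactic COLLECT_BONUS = m -> m.createsBonus ? 1 : 0;
    static final Tactic LOWER_DIVERS = m -> m.diversLowered;
    static final Tactic MAXIMUM_POINTS = m -> m.points;
}

final class PriorityBot {
    /**
     * Walks the priorities in order and returns the best move for the first
     * tactic that at least one move serves. Returns null if there are no moves.
     */
    static Move chooseMove(List<Tactic> priorities, List<Move> possibleMoves) {
        for (Tactic tactic : priorities) {
            Move best = null;
            for (Move move : possibleMoves) {
                int score = tactic.score(move);
                if (score > 0 && (best == null || score > tactic.score(best))) {
                    best = move;
                }
            }
            if (best != null) {
                return best;
            }
        }
        // No tactic matched any move: fall back to the first move, if any
        return possibleMoves.isEmpty() ? null : possibleMoves.get(0);
    }
}
```

Because each tactic is just a scoring function over stored move parameters, supporting a new mechanic in this sketch would mean adding a field to `Move` and one more `Tactic`.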

After the bot has played the level the specified number of times, it prints statistics on the results: how many times the level was won, how many moves it took on average, how many points were scored, and so on. Based on this information you can decide whether the level's difficulty matches the desired balance. For example, here is part of the printout for the level described above:

```
LEVEL STATISTICS:
====================================
MOVE LIMIT: 30
NUMBER OF RUNS: 1000
PERCENT LOST: 63% (630 RUNS)
AVERAGE COMPLETION OF LOST RUNS: 61%
AVERAGE NUMBER OF MOVES WHEN WON: 24
------------------------------------
POINTS:
-------------------
MINIMUM SCORE: 2380
MAXIMUM SCORE: 7480
-------------------
------------------------------------
RUN STATISTICS:
-------------------
0. LOSS (87.0% COMPLETED)
1. LOSS (12.0% COMPLETED)
2. LOSS (87.0% COMPLETED)
3. LOSS (87.0% COMPLETED)
4. LOSS (87.0% COMPLETED)
5. VICTORY (LEVEL COMPLETED IN 26 MOVES. 2 STARS COLLECTED.)
6. LOSS (75.0% COMPLETED)
7. VICTORY (LEVEL COMPLETED IN 26 MOVES. 3 STARS COLLECTED.)
...
```

We can see that the level is lost in 63% of runs. This is a quantitative measure of the level's difficulty that you can already rely on when balancing the game and deciding the order of levels.
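The headline figures in such a printout are simple aggregates over the individual runs. A minimal sketch of that aggregation follows; `RunResult` and `LevelStats` are hypothetical names, and the completion value is assumed to be a fraction between 0 and 1.

```java
import java.util.List;

/** Outcome of a single bot playthrough (hypothetical structure). */
final class RunResult {
    final boolean won;
    final int movesUsed;
    final double completion; // share of the level goal reached, 0.0 to 1.0

    RunResult(boolean won, int movesUsed, double completion) {
        this.won = won;
        this.movesUsed = movesUsed;
        this.completion = completion;
    }
}

/** Aggregated figures corresponding to the top of the printout. */
final class LevelStats {
    final int runs;
    final int losses;
    final double percentLost;
    final double avgCompletionOfLost;
    final double avgMovesWhenWon;

    private LevelStats(int runs, int losses, double percentLost,
                       double avgCompletionOfLost, double avgMovesWhenWon) {
        this.runs = runs;
        this.losses = losses;
        this.percentLost = percentLost;
        this.avgCompletionOfLost = avgCompletionOfLost;
        this.avgMovesWhenWon = avgMovesWhenWon;
    }

    static LevelStats of(List<RunResult> results) {
        int wins = 0;
        int losses = 0;
        long movesInWins = 0;
        double completionInLosses = 0.0;
        for (RunResult r : results) {
            if (r.won) {
                wins++;
                movesInWins += r.movesUsed;
            } else {
                losses++;
                completionInLosses += r.completion;
            }
        }
        int runs = results.size();
        // Guard every division so empty categories yield 0 instead of NaN
        return new LevelStats(
                runs,
                losses,
                runs == 0 ? 0.0 : 100.0 * losses / runs,
                losses == 0 ? 0.0 : completionInLosses / losses,
                wins == 0 ? 0.0 : (double) movesInWins / wins);
    }
}
```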

But who says the player will act that way?

Above, we assumed that the player, when choosing the next move, analyzes the moves available in the current position and picks the one that best fits the highest-priority tactic at that moment. We built the bot's logic on this assumption.

But two assumptions are hidden here, and neither quite holds:

- A real player hardly analyzes every move on the board; more likely they pick the first reasonably good move they find. And even a player who tries to consider every move may still miss a good one.

- A player cannot always identify and order the priorities correctly. That takes good knowledge of the particular game and its mechanics, so you cannot expect optimal play on every single move. (This is the lesser problem, though, because the player learns from mistakes and eventually works out what matters for passing the level and what can wait.)

It turns out that a bot built on these assumptions plays not like an average player but like an "optimal" one who knows all the subtleties of the game. The statistics collected by such a bot cannot be relied on. What can we do?

Bot strategy. Second approximation

Clearly, we need some correction factor that makes the bot's play less "ideal". We settled on a simple option: make some of the bot's moves purely random.

The coefficient that determines the share of random moves is, again, set by the level designer according to their goals. We called it "deviation from the strategy". Let's set it to 0.2 for this level.

A deviation of 0.2 means that with probability 0.2 (in other words, in 20% of cases) the bot simply picks a random move from those available on the board. Let's see how the level statistics change with this deviation (the previous statistics were computed with a deviation of zero, that is, the bot followed the specified priorities exactly):

```
LEVEL STATISTICS:
====================================
MOVE LIMIT: 30
NUMBER OF RUNS: 1000
PERCENT LOST: 78% (780 RUNS)
AVERAGE COMPLETION OF LOST RUNS: 56%
AVERAGE NUMBER OF MOVES WHEN WON: 24
------------------------------------
POINTS:
-------------------
MINIMUM SCORE: 2130
MAXIMUM SCORE: 7390
-------------------
...
```

As expected, the share of lost runs rose by 15 percentage points (from 63% to 78%). The average completion of lost runs (the share of divers brought to the bottom) also fell, as expected. Curiously, though, the average number of moves in winning runs did not change.

The statistics show that this level is quite hard: on average, 24 of the 30 moves must be well thought out (30 × 0.2 = 6 moves can be made carelessly), and even then the player loses in 78% of cases.

The question remains: where did the coefficient of 0.2 for this level come from? And what should the coefficients for other levels be? We decided to leave this to the level designer's discretion.

The meaning of the coefficient is simple: "the share of careless moves a player can afford on this level". If you need an easy level for the early game that any player should pass without trouble, you can balance it with the coefficient set to 0.9 or even 1. If you need a hard or very hard level that demands the player's full effort and skill, you can balance it with a small or even zero deviation from the optimal strategy.
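The deviation coefficient described in this section can be sketched as a thin wrapper around whatever move-selection strategy the bot uses. `DeviatingBot` and its parameters are hypothetical names; the sketch assumes the list of possible moves is never empty.

```java
import java.util.List;
import java.util.Random;
import java.util.function.Function;

/** Wraps a strategy and replaces a share of its moves with random ones. */
final class DeviatingBot<M> {
    private final double deviation;
    private final Random rng;
    private final Function<List<M>, M> strategy;

    /**
     * @param deviation probability (0.0 to 1.0) of ignoring the strategy on a move
     * @param rng       random source; pass a seeded instance for repeatable runs
     * @param strategy  picks the "ideal" move from a non-empty list
     */
    DeviatingBot(double deviation, Random rng, Function<List<M>, M> strategy) {
        if (deviation < 0.0 || deviation > 1.0) {
            throw new IllegalArgumentException("deviation must be in [0, 1]");
        }
        this.deviation = deviation;
        this.rng = rng;
        this.strategy = strategy;
    }

    M chooseMove(List<M> possibleMoves) {
        // nextDouble() is uniform in [0, 1), so this branch runs with
        // probability equal to the deviation coefficient
        if (rng.nextDouble() < deviation) {
            return possibleMoves.get(rng.nextInt(possibleMoves.size()));
        }
        return strategy.apply(possibleMoves);
    }
}
```

Note that a random pick can coincide with the strategy's own choice, so the share of moves that actually differ from the ideal one is somewhat below the coefficient.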

Performance

Finally, a few words about speed.

We made the bot part of the game engine: depending on a flag, the program either waits for a move from the player or lets the bot make the moves.
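One way to arrange such a switch is to hide the origin of moves behind a small interface, so the rest of the engine does not care who is playing. This is only a sketch: `MoveSource`, `PlayerInput`, `BotInput`, and `Engine` are hypothetical names, and moves are represented as plain strings for brevity.

```java
import java.util.ArrayDeque;
import java.util.Queue;

/** Anything that can hand the engine its next move. */
interface MoveSource {
    /** Returns the next move, or null if none is available yet. */
    String pollMove();
}

/** Human input: moves arrive from input handlers and wait in a queue. */
final class PlayerInput implements MoveSource {
    private final Queue<String> pending = new ArrayDeque<>();

    void onSwipe(String move) {
        pending.add(move);
    }

    @Override
    public String pollMove() {
        return pending.poll(); // null while the player has not moved
    }
}

/** Bot input: a move is always ready (placeholder for the real selection logic). */
final class BotInput implements MoveSource {
    @Override
    public String pollMove() {
        return "bot-move";
    }
}

final class Engine {
    private final MoveSource source;
    int movesApplied = 0;

    /** The flag decides once, at start-up, who supplies the moves. */
    Engine(boolean botMode, PlayerInput player) {
        this.source = botMode ? new BotInput() : player;
    }

    /** Called once per frame; applies a move if one is available. */
    void update() {
        String move = source.pollMove();
        if (move != null) {
            movesApplied++; // the shared game logic would run here for both modes
        }
    }
}
```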

The first version of the bot turned out to be quite slow: 1000 runs of a 30-move level took more than an hour, even though in test mode all graphical effects were turned off and chips moved between cells instantly.

Since all the game logic is tied to the render loop, which is capped at 60 frames per second, we decided to speed the tester up by disabling vertical synchronization and uncapping the frame rate. We use the LibGDX framework, where this is done as follows (it may be useful to someone):

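```java
import com.badlogic.gdx.backends.lwjgl.LwjglApplication;
import com.badlogic.gdx.backends.lwjgl.LwjglApplicationConfiguration;

LwjglApplicationConfiguration cfg = new LwjglApplicationConfiguration();
cfg.vSyncEnabled = false; // disable vertical synchronization
cfg.foregroundFPS = 0;    // 0 removes the frame rate cap while the window is focused
cfg.backgroundFPS = 0;    // 0 removes the cap while the window is in the background
new LwjglApplication(new YourApp(), cfg);
```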

After that, the bot sped up to almost 1000 frames per second! For most levels this is enough to complete 1000 runs in under 5 minutes. To be honest, we would like it to be even faster, but this is already workable.

Here is a video of the bot at work (unfortunately, while recording video the frame rate drops to about 120 FPS):

Results

As a result, we have a bot tied to the game engine, so there is no need to maintain separate tester code.

If new mechanics are added to the game later, the bot can easily be taught to test them: all it takes is adding identifiers for the new tactics to the level editor and adding the extra parameters to the move-analysis code.

The hardest part, of course, is judging how closely the bot's behavior matches that of an average player. But that is the weak point of any approach to building an automatic tester, so testing on real people (especially from the target audience) is, of course, still necessary.

Source: https://habr.com/ru/post/265057/
