Low Cost 10GbE Cluster Infrastructure

At HOSTKEY, we regularly need to set up 10 Gbit/s VLANs for virtualization clusters, both our own and our clients'. They are needed for connecting to storage systems, for backups, for database access and for live migration of virtual machines. The question always comes up: how to do it reliably and at minimal cost?

Until recently, the minimum cost of such a solution was substantial. The smallest 10GbE switch had 24 ports, and the simplest card was the Intel X520 at about $500. The budget per port was about $700-1,000, so the entry ticket was very high.

Progress does not stand still: at the beginning of 2015 a new class of reasonably priced 10GbE devices appeared, available from stock in Moscow and under warranty.

Since we regularly build dedicated servers and private clouds on top of them at HOSTKEY, we want to share our experience.

So, our client has 5 machines in a cluster and needs a 10GbE VLAN: there are 2 filers, one backup machine and several nodes. On gigabit everything is slow, and nobody wants to put four-port gigabit cards into the machines and team them. It has to be 10GbE, and the budget is limited. Sounds familiar, doesn't it?


Choosing 10GbE controllers

We are choosing network cards for the servers; they must be new, in stock and under warranty. There are three options, all of them PCIe x8:

1) The old, proven Intel X520-DA2.

2 SFP+ ports, 5 years on the market. The price is about 30,000 rubles (~$450). Note that you need to buy the native modules at 15,000 rubles each, or order them from NAG and specify that they must be coded for this model; otherwise the card will not see them. The same applies to DAC (direct-attach copper cables with SFP+ connectors, in case someone doesn't know): we also assumed that any cable would do and had to go for a second round.

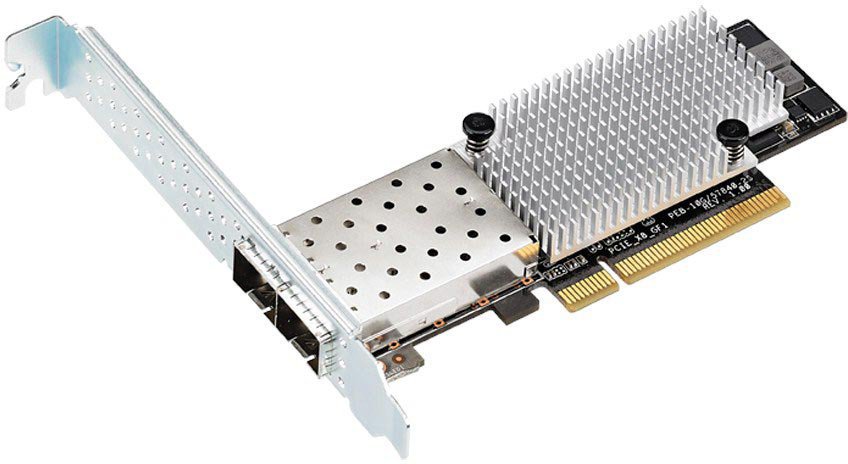

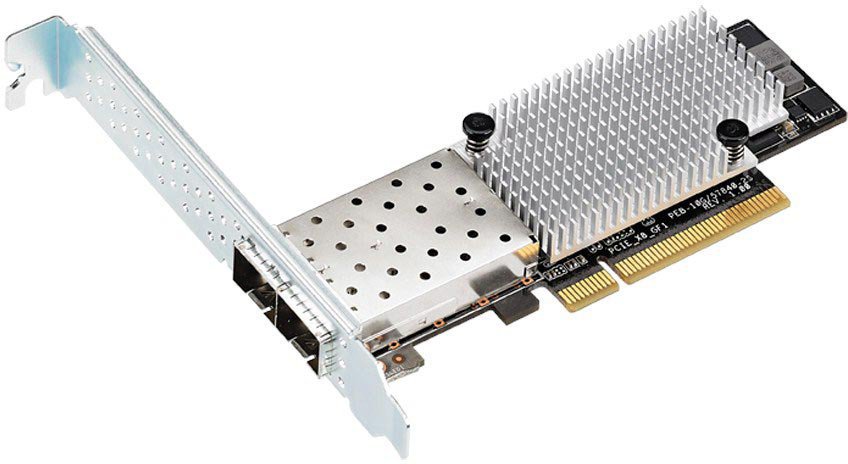

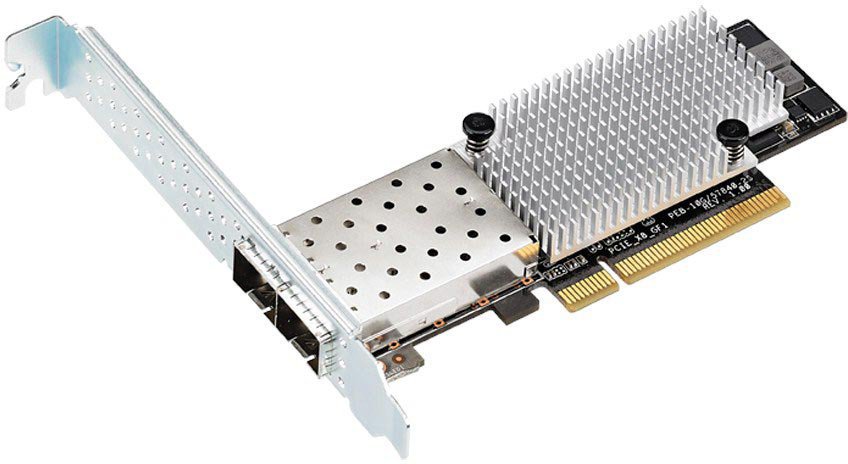

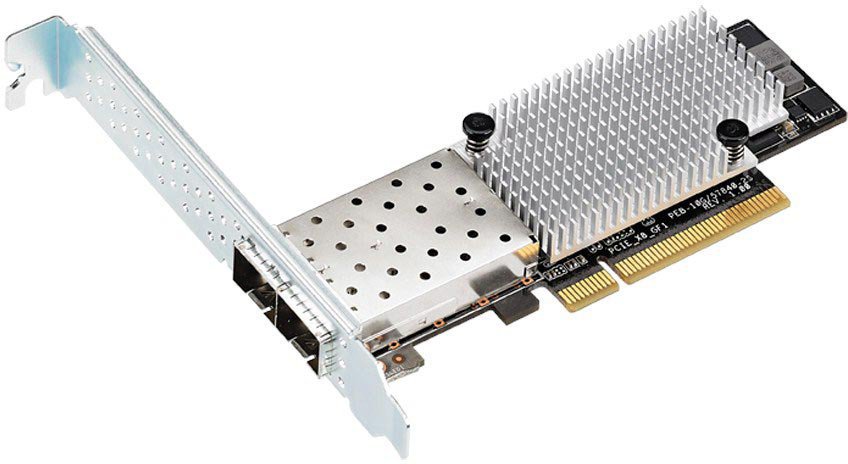

2) The newcomer Asus PEB-10G/57811-1S

1 or 2 SFP+ ports on the BCM 57811S chipset.

We first noticed them on sale in the spring. The price is 10,000 rubles apiece for the single-port card and 16,000 for the 2-port PEB-10G/57840-2S. Any modules work; the controller does not care. The board is surprisingly small, slightly larger than a palm. Note that there are no VMware drivers in the box (we will try to solve this problem in one of the following posts).

3) The new Qlogic QLE3440-CU-CK

One or two SFP+ ports for DAC, just what we were looking for.

We managed to buy the single-port version for 16,000 rubles. On Amazon the QLE3440-CU-CK costs $250, i.e. this is not old stock at an old price but a new product. All drivers are available, including ESXi. The card is slightly larger. DAC cables and SFP+ modules need no special coding: the card is omnivorous.

Choosing a 10GbE switch

Now we need to choose a switch: something with 8-12 ports and L2 management, without any special bells and whistles. Nexus and Extreme are not considered; they are in a different size class. Again, we are looking for something new and in stock. Old Mellanox switches from eBay or Cisco on XENPAK are not considered either, although many people go that way.

The choice is even more modest:

1) Netgear XS708E with 8 copper ports; the price is 72,000 rubles at Regard and other shops. It has L2 and everything is manageable. Our cards have no copper ports, so in this case we would have to take something else.

2) Netgear XS712T-100NES with 12 copper ports; the price is 142,000 rubles at Regard.

3) SNR-S2970-12X, a 12-port switch from NAG.

It has 12 SFP+ ports, 8 plain gigabit ports and full L2 management. The price is about 100,000 rubles, i.e. about 8,333 rubles per 10G port, with another 8 gigabit ports for free; we will also build the management VLAN on them. We read the forum thread discussing the device: for our cluster it is a good fit, but it will definitely not do as a core switch at data center level.
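The per-port arithmetic for the three switches can be checked with a short sketch. The prices and port counts come from the list above; the dictionary layout and the function name are only illustrative.

```python
# Price per 10G port for the three candidate switches.
# Prices (rubles) and 10G port counts are taken from the list above.
switches = {
    "Netgear XS708E": (72_000, 8),
    "Netgear XS712T-100NES": (142_000, 12),
    "SNR-S2970-12X": (100_000, 12),
}


def price_per_port(price_rub: int, ports: int) -> float:
    """Return the switch price divided by the number of 10G ports."""
    return price_rub / ports


per_port = {name: price_per_port(*spec) for name, spec in switches.items()}

# Print the candidates from cheapest to most expensive per port.
for name, cost in sorted(per_port.items(), key=lambda item: item[1]):
    print(f"{name}: {cost:,.0f} rubles per 10G port")
```

The comparison ignores the difference between copper and SFP+ ports, which in our case matters more than the price.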

We prefer DAC: it has a latency of about 0.3 µs versus 2-3 µs for 10GBase-T. At the data center aggregation level this is not so important, but for connecting filers nothing matters more than low latency.

We ask colleagues about their operating experience and order from NAG.ru; a few days later the switch is on our desk in Moscow. The switch interface is simple: there is a web interface, an IOS-like CLI, and everything can be managed via SNMP. We need nothing more complicated than carving VLANs and setting up monitoring in Cacti, although the switch has many more functions. It can shape traffic, build trunks, bind MAC addresses to ports, mirror ports at 1/10G, mark traffic for QoS and do everything else expected of an L2 switch in 2015.

SNR-S2970-12X web interface screenshots: first screen, port management, MAC addresses, port configuration, shaping and pps limits, port mirroring control, VLAN and trunk management.

Testing

We assemble a test bench of two servers and a switch: two socket 1366 machines, a Supermicro and an Intel. We install the cards, install Windows Server 2012 on the servers' SSDs (as the client requires) and connect everything with DAC cables. For reference (as people here like it), a 2-meter DAC cable costs about 2,000 rubles.

The drivers install without problems and everything starts up almost immediately. No errors; everything works out of the box. The Qlogic card can present itself as several virtual adapters, which is convenient for interface virtualization in a cluster: for example, one for heartbeat, one for iSCSI, one for live migration, one for the Internet and one for managing all of this. With Qlogic, everything can be split up at the level of virtual adapters.

Iperf

iperf shows 8.5 Gbit/s for the Asus and about 8 Gbit/s for the Qlogic in all modes. We tried different numbers of threads, turned off offload and changed the jumbo frame size; the difference is within the statistical error.

iperf result screenshots: ASUS, Qlogic.

Connecting the servers directly to each other gives the same numbers; the switch adds no noticeable delay. It is as simple as that: move the cable from the switch to the other server and the link comes up.

10G controllers and PCIe bus width

Note: at first we noticed that the Asus would not go above 6 Gbit/s. It turned out that on the Intel motherboard one of the x8-size slots is actually wired with only four lanes. We moved the card to a PCIe slot with eight lanes and immediately got 8.5 Gbit/s. At such speeds this matters: any bottleneck shows up at once.
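To see why the lane count matters, here is a rough sketch of raw PCIe link bandwidth. The post does not say which PCIe generation the four-lane slot ran at, so the table covers both 1.x and 2.0; treating the slot as a 1.x link is only an assumption that would fit the 6 Gbit/s ceiling. The figures are link rates after 8b/10b encoding and before packet overhead, so real throughput is lower.

```python
# Raw PCIe link bandwidth after line encoding, before protocol overhead.
# PCIe 1.x runs at 2.5 GT/s per lane and PCIe 2.0 at 5 GT/s; both use
# 8b/10b encoding, so only 8 of every 10 transferred bits carry data.
TRANSFER_RATE_GT_S = {"1.x": 2.5, "2.0": 5.0}
ENCODING_EFFICIENCY = 8 / 10


def link_gbit_s(generation: str, lanes: int) -> float:
    """Link rate in Gbit/s for one direction, ignoring packet overhead."""
    return TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY * lanes


for generation in ("1.x", "2.0"):
    for lanes in (4, 8):
        rate = link_gbit_s(generation, lanes)
        print(f"PCIe {generation} x{lanes}: {rate:.0f} Gbit/s")
```

A PCIe 1.x x4 link tops out at 8 Gbit/s before overhead, which cannot carry a full 10GbE stream; any x8 link in the table has headroom.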

SMB file transfer

We test ordinary SMB shares in Windows; there are no particular problems, and the post is not about that. At first we get a transfer speed of about 300 MB/s: the SSD on SATA2 cannot do more. There is no SATA3 on the motherboard, so we set up a RAM disk and put everything there. We share the volume over the network and the picture changes immediately: 609 MB per second. A 4.7 GB installation image is copied in less than 8 seconds. Judging by the flat plateau on the chart, we are hitting some limit, and it is not the network. We will not dig further; 600 MB per second is more than enough: a typical 10 GB virtual machine can now be migrated from node to node in about 16 seconds.
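The copy times follow directly from the measured rate. A minimal sketch, assuming decimal units (1 GB = 1000 MB), which the post does not specify:

```python
# Copy time at the measured SMB throughput of 609 MB/s.
# Decimal units are assumed (1 GB = 1000 MB); the post does not specify.
MEASURED_MB_PER_S = 609


def copy_seconds(size_gb: float, rate_mb_s: float = MEASURED_MB_PER_S) -> float:
    """Seconds needed to move size_gb gigabytes at rate_mb_s megabytes per second."""
    return size_gb * 1000 / rate_mb_s


image_time = copy_seconds(4.7)  # installation image
vm_time = copy_seconds(10)      # typical virtual machine
payload_gbit_s = MEASURED_MB_PER_S * 8 / 1000  # the same rate in Gbit/s

print(f"4.7 GB image: {image_time:.1f} s")
print(f"10 GB VM: {vm_time:.1f} s")
print(f"609 MB/s is {payload_gbit_s:.2f} Gbit/s of payload")
```

The last line shows that 609 MB/s is well under the 8.5 Gbit/s seen in iperf, which agrees with the conclusion that the limit is somewhere other than the network.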

Findings

The result: everything works, quickly and simply. The transfer rate in this solution is about 8.5 Gbit/s, which is more than enough for most tasks. A 10G network infrastructure for a cluster of 10 machines costs only 200,000 rubles, or $3,000, which is quite affordable. With dual-port cards and two switches for redundancy, the price is 360,000 rubles, or about $5,500.
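The post does not itemize these totals. One breakdown that reproduces both figures is sketched below; the choice of the Asus cards and the SNR-S2970-12X switch, and leaving DAC cables out of the sum, are assumptions made for illustration.

```python
# One possible breakdown of the two totals, using prices quoted earlier.
# Assumptions: Asus cards, SNR-S2970-12X switches, DAC cables not counted.
MACHINES = 10
SWITCH_RUB = 100_000           # SNR-S2970-12X
SINGLE_PORT_CARD_RUB = 10_000  # Asus PEB-10G/57811-1S
DUAL_PORT_CARD_RUB = 16_000    # Asus PEB-10G/57840-2S


def cluster_cost(machines: int, card_rub: int, switches: int) -> int:
    """Total in rubles: one card per machine plus the given number of switches."""
    return machines * card_rub + switches * SWITCH_RUB


basic = cluster_cost(MACHINES, SINGLE_PORT_CARD_RUB, switches=1)
redundant = cluster_cost(MACHINES, DUAL_PORT_CARD_RUB, switches=2)

print(f"Single-port cards, one switch: {basic:,} rubles")
print(f"Dual-port cards, two switches: {redundant:,} rubles")
```

At about 2,000 rubles per 2-meter DAC cable, cabling would add a comparatively small amount on top.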

We at HOSTKEY specialize in renting out large custom dedicated servers and clusters based on them. A 10GbE port for a VLAN on a dedicated server costs only 2,100 rubles per month. If the 10G Ethernet connection is made without a switch, for example two servers connected to one filer with a two-port card, this adds only 1,200-1,600 rubles per server per month. Note that it is difficult to fit both a 10G controller and a hardware RAID controller into a single-processor 1U server. Dual-processor machines usually have a WIO option, where two cards sit one above the other on a riser, or one card is placed at the front, as in the HP DL160 or Dell servers.

About HOSTKEY

HOSTKEY has been renting out dedicated servers in Moscow and the Netherlands since 2008. Our own fleet now totals 1,300 physical machines at 5 sites. In Moscow we work in the Tier III certified Datapro Aviamotornaya data center, in DataLine Nord and at several simpler sites. In the Netherlands we rent racks at Serverius.nl; the servers there are all our own, nothing is resold. In these data centers we host clients' servers in colocation and provide dedicated servers with all the network infrastructure. Prices are reasonable; we do everything to reduce our costs and offer customers economically viable terms in this difficult time. If you need something special, contact us and we will always help.

Promo code for a 10% discount for readers, valid until the end of August 2015: HABR170815

Source: https://habr.com/ru/post/264931/