Using Intel SSSE3 instruction set to speed up the implementation of the DNN algorithm in speech recognition tasks performed on mobile devices

Over the past thirty years, speech recognition technology has seriously advanced, starting its path in research laboratories and reaching a wide range of consumers. These technologies are beginning to play an important role in our lives. They can be found in the workplace, at home, in the car. They are used for medical purposes and in other areas. Speech recognition is in the top 10 promising world-class technologies.

Vladstudio Original Picture

As a result of research in recent years, there has been a change in the basic speech recognition algorithms. So, before that it was the GMM (Gaussian Mixture Model) and HMM-GMM (Hidden Markov Model - Gaussian Mixture Model) algorithms. From them there was a transition to the DNN (Deep Neural Network) algorithm. The operation of this algorithm resembles the activity of the human brain. It uses complex calculations and a huge amount of data.

Thanks to the Internet, any smartphone owner can use speech recognition technology. To his services - countless servers. But without the Internet, speech recognition services in mobile devices are almost useless. They are rarely able to correctly understand those who are trying to "talk" with them.

')

Is it possible to transfer the implementation of the DNN algorithm from the server to a smartphone or tablet? The answer to this question is yes. Thanks to Intel's support for the SSSE3 instruction set, speech recognition applications based on the DNN algorithm can be used on mobile devices. No internet connection is required. As a result of our tests, the accuracy of speech recognition by such an application was more than 80%. This is very close to what is achievable with server systems. In this article, we will discuss the DNN algorithm and how the Intel SSSE3 instruction set can help in speeding up the calculations required to implement this algorithm.

DNN (GNS) is short for the Deep Neural Network (Deep Neural Network). This is a direct distribution network containing many hidden layers. DNN is at the forefront of modern machine learning technology. For this algorithm, there were many options for practical application.

Deep neural networks have a large number of hidden layers. When learning, you need to modify tens of millions of parameters. As a result, training such networks requires a considerable amount of time.

Speech recognition is a typical example of using DNN. Simply put, speech recognition applications can be represented as consisting of an acoustic model (acoustic model), a language model (language model), and a decoding subsystem (decoding). The acoustic model is used to model the probability distribution of pronunciation options. The language model is used to model the connections between words. At the decoding stage, the two models described above are used; speech is converted into text. The neural network is able to simulate any verbal constructions. While the deep neural network has a stronger ability to extract essential data features than a shallow network, it models the structure of the human brain, and is thus able to more accurately "understand" the characteristics of things. As a result, in comparison with other methods, acoustic and language models can be modeled more accurately in such a neural network.

Scopes of the DNN algorithm

Typically, a typical deep neural network contains many linear and nonlinear layers that overlap each other.

Four hidden layers in the DNN acoustic model

The network whose scheme is shown here consists of a set of linear layers. Each neuron from the previous layer is associated with each neuron from the next. The connection of the network input with its output can be described by the following formula:

Y T = X T W T + B

X T is a line vector, the input to a neural network. When applied to speech recognition, we usually place 4 pieces of data for simultaneous work on them, thus creating a 4xM input matrix. W T and B are, respectively, the linear transformation matrix of the neural network and the displacement vector. Typically, the dimension of such a network is very large, in all layers there is the same number of neurons, that is, the network has a square shape.

Intel calls the Supplemental Streaming SIMD Extensions 3 command set, or, for short, just SSSE3, the SSE3 command set extension. It is part of SIMD technology integrated into Intel microprocessors. This technology is designed to improve multimedia data processing capabilities. It is designed to speed up the tasks of encoding and decoding information and to accelerate the implementation of various calculations. Using the SSSE3 instruction set, we can process several data streams using one instruction per clock cycle. This can significantly improve the efficiency of applications. In particular, the SSSE3 commands are applicable to matrix calculations.

To use the SSSE3 instruction set, you need to include the corresponding SIMD header files:

The header file tmmintrin.h provides work with SSSE3, below is a description of the functions that are defined in it.

The __m64 and __m128 data structure definitions are in the header for MMX (mmintrin.h) and SSE (xmmintrin.h).

__m64:

__m128:

Here we look at a couple of functions. Their example will show how SSSE3 is used to speed up calculations when implementing the DNN algorithm.

__m128i _mm_maddubs_epi16 (__m128i a, __m128i b) Addition with saturation

This function is very important when performing matrix calculations in the DNN algorithm. The parameter is a 128-bit register (register), which is used to store 16 integers without a sign (8-bit). The parameter b is a signed integer, also 8-bit. The result is 8 signed 16-bit integers. This feature is great for performing matrix calculations:

__m128i _mm_hadd_epi32 (__m128i a, __m128i b) Addition of contiguous elements

This function can be called a function that performs pairwise addition. Parameters a and b are 128-bit registers that store 4 signed 32-bit integers. In accordance with the usual operation of adding the corresponding elements in two vectors, the team performs the addition of adjacent elements of the input vector:

Suppose we have a vector-based computational problem typical of the implementation of DNN.

There are five vectors: a1, b1, b2, b3, b4. Vector a1 is a one-dimensional array of 16 integers of type signed char. Vectors b1, b2, b3, b4 are arrays of integers of 16 elements each of unsigned char type. We need to get the scalar products a1 * b1, a1 * b2, a1 * b3, a1 * b4, the result must be saved as a 32-bit integer with a sign.

If we use the usual approach for C programming, the code for solving this problem will look like this:

Suppose that in one clock cycle you can perform one multiplication and one addition operation. We get - 64 clock cycles to perform calculations.

Now we use the SSSE3 instruction set for solving the same problem.

We store the result in a 128-bit register (s), in which 4 integers are placed. Considering the pipeline data processing, the calculations will take 12 or 13 clock cycles. If you compare these data, you get the following:

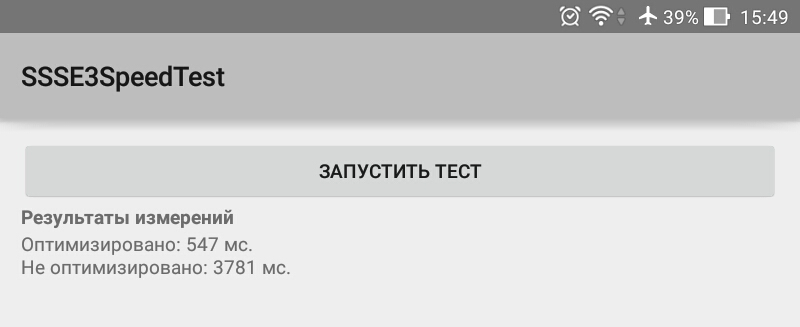

Let's make experiment, having taken the above-stated code as a basis. Create two functions that perform the same calculations in different ways. One of them, in the end, returns the sum of the elements of the integer array c , the second one - the sum of the 32-bit integer elements of the 128-bit register c . Variables are initialized with each function call. A total of 10,000,000 calls to each of the functions are performed, the test runs in the background thread.

Performance test application interface

Here are the results of testing the release version of the application on an Asus Fonepad 8 tablet with Intel Atom Z3530 CPU. Android 5.0 is installed on the device.

Comparison of execution speed of code written using and without using SSSE3

As a result, it turns out that the code implementing the calculations using the SSSE3 instructions is executed, on average, 7.2 times faster than normal.

The project source code, which can be imported into Android Studio, can be found here .

As you know, when recognizing speech using a deep neural network, a lot of matrix calculations are performed. If these calculations are optimized, you can achieve better than ever performance on the IA platform. We work in partnership with ISV Unisound, which provides speech recognition services in China. Unisound was able to achieve a performance gain of 10% when using software based on DNN on ARM devices.

DNN nowadays is becoming the main algorithm for speech recognition. It, in particular, is used by such services as Google Now, Baidu Voice, Tencent Wechat, iFlytek Speech Service, Unisound Speech Service and many others. At the same time, there is a set of SSSE3 instructions that can help in optimizing the calculations on which the speech recognition process is built. If everywhere, where DNN is used, they implement such optimization, this will improve the quality of speech recognition and will allow to fully discover the capabilities of the IA platform.

Vladstudio Original Picture

Overview

As a result of research in recent years, there has been a change in the basic speech recognition algorithms. So, before that it was the GMM (Gaussian Mixture Model) and HMM-GMM (Hidden Markov Model - Gaussian Mixture Model) algorithms. From them there was a transition to the DNN (Deep Neural Network) algorithm. The operation of this algorithm resembles the activity of the human brain. It uses complex calculations and a huge amount of data.

Thanks to the Internet, any smartphone owner can use speech recognition technology. To his services - countless servers. But without the Internet, speech recognition services in mobile devices are almost useless. They are rarely able to correctly understand those who are trying to "talk" with them.

')

Is it possible to transfer the implementation of the DNN algorithm from the server to a smartphone or tablet? The answer to this question is yes. Thanks to Intel's support for the SSSE3 instruction set, speech recognition applications based on the DNN algorithm can be used on mobile devices. No internet connection is required. As a result of our tests, the accuracy of speech recognition by such an application was more than 80%. This is very close to what is achievable with server systems. In this article, we will discuss the DNN algorithm and how the Intel SSSE3 instruction set can help in speeding up the calculations required to implement this algorithm.

Preliminary Information

DNN (GNS) is short for the Deep Neural Network (Deep Neural Network). This is a direct distribution network containing many hidden layers. DNN is at the forefront of modern machine learning technology. For this algorithm, there were many options for practical application.

Deep neural networks have a large number of hidden layers. When learning, you need to modify tens of millions of parameters. As a result, training such networks requires a considerable amount of time.

Speech recognition is a typical example of using DNN. Simply put, speech recognition applications can be represented as consisting of an acoustic model (acoustic model), a language model (language model), and a decoding subsystem (decoding). The acoustic model is used to model the probability distribution of pronunciation options. The language model is used to model the connections between words. At the decoding stage, the two models described above are used; speech is converted into text. The neural network is able to simulate any verbal constructions. While the deep neural network has a stronger ability to extract essential data features than a shallow network, it models the structure of the human brain, and is thus able to more accurately "understand" the characteristics of things. As a result, in comparison with other methods, acoustic and language models can be modeled more accurately in such a neural network.

Scopes of the DNN algorithm

Diagram of a typical deep neural network

Typically, a typical deep neural network contains many linear and nonlinear layers that overlap each other.

Four hidden layers in the DNN acoustic model

The network whose scheme is shown here consists of a set of linear layers. Each neuron from the previous layer is associated with each neuron from the next. The connection of the network input with its output can be described by the following formula:

Y T = X T W T + B

X T is a line vector, the input to a neural network. When applied to speech recognition, we usually place 4 pieces of data for simultaneous work on them, thus creating a 4xM input matrix. W T and B are, respectively, the linear transformation matrix of the neural network and the displacement vector. Typically, the dimension of such a network is very large, in all layers there is the same number of neurons, that is, the network has a square shape.

Intel SSSE3 instruction set

Intel calls the Supplemental Streaming SIMD Extensions 3 command set, or, for short, just SSSE3, the SSE3 command set extension. It is part of SIMD technology integrated into Intel microprocessors. This technology is designed to improve multimedia data processing capabilities. It is designed to speed up the tasks of encoding and decoding information and to accelerate the implementation of various calculations. Using the SSSE3 instruction set, we can process several data streams using one instruction per clock cycle. This can significantly improve the efficiency of applications. In particular, the SSSE3 commands are applicable to matrix calculations.

To use the SSSE3 instruction set, you need to include the corresponding SIMD header files:

#include <mmintrin.h> //MMX #include <xmmintrin.h> //SSE #include <emmintrin.h> //SSE2 #include <pmmintrin.h> //SSE3 #include <tmmintrin.h> //SSSE3 #include <smmintrin.h> //SSSE4.1 #include <nmmintrin.h> //SSSE4.2 #include <wmmintrin.h> //AES #include <immintrin.h> //AVX The header file tmmintrin.h provides work with SSSE3, below is a description of the functions that are defined in it.

/* [ ] , , {X,}MM2/m{128,64} (b) to {X,}MM1 (a).*/ //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) //then r0=a0+a1,r1=a2+a3,r2=a4+a5,r3=a6+a7,r4=b0+b1,r5=b2+b3,r6=b4+b5, r7=b6+b7 extern __m128i _mm_hadd_epi16 (__m128i a, __m128i b); //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=a0+a1,r1=a2+a3,r2=b0+b1,r3=b2+b3 extern __m128i _mm_hadd_epi32 (__m128i a, __m128i b); //SATURATE_16(x) is ((x > 32767) ? 32767 : ((x < -32768) ? -32768 : x)) //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) //then r0=SATURATE_16(a0+a1), ..., r3=SATURATE_16(a6+a7), //r4=SATURATE_16(b0+b1), ..., r7=SATURATE_16(b6+b7) extern __m128i _mm_hadds_epi16 (__m128i a, __m128i b); //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=a0+a1, r1=a2+a3, r2=b0+b1, r3=b2+b3 extern __m64 _mm_hadd_pi16 (__m64 a, __m64 b); //a=(a0, a1), b=(b0, b1), 则r0=a0+a1, r1=b0+b1 extern __m64 _mm_hadd_pi32 (__m64 a, __m64 b); //SATURATE_16(x) is ((x > 32767) ? 32767 : ((x < -32768) ? -32768 : x)) //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=SATURATE_16(a0+a1), r1=SATURATE_16(a2+a3), //r2=SATURATE_16(b0+b1), r3=SATURATE_16(b2+b3) extern __m64 _mm_hadds_pi16 (__m64 a, __m64 b); /* [ ] , , {X,}MM2/m{128,64} (b) from {X,}MM1 (a).*/ //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) // r0=a0-a1, r1=a2-a3, r2=a4-a5, r3=a6-a7, r4=b0-b1, r5=b2-b3, r6=b4-b5, r7=b6-b7 extern __m128i _mm_hsub_epi16 (__m128i a, __m128i b); //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=a0-a1, r1=a2-a3, r2=b0-b1, r3=b2-b3 extern __m128i _mm_hsub_epi32 (__m128i a, __m128i b); //SATURATE_16(x) is ((x > 32767) ? 32767 : ((x < -32768) ? -32768 : x)) //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) //then r0=SATURATE_16(a0-a1), ..., r3=SATURATE_16(a6-a7), //r4=SATURATE_16(b0-b1), ..., r7=SATURATE_16(b6-b7) extern __m128i _mm_hsubs_epi16 (__m128i a, __m128i b); //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=a0-a1, r1=a2-a3, r2=b0-b1, r3=b2-b3 extern __m64 _mm_hsub_pi16 (__m64 a, __m64 b); //a=(a0, a1), b=(b0, b1), 则r0=a0-a1, r1=b0-b1 extern __m64 _mm_hsub_pi32 (__m64 a, __m64 b); //SATURATE_16(x) is ((x > 32767) ? 32767 : ((x < -32768) ? -32768 : x)) //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=SATURATE_16(a0-a1), r1=SATURATE_16(a2-a3), //r2=SATURATE_16(b0-b1), r3=SATURATE_16(b2-b3) extern __m64 _mm_hsubs_pi16 (__m64 a, __m64 b); /* , {X,}MM2/m{128,64} (b) to {X,}MM1 (a).*/ //SATURATE_16(x) is ((x > 32767) ? 32767 : ((x < -32768) ? -32768 : x)) //a=(a0, a1, a2, ..., a13, a14, a15), b=(b0, b1, b2, ..., b13, b14, b15) //then r0=SATURATE_16((a0*b0)+(a1*b1)), ..., r7=SATURATE_16((a14*b14)+(a15*b15)) // a . b . extern __m128i _mm_maddubs_epi16 (__m128i a, __m128i b); //SATURATE_16(x) is ((x > 32767) ? 32767 : ((x < -32768) ? -32768 : x)) //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) //then r0=SATURATE_16((a0*b0)+(a1*b1)), ..., r3=SATURATE_16((a6*b6)+(a7*b7)) // a . b . extern __m64 _mm_maddubs_pi16 (__m64 a, __m64 b); /* , {X,}MM2/m{128,64} (b) to {X,}MM1 (a).*/ //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) //then r0=INT16(((a0*b0)+0x4000) >> 15), ..., r7=INT16(((a7*b7)+0x4000) >> 15) extern __m128i _mm_mulhrs_epi16 (__m128i a, __m128i b); //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=INT16(((a0*b0)+0x4000) >> 15), ..., r3=INT16(((a3*b3)+0x4000) >> 15) extern __m64 _mm_mulhrs_pi16 (__m64 a, __m64 b); /* {X,}MM2/m{128,64} (b) by {X,}MM1 (a).*/ //SELECT(a, n) extracts the nth 8-bit parameter from a. The 0th 8-bit parameter //is the least significant 8-bits, b=(b0, b1, b2, ..., b13, b14, b15), b is mask //then r0 = (b0 & 0x80) ? 0 : SELECT(a, b0 & 0x0f), ..., //r15 = (b15 & 0x80) ? 0 : SELECT(a, b15 & 0x0f) extern __m128i _mm_shuffle_epi8 (__m128i a, __m128i b); //SELECT(a, n) extracts the nth 8-bit parameter from a. The 0th 8-bit parameter //is the least significant 8-bits, b=(b0, b1, ..., b7), b is mask //then r0= (b0 & 0x80) ? 0 : SELECT(a, b0 & 0x07),..., //r7=(b7 & 0x80) ? 0 : SELECT(a, b7 & 0x07) extern __m64 _mm_shuffle_pi8 (__m64 a, __m64 b); /* , , , {X,}MM2/m{128,64} (b) to {X,}MM1 (a).*/ //a=(a0, a1, a2, ..., a13, a14, a15), b=(b0, b1, b2, ..., b13, b14, b15) //then r0=(b0 < 0) ? -a0 : ((b0 == 0) ? 0 : a0), ..., //r15= (b15 < 0) ? -a15 : ((b15 == 0) ? 0 : a15) extern __m128i _mm_sign_epi8 (__m128i a, __m128i b); //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) //r0=(b0 < 0) ? -a0 : ((b0 == 0) ? 0 : a0), ..., //r7= (b7 < 0) ? -a7 : ((b7 == 0) ? 0 : a7) extern __m128i _mm_sign_epi16 (__m128i a, __m128i b); //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //then r0=(b0 < 0) ? -a0 : ((b0 == 0) ? 0 : a0), ..., //r3= (b3 < 0) ? -a3 : ((b3 == 0) ? 0 : a3) extern __m128i _mm_sign_epi32 (__m128i a, __m128i b); //a=(a0, a1, a2, a3, a4, a5, a6, a7), b=(b0, b1, b2, b3, b4, b5, b6, b7) //then r0=(b0 < 0) ? -a0 : ((b0 == 0) ? 0 : a0), ..., //r7= (b7 < 0) ? -a7 : ((b7 == 0) ? 0 : a7) extern __m64 _mm_sign_pi8 (__m64 a, __m64 b); //a=(a0, a1, a2, a3), b=(b0, b1, b2, b3) //r0=(b0 < 0) ? -a0 : ((b0 == 0) ? 0 : a0), ..., //r3= (b3 < 0) ? -a3 : ((b3 == 0) ? 0 : a3) extern __m64 _mm_sign_pi16 (__m64 a, __m64 b); //a=(a0, a1), b=(b0, b1), 则r0=(b0 < 0) ? -a0 : ((b0 == 0) ? 0 : a0), //r1= (b1 < 0) ? -a1 : ((b1 == 0) ? 0 : a1) extern __m64 _mm_sign_pi32 (__m64 a, __m64 b); /* n*8 , {X,}MM2/m{128,64} (b) to {X,}MM1 (a).*/ //n: , , // , // n > 32, . //CONCAT(a, b) 256- , // a b. // – , n . //then r= (CONCAT(a, b) >> (n * 8)) & 0xffffffffffffffff extern __m128i _mm_alignr_epi8 (__m128i a, __m128i b, int n); //n: , , // . // n > 16, . //CONCAT(a, b) 128- , // a b. // - 64 , // n . //then r = (CONCAT(a, b) >> (n * 8)) & 0xffffffff extern __m64 _mm_alignr_pi8 (__m64 a, __m64 b, int n); /* , , , {X,}MM2/m{128,64} (b) to {X,}MM1 (a).*/ //a=(a0, a1, a2, ..., a13, a14, a15) //then r0 = (a0 < 0) ? -a0 : a0, ..., r15 = (a15 < 0) ? -a15 : a15 extern __m128i _mm_abs_epi8 (__m128i a); //a=(a0, a1, a2, a3, a4, a5, a6, a7) //then r0 = (a0 < 0) ? -a0 : a0, ..., r7 = (a7 < 0) ? -a7 : a7 extern __m128i _mm_abs_epi16 (__m128i a); //a=(a0, a1, a2, a3) //then r0 = (a0 < 0) ? -a0 : a0, ..., r3 = (a3 < 0) ? -a3 : a3 extern __m128i _mm_abs_epi32 (__m128i a); //a=(a0, a1, a2, a3, a4, a5, a6, a7) //then r0 = (a0 < 0) ? -a0 : a0, ..., r7 = (a7 < 0) ? -a7 : a7 extern __m64 _mm_abs_pi8 (__m64 a); //a=(a0, a1, a2, a3) //then r0 = (a0 < 0) ? -a0 : a0, ..., r3 = (a3 < 0) ? -a3 : a3 extern __m64 _mm_abs_pi16 (__m64 a); //a=(a0, a1), then r0 = (a0 < 0) ? -a0 : a0, r1 = (a1 < 0) ? -a1 : a1 extern __m64 _mm_abs_pi32 (__m64 a); The __m64 and __m128 data structure definitions are in the header for MMX (mmintrin.h) and SSE (xmmintrin.h).

__m64:

typedef union __declspec(intrin_type) _CRT_ALIGN(8) __m64 { unsigned __int64 m64_u64; float m64_f32[2]; __int8 m64_i8[8]; __int16 m64_i16[4]; __int32 m64_i32[2]; __int64 m64_i64; unsigned __int8 m64_u8[8]; unsigned __int16 m64_u16[4]; unsigned __int32 m64_u32[2]; } __m64; __m128:

typedef union __declspec(intrin_type) _CRT_ALIGN(16) __m128 { float m128_f32[4]; unsigned __int64 m128_u64[2]; __int8 m128_i8[16]; __int16 m128_i16[8]; __int32 m128_i32[4]; __int64 m128_i64[2]; unsigned __int8 m128_u8[16]; unsigned __int16 m128_u16[8]; unsigned __int32 m128_u32[4]; } __m128; Example: Using SSSE3 Functions to Accelerate Calculations Tried Out in the DNN Algorithm

Here we look at a couple of functions. Their example will show how SSSE3 is used to speed up calculations when implementing the DNN algorithm.

__m128i _mm_maddubs_epi16 (__m128i a, __m128i b) Addition with saturation

This function is very important when performing matrix calculations in the DNN algorithm. The parameter is a 128-bit register (register), which is used to store 16 integers without a sign (8-bit). The parameter b is a signed integer, also 8-bit. The result is 8 signed 16-bit integers. This feature is great for performing matrix calculations:

r0 := SATURATE_16((a0*b0) + (a1*b1)) r1 := SATURATE_16((a2*b2) + (a3*b3)) … r7 := SATURATE_16((a14*b14) + (a15*b15)) __m128i _mm_hadd_epi32 (__m128i a, __m128i b) Addition of contiguous elements

This function can be called a function that performs pairwise addition. Parameters a and b are 128-bit registers that store 4 signed 32-bit integers. In accordance with the usual operation of adding the corresponding elements in two vectors, the team performs the addition of adjacent elements of the input vector:

r0 := a0 + a1 r1 := a2 + a3 r2 := b0 + b1 r3 := b2 + b3 Suppose we have a vector-based computational problem typical of the implementation of DNN.

There are five vectors: a1, b1, b2, b3, b4. Vector a1 is a one-dimensional array of 16 integers of type signed char. Vectors b1, b2, b3, b4 are arrays of integers of 16 elements each of unsigned char type. We need to get the scalar products a1 * b1, a1 * b2, a1 * b3, a1 * b4, the result must be saved as a 32-bit integer with a sign.

If we use the usual approach for C programming, the code for solving this problem will look like this:

unsigned char b1[16],b2[16],b3[16],b4[16]; signed char a1[16]; int c[4],i; // // b1,b2,b3,b4 a1, c // for(i=0;i<16;i++){ c[0] += (short)a1[i]*(short)b1[i]; c[1] += (short)a1[i]*(short)b2[i]; c[2] += (short)a1[i]*(short)b3[i]; c[3] += (short)a1[i]*(short)b4[i]; } Suppose that in one clock cycle you can perform one multiplication and one addition operation. We get - 64 clock cycles to perform calculations.

Now we use the SSSE3 instruction set for solving the same problem.

register __m128i a1,b1,b2,b3,b4,c,d1,d2,d3,d4; // a1, b1, b2, b3 b4, c // d1 = _mm_maddubs_epi16(a1,b1); d1 = _mm_add_epi32(_mm_srai_epi32(_mm_unpacklo_epi16(d1, d1), 16), _mm_srai_epi32(_mm_unpackhi_epi16(d1, d1), 16)); d2 = _mm_maddubs_epi16(a1,b2); d2 = _mm_add_epi32(_mm_srai_epi32(_mm_unpacklo_epi16(d2, d2), 16), _mm_srai_epi32(_mm_unpackhi_epi16(d2, d2), 16)); d3 = _mm_hadd_epi32(d1, d2); d1 = _mm_maddubs_epi16(a1,b3); d1 = _mm_add_epi32(_mm_srai_epi32(_mm_unpacklo_epi16(d1, d1), 16), _mm_srai_epi32(_mm_unpackhi_epi16(d1, d1), 16)); d2 = _mm_maddubs_epi16(a1,b4); d2 = _mm_add_epi32(_mm_srai_epi32(_mm_unpacklo_epi16(d2, d2), 16), _mm_srai_epi32(_mm_unpackhi_epi16(d2, d2), 16)); d4 = _mm_hadd_epi32(d1, d2); c = _mm_hadd_epi32(d3, d4); We store the result in a 128-bit register (s), in which 4 integers are placed. Considering the pipeline data processing, the calculations will take 12 or 13 clock cycles. If you compare these data, you get the following:

| Implementation option | CPU clock cycles | Win |

| Conventional C Programming | 64 | - |

| Using SSSE3 | 13 | ~ 500% |

Comparative Testing

Let's make experiment, having taken the above-stated code as a basis. Create two functions that perform the same calculations in different ways. One of them, in the end, returns the sum of the elements of the integer array c , the second one - the sum of the 32-bit integer elements of the 128-bit register c . Variables are initialized with each function call. A total of 10,000,000 calls to each of the functions are performed, the test runs in the background thread.

Performance test application interface

Here are the results of testing the release version of the application on an Asus Fonepad 8 tablet with Intel Atom Z3530 CPU. Android 5.0 is installed on the device.

Comparison of execution speed of code written using and without using SSSE3

| No | Using SSSE3, ms. | Use normal C, ms. |

| one | 547 | 3781 |

| 2 | 507 | 3723 |

| 3 | 528 | 3762 |

| four | 517 | 3731 |

| five | 531 | 3755 |

| 6 | 517 | 3769 |

| 7 | 502 | 3752 |

| eight | 529 | 3750 |

| 9 | 514 | 3745 |

| ten | 510 | 3721 |

| The average | 520.2 | 3748.9 |

The project source code, which can be imported into Android Studio, can be found here .

Results

As you know, when recognizing speech using a deep neural network, a lot of matrix calculations are performed. If these calculations are optimized, you can achieve better than ever performance on the IA platform. We work in partnership with ISV Unisound, which provides speech recognition services in China. Unisound was able to achieve a performance gain of 10% when using software based on DNN on ARM devices.

DNN nowadays is becoming the main algorithm for speech recognition. It, in particular, is used by such services as Google Now, Baidu Voice, Tencent Wechat, iFlytek Speech Service, Unisound Speech Service and many others. At the same time, there is a set of SSSE3 instructions that can help in optimizing the calculations on which the speech recognition process is built. If everywhere, where DNN is used, they implement such optimization, this will improve the quality of speech recognition and will allow to fully discover the capabilities of the IA platform.

Source: https://habr.com/ru/post/264311/

All Articles