Using the Media Capture API in a browser

I present to Habrahabr readers a translation of the article “Using the Media Capture API in the Browser” by Dave Voyles.

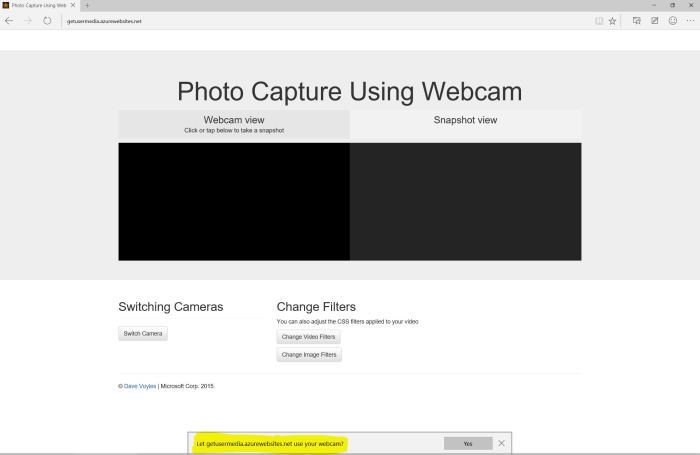

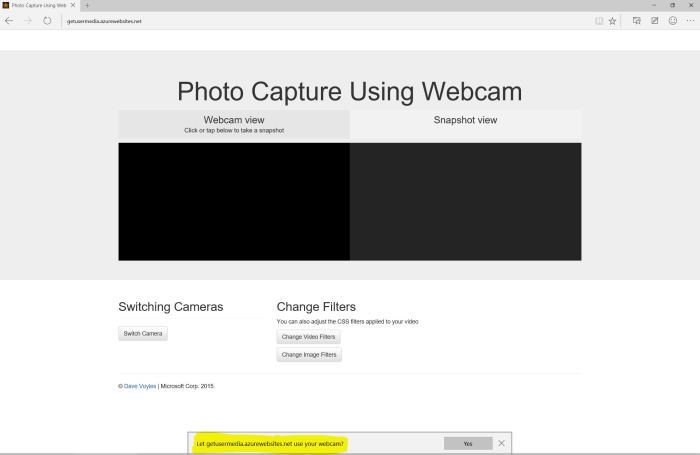

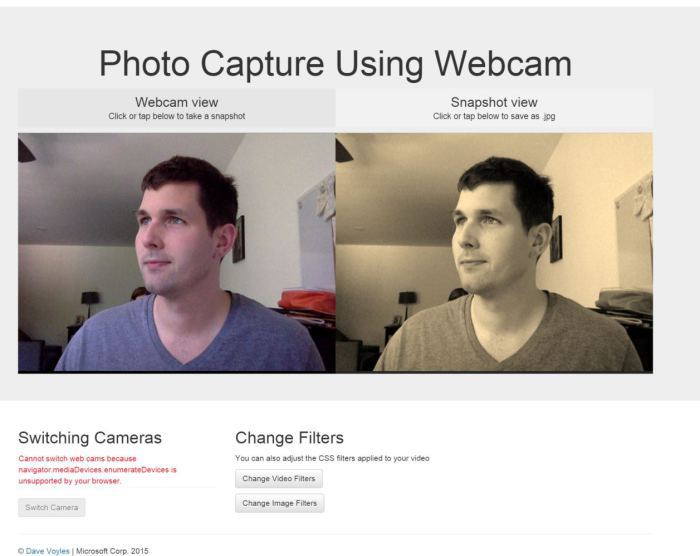

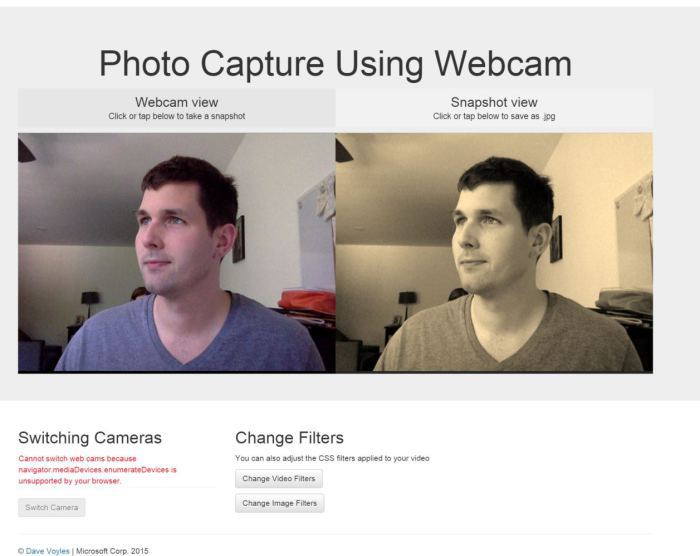

Today I want to experiment with the Media Capture and Streams API, developed jointly at the W3C by the Web Real-Time Communications Working Group and the Device APIs Working Group. Some developers may know it simply as getUserMedia, the main interface that lets web pages access media devices such as webcams and microphones.

You can find the source code for this project on my GitHub, along with working demos for you to experiment with. The latest Windows 10 preview release added support for the Media Capture APIs to Microsoft Edge for the first time. Much of the example code is taken from the Photo Capture sample that the Edge team built for their Test Drive site.

If you want to learn more, Eric Bidelman wrote an excellent article on HTML5 Rocks that covers the history of these APIs.


Let's start

The getUserMedia() method is a good starting point for exploring the Media Capture API. It accepts a MediaStreamConstraints object as an argument, which defines the settings and/or requirements for the capture devices and the captured media streams, such as microphone volume, video resolution, or which camera is used.

MediaStreamConstraints also lets you select a specific capture device by its ID, which you can get from the enumerateDevices() method. getUserMedia() returns a promise; once the user grants permission, it resolves to a MediaStream object, provided a device matching the requested MediaStreamConstraints is found.
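As a rough sketch of the constraints described above (not code from the original demo), the hypothetical helpers below build a MediaStreamConstraints object that pins a camera by ID and asks for a preferred resolution, then pass it to the promise-based getUserMedia(). The mediaDevices object is a parameter; in a page you would pass navigator.mediaDevices.

```javascript
// Hypothetical helper: build a MediaStreamConstraints object.
// deviceId, width, height and audio are illustrative options, not part of the original demo.
function buildConstraints({ deviceId, width, height, audio = false } = {}) {
  const video = {};
  if (deviceId) {
    // "exact" makes the request reject instead of silently picking another camera
    video.deviceId = { exact: deviceId };
  }
  if (width) video.width = { ideal: width };
  if (height) video.height = { ideal: height };
  // Fall back to the conventional { video: true } when no requirements were given
  return { video: Object.keys(video).length > 0 ? video : true, audio };
}

// Resolves to a MediaStream once the user grants permission.
function openStream(mediaDevices, options) {
  return mediaDevices.getUserMedia(buildConstraints(options));
}
```

For example, `openStream(navigator.mediaDevices, { deviceId: id, width: 1280, height: 720 })` asks for a specific camera at roughly 720p; `ideal` values are hints the browser may not meet exactly.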

And all of this without installing a plugin! In this example, we'll dig deeper into the API and build some nice filters for the video and images we capture. Is your browser supported? getUserMedia() has been available since Chrome 21, Opera 18, and Firefox 17, and is now in Edge as well.
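Newer browsers expose the promise-based navigator.mediaDevices.getUserMedia(), while the older callback-style navigator.getUserMedia and its webkit/moz-prefixed variants are deprecated. A minimal sketch of a compatibility wrapper, assuming you want a single promise-returning function whichever API is present; the getUserMediaCompat name is hypothetical, and the navigator object is passed in so the logic can be tried outside a browser.

```javascript
// Hypothetical wrapper: returns (constraints) => Promise<MediaStream>,
// or null if no flavour of getUserMedia is available.
function getUserMediaCompat(nav) {
  // Preferred: the standard promise-based API
  if (nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function') {
    return (constraints) => nav.mediaDevices.getUserMedia(constraints);
  }
  // Legacy: callback-based, possibly vendor-prefixed
  const legacy = nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
  if (typeof legacy !== 'function') {
    return null;
  }
  return (constraints) =>
    new Promise((resolve, reject) => {
      // The legacy methods expect navigator as "this", so call them on nav
      legacy.call(nav, constraints, resolve, reject);
    });
}
```

In a page: `const gum = getUserMediaCompat(navigator);` then either report that the API is unsupported when `gum` is null, or call `gum({ video: true })`.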

Feature detection

Feature detection here simply means checking whether navigator.getUserMedia exists. Doing that by hand for every browser is a lot of work, so I recommend Modernizr. Here's how it works:

```javascript
if (Modernizr.getusermedia) {
  // Returns whichever (possibly vendor-prefixed) getUserMedia the browser has
  var getUM = Modernizr.prefixed('getUserMedia', navigator);
  getUM({video: true}, function (stream) {
    //...
  }, function (error) {
    //...
  });
}
```

Without Modernizr, as in this example, use this:

```javascript
// Fall back to the vendor-prefixed versions in older browsers
navigator.getUserMedia = navigator.getUserMedia ||
  navigator.webkitGetUserMedia ||
  navigator.mozGetUserMedia;

if (!navigator.getUserMedia) {
  console.log('You are using a browser that does not support the Media Capture API');
}
```

Video player

In the HTML, put a video tag at the top of the page. Notice that it has the autoplay attribute; without it, the video freezes on the first frame.

```html
<div class="view--video">
  <video id="videoTag" src="" autoplay muted class="view--video__video"></video>
</div>
```

There is no media source here yet; we will set one from JavaScript.

Gaining access to a device

These APIs give developers new capabilities, but they also carry a risk to user privacy and security. That is why the first thing that happens when the web app starts is a request for the user's permission to access the capture device. getUserMedia takes several parameters. The first is an object that specifies the details and requirements for each type of media you want to access. To access the camera, pass {video: true}; to use both the camera and the microphone, pass {video: true, audio: true}.
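If the user denies permission, or no suitable device exists, the promise returned by getUserMedia() rejects with a DOMException whose name identifies the problem. A small sketch of turning those standard error names into user-facing messages; the message wording and the describeMediaError/requestCamera helpers are illustrative assumptions, not part of the demo.

```javascript
// Map standard getUserMedia error names to friendly messages (wording is illustrative).
const MEDIA_ERROR_MESSAGES = {
  NotAllowedError: 'Permission to use the camera was denied.',
  NotFoundError: 'No camera or microphone was found.',
  NotReadableError: 'The device could not be started (it may be in use elsewhere).',
  OverconstrainedError: 'No device satisfies the requested constraints.',
};

function describeMediaError(err) {
  return MEDIA_ERROR_MESSAGES[err && err.name] || 'Could not start the camera.';
}

// Request the camera (and optionally the microphone). Resolves to a MediaStream,
// or to null after passing a readable reason to the report callback.
function requestCamera(mediaDevices, withAudio, report) {
  return mediaDevices
    .getUserMedia({ video: true, audio: Boolean(withAudio) })
    .catch((err) => {
      report(describeMediaError(err));
      return null;
    });
}
```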

Multi-camera support

This is where it gets really interesting. This example also uses the MediaDevices.enumerateDevices() method, which collects information about the input and output devices available on your system, such as microphones, cameras, and speakers. The returned promise resolves to a list of devices, each with several properties, including its kind: "videoinput", "audioinput", or "audiooutput". Each device also has a unique ID string (for example, videoinput: id = csO9c0YpAf274OuCPUA53CNE0YHlIr2yXCi+SqfBZZ8=) and a label, such as "FaceTime HD Camera (Built-in)". This technology is still experimental and is not yet listed on CanIUse.com.
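A sketch of how the camera list behind a "switch camera" button might be built, assuming the promise-based navigator.mediaDevices.enumerateDevices(). The device type is exposed as the kind property of each MediaDeviceInfo, and labels are typically empty until the user has granted permission. listCameras and nextCameraId are hypothetical names, not taken from the demo.

```javascript
// Resolve to the video input devices (cameras) only.
function listCameras(mediaDevices) {
  return mediaDevices
    .enumerateDevices()
    .then((devices) => devices.filter((d) => d.kind === 'videoinput'));
}

// Pick the camera after the current one, wrapping around at the end of the list.
function nextCameraId(cameras, currentId) {
  if (cameras.length === 0) return null;
  // findIndex returns -1 for an unknown id, so we start from the first camera
  const i = cameras.findIndex((c) => c.deviceId === currentId);
  return cameras[(i + 1) % cameras.length].deviceId;
}
```

Before requesting the next camera with `{ video: { deviceId: { exact: id } } }`, it is usually wise to release the current one with `stream.getTracks().forEach((t) => t.stop())`.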

Setting the video player's source

In the initializeVideoStream function, we take the video tag from the page and set our stream as its source. The stream itself is a MediaStream object. If the browser does not support the srcObject attribute, create an object URL for the media stream and assign it to src instead.

```javascript
var initializeVideoStream = function (stream) {
  mediaStream = stream;

  var video = document.getElementById('videoTag');
  if (typeof video.srcObject !== 'undefined') {
    // Attach the MediaStream directly
    video.srcObject = mediaStream;
  } else {
    // Legacy fallback for older browsers without srcObject
    video.src = URL.createObjectURL(mediaStream);
  }

  // Enable the "switch camera" button only if more than one camera was found
  if (webcamList.length > 1) {
    document.getElementById('switch').disabled = false;
  }
};
```

Applying CSS filters

I'm a bad photographer, so I always rely on Instagram filters. But what if you could apply your own filters to photos and video? Well, you can!

I wrote a simple function for the video stream that applies CSS filters in real time. It is almost identical to the function for images.

```javascript
var changeCssFilterOnVid = function () {
  var el = document.getElementById('videoTag');
  // Reset to the base class, removing any previously applied filter
  el.className = 'view--video__video';

  // Cycle through the filters; the empty string means "no filter"
  var effect = filters[index++ % filters.length];
  if (effect) {
    el.classList.add(effect);
    console.log(el.classList);
  }
};
```

At the top of the script there is an array of filter names. They are stored as strings that match the names of the CSS classes.

```javascript
// CSS filters
var index = 0;
var filters = ['grayscale', 'sepia', 'blur', 'invert', 'brightness', 'contrast', ''];
```

And the CSS:

```css
/* image / video filters */
.grayscale {
  -webkit-filter: grayscale(1);
  -moz-filter: grayscale(1);
  -ms-filter: grayscale(1);
  filter: grayscale(1);
}

.sepia {
  -webkit-filter: sepia(1);
  -moz-filter: sepia(1);
  -ms-filter: sepia(1);
  filter: sepia(1);
}

.blur {
  -webkit-filter: blur(3px);
  -moz-filter: blur(3px);
  -ms-filter: blur(3px);
  filter: blur(3px);
}
```

You can see more examples and tweak the values in real time on the Edge Test Drive page.
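Swapping classes applies one predefined filter at a time. As an alternative (not what the original demo does), CSS filter functions can be combined into a single value and assigned to the element's style.filter property. A sketch with a hypothetical buildFilter helper:

```javascript
// Combine several CSS filter functions into one filter value,
// e.g. [['grayscale', 1], ['blur', '3px']] -> "grayscale(1) blur(3px)".
function buildFilter(steps) {
  if (steps.length === 0) return 'none';
  return steps.map(([name, amount]) => `${name}(${amount})`).join(' ');
}

// In the page, the result could be applied to the video tag:
// document.getElementById('videoTag').style.filter =
//   buildFilter([['sepia', 1], ['contrast', 1.5]]);
```

Filters are applied left to right, so the order of the steps can change the result.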

Save images

Reading through the code, you may notice a few things you are not familiar with. The first thing that caught my eye was navigator.msSaveBlob. The Blob constructor lets you easily create and manipulate blobs directly on the client. It is supported in IE 10+.

msSaveBlob lets you save a blob (in this case, our photo) to disk. It has a sibling, the msSaveOrOpenBlob method, which also lets you open the image from within the browser.

```javascript
var savePhoto = function () {
  if (photoReady) {
    var canvas = document.getElementById('canvasTag');
    if (navigator.msSaveBlob) {
      // IE/Edge: save the canvas contents as a blob directly
      var imgData = canvas.msToBlob('image/jpeg');
      navigator.msSaveBlob(imgData, 'myPhoto.jpg');
    } else {
      // Other browsers: point a download link at a data URL and click it
      var imgData = canvas.toDataURL('image/jpeg');
      var link = document.getElementById('saveImg');
      link.href = imgData;
      link.download = 'myPhoto.jpg';
      link.click();
    }
    // Reset the UI so the same photo is not saved twice
    canvas.removeEventListener('click', savePhoto);
    document.getElementById('photoViewText').innerHTML = '';
    photoReady = false;
  }
};
```

If the browser supports msSaveBlob, saving the image takes noticeably less code.
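The excerpt above assumes the photo has already been drawn onto canvasTag. A sketch of how that capture step might look, plus a promise wrapper around the standard canvas.toBlob(), which plays the role of msToBlob() in other browsers. Assumptions: the function names are hypothetical, and because a CSS class on the video is not baked into pixels drawn with drawImage(), the filter is reapplied through the 2D context's filter property where the browser supports it.

```javascript
// Copy the current video frame onto a canvas, optionally applying a CSS-style filter.
function captureFrame(video, canvas, cssFilter) {
  // Match the canvas to the video's native resolution
  canvas.width = video.videoWidth;
  canvas.height = video.videoHeight;
  const ctx = canvas.getContext('2d');
  if (cssFilter && 'filter' in ctx) {
    // ctx.filter accepts the same syntax as the CSS filter property, e.g. "sepia(1)"
    ctx.filter = cssFilter;
  }
  ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
  return canvas;
}

// Promise wrapper around the callback-based canvas.toBlob().
function canvasToJpeg(canvas, quality = 0.9) {
  return new Promise((resolve, reject) => {
    canvas.toBlob((blob) => {
      if (blob) resolve(blob);
      else reject(new Error('Canvas could not be encoded'));
    }, 'image/jpeg', quality);
  });
}
```

The resulting blob can be passed to navigator.msSaveBlob() where it exists, or to URL.createObjectURL() to build a download link elsewhere.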

What's next?

This is just the beginning. We could also use WebGL to apply even more filters, or feed the audio/video stream into a real-time interactive environment. Maybe that will be my next project...

You could also tie in the Web Audio API to apply frequency modulation to the audio stream. The Web Audio tuner example demonstrates this nicely. Some people find it easier to learn visually, so also check out this example from Microsoft.
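On the audio side, a MediaStream from getUserMedia() can be fed into the Web Audio API via AudioContext.createMediaStreamSource() and an AnalyserNode. A rough sketch of the frequency-analysis step a tuner builds on: find the loudest FFT bin and convert its index to hertz (bin width = sampleRate / fftSize). This is a deliberate simplification; real tuners use more precise pitch detection, and the helper names are hypothetical.

```javascript
// Wire a microphone stream into an AnalyserNode (browser only).
function createAnalyser(audioCtx, stream, fftSize = 2048) {
  const source = audioCtx.createMediaStreamSource(stream);
  const analyser = audioCtx.createAnalyser();
  analyser.fftSize = fftSize; // must be a power of two
  source.connect(analyser);
  return analyser;
}

// Given byte magnitudes from analyser.getByteFrequencyData(),
// return the frequency (Hz) of the loudest bin.
function peakFrequency(bins, sampleRate, fftSize) {
  let best = 0;
  for (let i = 1; i < bins.length; i++) {
    if (bins[i] > bins[best]) best = i;
  }
  return (best * sampleRate) / fftSize;
}
```

In a page you would allocate `new Uint8Array(analyser.frequencyBinCount)`, fill it with `analyser.getByteFrequencyData(bins)` on each animation frame, and pass `audioCtx.sampleRate` and `analyser.fftSize` to peakFrequency.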

Once mobile browsers support this technology, you will be able to use these APIs to access essential hardware regardless of platform. It's a great time to be a web developer, and I hope that after trying this you'll understand why I'm so excited to be part of it.

Please report any errors you find via private message.

Source: https://habr.com/ru/post/264167/
