Forming musical preferences in a neural network: an experiment in creating a smart player

This article describes an attempt to teach a (relatively) simple neural network to “listen” to music and to tell the tracks a listener considers “good” from those they consider “bad”.

Purpose

To teach a neural network to distinguish “bad” music from “good”, or to show that a neural network (at least this particular implementation) is incapable of it.

Stage One: Data Normalization

Music is a combination of an uncountable number of sounds, so it cannot simply be fed to a neural network as is. First we have to decide what the network will “listen to”. There are countless ways to pick out the most important features of a piece of music. As the reference data, I chose to extract some representation of the frequencies of the sounds used in the music.


I chose frequencies because, first, they differ between musical instruments.

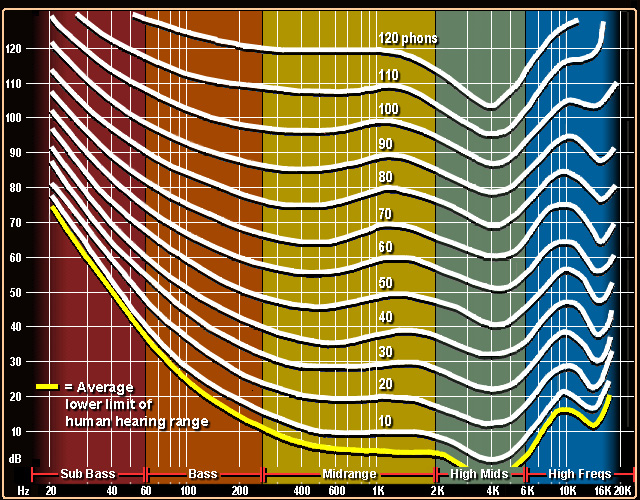

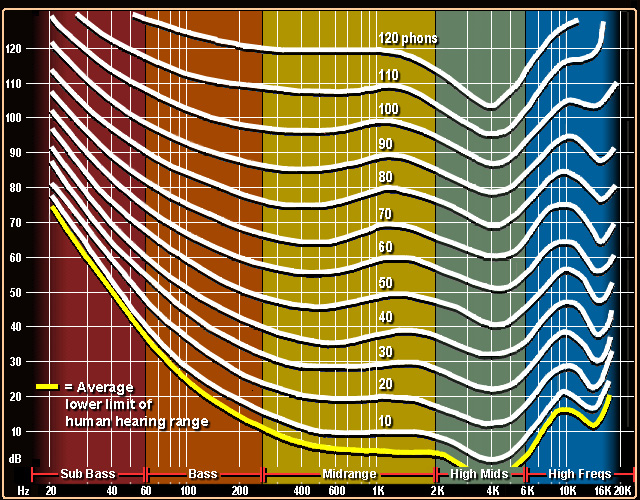

Second, hearing sensitivity depends on frequency and is fairly individual (within reasonable limits).

Third, how strongly a track is saturated with particular frequencies is quite individual, but it is similar for similar compositions. For example, a guitar solo in two different but similar tracks will produce a similar picture of “saturation” at the level of individual frequencies.

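Thus, normalization comes down to extracting information about frequencies that shows:

- how often sound in a given frequency range occurs in the composition

- how loud it was

- how long it lasted

- and all of this for each frequency range separately (the entire audible spectrum has to be divided into a certain number of ranges).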

To obtain the frequency saturation of the track at each time interval, you can use FFT data. You could compute it yourself, but I use the existing Bass library, or rather its Bass.NET wrapper, which provides this data in a more convenient form.

To get FFT data from a track, it is enough to write a small function.
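The article does not reproduce the function itself, which relies on Bass.NET. As a library-free illustration of the same step, here is a sketch in Python. It assumes the track has already been decoded to mono float samples. It cuts the signal into non-overlapping frames, applies a Hann window, and returns each frame's magnitude spectrum via a radix-2 FFT. The names `fft` and `magnitude_frames` and the 2048-sample frame size are assumptions, not the author's code. A 2048-point FFT of real samples gives 1024 bins below the Nyquist frequency, which happens to match the 1024 gradations used later.

```python
import cmath
import math
from typing import List


def fft(x: List[complex]) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def magnitude_frames(samples: List[float], frame_size: int = 2048) -> List[List[float]]:
    """Split samples into non-overlapping frames (a trailing partial frame is dropped)
    and return, for each frame, the magnitudes of the first frame_size // 2 FFT bins."""
    if frame_size < 2 or frame_size & (frame_size - 1):
        raise ValueError("frame_size must be a power of two")
    # Hann window reduces leakage between neighbouring bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / frame_size) for i in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        chunk = [complex(s * w) for s, w in zip(samples[start:start + frame_size], window)]
        spectrum = fft(chunk)
        # Divide by frame size so magnitudes do not grow with frame length.
        frames.append([abs(c) / frame_size for c in spectrum[: frame_size // 2]])
    return frames
```

Overlapping frames or a different window are equally valid choices. This sketch only shows the shape of the data: one float array per time interval.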

Once the raw data is obtained, it needs to be processed.

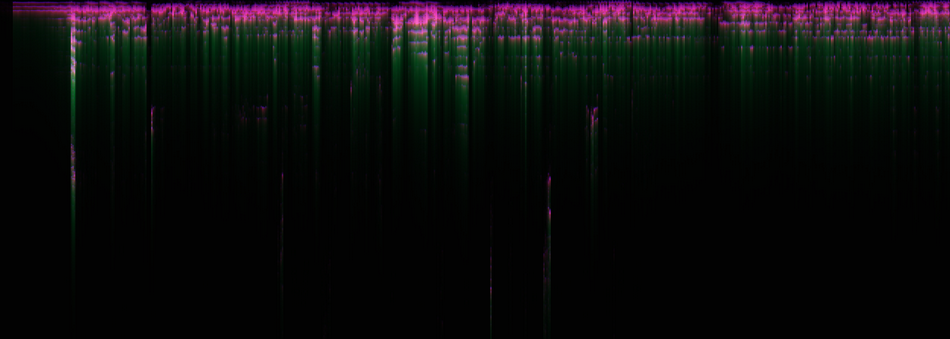

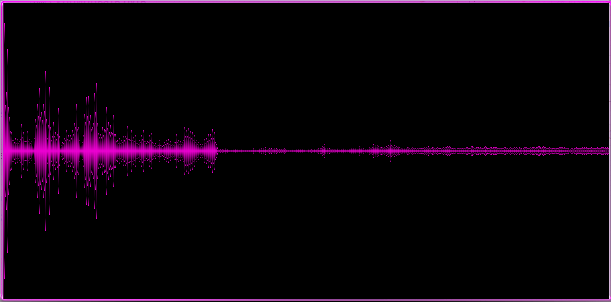

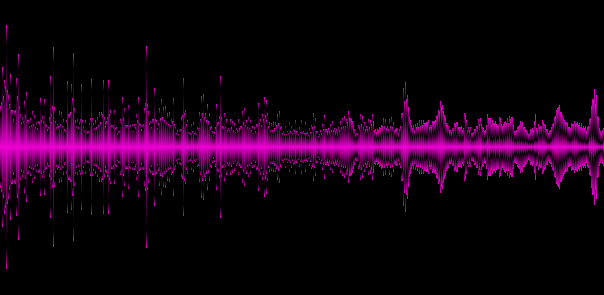

Data visualization

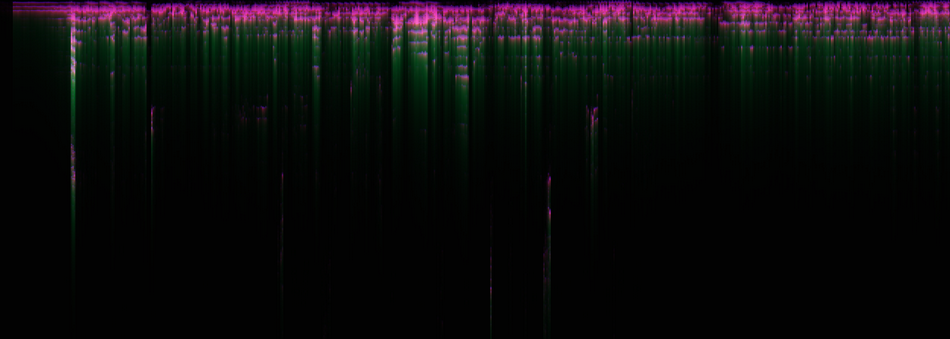

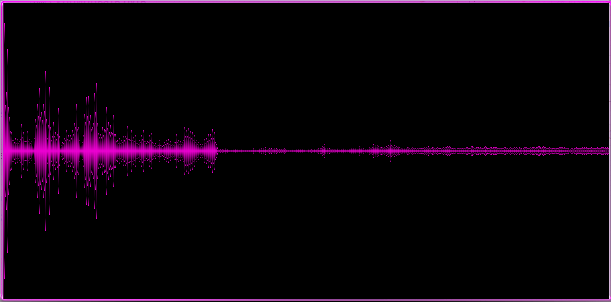

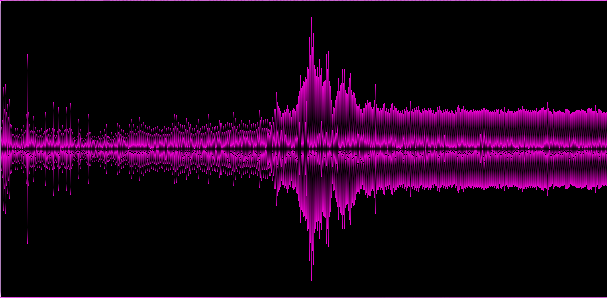

First, we must decide how many frequency ranges to split the sound into. This parameter determines how detailed the analysis will be, but it also increases the load on the neural network (more input data requires more neurons). For our task we take 1024 gradations: a fairly detailed frequency spectrum with a relatively small amount of output data. Next we must decide how to turn the N float[] arrays (one per time interval) into a single array that captures more or less all the information we need: how saturated the sound is in each part of the spectrum, how often each part of the spectrum occurs, its volume, and its duration.
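The article does not say whether the 1024 gradations are raw FFT bins or grouped ranges. If fewer input neurons are wanted, one simple option is to average equal-width groups of neighbouring bins into bands. This is an assumption here, not necessarily what the author did, and `to_bands` is a hypothetical helper:

```python
from typing import List


def to_bands(spectrum: List[float], bands: int) -> List[float]:
    """Average consecutive FFT bins into `bands` equal-width ranges.
    Requires len(spectrum) to be a positive multiple of `bands`."""
    if bands <= 0 or not spectrum or len(spectrum) % bands:
        raise ValueError("len(spectrum) must be a positive multiple of bands")
    width = len(spectrum) // bands
    return [sum(spectrum[i * width:(i + 1) * width]) / width for i in range(bands)]
```

Equal-width bands keep the code trivial. A logarithmic split would follow human hearing more closely, at the cost of a little more bookkeeping.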

The first parameter, “saturation”, is simple: sum all the arrays, and the result shows how much of each part of the spectrum there was in the whole track. However, this does not reflect the other parameters.
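Summing the per-frame arrays element-wise gives the per-band “saturation”. The sketch below assumes each frame is a list of band levels. It also shows why a sum alone is not enough: two tracks whose frames contain the same levels in a different order get identical sums.

```python
from typing import List


def saturation(frames: List[List[float]]) -> List[float]:
    """Element-wise sum over all frames: how much of each band the whole track contains."""
    if not frames:
        return []
    return [sum(band) for band in zip(*frames)]


# Same levels, different timing: one sustained note vs. the same band pulsing on and off.
steady = [[1.0, 0.0], [1.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
pulsing = [[1.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 0.0]]
print(saturation(steady), saturation(pulsing))  # both [2.0, 0.0]
```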

Reflecting the other parameters in the final array requires a somewhat more elaborate summation. I will not go into the details of my implementation of this “summing” function, since there are practically infinitely many ways to do it.
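Since the author leaves the “summing” function open, here is one hypothetical possibility, only to make the idea concrete. For each band it measures three things: how often the band is active (level above a threshold), how loud it is while active, and how long its longest active stretch lasts. It then combines the three into a single number with weights. The threshold, the weights and the name `band_summary` are arbitrary assumptions, not the author's implementation.

```python
from typing import List, Tuple


def band_summary(frames: List[List[float]], threshold: float = 0.01,
                 weights: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> List[float]:
    """Hypothetical 'summing' function: one value per band combining how often
    the band sounds, how loud it is when it sounds, and its longest run."""
    n_frames = len(frames)
    if n_frames == 0:
        return []
    w_often, w_loud, w_long = weights
    result = []
    for band in zip(*frames):
        active = [level for level in band if level > threshold]
        often = len(active) / n_frames                       # share of frames where the band sounds
        loud = sum(active) / len(active) if active else 0.0  # mean level while sounding
        longest = run = 0
        for level in band:                                   # longest consecutive active stretch
            run = run + 1 if level > threshold else 0
            longest = max(longest, run)
        result.append(w_often * often + w_loud * loud + w_long * longest / n_frames)
    return result
```

Unlike a plain sum, this separates a sustained note from a pulsing one with the same total energy. The result can then be scaled to the range the network expects.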

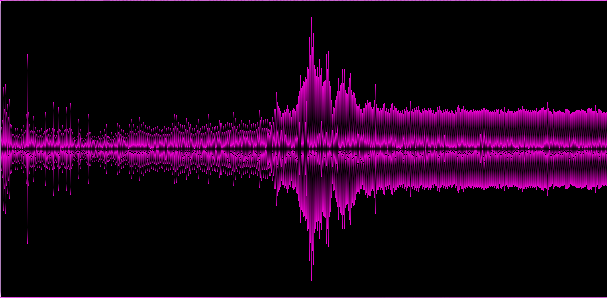

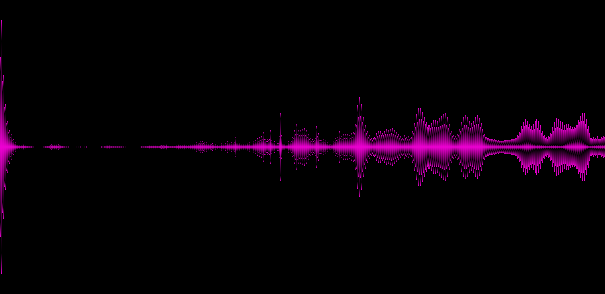

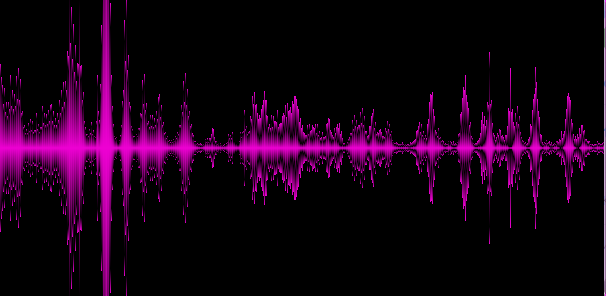

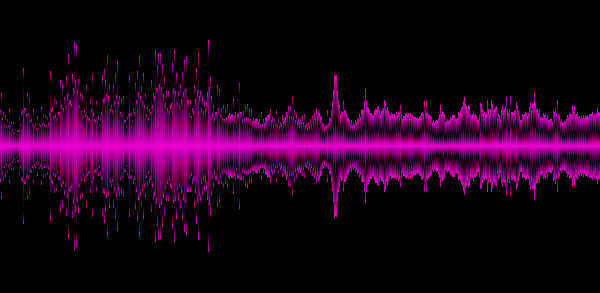

Graphic representation of the resulting array:

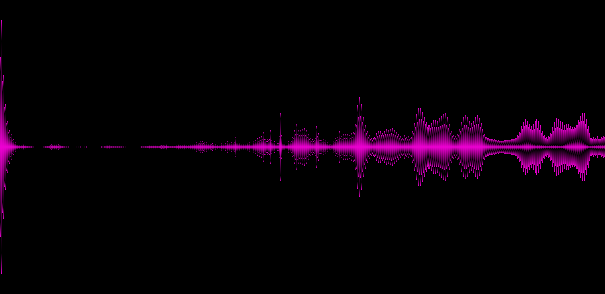

Example 1. A relatively calm melody with light rock elements, piano and vocals

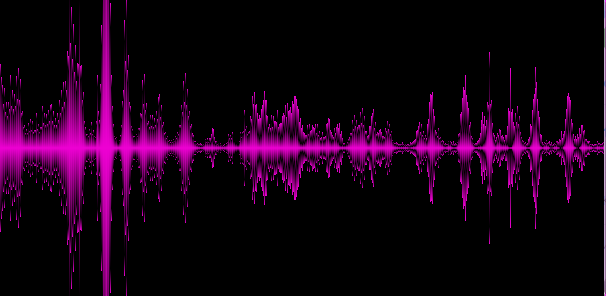

Example 2. Relatively “soft” dubstep with elements of not-so-soft, scrape-your-brain-off-the-walls dubstep

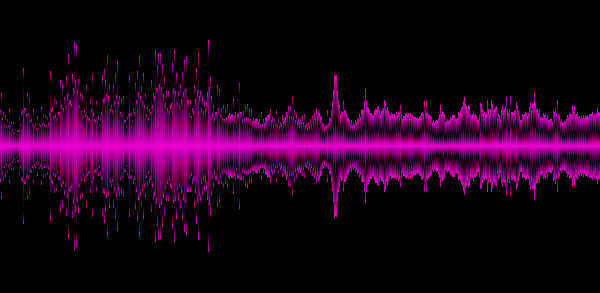

Example 3. Music in a style close to trance.

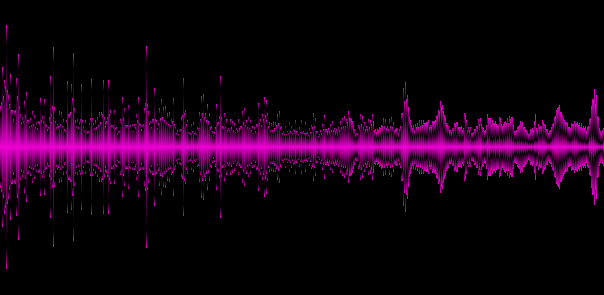

Example 4. Pink Floyd

Example 5. Van Halen

Example 6. Anthem of Russia

These examples show that different genres produce different “spectral pictures”. This is good: it means we have captured at least some key features of a track.

Now we need to prepare the neural network that we will “train”. There are many neural network algorithms; some suit certain tasks better and others worse. I am not setting out to study every type in the context of this task, so I take the first implementation I came across (thanks to dr.kernel), which is flexible enough to adapt to the task. I deliberately do not look for a “more suitable” network, because the point is to test “a neural network” as such. If a randomly chosen implementation shows a good result, then more suitable types of networks will surely do even better. If it does not cope, that shows only that this particular network could not handle the task.

This network is trained on data in the range from 0 to 1 and also outputs values from 0 to 1, so the data must be brought into a suitable form. This can be done in many ways; the result will be similar.
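One common way to bring a feature vector into the 0-1 range is min-max scaling. The sketch assumes each track's spectral array is scaled on its own. Scaling each input position across the whole training set is an equally reasonable alternative. `min_max_scale` is a hypothetical helper.

```python
from typing import List


def min_max_scale(values: List[float]) -> List[float]:
    """Linearly map values into [0, 1]; a constant vector maps to all zeros."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [0.0] * len(values)
    return [(v - lo) / span for v in values]
```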

Stage Two: Neural Network Preparation

The network I use is defined by the number of layers, the number of inputs and outputs, and the number of neurons in each layer.
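The article does not describe the internals of the network it borrowed. Assume it is a plain fully connected network with sigmoid units, whose outputs naturally fall between 0 and 1 as required above. Its whole configuration is then just a list of layer sizes. Here is a minimal forward-pass sketch with a hypothetical `MLP` class; training (for example, backpropagation) is omitted.

```python
import math
import random
from typing import List


class MLP:
    """Minimal fully connected network with sigmoid units.
    The configuration is the list of layer sizes: [inputs, hidden..., outputs]."""

    def __init__(self, sizes: List[int], seed: int = 0):
        rng = random.Random(seed)
        self.sizes = sizes
        # weights[l][j][i]: weight from neuron i in layer l to neuron j in layer l + 1
        self.weights = [[[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
                        for n_in, n_out in zip(sizes, sizes[1:])]
        self.biases = [[0.0] * n_out for n_out in sizes[1:]]

    def forward(self, x: List[float]) -> List[float]:
        """Propagate an input vector through every layer; each output lies in (0, 1)."""
        if len(x) != self.sizes[0]:
            raise ValueError("input length must match the first layer size")
        for w, b in zip(self.weights, self.biases):
            x = [1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(row, x)) + bj)))
                 for row, bj in zip(w, b)]
        return x
```

For the spectral input described above, the first size in the list would be 1024. Changing the hidden sizes or their count is exactly what "changing the configuration" means in this representation.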

Obviously, not all configurations are equally useful, but I do not know of any reliable method for finding the “best” configuration for this task, if one exists at all. One could simply try several configurations and settle on the best one. I will take a different route instead: I will grow the network with an evolutionary algorithm, explained later in the article.

The input format is now defined; the output format remains. One could simply divide tracks into “good” and “bad”, and a single output neuron would suffice. But “good” and “bad” are loose concepts: some music suits waking up in the morning, other music a walk through the city on the way to work, yet other music an evening rest after work, and so on. So the quality of a track must also be judged relative to the hour of the day and the day of the week, giving 24 * 7 = 168 output neurons.
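With 24 * 7 = 168 outputs, each (day of week, hour) pair needs a fixed output neuron. Below is a sketch of one such mapping. The day-major order and the Monday = 0 convention (taken from Python's `datetime.weekday()`) are assumptions; any fixed one-to-one mapping works.

```python
from datetime import datetime
from typing import Tuple

HOURS_PER_DAY = 24
DAYS_PER_WEEK = 7
N_OUTPUTS = HOURS_PER_DAY * DAYS_PER_WEEK  # 168 output neurons


def output_index(day: int, hour: int) -> int:
    """Map (day 0-6, hour 0-23) to an output neuron index 0-167, day-major."""
    if not (0 <= day < DAYS_PER_WEEK and 0 <= hour < HOURS_PER_DAY):
        raise ValueError("day must be in 0-6 and hour in 0-23")
    return day * HOURS_PER_DAY + hour


def day_and_hour(index: int) -> Tuple[int, int]:
    """Inverse of output_index."""
    return divmod(index, HOURS_PER_DAY)


def current_index(now: datetime) -> int:
    """Output neuron for the moment a track is playing (Monday = day 0)."""
    return output_index(now.weekday(), now.hour)
```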

Next we need a training sample. You could, of course, go through all the tracks and mark at what times you want to hear each one (or would rather never hear it). I am not one to spend hours labeling tracks, though; it is much easier to do it along the way, while a track is playing. So the training sample should be built by the player itself: while listening, you can mark a track as “good” or “bad”. Imagine such a player exists (it really does: it was written on top of the open source code of another player). After a dozen or so hours of listening on different days, the data for the first sample is collected. Each sample contains the input data (1024 values of the track's spectral pattern) and the output data (24 * 7 values from 0 to 1, one for each hour of each of the 7 days of the week, where 0 means a completely bad track and 1 a very good one). When a track is marked “good”, a plus is added to every hour of every day of the week, but a larger plus goes to the current hour and day of the week; the same applies to “bad”. As a result the data is not just 0 and 1: many values lie in between.
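The labeling rule is described only qualitatively. Here is a sketch of one way to implement it, with several assumptions. Each track's 168 targets start at a neutral 0.5. Each mark shifts every slot by a small step and the current slot by a larger one. The step sizes (`everywhere`, `here`), the clipping to [0, 1] and the name `apply_mark` are hypothetical choices.

```python
from typing import List


def apply_mark(labels: List[float], slot: int, good: bool,
               everywhere: float = 0.05, here: float = 0.25) -> List[float]:
    """Return updated soft labels for one track after a 'good' or 'bad' mark.
    Every hour/day slot moves a little; the slot where the mark was made moves more."""
    sign = 1.0 if good else -1.0
    updated = []
    for i, value in enumerate(labels):
        step = everywhere + (here if i == slot else 0.0)
        updated.append(min(1.0, max(0.0, value + sign * step)))  # keep targets in [0, 1]
    return updated
```

Repeated marks push a slot toward 0 or 1, so the targets end up spread between the extremes.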

With training data in hand, we need to define what counts as a trained network. For the data loaded into it, the difference between the network's response to the inputs and the original outputs should be minimal. The network's ability to “predict” on unseen data is also extremely important: it must not overfit, because an overfitted network is of little use. Measuring the “quality of response” is easy, since an error function does exactly that; the open question is how to measure the quality of prediction. To solve this, it is enough to train the network on one sample but check its quality on another. Both samples must be random and must not share any elements.
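Here is a sketch of the check described here. Shuffle the samples once and cut them into a training part and a checking part, so no element appears in both. Then compare network outputs with targets using an error function. The 80/20 ratio and the choice of mean squared error are assumptions; the article only says an error function is used.

```python
import random
from typing import List, Sequence, Tuple


def split_disjoint(samples: Sequence, train_fraction: float = 0.8,
                   seed: int = 0) -> Tuple[list, list]:
    """Shuffle once and cut, so no sample lands in both the training and the checking set."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    cut = int(len(items) * train_fraction)
    return items[:cut], items[cut:]


def mse(predicted: List[float], expected: List[float]) -> float:
    """Mean squared error between the network output and the target output."""
    if len(predicted) != len(expected) or not expected:
        raise ValueError("outputs must be non-empty and of equal length")
    return sum((p - e) ** 2 for p, e in zip(predicted, expected)) / len(expected)
```

Training error is measured on the first part and prediction quality on the second. A network that does well on the first but badly on the second has overfitted.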

The procedure for checking how well the network has learned is now defined, and we need to determine the network's configuration. As mentioned, an evolutionary algorithm is used for this. We take a starting configuration, say 10 layers of 100 neurons each (this will certainly not be enough, and the quality of such a network will not be very good). We train it for a certain number of steps (say 1000) and then measure the quality of its training. Then comes the “evolution”: create 10 configurations, each a “mutant” of the original, changed in a random direction in either the number of layers or the number of neurons in some or all layers. Train each configuration the same way as the original, select the best, and make it the new original. Repeat until no configuration can be found that learns better than the current one. That configuration is considered the best: it turned out to “remember” the original data best and to predict best, so its training result is the best this search can achieve.
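The search described here is a greedy (hill-climbing) evolutionary loop. Here is a sketch under stated assumptions. A configuration is the list of hidden-layer sizes. `mutate` changes it in a random direction: it adds a layer, removes one, or resizes some. `score` is a caller-supplied function that would train a network with that configuration for a fixed number of steps and return its error on the checking sample (lower is better). The mutation probabilities and step sizes are hypothetical, and `max_generations` is an added safety limit.

```python
import random
from typing import Callable, List, Tuple

Config = List[int]  # neurons per hidden layer


def mutate(config: Config, rng: random.Random, max_step: int = 50) -> Config:
    """Return a random 'mutant': add a layer, remove a layer, or resize some layers."""
    child = list(config)
    choice = rng.random()
    if choice < 0.2:
        child.insert(rng.randrange(len(child) + 1), rng.randint(10, 200))
    elif choice < 0.4 and len(child) > 1:
        del child[rng.randrange(len(child))]
    else:
        for i in range(len(child)):
            if rng.random() < 0.5:
                child[i] = max(1, child[i] + rng.randint(-max_step, max_step))
    return child


def evolve(start: Config, score: Callable[[Config], float], mutants: int = 10,
           max_generations: int = 1000, seed: int = 0) -> Tuple[Config, float]:
    """Keep the best of `mutants` children while it beats the parent; stop otherwise."""
    rng = random.Random(seed)
    best, best_score = list(start), score(start)
    for _ in range(max_generations):
        candidates = [mutate(best, rng) for _ in range(mutants)]
        cand_score, cand = min(((score(c), c) for c in candidates), key=lambda t: t[0])
        if cand_score >= best_score:
            break  # no mutant learns better than the current configuration
        best, best_score = cand, cand_score
    return best, best_score
```

In the article's setting, `start` would be `[100] * 10` and `score` would wrap about 1000 training steps plus the validation check. Every candidate costs a full training run, which is why the whole search took hours.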

The evolutionary process took about 6 hours for a sample of 6000 elements; after a couple of hours spent optimizing it, the process takes about 30 minutes. The configuration may vary between samples, but most often it has about 7 layers. The number of neurons grows gradually up to layers 3-4 and then falls more quickly toward the last layer, forming a kind of hump at layer 3-4 of 7. Apparently this shape is the most “capable” one for this network.

With the network “grown” and able to learn, the usual training begins, long and tedious (up to 15 minutes). After that the network is ready to “listen to music” and say “bad” or “good”.

Stage Three: Collecting Results

The “brain”, that is, the configuration of the trained network, takes up 25 MB. The size varies between samples: in general, the larger the sample, the more neurons are needed to cope with it, but an average network will be roughly this size.
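The article does not say how the 25 MB file is laid out; it may use a text format or store extra per-neuron state. As a rough sanity check only: a fully connected layer stores n_in * n_out weights plus n_out biases. At 8 bytes per double, 25 MB corresponds to roughly 3 million parameters. The helper names below are hypothetical.

```python
from typing import List


def parameter_count(sizes: List[int]) -> int:
    """Weights plus biases of a fully connected network with the given layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


def size_in_mb(sizes: List[int], bytes_per_value: int = 8) -> float:
    """Raw parameter storage in MiB, assuming each value is a binary double."""
    return parameter_count(sizes) * bytes_per_value / (1024 * 1024)
```

For example, a single 1024-to-1024 layer already needs about 8 MB in this format. A 7-layer network with 1024 inputs and a wide hump in the middle can plausibly reach the reported size.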

In the training set, the tracks I considered “good” (in my humble opinion) were Van Halen, Pink Floyd, classical music (not all of it), soft rock, and melodic, calm tracks. The ones I considered “bad” were rap, pop and overly heavy rock.

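Here is the “quality index” that the trained network computes for a selection of random tracks:

- Van Halen - 29 pts

- Rammstein - Mutter (album) - 20-23 pts

- Rihanna - 26 pts

- The Punisher - 11-17 pts

- Radiorama - 25 pts

- R. Clayderman - 25-29 pts

- The Gregorians - 27-29 pts

- Pink Floyd - 29-33 pts

- Low-quality Russian “rap” - 9-11 pts

- Red Mold - 16-19 pts

- Bi-2 - 24-29 pts

- Leap Year - 25-33 pts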

Conclusion:

The first neural network that came to hand, after “evolutionary” selection of its configuration and subsequent training, showed some “skill” in recognizing the characteristics of a track. A neural network can be taught to “listen to music” and to separate tracks that the “listener” who trained it will like from those they will not.

The full source code of the C# application and a compiled build can be downloaded from GitHub or via Sync.

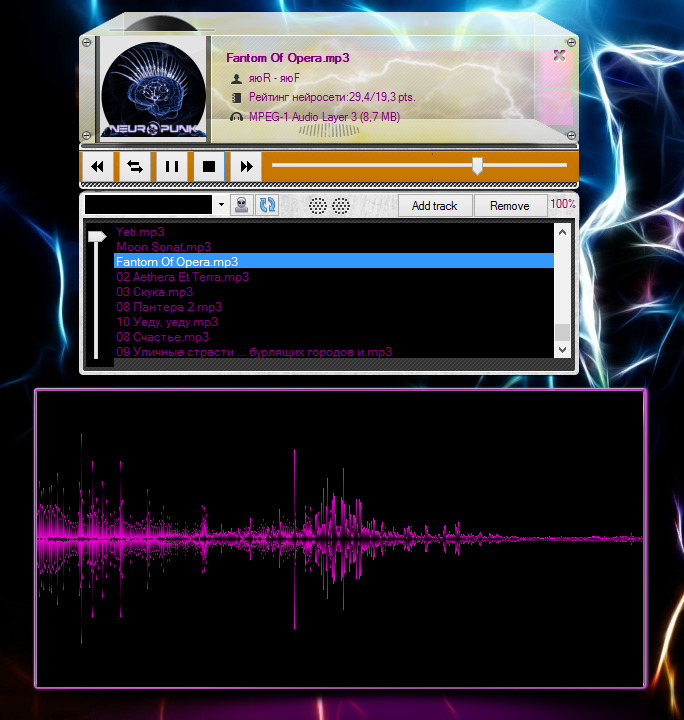

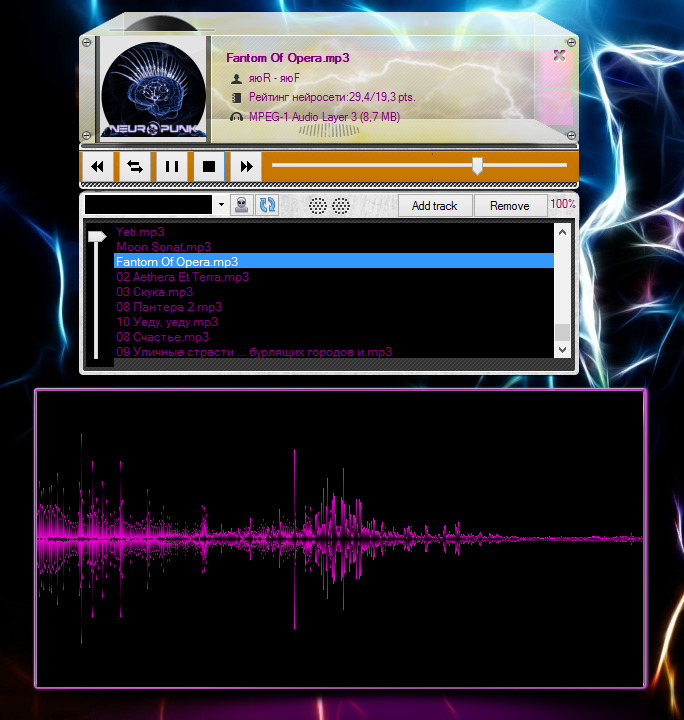

Appearance of the application , which implements all the functionality described in the article:

I hope my experience with this study will help those who want to explore what neural networks can do.

Source: https://habr.com/ru/post/263811/
