Modern Adaptec RAID controllers from A to Z. Part 1

Recently I came across a useful and very detailed article by Adaptec that covers practically every nuance of its controllers; its only drawback is its length of 60 pages. Naturally, I wanted to condense it and split it into two parts.

The material will be of interest to anyone involved in data storage: integrators, system administrators and end users.

So, here is Part 1.


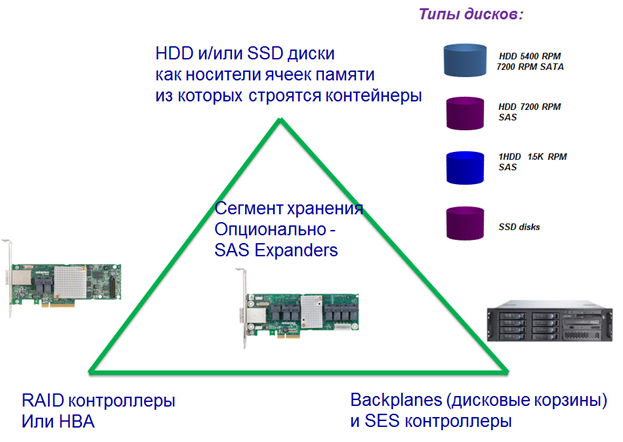

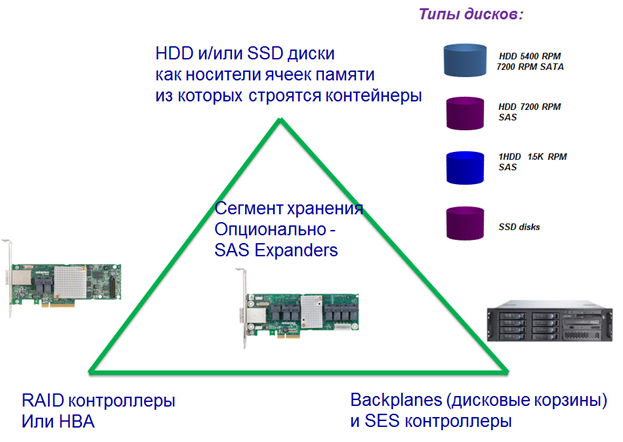

Today, the internal storage system of a modern server is based on SAS/SATA technologies. Compared with the classic parallel SCSI technology, which is no longer in use, not much is fundamentally new here. In fact, the main addition is the SAS expander, which lets you build a layer-2 network segment between the controller and the disks on top of a layer-2 switch (formally, a “switched media” segment connecting initiators and targets).

As a rule, a server's internal storage system is built from one or more SAS RAID controllers or HBAs (sometimes integrated on the motherboard) and SAS/SATA HDDs or SSDs.

External storage systems may additionally use other technologies such as Fibre Channel, iSCSI and PCI-E. Data-center storage systems can use newer solutions such as NVRAM (virtual disks built on fast RAM that is protected against power loss) and PCI-E fabrics (where disk access is provided over a PCI Express network segment).

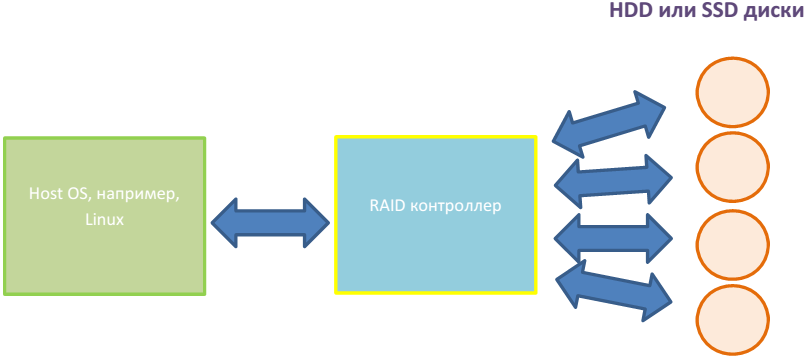

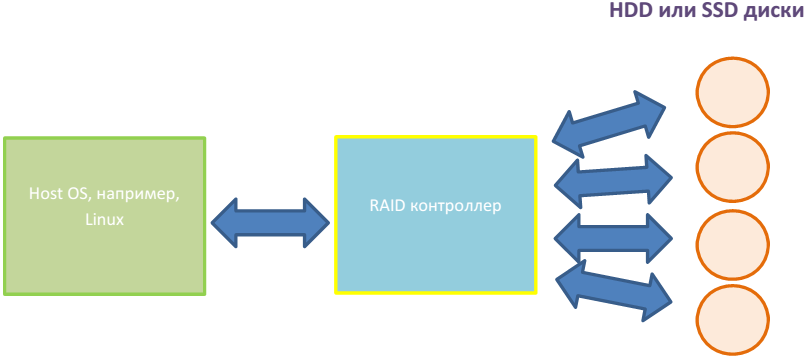

The figure shows a modern internal server storage subsystem.

In the most general sense, the stack is the operating system of the RAID controller.

In a narrower sense, the stack is a container virtualizer (or, more simply, a disk virtualizer). It creates containers of the desired type, distributes them across the required number of disks and, from a large number of such containers, “bakes” a virtual disk, which the Adaptec stack calls a “RAID volume”.

Note: when assembling the server (building the storage system), load the latest version of the controller's operating system, i.e. its firmware, onto the RAID controller. Firmware for Adaptec RAID controllers is free.

Oddly enough, RAID stacks today are proprietary solutions that do not follow any common standard. You can remove all physical disks with RAID volumes on them from, say, an Adaptec 6805 controller, move them to an 8885 controller, and the volumes will be visible. But if you try to move volumes this way to a controller from another manufacturer, no miracle will happen: you will not be able to see the data or the RAID volumes. Why? Because the other manufacturer's controller runs its own stack, which is not compatible with the Adaptec stack.

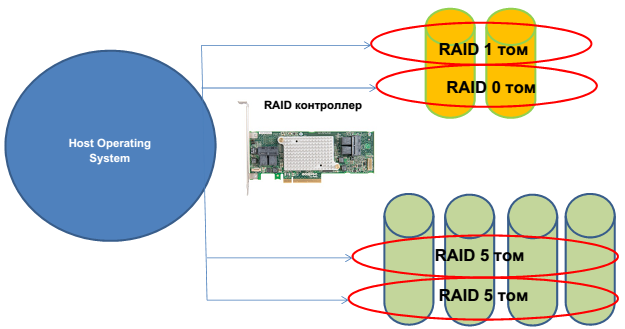

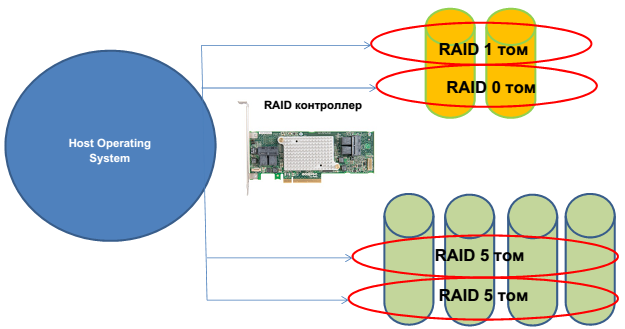

Each RAID volume is presented to the computer's operating system as a “SCSI disk” and appears as a separate object in disk utilities such as the disk manager. It looks like this.

Here the disk manager shows four virtual disks: RAID0, RAID1 and two RAID5 disks.

Every level of the stack takes part in creating a volume.

The task of the physical-disk level is to create a special area on each disk where information about the created volumes is stored. This area is called the metadata area; it is a complex storage container for service information, and only the controller's operating system has access to it. In the Adaptec stack, creating this service area is called initialization and is performed with the initialize command.

Controllers that support HBA mode (the 7th and 8th series) also have the reverse command, deinitialize. It completely removes this data structure from the physical disk and switches the disk to HBA mode. So, for a 7th or 8th series controller to work as a plain HBA, it is enough to deinitialize all the disks. They will then all be visible in the host operating system's utilities, such as the disk manager, with no further steps required.

The physical level also performs another well-known function called coercion. In the Adaptec stack it is carried out together with initialize. Coercion slightly “trims” the disk's capacity. The reason is that disks of the same nominal capacity from different manufacturers actually have slightly different capacities; coercion is performed so that a drive from one manufacturer can later be replaced, if needed, with a drive from another. The trimmed-off area is simply “lost” for good and never used.
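To illustrate, here is a minimal sketch of coercion, assuming the controller simply rounds each disk's raw capacity down to a fixed granularity. The 1 GiB step, the function name `coerce_capacity` and the drive sizes are hypothetical; the article does not say which rounding rule Adaptec firmware actually uses.

```python
GIB = 1024 ** 3  # hypothetical coercion granularity: 1 GiB


def coerce_capacity(raw_bytes: int, step: int = GIB) -> int:
    """Round a raw capacity down to a whole number of `step`-sized units."""
    if step <= 0:
        raise ValueError("step must be positive")
    return (raw_bytes // step) * step


# Two nominal "1 TB" drives from different vendors (illustrative sizes).
vendor_a = 1_000_204_886_016
vendor_b = 1_000_207_286_272

usable_a = coerce_capacity(vendor_a)
usable_b = coerce_capacity(vendor_b)
print(usable_a == usable_b)   # True: both drives expose the same capacity
print(usable_a // GIB)        # 931
print(vendor_a - usable_a)    # bytes trimmed off drive A and never used
```

A plain round-down only helps when the replacement drive's raw size lands in the same step: a drive just below a step boundary would still come out one step smaller, which is one reason real firmware may use a different rule.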

The physical level can also host various service functions: destructive or non-destructive disk verification, formatting, complete data erasure, zero-filling the disk, and so on.

The logical level is necessary for two reasons:

First, it makes the building blocks for volumes much more granular. You can create several logical disks on one physical disk (simply by carving off part of its capacity), or create one logical disk from two or more physical disks. In the latter case the capacities of the physical disks are simply added together: data first fills one physical disk, then the next one, and so on. This method of joining disks is called Chain (some other stacks use the term Span).

Second, when such objects are created, their description is written to the metadata, so they are no longer tied to physical locations. After that you can move disks from one controller port to another, or move volumes from one controller to another, and everything will keep working.
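The Chain method from the first point can be sketched as an address translation: a logical block address (LBA) on the chained logical disk maps to one member disk and an offset on it, with members filled in order. `chain_map` and the block-count representation are hypothetical illustrations, not Adaptec internals.

```python
from bisect import bisect_right
from itertools import accumulate


def chain_map(lba: int, sizes: list[int]) -> tuple[int, int]:
    """Map a block of a Chain (Span) to (member index, block on that member).

    `sizes` lists each member's capacity in blocks; members are filled in order.
    """
    ends = list(accumulate(sizes))             # cumulative end of each member
    if not 0 <= lba < ends[-1]:
        raise IndexError("LBA outside the chained capacity")
    member = bisect_right(ends, lba)           # first member whose end is > lba
    start = ends[member - 1] if member else 0  # first block of that member
    return member, lba - start


sizes = [100, 250, 50]       # three disks, capacities in blocks
print(chain_map(0, sizes))   # (0, 0)
print(chain_map(99, sizes))  # (0, 99)
print(chain_map(100, sizes)) # (1, 0)
print(chain_map(399, sizes)) # (2, 49)
```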

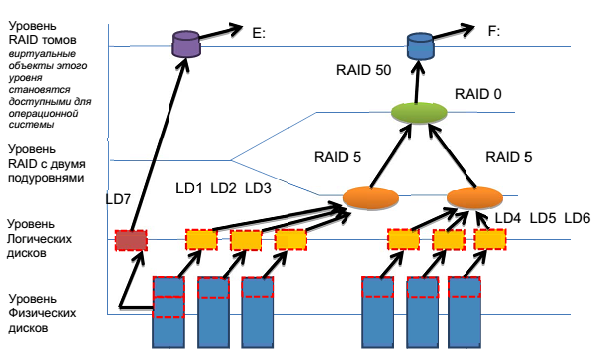

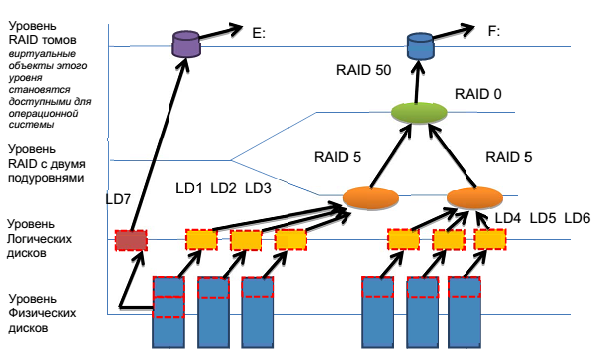

Next comes the RAID level. As a rule, in modern stacks this level has two sublevels. Each sublevel applies an elementary RAID function such as chain or span (not really RAID, it just “adds up” the capacities of different disks), RAID0, RAID1, RAID1E, RAID5, RAID6, etc.

The lower sublevel takes logical disks, for example LD1, LD2 and LD3 as shown, and “bakes” a RAID5 volume from them. The same happens with LD4, LD5 and LD6, producing a second RAID5. The two RAID5 volumes are passed to the upper sublevel, which applies the RAID0 function. The result is a complex volume called RAID50 (5 is the RAID type used on the lower sublevel and 0 the RAID function of the upper one). The only parameter still missing is how many RAID5 groups (here, 2) make up the RAID50; in the Adaptec stack this is called the number of second-level devices. You will need this option when creating complex volumes of type 50 or 60.
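The capacity arithmetic behind RAID50 can be sketched as follows: each lower-level RAID5 group gives up one member's worth of capacity to parity, and the upper-level RAID0 adds the groups together. The function names are hypothetical, and the sketch ignores coercion and metadata overhead.

```python
def raid5_usable(members: int, disk_bytes: int) -> int:
    """Usable capacity of one RAID5 group: one member's worth holds parity."""
    if members < 3:
        raise ValueError("RAID5 needs at least 3 members")
    return (members - 1) * disk_bytes


def raid50_usable(total_disks: int, second_level_devices: int, disk_bytes: int) -> int:
    """RAID0 striped across `second_level_devices` equal RAID5 groups."""
    if second_level_devices < 2 or total_disks % second_level_devices:
        raise ValueError("disks must split evenly into at least two RAID5 groups")
    per_group = total_disks // second_level_devices
    return second_level_devices * raid5_usable(per_group, disk_bytes)


TB = 10 ** 12
# The example from the text: LD1-LD6 split into two RAID5 groups of three disks.
print(raid50_usable(6, 2, 4 * TB) // TB)  # 16 (TB usable out of 24 TB raw)
```

With two second-level devices the volume tolerates one failed disk in each RAID5 group, i.e. up to two failures as long as they fall into different groups.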

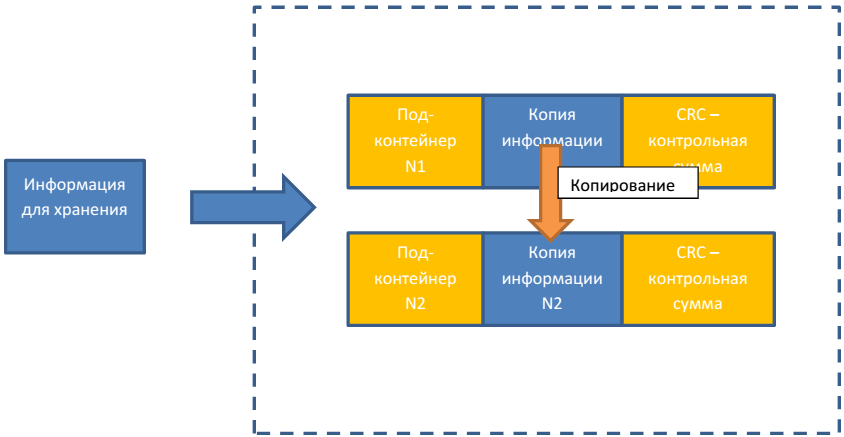

The top level presents this virtual object to the operating system. When a volume is passed to this level, several service functions are available; the two most important ones in the Adaptec stack are build and clear.

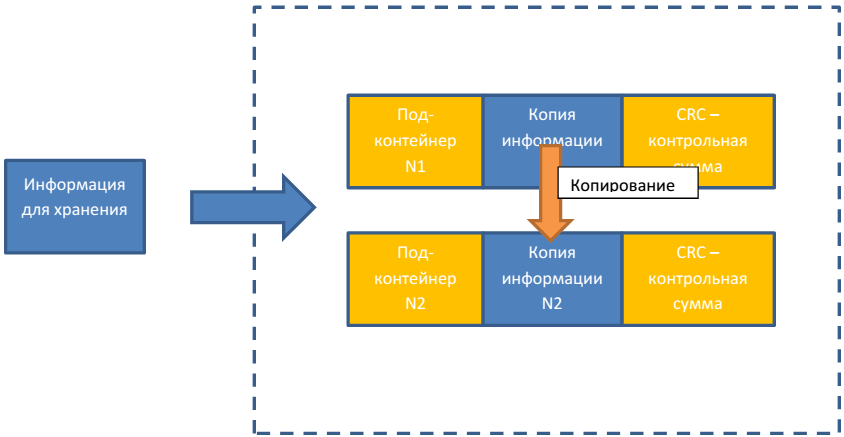

Figure: a RAID1 virtual container, build operation.

New disks come from the factory with zeros written to their sectors, so on such disks both functions produce the same result.
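A toy model makes the comparison concrete. In the Adaptec stack, clear writes zeros to the whole new volume, while build (for RAID1) copies the first member's contents onto the second, container by container. The in-memory sketch below, with hypothetical function names, shows that on factory-zeroed disks both leave the mirror in the same state.

```python
def clear_volume(mirror: list[bytearray]) -> None:
    """Clear: write zeros to every block of every member."""
    for member in mirror:
        member[:] = bytes(len(member))


def build_mirror(mirror: list[bytearray]) -> None:
    """Build (RAID1): copy the first member's contents onto the second."""
    first, second = mirror
    second[:] = first


def factory_disk(size: int) -> bytearray:
    """New disks ship with every sector zeroed."""
    return bytearray(size)


cleared = [factory_disk(8), factory_disk(8)]
built = [factory_disk(8), factory_disk(8)]
clear_volume(cleared)
build_mirror(built)
print(cleared == built)        # True: same end state on zero-filled disks

reused = [bytearray(b"DATA1234"), bytearray(b"old junk")]
build_mirror(reused)
print(reused[0] == reused[1])  # True: members are consistent after build
```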

The difference between Simple Volume and HBA mode.

These two modes are very similar, and both can be used to build software RAID solutions.

When a disk runs in HBA mode, no metadata is created on it, and its capacity is not trimmed by coercion. This can cause a problem if you later need to replace the disk with one from another manufacturer. The disk is passed directly to the operating system, and the controller cache does not work with it!

When you create a Simple Volume through volume configuration, a metadata area is created on the disk and its capacity is trimmed by coercion. The configuration utility then creates the Simple Volume from all the available disk capacity, and only after that is the object handed over to the host operating system.

RAID controller stacks keep evolving. In its maxCache Plus feature, Adaptec added another level to the stack, the so-called tier level, with its own sublevels. This made it possible to take, for example, a RAID5 volume on SSDs and a RAID60 volume on 7200 rpm SATA disks and combine them into a single virtual volume in which the most frequently requested data was stored on the RAID5 and the least requested on the RAID60. The source volumes could even reside on different controllers. This feature is no longer supported, because such capabilities have moved into server operating systems. Naturally, the controller stack, as a virtualization engine, does not stand still and keeps improving, both in virtualization and in its basic and service functions.

A modern RAID controller is an information system (a simplified computer) which, while performing its main functions (creating virtual containers and placing and processing information in them, which in practice means READING and WRITING data), exchanges data with two other types of information systems:

1. With the operating system;

2. With HDD or SSD drives.

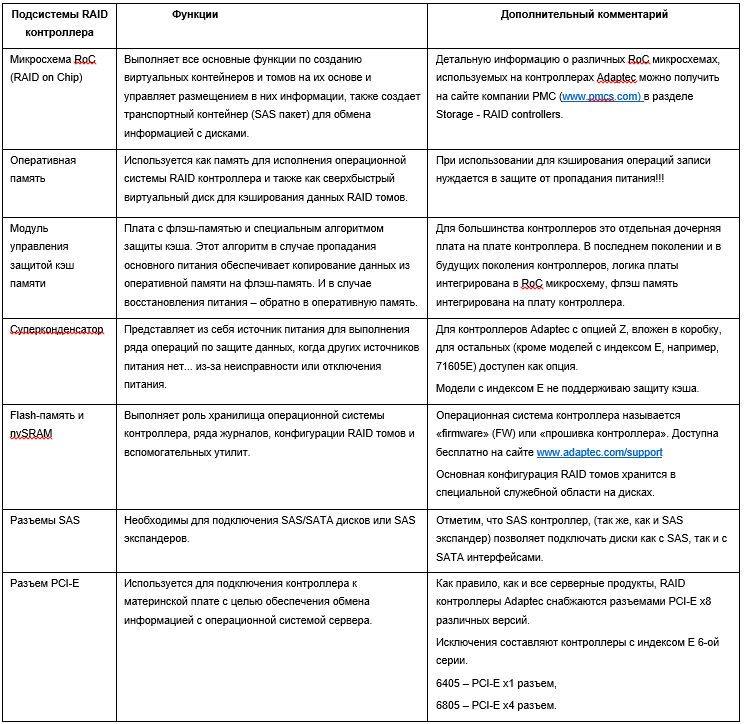

In terms of internal architecture, a modern RAID controller consists of the following main subsystems:

Table: The main subsystems of the RAID controller.

HBA mode support.

We have already discussed which RAID controller models support HBA mode. Importantly, the mode applies to each disk independently: if you do not initialize some of the disks at the physical level, they automatically show up in a utility such as the disk manager and can be used there. You can use some of these HBA-mode disks as single disks and others to build software RAID with the operating system's tools, while the initialized disks are used to create RAID volumes on the RAID controller.

Hybrid volumes.

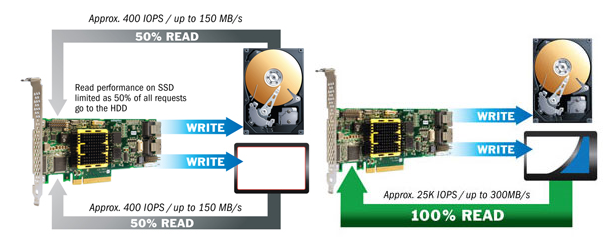

With the latest firmware, an Adaptec controller automatically creates a hybrid RAID array when you build a RAID 1/10 from equal numbers of SSDs and HDDs (in older firmware it mattered that, in each RAID pair, the SSD was the “master” and the HDD the “slave”). If you are unsure how to check this, contact Adaptec technical support. The controller writes to the HDD and the SSD simultaneously, but in hybrid mode it reads only from the SSD! (In a volume of two HDDs, reads are served from both disks once a certain I/O threshold is exceeded; this is the main difference of the hybrid RAID1/10 mode.) The result is a fault-tolerant array with excellent read performance: it reads like a single SSD, which for random reads is orders of magnitude faster than an HDD. The feature comes standard with all Adaptec Series 2, 5, 6, 7 and 8 controllers.
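A minimal sketch of the read/write policy described above for a two-member mirror. The class name, the `threshold` value and the use of the number of outstanding I/Os as the load measure are assumptions: the text only says that reads switch to both HDDs once a certain I/O threshold is exceeded.

```python
from dataclasses import dataclass


@dataclass
class Mirror:
    """Toy two-member RAID1; `kinds` holds each member's media type."""
    kinds: tuple[str, str]  # e.g. ("ssd", "hdd") or ("hdd", "hdd")
    threshold: int = 4      # hypothetical outstanding-I/O threshold
    next_member: int = 0    # round-robin pointer for the HDD-only case

    def is_hybrid(self) -> bool:
        return sorted(self.kinds) == ["hdd", "ssd"]

    def write_members(self) -> list[int]:
        # Writes always go to both members.
        return [0, 1]

    def read_member(self, outstanding_io: int) -> int:
        """Return the index of the member that serves the next read."""
        if self.is_hybrid():
            return self.kinds.index("ssd")  # hybrid: read only from the SSD
        if outstanding_io <= self.threshold:
            return 0                        # light load: one disk is enough
        member = self.next_member           # heavy load: alternate members
        self.next_member ^= 1
        return member


hybrid = Mirror(("hdd", "ssd"))
print([hybrid.read_member(q) for q in (1, 32)])    # [1, 1]
pair = Mirror(("hdd", "hdd"))
print([pair.read_member(q) for q in (1, 32, 32)])  # [0, 0, 1]
```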

Support for volume types that replace RAID5.

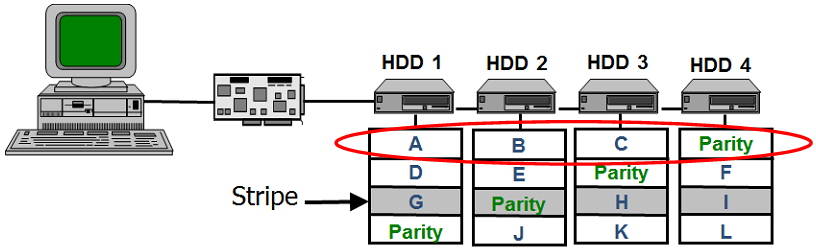

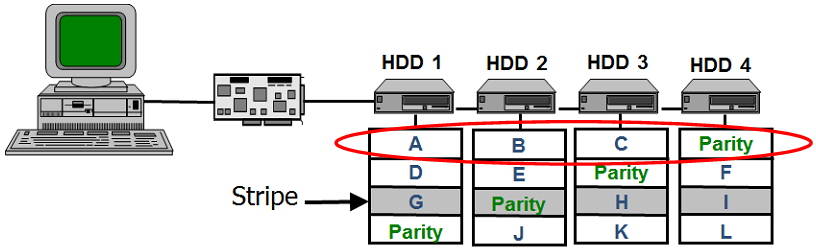

You are probably familiar with the RAID5 container; it is a fairly classic solution.

Red marks a storage container created by the RAID controller, in this case a RAID5 container. The RAID5 volume itself consists of a large number of such virtual containers stacked on top of one another. A RAID5 container is made up of sectors on individual physical disks. Its distinctive feature is that it can “survive” problems in the disk-level containers it is built from: if a sector that belongs to the RAID5 container loses its information, the container as a whole still preserves the data. This works only up to a limit (for RAID5, one lost member per stripe); beyond that number of “spoiled” sectors, the RAID5 container can no longer guarantee that the information is 100% intact. With the move from SCSI to SAS, the baseline storage reliability offered by a RAID5 container has deteriorated dramatically, literally by several orders of magnitude.
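The “survival” property comes from XOR parity: the parity block of a stripe is the XOR of its data blocks, so any one missing block can be recomputed from the rest, while a second loss in the same stripe cannot be repaired. A minimal sketch with hypothetical function names (real controllers also rotate parity across the disks, which is omitted here):

```python
from functools import reduce
from typing import Optional


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def make_stripe(data: list[bytes]) -> list[bytes]:
    """Return one stripe: the data blocks followed by their parity block."""
    return data + [xor_blocks(data)]


def rebuild(stripe: list[Optional[bytes]]) -> list[bytes]:
    """Recover a stripe in which at most one block is missing (None)."""
    missing = [i for i, block in enumerate(stripe) if block is None]
    if len(missing) > 1:
        raise ValueError("two or more blocks lost: the stripe cannot be recovered")
    restored = list(stripe)
    if missing:
        survivors = [block for block in stripe if block is not None]
        restored[missing[0]] = xor_blocks(survivors)
    return restored


stripe = make_stripe([b"AB", b"CD", b"EF"])
damaged = [stripe[0], None, stripe[2], stripe[3]]
print(rebuild(damaged)[1])  # b'CD'
```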

This decline in RAID5 reliability has several objective causes:

1. With support for SATA disks, especially desktop-class ones, the reliability of the “disk sector” container has dropped noticeably (SCSI controllers supported only high-quality SCSI disks);

2. The number of disks per controller has grown many times over (a 2-channel SCSI controller supported at most 26 disks, and in practice 8-10 for performance reasons, i.e. 4-5 per channel);

3. Disk capacity has grown significantly, so a RAID5 volume now contains far more RAID5 containers (the largest SCSI disks held 400 GB, while a modern SATA hard disk holds up to 8 TB).

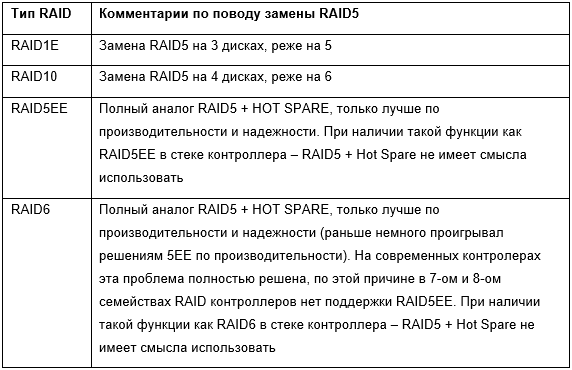

All this increases the probability of a problem in at least one RAID5 container, which significantly lowers the probability that a RAID5 volume keeps all of its data intact. That is why modern RAID controller stacks offer volume types meant to replace RAID5: RAID1E, RAID5EE and RAID6.
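A back-of-the-envelope estimate shows why drive class and capacity matter. It assumes that unrecoverable read errors (UREs) occur independently at the rate printed on the drive datasheet (1 per 10^14 bits is a common figure for desktop SATA drives, 1 per 10^15 or better for enterprise drives; check your own drives). During a RAID5 rebuild every surviving disk must be read in full, and a single URE on a surviving disk means that stripe cannot be reconstructed. The model and the 8-disk example are illustrative assumptions, not Adaptec's calculation.

```python
import math


def p_rebuild_hits_ure(surviving_disks: int, disk_tb: float, ure_per_bit: float) -> float:
    """P(at least one URE) when every surviving disk is read once in full."""
    bits_read = surviving_disks * disk_tb * 1e12 * 8
    # 1 - (1 - p) ** n, computed with log1p/expm1 to stay accurate for tiny p
    return -math.expm1(bits_read * math.log1p(-ure_per_bit))


# Hypothetical 8 x 8 TB RAID5 with one failed disk: 7 disks must be read.
for label, rate in (("desktop, 1e-14", 1e-14), ("enterprise, 1e-15", 1e-15)):
    p = p_rebuild_hits_ure(surviving_disks=7, disk_tb=8, ure_per_bit=rate)
    print(f"{label}: {p:.0%}")
```

With these inputs the estimate is roughly 99% for the desktop rate and roughly 36% for the enterprise rate, which is the kind of gap that makes RAID6 (able to correct a URE during a single-disk rebuild) the safer choice.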

Previously, the only alternative to RAID5 was RAID10, and RAID10 support is, of course, retained.

Options for replacing RAID5:

Bad stripe

Previously, if even one controller container (stripe) lost its information or its integrity could no longer be guaranteed, the RAID controller took the entire volume offline (stopping access literally meant that the controller could not guarantee 100% integrity of the user data on the volume).

A modern controller handles this situation differently:

1. Access to the volume is not stopped;

2. The volume receives a special “bad stripe” marker, meaning that some containers in the RAID volume have lost information;

3. These damaged containers are reported to the operating system so that it can take measures to prevent data loss or to recover the data.
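The new behaviour can be sketched as a volume that records damaged stripes instead of going offline. `Volume`, `BadStripeError` and the method names are hypothetical; the sketch only mirrors the three points above: access continues, the volume carries a marker that cannot be cleared, and the damaged stripes are reported.

```python
class BadStripeError(IOError):
    """Raised for reads that hit a stripe whose data was lost."""


class Volume:
    def __init__(self, stripes: int) -> None:
        self.stripes = stripes
        self.bad: set[int] = set()

    @property
    def bad_stripe_flag(self) -> bool:
        # Once set, the marker stays: there is deliberately no method to clear it.
        return bool(self.bad)

    def mark_lost(self, stripe: int) -> None:
        self.bad.add(stripe)

    def read(self, stripe: int) -> str:
        if stripe in self.bad:
            # Fail only this request; the rest of the volume stays online.
            raise BadStripeError(f"stripe {stripe} lost its data")
        return f"data of stripe {stripe}"

    def report(self) -> list[int]:
        """Damaged stripes to pass on to the operating system."""
        return sorted(self.bad)


vol = Volume(stripes=1000)
vol.mark_lost(42)
print(vol.read(7))    # other stripes stay readable
print(vol.report())   # [42]
```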

The bad stripe marker cannot be cleared from a volume; the only remedy is to delete and re-create the volume. A volume flagged “bad stripe” points to a serious mistake made either when the storage system was designed or while it was being operated. As a rule, it stems from serious incompetence on the part of the designer or the system administrator.

The main source of such problems is an incorrectly designed RAID5.

Some RAID5 configurations (for example, RAID5 on desktop disks) must not be used for volumes holding user data. At a minimum they require RAID5 plus a Hot Spare, and at that point RAID6 is the more sensible choice. Typically, RAID5 was created where RAID6 was needed, and after several years of operation this led to the BAD STRIPE marker.

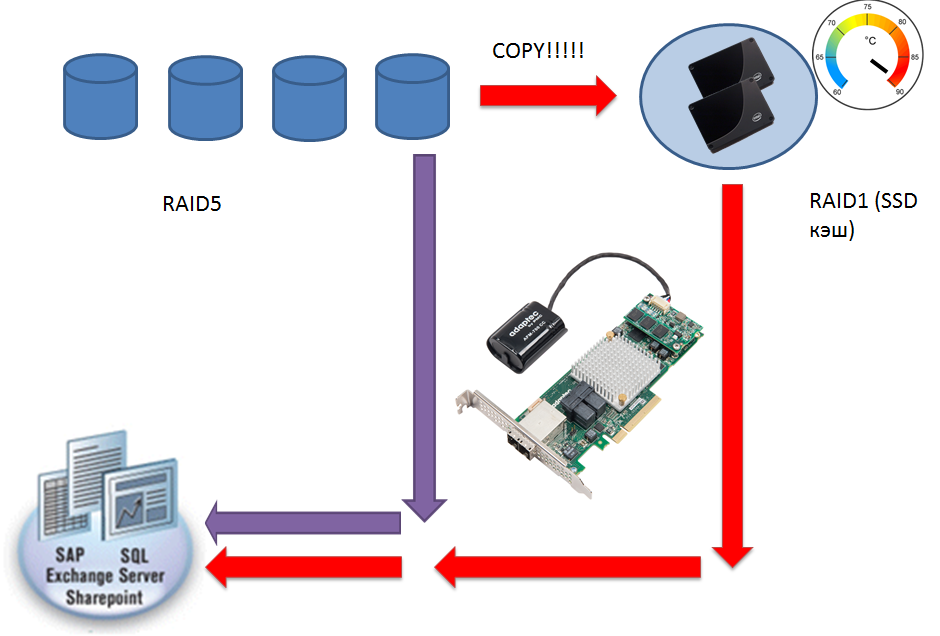

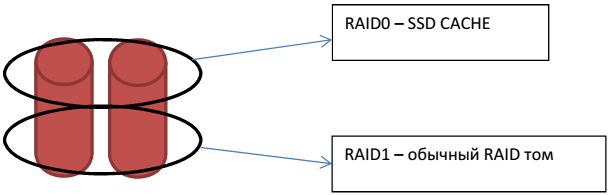

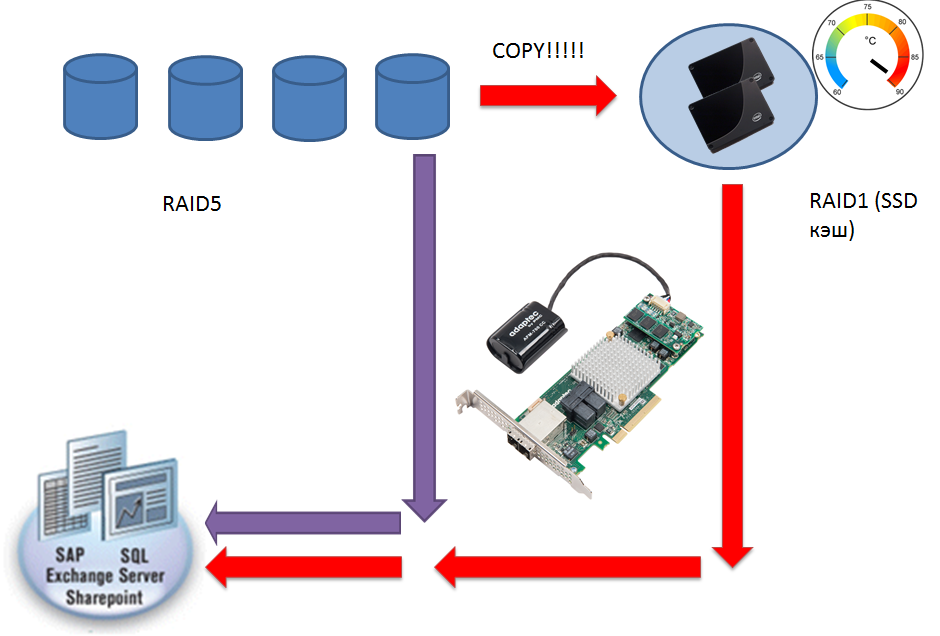

SSD caching

SSD caching is one of the most sought-after features for boosting the performance of RAID volumes in large storage systems with many users, without a significant increase in cost or in the number of components, without sacrificing storage capacity, and while keeping power consumption optimal.

To use SSD read caching, make sure two conditions are met:

1. The application's workload consists mainly of random reads;

2. Data access is uneven: some RAID-level containers are read much more often than others.

Note that the more users a system has, the more likely it is that requests to individual containers follow a standard statistical distribution. Using the “number of requests per unit of time” metric, you can roughly separate “hot data” (requested more often than a chosen threshold) from “cold data” (requested less often than that threshold).
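In code, the hot/cold split amounts to counting requests per container over a time window and comparing the rate with a threshold. A minimal sketch; the container IDs, window length and threshold are hypothetical, and containers with no requests at all are simply absent from the result.

```python
from collections import Counter


def classify(requests: list[int], window_s: float,
             threshold_per_s: float) -> tuple[set[int], set[int]]:
    """Split container IDs into (hot, cold) by request rate over one window."""
    rate = {cid: n / window_s for cid, n in Counter(requests).items()}
    hot = {cid for cid, r in rate.items() if r > threshold_per_s}
    return hot, set(rate) - hot


log = [1, 1, 1, 1, 2, 3, 3, 3, 3, 3, 4]  # container IDs read during a 10 s window
hot, cold = classify(log, window_s=10, threshold_per_s=0.3)
print(sorted(hot), sorted(cold))         # [1, 3] [2, 4]
```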

The SSD read cache copies hot data to the SSDs and then serves reads from them, which speeds reads up several times over. Because the cache holds only copies, the read cache is inherently protected: if an SSD in the cache area fails, you lose performance but not data.
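The copy semantics, and why a failed cache SSD costs only speed, can be shown with a toy read cache. The class and its methods are hypothetical and model whole containers as dictionary entries.

```python
class ReadCache:
    """Toy SSD read cache in front of a RAID volume (hypothetical model)."""

    def __init__(self, volume: dict[int, bytes]) -> None:
        self.volume = volume             # authoritative data on the RAID volume
        self.ssd: dict[int, bytes] = {}  # copies of hot containers
        self.ssd_ok = True
        self.hits = 0
        self.misses = 0

    def promote(self, hot: set[int]) -> None:
        """Copy (not move) hot containers onto the SSD."""
        for cid in hot:
            self.ssd[cid] = self.volume[cid]

    def fail_ssd(self) -> None:
        """Simulate losing the cache SSD: only the copies disappear."""
        self.ssd_ok = False
        self.ssd.clear()

    def read(self, cid: int) -> bytes:
        if self.ssd_ok and cid in self.ssd:
            self.hits += 1
            return self.ssd[cid]
        self.misses += 1
        return self.volume[cid]


cache = ReadCache({1: b"hot", 2: b"cold"})
cache.promote({1})
cache.read(1)                    # served from the SSD
cache.fail_ssd()
print(cache.read(1))             # b'hot': still correct, just slower
print(cache.hits, cache.misses)  # 1 1
```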

Basic SSD caching setup for the 7Q and 8Q controllers:

1. First, make sure you actually have hot data and find out how large it is. This is best done experimentally: place a sufficiently large SSD in the cache area and configure it as a Simple Volume (integrators can do this for you). After about a week, pull the SSD caching statistics through the management tools. They will show whether you have hot data and how much space it occupies.

2. Add 10–50% to this capacity and, based on these figures, adjust your caching scheme for future growth of hot data, if such a trend exists.
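The sizing rule in step 2 is simple arithmetic: the measured hot-data size plus 10–50% headroom. A sketch using a hypothetical measurement of 600 GB; integer percentages avoid floating-point rounding:

```python
def cache_size_range(hot_bytes: int, low_pct: int = 10, high_pct: int = 50) -> tuple[int, int]:
    """Cache capacity range: measured hot data plus 10-50% headroom."""
    return (hot_bytes * (100 + low_pct) // 100,
            hot_bytes * (100 + high_pct) // 100)


GB = 10 ** 9
low, high = cache_size_range(600 * GB)  # 600 GB of hot data (hypothetical measurement)
print(low // GB, high // GB)            # 660 900
```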

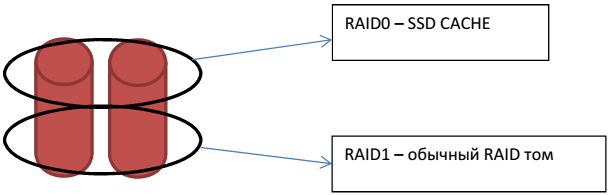

The settings let you carve the required capacity out of your SSDs, organize it as a RAID volume of the desired type, and give the remaining capacity to a regular RAID volume.

Next, evaluate whether it makes sense to use the SSD cache for writes. As a rule, Internet applications are read-intensive. The write cache is mainly used as a complement to the read cache when the application also writes. A write cache must be protected: if something happens to the cache area, data placed there by write caching is lost. For protection, it is enough to build the cache area on a RAID volume with disk redundancy, for example RAID1.

Possible configurations of the SSD cache area.

For each volume on the controller, you can independently enable or disable SSD read and write caching, depending on the needs and types of applications that work with that volume.

UEFI support.

All controllers and HBAs in the current Adaptec product line support motherboards running in UEFI mode. Moving from legacy MBR-based boot to UEFI made it possible, for example, to create system and boot volumes larger than 2 TB, which was impossible on boards with a legacy (MBR) BIOS (note that all Adaptec products fully support volumes larger than 2 TB; the limitation is not on the controller or HBA side). UEFI mode has many other benefits, such as support for disks with a 4K sector size. All current Adaptec products support 4K-sector drives, except for the 6th-series controllers.
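The 2 TB figure comes from the MBR partition table, which stores a partition's starting sector and length as 32-bit values. The arithmetic below shows the resulting ceiling for 512-byte sectors and, for comparison, for 4K logical sectors (the latter assumes the whole stack, including the OS, addresses the disk in 4K units).

```python
MAX_SECTORS = 2 ** 32  # MBR partition entries use 32-bit sector fields
TIB = 2 ** 40


def mbr_limit_bytes(sector_size: int) -> int:
    """Largest capacity addressable through a 32-bit sector count."""
    return MAX_SECTORS * sector_size


print(mbr_limit_bytes(512))         # 2199023255552 bytes (about 2.2 TB)
print(mbr_limit_bytes(512) / TIB)   # 2.0 TiB
print(mbr_limit_bytes(4096) / TIB)  # 16.0 TiB
```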

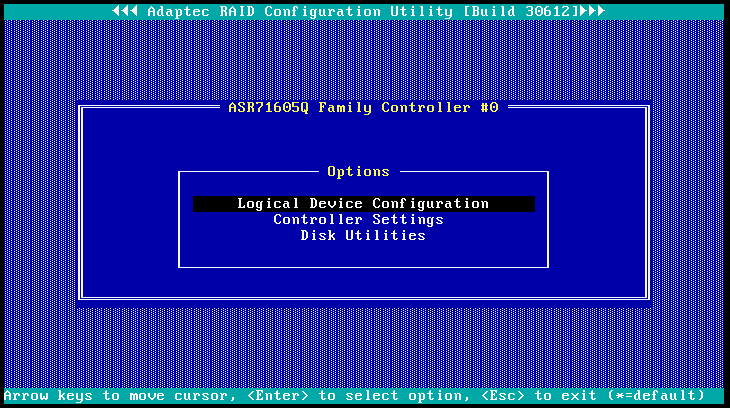

Remember that if the motherboard runs in MBR (legacy BIOS) mode, the controller configuration utility is launched with Ctrl+A.

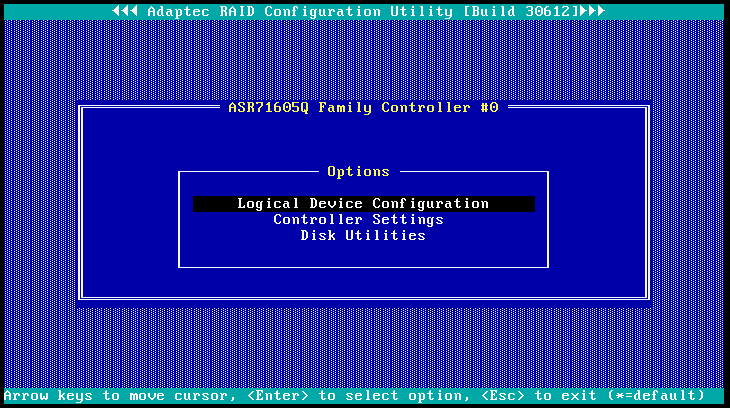

The figure shows the standard Adaptec configuration utility launched with the Ctrl+A key combination.

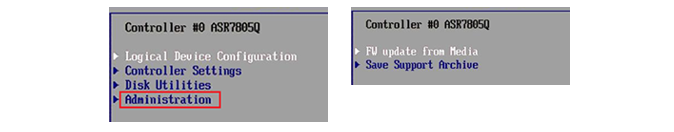

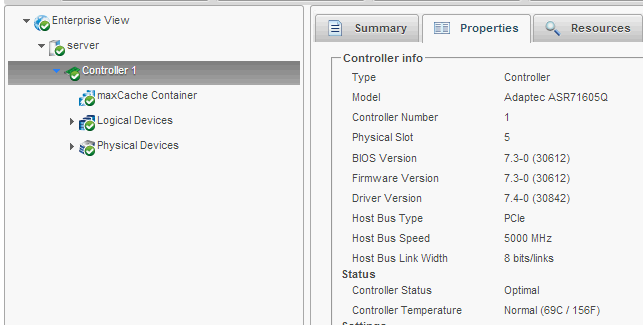

In UEFI mode, the configuration utility for the controller or HBA is integrated into the motherboard's BIOS setup. It is easy to find among the entries containing the word "Adaptec" or "PMC". As the example below shows, the UEFI utility offers more advanced functionality than the one launched with Ctrl+A.

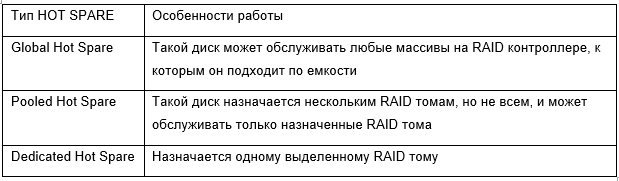

Hot Spare features.

A Hot Spare disk is a passive element of a RAID volume: it joins the volume if something happens to one of the volume's disks and that disk can no longer do its job. A HOT SPARE is a disk that is installed on the controller, spun up and assigned to one or more volumes.

HOT SPARE, 3 :

Hot Spare daptc «» , . , RAID5 «degraded». , rescan . – HOT SPARE ( , , Global Hot Spare) , 100% «» . – Optimal. , – delete hot spare. HOT SPARE , RAID .

Power Management.

RAID volumes are used with very different intensity. For example, volumes created for backups may be used to move data only two or three days a month. This raises the question: does it make sense to keep those hard drives powered and spinning if they sit idle practically all the rest of the time?

The answer is the power management function. The idea is that unused disks can first be slowed down (if the disks support it) and then stopped completely and kept powered off until they are needed. The settings for this function are very simple.

First, you set the days of the week and times when the function is active and when it is not; this schedule follows a typical company's working hours. You also set how many internal and external drives are spun up at a time (two, three, four, etc.) to spread the load on the power supplies. In addition, three timer values are specified. When the first timer expires with no I/O on the disks of the volume, the disks go into the “stand by” state, i.e. reduce their rotation speed by 50%. Not all disks support this mode; if a disk does not, nothing happens to it. When the second timer expires, the disks stop completely and go into the “power off” state. The third timer governs periodic checks of disks that have been off for a long time: the controller powers the disks up, runs a non-destructive test and, if everything is OK, returns them to the “power off” state.
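The timer logic can be modelled as a pure function of idle time. The state names follow the text (“stand by”, “power off”); the timer values, the `supports_standby` flag and the function names are hypothetical, and the weekly schedule and spin-up grouping are left out of this sketch.

```python
from enum import Enum


class DiskState(Enum):
    ACTIVE = "active"
    STANDBY = "stand by"     # rotation speed reduced
    POWER_OFF = "power off"  # spindle stopped


def disk_state(idle_s: float, *, t1: float, t2: float,
               supports_standby: bool = True) -> DiskState:
    """State of a volume's disks after `idle_s` seconds without I/O (timers 1 and 2)."""
    if idle_s >= t2:
        return DiskState.POWER_OFF
    if idle_s >= t1 and supports_standby:
        return DiskState.STANDBY
    return DiskState.ACTIVE  # also covers disks without stand-by support


def check_due(off_s: float, *, t3: float) -> bool:
    """Timer 3: time to spin the disks up for a non-destructive test."""
    return off_s >= t3


# Hypothetical timer values: 30 min, 2 h, 7 days.
T1, T2, T3 = 30 * 60, 2 * 3600, 7 * 86400
for idle in (60, 45 * 60, 3 * 3600):
    print(idle, disk_state(idle, t1=T1, t2=T2).value)
print(check_due(8 * 86400, t3=T3))
```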

After these settings, you can enable the power management scheme for each volume that will benefit from it; such volumes are marked green in the management software. The feature pays off most in data centers, providing not only direct energy savings from stopped drives but also indirect savings, because the fans that cool the disks can run slower while the disks are off.

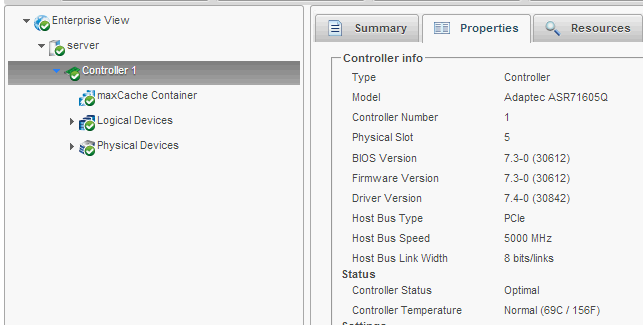

The management utilities in the maxView Storage Manager (MSM) package are built on up-to-date standards and follow current trends in management principles and efficiency. This makes Adaptec maxView Storage Manager a good reference model for looking at the basic functions and methods of storage management. The central managed element is the RAID controller, which can exchange service information with disks, expanders and drive enclosures and thus support management functions for the entire storage subsystem.

The main features of modern storage management systems:

• A high level of detail, visualization and nesting of managed objects. The administrator sees that the entire network segment has turned red, which means there is a fault. Expanding the segment icon shows all servers, with the problem server marked red. Clicking that server shows the RAID controllers installed in it; red on one of them signals a problem. Drilling down further shows the volumes created on that controller and the problem volume, and so on, down to the faulty physical disk. Now the administrator knows exactly what happened, what the consequences are, and which disk to replace.

• . , , . . MSM . , HTTPS. .

• . «» MS WEB , E-mail. MSM , . Warning Error, RAID Degrade Failed. .
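The drill-down described in the first point relies on status propagating upward: an object is shown red if anything below it is faulty. A minimal sketch of that roll-up over a hypothetical object tree; the `Node` class and the simple red/green status are assumptions, not MSM's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A managed object: network segment, server, controller, volume or disk."""
    name: str
    faulty: bool = False
    children: list["Node"] = field(default_factory=list)

    def is_red(self) -> bool:
        """Red if this object or anything below it has a fault."""
        return self.faulty or any(child.is_red() for child in self.children)

    def fault_path(self) -> list[str]:
        """Drill down through red objects to the deepest faulty one."""
        if not self.is_red():
            return []
        for child in self.children:
            if child.is_red():
                return [self.name] + child.fault_path()
        return [self.name]


volume = Node("RAID5 volume", children=[Node("disk 2"), Node("disk 3", faulty=True)])
controller = Node("controller 0", children=[volume])
segment = Node("segment", children=[Node("server A"), Node("server B", children=[controller])])
print(segment.fault_path())
# ['segment', 'server B', 'controller 0', 'RAID5 volume', 'disk 3']
```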


Information provided by Adaptec by PMC (Russia).

- Part 1. General information about RAID controllers (a lot of theory, the basics)

- Part 2. Classification of Adaptec controllers (very specific material: controller series, the features of each series, tables, pictures)


RAID stack of the latest generation.




The top level presents the virtual object to the operating system and offers several service functions. The two most important ones in the Adaptec stack are build and clear:

- Clear writes zeros to the entire new volume before it is handed over to the OS.

- Build “builds” the new volume. For RAID1, for example, the contents of the first container are copied into the second, and so on for all containers.

The figure - Virtual container type RAID1. Build operation

If you have new discs from the factory, then zeros are written in their sectors. Therefore, the result of both functions will be the same.

The difference between SimpleVolume and HBA mode.

These two modes are very similar to each other. Both can be used to create Software RAID solutions.

When a disk is running in HBA mode, no metadata is created on it. And there is no “cropping” of the capacity by the coercion function. This can cause a problem if you need to replace the disk with another manufacturer. The disk is directly transferred to the operating system. In this case, the controller cache will not work with it!

When you create a Simple Volume through volume configuration, a metadata area is created on the disk and its capacity is “trimmed” by the coercion function. The configuration utility then creates the Simple Volume using all the available disk capacity, and this object is handed over to the host operating system.
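Coercion is easiest to see with numbers. The sketch below assumes a 64 MiB metadata area and rounding down to whole GiB; both values are hypothetical, since the article does not state what the Adaptec firmware actually uses. The sector count for vendor A is the standard figure for a “2 TB” drive; vendor B's count is made up for illustration.

```python
GIB = 1024 ** 3
SECTOR = 512
# Hypothetical values: real Adaptec metadata size and coercion granularity are not given here.
METADATA_RESERVE = 64 * 1024 ** 2  # bytes reserved for controller metadata
COERCION_STEP = GIB                # usable capacity is rounded down to whole GiB


def coerced_capacity(sectors: int) -> int:
    """Usable bytes after reserving metadata and rounding down to COERCION_STEP."""
    usable = sectors * SECTOR - METADATA_RESERVE
    return usable // COERCION_STEP * COERCION_STEP


vendor_a = 3_907_029_168  # sectors on a typical "2 TB" drive
vendor_b = 3_907_000_000  # hypothetical "2 TB" drive from another vendor, slightly smaller

print(vendor_b < vendor_a)                                       # True
print(coerced_capacity(vendor_a) == coerced_capacity(vendor_b))  # True
print(coerced_capacity(vendor_a) // GIB)                         # 1862
```

Without coercion (as in HBA mode), the vendor B drive would be slightly smaller than the one it replaces, which is exactly the replacement problem described above.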

RAID controller stacks keep evolving. With its maxCache Plus function, Adaptec added another level to the stack, the so-called tier level, with its own sublevels. This made it possible to take one RAID5 volume built on SSDs and another volume, for example a RAID60 built on 7200 rpm SATA disks, and combine them into a single virtual volume in which the most frequently requested data was stored on the RAID5 and the least requested data on the RAID60. The volumes could even come from different controllers. This feature is no longer supported, because such capabilities have moved into server operating systems. Naturally, the controller stack, as a virtualization mechanism, does not stand still and continues to improve both at the virtualization level and at the level of basic and service functions.
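The idea behind the tier level can be illustrated with a greedy placement policy: rank blocks by access count and put as many of the hottest ones as fit on the fast tier. This is a generic sketch, not the actual maxCache Plus algorithm, which the article does not describe; the block names and counts are invented.

```python
def place_tiers(access_counts: dict[str, int], fast_capacity: int) -> tuple[set[str], set[str]]:
    """Greedy tiering: the most frequently accessed blocks live on the fast tier.

    Unlike a cache, each block has exactly one home, so the result is a partition.
    """
    ranked = sorted(access_counts, key=access_counts.get, reverse=True)
    return set(ranked[:fast_capacity]), set(ranked[fast_capacity:])


# Hypothetical access statistics gathered over one interval.
counts = {"blk0": 120, "blk1": 3, "blk2": 87, "blk3": 1, "blk4": 45}
ssd_raid5, sata_raid60 = place_tiers(counts, fast_capacity=2)
print(sorted(ssd_raid5))    # ['blk0', 'blk2']
print(sorted(sata_raid60))  # ['blk1', 'blk3', 'blk4']
```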

The structure of a modern RAID controller.

A modern RAID controller is an information system (a simplified computer) that, while performing its main functions (creating virtual containers and placing and processing information in them, which in essence means READING and WRITING data), exchanges data with two other types of information systems:

1. With the operating system;

2. With HDD or SSD drives.

In terms of internal architecture, a modern RAID controller consists of the following main subsystems:

- RoC chip (RAID on Chip);

- RAM;

- Cache memory protection module (usually a separate daughter card, but in the latest implementations for Adaptec Series 8 controllers this module is integrated into the RoC chip);

- Supercapacitor, which powers the cache protection module if the main power fails;

- Flash memory and nvSRAM (memory that retains information when the power is turned off);

- SAS connectors, each physical connector carrying four SAS ports;

- PCI-E slot.

Table: Main subsystems of a RAID controller.
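How the cache protection module, supercapacitor and flash memory work together can be sketched as a small state model. It assumes, in simplified form, that on a power failure the supercapacitor powers a copy of the DRAM cache into flash and that the saved data is written to the disks once power returns; the class and method names are hypothetical, not an Adaptec API.

```python
from dataclasses import dataclass, field


@dataclass
class ProtectedCache:
    dram: dict[int, bytes] = field(default_factory=dict)   # write-back data not yet on disk
    flash: dict[int, bytes] = field(default_factory=dict)  # non-volatile backup copy
    disks: dict[int, bytes] = field(default_factory=dict)  # data that reached the drives

    def write(self, lba: int, data: bytes) -> None:
        self.dram[lba] = data             # acknowledged to the OS while still in DRAM

    def power_failure(self, supercap_charged: bool) -> None:
        if supercap_charged:
            self.flash.update(self.dram)  # supercapacitor keeps power up long enough to copy
        self.dram.clear()                 # DRAM loses its contents without power

    def power_restored(self) -> None:
        self.disks.update(self.flash)     # write the saved blocks to the drives
        self.flash.clear()


protected, unprotected = ProtectedCache(), ProtectedCache()
for cache, charged in ((protected, True), (unprotected, False)):
    cache.write(10, b"acknowledged write")
    cache.power_failure(supercap_charged=charged)
    cache.power_restored()
print(protected.disks)    # {10: b'acknowledged write'}
print(unprotected.disks)  # {}
```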

Key features of modern Adaptec RAID controllers.

HBA mode support.

We have already discussed which RAID controller models support HBA mode. Importantly, the mode is applied independently to each disk: any disks you do not initialize on the controller are automatically passed through to the OS, where they appear in a tool such as the “disk manager” and can be used directly. You can use some of these disks in HBA mode as standalone disks and others to build software RAID with OS tools, while initialized disks are used to create RAID volumes on the RAID controller.
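The per-disk choice amounts to a simple partition of the physical disks. A minimal sketch; the disk names and the `route_disks` helper are hypothetical.

```python
def route_disks(initialized: dict[str, bool]) -> tuple[list[str], list[str]]:
    """Split physical disks by the per-disk choice described in the text.

    Initialized disks are available for controller RAID volumes; the rest are
    passed through (HBA mode) and appear to the OS as ordinary disks.
    """
    raid = [disk for disk, init in initialized.items() if init]
    passthrough = [disk for disk, init in initialized.items() if not init]
    return raid, passthrough


raid, passthrough = route_disks({"p0": True, "p1": True, "p2": True, "p3": False, "p4": False})
print(raid)         # ['p0', 'p1', 'p2']  -> controller RAID volumes
print(passthrough)  # ['p3', 'p4']  -> standalone disks or OS software RAID
```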

Hybrid volumes.

With recent firmware, an Adaptec controller automatically creates a Hybrid RAID array when you build a RAID 1/10 from equal numbers of SSDs and HDDs (in older firmware, it mattered that in each RAID pair the SSD was the “master” and the HDD the “slave”). If you are not sure how to check this, contact Adaptec technical support. The controller writes to the HDD and the SSD simultaneously, but in hybrid mode it reads only from the SSD! (In a volume of two HDDs, reads are spread across both disks once a certain I/O threshold is exceeded; this is the main difference from hybrid RAID1/10 mode.) The result is a fault-tolerant array with excellent read performance: it reads like a single SSD, which is several orders of magnitude faster than an HDD. The feature comes standard with all Adaptec Series 2, 5, 6, 7 and 8 controllers.
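The read/write routing difference between a hybrid pair and an HDD-only pair can be sketched as follows. The I/O threshold value and the choice to read from the first disk under light load are assumptions for illustration; the article does not give the controller's actual thresholds.

```python
from itertools import count


class Raid1Pair:
    """Simplified RAID1 routing for a hybrid (SSD + HDD) or HDD-only mirror."""

    def __init__(self, members: tuple[str, str], split_threshold: int = 4):
        self.members = members                   # e.g. ("HDD", "SSD")
        self.hybrid = sorted(members) == ["HDD", "SSD"]
        self.split_threshold = split_threshold   # hypothetical outstanding-I/O threshold
        self._next = count()

    def write_targets(self) -> list[int]:
        return [0, 1]                            # every write goes to both members

    def read_target(self, outstanding_io: int) -> int:
        if self.hybrid:
            return self.members.index("SSD")     # hybrid mode: reads only from the SSD
        if outstanding_io > self.split_threshold:
            return next(self._next) % 2          # heavy load: alternate between both HDDs
        return 0                                 # light load: one disk serves reads (assumption)


hybrid = Raid1Pair(("HDD", "SSD"))
hdd_pair = Raid1Pair(("HDD", "HDD"))
print([hybrid.read_target(16) for _ in range(4)])    # [1, 1, 1, 1]
print([hdd_pair.read_target(16) for _ in range(4)])  # [0, 1, 0, 1]
print(hdd_pair.read_target(1))                       # 0
```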

Support for volume types that replace RAID5.

You are probably familiar with the RAID5 container; it is a fairly classic solution.

In the figure, red marks a storage container created by the RAID controller; this is a RAID5-type container. The RAID5 volume itself consists of a large number of such virtual containers stacked on top of each other. A RAID5 container is made up of sectors on the individual physical disks. Its key property is that it can “survive” a problem in one of the disk sectors it consists of: if such a sector loses its information, the RAID5 container still keeps the data. This works only up to a limit: a RAID5 container has a single parity block, so once a second sector in the same container is “spoiled”, it can no longer guarantee that its data is 100% intact. With the transition from SCSI to SAS, the baseline reliability of data storage in a RAID5 container has deteriorated greatly, literally by several orders of magnitude.
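The way a RAID5 container “survives” a lost sector comes from XOR parity. In this minimal sketch, the parity block is the XOR of the data blocks, so any single missing block can be recomputed from the others, while two missing blocks in the same container cannot. Block sizes and contents are arbitrary.

```python
from functools import reduce
from typing import Optional


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_container(data_blocks: list[bytes]) -> list[bytes]:
    """One RAID5 container (stripe): the data blocks plus their parity block."""
    return data_blocks + [xor_blocks(data_blocks)]


def recover(container: list[Optional[bytes]]) -> list[bytes]:
    """Restore a single lost block; with two lost blocks the data is gone."""
    lost = [i for i, block in enumerate(container) if block is None]
    if len(lost) > 1:
        raise ValueError("more than one block lost: RAID5 cannot restore this container")
    restored = list(container)
    if lost:
        restored[lost[0]] = xor_blocks([b for b in container if b is not None])
    return restored


container = make_container([b"\x01\x02", b"\x10\x20", b"\x0f\x0f"])
damaged = [container[0], None, container[2], container[3]]  # one disk sector lost
print(recover(damaged) == container)                        # True
try:
    recover([None, None, container[2], container[3]])       # two sectors lost
except ValueError as err:
    print(err)
```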

There are several objective reasons for this:

1. With support for SATA disks, especially desktop-class ones, the reliability of data storage in the “disk sector” container has dropped markedly (SCSI controllers supported only high-quality SCSI disks);

2. The number of disks per controller has grown many times over (a 2-channel SCSI controller supported at most 26 disks, and 8-10 in practice for performance reasons, i.e. 4-5 per channel);

3. Disk capacity has grown significantly, so a RAID5 volume now holds many more RAID5 containers (the maximum capacity of SCSI disks was 400 GB, while a modern SATA hard disk holds up to 8 TB).

All this raises the probability of a problem in at least one RAID5 container and therefore significantly reduces the reliability of data storage in a RAID5 volume. For this reason, modern RAID controller stacks offer alternatives that make it possible to avoid RAID5: RAID1E, RAID5EE and RAID6.
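The effect of the three factors listed above can be estimated with a common back-of-the-envelope model (not taken from the article): during a RAID5 rebuild every surviving disk must be read in full, and each bit has a small independent chance of an unrecoverable read error (URE). The rates used below (1 in 10^14 bits for desktop SATA, 1 in 10^15 for enterprise disks) are typical datasheet figures, used here as assumptions.

```python
import math


def p_clean_rebuild(disks: int, disk_tb: float, ure_per_bit: float) -> float:
    """Chance that a degraded RAID5 rebuild reads every surviving disk without a URE.

    Assumes independent errors at a constant per-bit rate (a simplification).
    """
    bits_to_read = (disks - 1) * disk_tb * 1e12 * 8  # all surviving disks, in bits
    return math.exp(bits_to_read * math.log1p(-ure_per_bit))


# SCSI era: 8 x 400 GB enterprise disks; today: 8 x 8 TB desktop SATA disks.
print(f"{p_clean_rebuild(8, 0.4, 1e-15):.3f}")  # 0.978
print(f"{p_clean_rebuild(8, 8.0, 1e-14):.3f}")  # 0.011
```

Under these assumptions, the desktop array almost certainly hits at least one URE during a rebuild, while the SCSI-era array very likely completes cleanly.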

Previously, the only alternative to RAID5 was RAID10. Support for RAID 10 is naturally preserved.

Options for replacing RAID5:

Bad stripe

Previously, if even one controller container (stripe) lost its information or its integrity could not be guaranteed, the RAID controller took the entire volume offline (stopping access literally meant that the controller could not guarantee 100% integrity of the user data on the volume).

A modern controller handles this situation differently:

1. Access to the volume is not stopped;

2. A special “bad stripe” marker is set on the volume, indicating that the RAID volume contains containers that have lost their information;

3. These “damaged containers” are reported to the operating system so that it can take measures to prevent loss of information or to restore it.
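A toy model of this behavior: the volume stays online, reads outside the damaged stripes succeed, and reads of a damaged stripe return an I/O error to the OS. Class and method names are hypothetical; the real controller reports the condition through its own mechanisms.

```python
class Volume:
    """Toy RAID volume that stays online when some stripes lose their data."""

    def __init__(self, stripes: int):
        self.data = {i: f"stripe-{i}" for i in range(stripes)}
        self.bad_stripes: set[int] = set()

    @property
    def bad_stripe_flag(self) -> bool:
        # No method clears this set: the marker cannot be removed from the volume.
        return bool(self.bad_stripes)

    def mark_lost(self, stripe: int) -> None:
        self.bad_stripes.add(stripe)
        self.data.pop(stripe, None)

    def read(self, stripe: int) -> str:
        if stripe in self.bad_stripes:
            # Only this range fails; the OS receives an error it can act on.
            raise OSError(f"stripe {stripe}: data lost (bad stripe)")
        return self.data[stripe]


vol = Volume(stripes=4)
vol.mark_lost(2)
print(vol.bad_stripe_flag)  # True
print(vol.read(1))          # stripe-1 (access continues)
try:
    vol.read(2)
except OSError as err:
    print(err)              # stripe 2: data lost (bad stripe)
```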

The bad stripe marker cannot be removed from the volume; the only option is to delete the volume and re-create it. A volume flagged “bad stripe” indicates a serious error or problem at the storage system design stage or during its operation. As a rule, it points to serious incompetence on the part of the designer or the system administrator.

The main source of such problems is an incorrectly designed RAID5.

Some RAID5 implementations (for example, RAID5 on desktop disks) must not be used for volumes holding user data. They require at least RAID5 + Hot Spare, which makes little sense when RAID6 is available. What typically happens is that RAID5 was created where RAID6 was needed, and after several years of operation the BAD STRIPE marker appeared.

SSD caching

SSD caching is one of the most sought-after features for improving the performance of RAID volumes in powerful storage systems with many users, without significantly increasing cost or the number of units in the solution, without losing storage capacity, and with optimal power consumption.

To use SSD read caching, make sure that two conditions are met:

1. The application works in “random read” mode;

2. Data access is uneven: some RAID-level containers are read more often and others less often.

Note that the more users the system has, the more likely it is that requests to individual containers follow a standard statistical distribution. Using the “number of requests per unit of time” parameter, one can conditionally distinguish “hot data” (requested more often than a given threshold) from “cold data” (requested less often than that threshold).

The SSD read cache works by copying “hot data” to SSDs and then serving reads from the SSDs, which speeds up access several times over. Because the cache holds only copies, it is protected by design: if an SSD forming the cache area fails, you lose performance, not data.
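The hot/cold classification and the read cache can be combined in one small model. It is a sketch, assuming a simple per-block request counter and a fixed threshold (both hypothetical); the real controller's statistics and thresholds are not described in the article. The key property from the text is preserved: the SSD holds only copies, so losing it costs speed, not data.

```python
from collections import Counter


class SsdReadCache:
    """Toy SSD read cache in front of an HDD RAID volume."""

    def __init__(self, hdd_volume: dict[int, bytes], hot_threshold: int):
        self.hdd_volume = hdd_volume        # authoritative copy of all data
        self.hot_threshold = hot_threshold  # requests per interval that make a block "hot"
        self.requests = Counter()
        self.ssd: dict[int, bytes] = {}
        self.ssd_ok = True

    def read(self, lba: int) -> tuple[bytes, str]:
        self.requests[lba] += 1
        if self.ssd_ok and lba in self.ssd:
            return self.ssd[lba], "ssd"
        data = self.hdd_volume[lba]
        if self.ssd_ok and self.requests[lba] > self.hot_threshold:
            self.ssd[lba] = data            # hot data is copied, never moved
        return data, "hdd"

    def ssd_failed(self) -> None:
        self.ssd_ok = False
        self.ssd.clear()                    # only copies are lost


cache = SsdReadCache({0: b"hot block", 1: b"cold block"}, hot_threshold=2)
print([cache.read(0)[1] for _ in range(4)])  # ['hdd', 'hdd', 'hdd', 'ssd']
print(cache.read(1))                         # (b'cold block', 'hdd')
cache.ssd_failed()
print(cache.read(0))                         # (b'hot block', 'hdd'): slower, but no data lost
```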

Basic setup of the SSD caching function on 7Q and 8Q controllers:

1. First, make sure that you actually have “hot data” and determine its size. This is best done experimentally: put a sufficiently large SSD into the cache area and configure it in Simple Volume mode. Integrators can do this work for you. After about a week, you can retrieve the SSD caching statistics through the management function; they will show whether you have “hot data” and how much space it occupies.

2. It is advisable to add 10–50% to this capacity and, based on these data, adjust your caching scheme for a future increase in the amount of “hot data”, if there is such a trend.
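The sizing rule from step 2 is simple arithmetic. The sketch assumes a hypothetical measurement of 300 GB of hot data; the optional RAID1 factor reflects the fact that a mirrored cache area needs twice the raw SSD capacity.

```python
def ssd_cache_size_gb(hot_data_gb: float, margin: float) -> float:
    """Cache area size: measured hot data plus a 10-50% growth margin."""
    if not 0.10 <= margin <= 0.50:
        raise ValueError("margin outside the 10-50% range suggested above")
    return hot_data_gb * (1 + margin)


def raw_ssd_needed_gb(cache_gb: float, mirrored: bool) -> float:
    """Raw SSD capacity to carve out; a RAID1 cache area stores everything twice."""
    return cache_gb * (2 if mirrored else 1)


# Hypothetical result of a week of caching statistics: about 300 GB of hot data.
for margin in (0.10, 0.25, 0.50):
    size = ssd_cache_size_gb(300, margin)
    print(f"{margin:.0%}: cache {size:.0f} GB, RAID1 needs {raw_ssd_needed_gb(size, True):.0f} GB raw")
```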

The settings let you “carve out” the required capacity from your SSDs, build it into a RAID volume of the desired type, and give the remaining capacity to a regular RAID volume.

Next, evaluate whether an SSD write cache makes sense. As a rule, Internet applications are read-intensive. The write cache is mainly used as a complement to the read cache when the application writes as well as reads. A write cache requires cache protection: if something happens to the cache area, the data placed there by write caching will be lost. For protection, it is enough to build the cache area as a RAID volume with disk redundancy, for example RAID1.
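Why a write cache needs redundancy while a read cache does not: write-back data exists only in the cache until it is flushed. The toy model below (hypothetical names) compares a single-SSD cache area with a RAID1 pair when one SSD fails before the flush.

```python
class WriteBackCache:
    """Toy SSD write-back cache area built from `copies` SSDs (1 = single SSD, 2 = RAID1)."""

    def __init__(self, copies: int):
        self.copies_alive = copies
        self.dirty: dict[int, bytes] = {}  # acknowledged writes not yet on the HDD volume

    def write(self, lba: int, data: bytes) -> None:
        self.dirty[lba] = data

    def flush(self, hdd_volume: dict[int, bytes]) -> None:
        hdd_volume.update(self.dirty)
        self.dirty.clear()

    def ssd_failed(self) -> None:
        self.copies_alive -= 1
        if self.copies_alive == 0:
            self.dirty.clear()             # the only copy of unflushed data is gone


hdd_single, hdd_mirror = {}, {}
single, mirrored = WriteBackCache(copies=1), WriteBackCache(copies=2)
for cache in (single, mirrored):
    cache.write(7, b"new data")
    cache.ssd_failed()                     # one SSD of the cache area dies before the flush
single.flush(hdd_single)
mirrored.flush(hdd_mirror)
print(hdd_single)  # {}
print(hdd_mirror)  # {7: b'new data'}
```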

Possible configuration modes of the SSD cache area.

For each volume on the controller, you can independently enable or disable SSD read and write caching, depending on the needs and types of the applications working with that volume.
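A per-volume cache policy can be written down as plain data and checked against the write-cache advice given earlier in this section. Volume names and the `check_policies` helper are hypothetical; the helper only flags volumes that would use write caching on a non-redundant cache area.

```python
from dataclasses import dataclass


@dataclass
class VolumeCachePolicy:
    name: str
    ssd_read: bool
    ssd_write: bool


def check_policies(policies: list[VolumeCachePolicy], cache_is_redundant: bool) -> list[str]:
    """Names of volumes whose SSD write caching would risk unflushed data."""
    if cache_is_redundant:  # e.g. the cache area is a RAID1 volume
        return []
    return [p.name for p in policies if p.ssd_write]


policies = [
    VolumeCachePolicy("web-content", ssd_read=True, ssd_write=False),  # read-mostly
    VolumeCachePolicy("database", ssd_read=True, ssd_write=True),      # reads plus writes
    VolumeCachePolicy("backup", ssd_read=False, ssd_write=False),      # sequential, no caching
]
print(check_policies(policies, cache_is_redundant=False))  # ['database']
print(check_policies(policies, cache_is_redundant=True))   # []
```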

UEFI support.

All controllers and HBAs in the current Adaptec product line support UEFI mode on motherboards. Switching from legacy (MBR) BIOS mode to UEFI made it possible, for example, to create system and boot volumes larger than 2 TB, which was impossible on boards with a legacy MBR BIOS (note that all Adaptec products fully support volumes larger than 2 TB; the limitation does not come from the controllers or HBAs). UEFI mode has many other benefits, for example support for disks with a 4K sector size. All current Adaptec products support 4K-sector drives, except for the Series 6 controllers.
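The 2 TB limit comes from the MBR partition table, which stores partition start and size as 32-bit sector counts; GPT, used for booting in UEFI mode, uses 64-bit values. A quick check of the arithmetic (function and constant names are illustrative):

```python
MBR_SECTOR_FIELD_BITS = 32  # MBR stores partition start and length as 32-bit sector counts
TIB = 2 ** 40


def mbr_max_bytes(logical_sector_size: int) -> int:
    """Largest disk area an MBR partition table can address."""
    return 2 ** MBR_SECTOR_FIELD_BITS * logical_sector_size


print(mbr_max_bytes(512))          # 2199023255552 bytes, i.e. 2 TiB
print(mbr_max_bytes(4096) // TIB)  # 16 (TiB) for drives with 4K logical sectors
```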

Keep in mind that if the motherboard runs in legacy (MBR) mode, the controller configuration utility is invoked with Ctrl+A.

The figure shows the standard Adaptec configuration utility invoked with the Ctrl+A key combination.

In UEFI mode, the controller and HBA configurator is integrated into the motherboard BIOS setup. It is easy to find among the entries containing the word “Adaptec” or “PMC”. As the example below shows, the UEFI utility has more advanced functionality than the utility invoked with Ctrl+A.

Hot Spare features.

A Hot Spare disk is a passive element of a RAID volume: it joins the volume if one of the volume's disks fails and can no longer do its job. A HOT SPARE is a disk that is installed on the controller, spun up, and assigned to one or more volumes.
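Spare selection can be sketched as follows, assuming (for illustration) that a spare dedicated to the failed volume is preferred over a global one and that a disk assigned to several volumes can be used by only one of them. This is a simplified model, not Adaptec's documented algorithm; function and disk names are hypothetical.

```python
from typing import Optional


def pick_spare(volume: str, dedicated: dict[str, list[str]], global_spares: list[str]) -> Optional[str]:
    """Choose a replacement disk for a degraded volume and remove it from the spare pools."""
    own = dedicated.get(volume, [])
    if own:
        disk = own[0]                 # assumption: a dedicated spare is preferred
    elif global_spares:
        disk = global_spares[0]
    else:
        return None                   # no spare: the volume stays degraded
    # Once it joins a volume, the disk is no longer a spare for anything.
    for spares in dedicated.values():
        if disk in spares:
            spares.remove(disk)
    if disk in global_spares:
        global_spares.remove(disk)
    return disk


dedicated = {"vol1": ["p6"], "vol2": ["p6"]}  # one spare assigned to two volumes
global_spares = ["p7"]
print(pick_spare("vol1", dedicated, global_spares))  # p6
print(pick_spare("vol2", dedicated, global_spares))  # p7
print(pick_spare("vol2", dedicated, global_spares))  # None
```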

HOT SPARE […] 3 […]:

Hot Spare […] Adaptec […]. […] RAID5 […] “degraded”. […] rescan […]. […] HOT SPARE ([…] Global Hot Spare) […] 100% […]. […] Optimal. […] delete hot spare. HOT SPARE […] RAID […].

Power Management.

RAID volumes are used in different ways. For example, volumes created for backups may be used to move data only two or three days a month. The question arises: does it make sense to keep the hard drives powered and spinning if they sit idle almost all the rest of the time?

The solution is the power management function. Its idea is that if disks are not in use, their rotation can be slowed down (if the disks support this) and then stopped completely, keeping them powered off until they are needed. The settings for this function are extremely simple.

First, you set on the controller the days of the week and the hours when the function is active and when it is not; this setting is modeled on the working schedule of a typical company. You also specify how internal and external drives are spun up (in pairs, threes, fours, etc.) to spread the load on the power supply units. In addition, three timer values are set. When the first timer expires without any I/O on the disks of the volume, these disks go into the “standby” state, i.e. reduce their rotational speed by 50%. Not all disks support this mode; if a disk does not, nothing happens to it. When the second timer expires, the disks stop completely and go into the “power off” state. The third timer is used to periodically check disks that have been powered off for a long time: the controller spins them up, runs a non-destructive test and, if everything is OK, returns them to the “power off” state.
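The three timers and the staggered spin-up form a simple state machine. The sketch below is illustrative: the timer values (30 minutes, 60 minutes, 7 days) and function names are hypothetical, and it ignores the weekly schedule, modeling only what happens while the function is active.

```python
from enum import Enum


class DiskState(Enum):
    ACTIVE = "active"
    STANDBY = "standby"      # reduced spindle speed, only if the drive supports it
    POWER_OFF = "power off"


# Hypothetical timer values in minutes.
STANDBY_AFTER = 30
POWER_OFF_AFTER = 60
VERIFY_EVERY = 7 * 24 * 60


def state_after_idle(idle_min: int, supports_standby: bool = True) -> DiskState:
    """State of a volume's disks after `idle_min` minutes without any I/O."""
    if idle_min >= POWER_OFF_AFTER:
        return DiskState.POWER_OFF
    if idle_min >= STANDBY_AFTER and supports_standby:
        return DiskState.STANDBY
    return DiskState.ACTIVE  # drives without standby support simply keep spinning


def verification_due(min_since_last_check: int) -> bool:
    """Third timer: a powered-off disk is spun up and tested non-destructively."""
    return min_since_last_check >= VERIFY_EVERY


def spin_up_groups(disks: list[str], group_size: int) -> list[list[str]]:
    """Staggered spin-up: start drives in groups to spread the load on the PSUs."""
    return [disks[i:i + group_size] for i in range(0, len(disks), group_size)]


print(state_after_idle(10))                          # DiskState.ACTIVE
print(state_after_idle(45))                          # DiskState.STANDBY
print(state_after_idle(45, supports_standby=False))  # DiskState.ACTIVE
print(state_after_idle(90))                          # DiskState.POWER_OFF
print(spin_up_groups(["d0", "d1", "d2", "d3", "d4"], 2))
```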

After these settings, you can activate the power management scheme for each volume where it is useful. Such volumes are marked in green in the management system. The feature is most beneficial in data centers: besides the direct energy savings from stopped drives, it brings indirect savings because the fans cooling the disks can run slower while the disks are off.

Adaptec storage management.

The management utilities in the maxView Storage Manager (MSM) package are built on the most advanced standards and reflect the latest trends in management principles and efficiency. This makes Adaptec maxView Storage Manager a convenient reference model for looking at the basic functions and methods of storage management. The central management element is the RAID controller, which can exchange service information with disks, expanders and enclosures and thus support management functions for the entire storage subsystem.

The main features of modern storage management systems:

- A standard WEB browser is used as the client application.

- CIM support. Through CIM, MSM can manage RAID controllers […], for example, under VMware.

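- CLI (command line interface) support. Besides MSM, which works through a WEB browser, there is a CLI utility, ARCCONF.EXE […] Adaptec […]. The CLI can be used in scripts, for example to automate RAID volume configuration.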

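- Centralized management. With MSM Enterprise View, you can “see” the RAID controllers across the network, adding systems by IP address or finding them through Auto Discovery.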

- High level of detail, visualization and nesting of managed objects. The administrator sees that an entire network segment has turned red, which means there is a fault. Expanding the network segment icon shows all its servers, with the problem server marked in red. Clicking that server shows the RAID controllers installed in it; red on one of them indicates a problem. Drilling down further shows the volumes created on that controller, including the problem volume, and so on down to the faulty physical disk. The administrator now knows exactly what happened, what the consequences are, and which disk to replace.

- Security. […] MSM […] HTTPS. […]

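- Notifications. Besides “lighting up” in the MSM WEB interface, events can be sent by E-mail. MSM lets you choose which events to report […], for example Warning and Error events such as a RAID volume becoming Degraded or Failed.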
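The drill-down from a red network segment to the failed disk is essentially a status roll-up over a tree. In this minimal sketch, each object's color is the worst status of itself and everything below it, and following the worst child at each level leads to the problem disk. The object names, the status scale and the `should_email` threshold are hypothetical, not the MSM data model.

```python
from dataclasses import dataclass, field

SEVERITY = {"Optimal": 0, "Warning": 1, "Error": 2}
EVENT_LEVEL = {"Info": 0, "Warning": 1, "Error": 2}


@dataclass
class Node:
    name: str
    status: str = "Optimal"                      # this object's own status
    children: list["Node"] = field(default_factory=list)

    def rolled_up(self) -> str:
        """Worst status of this object and everything below it (the icon color)."""
        worst = self.status
        for child in self.children:
            child_status = child.rolled_up()
            if SEVERITY[child_status] > SEVERITY[worst]:
                worst = child_status
        return worst

    def drill_down(self) -> list[str]:
        """Follow the worst child at each level until no child has a problem."""
        path, node = [self.name], self
        while node.children:
            worst_child = max(node.children, key=lambda c: SEVERITY[c.rolled_up()])
            if SEVERITY[worst_child.rolled_up()] == 0:
                break
            path.append(worst_child.name)
            node = worst_child
        return path


def should_email(event_level: str, threshold: str = "Warning") -> bool:
    """Send E-mail only for events at or above the configured level."""
    return EVENT_LEVEL[event_level] >= EVENT_LEVEL[threshold]


bad_disk = Node("disk p3", "Error")
volume = Node("RAID5 volume", "Warning", [bad_disk, Node("disk p4")])
controller = Node("controller 1", children=[volume])
segment = Node("segment A", children=[Node("server-01"), Node("server-02", children=[controller])])

print(segment.rolled_up())   # Error
print(segment.drill_down())  # ['segment A', 'server-02', 'controller 1', 'RAID5 volume', 'disk p3']
print(should_email("Warning"), should_email("Info"))  # True False
```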


To be continued...

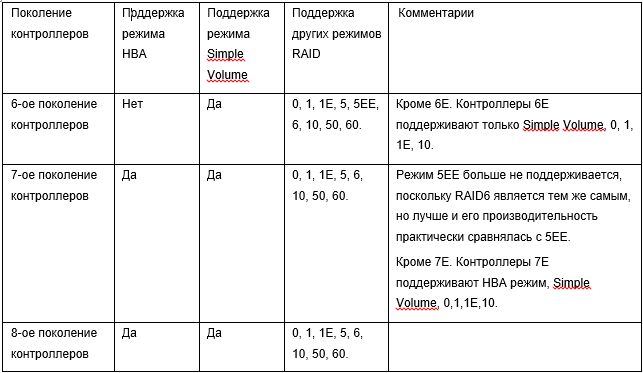

Adaptec […]: Series 6, 7 and 8 Adaptec RAID controllers, Series 6 and 7 Adaptec HBAs. Information provided by Adaptec by PMC (Russia).

Source: https://habr.com/ru/post/263797/
