Modernizing a production data center

In our blog we write a lot about building the 1cloud cloud service (for example, about implementing on-the-fly management of server disk space), but there is also much to learn from how other companies run their infrastructure.

We have already covered the data center of the imgix photo service and the story of how the Algolia project tracked down problems with its SSDs. Today we look at the upgrade of the Stack Exchange data center.

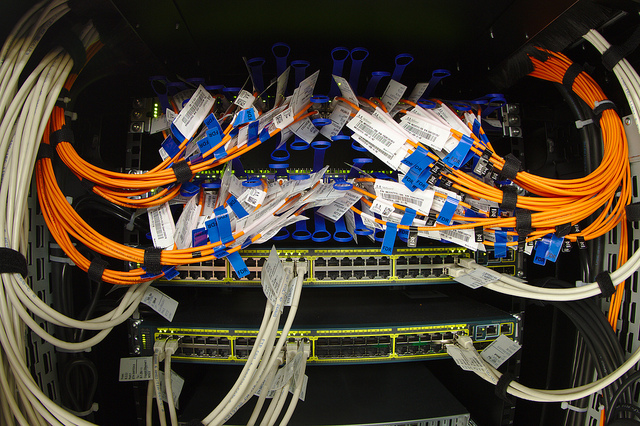

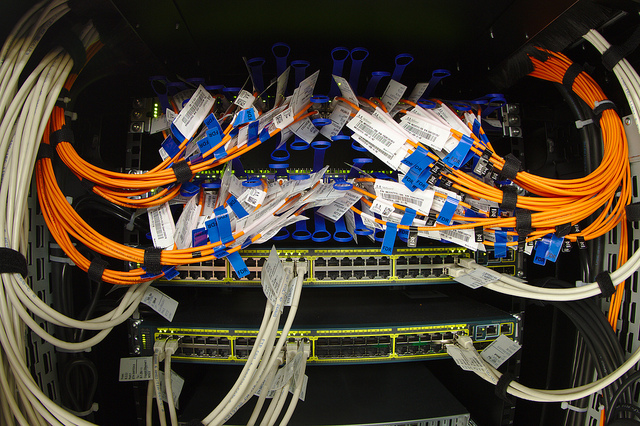

Photo: Dennis van Zuijlekom / CC

The Stack Exchange Network is a network of Q&A websites covering a wide range of fields. The first site launched in 2008, and by 2012 the network had grown to 90 sites and 2 million users. Users can vote on questions and answers, and content can be edited wiki-style. One of the most popular sites is Stack Overflow, which covers technology, software development, and programming questions.

The company's core infrastructure is located in New York and New Jersey, and, in keeping with its habit of openly sharing experience, the company described how its engineers upgraded their New York data center.

The decision to upgrade the hardware was made at the Denver headquarters. At that meeting, the optimal hardware life cycle was set at roughly four years, based on experience with the very first set of servers, which had just passed the four-year mark. The investment the company had recently raised also helped make the decision.

Preparing the plan took three days. The start of the upgrade coincided with a snowstorm warning, but the team decided to go ahead. Before the upgrade began, the engineers had to deal with an important issue involving the Redis servers that serve Targeted Job ads. These machines had originally been used for Cassandra and later for Elasticsearch.

Samsung 840 Pro SSDs had been ordered for these systems, and, as it turned out, their firmware had a serious bug. Because of it, server-to-server copies over the redundant 10 Gbps network ran at only 12 MB/s, so the team decided to update the firmware. The update ran in the background, in parallel with other work (rebuilding a RAID10 array with data on it takes tens of minutes even with SSDs). As a result, copy speeds rose to 100-200 MB/s.
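
To put the firmware fix in perspective, here is a back-of-the-envelope calculation of how long a server-to-server copy takes at each speed mentioned above. The 100 GB dataset size is an illustrative assumption, not a figure from the original post, and decimal units are used (1 GB = 1000 MB, 1 Gbps = 125 MB/s).

```python
# Back-of-the-envelope copy times before and after the SSD firmware update.
# The dataset size is a hypothetical example; the speeds come from the text.

LINK_GBPS = 10  # nominal speed of the redundant network link


def copy_minutes(dataset_gb: float, speed_mb_s: float) -> float:
    """Minutes needed to copy dataset_gb gigabytes at speed_mb_s MB/s (decimal units)."""
    return dataset_gb * 1000 / speed_mb_s / 60


def link_utilization(speed_mb_s: float, link_gbps: float = LINK_GBPS) -> float:
    """Fraction of the link's line rate actually used (1 Gbps = 125 MB/s)."""
    return speed_mb_s / (link_gbps * 125)


if __name__ == "__main__":
    dataset_gb = 100  # hypothetical dataset size
    for label, speed in [("buggy firmware", 12), ("fixed, low", 100), ("fixed, high", 200)]:
        print(f"{label:>15}: {copy_minutes(dataset_gb, speed):6.1f} min, "
              f"{link_utilization(speed):.1%} of a {LINK_GBPS} Gbps link")
```

At 12 MB/s the copy uses only about 1% of a 10 Gbps link's line rate, so the network itself was far from being the bottleneck.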

In rack C, the plan was to replace the SFP+ 10 Gbps connections (and the 1 Gbps external links) with the 10GBASE-T interface (RJ45 connectors). There were several reasons for this. SFP+ uses twinaxial (twinax) cable, which is hard to route and awkward to connect directly to the network daughter cards of Dell servers. In addition, an SFP+ FEX cannot connect 10GBASE-T devices that may be added later (there are none in this rack, but there may be in others, for example load balancers).

The goals were to simplify the network configuration and reduce the number of cables and components overall, while freeing up 4U of rack space. The KVM had to keep working throughout, so it was temporarily relocated. Next, the SFP+ FEX was moved lower in the rack so that the new 10GBASE-T FEX could be installed in its place. FEX technology allows 1 to 8 uplink ports to be allocated to each switch, so during the upgrade the uplink split changed as follows: 8/0 -> 4/4 -> 0/8.
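
The staged uplink change can be modeled as a short sequence of states with a sanity check at each step. This sketch assumes, as the 8/0 -> 4/4 -> 0/8 notation suggests, that each pair is the uplink count of the old SFP+ FEX and of the new 10GBASE-T FEX, with 0 meaning a FEX is not connected. Only the counts and the 1 to 8 limit come from the text; how uplinks are actually pinned on the parent switch is not modeled.

```python
# Staged move of FEX uplinks from the old SFP+ FEX to the new 10GBASE-T FEX.
# Assumption: each pair is (old_fex_uplinks, new_fex_uplinks); 0 means the FEX
# is not (or no longer) connected, otherwise the text's 1..8 range applies.

MAX_UPLINKS = 8
STAGES = [(8, 0), (4, 4), (0, 8)]


def check_stage(old: int, new: int) -> None:
    """Raise ValueError if a stage breaks the assumed uplink limits."""
    for name, count in (("old FEX", old), ("new FEX", new)):
        if not 0 <= count <= MAX_UPLINKS:
            raise ValueError(f"{name}: {count} uplinks, allowed 0..{MAX_UPLINKS}")
    if old + new == 0:
        raise ValueError("no uplinks left: the rack would be cut off")


def moved_per_step(stages: list[tuple[int, int]]) -> list[int]:
    """Uplinks re-cabled from the old FEX to the new one at each step."""
    return [prev[0] - cur[0] for prev, cur in zip(stages, stages[1:])]


if __name__ == "__main__":
    for old, new in STAGES:
        check_stage(old, new)
        print(f"old FEX: {old} uplinks, new FEX: {new} uplinks")
    print("moved per step:", moved_per_step(STAGES))
```

Moving half of the uplinks at a time keeps the total at eight in every stage, so the rack never loses all of its connectivity while servers are moved from one FEX to the other.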

The old virtualization hosts were to be replaced with two Dell PowerEdge FX2 chassis, each holding two FC630 blades. Each blade has two 18-core Intel E5-2698 v3 processors and 768 GB of RAM. Each chassis has 80 Gbps of uplink capacity and four dual-port 10 Gbps I/O modules. The I/O aggregators are essentially full switches, with four external and eight internal 10 Gbps ports (two per blade server).

Once the new blade servers were online, all virtual machines were migrated to these two hosts, which made it possible to dismantle the old servers and free up more space. After that, the last two blade servers were brought up. As a result, the company got much more powerful processors, far more memory (up from 512 GB to 3072 GB), and upgraded networking (the new blade servers have 20 Gbps trunk ports carrying all networks).
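
Multiplying out the per-blade figures gives the totals for the new VM hosts. This small calculation uses only the numbers stated for the FX2/FC630 setup (two chassis, two blades each, two 18-core CPUs and 768 GB of RAM per blade) and counts physical cores only, ignoring Hyper-Threading.

```python
# Aggregate compute capacity of the new VM hosts, using figures from the text:
# two FX2 chassis, two FC630 blades each, 2x 18-core E5-2698 v3 and 768 GB RAM per blade.
from dataclasses import dataclass


@dataclass(frozen=True)
class Blade:
    sockets: int
    cores_per_socket: int
    ram_gb: int

    @property
    def cores(self) -> int:
        """Physical cores per blade (no Hyper-Threading)."""
        return self.sockets * self.cores_per_socket


FC630 = Blade(sockets=2, cores_per_socket=18, ram_gb=768)
CHASSIS = 2
BLADES_PER_CHASSIS = 2
OLD_TOTAL_RAM_GB = 512  # memory of the old VM hosts, per the text


def totals(blade: Blade, n_blades: int) -> tuple[int, int]:
    """Return (total physical cores, total RAM in GB) for n identical blades."""
    return blade.cores * n_blades, blade.ram_gb * n_blades


if __name__ == "__main__":
    n = CHASSIS * BLADES_PER_CHASSIS
    cores, ram = totals(FC630, n)
    print(f"{n} blades: {cores} cores, {ram} GB RAM "
          f"({ram / OLD_TOTAL_RAM_GB:.0f}x the old {OLD_TOTAL_RAM_GB} GB)")
```

With four blades this prints `4 blades: 144 cores, 3072 GB RAM (6x the old 512 GB)`.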

Next, a new EqualLogic PS6210 storage array replaced the old PS6200, which had twenty-four 900 GB 10k RPM HDDs and SFP+ transceivers. The new model supports 10GBASE-T and holds twenty-four 1.2 TB 10k RPM HDDs, which means it is faster, has more disk space, and can be attached to and detached from the storage system. Along with the new array, a new server, NY-LOGSQL01, was installed to replace the outdated Dell R510. The space freed up by the old VM hosts made room for a new file server and a new support server.
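
For a sense of the capacity gain, here is the raw capacity of both arrays computed from the drive counts given above. The RAID level and number of hot spares are not stated in the original, so usable capacity is deliberately left out.

```python
# Raw capacity of the old and new EqualLogic arrays, from the drive counts in the text.
# "Raw" means before RAID overhead and hot spares; usable capacity will be lower.


def raw_tb(drives: int, drive_tb: float) -> float:
    """Raw capacity in TB (decimal) for `drives` disks of `drive_tb` TB each."""
    return drives * drive_tb


old = raw_tb(24, 0.9)  # PS6200: 24 x 900 GB 10k HDD
new = raw_tb(24, 1.2)  # PS6210: 24 x 1.2 TB 10k HDD

if __name__ == "__main__":
    print(f"PS6200: {old:.1f} TB raw, PS6210: {new:.1f} TB raw, "
          f"+{new / old - 1:.0%}")
```

Since the drive count is unchanged, the raw capacity grows in the same proportion as the per-drive size: one third more, from 21.6 TB to 28.8 TB.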

As the weather worsened, public transport stopped running, and the storm even caused power outages. The team decided to keep working as long as possible to get ahead of schedule. The QTS staff came to the rescue: their manager was asked to find the team at least somewhere to sleep.

By the time all the virtual machines were running, the storage was configured, and a number of old cables had been pulled out, it was 9:30 am on Tuesday. The second day began with the web servers: upgrading the R620s by replacing their quad 1 Gbps network daughter cards with cards that have two 10 Gbps and two 1 Gbps ports.

At this point, only the old storage array and the old network storage for the R510 SQL server remained connected, but there was a problem installing a third PCIe card in the backup server. It all came down to the tape drive, which needed an additional SAS controller that had simply been forgotten.

The new plan was to use the existing 10 Gbps network daughter cards (NDC), remove a PCIe SFP+ adapter, and replace it with a new 12 Gbps SAS controller. This meant making a replacement mounting bracket out of scrap metal, since the cards ship without brackets. Next, the team decided to swap the last two SFP+ devices in rack C for the backup server (including the new MD1400 DAS).

The last item to go into rack C was NY-GIT02, a new GitLab and TeamCity server. Then came a long stretch of cable management that touched almost everything, including previously connected equipment. When the work on the two web servers, the cabling, and the removal of old network gear was done, it was 8:30 in the morning.

On the third day, the team returned to the data center at around 5 pm. What remained was to replace the Redis servers and the “service servers” (which run the tag engine, the Elasticsearch indexing, and other services). One of the tag engine boxes was the familiar R620, which had already been upgraded to 10 Gbps earlier, so this time it was left alone.

Rebuilding these servers went like this: deploy a Windows Server 2012 R2 image, install updates and DSC, and then install the specialized software via scripts. From a sysadmin's point of view, StackServer is just a Windows service, and its installation is handled by the TeamCity build, which has a special flag for this. These boxes also run small IIS instances for internal services, which are a simple add-on. Their last role is to provide shared access to the DFS storage.
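
The rebuild order described above can be expressed as an ordered pipeline in which each step runs only if the previous one succeeded. This is a minimal Python sketch of that ordering: the step names follow the paragraph, but every step function and the host name are hypothetical placeholders that only log what they would do; the real work was done with Windows imaging, DSC, scripts, and TeamCity, none of which is modeled here.

```python
# Ordered rebuild pipeline for a "service server", following the steps in the text.
# All step functions are hypothetical placeholders that only print their action.
from typing import Callable

Step = tuple[str, Callable[[str], bool]]


def make_step(description: str) -> Callable[[str], bool]:
    """Create a placeholder step that logs its action and reports success."""
    def run(host: str) -> bool:
        print(f"[{host}] {description}")
        return True
    return run


PIPELINE: list[Step] = [
    ("image", make_step("deploy Windows Server 2012 R2 image")),
    ("updates", make_step("install Windows updates")),
    ("dsc", make_step("apply DSC configuration")),
    ("software", make_step("install specialized software via scripts")),
    ("stackserver", make_step("install StackServer service via TeamCity build")),
    ("iis", make_step("set up small IIS instances for internal services")),
    ("dfs", make_step("configure shared access to DFS storage")),
]


def rebuild(host: str, pipeline: list[Step] = PIPELINE) -> list[str]:
    """Run steps in order, stopping at the first failure; return completed step names."""
    done: list[str] = []
    for name, step in pipeline:
        if not step(host):
            print(f"[{host}] step '{name}' failed, stopping")
            break
        done.append(name)
    return done


if __name__ == "__main__":
    rebuild("ny-service01")  # hypothetical host name
```

Stopping at the first failed step keeps a half-configured box from receiving application software on top of a broken base image.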

The old Dell R610 web servers, with two Intel E5640 processors and 48 GB of RAM (upgraded over time), were replaced by new ones with two Intel 2678W v3 processors and 64 GB of DDR4 memory.

Day 4 was devoted to rack D. It took a while to add cable management arms where they were missing and to meticulously replace most of the cables. How do you know a cable is plugged into the correct port? How do you know where its other end goes? Labels. Lots and lots of labels. It took a lot of time, but it will save time in the future.
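
Labeling both ends of every cable is easiest when the label text is generated from the same record that documents the connection, so each end says both where it is plugged in and where the other end goes. Below is a minimal sketch; the label format (rack, rack unit, port) and the device positions are hypothetical, not the scheme Stack Exchange used.

```python
# Generate matching labels for both ends of a cable from one connection record.
# The RACK-U##-PORT format and the example positions are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class End:
    rack: str  # e.g. "D"
    unit: int  # rack unit (U) where the device sits
    port: str  # port name on the device

    def tag(self) -> str:
        return f"{self.rack}-U{self.unit:02d}-{self.port}"


def cable_labels(a: End, b: End) -> tuple[str, str]:
    """Each end's label shows where it is plugged in and where the other end goes."""
    return f"{a.tag()} > {b.tag()}", f"{b.tag()} > {a.tag()}"


if __name__ == "__main__":
    near, far = cable_labels(End("D", 12, "NIC1"), End("D", 40, "Eth1/7"))
    print(near)  # D-U12-NIC1 > D-U40-Eth1/7
    print(far)   # D-U40-Eth1/7 > D-U12-NIC1
```

Because both labels come from one record, the two ends can never disagree, and the same record can feed the rack documentation.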

The full photo report

The #SnowOps stream on Twitter

Hardware upgrades of this scale never go without problems. Much as in 2010, when a database server upgrade did not deliver the expected performance gains, the team found a bug in BIOS versions 1.0.4 and 1.1.4, which simply ignored the performance settings.
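
Checking a fleet for the problematic BIOS versions is a simple inventory filter. This sketch assumes an inventory that maps host names to their reported BIOS version strings; the host names are hypothetical and collecting the versions is out of scope. Only the affected version numbers come from the text.

```python
# Flag hosts whose BIOS version is one of those found to ignore performance settings.
AFFECTED_BIOS = {"1.0.4", "1.1.4"}  # versions named in the text


def affected_hosts(inventory: dict[str, str]) -> list[str]:
    """Return sorted host names running an affected BIOS version."""
    return sorted(host for host, bios in inventory.items()
                  if bios.strip() in AFFECTED_BIOS)


if __name__ == "__main__":
    inventory = {  # hypothetical inventory
        "ny-web01": "1.1.4",
        "ny-web02": "1.2.0",
        "ny-service01": "1.0.4",
    }
    print(affected_hosts(inventory))  # ['ny-service01', 'ny-web01']
```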

Other problems included issues running DSC configuration scripts, bringing up a bare server with IIS, and moving a RAID array's disks from an R610 to an R630 (the server kept prompting to boot via PXE), among others. It also turned out that Dell MD1400 DAS arrays (13th generation, 12 Gbps) do not support connecting to 12th-generation servers such as the R620 backup server, and that Dell's hardware diagnostics do not cover power supplies. The team also confirmed the hypothesis that turning an open web server upside down is a bad idea when several important parts have already been unscrewed from it.

In the end, the team reduced page render time from about 30-35 ms to 10-15 ms, although, in their words, this is only the tip of the iceberg.
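
Taking the reported ranges at face value, the improvement can be expressed as a range of speedup factors. This small calculation pairs the extremes of each range: slowest-before against fastest-after for the best case, and fastest-before against slowest-after for the worst case.

```python
# Range of speedups implied by the reported page render times (ms).
BEFORE_MS = (30, 35)
AFTER_MS = (10, 15)


def speedup_range(before: tuple[int, int], after: tuple[int, int]) -> tuple[float, float]:
    """Worst- and best-case speedup implied by two time ranges."""
    worst = min(before) / max(after)
    best = max(before) / min(after)
    return worst, best


if __name__ == "__main__":
    lo, hi = speedup_range(BEFORE_MS, AFTER_MS)
    print(f"{lo:.1f}x to {hi:.1f}x faster")  # 2.0x to 3.5x faster
```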

P.S. In our blog on Habr, we share not only our own experience running the 1cloud virtual infrastructure service but also the experience of Western experts. Don't forget to subscribe to updates, friends!

Source: https://habr.com/ru/post/263701/
