Improving the convenience of working with Android applications: gesture recognition and more

The user flipped the phone over when a call came in? Mute the ringer. The device is raised as if to take a photo? Turn on the camera, if that has not already been done the old-fashioned way. How? Sensors will help us.


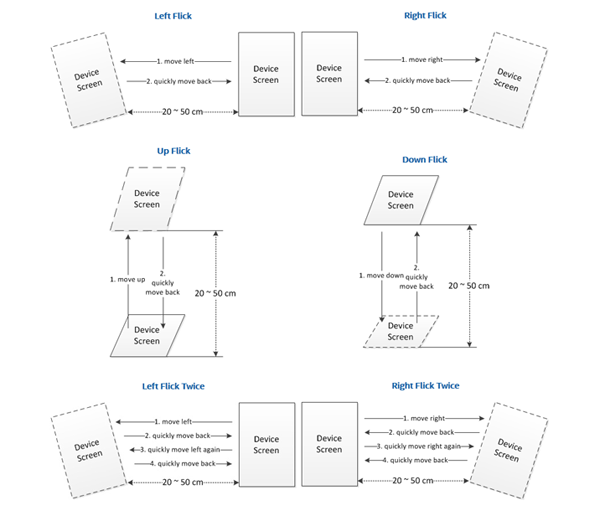

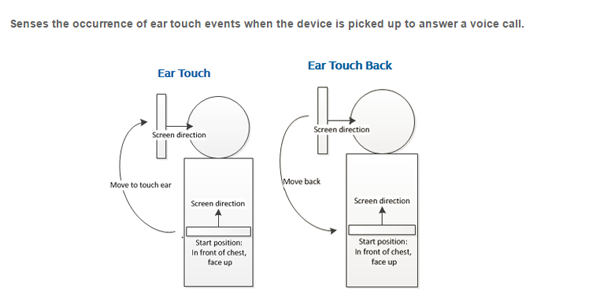


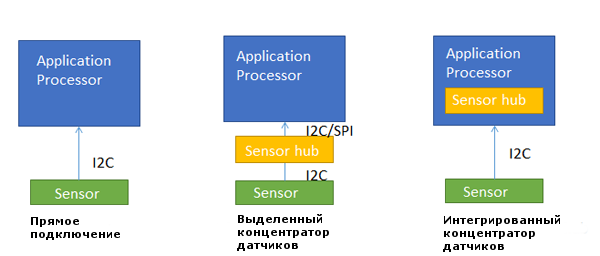

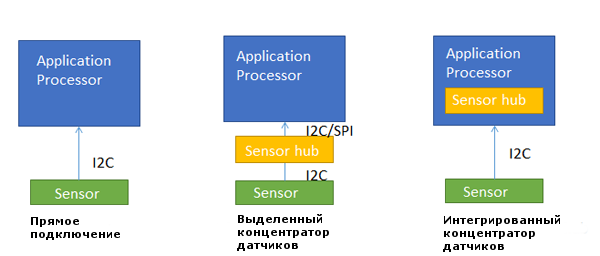


The new motion-triggered events supported by Android Lollipop (pick-up gesture, glance gesture, wake gesture, and tilt detector) are defined in the Android Lollipop source tree, in /hardware/libhardware/include/hardware/sensors.h.


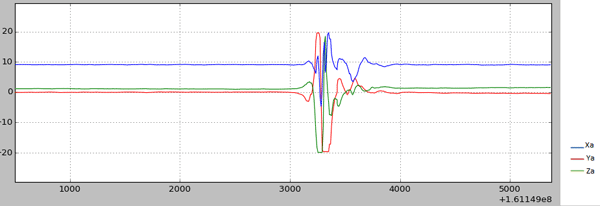

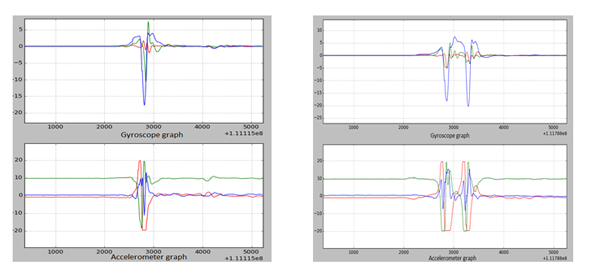

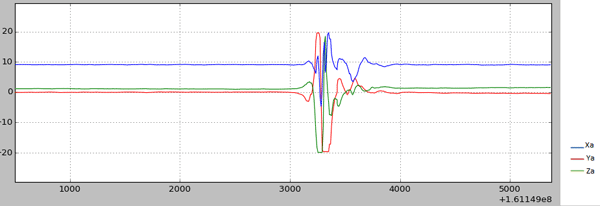

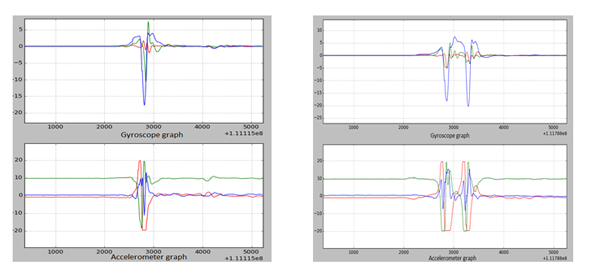


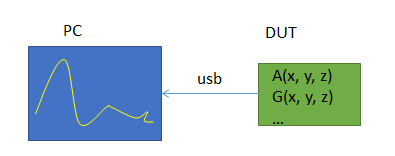

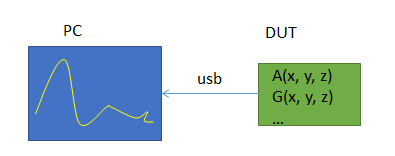


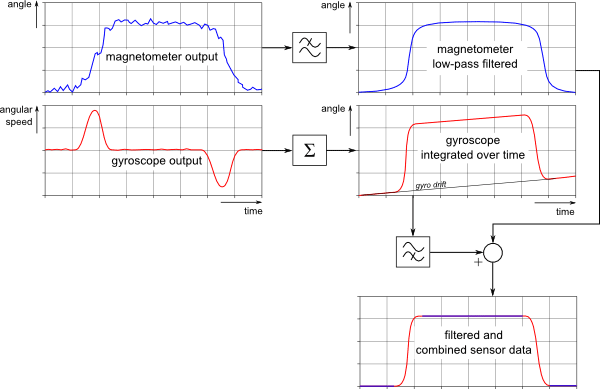

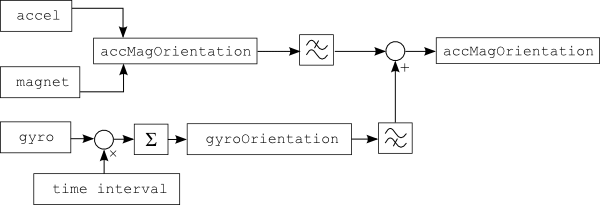

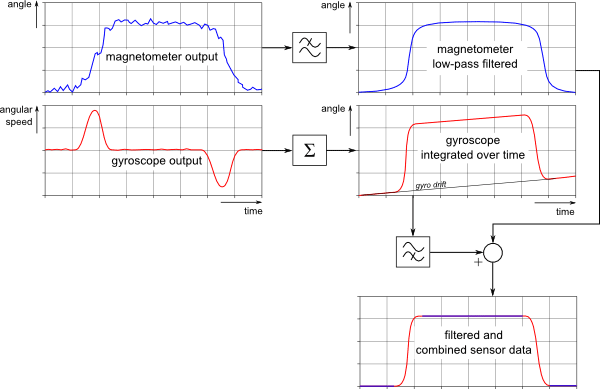

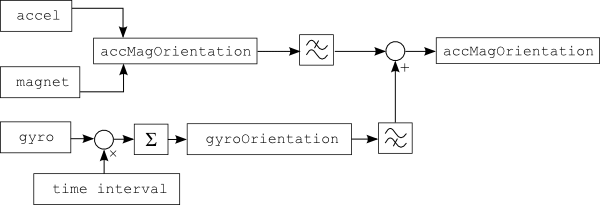


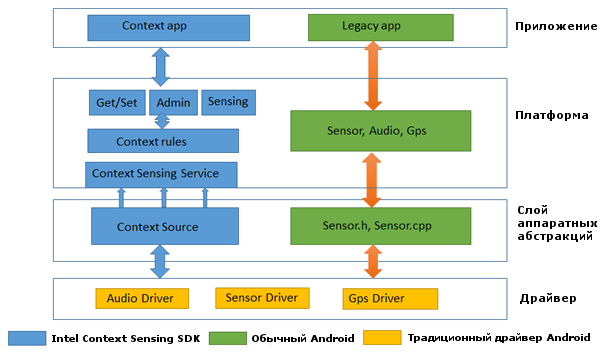

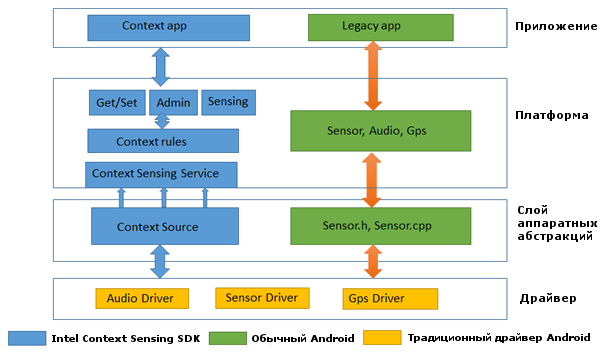


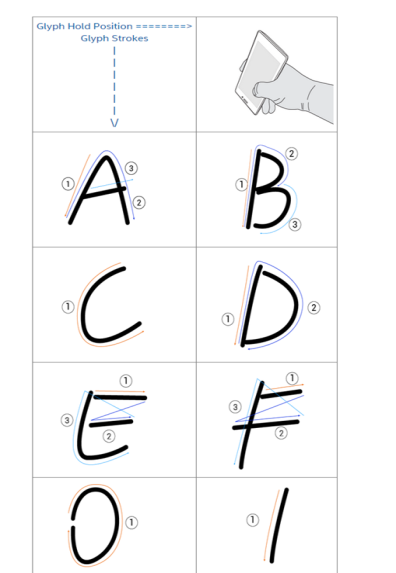

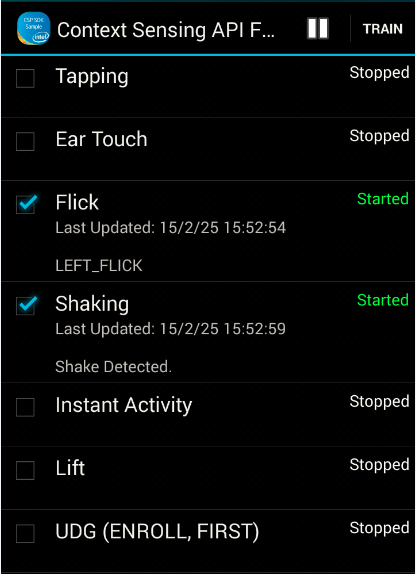


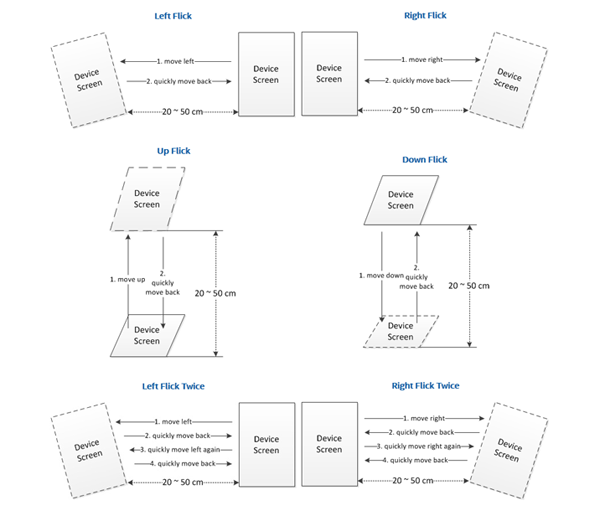


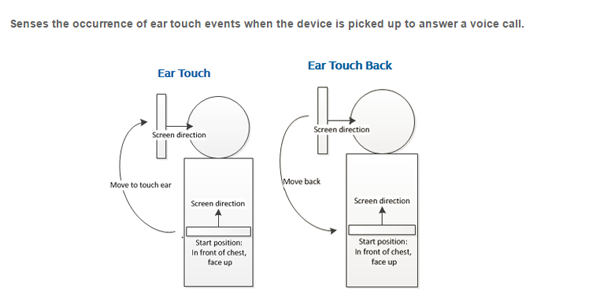


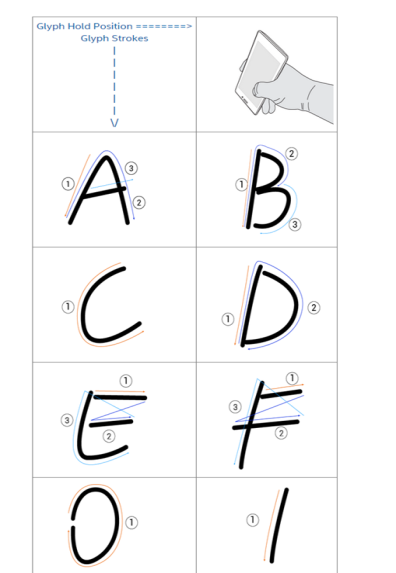


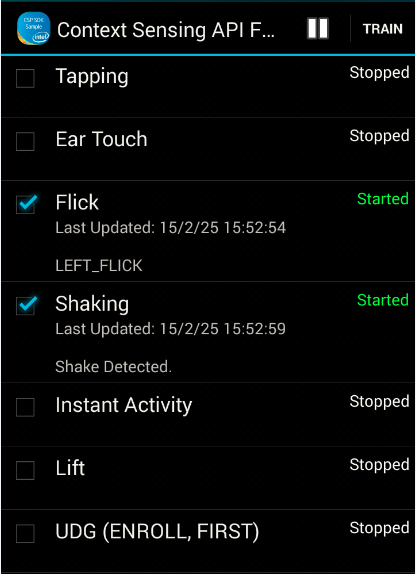


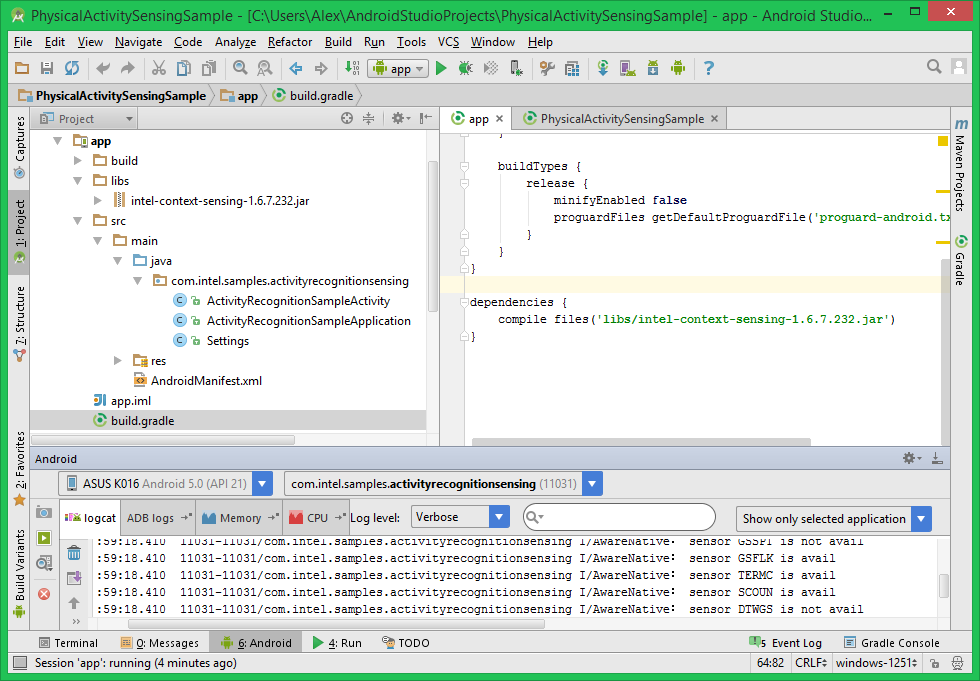

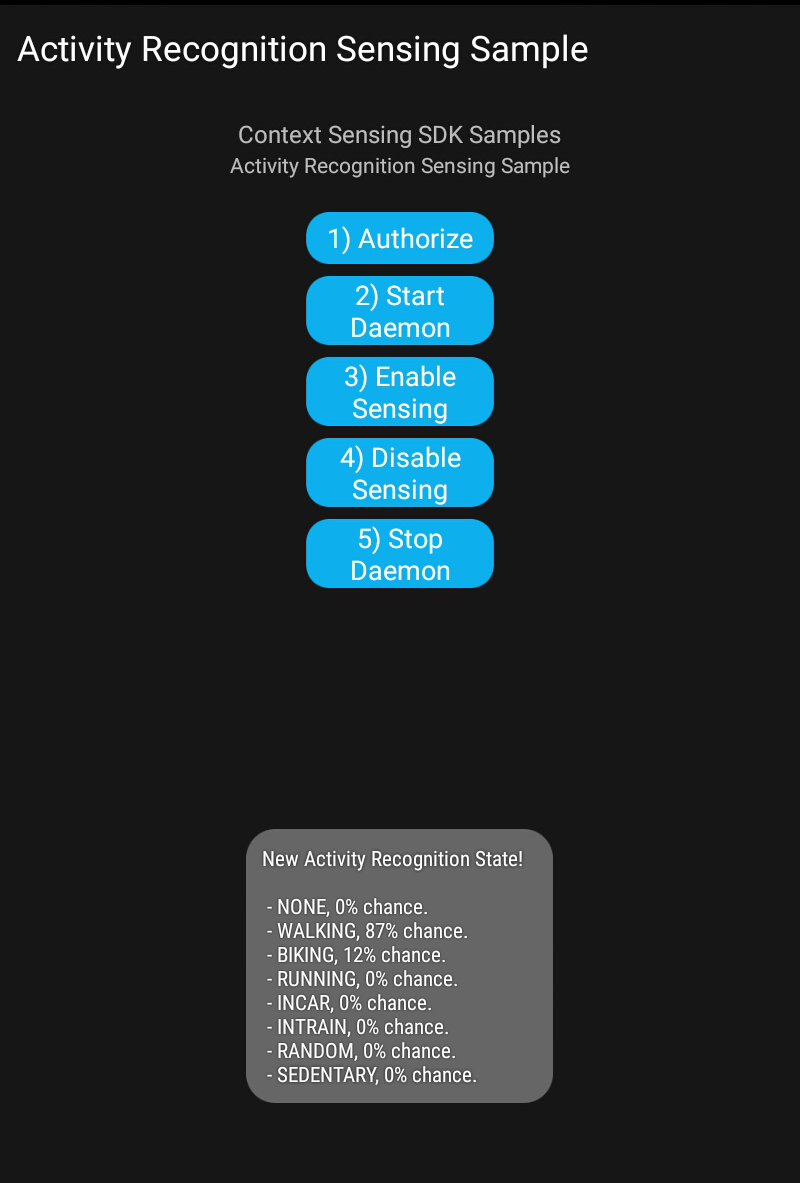


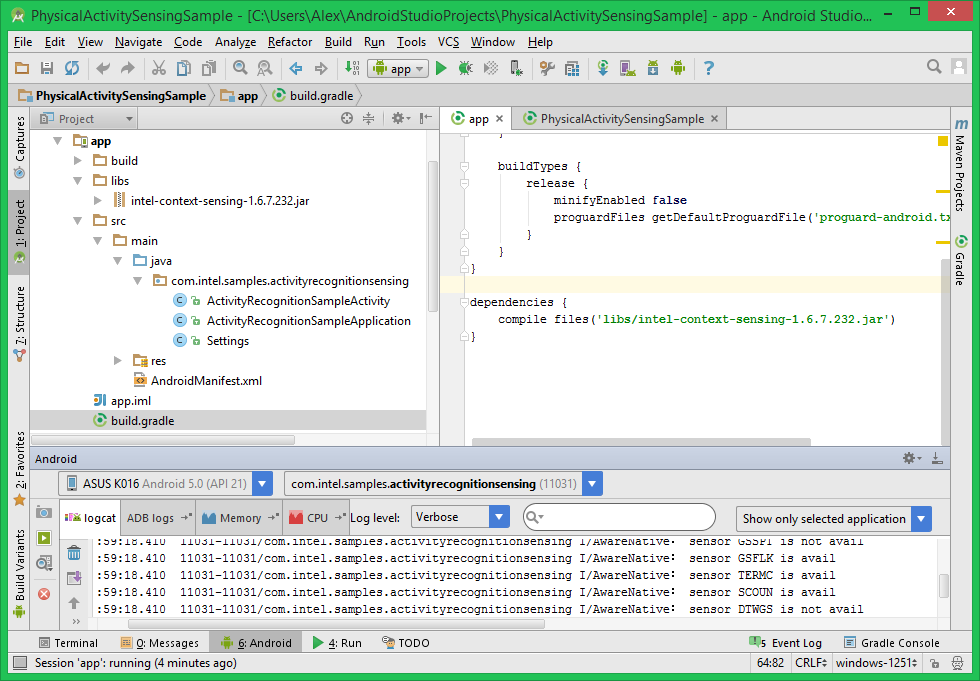


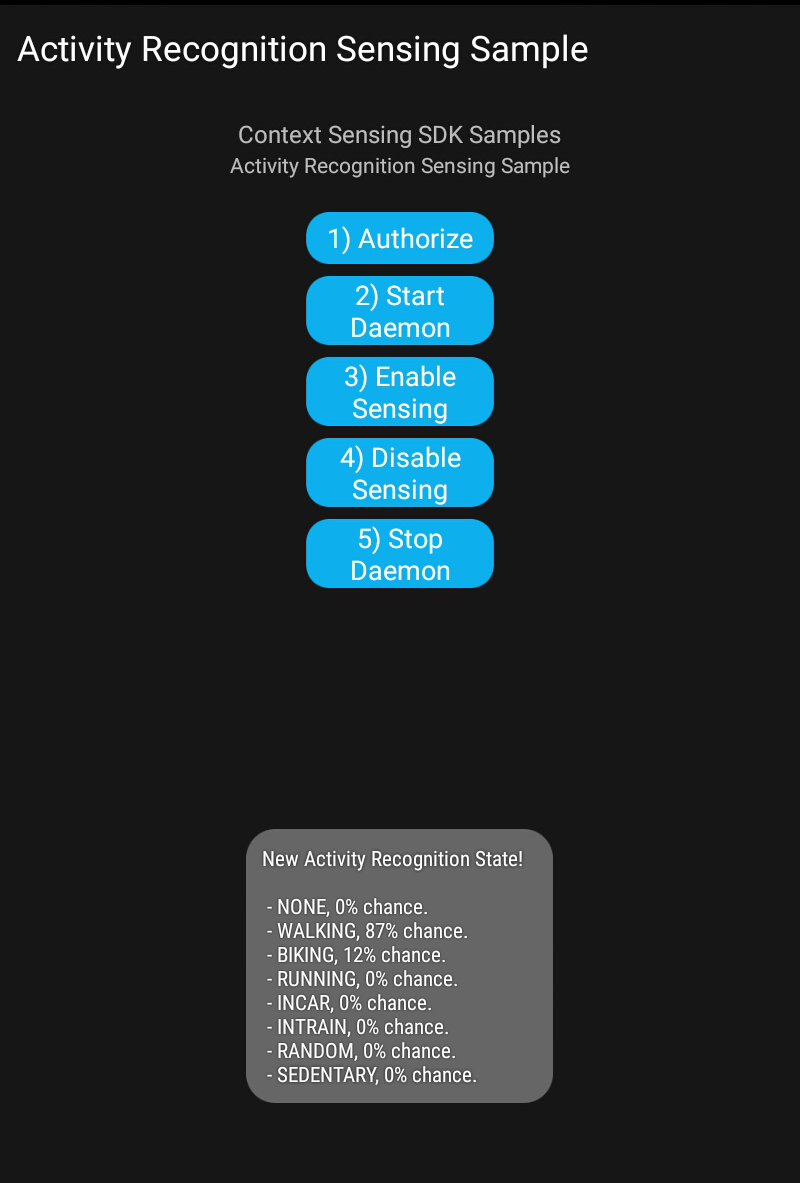


Overview

Mobile devices usually carry quite a few sensors, among them an accelerometer, gyroscope, magnetometer, barometer, and light sensor.

Owners of smartphones and tablets interact with them physically: they move, shake, and tilt them. The information that comes from the sensors is well suited to recognizing actions that take place in the physical world. Once an action is recognized, the application can respond to it. Using sensor readings lets you give your programs genuinely user-friendly features that will not leave users indifferent.

With the release of the Intel Context Sensing SDK for Android* v1.6.7, application developers can work with several new types of context-sensitive data, that is, data based on information about the environment and the user's actions. Among them are the position of the device in space (position), raising it to the ear as at the start of a call (ear touch), and a quick movement followed by a return to the original position (flick). Do not confuse this in-air flick gesture with the touchscreen gesture of the same name. The new library also supports recognizing characters (glyphs) drawn in the air with the device.

From this article you will learn how to extract valuable information about what is happening to the device from sensor readings. We will also look at examples of using the Context Sensing SDK to detect movements, shakes, and drawn characters.

However, the Context Sensing SDK can do much more than deal with the spatial position of the device. For example, it can determine what kind of physical activity the user is engaged in. Is the user walking, resting, riding a bike? Or perhaps running, traveling by car or by train? With this information, you can take the interaction between the user and the mobile device running your application to a whole new level.

Preliminary Information

A common question when working with sensors is how to connect them to the application processor (AP) at the hardware level. Below you can see three ways to do it: a direct connection, a discrete sensor hub, and a sensor hub built into the processor (integrated sensor hub, ISH).

Comparison of different approaches to connecting sensors to the application processor.

If the sensors are attached directly to the AP, this is called a direct connection. It has one problem: taking sensor measurements consumes processor resources.

The next, more advanced method is to use a discrete sensor hub. With it, sensors can operate continuously without loading the processor. Even if the processor goes into sleep mode (S3), the sensor hub can wake it with an interrupt.

The next step in the evolution of processor-sensor interaction is an integrated hub. Among other benefits, it reduces the number of discrete components and the cost of the device.

A sensor hub is essentially a microcontroller (microcontroller unit, MCU) that interfaces with multiple devices. You can write programs for it in C/C++ and upload the compiled code to the MCU.

In 2015, Intel is launching the CherryTrail-T platform for tablets and the SkyLake platform for two-in-one devices. Both use sensor hubs. You can learn more about integrated hubs by following the link.

The sensor coordinate system is shown below. In particular, it shows an accelerometer, which measures acceleration along the X, Y, and Z axes, and a gyroscope, which tracks rotation of the device around the same axes.

The coordinate systems of the accelerometer and gyroscope.

Acceleration values along the accelerometer axes at different positions of the device at rest.
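As a rough illustration of how such at-rest values can be used, the sketch below guesses which device axis points up from a single accelerometer sample. It assumes the usual Android convention that an axis pointing straight up reads about +9.81 m/s² at rest; the function name and the tolerance are hypothetical choices, not part of any SDK.

```python
import math

G = 9.81  # standard gravity, m/s^2


def axis_pointing_up(ax, ay, az, tolerance=1.5):
    """Return the device direction closest to vertical: '+X', '-Y', '+Z', ...

    Returns None when the total acceleration differs from gravity by more
    than `tolerance`, which suggests the device is not at rest.
    """
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    if abs(magnitude - G) > tolerance:
        return None
    # The axis with the largest absolute reading is closest to vertical.
    name, value = max(zip("XYZ", (ax, ay, az)), key=lambda item: abs(item[1]))
    return ("+" if value > 0 else "-") + name
```

For a device lying face up on a table, `axis_pointing_up(0.0, 0.0, 9.81)` returns `'+Z'`.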

The table below lists the new events, triggered by physical movement of the device, that were introduced in Android Lollipop.

New events supported by Android Lollipop

| Name | Description |
| --- | --- |
| SENSOR_STRING_TYPE_PICK_UP_GESTURE | Triggered when the device is picked up, regardless of where it was before (on a table, in a pocket, in a bag). |
| SENSOR_STRING_TYPE_GLANCE_GESTURE | Turns the screen on briefly in response to a specific motion so that the user can glance at it. |
| SENSOR_STRING_TYPE_WAKE_GESTURE | Wakes up the device in response to a specific motion of the device in space. |
| SENSOR_STRING_TYPE_TILT_DETECTOR | The corresponding event is generated each time the device is tilted. |

Gesture recognition process

Gesture recognition can be divided into three stages: preprocessing of the raw data, feature extraction, and template matching.

The process of recognizing gestures.

Let us consider these stages in more detail.

Preliminary processing of source data

Preprocessing begins once raw data has been obtained from the sensor. Below is a plot of the gyroscope data recorded after the device was quickly tilted to the right once and returned to its original position (a flick gesture). It is followed by a plot of the same gesture built from accelerometer data.

Data obtained from the gyroscope (a single tilt of the device to the right and a quick return to its original position, RIGHT FLICK ONCE).

Data obtained from the accelerometer (a single tilt of the device to the right and a quick return to its original position, RIGHT FLICK ONCE).

You can write a program that sends the sensor data from an Android device over the network, and a Python* script that runs on a PC. This lets you receive live sensor data from, for example, a smartphone and plot it.

This step involves the following:

- A computer running a Python script that receives the sensor data.

- An application that runs on the device under test (DUT). It collects information from the sensors and sends it over the network.

- Android Debug Bridge (ADB), configured to send data to the device and receive data from it. To set it up, use the command: adb forward tcp:port tcp:port
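The PC side of this setup might look like the minimal sketch below. It assumes a hypothetical wire format in which the device application writes one sample per line as comma-separated text, `timestamp,x,y,z`, and that a port (5000 here, chosen arbitrarily) has already been forwarded with `adb forward`. The article does not describe the actual format, so treat both as placeholders.

```python
import socket


def parse_sample(line):
    """Parse one 'timestamp,x,y,z' text line into a tuple of four floats."""
    timestamp, x, y, z = (float(field) for field in line.strip().split(","))
    return timestamp, x, y, z


def receive_samples(host="127.0.0.1", port=5000):
    """Yield parsed samples until the device side closes the connection."""
    with socket.create_connection((host, port)) as conn:
        # makefile() lets us read the byte stream line by line as text.
        with conn.makefile("r", encoding="ascii") as stream:
            for line in stream:
                if line.strip():  # skip empty keep-alive lines
                    yield parse_sample(line)
```

Each tuple yielded by `receive_samples()` can then be appended to a buffer and passed to whatever plotting library you prefer.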

Dynamic display of data received from sensors.

At this stage, we remove outliers from the signal and, as is common practice, apply a filter to suppress noise. The plot below shows the sensor data obtained after the device was rotated 90° and then returned to its original position.

Eliminating gyroscope drift and noise.
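The article does not say which filter is used, so the following is only one plausible sketch of this stage: a three-point median filter to drop isolated spikes, followed by an exponential moving average as a simple low-pass filter. The function names and the `alpha` value are assumptions.

```python
def median3(samples):
    """Replace each interior sample by the median of itself and its neighbors."""
    if len(samples) < 3:
        return list(samples)
    filtered = [samples[0]]
    for i in range(1, len(samples) - 1):
        filtered.append(sorted(samples[i - 1:i + 2])[1])
    filtered.append(samples[-1])
    return filtered


def low_pass(samples, alpha=0.2):
    """Exponential moving average: a smaller alpha means stronger smoothing."""
    smoothed = []
    previous = None
    for value in samples:
        if previous is None:
            previous = value  # seed the filter with the first sample
        else:
            previous = previous + alpha * (value - previous)
        smoothed.append(previous)
    return smoothed
```

Applying `low_pass(median3(raw))` removes single-sample spikes first, so that they do not leak into the smoothed signal.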

Selection of characteristic features

The signal a sensor produces may contain noise, which can affect recognition results. Metrics such as FAR (False Acceptance Rate) and FRR (False Rejection Rate) indicate how often recognition fails. Combining data from different sensors (sensor fusion) improves the accuracy of event recognition and is used in many mobile devices. Below is an example of using an accelerometer, a magnetometer, and a gyroscope to obtain the orientation of the device in space. Feature extraction usually relies on the FFT (Fast Fourier Transform) and zero-crossing analysis. The accelerometer and magnetometer are susceptible to electromagnetic interference, so these sensors usually need calibration.

Retrieving device orientation in space using a combination of sensor data.
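One of the simplest forms of sensor fusion is a complementary filter, which blends the integrated gyroscope rate (smooth, but drifting over time) with the tilt angle implied by the accelerometer (noisy, but free of drift). The sketch below illustrates the idea for a single angle; it is not the algorithm used by the SDK, and the names and the 0.98 weight are assumptions.

```python
import math


def accel_tilt_deg(ay, az):
    """Tilt about the X axis implied by gravity, in degrees.

    Only meaningful while the device is not otherwise accelerating.
    """
    return math.degrees(math.atan2(ay, az))


def complementary_filter(samples, dt, weight=0.98, initial_angle=0.0):
    """Fuse gyroscope rate and accelerometer angle into one angle estimate.

    `samples` is an iterable of (gyro_rate_deg_per_s, accel_angle_deg)
    pairs taken every `dt` seconds.
    """
    angle = initial_angle
    estimates = []
    for gyro_rate, accel_angle in samples:
        # Trust the gyroscope short-term and the accelerometer long-term.
        angle = weight * (angle + gyro_rate * dt) + (1.0 - weight) * accel_angle
        estimates.append(angle)
    return estimates
```

With a weight close to 1 the estimate follows fast rotations reported by the gyroscope, while the small accelerometer share slowly pulls it back and cancels accumulated drift.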

Characteristic features of the signal include its minimum and maximum values, peaks, and valleys. Once these features have been extracted, proceed to the next step.
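A minimal sketch of extracting these features from a filtered signal is shown below. The threshold that separates real peaks and valleys from small ripples, as well as the function name and the shape of the result, are assumptions made for illustration.

```python
def extract_features(samples, threshold=1.0):
    """Return min, max, peak and valley counts, and zero crossings.

    A peak is a local maximum above +threshold; a valley is a local
    minimum below -threshold. `samples` must not be empty.
    """
    peaks = valleys = zero_crossings = 0
    for i in range(1, len(samples) - 1):
        prev, cur, nxt = samples[i - 1], samples[i], samples[i + 1]
        if cur > threshold and cur > prev and cur >= nxt:
            peaks += 1
        elif cur < -threshold and cur < prev and cur <= nxt:
            valleys += 1
    # Count sign changes, treating zero as non-negative.
    for a, b in zip(samples, samples[1:]):
        if (a < 0 <= b) or (a >= 0 > b):
            zero_crossings += 1
    return {
        "min": min(samples),
        "max": max(samples),
        "peaks": peaks,
        "valleys": valleys,
        "zero_crossings": zero_crossings,
    }
```

The peak and valley counts are the inputs that a template-matching stage can compare against known gesture shapes.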

Pattern matching

Analyzing the accelerometer plot reveals the following:

- A typical tilt of the device to the right with a return to the initial position produces a plot with two valleys and one peak.

- The same gesture performed twice produces three valleys and two peaks.

It follows that a very simple gesture recognition system can be built on a finite state machine. Compared with gesture recognition based on hidden Markov models (HMM), this approach is more reliable and more accurate.
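The observation about valleys and peaks can be turned into a small finite state machine. The sketch below assumes that an earlier stage has already reduced the signal to an ordered list of `'valley'` and `'peak'` events; the label `RIGHT_FLICK_TWICE` is a hypothetical name modeled on the RIGHT FLICK ONCE caption, not an SDK constant.

```python
def classify_flick(events):
    """Classify an ordered list of 'valley'/'peak' events as a right flick.

    Returns 'RIGHT_FLICK_ONCE', 'RIGHT_FLICK_TWICE', or None.
    """
    # The state is the number of extrema matched so far. Valleys and peaks
    # must strictly alternate, starting with a valley.
    state = 0
    for event in events:
        expected = "valley" if state % 2 == 0 else "peak"
        if event != expected:
            return None  # unexpected extremum: reject the gesture
        state += 1
    if state == 3:  # valley, peak, valley
        return "RIGHT_FLICK_ONCE"
    if state == 5:  # valley, peak, valley, peak, valley
        return "RIGHT_FLICK_TWICE"
    return None
```

Because the machine only tracks how far along the expected sequence it is, it needs no training data, which is part of what makes this approach attractive for simple gestures.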

Charts of data from the accelerometer and gyroscope, obtained by a single or double tilt of the device with a return to its original position.

Examples: Intel® Context Sensing SDK in action

The Intel Context Sensing SDK uses information from sensors and acts as a data provider for context-aware services. The architecture diagram below shows a traditional application alongside a context-aware application.

Comparison of Intel Context Sensing SDK and traditional Android architecture.

Currently, the SDK supports recognition of characters drawn in the air with the device (glyph), tilt gestures with a return to the original position (flick), and the gesture of raising the device to the ear (ear_touch). These features are demonstrated in the ContextSensingApiFlowSample example, which is designed for Android devices.

To try this and other examples of using the Intel Context Sensing SDK, download the Context Sensing SDK, which ships with Intel Integrated Native Developer Experience (Intel INDE). After you download and install the package with the default paths, everything you need is located in C:\Intel\INDE\context_sdk_1.6.7.x. In particular, it contains the intel-context-sensing-1.6.7.x.jar Java library, which you add to Android projects, and a Samples folder with the code of the Android demo applications.

Tilt gesture support in the Intel Context SDK.

The Intel Context Sensing SDK recognizes tilt gestures with a return to the original position in four directions: left, right, up, and down.

Ear Touch gesture support in the Intel Context SDK.

Support for drawing characters in the air in the Intel Context SDK.

The ContextSensingApiFlowSample sample application using the Intel Context SDK.

Now let's look at the PhysicalActivitySensingSample example application. As the name implies, it uses the Intel Context SDK to recognize the user's physical activity (Activity Recognition). The sensor data is analyzed, and the system then issues a prediction that gives the probability of each type of activity as a percentage.

The example is implemented as an Eclipse project. It can be imported into Android Studio; for the code to work, you need to add the intel-context-sensing-1.6.7.x.jar library to the project and reference it in build.gradle.

Preparing the PhysicalActivitySensingSample project for launch in Android Studio.

The project contains two main elements. The first is an Activity that implements the user interface. The second is a class derived from Application that contains several auxiliary inner classes. Its main task is to manage the com.intel.context.Sensing object, which we control with the buttons and which provides information about the user's physical activity.

The test was run on an ASUS Fonepad 8 tablet, which is based on the Intel Atom Z3530 CPU. After the application starts, we need two buttons: first click Start Daemon, then Enable Sensing (and, once the experiments are done, Disable Sensing and Stop Daemon, respectively). After that, you can expect a message reporting what the program thinks we are doing.

Analysis of the user's physical activity.

During the experiments, the tablet was taken for a walk. As the message shows, the program estimated with a probability of 87% that the user was walking (the WALKING parameter). If the device lies motionless, the system decides that the user is resting and reports a very high value for the SEDENTARY parameter (100% when there is no movement at all). If you shake and rotate the device randomly, you will usually see a fairly high value for the RANDOM parameter.
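An application that consumes such a prediction usually needs a single answer. The sketch below picks the most probable activity and ignores weak predictions. The dictionary layout is a hypothetical stand-in for whatever the SDK actually returns, and the 50% cut-off is an arbitrary assumption.

```python
def most_likely_activity(probabilities, min_confidence=50):
    """Return the activity with the highest percentage.

    `probabilities` maps activity names to percentages. Returns None when
    the mapping is empty or the best value is below `min_confidence`.
    """
    if not probabilities:
        return None
    name, percent = max(probabilities.items(), key=lambda item: item[1])
    return name if percent >= min_confidence else None
```

For example, a prediction in which WALKING has 87% and the remaining share is split between other activities yields `'WALKING'`.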

Results

Sensors are widely used in modern computing systems. Motion recognition built on them can become a valuable competitive advantage that attracts users to mobile applications. Working with sensors is an important capability that can significantly improve the usability of mobile devices and applications. The recently released Intel Context Sensing SDK v1.6.7 speeds up and simplifies the creation of applications that use sensor data. That is good both for developers and for the people who use their applications.

Further reading

» Sensor stack

» Feature Extraction

Source: https://habr.com/ru/post/263483/
