Algorithms of Mind

Science always accompanies technology: inventions give us new food for thought and create new phenomena that have yet to be explained.

So says Aram Harrow, professor of physics at MIT, in his article “Why now is the right time to study quantum computing”.

He argues that, from a scientific point of view, entropy could not be fully understood until steam engine technology spurred the development of thermodynamics. Quantum computing, in turn, arose from the need to simulate quantum mechanics on a computer. In the same way, the algorithms of the human mind can now be studied thanks to the advent of neural networks. Entropy itself is used in many areas: for example, in smart cropping, in video and image encoding, and in statistics.
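Entropy-based smart cropping can be sketched as follows. This is a minimal illustration that assumes a grayscale image given as a list of rows of pixel values. It computes the Shannon entropy H = -sum(p * log2(p)) of each square window's pixel histogram and keeps the window that carries the most "information". The names `shannon_entropy` and `most_detailed_window` are hypothetical, and real cropping tools add many refinements.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Shannon entropy, in bits, of the empirical distribution of `values`."""
    counts = Counter(values)
    n = len(values)
    # H = sum of p * log2(1/p), which equals -sum of p * log2(p)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


def most_detailed_window(image, size):
    """Return (row, col) of the top-left corner of the size x size window
    whose pixel histogram has the highest entropy (hypothetical helper)."""
    rows, cols = len(image), len(image[0])
    best_entropy, best_pos = -1.0, (0, 0)
    for r in range(rows - size + 1):
        for c in range(cols - size + 1):
            patch = [image[r + i][c + j] for i in range(size) for j in range(size)]
            h = shannon_entropy(patch)
            if h > best_entropy:  # keep the first window with the highest entropy
                best_entropy, best_pos = h, (r, c)
    return best_pos


# A flat image with one textured corner: the textured corner wins.
image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 10, 20],
    [0, 0, 30, 40],
]
print(most_detailed_window(image, 2))  # (2, 2)
```

A uniform window scores 0 bits. Four distinct pixel values score the maximum of 2 bits. This is why the search favors detailed regions over flat background.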

How does this relate to machine learning?

Just like steam engines, machine learning is a technology designed to solve narrowly focused tasks. Recent results in this field can help us understand how the human brain works, how it perceives the world around it and how it learns. Machine learning provides new food for thought about the nature of human thought and imagination.


Computer imagination

Five years ago, deep learning pioneer Geoffrey Hinton (a professor at the University of Toronto and a Google employee) published a video.

Hinton trained a five-layer neural network to recognize handwritten digits from their bitmap images. Using computer vision, the machine could read handwritten characters.

Unlike other work, however, Hinton's network could not only recognize digits but also recreate the image of a digit in its "computer imagination" from the digit's value. For example, when the input is set to the digit 8, the machine outputs an image of an 8.

Everything happens in the intermediate layers of the network. They work as an associative memory: from image to value, from value to image.
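A much simpler model than Hinton's deep network can illustrate the idea of one memory that works in both directions. The sketch below swaps in a different technique: a bidirectional associative memory (Kosko's BAM). A single weight matrix is built with the Hebbian outer-product rule. It maps an image to its label code, and the same matrix maps the label code back to the image. The 3x3 patterns and the bipolar label codes are hypothetical toy data.

```python
# Hypothetical toy data: 3x3 bipolar "images" (+1 = ink, -1 = background), flattened row by row.
VERTICAL = [-1, 1, -1,
            -1, 1, -1,
            -1, 1, -1]
HORIZONTAL = [-1, -1, -1,
              1, 1, 1,
              -1, -1, -1]
# Hypothetical bipolar codes standing in for the labels "vertical" and "horizontal".
LABEL_V = [1, 1, -1, -1]
LABEL_H = [1, -1, 1, -1]


def sign(v):
    return 1 if v >= 0 else -1


def train(pairs):
    """Hebbian outer-product rule: W[i][j] = sum over stored pairs of x[i] * y[j]."""
    n, m = len(pairs[0][0]), len(pairs[0][1])
    w = [[0] * m for _ in range(n)]
    for x, y in pairs:
        for i in range(n):
            for j in range(m):
                w[i][j] += x[i] * y[j]
    return w


def image_to_label(w, x):
    """Recognition: y[j] = sign(sum_i x[i] * W[i][j])."""
    return [sign(sum(x[i] * w[i][j] for i in range(len(x)))) for j in range(len(w[0]))]


def label_to_image(w, y):
    """Imagination: the same weights read backwards, x[i] = sign(sum_j W[i][j] * y[j])."""
    return [sign(sum(w[i][j] * y[j] for j in range(len(y)))) for i in range(len(w))]


W = train([(VERTICAL, LABEL_V), (HORIZONTAL, LABEL_H)])

noisy = VERTICAL.copy()
noisy[0] = 1  # one corrupted pixel
print(image_to_label(W, noisy) == LABEL_V)       # True: image -> value
print(label_to_image(W, LABEL_H) == HORIZONTAL)  # True: value -> image
```

Recall works here because the two stored patterns are nearly orthogonal. A BAM can hold only a few such pairs before recall starts to fail.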

Can human imagination work in the same way?

This machine-vision technology is simplified but very inspiring. From a scientific point of view, however, the main question is whether human imagination and visualization work according to the same algorithm.

Isn't the human mind doing the same thing? When a person sees a digit, they recognize it. Conversely, when someone mentions the number 8, the mind draws an 8 in the imagination.

Is it possible that the human brain, like a neural network, moves from a concept to a picture (or a sound, smell or sensation) using information encoded in layers? After all, neural networks already draw pictures, write music and can even form internal connections.

Contemplation and the phenomenon

If recognition and imagination really are just connections between a concept and a picture, what happens inside the layers? Can neural networks help us figure this out?

234 years ago, in his Critique of Pure Reason, Immanuel Kant argued that contemplation (intuition) is only a representation of the phenomenon.

Kant believed that human knowledge is shaped not only by rational and empirical thinking but also by intuition (contemplation). By his definition, without contemplation all knowledge would be deprived of objects and would remain empty and meaningless.

Today, Berkeley professor Alyosha Efros, who specializes in computer vision, has noted that the visible world contains far more things than there are words to describe them. Using words as labels for training models can therefore carry the limitations of language into the models. Many things have no name in one language or another. A popular example is Mamihlapinatapai, often cited as the most succinct word in the world.

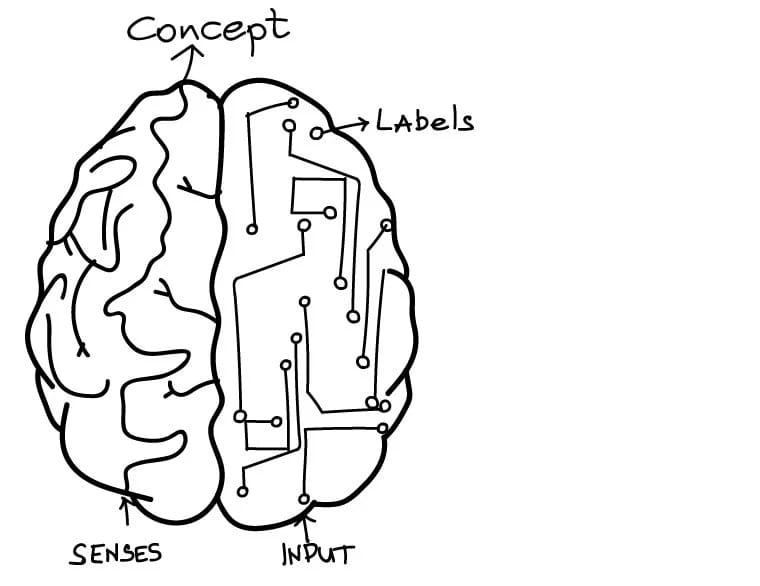

A parallel can be drawn between machine labels and phenomena, and between encoding and intuition (contemplation).

When deep neural networks are trained, for example in the work on recognizing cats, you can see processing move progressively from the lower layers to the upper ones.

An image-recognition network encodes pixels at the lowest layer and detects lines and corners at the next. Higher layers detect common shapes, and so on. Each layer solves a harder task than the one below it. Middle layers are not necessarily tied to the final label, such as “cat” or “dog”. Only the last layer corresponds to labels defined by people, and it is limited to those labels.
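A single convolutional filter can sketch what the lowest layer computes. In trained networks the first-layer filters are learned, and they often end up resembling edge detectors. This sketch uses a fixed, hand-picked Sobel kernel instead. It slides the kernel over the image using the "valid" cross-correlation that convolutional layers compute, then applies a ReLU. As a result it responds only to dark-to-bright vertical edges. The helper names are hypothetical.

```python
# Hand-picked Sobel kernel for vertical edges (a stand-in for a learned filter).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]


def cross_correlate(image, kernel):
    """'Valid' 2-D cross-correlation: the kernel never hangs over the border."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


def edge_layer(image):
    return relu(cross_correlate(image, SOBEL_X))


dark_to_bright = [[0, 0, 9, 9, 9] for _ in range(4)]
bright_to_dark = [[9, 9, 0, 0, 0] for _ in range(4)]
print(edge_layer(dark_to_bright))  # [[36, 36, 0], [36, 36, 0]]
print(edge_layer(bright_to_dark))  # [[0, 0, 0], [0, 0, 0]]
```

A deeper layer would apply the same kind of operation to these feature maps rather than to raw pixels. Corners and shapes are built up from edges in this way.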

Encoding and labels map onto the concepts that Kant called contemplation and phenomenon.

The hype around the Sapir-Whorf hypothesis

As Efros noted, there are many more concepts than words to describe them. If so, can words limit our thoughts?

This is the basic idea of the Sapir-Whorf hypothesis of linguistic relativity. In its strongest form, the hypothesis states that the structure of a language determines how people perceive and comprehend the world.

Is that true? Does language completely define the limits of our consciousness, or are we free to comprehend anything, regardless of the language we speak?

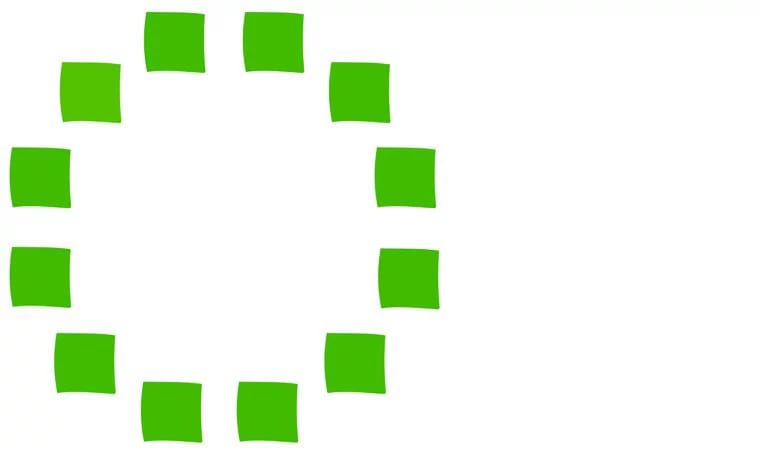

The picture shows 12 light-green squares, one of which is a different shade. Try to guess which one. The Himba tribe has two words for these different shades of light green, so its members find the correct answer much faster. Most of us will have to work hard to find the odd square.


The theory goes like this: when a language has two words for two shades, the mind trains itself to distinguish them, and over time the difference becomes obvious. If you look with your mind rather than with your eyes, language affects the result.

Another vivid example: millennials found it difficult to get used to the CMYK color palette, because cyan and magenta were not colors they had learned from childhood. In Russian especially, these are awkward compound colors that are hard to picture: blue-green and purple-red.

Look with your mind, not with your eyes

Something similar can be observed in machine learning. Models are trained to recognize pictures (text, audio, ...) according to given labels or categories. Networks recognize labeled categories much more effectively than unlabeled ones. This is not surprising for learning "with a teacher" (supervised learning). Language affects how humans perceive the world, and the presence of labels affects a neural network's ability to recognize categories.
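A nearest-centroid classifier gives a minimal picture of learning "with a teacher" (supervised learning). It averages the labeled examples of each category and assigns a new input to the category with the closest average. The two features and the "cat"/"dog" data below are hypothetical. The model can only ever answer with one of the labels it was taught.

```python
from collections import defaultdict


def fit_centroids(samples):
    """samples: list of (feature_vector, label). Returns {label: mean feature vector}."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical features: (ear pointiness, snout length), each scaled to 0..1.
training = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.2, 0.9), "dog"),
    ((0.3, 0.8), "dog"),
]
centroids = fit_centroids(training)
print(predict(centroids, (0.7, 0.4)))  # cat
print(predict(centroids, (0.1, 0.6)))  # dog
```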

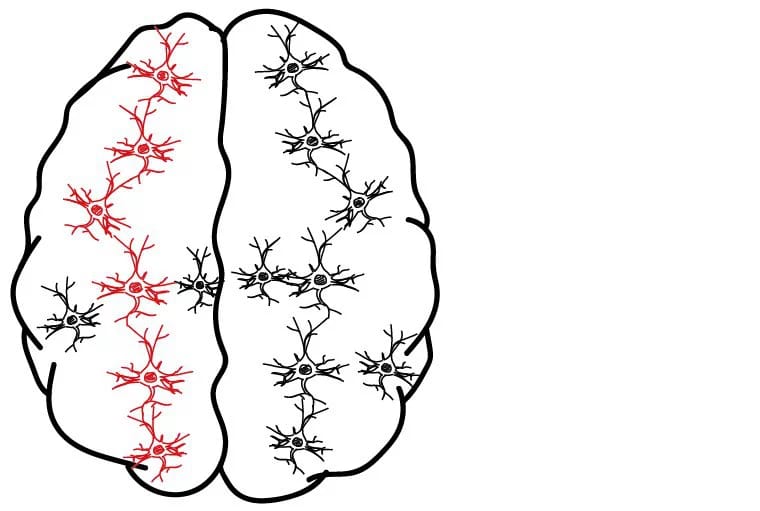

But labels are not a prerequisite. In Google's cat-recognizing "brain", the network formed the concepts of "cat", "dog" and others entirely on its own, without being given the required answers (labels).

The network was trained without a teacher: only the input data was given. When a picture from a particular category, for example "cats", is fed to its input, only the "cat-like" neurons activate. After receiving a large number of training images, this network learned the basic characteristics of each category and the differences between them.
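k-means clustering can illustrate, in miniature, how categories form "without a teacher". This is not what the Google network did, since that was a far larger neural network. k-means is a swapped-in, much simpler unsupervised technique. The points below are hypothetical and carry no labels, yet the algorithm separates them into two groups on its own. Naming the groups "cat" or "dog" would be a separate, later step.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(point, centroids):
    """Index of the centroid closest to `point`."""
    return min(range(len(centroids)), key=lambda i: squared_distance(point, centroids[i]))


def kmeans(points, k, max_iterations=100):
    """Lloyd's k-means. No labels are used anywhere.

    Naive initialization: the first k points serve as starting centroids.
    """
    centroids = [list(p) for p in points[:k]]
    for _ in range(max_iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        updated = [
            [sum(column) / len(cluster) for column in zip(*cluster)] if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if updated == centroids:  # converged: no centroid moved
            break
        centroids = updated
    return centroids, [nearest(p, centroids) for p in points]


# Hypothetical unlabeled observations (two features each).
points = [(0.9, 0.2), (0.2, 0.9), (0.8, 0.3), (0.3, 0.8), (0.85, 0.25), (0.25, 0.85)]
centroids, assignments = kmeans(points, k=2)
print(assignments)  # [0, 1, 0, 1, 0, 1]
```

Taking the first k points as starting centroids keeps the example deterministic. Practical implementations usually use smarter or randomized initialization.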

If you keep showing a child a plastic cup, the child will begin to recognize it, even without knowing what the thing is called. That is, the image is not matched to a name. In this case the Sapir-Whorf hypothesis is incorrect: a person can and does explore various images, even when there are no words to describe them.

Comparing machine learning with and without a teacher suggests that the Sapir-Whorf hypothesis applies to human learning with a teacher but not to self-directed learning. So it is time to put an end to the debate.

Philosophers, psychologists, linguists and neuroscientists have studied this topic for many years. Its connection with machine learning and computer science emerged relatively recently, with advances in big data and deep learning. Some neural networks now show excellent results in language translation, image classification and speech recognition.

Each new discovery in machine learning helps to understand a little more about the human mind.

Summary

- The human brain, like a neural network, moves from a concept to a picture.

- Encoding in a network corresponds to contemplation in a person, and labels correspond to phenomena.

- If you look with your mind, not with your eyes, then the language affects the result.

- The Sapir-Whorf hypothesis may be correct for learning with a teacher and fundamentally wrong for learning without a teacher.

Source: https://habr.com/ru/post/263229/
