Pacemaker-based HA-Cluster for LXC and Docker container virtualization

In this article I describe how to install and configure an Active/Active cluster based on Pacemaker, Corosync 2.x and CLVM on shared storage, and how to adapt that cluster to run LXC and Docker containers. I also list the commands used to work with the cluster and recall the pitfalls I ran into, which I hope will spare those who follow some of the pain.

The servers run CentOS 7 with EPEL and the current package versions from those repositories. The main tool for working with Pacemaker will be pcs (pacemaker/corosync configuration system).

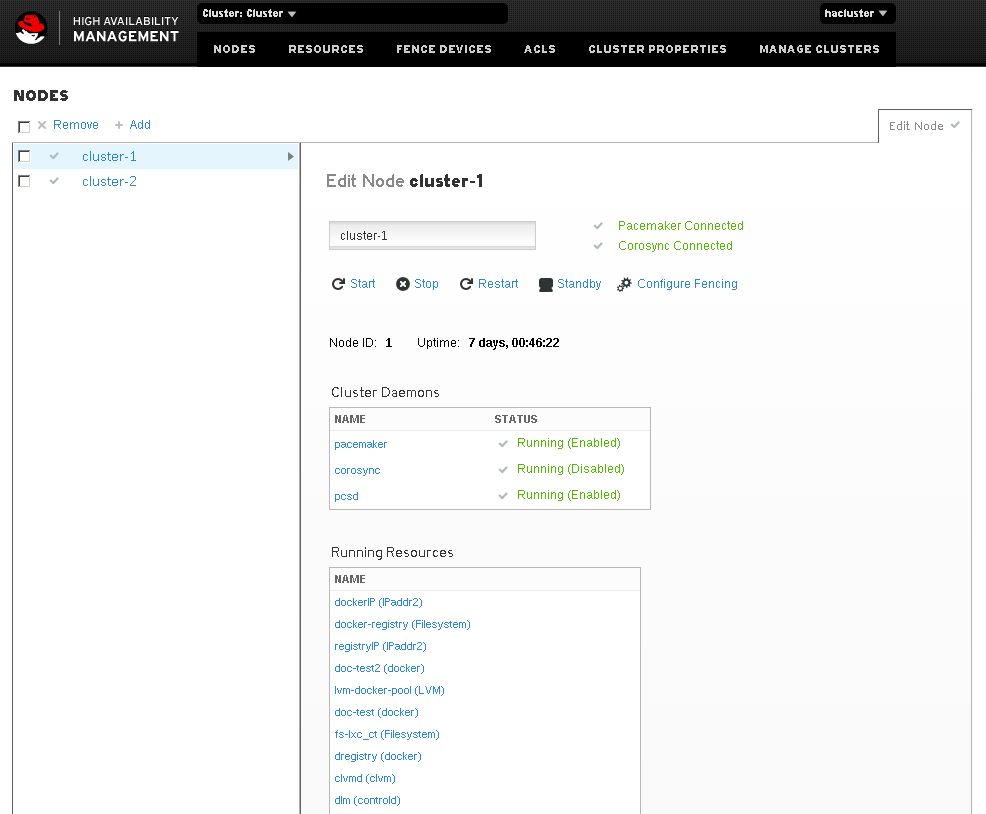

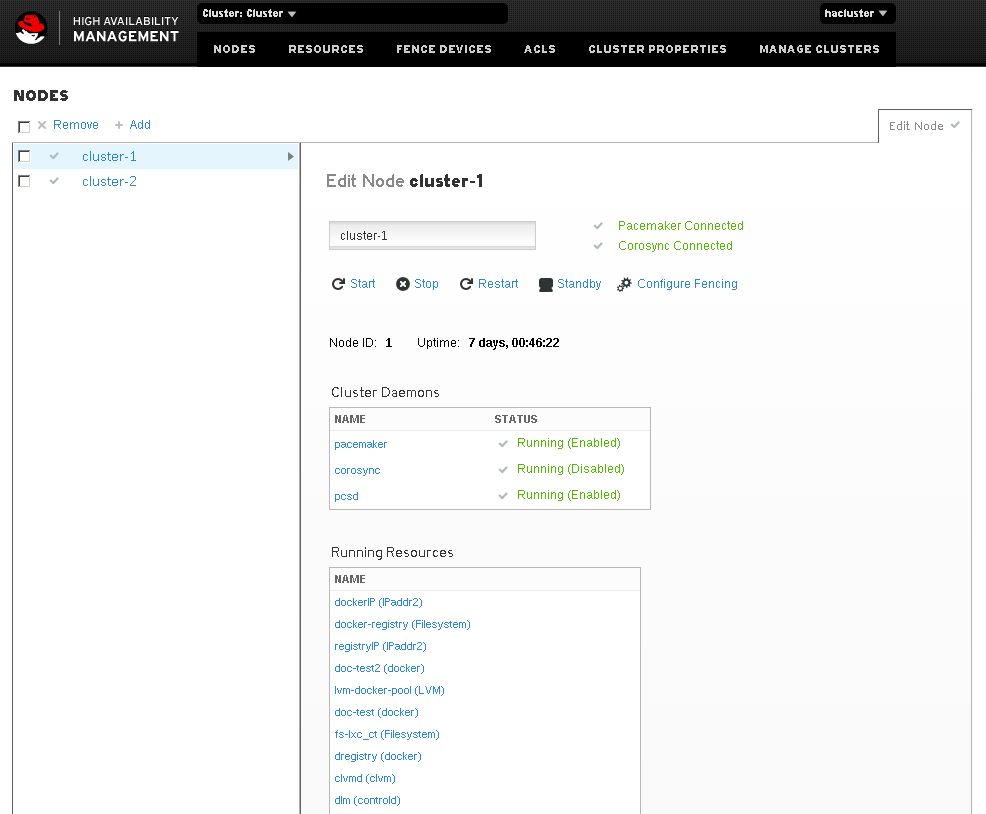

You can work with LXC via

For managing via

Configuring Pacemaker to work with Docker is similar to configuring it for LXC, but there are some design differences.

First, install Docker. It is included in the RHEL / CentOS 7 distribution, so this causes no problems.

Let's teach Docker to work with LVM. To do this, create the file

Run

Docker uses a thin-provisioned volume, which restricts how it can be used in a cluster: such a volume cannot be active on several nodes at the same time. Configure LVM so that the volumes in the shared_vg-ex group are not activated automatically. To do this, you must explicitly list the groups (or individual volumes) that will be activated automatically in the

Let us transfer control of this volume to Pacemaker:

Docker will use a dedicated IP address for NAT between the containers and the external network. Set it in the configuration:

In order for Docker to go to the Internet through a proxy server, we will set up environment variables for him. To do this, create a directory and the

The basic configuration is complete; now fill the cluster with the corresponding resources. Since a whole set of resources is responsible for Docker, it is convenient to combine them into a group. All resources of a group run on the same node and are started sequentially, in the order they appear in the group. Keep in mind that when one resource of the group fails, the whole group migrates to another node, and that disabling any resource of the group also stops all the resources after it. The first resource of the group will be the LVM volume created earlier:

Create a resource for the IP address assigned to Docker:

Besides LVM volumes, Docker also stores data on a file system. This requires one more partition managed by Pacemaker. Since this data is needed only by the running Docker, the resource will be an ordinary (non-cloned) one.

Now you can add the Docker daemon itself:

Once the group's resources have started successfully, check Docker's status on the node where it landed and make sure everything is fine.

You can now work with Docker in the usual way. For completeness, though, we will also place a Docker registry in the cluster. The registry gets a separate IP address and name, 10.1.2.11 (dregistry), and the image store is moved to a separate partition.

Create a container registry on the node where Docker is running:

The output

Let's hand control of it over to Pacemaker. First, download the latest resource script:

It is important to keep the resource scripts identical on all nodes, otherwise there may be surprises. The docker resource script can create the required containers itself, with the specified parameters, by pulling them from the registry. So one could simply keep Docker running permanently on every cluster node, with a shared registry and per-node storage, and let Pacemaker manage only the individual containers; but that is less interesting, and redundant. So far I have not found enough work even for one Docker.

So, we will hand over the management of the finished container to Pacemaker.

Let's write our local registry to the Docker configuration on the cluster nodes (

After this, we restart the Docker service

Now, using the example of Docker containers, I will show you how to work with templates in Pacemaker. Unfortunately, the utility features

Docker-resources should meet the following requirements:

Of course, this can be achieved by hanging dependencies on each container using

To begin with, create three test containers; I used an Nginx container for this. The image is downloaded in advance and pushed to the local registry:

In the newly uploaded xml add a pool of resources. Defining co-location (section

- Server Preparation

- Installation and basic configuration of Pacemaker and CLVM

- Working with LXC in a cluster

- Transfer OpenVZ Container to LXC

- Work with Docker in a cluster

- Cheat sheet

- Links

Server Preparation

I used a two-node configuration, but the number of nodes can be increased as needed. The servers share storage attached via SAS. If no such storage is at hand, storage attached via FC or iSCSI will do. You will need two volumes: one for general use, the other for Docker. A single volume split into two partitions also works.

Install CentOS 7 and the EPEL repository, and set up the network. Bonding for the network interfaces and multipath for SAS are desirable. To work with different VLANs, configure the corresponding bridges br0.VID, to which the LXC containers will later be attached. I will not go into details; everything is standard.

For LXC and Docker to work, the stock firewalld has to be disabled.

    # systemctl stop firewalld.service
    # systemctl disable firewalld.service
    # setenforce Permissive

Add the required addresses and names to /etc/hosts on all nodes right away:

    #nodes, vlan 10
    10.1.0.1 cluster-1
    10.1.0.2 cluster-2
    #nodes ipmi, vlan 314
    10.1.15.1 ipmi-1
    10.1.15.2 ipmi-2
    #docker, vlan 12
    10.1.2.10 docker
    10.1.2.11 dregistry

The cluster requires the STONITH mechanism (“Shoot The Other Node In The Head”), for which IPMI is used. Configure it with ipmitool:

    # ipmitool shell
    ipmitool> user set name 2 admin
    ipmitool> user set password 2 ' '
    # arguments: <uid> <privilege level> <channel number>
    ipmitool> user priv 2 4 1

We create the user admin (id = 2) and give it administrator rights (level = 4) on the channel bound to the network interface (channel = 1).

It is advisable to put the IPMI network into a separate VLAN: first, this isolates it; second, it avoids connectivity problems when the IPMI BMC (baseboard management controller) shares a network interface with the server.

    ipmitool> lan set 1 ipsrc static
    ipmitool> lan set 1 ipaddr 10.1.15.1
    ipmitool> lan set 1 netmask 255.255.255.0
    ipmitool> lan set 1 defgw ipaddr 10.1.15.254
    ipmitool> lan set 1 vlan id 314
    # enable access:
    ipmitool> lan set 1 access on
    ipmitool> lan set 1 auth ADMIN MD5
    ipmitool> channel setaccess 1 2 callin=on ipmi=on link=on privilege=4

The other nodes are configured the same way, only the IP address differs.

Check connectivity as follows:

    # ipmitool -I lan -U admin -P ' ' -H 10.1.15.1 bmc info

Installation and basic configuration of Pacemaker and CLVM

If you are not familiar with Pacemaker, it is worth reading about it first. A good write-up about Pacemaker in Russian is available here .

Install the packages from the EPEL repository on all nodes:

    # yum install pacemaker pcs resource-agents fence-agents-all

On all nodes, set a password for the cluster administrator hacluster. pcs runs under this user, as does the web management interface.

    # echo CHANGEME | passwd --stdin hacluster

The following operations are performed on a single node.

Configure authentication:

    # pcs cluster auth cluster-1 cluster-2 -u hacluster -p CHANGEME --force

Create and start a two-node cluster named “Cluster”:

    # pcs cluster setup --force --name Cluster cluster-1 cluster-2
    # pcs cluster start --all

Check the result:

    # pcs cluster status
    Cluster Status:
     Last updated: Wed Jul 8 14:16:32 2015
     Last change: Wed Jul 8 10:01:20 2015
     Stack: corosync
     Current DC: cluster-1 (1) - partition with quorum
     Version: 1.1.12-a14efad
     2 Nodes configured
     17 Resources configured
    PCSD Status:
     cluster-1: Online
     cluster-2: Online

One caveat: if https_proxy is set in the environment, pcs may report the node status incorrectly, apparently because it tries to go through the proxy.
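One way to sidestep the proxy caveat is to strip the proxy variables from the environment of a single command instead of unsetting them for the whole session. The sketch below assumes GNU env, which supports the -u option; the helper name without_proxy is hypothetical and not part of pcs.

```shell
# Hypothetical helper: run a command with the usual proxy variables removed
# from its environment, so that the command talks to the nodes directly.
without_proxy() {
    env -u http_proxy -u https_proxy -u HTTP_PROXY -u HTTPS_PROXY "$@"
}

# Example, on a cluster node:
#   without_proxy pcs cluster status
```

The variables stay set in the calling shell, so other tools that need the proxy are not affected.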

Start the pcsd daemon and enable it at boot:

    # systemctl start pcsd
    # systemctl enable pcsd

After that, the web interface for managing the cluster is available at "https://ip_:2224".

The interface lets you view the state of the cluster and add or change its parameters. A small thing, but nice.

With only two nodes there can be no meaningful quorum, so this policy has to be disabled:

    # pcs property set no-quorum-policy=ignore

To have the cluster start on boot, just enable pacemaker:

    # systemctl enable pacemaker

CLVM and GFS2 require DLM (Distributed Lock Manager). In RHEL 7 (CentOS 7) neither CLVM nor DLM exists as a standalone daemon; both are cluster resources. DLM in turn requires STONITH, otherwise the corresponding cluster resource will not start. Configure it:

    # pcs property set stonith-enabled=true
    # pcs stonith create cluster-1.stonith fence_ipmilan ipaddr="ipmi-1" passwd=" ipmi" login="admin" action="reboot" method="cycle" pcmk_host_list=cluster-1 pcmk_host_check=static-list stonith-timeout=10s op monitor interval=10s
    # pcs stonith create cluster-2.stonith fence_ipmilan ipaddr="ipmi-2" passwd=" ipmi" login="admin" action="reboot" method="cycle" pcmk_host_list=cluster-2 pcmk_host_check=static-list stonith-timeout=10s op monitor interval=10s
    # pcs constraint location cluster-1.stonith avoids cluster-1=INFINITY
    # pcs constraint location cluster-2.stonith avoids cluster-2=INFINITY

Why it is done this way is well described here . In short, we create two stonith resources, each responsible for its own node, and forbid each of them to run on the node it is supposed to shoot.
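The per-node fencing pattern above (one stonith resource per node, banned from the node it fences) can be sketched as a small generator that only prints the commands, so they can be reviewed before being run. The function name stonith_cmds and the IPMI_PASSWORD placeholder are hypothetical; the pcs arguments are copied from the commands above.

```shell
# Print (not run) the fencing commands for one node.
# $1 - cluster node name, $2 - host name of that node's BMC.
stonith_cmds() {
    # $IPMI_PASSWORD is printed literally as a placeholder for the real password.
    printf 'pcs stonith create %s.stonith fence_ipmilan ipaddr="%s" passwd="$IPMI_PASSWORD" login="admin" action="reboot" method="cycle" pcmk_host_list=%s pcmk_host_check=static-list stonith-timeout=10s op monitor interval=10s\n' "$1" "$2" "$1"
    # The fencing resource must never run on the node it is supposed to shoot.
    printf 'pcs constraint location %s.stonith avoids %s=INFINITY\n' "$1" "$1"
}

# Two nodes named as in /etc/hosts above.
for i in 1 2; do
    stonith_cmds "cluster-$i" "ipmi-$i"
done
```

Printing rather than executing keeps the sketch safe to try on any machine; adding a third node then means one more pair of lines rather than hand-edited copies.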

Configure additional global options.

For experiments it is useful to make resources migrate after the first failure:

    # pcs resource defaults migration-threshold=1

To keep a resource that has migrated to another node after a failure from moving back once the original node is restored, set:

    # pcs resource defaults resource-stickiness=100

Here “100” is a weight that Pacemaker uses when calculating where a resource should run.

So that the nodes do not shoot each other in the midst of bold experiments, I recommend explicitly setting the policy for operation failures:

    # pcs resource op defaults on-fail=restart

Otherwise STONITH fires at the most interesting moment, since fencing is the default reaction to a failed “stop” operation.

Install CLVM on each node:

    # yum install lvm2 lvm2-cluster

Configure LVM for cluster operation on each node:

    # lvmconf --enable-cluster

Create the dlm and clvmd resources in the cluster:

    # pcs resource create dlm ocf:pacemaker:controld op monitor interval=30s on-fail=fence clone interleave=true ordered=true
    # pcs resource create clvmd ocf:heartbeat:clvm op monitor interval=30s on-fail=fence clone interleave=true ordered=true

These resources are critical for our cluster, so for failures we explicitly set the STONITH policy (on-fail=fence). Each resource must run on all nodes of the cluster, so it is declared as cloned (clone). Instances are started one after another rather than in parallel (ordered=true). If a resource depends on other clone resources, it does not wait for all their instances on all nodes to start, but is satisfied with the local one (interleave=true). Pay attention to the last two parameters: they can significantly affect the behaviour of the cluster as a whole, and we will have more clone resources.

Set the start order so that clvmd is started only after dlm. The commands now use the *-clone names, which pcs assigns to cloned resources:

    # pcs constraint order start dlm-clone then clvmd-clone
    # pcs constraint colocation add clvmd-clone with dlm-clone

We also require clvmd-clone to run together with dlm-clone on the same node. With our two nodes this looks redundant, but in general there may be fewer *-clone instances than nodes, and then co-location becomes critical.

As soon as the resources are created they are started on all nodes, and if all is well we can begin creating shared logical volumes and file systems. clvmd keeps the metadata consistent and notifies all nodes of changes, so the operations are performed on one node only.

Initialize the partitions for use with LVM:

    # pvcreate /dev/mapper/mpatha1
    # pvcreate /dev/mapper/mpatha2

In general, working with clustered LVM is almost the same as working with ordinary LVM; the only difference is that if a volume group (VG) is marked as clustered, its metadata is managed by clvmd. Create the volume groups:

    # vgcreate --clustered y shared_vg /dev/mapper/mpatha1
    # vgcreate --clustered y shared_vg-ex /dev/mapper/mpatha2

The general configuration of the cluster is complete; next we fill it with resources. From Pacemaker's point of view, a resource is any service, process or IP address that can be controlled by a script. Resource scripts resemble init scripts and implement a set of actions: start, stop, monitor and so on.

The basic principle we will follow: data needed by a resource that runs on a single node goes onto a logical volume in the shared_vg group, with any file system you like; data needed on both nodes at the same time goes onto GFS2. In the first case data integrity is ensured by Pacemaker, which controls the number and placement of running resources, including the file systems they use; in the second, by the internal mechanisms of GFS2.

The shared_vg-ex group is dedicated entirely to the logical volume for Docker. Docker creates a thin-provisioned volume, which can only be active in exclusive mode on a single node, and keeping that volume in a separate group is convenient for later work and configuration.

Working with LXC in a cluster

We will work with the lxc-* utilities from the lxc package. Install them:

    # yum install lxc lxc-templates

Set the default parameters for future containers:

    # cat /etc/lxc/default.conf
    lxc.start.auto = 0
    lxc.network.type = veth
    lxc.network.link = br0.10
    lxc.network.flags = up
    # memory and swap
    lxc.cgroup.memory.limit_in_bytes = 256M
    lxc.cgroup.memory.memsw.limit_in_bytes = 256M

The network type is veth: inside the container the interface is eth0, outside it is attached to the bridge br0.10. Of the limits, only memory matters to us, so we set those. If you wish, you can set any limit supported by the kernel, following the pattern lxc.cgroup.state-object-name = value. Limits can also be changed on the fly with lxc-cgroup. In the file system these parameters live under /sys/fs/cgroup/TYPE/lxc/CT-NAME/object-name.

An important point about the limits: memory.limit_in_bytes must be specified before memory.memsw.limit_in_bytes. In addition, the second parameter is the sum of memory and swap and must be greater than or equal to the first. Otherwise the container starts without memory limits.

Placement, start and stop of containers will be controlled by Pacemaker, so we explicitly turn off container autostart.
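Because a violation of the memory limit rules described above fails silently (the container simply starts unlimited), it can be worth checking a config before using it. The following is a minimal sketch, not part of LXC: the function names to_bytes and check_lxc_memory are hypothetical, and only the K, M and G suffixes are handled.

```shell
# Convert a size such as 256M or 1G to bytes (K, M and G suffixes only).
to_bytes() {
    num=${1%[KkMmGg]}
    case $1 in
        *[Kk]) echo $((num * 1024)) ;;
        *[Mm]) echo $((num * 1024 * 1024)) ;;
        *[Gg]) echo $((num * 1024 * 1024 * 1024)) ;;
        *)     echo "$num" ;;
    esac
}

# Check the two memory settings in the LXC config file given as $1.
check_lxc_memory() {
    mem_line=$(grep -n '^lxc\.cgroup\.memory\.limit_in_bytes' "$1" | head -n 1)
    sw_line=$(grep -n '^lxc\.cgroup\.memory\.memsw\.limit_in_bytes' "$1" | head -n 1)
    if [ -z "$mem_line" ] || [ -z "$sw_line" ]; then
        echo "one of the limits is missing"; return 1
    fi
    # grep -n prefixes each match with "<line number>:".
    if [ "${mem_line%%:*}" -gt "${sw_line%%:*}" ]; then
        echo "memory.limit_in_bytes must come before memory.memsw.limit_in_bytes"; return 1
    fi
    mem=$(to_bytes "$(printf '%s' "${mem_line##*=}" | tr -d ' ')")
    sw=$(to_bytes "$(printf '%s' "${sw_line##*=}" | tr -d ' ')")
    if [ "$sw" -lt "$mem" ]; then
        echo "memsw limit ($sw) is smaller than memory limit ($mem)"; return 1
    fi
    echo "ok"
}
```

It could be called as check_lxc_memory /etc/lxc/default.conf, or on a container's own config under /var/lib/lxc before the container is handed over to Pacemaker.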

Each LXC container will live in its own logical volume in shared_vg. Set the default VG name:

    # cat /etc/lxc/lxc.conf
    lxc.bdev.lvm.vg = shared_vg

This placement lets a container run on any node of the cluster. The container configuration files must be shared as well, so create a common file system and configure its use on all nodes:

    # lvcreate -L 500M -n lxc_ct shared_vg
    # mkfs.gfs2 -p lock_dlm -j 2 -t Cluster:lxc_ct /dev/shared_vg/lxc_ct

We choose the lock_dlm locking protocol because the storage is shared, create two journals, one per node (-j 2), and set the lock table name, where Cluster is the name of our cluster.

    # pcs resource create fs-lxc_ct Filesystem fstype=gfs2 device=/dev/shared_vg/lxc_ct directory=/var/lib/lxc clone ordered=true interleave=true
    # pcs constraint order start clvmd-clone then fs-lxc_ct-clone

This gives another clone resource, of type Filesystem; the device and directory fields are mandatory and describe what to mount and where. We also specify the start order, because the file system cannot be mounted without clvmd. After that, the directory where LXC stores container settings is mounted on all nodes.

Create the first container:

    # lxc-create -n lxc-racktables -t oracle -B lvm --fssize 2G --fstype ext4 --vgname shared_vg -- -R 6.6

Here lxc-racktables is the container name and oracle is the template used. -B specifies the storage type and its parameters. lxc-create creates an LVM volume and deploys the base system onto it according to the template. The template's own parameters follow the "--"; in my case, the release version.

At the time of writing, the centos template from the package did not work with LVM, but oracle suited me just as well.

If you need to deploy a system based on deb packages, install the debootstrap utility first. The prepared system is first deployed to /var/cache/lxc/, and on each run lxc-create updates the system packages to their current versions. It is convenient to build your own template with all the presets you need. The standard templates are located in /usr/share/lxc/templates .

You can also use the special "download" template, which downloads ready-made system archives from a repository.

The container is ready. Containers are managed with the lxc-* utilities. Start it in the background, check its state, stop it:

    # lxc-start -n lxc-racktables -d
    # lxc-info -n lxc-racktables
    Name: lxc-racktables
    State: RUNNING
    PID: 9364
    CPU use: 0.04 seconds
    BlkIO use: 0 bytes
    Memory use: 1.19 MiB
    KMem use: 0 bytes
    Link: vethS7U8J1
    TX bytes: 90 bytes
    RX bytes: 90 bytes
    Total bytes: 180 bytes
    # lxc-stop -n lxc-racktables

Further container settings are made either from its console, using lxc-console, or by mounting the container's LVM volume somewhere.
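When the container state is needed in a script rather than on screen, the State line of the lxc-info output shown above can be picked out with awk. This is only a sketch: the helper name lxc_state is hypothetical, and it assumes the "State: VALUE" line format seen in that output.

```shell
# Print the state reported by lxc-info; expects the lxc-info output on stdin.
lxc_state() {
    awk '$1 == "State:" { print $2 }'
}

# Example, on the node where the container lives:
#   lxc-info -n lxc-racktables | lxc_state
```

Reading from stdin keeps the helper independent of lxc itself, so it can be tried on saved output as well.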

Now control can be handed over to Pacemaker. But first, fetch a fresh resource agent from GitHub :

    # wget -O /usr/lib/ocf/resource.d/heartbeat/lxc https://raw.githubusercontent.com/ClusterLabs/resource-agents/master/heartbeat/lxc
    # chmod +x /usr/lib/ocf/resource.d/heartbeat/lxc

The /usr/lib/ocf/resource.d/ directory contains the resource agents in a provider/type hierarchy. The whole list of resources is shown by the pcs resource list command; the description of a particular resource, by pcs resource describe <standard:provider:type|type> .

Example:

    # pcs resource describe ocf:heartbeat:lxc
    ocf:heartbeat:lxc - Manages LXC containers

    Allows LXC containers to be managed by the cluster.
    If the container is running "init" it will also perform an orderly shutdown.
    It is 'assumed' that the 'init' system will do an orderly shudown if presented with a 'kill -PWR' signal.
    On a 'sysvinit' this would require the container to have an inittab file containing "p0::powerfail:/sbin/init 0"
    I have absolutly no idea how this is done with 'upstart' or 'systemd', YMMV if your container is using one of them.

    Resource options:
      container (required): The unique name for this 'Container Instance' eg 'test1'.
      config (required): Absolute path to the file holding the specific configuration for this container eg '/etc/lxc/test1/config'.
      log: Absolute path to the container log file
      use_screen: Provides the option of capturing the 'root console' from the container and showing it on a separate screen. To see the screen output run 'screen -r {container name}' The default value is set to 'false', change to 'true' to activate this option

So, add the new resource to our cluster and specify the start order:

    # pcs resource create lxc-racktables lxc container=lxc-racktables config=/var/lib/lxc/lxc-racktables/config
    # pcs constraint order start fs-lxc_ct-clone then lxc-racktables

The resource starts immediately; its state can be seen with pcs status . If the start fails, the probable reason is shown there too. The pcs resource debug-start <resource id> command starts a resource and prints the result on the screen:

    # pcs resource debug-start lxc-racktables
    Operation start for lxc-racktables (ocf:heartbeat:lxc) returned 0
     > stderr: DEBUG: State of lxc-racktables: State: STOPPED
     > stderr: INFO: Starting lxc-racktables
     > stderr: DEBUG: State of lxc-racktables: State: RUNNING
     > stderr: DEBUG: lxc-racktables start : 0

Be careful with it, though: it ignores the cluster's resource placement settings and starts the resource on the current node. If the resource is already running on another node, there may be surprises. The "--full" modifier gives a lot of additional information.

Although the container is managed by Pacemaker, you can still work with it using all the lxc-* utilities; naturally, only on the node where it is currently running, and with an eye on Pacemaker.

The resulting container resource can be moved to another node with:

    # pcs resource move <resource id> [destination node]

The container is shut down cleanly on one node and started on the other.

Unfortunately, LXC has no decent live migration tool yet; once it does, live migration can be configured as well. That will require another shared GFS2 partition to hold the dumps, and a modified lxc resource script that implements the migrate_to and migrate_from actions.

I looked at the CRIU project, but could not get it to work on CentOS 7.

Transfer OpenVZ Container to LXC

Create a new logical volume and copy the data of the (stopped) OpenVZ container onto it:

    # lvcreate -L 2G -n lxc-openvz shared_vg
    # mkfs.ext4 /dev/shared_vg/lxc-openvz
    # mkdir -p /mnt/lxc-openvz
    # mount /dev/shared_vg/lxc-openvz /mnt/lxc-openvz
    # rsync -avh --numeric-ids -e 'ssh' openvz:/vz/private/<containerid>/ /mnt/lxc-openvz/

Create a configuration file for the new container by copying that of lxc-racktables and editing it:

    # mkdir /var/lib/lxc/lxc-openvz
    # cp /var/lib/lxc/lxc-racktables/config /var/lib/lxc/lxc-openvz/

In the configuration file, change the following fields:

lxc.rootfs = /dev/shared_vg/lxc-openvz lxc.utsname = openvz #lxc.network.hwaddr If necessary, adjust the restrictions and the desired network bridge. Also in the container, you need to change the network settings by rewriting them to the eth0 interface and correct the file

etc/sysconfig/network .In principle, after that the container can already be launched, but for better compatibility with LXC it is necessary to modify the contents. As an example, I used a template to create a centos container (

/usr/share/lxc/templates/lxc-centos ), namely the contents of the configure_centos and configure_centos_init functions with a slight refinement. Pay special attention to the creation of the etc/init/power-status-changed.conf script, without it the container will not be able to shut down correctly. Or the container inittab should contain a rule of the form: "p0::powerfail:/sbin/init 0" (depends on the distribution kit)./etc/init/power-status-changed.conf

# power-status-changed - shutdown on SIGPWR
#
start on power-status-changed

exec /sbin/shutdown -h now "SIGPWR received"
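Before starting a transferred container, it may be worth checking that one of the two shutdown mechanisms mentioned above is actually in place. The following is only a sketch: `has_sigpwr_handler` is a hypothetical helper name, and it assumes the container root filesystem is mounted at the path passed as the first argument.

```shell
#!/bin/sh
# Sketch: succeed if the container rootfs can react to SIGPWR.
# has_sigpwr_handler is a hypothetical helper, not part of LXC.
has_sigpwr_handler() {
    rootfs=$1
    # Upstart-based containers: the job file shown above.
    if [ -f "$rootfs/etc/init/power-status-changed.conf" ]; then
        return 0
    fi
    # SysV init containers: an uncommented powerfail rule in inittab
    # (fields are id:runlevels:action:process).
    if [ -f "$rootfs/etc/inittab" ] &&
       grep -q '^[^#:]*:[^:]*:powerfail:' "$rootfs/etc/inittab"; then
        return 0
    fi
    return 1
}
```

For example, `has_sigpwr_handler /mnt/lxc-openvz || echo "container will not shut down cleanly"`.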

If you are too lazy to work through this yourself (a pity), you can use my version (at your own risk). It is best to record the container's MAC address in the configuration file.
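The configuration changes described above (root device, host name, fixed MAC address) can also be applied mechanically. This is a minimal sketch, assuming the copied config uses the same `lxc.rootfs`, `lxc.utsname` and `lxc.network.hwaddr` keys; `retarget_lxc_config` is a hypothetical helper name and the MAC address in the usage line is a placeholder.

```shell
#!/bin/sh
# Sketch: rewrite an LXC config read from stdin for a new container.
# Arguments: rootfs device, container host name, MAC address to pin.
# retarget_lxc_config is a hypothetical helper, not an LXC tool.
retarget_lxc_config() {
    rootfs=$1 name=$2 mac=$3
    # The last rule also uncomments a "#lxc.network.hwaddr" line.
    sed -e "s|^lxc\.rootfs[[:space:]]*=.*|lxc.rootfs = $rootfs|" \
        -e "s|^lxc\.utsname[[:space:]]*=.*|lxc.utsname = $name|" \
        -e "s|^#*[[:space:]]*lxc\.network\.hwaddr.*|lxc.network.hwaddr = $mac|"
}
```

Usage might look like `retarget_lxc_config /dev/shared_vg/lxc-openvz openvz 00:16:3e:00:00:01 < /var/lib/lxc/lxc-racktables/config > /var/lib/lxc/lxc-openvz/config`. Other lines, such as the bridge and the limits, pass through unchanged and still need a manual review.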

Transferred containers may have trouble with the console: it cannot be reached with lxc-console. I solved this with agetty (alternative Linux getty), which was already present in the transferred container, and with init settings that start processes of the form:

/sbin/agetty -8 38400 /dev/console
/sbin/agetty -8 38400 /dev/tty1

The recipe and the /etc/init/ scripts were borrowed from a freshly created clean container and converted to agetty.

/etc/init/start-ttys.conf

#
# This service starts the configured number of gettys.
#
# Do not edit this file directly. If you want to change the behavior,
# please create a file start-ttys.override and put your changes there.

start on stopped rc RUNLEVEL=[2345]

env ACTIVE_CONSOLES=/dev/tty[1-6]
env X_TTY=/dev/tty1
task
script
    . /etc/sysconfig/init
    for tty in $(echo $ACTIVE_CONSOLES); do
        [ "$RUNLEVEL" = "5" -a "$tty" = "$X_TTY" ] && continue
        initctl start tty TTY=$tty
    done
end script

/etc/init/console.conf

# console - getty
#
# This service maintains a getty on the console from the point the system is
# started until it is shut down again.

start on stopped rc RUNLEVEL=[2345]
stop on runlevel [!2345]
env container

respawn
#exec /sbin/mingetty --nohangup --noclear /dev/console
exec /sbin/agetty -8 38400 /dev/console

/etc/init/tty.conf

# tty - getty
#
# This service maintains a getty on the specified device.
#
# Do not edit this file directly. If you want to change the behavior,
# please create a file tty.override and put your changes there.

stop on runlevel [S016]

respawn
instance $TTY
#exec /sbin/mingetty --nohangup $TTY
exec /sbin/agetty -8 38400 $TTY
usage 'tty TTY=/dev/ttyX - where X is console id'

I tried to use mingetty in a container from CentOS 6.6, but it refused to work, with this error in the logs:

# /sbin/mingetty --nohangup /dev/console
console: no controlling tty: Operation not permitted

You can also work with LXC through libvirt using the lxc:/// driver, but this approach is risky: Red Hat threatens to remove its support from the distribution.

For management through libvirt, Pacemaker has the resource script ocf:heartbeat:VirtualDomain, which can manage any VM, depending on the driver. Its features include live migration for KVM. I expect that managing KVM with Pacemaker would work the same way, but I had no need for it.

Work with Docker in a cluster

Configuring Pacemaker to work with Docker is similar to configuring it for LXC, but there are some design differences.

To begin, install Docker. It is included in the RHEL / CentOS 7 distribution, so this causes no problems.

# yum install docker

Let's teach Docker to work with LVM. To do this, create the file /etc/sysconfig/docker-storage-setup with the contents:

VG=shared_vg-ex

This specifies the volume group in which Docker creates its pool. Additional parameters can also be set here (see man docker-storage-setup).

Run docker-storage-setup:

# docker-storage-setup
  Rounding up size to full physical extent 716.00 MiB
  Logical volume "docker-poolmeta" created.
  Logical volume "docker-pool" created.
  WARNING: Converting logical volume shared_vg-ex/docker-pool and shared_vg-ex/docker-poolmeta to pools data and metadata volumes.
  THIS WILL DESTROY CONTENT OF LOGICAL VOLUME (filesystem etc.)
  Converted shared_vg-ex/docker-pool to thin pool.
  Logical volume "docker-pool" changed.
# lvs | grep docker-pool
  docker-pool shared_vg-ex twi-aot--- 17.98g 14.41 2.54

Docker uses a thin-provisioned volume, which imposes a restriction on use in a cluster: such a volume cannot be active on several nodes at the same time. Configure LVM so that the volumes in the shared_vg-ex group are not activated automatically. To do this, explicitly list the groups (or individual volumes) that should be activated automatically in /etc/lvm/lvm.conf (on all nodes):

auto_activation_volume_list = [ "shared_vg" ]

Let us hand control of this volume over to Pacemaker:

# pcs resource create lvm-docker-pool LVM volgrpname=shared_vg-ex exclusive=yes
# pcs constraint order start clvmd-clone then lvm-docker-pool
# pcs constraint colocation add lvm-docker-pool with clvmd-clone

Now the volumes of the shared_vg-ex group are activated on the node where the lvm-docker-pool resource is running.

Docker will use a dedicated IP address for NAT between the containers and the external network. Fix it in the configuration:

# cat /etc/sysconfig/docker-network
DOCKER_NETWORK_OPTIONS="--ip=10.1.2.10 --fixed-cidr=172.17.0.0/16"

We will not configure a separate bridge; Docker can use the default docker0, and we only pin the network for the containers. I tried to specify a network of my own choosing for the containers, but ran into some obscure errors. Google suggested that I was not alone, so I settled for fixing the network that Docker had picked for itself. Docker also does not bring the bridge down or remove its IP address when it stops, but since the bridge is not attached to any physical interface, this is not a problem. In other configurations it must be taken into account.

For Docker to reach the Internet through a proxy server, set environment variables for it. Create a directory and the file /etc/systemd/system/docker.service.d/http-proxy.conf with the contents:

[Service]
Environment="http_proxy=http://ip_proxy:port" "https_proxy=http://ip_proxy:port" "NO_PROXY=localhost,127.0.0.0/8,dregistry"

The basic configuration is complete; now we fill the cluster with the corresponding resources.

Since a whole set of resources is responsible for Docker, it is convenient to put them in a group. All resources of a group run on the same node and start sequentially, in group order. Keep in mind, though, that when one resource of the group fails, the whole group migrates to another node, and that when you disable any resource of the group, all resources after it are stopped as well. The first resource of the group is the LVM volume created above:

# pcs resource group add docker lvm-docker-pool

Create a resource for the IP address assigned to Docker:

# pcs resource create dockerIP IPaddr2 --group docker --after lvm-docker-pool ip=10.1.2.10 cidr_netmask=24 nic=br0.12

In addition to LVM volumes, Docker also stores its data on a file system. This needs one more partition managed by Pacemaker. Since the data is needed only by a running Docker, an ordinary (non-cloned) resource is enough.

# lvcreate -L 500M -n docker-db shared_vg
# mkfs.xfs /dev/shared_vg/docker-db
# pcs resource create fs-docker-db Filesystem fstype=xfs device=/dev/shared_vg/docker-db directory=/var/lib/docker --group docker --after dockerIP

Now the Docker daemon itself can be added:

# pcs resource create dockerd systemd:docker --group docker --after fs-docker-db

After the group's resources have started successfully, check the status of Docker on the node where it landed and make sure that everything is fine.

docker info:

# docker info
Containers: 5
Images: 42
Storage Driver: devicemapper
 Pool Name: shared_vg--ex-docker--pool
 Pool Blocksize: 524.3 kB
 Backing Filesystem: xfs
 Data file:
 Metadata file:
 Data Space Used: 2.781 GB
 Data Space Total: 19.3 GB
 Data Space Available: 16.52 GB
 Metadata Space Used: 852 kB
 Metadata Space Total: 33.55 MB
 Metadata Space Available: 32.7 MB
 Udev Sync Supported: true
 Library Version: 1.02.93-RHEL7 (2015-01-28)
Execution Driver: native-0.2
Kernel Version: 3.10.0-229.7.2.el7.x86_64
Operating System: CentOS Linux 7 (Core)
CPUs: 4
Total Memory: 3.703 GiB
Name: cluster-2

You can already work with Docker in the usual way. For completeness, though, we will also set up a Docker registry inside the cluster. The registry gets a separate IP address and name, 10.1.2.11 (dregistry), and its image storage goes on a separate partition.

# lvcreate -L 10G -n docker-registry shared_vg
# mkfs.ext4 /dev/shared_vg/docker-registry
# mkdir /mnt/docker-registry
# pcs resource create docker-registry Filesystem fstype=ext4 device=/dev/shared_vg/docker-registry directory=/mnt/docker-registry --group=docker --after=dockerd
# pcs resource create registryIP IPaddr2 --group docker --after docker-registry ip=10.1.2.11 cidr_netmask=24 nic=br0.12

Create the registry container on the node where Docker is running:

# docker create -p 10.1.2.11:80:5000 -e REGISTRY_STORAGE_FILESYSTEM_ROOTDIRECTORY=/var/lib/registry -v /mnt/docker-registry:/var/lib/registry -h dregistry --name=dregistry registry:2

Here we set port forwarding into the container (10.1.2.11:80 → 5000), mount the /mnt/docker-registry directory, and set the host name and the container name.

The output of docker ps -a will show the created container, ready to run.

Let's hand control of it to Pacemaker. First, download the latest resource script:

# wget -O /usr/lib/ocf/resource.d/heartbeat/docker https://raw.githubusercontent.com/ClusterLabs/resource-agents/master/heartbeat/docker
# chmod +x /usr/lib/ocf/resource.d/heartbeat/docker

It is important to keep the resource scripts identical on all nodes; otherwise there may be surprises. The docker resource script can itself create the required containers with the given parameters, pulling the images from the registry. So one could simply keep Docker running permanently on every cluster node, with a shared registry and per-node storage, and let Pacemaker control only the individual containers; but that is less interesting, and it is redundant. I have not yet figured out what to keep even one Docker busy with.
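One way to check that the downloaded resource script really is identical everywhere is to compare its checksum across the nodes. The sketch below is an illustration, not part of the original setup: `checksums_agree` and `agent_identical` are hypothetical helper names, and passwordless root ssh between the nodes is assumed.

```shell
#!/bin/sh
# Sketch: verify that the docker resource agent is identical on all nodes.

# checksums_agree reads "checksum  label" lines (md5sum format) on stdin
# and succeeds only if every line carries the same checksum.
checksums_agree() {
    distinct=$(awk '{ print $1 }' | sort -u | wc -l | tr -d ' ')
    [ "$distinct" -eq 1 ]
}

# agent_identical collects the checksum from every node given as an
# argument. An unreachable node yields a unique token, so the check fails.
agent_identical() {
    agent=/usr/lib/ocf/resource.d/heartbeat/docker
    for node in "$@"; do
        ssh "$node" md5sum "$agent" || echo "unreachable:$node -"
    done | checksums_agree
}
```

With the node names used in this article, the call would be `agent_identical cluster-1 cluster-2 || echo "resource scripts differ"`.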

So, we hand management of the prepared container over to Pacemaker.

# pcs resource create dregistry docker reuse=true image="docker.io/registry:2" --group docker --after registryIP

reuse=true is an important parameter; without it the container is deleted after it stops. In image you must give the full coordinates of the image, including the registry and the tag. The resource script picks up the prepared container named dregistry and starts it.

Add our local registry to the Docker configuration on the cluster nodes (/etc/sysconfig/docker):

ADD_REGISTRY='--add-registry dregistry'
INSECURE_REGISTRY='--insecure-registry dregistry'

We do not need HTTPS, so we disable it for the local registry.

After this, restart the Docker service with systemctl restart docker on the node where it lives, or with pcs resource restart dockerd on any node of the cluster. Now we can use our private registry at 10.1.2.11 (dregistry).

Now, using Docker containers as an example, I will show how to work with templates in Pacemaker. Unfortunately, the capabilities of pcs here are very limited. It cannot handle templates at all, and for constraints it can create some sets, but working with them through pcs is inconvenient. Fortunately, the cluster configuration can be edited directly as an XML file:

# pcs cluster cib > /tmp/cluster.xml
# edit /tmp/cluster.xml as needed, then:
# pcs cluster cib-push /tmp/cluster.xml

Docker resources should meet the following requirements:

- they run on the same node as the docker group resources
- they start after all the docker group resources
- the containers must not depend on each other's status

Of course, this can be achieved by attaching dependencies to each container with pcs constraint, but the resulting configuration is cumbersome and hard to read.

To begin, create three experimental containers; I used a container with Nginx. The image is pulled in advance and pushed to the local registry:

# docker pull nginx:latest
# docker push nginx:latest
# pcs resource create doc-test3 docker reuse=false image="dregistry/nginx:latest" --disabled
# pcs resource create doc-test2 docker reuse=true image="dregistry/nginx:latest" --disabled
# pcs resource create doc-test docker reuse=true image="dregistry/nginx:latest" --disabled

The resources are created disabled; otherwise they would try to start at once, on whichever node they happened to land.

Add the resource sets to the freshly dumped XML. First the colocation (section constraints):

<rsc_colocation id="docker-col" score="INFINITY">
  <resource_set id="docker-col-0" require-all="false" role="Started" sequential="false">
    <resource_ref id="doc-test"/>
    <resource_ref id="doc-test2"/>
    <resource_ref id="doc-test3"/>
  </resource_set>
  <resource_set id="docker-col-1">
    <resource_ref id="docker"/>
  </resource_set>
</rsc_colocation>

This defines a colocation set (id="docker-col") with a mandatory score (score="INFINITY"). The first resource set (id="docker-col-0") has the following properties:

- its members do not depend on each other (sequential="false")
- not all of its members are required (require-all="false")
- it applies to resources in the started state (role="Started")

The containers are listed as resource_ref elements. The second set (id="docker-col-1") contains only the docker group.

The ordering set is defined in the same way:

<rsc_order id="order_doc">
  <resource_set id="order_doc-0">
    <resource_ref id="docker"/>
  </resource_set>
  <resource_set id="order_doc-1" require-all="false" sequential="false">
    <resource_ref id="doc-test"/>
    <resource_ref id="doc-test2"/>
    <resource_ref id="doc-test3"/>
  </resource_set>
</rsc_order>

Here the docker group starts first, and the containers start after it, in no particular order among themselves.

In pcs the sets look like this:

# pcs constraint --full | grep -i set
  Resource Sets:
    set docker (id:order_doc-0) set doc-test doc-test2 doc-test3 sequential=false require-all=false (id:order_doc-1) (id:order_doc)
  Resource Sets:
    set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false (id:docker-col-0) set docker (id:docker-col-1) setoptions score=INFINITY (id:docker-col)
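As the number of containers grows, both constraints can be generated instead of typed by hand. The sketch below prints the same two elements for an arbitrary list of container resources; `docker_set_constraints` is a hypothetical helper name, while the ids and attributes are the ones used in this article. The output still has to be pasted into the constraints section of the dumped XML.

```shell
#!/bin/sh
# Sketch: print the colocation and ordering sets that tie a list of
# container resources to the "docker" group.
# docker_set_constraints is a hypothetical helper, not a pcs command.
docker_set_constraints() {
    # One resource_ref line per container id given as an argument.
    refs=$(for id in "$@"; do
        printf '    <resource_ref id="%s"/>\n' "$id"
    done)
    cat <<EOF
<rsc_colocation id="docker-col" score="INFINITY">
  <resource_set id="docker-col-0" require-all="false" role="Started" sequential="false">
$refs
  </resource_set>
  <resource_set id="docker-col-1">
    <resource_ref id="docker"/>
  </resource_set>
</rsc_colocation>
<rsc_order id="order_doc">
  <resource_set id="order_doc-0">
    <resource_ref id="docker"/>
  </resource_set>
  <resource_set id="order_doc-1" require-all="false" sequential="false">
$refs
  </resource_set>
</rsc_order>
EOF
}
```

For the three test containers this would be called as `docker_set_constraints doc-test doc-test2 doc-test3`.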

Now the templates. A template for LXC containers (it goes in the resources section):

<template id="lxc-template" class="ocf" provider="heartbeat" type="lxc">
  <meta_attributes id="lxc-template-meta_attributes">
    <nvpair id="lxc-template-meta_attributes-allow-migrate" name="use_screen" value="false"/>
  </meta_attributes>
  <operations>
    <op id="lxc-template-monitor-30s" interval="30s" name="monitor" timeout="20s"/>
    <op id="lxc-template-start-0" interval="0" name="start" timeout="20s"/>
    <op id="lxc-template-stop-0" interval="0" name="stop" timeout="90s"/>
  </operations>
</template>

A resource is then created from the template:

<primitive id="lxc-racktables" template="lxc-template">
  <instance_attributes id="lxc-racktables-instance_attributes">
    <nvpair id="lxc-racktables-instance_attributes-container" name="container" value="lxc-racktables"/>
    <nvpair id="lxc-racktables-instance_attributes-config" name="config" value="/var/lib/lxc/lxc-racktables/config"/>
  </instance_attributes>
</primitive>

In pcs it looks like this:

# pcs resource show lxc-racktables
 Resource: lxc-racktables (template=lxc-template)
  Attributes: container=lxc-racktables config=/var/lib/lxc/lxc-racktables/config
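With the template in place, each further container needs only a small primitive element. The sketch below prints one for a given container name; `lxc_primitive_xml` is a hypothetical helper name, and the layout under /var/lib/lxc/<name>/config is assumed to match the one used for lxc-racktables.

```shell
#!/bin/sh
# Sketch: print a primitive that instantiates lxc-template for one
# container. lxc_primitive_xml is a hypothetical helper, not a pcs command.
lxc_primitive_xml() {
    name=$1
    cat <<EOF
<primitive id="$name" template="lxc-template">
  <instance_attributes id="$name-instance_attributes">
    <nvpair id="$name-instance_attributes-container" name="container" value="$name"/>
    <nvpair id="$name-instance_attributes-config" name="config" value="/var/lib/lxc/$name/config"/>
  </instance_attributes>
</primitive>
EOF
}
```

For the transferred container this would be `lxc_primitive_xml lxc-openvz`, with the output added to the resources section of the dumped XML.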

Pacemaker can also be managed with crmsh, which is available from opensuse.org.

The resulting cluster state:

# pcs status
Cluster name: Cluster
Last updated: Thu Jul 16 12:29:33 2015
Last change: Thu Jul 16 10:23:40 2015
Stack: corosync
Current DC: cluster-1 (1) - partition with quorum
Version: 1.1.12-a14efad
2 Nodes configured
19 Resources configured

Online: [ cluster-1 cluster-2 ]

Full list of resources:

 cluster-1.stonith (stonith:fence_ipmilan): Started cluster-2
 cluster-2.stonith (stonith:fence_ipmilan): Started cluster-1
 Clone Set: dlm-clone [dlm]
     Started: [ cluster-1 cluster-2 ]
 Clone Set: clvmd-clone [clvmd]
     Started: [ cluster-1 cluster-2 ]
 Clone Set: fs-lxc_ct-clone [fs-lxc_ct]
     Started: [ cluster-1 cluster-2 ]
 lxc-racktables (ocf::heartbeat:lxc): Started cluster-1
 Resource Group: docker
     lvm-docker-pool (ocf::heartbeat:LVM): Started cluster-2
     dockerIP (ocf::heartbeat:IPaddr2): Started cluster-2
     fs-docker-db (ocf::heartbeat:Filesystem): Started cluster-2
     dockerd (systemd:docker): Started cluster-2
     docker-registry (ocf::heartbeat:Filesystem): Started cluster-2
     registryIP (ocf::heartbeat:IPaddr2): Started cluster-2
     dregistry (ocf::heartbeat:docker): Started cluster-2
 doc-test (ocf::heartbeat:docker): Started cluster-2
 doc-test2 (ocf::heartbeat:docker): Started cluster-2
 doc-test3 (ocf::heartbeat:docker): Stopped

PCSD Status:
  cluster-1: Online
  cluster-2: Online

Daemon Status:
  corosync: active/disabled
  pacemaker: active/enabled
  pcsd: active/enabled

# pcs constraint
Location Constraints:
  Resource: cluster-1.stonith
    Disabled on: cluster-1 (score:-INFINITY)
  Resource: cluster-2.stonith
    Disabled on: cluster-2 (score:-INFINITY)
  Resource: doc-test
    Enabled on: cluster-2 (score:INFINITY) (role: Started)
Ordering Constraints:
  start dlm-clone then start clvmd-clone (kind:Mandatory)
  start clvmd-clone then start fs-lxc_ct-clone (kind:Mandatory)
  start fs-lxc_ct-clone then start lxc-racktables (kind:Mandatory)
  start clvmd-clone then start lvm-docker-pool (kind:Mandatory)
  Resource Sets:
    set docker set doc-test doc-test2 doc-test3 sequential=false require-all=false
Colocation Constraints:
  clvmd-clone with dlm-clone (score:INFINITY)
  fs-lxc_ct-clone with clvmd-clone (score:INFINITY)
  lvm-docker-pool with clvmd-clone (score:INFINITY)
  Resource Sets:
    set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false set docker setoptions score=INFINITY

Show the configuration of a resource:

# pcs resource show <resource id>

Change the parameters of a resource, for example of its start operation:

# pcs resource update <resource id> op start start-delay="3s" interval=0s timeout=90

Restart a resource:

# pcs resource restart <resource id> [node]

Show and reset the failure counter of a resource:

# pcs resource failcount show <resource id> [node]
# pcs resource failcount reset <resource id> [node]

Clean up the state and failure history of a resource:

# pcs resource cleanup [<resource id>]

Disable or enable a resource:

# pcs resource disable [<resource id>]
# pcs resource enable [<resource id>]

Move a resource to another node:

# pcs resource move <resource id> [destination node]

The move leaves a location constraint behind, for example:

Resource: docker
  Enabled on: cluster-2 (score:INFINITY) (role: Started) (id:cli-prefer-docker)

Show all constraints together with their ids:

# pcs constraint --full

Remove a constraint by its id:

# pcs constraint remove <constraint id>
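Constraints left behind by pcs resource move carry ids that start with cli-prefer-, as in the example above, so they can be found and removed in one pass. This is a sketch under that assumption; `cli_prefer_ids` and `clear_move_constraints` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: find and remove the location constraints created by
# "pcs resource move".

# cli_prefer_ids reads "pcs constraint --full" output on stdin and
# prints one cli-prefer-* constraint id per line.
cli_prefer_ids() {
    grep -o 'id:cli-prefer-[^)]*' | sed 's/^id://'
}

# clear_move_constraints removes every constraint found that way.
clear_move_constraints() {
    pcs constraint --full | cli_prefer_ids | while read -r id; do
        pcs constraint remove "$id"
    done
}
```

Running `pcs constraint --full | cli_prefer_ids` first shows what would be removed before anything is touched.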

Edit the cluster configuration directly:

# pcs cluster cib > /tmp/cluster.xml
# pcs cluster cib-push /tmp/cluster.xml
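Since a hand-edited XML file is easy to break, it can be validated before it is pushed. The sketch below uses crm_verify, which ships with Pacemaker, and pushes only when the check passes; `push_cib_checked` is a hypothetical wrapper name.

```shell
#!/bin/sh
# Sketch: push an edited CIB file only if crm_verify accepts it.
# push_cib_checked is a hypothetical wrapper, not a pcs command.
push_cib_checked() {
    file=$1
    # crm_verify exits non-zero when the configuration has errors;
    # -V prints the details, -x names the XML file to check.
    if crm_verify -V -x "$file"; then
        pcs cluster cib-push "$file"
    else
        echo "not pushing $file: crm_verify reported problems" >&2
        return 1
    fi
}
```

It would be used in place of the second command above: `push_cib_checked /tmp/cluster.xml`.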


<rsc_colocation id="docker-col" score="INFINITY"> <resource_set id="docker-col-0" require-all="false" role="Started" sequential="false"> <resource_ref id="doc-test"/> <resource_ref id="doc-test2"/> <resource_ref id="doc-test3"/> </resource_set> <resource_set id="docker-col-1"> <resource_ref id="docker"/> </resource_set> </rsc_colocation> ( colocation set , id="docker-col" ) ( score="INFINITY" ). ( id="docker-col-0" ) :

( sequential="false" ) ( require-all="false" ) ( role="Started" )

resource_ref , . role="Started" .

( id="docker-col-1" ), docker .

role , ( ).

Ordering set , :

<rsc_order id="order_doc"> <resource_set id="order_doc-0"> <resource_ref id="docker"/> </resource_set> <resource_set id="order_doc-1" require-all="false" sequential="false"> <resource_ref id="doc-test"/> <resource_ref id="doc-test2"/> <resource_ref id="doc-test3"/> </resource_set> </rsc_order> , docker , .

, / . . , - . pcs :

# pcs constraint --full | grep -i set Resource Sets: set docker (id:order_doc-0) set doc-test doc-test2 doc-test3 sequential=false require-all=false (id:order_doc-1) (id:order_doc) Resource Sets: set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false (id:docker-col-0) set docker (id:docker-col-1) setoptions score=INFINITY (id:docker-col)

. LXC ( ):

<template id="lxc-template" class="ocf" provider="heartbeat" type="lxc"> <meta_attributes id="lxc-template-meta_attributes"> <nvpair id="lxc-template-meta_attributes-allow-migrate" name="use_screen" value="false"/> </meta_attributes> <operations> <op id="lxc-template-monitor-30s" interval="30s" name="monitor" timeout="20s"/> <op id="lxc-template-start-0" interval="0" name="start" timeout="20s"/> <op id="lxc-template-stop-0" interval="0" name="start" timeout="90s"/> </operations> </template>

. :

<primitive id="lxc-racktables" template="lxc-template"> <instance_attributes id="lxc-racktables-instance_attributes"> <nvpair id="lxc-racktables-instance_attributes-container" name="container" value="lxc-racktables"/> <nvpair id="lxc-racktables-instance_attributes-config" name="config" value="/var/lib/lxc/lxc-racktables/config"/> </instance_attributes> </primitive>

:

# pcs resource show lxc-racktables Resource: lxc-racktables (template=lxc-template) Attributes: container=lxc-racktables config=/var/lib/lxc/lxc-racktables/config

pcs .

LXC , Docker-.

Pacemaker- crmsh opensuse.org , , , .

:

# pcs status Cluster name: Cluster

Last updated: Thu Jul 16 12:29:33 2015

Last change: Thu Jul 16 10:23:40 2015

Stack: corosync

Current DC: cluster-1 (1) - partition with quorum

Version: 1.1.12-a14efad

2 Nodes configured

19 Resources configured

Online: [ cluster-1 cluster-2 ]

Full list of resources:

cluster-1.stonith (stonith:fence_ipmilan): Started cluster-2

cluster-2.stonith (stonith:fence_ipmilan): Started cluster-1

Clone Set: dlm-clone [dlm]

Started: [ cluster-1 cluster-2 ]

Clone Set: clvmd-clone [clvmd]

Started: [ cluster-1 cluster-2 ]

Clone Set: fs-lxc_ct-clone [fs-lxc_ct]

Started: [ cluster-1 cluster-2 ]

lxc-racktables (ocf::heartbeat:lxc): Started cluster-1

Resource Group: docker

lvm-docker-pool (ocf::heartbeat:LVM): Started cluster-2

dockerIP (ocf::heartbeat:IPaddr2): Started cluster-2

fs-docker-db (ocf::heartbeat:Filesystem): Started cluster-2

dockerd (systemd:docker): Started cluster-2

docker-registry (ocf::heartbeat:Filesystem): Started cluster-2

registryIP (ocf::heartbeat:IPaddr2): Started cluster-2

dregistry (ocf::heartbeat:docker): Started cluster-2

doc-test (ocf::heartbeat:docker): Started cluster-2

doc-test2 (ocf::heartbeat:docker): Started cluster-2

doc-test3 (ocf::heartbeat:docker): Stopped

PCSD Status:

cluster-1: Online

cluster-2: Online

Daemon Status:

corosync: active/disabled

pacemaker: active/enabled

pcsd: active/enabled

# pcs constraint Location Constraints:

Resource: cluster-1.stonith

Disabled on: cluster-1 (score:-INFINITY)

Resource: cluster-2.stonith

Disabled on: cluster-2 (score:-INFINITY)

Resource: doc-test

Enabled on: cluster-2 (score:INFINITY) (role: Started)

Ordering Constraints:

start dlm-clone then start clvmd-clone (kind:Mandatory)

start clvmd-clone then start fs-lxc_ct-clone (kind:Mandatory)

start fs-lxc_ct-clone then start lxc-racktables (kind:Mandatory)

start clvmd-clone then start lvm-docker-pool (kind:Mandatory)

Resource Sets:

set docker set doc-test doc-test2 doc-test3 sequential=false require-all=false

Colocation Constraints:

clvmd-clone with dlm-clone (score:INFINITY)

fs-lxc_ct-clone with clvmd-clone (score:INFINITY)

lvm-docker-pool with clvmd-clone (score:INFINITY)

Resource Sets:

set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false set docker setoptions score=INFINITY

.

:

# pcs resource show <resource id>

, :

# pcs resource update <resource id> op start start-delay="3s" interval=0s timeout=90 , , update .

# pcs resource restart <resource id> [node] clone- , , .

:

# pcs resource failcount show <resource id> [node] # pcs resource failcount reset <resource id> [node] , Pacemaker - .

:

# pcs resource cleanup [<resource id>] Pacemaker .

/ - :

# pcs resource disable [<resource id>] # pcs resource enable [<resource id>] / , .

:

# pcs resource move <resource id> [destination node] - , . , , . , constraint :

Resource: docker Enabled on: cluster-2 (score:INFINITY) (role: Started) (id:cli-prefer-docker) c . .

:

# pcs constraint –full

- id, :

# pcs constraint remove <constraint id>

:

# pcs cluster cib > /tmp/cluster.xml # pcs cluster cib-push /tmp/cluster.xml<source lang="bash">

C

Pacemaker, Clusters from Scratch Configuration Explained Pacemaker: Creating LVM Volumes in a Cluster Linux Containers with libvirt-lxc (deprecated) High Availability Add-On Reference High Availability Add-On Administration Docker Docs Get Started with Docker Formatted Container Images on Red Hat Systems LXC 1.0. GitHub ClusterLabs CRIU):( colocation set ,

<rsc_colocation id="docker-col" score="INFINITY"> <resource_set id="docker-col-0" require-all="false" role="Started" sequential="false"> <resource_ref id="doc-test"/> <resource_ref id="doc-test2"/> <resource_ref id="doc-test3"/> </resource_set> <resource_set id="docker-col-1"> <resource_ref id="docker"/> </resource_set> </rsc_colocation>id="docker-col") (score="INFINITY"). (id="docker-col-0") :

(sequential="false") (require-all="false") (role="Started")

resource_ref , .role="Started".

(id="docker-col-1"),docker.

role , ( ).

Ordering set , :<rsc_order id="order_doc"> <resource_set id="order_doc-0"> <resource_ref id="docker"/> </resource_set> <resource_set id="order_doc-1" require-all="false" sequential="false"> <resource_ref id="doc-test"/> <resource_ref id="doc-test2"/> <resource_ref id="doc-test3"/> </resource_set> </rsc_order>,docker, .

, / . . , - .pcs:# pcs constraint --full | grep -i set Resource Sets: set docker (id:order_doc-0) set doc-test doc-test2 doc-test3 sequential=false require-all=false (id:order_doc-1) (id:order_doc) Resource Sets: set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false (id:docker-col-0) set docker (id:docker-col-1) setoptions score=INFINITY (id:docker-col)

. LXC ():

<template id="lxc-template" class="ocf" provider="heartbeat" type="lxc"> <meta_attributes id="lxc-template-meta_attributes"> <nvpair id="lxc-template-meta_attributes-allow-migrate" name="use_screen" value="false"/> </meta_attributes> <operations> <op id="lxc-template-monitor-30s" interval="30s" name="monitor" timeout="20s"/> <op id="lxc-template-start-0" interval="0" name="start" timeout="20s"/> <op id="lxc-template-stop-0" interval="0" name="start" timeout="90s"/> </operations> </template>

. :<primitive id="lxc-racktables" template="lxc-template"> <instance_attributes id="lxc-racktables-instance_attributes"> <nvpair id="lxc-racktables-instance_attributes-container" name="container" value="lxc-racktables"/> <nvpair id="lxc-racktables-instance_attributes-config" name="config" value="/var/lib/lxc/lxc-racktables/config"/> </instance_attributes> </primitive>

:# pcs resource show lxc-racktables Resource: lxc-racktables (template=lxc-template) Attributes: container=lxc-racktables config=/var/lib/lxc/lxc-racktables/configpcs.

LXC , Docker-.

Pacemaker-crmshopensuse.org , , , .

:

# pcs status Cluster name: Cluster

Last updated: Thu Jul 16 12:29:33 2015

Last change: Thu Jul 16 10:23:40 2015

Stack: corosync

Current DC: cluster-1 (1) - partition with quorum

Version: 1.1.12-a14efad

2 Nodes configured

19 Resources configured

Online: [ cluster-1 cluster-2 ]

Full list of resources:

cluster-1.stonith (stonith:fence_ipmilan): Started cluster-2

cluster-2.stonith (stonith:fence_ipmilan): Started cluster-1

Clone Set: dlm-clone [dlm]

Started: [ cluster-1 cluster-2 ]

Clone Set: clvmd-clone [clvmd]

Started: [ cluster-1 cluster-2 ]

Clone Set: fs-lxc_ct-clone [fs-lxc_ct]

Started: [ cluster-1 cluster-2 ]

lxc-racktables (ocf::heartbeat:lxc): Started cluster-1

Resource Group: docker

lvm-docker-pool (ocf::heartbeat:LVM): Started cluster-2

dockerIP (ocf::heartbeat:IPaddr2): Started cluster-2

fs-docker-db (ocf::heartbeat:Filesystem): Started cluster-2

dockerd (systemd:docker): Started cluster-2

docker-registry (ocf::heartbeat:Filesystem): Started cluster-2

registryIP (ocf::heartbeat:IPaddr2): Started cluster-2

dregistry (ocf::heartbeat:docker): Started cluster-2

doc-test (ocf::heartbeat:docker): Started cluster-2

doc-test2 (ocf::heartbeat:docker): Started cluster-2

doc-test3 (ocf::heartbeat:docker): Stopped

PCSD Status:

cluster-1: Online

cluster-2: Online

Daemon Status:

corosync: active/disabled

pacemaker: active/enabled

pcsd: active/enabled

# pcs constraint Location Constraints:

Resource: cluster-1.stonith

Disabled on: cluster-1 (score:-INFINITY)

Resource: cluster-2.stonith

Disabled on: cluster-2 (score:-INFINITY)

Resource: doc-test

Enabled on: cluster-2 (score:INFINITY) (role: Started)

Ordering Constraints:

start dlm-clone then start clvmd-clone (kind:Mandatory)

start clvmd-clone then start fs-lxc_ct-clone (kind:Mandatory)

start fs-lxc_ct-clone then start lxc-racktables (kind:Mandatory)

start clvmd-clone then start lvm-docker-pool (kind:Mandatory)

Resource Sets:

set docker set doc-test doc-test2 doc-test3 sequential=false require-all=false

Colocation Constraints:

clvmd-clone with dlm-clone (score:INFINITY)

fs-lxc_ct-clone with clvmd-clone (score:INFINITY)

lvm-docker-pool with clvmd-clone (score:INFINITY)

Resource Sets:

set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false set docker setoptions score=INFINITY

.

:# pcs resource show <resource id>

, :# pcs resource update <resource id> op start start-delay="3s" interval=0s timeout=90, , update .# pcs resource restart <resource id> [node]clone- , , .

:# pcs resource failcount show <resource id> [node] # pcs resource failcount reset <resource id> [node], Pacemaker - .

:# pcs resource cleanup [<resource id>]Pacemaker .

/ - :# pcs resource disable [<resource id>] # pcs resource enable [<resource id>]/ , .

:# pcs resource move <resource id> [destination node]- , . , , . , constraint :Resource: docker Enabled on: cluster-2 (score:INFINITY) (role: Started) (id:cli-prefer-docker)c . .

:# pcs constraint –full

- id, :# pcs constraint remove <constraint id>

:# pcs cluster cib > /tmp/cluster.xml # pcs cluster cib-push /tmp/cluster.xml<source lang="bash">

C

Pacemaker, Clusters from Scratch Configuration Explained Pacemaker: Creating LVM Volumes in a Cluster Linux Containers with libvirt-lxc (deprecated) High Availability Add-On Reference High Availability Add-On Administration Docker Docs Get Started with Docker Formatted Container Images on Red Hat Systems LXC 1.0. GitHub ClusterLabs CRIU):( colocation set ,

<rsc_colocation id="docker-col" score="INFINITY"> <resource_set id="docker-col-0" require-all="false" role="Started" sequential="false"> <resource_ref id="doc-test"/> <resource_ref id="doc-test2"/> <resource_ref id="doc-test3"/> </resource_set> <resource_set id="docker-col-1"> <resource_ref id="docker"/> </resource_set> </rsc_colocation>id="docker-col") (score="INFINITY"). (id="docker-col-0") :

(sequential="false") (require-all="false") (role="Started")

resource_ref , .role="Started".

(id="docker-col-1"),docker.

role , ( ).

Ordering set , :<rsc_order id="order_doc"> <resource_set id="order_doc-0"> <resource_ref id="docker"/> </resource_set> <resource_set id="order_doc-1" require-all="false" sequential="false"> <resource_ref id="doc-test"/> <resource_ref id="doc-test2"/> <resource_ref id="doc-test3"/> </resource_set> </rsc_order>,docker, .

In pcs these sets are displayed as follows:
# pcs constraint --full | grep -i set
  Resource Sets:
    set docker (id:order_doc-0) set doc-test doc-test2 doc-test3 sequential=false require-all=false (id:order_doc-1) (id:order_doc)
  Resource Sets:
    set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false (id:docker-col-0) set docker (id:docker-col-1) setoptions score=INFINITY (id:docker-col)
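The same two constraints can probably be created without editing XML. The sketch below assumes a pcs version whose `constraint colocation set` and `constraint order set` commands accept per-set options and `setoptions`; the wrapper name `create_docker_sets` is hypothetical, while the resource names are the ones used above. pcs generates the constraint ids itself, so they will differ from `docker-col` and `order_doc`.

```shell
# Create the colocation set and the ordering set for the Docker containers.
# Assumption: this pcs version supports set options on the command line.
create_docker_sets() {
    # Containers are colocated with the docker group, not with each other.
    pcs constraint colocation set doc-test doc-test2 doc-test3 \
        role=Started sequential=false require-all=false \
        set docker setoptions score=INFINITY &&
    # The docker group starts first, then the containers in any order.
    pcs constraint order set docker \
        set doc-test doc-test2 doc-test3 sequential=false require-all=false
}
```

After running it, `pcs constraint --full | grep -i set` should list both sets; if the command is rejected, editing the exported CIB remains the fallback.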

A resource template for LXC containers:

<template id="lxc-template" class="ocf" provider="heartbeat" type="lxc">
  <meta_attributes id="lxc-template-meta_attributes">
    <nvpair id="lxc-template-meta_attributes-allow-migrate" name="use_screen" value="false"/>
  </meta_attributes>
  <operations>
    <op id="lxc-template-monitor-30s" interval="30s" name="monitor" timeout="20s"/>
    <op id="lxc-template-start-0" interval="0" name="start" timeout="20s"/>
    <op id="lxc-template-stop-0" interval="0" name="stop" timeout="90s"/>
  </operations>
</template>

A resource based on this template:

<primitive id="lxc-racktables" template="lxc-template">
  <instance_attributes id="lxc-racktables-instance_attributes">
    <nvpair id="lxc-racktables-instance_attributes-container" name="container" value="lxc-racktables"/>
    <nvpair id="lxc-racktables-instance_attributes-config" name="config" value="/var/lib/lxc/lxc-racktables/config"/>
  </instance_attributes>
</primitive>

In pcs the resource is displayed as follows:
# pcs resource show lxc-racktables
 Resource: lxc-racktables (template=lxc-template)
  Attributes: container=lxc-racktables config=/var/lib/lxc/lxc-racktables/config
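When several containers share the template, the primitive element can be generated instead of typed by hand. The helper below is a sketch: its name is hypothetical, and it assumes that each container keeps its config at /var/lib/lxc/<name>/config, as lxc-racktables does. The output is meant to be pasted into the resources section of an exported CIB before pushing it back.

```shell
# Print a <primitive> element that instantiates lxc-template
# for the container whose name is given as the first argument.
lxc_primitive_xml() {
    ct_name=$1
    cat <<EOF
<primitive id="$ct_name" template="lxc-template">
  <instance_attributes id="$ct_name-instance_attributes">
    <nvpair id="$ct_name-instance_attributes-container" name="container" value="$ct_name"/>
    <nvpair id="$ct_name-instance_attributes-config" name="config" value="/var/lib/lxc/$ct_name/config"/>
  </instance_attributes>
</primitive>
EOF
}
```

For example, `lxc_primitive_xml lxc-racktables` reproduces the primitive shown above.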

LXC , Docker-.

Pacemaker can also be managed with crmsh, packages of which are available from opensuse.org.

The resulting cluster status:

# pcs status
Cluster name: Cluster
Last updated: Thu Jul 16 12:29:33 2015
Last change: Thu Jul 16 10:23:40 2015
Stack: corosync
Current DC: cluster-1 (1) - partition with quorum
Version: 1.1.12-a14efad
2 Nodes configured
19 Resources configured

Online: [ cluster-1 cluster-2 ]

Full list of resources:

 cluster-1.stonith (stonith:fence_ipmilan): Started cluster-2
 cluster-2.stonith (stonith:fence_ipmilan): Started cluster-1
 Clone Set: dlm-clone [dlm]
     Started: [ cluster-1 cluster-2 ]
 Clone Set: clvmd-clone [clvmd]
     Started: [ cluster-1 cluster-2 ]
 Clone Set: fs-lxc_ct-clone [fs-lxc_ct]
     Started: [ cluster-1 cluster-2 ]
 lxc-racktables (ocf::heartbeat:lxc): Started cluster-1
 Resource Group: docker
     lvm-docker-pool (ocf::heartbeat:LVM): Started cluster-2
     dockerIP (ocf::heartbeat:IPaddr2): Started cluster-2
     fs-docker-db (ocf::heartbeat:Filesystem): Started cluster-2
     dockerd (systemd:docker): Started cluster-2
     docker-registry (ocf::heartbeat:Filesystem): Started cluster-2
     registryIP (ocf::heartbeat:IPaddr2): Started cluster-2
     dregistry (ocf::heartbeat:docker): Started cluster-2
 doc-test (ocf::heartbeat:docker): Started cluster-2
 doc-test2 (ocf::heartbeat:docker): Started cluster-2
 doc-test3 (ocf::heartbeat:docker): Stopped

PCSD Status:
  cluster-1: Online
  cluster-2: Online

Daemon Status:
  corosync: active/disabled
  pacemaker: active/enabled
  pcsd: active/enabled
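In a long status listing a stopped resource such as doc-test3 is easy to overlook. A small filter can pull such entries out. This is a sketch with a hypothetical function name, and it assumes the line format shown in the status output above, where the agent in parentheses is followed by a colon and the state.

```shell
# Print the names of resources that "pcs status" reports as Stopped.
# Assumption: each resource is on one line, "<name> (<agent>): <state>".
stopped_resources() {
    pcs status | awk '/\):[[:space:]]+Stopped/ { print $1 }'
}
```

The pattern requires the closing parenthesis of the agent name, so lines that merely contain the word "Stopped" elsewhere are not matched.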

# pcs constraint
Location Constraints:
  Resource: cluster-1.stonith
    Disabled on: cluster-1 (score:-INFINITY)
  Resource: cluster-2.stonith
    Disabled on: cluster-2 (score:-INFINITY)
  Resource: doc-test
    Enabled on: cluster-2 (score:INFINITY) (role: Started)
Ordering Constraints:
  start dlm-clone then start clvmd-clone (kind:Mandatory)
  start clvmd-clone then start fs-lxc_ct-clone (kind:Mandatory)
  start fs-lxc_ct-clone then start lxc-racktables (kind:Mandatory)
  start clvmd-clone then start lvm-docker-pool (kind:Mandatory)
  Resource Sets:
    set docker set doc-test doc-test2 doc-test3 sequential=false require-all=false
Colocation Constraints:
  clvmd-clone with dlm-clone (score:INFINITY)
  fs-lxc_ct-clone with clvmd-clone (score:INFINITY)
  lvm-docker-pool with clvmd-clone (score:INFINITY)
  Resource Sets:
    set doc-test doc-test2 doc-test3 role=Started sequential=false require-all=false set docker setoptions score=INFINITY

