Overview of Docker Engine from 1.0 to 1.7. Introduction to Docker Compose

In previous articles we discussed what Docker is, how to use a Dockerfile, and how containers communicate with each other.

Those articles were written for Docker 1.1.2. Since then, many useful features have appeared in Docker, and we discuss them in this article. We will also take a closer look at Docker Compose, a utility that lets you define a multi-container application with all its dependencies in one file and run it with a single command. The examples are demonstrated on a cloud server in InfoboxCloud.

We recommend creating a virtual machine with CentOS 7 to install Docker in InfoboxCloud . Using CoreOS / Fedora Atomic / Ubuntu Snappy in production is still too risky. A virtual machine is now needed for Docker to work, so when creating a server, be sure to check the “Allow OS kernel management” box.

')

After creating a server with CentOS 7, connect to it via SSH .

We have prepared a script that will allow you to install Docker and useful utilities for working with Docker on such a server. The necessary settings will be made automatically.

Run the command to install Docker and Compose:

To update, run the script:

In this section, we will not touch on fixing bugs and improving the docker architecture, but will only talk about the main new features for the user. This will help update your Docker features.

This release includes significant security enhancements.

Now a set of docker utilities has finally been formed into separate Docker Engine, Registry, Compose, Swarm and Machine. About Compose described in the next section of the article. The remaining utilities will be discussed in the following articles.

This release includes rewritten network subsystem and volume subsystem, as well as increased stability and the ZFS driver.

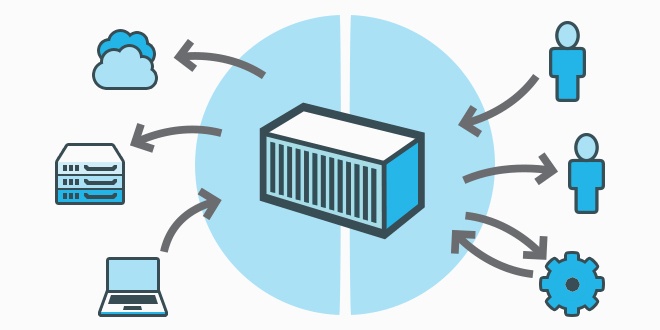

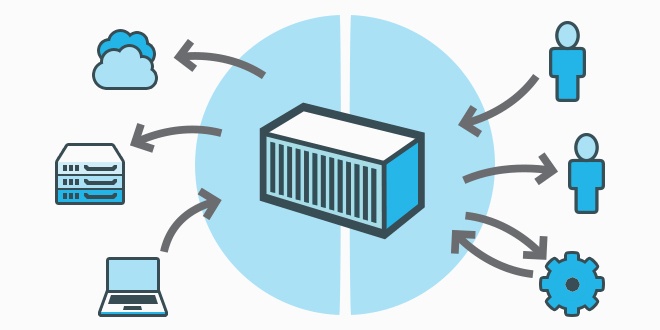

Distributed applications usually consist of several small services that work together. Docker converts these applications into individual containers and links together. Instead of building, running, and managing each container, Docker Compose allows you to define a multi-container application with all dependencies in one file and launch this application with one command up . Keeping the structure and configuration of the application together allows you to simply and repeatedly run it everywhere.

Using Compose usually consists of three steps:

With Compose, you can manage the life cycle of your application:

The docker-compose.yml file contains the rules for deploying applications in docker.

Each service in docker – compose.yml must include at least one image ( image ) or a build script. All other parameters are optional, by analogy with the parameters of the docker run command .

As with the docker run , the options specified in the Dockerfile (such as CMD, EXPOSE, VOLUME, ENV) are executed by default, there is no need to repeat them in docker-compose.yml.

The list of available docker – compose.yml parameters is described in the official documentation .

Let's move straight from theory to practice and write docker-compose for apache with PHP and MySQL.

Suppose we created a server in InfoboxCloud and installed docker and compose using one command specified at the beginning of the article. Now just copy the lamp folder with docker – compose.xml, the site and database folders and run the command

The LAMP stack will rise and our site will already work in it. Just like that! Do you want to move the site to another server or deploy it on ten servers? Just copy the LAMP folder to another server and run docker – compose again.

Thus, docker and compose allow you to package applications and entire systems into prepared environments and prescribe the configuration of their deployment. In this case, the deployment takes place in one command and even an unprepared user can do this. Deploying even complex systems in InfoboxCloud (and generally IaaS clouds) happens with the simplicity of PaaS, but you control all aspects of the infrastructure. This is the only deployment method that uses OnlyOffice.

In this case, see which containers are created. To do this, use the command:

You will see a container with a strange name other than the containers described in docker-compose.yml . In this case, the name "335698fa2d_lamp_db_1".

Delete it with the command:

After that, compose will perform the redeployment successfully.

In the following articles we will look at other useful utilities for Docker. If you find a mistake in the article or you have a question, write to us in the LAN or by email . If you can not leave comments on Habré - write in the Community .

Successful use of Docker!

These articles were written on Docker 1.1.2. Since then, a lot of useful things have appeared in Docker, which we will discuss in this article. We will also take a closer look at Docker Compose, a utility that allows you to define a multi-container application with all dependencies in one file and run this application in one command. Examples will be demonstrated on the cloud server in InfoboxCloud .

Installing Docker and Compose in InfoboxCloud

We recommend installing Docker in InfoboxCloud on a virtual machine running CentOS 7. Using CoreOS, Fedora Atomic, or Ubuntu Snappy in production is still too risky. Docker now requires a full virtual machine, so when creating the server be sure to check the “Allow OS kernel management” box.

How to create a server in InfoboxCloud for Docker

If you do not yet have access to InfoboxCloud, order it.

Using the cloud is convenient because there is no subscription fee. When you register, you top up your account with at least 500 rubles (much like buying a SIM card from a mobile operator) and then use the cloud as needed. You can quickly estimate the approximate monthly cost of a cloud server here (enter realistic values, for example a CPU frequency of 2 GHz, not 2000 GHz). Billing is hourly, and the amount is held on your account. By using autoscaling or changing the server's resources manually, you pay only for the resources you need and can get more whenever necessary.

After registration, you will receive the control panel credentials by email. Log in to the control panel at: https://panel.infobox.ru

In the “Cloud Infrastructure” section of your subscription, click “New Server” (if necessary, switch subscriptions using the drop-down menu in the upper right corner).

Set the required server parameters. Be sure to allocate a public IP address to the server and check the “Allow OS kernel management” box, as shown in the screenshot below.

In the list of available operating systems, select CentOS 7 and complete server creation.

After that, the server access credentials will be sent to your email.

After creating the CentOS 7 server, connect to it via SSH.

We have prepared a script that will allow you to install Docker and useful utilities for working with Docker on such a server. The necessary settings will be made automatically.

Run the command to install Docker and Compose:

bash <(curl -s http://repository.sandbox.infoboxcloud.ru/scripts/docker/centos7/install.sh)

What the script does

1. Updates the OS.

2. Stops postfix and disables its autostart. Postfix occupies port 25, which your Docker services may need.

3. Adds the official Docker repository and installs docker-engine.

5. Adds the EPEL repository, installs pip, and installs Docker Compose with pip.

6. Starts the Docker service and enables it at boot.

Update Docker and Compose in InfoboxCloud

To update, run the script:

bash <(curl -s http://repository.sandbox.infoboxcloud.ru/scripts/docker/centos7/update.sh)

New Docker features from version 1.0

In this section we do not cover bug fixes or improvements to the Docker architecture; we only describe the main new user-facing features. This should help you bring your knowledge of Docker's capabilities up to date.

1.1

.dockerignore support

You can add a .dockerignore file next to your Dockerfile, and Docker will skip the files and directories listed in it when sending the build context to the Docker daemon.
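As a minimal sketch, the snippet below assembles a throwaway build context with a .dockerignore file. The directory layout, file names, and ignore patterns are illustrative assumptions, not part of the setup described in this article.

```shell
# Throwaway build context; every name below is a hypothetical example.
ctx="$(mktemp -d)"
mkdir -p "$ctx/.git" "$ctx/logs"
echo "debug output" > "$ctx/logs/app.log"
printf 'FROM busybox\nCOPY . /app\n' > "$ctx/Dockerfile"

# One pattern per line; matching paths are not sent to the Docker daemon.
cat > "$ctx/.dockerignore" <<'EOF'
.git
logs
*.tmp
EOF

# Show the resulting ignore list.
cat "$ctx/.dockerignore"
```

Running docker build -t dockerignore-demo "$ctx" afterwards should send the Dockerfile to the daemon while leaving out .git and logs, which keeps the context small.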

Containers are paused during commit

Previously, committing a running container was not recommended, because the container kept working during the commit and the result could be inconsistent (for example, if data was being written while the commit was in progress). Now containers are paused for the duration of the commit.

If you need to disable this feature, use the following command:

docker commit --pause=false <container_id>

Display the last lines of logs

Now you can display the last lines of the container logs.

Previously, to view the logs, you used a command that prints the entire log:

docker logs <container_id>

Now you can display, for example, the last 10 lines of the log with the command:

docker logs --tail 10 <container_id>

You can also follow new log entries as they arrive without reading the entire log:

docker logs --tail 0 -f <container_id>

Support for a tar file as the build context

Now you can pass the tar archive into the docker build process as a context.
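A tar context can be assembled by hand, as in the following sketch. The file names and the image contents are hypothetical; the only requirement illustrated here is that the Dockerfile sits at the root of the archive.

```shell
# Throwaway context; the file names are hypothetical.
ctx="$(mktemp -d)"
printf 'FROM busybox\nCMD ["echo", "built from a tar context"]\n' > "$ctx/Dockerfile"

# Pack the context so that the Dockerfile is at the root of the archive.
archive="$(mktemp -d)/context.tar"
tar -C "$ctx" -cf "$archive" Dockerfile

# List the archive to confirm what would be sent as the build context.
tar -tf "$archive"
```

The archive can then be fed to the build on standard input, for instance with docker build -t tar-demo - < "$archive".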

The archive can be used to automate image builds, for example:

cat context.tar | docker build -

or

docker run builder_image | docker build -

The ability to mount the entire host file system into a container

You can now pass the root of the host file system, /, to the -v (--volume) flag.

For example:

docker run -v /:/my_host:ro ubuntu ls /my_host

However, the host file system cannot be mounted at the root (/) of the container's file system; it can only be mounted to a directory inside the container.

1.2

Container Restart Policies

Previously, you had to write your own startup script for each container so that it would run when the system boots. Now the Docker service itself can restart containers.

The docker run command has a new --restart flag with the following policies:

- no - do not restart the container if it exits (the default).

- on-failure - restart the container if it exits with a non-zero code (that is, abnormally). You can also limit the number of restart attempts (on-failure:5).

- always - always restart the container regardless of the exit code.

The --restart flag of the docker daemon is now deprecated in favor of these new options.

For example, a Redis container that is restarted indefinitely for as long as it exists:

docker run --restart=always redis

Another example: if the container exits abnormally, try to restart it up to 5 times:

docker run --restart=on-failure:5 redis

--cap-add and --cap-drop

Prior to version 1.2, a container could either get all privileges or only the privileges from a whitelist, with everything else denied. The --privileged flag gave the container all privileges regardless of the whitelist. Doing this in production is not recommended and is genuinely unsafe: it is as if you were working directly on the host.

Version 1.2 adds two new flags for docker run, --cap-add and --cap-drop, which give you more precise control over what the container is allowed to do.

For example, to change the state of the container's network interfaces:

docker run --cap-add=NET_ADMIN ubuntu sh -c "ip link set eth0 down"

To prohibit chown in a container:

docker run --cap-drop=CHOWN ...

To allow everything except mknod:

docker run --cap-add=ALL --cap-drop=MKNOD ...

--device

Previously, you could use devices inside containers only by mounting them with -v in a --privileged container. Now docker run has a --device flag, which lets you use a device without the --privileged flag.

For example, using a device inside a container:

docker run --device=/dev/snd:/dev/snd ...

Writable /etc/hosts, /etc/hostname, and /etc/resolv.conf

Now you can edit /etc/hosts, /etc/hostname, and /etc/resolv.conf in a running container. This is useful if you need to install bind or other services that may overwrite these files.

Note that changes to these files are not saved when an image is built and will not appear in the resulting images. The changes take effect only in running containers.

1.3

Running an additional process through docker exec

Previously, to get sshd (or a similar service) working in a container, you had to start the sshd process alongside the running application, which added risk and overhead. Access to the container is very important for debugging, so a new docker exec command has been added that lets the user start a process inside a container through the Docker API and CLI.

For example, this command creates a bash session inside a container:

docker exec -it ubuntu_bash bash

The main recommended approach, “one application per container”, has not changed, but the developers have accommodated users who sometimes need auxiliary processes next to the application.

Container lifecycle enhancements with docker create

The docker run <image_name> command creates a container and starts a process in it. Many users asked to separate these tasks for more precise control over the container life cycle. The docker create command makes this possible.

For example:

docker create -t -i fedora bash

This creates a container layer and prints the container ID, but does not start the container. To start it, run:

docker start -a -i <container_id>

Thus, the docker create command gives the user or a process the flexibility to use the docker start and docker stop commands to manage the life cycle.

Security Options --security-opt

This release adds a new flag, --security-opt, which lets users set custom SELinux and AppArmor labels and profiles.

For example, below is a policy that allows the container process to listen only on Apache ports:

docker run --security-opt label:type:svirt_apache -i -t centos bash

One advantage of this feature is that users can now run Docker containers inside Docker containers without using privileged mode on kernels that support SELinux and AppArmor. By not granting the container --privileged rights, you significantly reduce the attack surface.

1.4

This release includes significant security enhancements.

1.5

IPv6 support

Now you can allocate an IPv6 address to each container with the new --ipv6 flag. Inside the container, you can resolve IPv6 addresses.

Read-only containers

With the --read-only flag, you can make the container's file system read-only. This limits the places in the container where applications can write files. Combined with volumes, this ensures that containers write data only to controlled, known locations.
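The combination of a read-only root and a writable volume can be sketched as a small helper. The function name, the busybox image, and the probe file paths are assumptions chosen for illustration.

```shell
# Hypothetical helper: start a throwaway busybox container with a read-only
# root file system and one writable host directory mounted at /data.
# $1 is an absolute path to a directory on the host.
run_read_only() {
  docker run --rm --read-only -v "$1":/data busybox \
    sh -c 'echo ok > /data/probe; touch /etc/probe'
}
```

Called as run_read_only /srv/demo-data, the write to /data is expected to succeed because it lands on the volume, while the touch under /etc should be refused because the root file system is read-only.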

Statistics API

A statistics API has been added that provides data on a container's CPU, memory, network I/O, and disk I/O usage. This makes it possible to monitor containers.
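One way to look at these statistics is to query the remote API over the daemon's Unix socket. The helper below is a sketch that assumes the default socket path /var/run/docker.sock and a curl build with --unix-socket support (curl 7.40 or newer); the function name is hypothetical.

```shell
# Hypothetical helper: stream raw JSON statistics for one container from the
# Docker remote API. $1 is a container ID or name.
# Assumes the default socket path and curl 7.40 or newer.
stats_stream() {
  curl -s --unix-socket /var/run/docker.sock \
    "http://localhost/containers/$1/stats"
}
```

The same data is also available in a human-readable form through the docker stats <container_id> command.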

Ability to specify which Dockerfile to use for building

One of the most requested features has been implemented: the ability to build from a file other than the default Dockerfile. With docker build -f, you can specify the file from which to build an image. For example, you can now keep separate Dockerfiles for testing and for production.
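The test and production split might look like the sketch below. The Dockerfile.<variant> naming scheme and the demo image name are assumptions made for this example, not a Docker convention.

```shell
# Hypothetical helper: build the image variant described by
# Dockerfile.<variant> inside the context directory and tag it demo:<variant>.
# $1 is the context directory, $2 the variant name (for example test or prod).
build_variant() {
  docker build -f "$1/Dockerfile.$2" -t "demo:$2" "$1"
}
```

With Dockerfile.test and Dockerfile.prod in the current directory, build_variant . test and build_variant . prod would produce two differently tagged images from the same context.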

Open image specification

The image specification is now publicly available, which is useful for understanding what an image contains and how it is structured.

1.6

The Docker tool set has now been split into separate products: Docker Engine, Registry, Compose, Swarm, and Machine. Compose is described in the next section of this article. The remaining tools will be covered in later articles.

Labels for containers and images

Labels allow you to add user-defined metadata to containers and images that can be used by your utilities.
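As a rough illustration, labels can be attached when a container starts and used later as a filter. The helper names and the example label are hypothetical.

```shell
# Hypothetical helpers; label keys and values are entirely up to you.

# Start a detached container from image $2 carrying the label $1 (key=value).
run_labeled() {
  docker run -d --label "$1" "$2"
}

# List running containers that carry the label $1 (key or key=value).
list_labeled() {
  docker ps --filter "label=$1"
}
```

For instance, run_labeled env=staging nginx followed by list_labeled env=staging should show only the containers tagged for staging.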

Logging Drivers

A new --log-driver option has been added, with three possible values:

- json-file (default)

- syslog

- none

A logging API has also been added, allowing third-party loggers to be plugged in.
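Choosing a driver per container could be wrapped as follows. This is only a sketch: the function name is hypothetical, and the check simply mirrors the three values listed above.

```shell
# Hypothetical helper: run a detached container with one of the three
# built-in logging drivers; any other value is rejected up front.
# $1 is the driver, the remaining arguments are passed to docker run.
run_with_log_driver() {
  case "$1" in
    json-file|syslog|none) ;;
    *) echo "unsupported log driver: $1" >&2; return 1 ;;
  esac
  driver="$1"
  shift
  docker run -d --log-driver="$driver" "$@"
}
```

For example, run_with_log_driver syslog nginx sends the container output to syslog, while run_with_log_driver none nginx discards it. Keep in mind that docker logs relies on the json-file driver.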

Content-addressable image IDs: digests

When you pull, build, or run images, you refer to them in the form namespace/repository:tag or simply repository. Now you can also pull, build, and run containers using a new identifier called a “digest”, with the syntax namespace/repo@digest. A digest is an immutable reference to the content inside the image.

Digests are well suited to patches and updates. If you want to release a security update, you can reference the image that contains it by digest, ensuring that exactly this update is installed on the server.
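Pinning a deployment to a digest can be sketched like this. The function name is hypothetical, and the format check only looks at the sha256: prefix; the actual digest value has to come from your registry.

```shell
# Hypothetical helper: pull an image by digest instead of by tag.
# $1 is the repository, $2 the digest (for example as printed by docker pull).
pull_by_digest() {
  case "$2" in
    sha256:*) ;;
    *) echo "expected a sha256:<hex> digest, got: $2" >&2; return 1 ;;
  esac
  docker pull "$1@$2"
}
```

A call such as pull_by_digest namespace/repo <digest> always fetches the same content, even if the tag has been moved to a newer image in the meantime.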

--cgroup-parent

Containers are a combination of namespaces, capabilities, and cgroups. Docker already supported arbitrary namespaces and capabilities. Now cgroups support has been added with the --cgroup-parent flag, which lets you pass a specific cgroup to a container. This allows you to create and manage cgroups for containers: you can define resources for a cgroup and place containers under a common parent group.

Ulimits

Until now, containers inherited their ulimit settings from the Docker daemon. ulimit lets you limit the resources a process may use. The daemon's limits may be appropriate for production loads as a whole yet far too high for an individual container. With the new option, you can specify default ulimit settings for all containers when configuring the Docker daemon, for example:

docker -d --default-ulimit nproc=1024:2048

This command sets a soft limit of 1024 and a hard limit of 2048 child processes for all containers. You can use the option multiple times for different parameters:

--default-ulimit nproc=1024:2048 --default-ulimit nofile=100:200

These settings can be overridden when creating a container:

docker run -d --ulimit nproc=2048:4096 httpd

Dockerfile instructions in commit and import

Now you can change an image on the fly without rebuilding the entire image. This is possible thanks to the commit --change and import --change options, which let you specify changes to apply to the new image. The Dockerfile instructions supported by commit and import are listed here.
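Applying a single instruction at commit time might be wrapped as in the sketch below. The helper name and the example instruction are assumptions for illustration.

```shell
# Hypothetical helper: commit container $1 as image $2 while applying one
# Dockerfile instruction ($3), for example 'ENV DEBUG true'.
commit_with_change() {
  docker commit --change "$3" "$1" "$2"
}
```

For example, commit_with_change <container_id> myapp:debug 'ENV DEBUG true' should produce an image identical to the container state except for the added environment variable.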

1.7

This release includes rewritten networking and volume subsystems, improved stability, and a ZFS storage driver.

Docker Compose

Distributed applications usually consist of several small services that work together. With Docker, these services become individual containers that are linked together. Instead of building, running, and managing each container separately, Docker Compose lets you define a multi-container application with all its dependencies in one file and launch it with the single command up. Keeping the structure and configuration of the application in one place makes it easy to run it repeatedly, anywhere.

Using Compose usually consists of three steps:

- Define your application's environment in a Dockerfile.

- Describe the services that the application needs in the docker-compose.yml file so that they can run together in an isolated environment.

- Run the docker-compose up command. Docker Compose will start your application.

With Compose, you can manage the life cycle of your application:

- Start, stop and rebuild services

- View the status of running services

- Stream the log output of running services

- Run a one-off command in a service.
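The life cycle operations listed above map onto docker-compose subcommands roughly as in this sketch. It is meant to be run in the directory that holds docker-compose.yml; the function name and the idea of passing the service name as an argument are assumptions.

```shell
# Hypothetical walk-through of one life cycle: rebuild the services, start
# them in the background, show their status, run a one-off command (env) in
# the service named by $1, and finally stop everything.
compose_cycle() {
  docker-compose build &&
  docker-compose up -d &&
  docker-compose ps &&
  docker-compose run --rm "$1" env &&
  docker-compose stop
}
```

Log streaming is left out of the helper because docker-compose logs keeps following the output until interrupted; it is easier to run it by hand in a separate terminal.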

docker-compose.yml

The docker-compose.yml file contains the rules for deploying applications in docker.

Each service in docker-compose.yml must specify either an image (image) or a build context (build). All other parameters are optional and mirror the parameters of the docker run command.

As with docker run, the options specified in the Dockerfile (such as CMD, EXPOSE, VOLUME, ENV) are respected by default, so there is no need to repeat them in docker-compose.yml.
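The two ways of defining a service can be seen side by side in the following sketch, which writes a minimal docker-compose.yml into a scratch directory. The service names, the port mapping, and the redis image are hypothetical choices for illustration.

```shell
# Write a minimal, hypothetical docker-compose.yml into a scratch directory.
project="$(mktemp -d)"
cat > "$project/docker-compose.yml" <<'EOF'
# Built from the Dockerfile in the project directory.
web:
  build: .
  ports:
    - "8080:80"
# Started from a ready-made image; CMD, EXPOSE and VOLUME come from the image.
cache:
  image: redis
EOF

# Show the generated file.
cat "$project/docker-compose.yml"
```

Here web would need a Dockerfile next to the compose file, while cache needs nothing beyond the image name.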

The list of available docker-compose.yml parameters is described in the official documentation.

Let's move straight from theory to practice and write a docker-compose.yml for Apache with PHP and MySQL.

web:
  image: trukhinyuri/apache-php
  ports:
    - "80:80"
    - "443:443"
  volumes:
    - ./apache/www/:/var/www/
    # - ./apache/conf/sites-enabled/:/etc/apache2/sites-enabled/
  links:
    - db
  restart: always
db:
  image: centurylink/mysql:latest
  volumes:
    - ./mysql/conf/:/etc/mysql/conf.d/
    - ./mysql/data/:/var/lib/mysql/
  environment:
    MYSQL_ROOT_PASSWORD: **********
    # MYSQL_DATABASE: wordpress
  restart: always

How we wrote docker-compose.yml for deploying LAMP

- In this file, we define two Docker containers: web and db.

- For each, we specify the image it is created from, or a Dockerfile to build. (Ready-made images can be found on hub.docker.com.)

- For each container, we indicate that it should always be restarted, no matter what happens.

- For the db container, we set the database password through an environment variable (the variable name comes from the image documentation on Docker Hub).

- In each container, we mount host folders that hold the data and settings, so that they can be backed up and changed easily without touching the application inside Docker.

- In the web container, we specify a link to the db container. As a result, the host db is automatically added to the /etc/hosts file, and all exposed ports of the database are reachable through it. When connecting to the database from the web application, you can specify db as the MySQL server host, and the web application will find the database.

- In the web container, we publish ports 80 and 443 so that our site is accessible over the network.

Put the site files, the Apache configuration, and the site database into the mounted folders. If the site is new, you can uncomment the environment variable with the database name, and the database will be created when the container starts. Now we have a portable site that can be quickly deployed to a server.

Suppose we have created a server in InfoboxCloud and installed Docker and Compose with the single command given at the beginning of the article. Now just copy the lamp folder with docker-compose.yml and the site and database folders to the server and run the command

docker-compose up -d

The LAMP stack will start, and our site will already be running in it. Just like that! Do you want to move the site to another server or deploy it on ten servers? Just copy the lamp folder to another server and run docker-compose again.

Thus, Docker and Compose let you package applications and entire systems into prepared environments and describe how they should be deployed. Deployment then takes a single command, and even an inexperienced user can do it. Deploying even complex systems in InfoboxCloud (and in IaaS clouds in general) becomes as simple as with PaaS, while you keep control over every aspect of the infrastructure. This is the deployment approach that OnlyOffice uses.

If you encounter a Duplicate bind mount error when redeploying with Compose

In this case, check which containers have been created. To do this, use the command:

docker ps -a

You will see a container with an odd name that differs from the containers described in docker-compose.yml. In this case, the name is "335698fa2d_lamp_db_1".

Delete it with the command:

docker rm 335698fa2d_lamp_db_1

Replace 335698fa2d_lamp_db_1 with the name of your own oddly named container. After that, Compose will complete the redeployment successfully.

Conclusion

In the following articles we will look at other useful utilities for Docker. If you find a mistake in the article or have a question, write to us in a private message or by email. If you cannot leave comments on Habr, write in the Community.

Good luck with Docker!

Source: https://habr.com/ru/post/263001/
