Intel RealSense from a developer’s perspective

Any modern technology, including Intel RealSense, has two sides. The first, external side is the one the consumer sees. In most cases it is bright, colorful and attractive in its novelty. The reverse side, the seamy side of developing promising ideas, is visible only to the people who apply them directly. This is a territory of hard work and trial and error, but developers see much more, and their opinion carries much more weight.

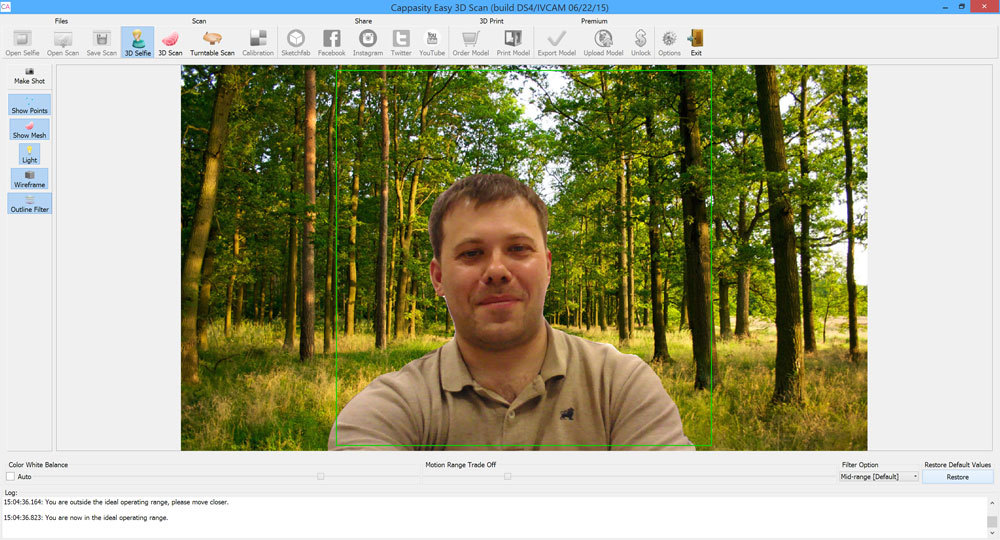

For a long time I had wanted to talk with a competent specialist about what RealSense technology looks like from the inside, why it is built the way it is, and what its strengths and weaknesses are. And now, hurray, Konstantin Popov (cappasity), one of the leading RealSense specialists in Russia, has agreed to answer my questions.

The idea of perceptual control has been in the air for a long time, and the first attempts to implement it date back to the 2000s. Why has it only now become possible to bring it to life?

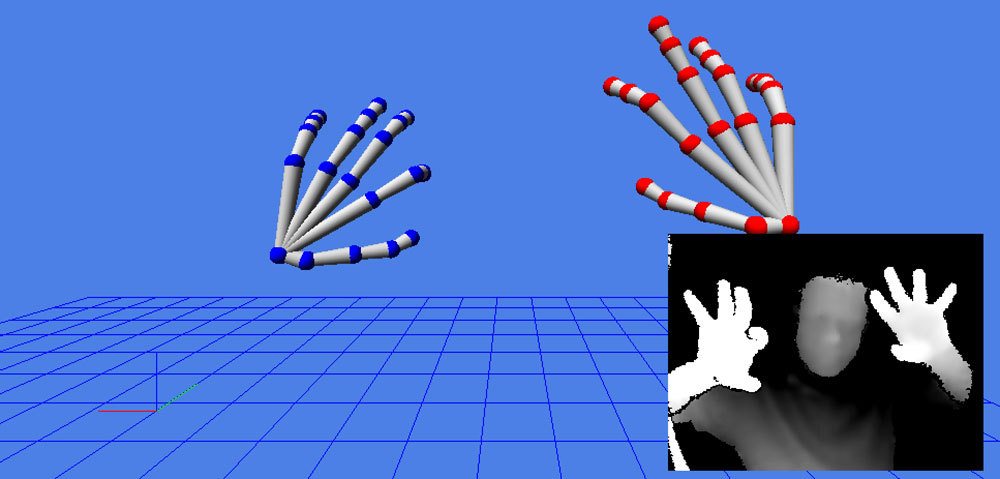

My first acquaintance with this kind of control came through PlayStation 2 games. The main problem was the accuracy of the interaction: for example, navigating the interface was extremely inconvenient because of the long response time. The most successful implementation I saw was the first Kinect, built on technology from PrimeSense. The solution was genuinely convenient, and it finally became possible to control games comfortably :) Then Leap Motion entered the market, and now we see RealSense and how the technology has moved from games to more serious applications.


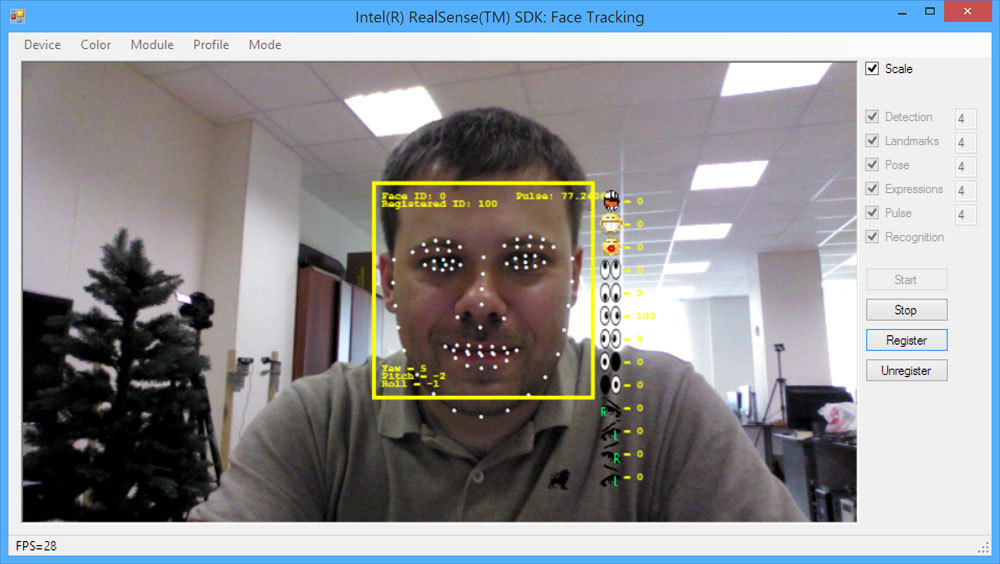

As I see it, there have always been two problems: a) technical and b) marketing. From a technical point of view, what was needed was a cheap camera accurate enough to respond instantly to user actions. That is, it needed a high frame rate, as little noise as possible (to reduce the post-processing load) and a depth map with a resolution higher than VGA. PrimeSense managed to make the perfect product, and they still have followers such as Structure.IO, who sell sensors based on PrimeSense chips. But, alas, Apple bought PrimeSense and closed off access to these cameras and to further developer support. The second technical problem is the minimum distance. RealSense can determine the position of the hands (provide depth data) at half the distance PrimeSense can, and this is simply wonderful. The third problem is the convenience and functionality of the SDK. This is where Intel outdid both OpenNI2 and Microsoft. In addition to the basic functionality, there are many extras that are useful to the developer. Intel wins developers over by providing, in addition to the new control method, speech recognition, emotion recognition, head and face detection, background segmentation, and so on. And that is a real incentive!

Now to the marketing side. What we (the Cappasity team) have always disliked is spending money on new technologies, integrating them and then seeing no growth in users. We are ready to integrate something unnecessary if we are paid for it. But as far as I know, most developers are not paid by anyone for the integration; there is only talk of great features in the distant future. At one time we went through the Razer Hydra, the Falcon controller, and so on. Here Intel is taking exactly the right step: they keep saying that RealSense is part of a device, not a separate camera. In other words, the camera will be built into new ultrabooks, so it becomes clear who the users of our product will be: ordinary people who buy ultrabooks at an ordinary electronics store such as Best Buy. When developing for Kinect 2, by contrast, we do not see our customers; we have to create them ourselves, in effect boosting sales of Microsoft devices.

In other words, Intel was the first to start successfully building an ecosystem around RealSense technology, so we dropped everything and threw ourselves into supporting Intel and its devices. We see real money, and that is the best incentive.

Where is RealSense heading? What is the main purpose of this technology?

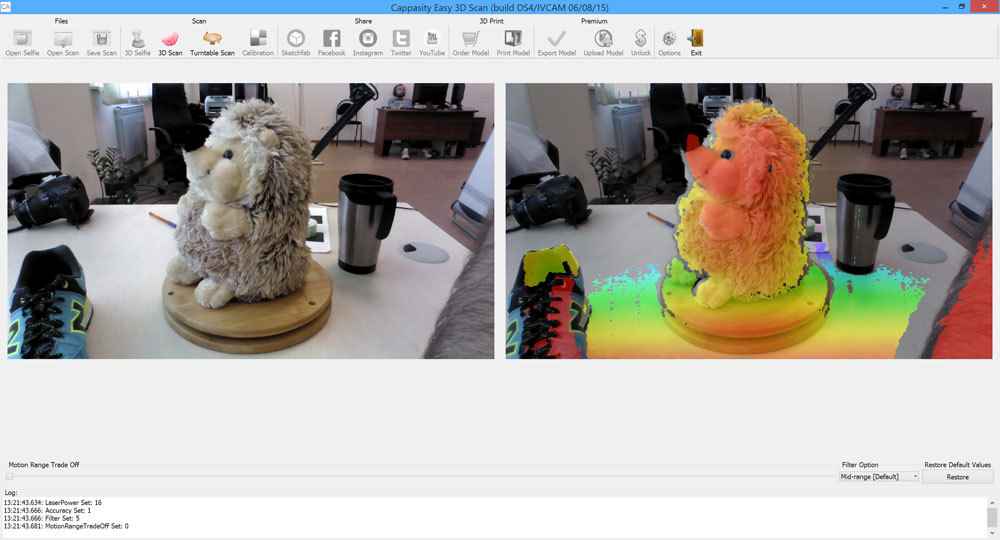

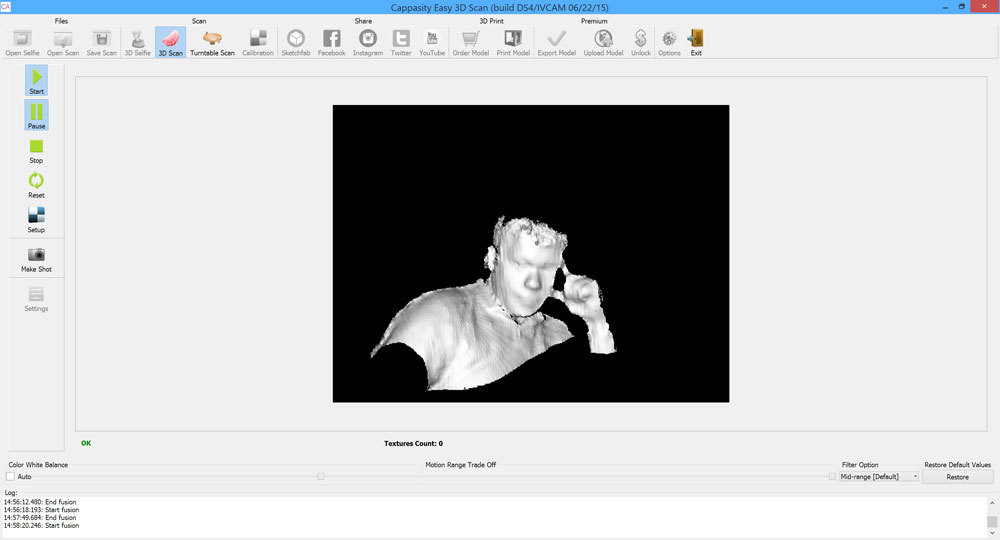

The main goal is to enable a new type of communication and interaction with the outside world. The SDK already has basic functionality for hand tracking, head tracking, face detection, emotion recognition, data segmentation and scanning. A new camera, the R200, is now being actively promoted and will soon appear in tablets and mobile devices. It has a longer range, it can take 3D photos, and it is much more convenient for creating 3D models of the surrounding world. I believe we should expect additional functionality that the RealSense SDK still lacks. It would be great if Intel added human skeleton tracking and person segmentation, that is, the basic functionality of the Kinect 2 SDK. I am sure we can expect a serious step toward augmented reality, since Metaio was recently bought by Apple (and access to its technology will be closed from the end of this year), which leaves only the Vuforia SDK (Qualcomm). And on the PC, the field is completely empty. Virtual reality is also an open question: RealSense hand tracking could become a way for users to interact with the surrounding space. It is hard to imagine how many resources Intel has allocated to the SDK, but we are watching a very strong SDK take shape, and that is great!

What problems remain unsolved in the current implementation of RealSense, and which cannot be solved in principle?

The current problems stem from how IR cameras work. IR cameras struggle with sunlight and with black, shiny and transparent surfaces. These problems are typical of all cameras of this type, and Intel cannot work miracles here, unless, of course, they create a fundamentally new technology in which the missing data is obtained by stereo matching between two color cameras ;)
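For readers unfamiliar with stereo matching, the standard textbook relation for a rectified pair of cameras is shown below. Here $f$ is the focal length in pixels, $B$ is the baseline (distance between the two cameras), and $d$ is the disparity, the horizontal pixel offset of the same scene point between the two images. This is the generic pinhole-model triangulation, not a description of how Intel implements any particular camera.

$$
Z = \frac{f\,B}{d}, \qquad \left|\Delta Z\right| \approx \frac{Z^{2}}{f\,B}\,\left|\Delta d\right|
$$

The second expression follows from differentiating the first with respect to $d$: a fixed matching error of one pixel costs more depth accuracy the farther away the object is. Depth is also only available where a point can actually be matched in both images, which is why featureless regions remain difficult for stereo systems too.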

Comparing the F200 and the R200, the latter is no longer so afraid of dark hair. I would not discuss the R200 in detail yet, since the hardware is still raw and we are working with an alpha version of the firmware. Again, I am comparing them in terms of using the camera for 3D scanning; for control systems, perhaps, everything is fine.

I do not like the quality of the current RGB camera or how it adjusts to lighting, but I have heard that there are plans to improve it, and perhaps some OEMs will use their own camera for the RGB channel.

Are there any competitors to RealSense, and what state are they in now?

For short distances there is only Leap Motion. Everything else is at an amateur level. But Leap Motion is, again, a separate camera.

In terms of integration into devices, there is Google's Project Tango, which for a long time was more dead than alive because of performance problems and has much weaker hardware. However, a month ago they announced a partnership with Qualcomm, and this is encouraging. Moreover, Qualcomm works closely with M4D, which promises to release its own tablets with 3D cameras. But I would say we are dealing with prototypes that are still far from reaching the market.

As for standalone cameras, I have seen a PrimeSense clone, Orbbec. We have not yet received samples of their cameras, so it is hard for me to judge their SDK: companies usually describe their products nicely, but the quality of the functionality has to be evaluated at least on samples. Orbbec is a cheap Chinese sensor, and I think it will find its users. It is compatible with OpenNI2, and if it supports Linux, a community will gather around it.

Structure.io has a good SDK, but I don't understand what they will do next, because the stock of PrimeSense chips, however large, will run out sooner or later.

I almost forgot about Kinect 2, which could win the nomination "The biggest external camera". The device is good, but if RealSense adds Human Tracking for the R200, supporting Kinect 2 will lose all meaning. The R200 is already cheaper than Kinect 2 and does not require a huge power supply. In addition, Kinect 2 for Windows is no longer sold; you can only buy Kinect 2 for Xbox One plus an adapter. This suggests that mass adoption of the device never took off.

So there is not much competition. What matters for Intel now is not to lose the main battle: the one for mobile devices.

Is it possible to create a common standard (for example, based on RealSense) and a consortium of companies to promote and develop perceptual technologies?

From a programming point of view, it is entirely feasible; nothing except Apple prevented anyone from supporting OpenNI2, for example, which was well made and conceived as a standard for working with 3D sensors. But it is always hard for large companies to reach an agreement, so for now I have little faith that some OpenSense standard awaits us ;) But it would be great! Our SDK, for example, already has three separate code branches, which complicates maintenance.

How interesting is RealSense to consumers? Are users ready now to abandon traditional interfaces in favor of perceptual ones?

It depends on software makers. Until they offer new, convenient interface implementations, users will stick with traditional ways of interacting. But we see Microsoft promoting holograms, and perhaps only the laziest are not working on virtual reality right now, so I am sure users will adapt to the new interfaces in the coming year.

For a consumer buying an ultrabook, getting a 3D camera for the same money is not a bad deal. For many consumers it will remain a toy for now, but our potential customers, for example, will get a genuinely useful tool for working with 3D objects. And I see that other companies have found interesting and useful applications for 3D cameras. Thanks to Intel's efforts, game developers are starting to pay attention to the ability to create avatars and add new controls, including voice. An ecosystem is not built in a year, and Intel has a lot of hard work ahead.

How convenient is the RealSense SDK now, and what do developers expect from it in the future?

I've seen several requests for Linux support on the forums, since people working with robots love ROS. Many are also waiting for parts of the functionality of the open-source PCL (Point Cloud Library), and some want OpenCL optimization and more free computer vision algorithms ;) But the last items are already in the "nice extras" category.

In my opinion, small frameworks to popularize the SDK wouldn't hurt. Again, I see everything through the prism of my own work: 3D scanning, working with meshes, and so on. When we presented at GDC 2015, many visitors to our booth asked about a ready-made SDK for avatars. Many developers are lazy or lack the knowledge to solve such problems on their own. They are waiting for Unity support with one-click integration :)

Everyone is also waiting for mobile platforms, and there are many questions about Windows 10 tablets. Once all this is on the market, there will be new tasks and wishes. For now, everything is very good and far from raw: the RealSense R3 SDK is a significant step forward compared with R2.

What are the prospects for RealSense, and how widely will it spread in the near future?

I'm waiting for the R200 to be integrated into mobile devices. Even if something goes wrong, the PC market with ultrabooks and Windows 10 tablets will remain. So I expect the market to grow, but the rate of growth is still an open question. Looking forward to CES 2016 ;)

Source: https://habr.com/ru/post/262857/
