How can artificial intelligence be used to solve SEO problems?

The search model must be self-calibrating. That is, it should be able to take its own algorithms, their specific gravity and compare simulated data with publicly available search engines in order to identify the most accurate search engine that allows you to simulate any medium.

However, analyzing thousands of parameters, trying to find the best combination of them is astronomically expensive in terms of computational processing, and also very difficult.

So how, then, to create a self-calibrating search model? It turns out that the only thing left for us is to ask for help from the ... birds. Yes, yes, you heard right, it was to the birds!

')

Optimization using particle swarm ( PSO)

It often happens that the daunting problems find the most unexpected solutions. For example, you should pay attention to optimization using a swarm of particles, which is a method of artificial intelligence, first mentioned in 1995 and based on the socio-psychological behavioral model of the crowd. The technique is actually modeled on the basis of the concept of the behavior of birds in a flock.

In fact, all the algorithms that work on the rules that we have created today, still can not be used to search for at least approximate solutions to the most difficult problems of numerical maximization or minimization. But using such a simple model as a bird flock, you can immediately get an answer. We have often heard horrific predictions about how one day artificial intelligence captures our world. However, in this particular case, he just becomes our most valuable assistant.

Scientists were engaged in the development and implementation of a variety of projects dedicated to Swarm Intelligence. For example, in February 1998, the Millibot project, previously known as Cyberscout, was launched, a program run by the US Marine Corps. Cyberscout was, in fact, a legion of tiny robots that could infiltrate the building, covering its entire territory. The ability of these highly technical crumbs to communicate and transmit information to each other made it possible for the “swarm” of robots to act as one whole organism, turning the very laborious task of researching the whole building into a leisurely walk along the corridor (most of the robots had the opportunity to travel no more than a couple of meters).

Why does this work?

In PSO, the really cool thing is that the technique makes absolutely no assumptions about the problem you are trying to solve. It is a cross between a rule-based algorithm, trying to work out a solution, and neural networks of artificial intelligence, which aim to investigate problems. Thus, this algorithm is a compromise between exploratory and exploitative behavior.

Without a research nature, this optimization approach, the algorithm, would undoubtedly turn into what the statisticians call a “local maximum” (a solution that seems to be optimal, but is not really such).

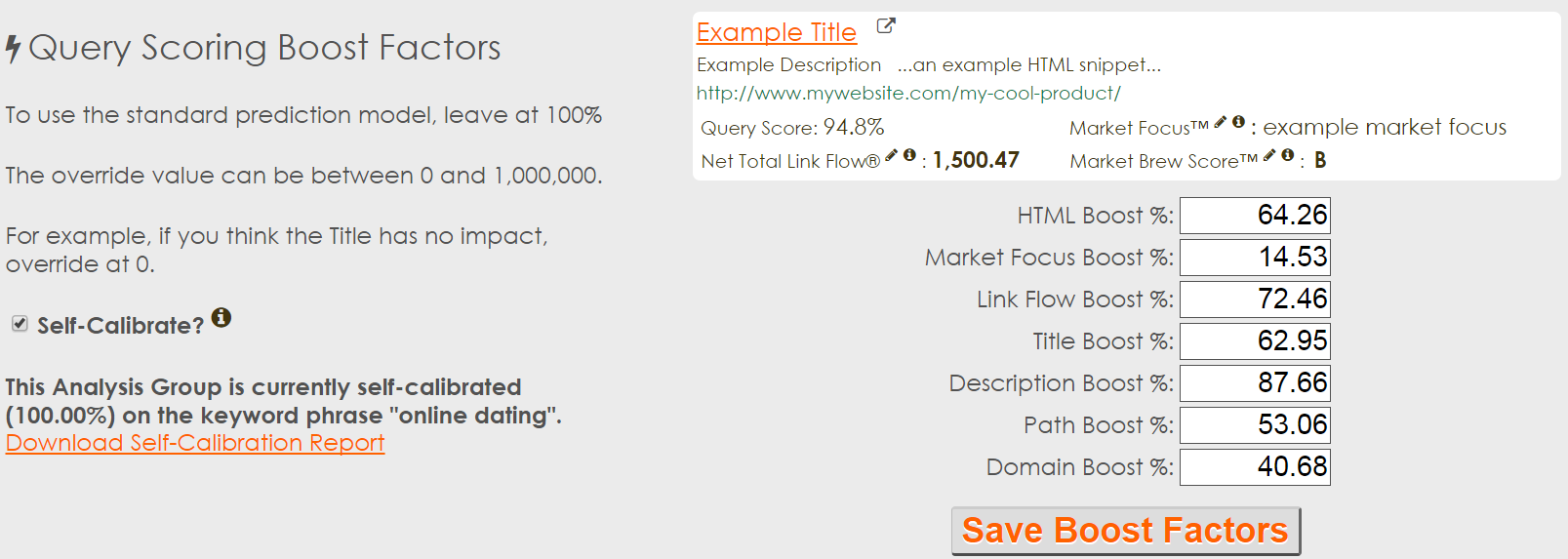

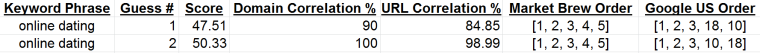

First of all, you start with a series of “packs” or conjectures. In the search model, these may be different weighting factors for scoring algorithms. For example, with 7 different inputs, you will begin with at least 7 different assumptions about these weights.

The idea behind PSO is to keep each of these assumptions as far as possible from the others. Without going into 7-dimensional calculations, you can use several techniques to ensure that your starting points are optimal.

After that, you will begin to develop your guesses. In the course of this, you will imitate the behavior of the birds in the flock in a situation when there was food near them. One of the random guesses (flocks) will be closer than the others, and each subsequent guess will be adjusted based on general information.

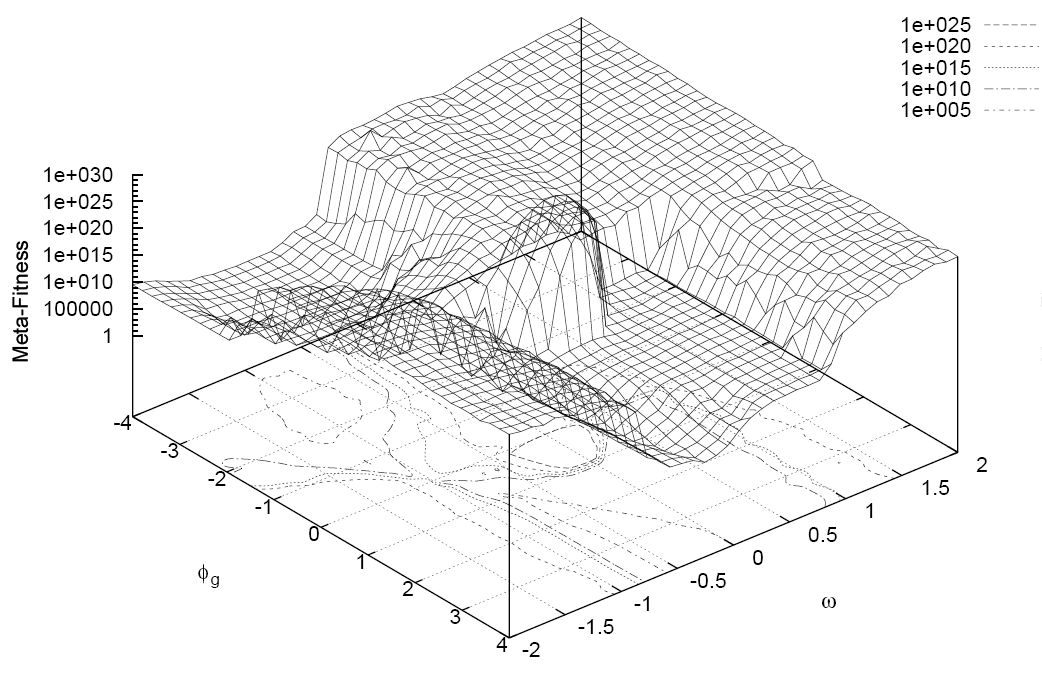

The visualization shown below clearly demonstrates this process.

Implementation

Fortunately, there are a number of possibilities for implementing this method in different programming languages. And the great thing about optimizing using a swarm of particles is that it is easy to translate into reality! The technique has a minimum of settings (it is an indicator of a strong algorithm) and a very small list of flaws.

Depending on your problem, the implementation of the idea may be in the local minimum (not the optimal solution). You can easily fix this by implementing the neighborhood topology, which will quickly reduce the feedback loop to the best of the nearby assumptions.

The main part of your work will consist in the development of an “adaptation function” or a ranking algorithm, which you will use to determine the degree of closeness to the target correlation. In our case with SEO, we will have to correlate the data with some given object, like the results of Google or any other search engine.

If you have a working scoring system, your PSO algorithm will try to maximize performance through trillions of potential combinations. The scoring system can be as simple as performing a Pearson correlation between your search model and the search results of network users. Or it may become as difficult as simultaneously activating these correlations and assigning points to each specific scenario.

Black Box Correlation

Recently, many SEO optimizers have been trying to perform a correlation with Google’s black box. These efforts, of course, have the right to life, but they are still quite useless. And that's why.

First, correlation does not always imply a causal relationship. Especially if the entry points to your black box are not too close to the exit points. Let's look at this with an example where the entry points are located very close to their corresponding exit points — the ice cream transport business. When it's warm outside, people buy more ice cream. It is easy to see here that the entry point (air temperature) is closely tied to the exit point (ice cream).

Unfortunately, most SEO optimizers do not use statistical proximity between their optimizations (inputs) and the corresponding search results (outputs).

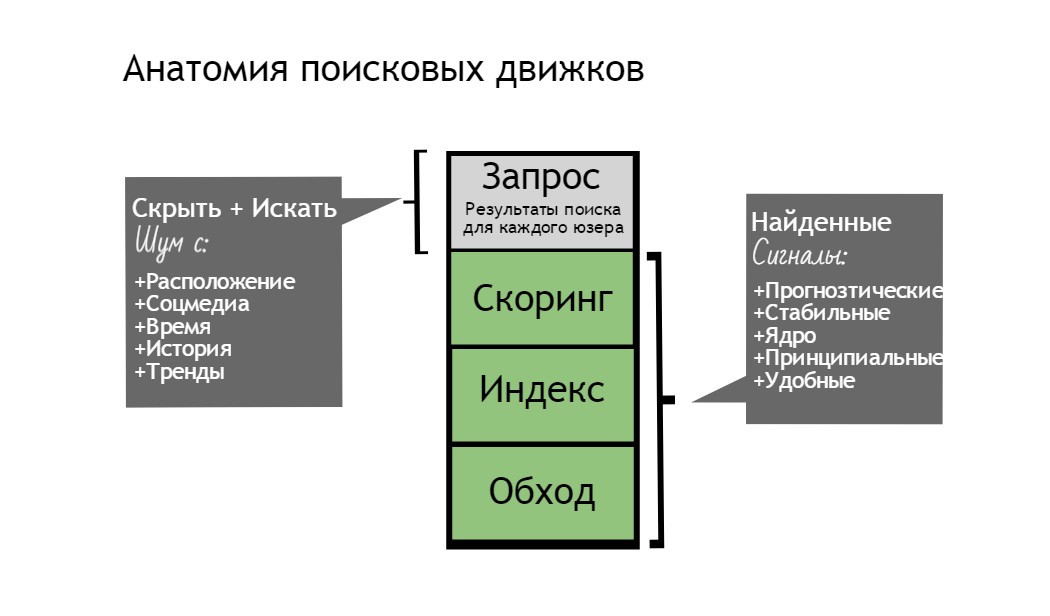

Moreover, their inputs or optimizations are in front of the search engine crawl components. In fact, a typical optimization should go through 4 levels: crawling, indexing, scoring and, ultimately, the real-time query level. Attempting a correlation in this way can not give anything but vain expectations.

In fact, Google provides a significant noise figure, just as the US government creates noise around its GPS network, so civilians are not able to get the same accurate data as the military. This is called the real-time query level. And this layer is becoming a serious deterrent to SEO correlation tactics.

An example is a garden hose. Being on the scoring layer of the search engine, you get a company look at what is happening around. The water coming out of the garden hose is organized and predictable - that is, you can change the position of the hose and predict the corresponding change in the movement of water flow (search results).

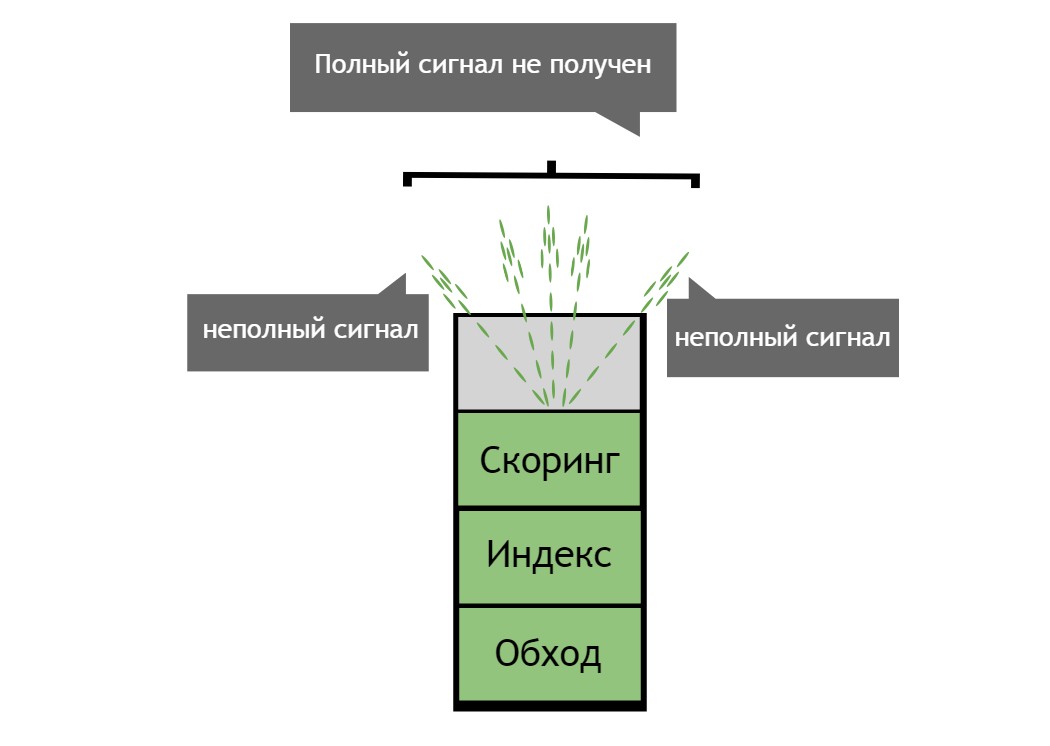

In our case, the query layer sprays this water (search results) into millions of droplets (variations of the search results), depending on the user. Most of the algorithms that are changing today arise on the basis of the level of queries, so that for the same number of users to produce more variations of search results. Google's Hummingbird algorithm is one example. Query-level shifts allow search engines to generate more trading platforms for their PPC ads.

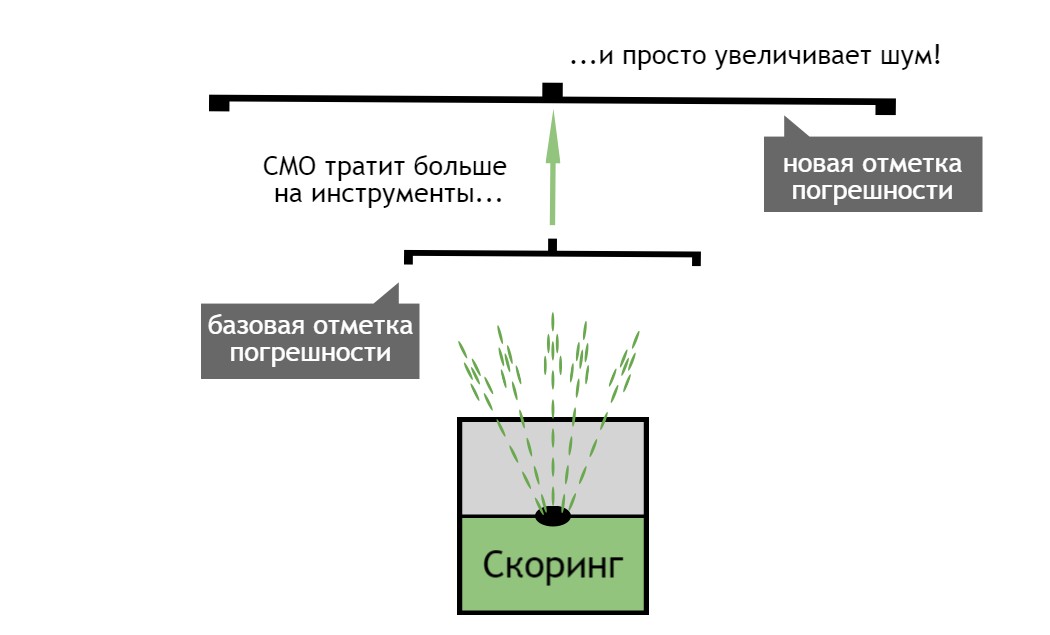

The query level is the opinion of users, not the company, on what is happening. Consequently, correlations derived in this way will very rarely have a causal relationship. And it is provided that you have one tool to find and simulate data. As a rule, seo-optimizers use a number of input data, which will contribute to increasing noise and reducing the likelihood of finding a causal relationship.

Search for cause and effect links in SEO

To get a correlation to work with a search engine model, you need to tighten the inputs and outputs as much as possible. In the search engine model, input or variable data must be in or above the scoring layer. How to do it? We must break the black box of the search engine into key components, and then build a search engine model from scratch.

Optimizing outputs is even harder due to the terrifying noise caused by the real-time query layer, which creates millions of variations at the expense of each user. At a minimum, we will need to make such entries for our search engine model, which will be located in front of the usual layer with variations of queries. This ensures that at least one of the compared sides is stable.

By building a search engine model from scratch, we will be able to display search results coming not from the level of queries, but directly from the scoring layer. This will give us a more stable and accurate relationship between the inputs and outputs that we are trying to relate. And then, thanks to these strong and illustrative relationships between inputs and outputs, the correlation will reflect a causal relationship. By focusing on one input, we get a direct link with the results that we see. Then we can do a classic seo analysis to determine the optimization option that will be beneficial for the existing search engine model.

Results

Situations when any simple thing in nature leads to scientific discoveries or technological breakthroughs can not but admire. Having a search engine model that allows us to openly connect scoring inputs to non-personalized search results, we can associate a correlation with a causal link.

Add to this the particle-particle optimization, and you have a technical breakthrough — a self-calibrating search model.

Source: https://habr.com/ru/post/262809/

All Articles