About the new "1C-Bitrix" push server

Some time ago we needed to develop a new push server for the Bitrix24 service. The previous version, built on an nginx module, had a number of peculiarities that caused us a lot of trouble. Eventually we realized it was time to write our own push server. This post describes how that went.

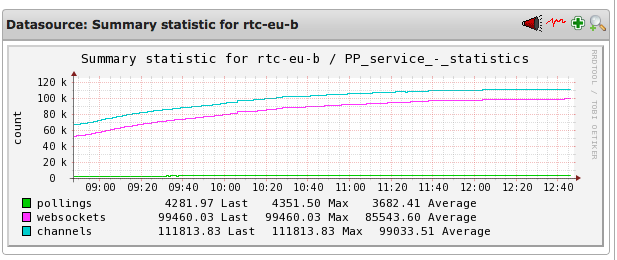

A push server (also called a pull server or an instant messaging server) delivers messages instantly between users who work with the portal in a browser or through the desktop and mobile applications. Both browsers and applications open a persistent connection to the push server and keep it open. This is usually done over WebSocket; if the browser does not support WebSocket, long polling is used instead. Long polling is an Ajax request that waits up to 40 seconds for a response from the server. As soon as a response arrives or the timeout expires, the request is repeated. Today most of our clients use WebSocket.
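On the server side, the long-polling fallback amounts to parking each request until a message for its channel arrives or the 40-second timeout expires; the client then simply repeats the request. The sketch below illustrates this under stated assumptions: Node's built-in `EventEmitter` stands in for the real message source, and the function name `waitForMessages` and the empty-array reply on timeout are hypothetical, not the actual Bitrix24 protocol.

```javascript
const { EventEmitter } = require("events");

const LONG_POLL_TIMEOUT_MS = 40000; // the 40-second wait described above

// Hypothetical in-process bus: publishers emit on the channel ID.
const bus = new EventEmitter();
bus.setMaxListeners(0); // many parked requests may listen on the same channel

// Resolve with the first batch of messages for `channelId`, or with an empty
// array once the timeout expires (the client then re-issues the request).
function waitForMessages(channelId, timeoutMs = LONG_POLL_TIMEOUT_MS) {
  return new Promise((resolve) => {
    const onMessage = (messages) => {
      clearTimeout(timer); // a message arrived: cancel the timeout
      resolve(messages);
    };
    const timer = setTimeout(() => {
      bus.off(channelId, onMessage); // stop listening, answer with "nothing new"
      resolve([]);
    }, timeoutMs);
    bus.once(channelId, onMessage);
  });
}
```

Whether the reply carries messages or is empty, the client's next step is the same: send a new request, which keeps one request parked on the server most of the time.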

[Chart: push-server connections and channels]

Pollings: the number of connections using long polling.

Websockets: the number of connections using WebSocket.

Channels: the number of channels.

What did not suit us

The previous server worked as follows: the system published messages for users to the nginx module, which then stored them and delivered them to the recipients. If the recipient was online, they received the message immediately. If not, the push server held the accumulated messages until the user reconnected. However, the nginx module crashed often, and every crash lost all undelivered messages. That alone would have been tolerable, but after each crash the load on the PHP backend rose sharply.

The reason lay in the push server's architecture. When a client connects, it is assigned a unique channel identifier: the user listens to that channel, and portals write messages to it. Only portals have the right to write; this is a security measure, so that no outside party can post to the channel.
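The asymmetry described here (anyone holding a channel ID may listen, but only portals may write) can be sketched as two separate entry points. The article does not say how portals prove who they are, so in this minimal sketch the push server recognises them by a set of trusted backend addresses; that check, the addresses, and the `ChannelHub` class are illustrative assumptions.

```javascript
const { EventEmitter } = require("events");

// Hypothetical list of portal backends allowed to publish.
const TRUSTED_PUBLISHERS = new Set(["10.0.0.5", "10.0.0.6"]);

class ChannelHub {
  constructor() {
    this.events = new EventEmitter();
    this.events.setMaxListeners(0);
  }

  // Any connected client holding the channel ID may listen.
  // Returns a function that removes the subscription.
  subscribe(channelId, listener) {
    this.events.on(channelId, listener);
    return () => this.events.off(channelId, listener);
  }

  // Only requests coming from a portal backend may write (assumed check).
  publish(channelId, message, sourceAddress) {
    if (!TRUSTED_PUBLISHERS.has(sourceAddress)) {
      throw new Error(`publish rejected from ${sourceAddress}`);
    }
    this.events.emit(channelId, message);
  }
}
```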

To create a channel, the portal must initialize it by sending a message. When the nginx module crashed, all channels were reset, and all portals started creating new channels on the push server at the same time. The result was effectively a DDoS: the PHP backend on which the portals run stopped responding. This was a serious problem.

Losing the messages accumulated on the push server was not a big deal, because they are also stored on the portals, and the user still got them after reloading the page. The DDoS was far more serious, because it could make portals unavailable.

New push server

We decided to write the new server from scratch and chose Node.js as the platform. We had not worked with it before, but we were won over by the fact that it lets you build a service that holds a large number of connections. We also have many JavaScript developers, so there would be people to maintain the new system.

An important requirement was to stay compatible with the protocol of the previous server. That way we did not have to rewrite the JavaScript client code running in the browser, and the PHP part of the backend that forwards messages to the push server could stay intact.

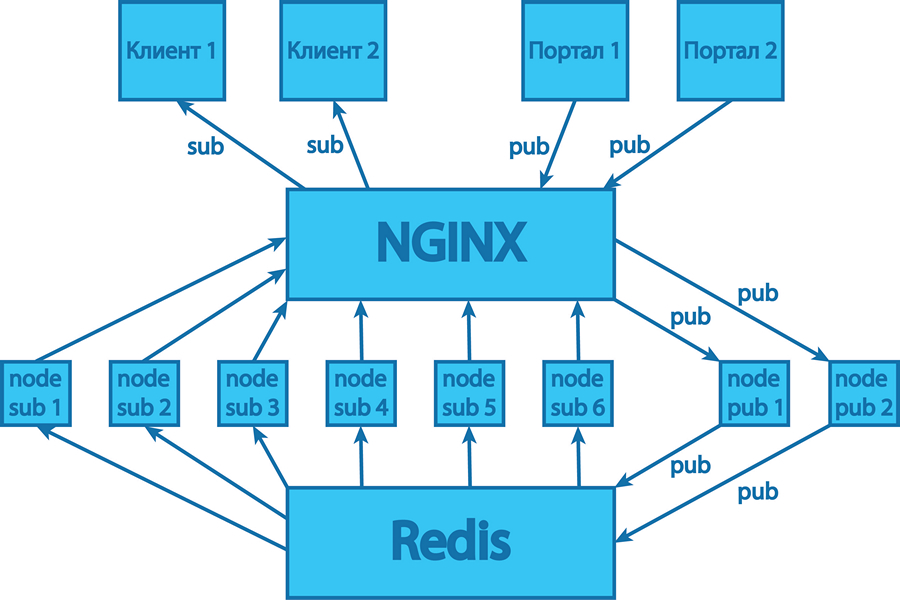

First we built a small prototype to test performance. Its functionality was limited, and it kept all messages in the memory of the Node.js process. Since the push server must hold tens of thousands of simultaneous connections, we added clustering support. The push server for the Russian segment now consists of six processes of our Node.js application that serve incoming connections and two processes that publish messages.

In the finished product we could not keep messages in process memory as the prototype did. Suppose several users of a portal are served by different processes. If they are in a shared chat, every message in it must be delivered to all participants at the same time. Because each process has its own isolated memory, the messages cannot live in the memory of the push server processes. We could have built some kind of shared memory, but that approach is neither very reliable nor easy to implement, so we decided to keep all messages in Redis. Redis is a NoSQL key-value store, like memcached but more advanced: besides plain key-value pairs, it can store more complex structures such as hashes and lists under a key. We use Redis to store all messages, channel statistics and online status.
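Why per-process memory fails, and what a shared store buys, can be shown with a minimal sketch. Here a plain `Map` of arrays stands in for Redis, mimicking the `RPUSH`/`LRANGE` pattern on one list per channel; the key naming scheme and the `SharedStore`/`Worker` classes are assumptions for illustration, not the production code.

```javascript
// Stand-in for Redis: one list of messages per key, shared by all workers.
class SharedStore {
  constructor() {
    this.lists = new Map();
  }
  rpush(key, value) {
    if (!this.lists.has(key)) this.lists.set(key, []);
    return this.lists.get(key).push(value); // new list length, like RPUSH
  }
  // Like LRANGE: `stop` is inclusive and -1 means "last element".
  // This simplified version supports only a non-negative `start`.
  lrange(key, start, stop) {
    const list = this.lists.get(key) ?? [];
    const end = stop < 0 ? list.length + stop + 1 : stop + 1;
    return list.slice(start, end);
  }
}

// Hypothetical key layout: one message list per channel.
const messagesKey = (channelId) => `channel:${channelId}:messages`;

// A worker process keeps no messages locally; everything goes to the store.
class Worker {
  constructor(store) {
    this.store = store;
  }
  save(channelId, message) {
    this.store.rpush(messagesKey(channelId), message);
  }
  history(channelId) {
    return this.store.lrange(messagesKey(channelId), 0, -1);
  }
}
```

With a real Redis client, each worker would hold its own connection to the same Redis server, which is what gives them the common view that isolated process memory cannot.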

Redis also has a useful feature: channel subscriptions (pub/sub). All eight processes of our Node.js application keep a permanent connection to Redis, and when a message is published to a channel, it reaches every process subscribed to it. The overall flow is as follows: a user writes a message on the portal; it goes to the PHP backend, is saved there, and is then sent to the Node.js application. The message carries all its attributes: author, recipient, and so on. One of the two publishing processes accepts the request, processes it and publishes it to Redis: it writes the message and notifies the six connection processes that a new message has arrived and can be sent to subscribers.
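The write-then-notify flow can be sketched in a single process, with a `Map` standing in for the Redis message store and an `EventEmitter` standing in for Redis pub/sub (`PUBLISH`/`SUBSCRIBE` in the real system). The notification name `new-message`, the message fields, and the `ConnectionWorker` class are assumptions. The publisher stores the message first and then announces only its ID; each connection worker delivers it only if one of its own clients listens on the target channel.

```javascript
const { EventEmitter } = require("events");

// Stand-ins for Redis: a message store and a pub/sub bus.
const store = new Map(); // messageId -> message
const pubsub = new EventEmitter();

// One of the publishing processes: persist, then announce.
let nextId = 1;
function publishMessage(message) {
  const id = nextId++;
  store.set(id, message);
  pubsub.emit("new-message", { id, channelId: message.channelId });
  return id;
}

// One of the connection processes: it only knows its own local clients.
class ConnectionWorker {
  constructor(name) {
    this.name = name;
    // channelId -> delivered messages (a stand-in for open sockets)
    this.clients = new Map();
    pubsub.on("new-message", (note) => this.onNotification(note));
  }
  connect(channelId) {
    this.clients.set(channelId, []);
  }
  onNotification({ id, channelId }) {
    const inbox = this.clients.get(channelId);
    if (!inbox) return; // no local client listens on this channel
    inbox.push(store.get(id)); // fetch the full message from the shared store
  }
}
```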

For WebSocket support we used ws, an open-source Node.js module.

Difficulties encountered

Development went without any particular difficulties. During testing we focused on emulating high load, and the tests showed good results. When deploying the new system we played it safe and kept the old server running for the initial period. All messages were duplicated so that if the new server crashed, we could switch back to the old one.
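Duplicating every message to both servers during the transition can be sketched as publishing to both backends in parallel, so that a crash of one does not block delivery through the other. The transports passed in as `backends` are hypothetical placeholders, and the rule that a message counts as delivered if at least one server accepted it is an assumption; `Promise.allSettled` is used so that one rejection does not abort the other send.

```javascript
// Publish the same message to every push server during the migration.
// `backends` maps a label to an async send function (hypothetical transports).
async function publishToAll(backends, message) {
  const labels = Object.keys(backends);
  const results = await Promise.allSettled(labels.map((label) => backends[label](message)));
  const failed = labels.filter((_, i) => results[i].status === "rejected");
  if (failed.length === labels.length) {
    // Assumed policy: only a total failure is an error for the caller.
    throw new Error("message could not be delivered to any push server");
  }
  const delivered = labels.filter((label) => !failed.includes(label));
  return { delivered, failed };
}
```

Switching back to the old server then needs no catch-up step, since it has been receiving the same stream all along.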

As it turned out, the precaution was justified. After deployment we found that in some cases the system crashed when the load rose sharply. The tests had confirmed that our server could hold a very large load, but it was not “real” load: real users connect from different IP addresses, on different channels, from different browsers, with varying levels of WebSocket support.

We never managed to find out why the crashes were happening. The problem arose while TCP connections were being established, so we decided to hand connection handling over to nginx, a well-known server that can hold a large number of connections. The Node.js processes now act as backend servers behind it. In the new scheme we removed several components:

- The PM2 utility, which we used to start the Node.js application processes in a cluster configuration. It monitors process state, shows nice CPU and memory usage graphs, and can restart crashed processes. We replaced it with our own scripts.

- The cluster module that ships with Node.js and helps run multiple processes of the same application. Request balancing is now handled by nginx itself.

- The HTTPS connection handling module. HTTPS is now handled by nginx as well.
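With nginx accepting connections, terminating HTTPS and balancing requests, each Node.js process only needs to serve plain HTTP on a local port. A minimal sketch of one such backend follows; the base port, the one-port-per-process layout, and the handler body are assumptions, since the article does not show its nginx configuration or startup scripts.

```javascript
const http = require("http");

const BASE_PORT = 9010; // hypothetical; nginx's upstream block would list 9010, 9011, ...

// Each process started by the launch scripts gets its own index and port.
function portForProcess(index) {
  return BASE_PORT + index;
}

// Request handler for one backend. No TLS here: nginx has already decrypted
// HTTPS and forwards plain HTTP over the loopback interface.
function makeHandler(index) {
  return (req, res) => {
    res.writeHead(200, { "Content-Type": "text/plain" });
    res.end(`backend ${index}: ${req.method} ${req.url}\n`);
  };
}

function startBackend(index) {
  const server = http.createServer(makeHandler(index));
  // Listen on loopback only, so clients reach the process solely through nginx.
  server.listen(portForProcess(index), "127.0.0.1");
  return server;
}
```

For WebSocket traffic, nginx additionally has to pass the `Upgrade` and `Connection` headers through to the backend (with HTTP/1.1 proxying), otherwise the upgrade handshake never reaches the Node.js process.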

As a result, the server architecture came to look like this:

Improvements made

Once the service was in production, it became clear what could be improved. For example, we optimized the protocol and started saving resources on some operations.

In particular, we limited the lifetime of different message types. Previously all our messages were stored for a long time, for example a day. But not every message is worth keeping on the server that long. For example, service messages saying that someone has started typing in a chat should live no more than two minutes, because after that they are no longer relevant.

Personal messages, on the other hand, have to be stored long enough to reach the recipient even several hours after they were sent. For example, a user closes the laptop and leaves work; in the morning they open it and immediately receive the accumulated messages. Or they minimize the mobile app and, when they bring it back in the evening, see new comments appear without reloading the page. But a message that someone came online or started typing should not live longer than a few minutes. This reduced the number of messages kept in memory.
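Per-type lifetimes can be sketched as a lookup table consulted when a message is stored, with expired entries dropped before delivery. The type names and exact values are hypothetical, chosen loosely from the text (two minutes for typing notices, a few minutes for online status, about a day for personal messages). An in-memory queue with an injectable clock stands in for Redis so that expiry is easy to test.

```javascript
const MINUTE = 60 * 1000;

// Hypothetical lifetimes per message type, in milliseconds.
const TTL_BY_TYPE = {
  typing: 2 * MINUTE, // "someone is typing": irrelevant after two minutes
  online: 5 * MINUTE, // presence changes: a few minutes
  personal: 24 * 60 * MINUTE, // personal messages: long enough to survive a night offline
};

class ExpiringQueue {
  constructor(now = Date.now) {
    this.now = now; // injectable clock, handy for tests
    this.items = [];
  }
  push(type, payload) {
    const ttl = TTL_BY_TYPE[type];
    if (ttl === undefined) throw new Error(`unknown message type: ${type}`);
    this.items.push({ type, payload, expiresAt: this.now() + ttl });
  }
  // Drop expired entries, then return what is still worth delivering.
  pending() {
    const t = this.now();
    this.items = this.items.filter((m) => m.expiresAt > t);
    return this.items.map((m) => m.payload);
  }
}
```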

We also made working with the push server more secure. When a user connects, they get a unique channel identifier: a random 32-character string. But anyone who intercepts it can listen to someone else's messages. So we added a special signature that is unique to each channel ID. The channels themselves, and therefore their identifiers, are also replaced regularly.
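A channel ID with a signature bound to it can be sketched with Node's `crypto` module: 16 random bytes give a 32-character hex identifier, and an HMAC of that identifier under a server-side secret gives the signature, so the push server can refuse any ID that arrives without a matching signature. HMAC-SHA256, the hard-coded secret, and the function names are assumptions; the article only says that a signature unique to each channel ID was added.

```javascript
const crypto = require("crypto");

// Hypothetical secret known only to the portals and the push server.
const SIGNING_SECRET = "replace-with-a-long-random-secret";

function sign(channelId) {
  return crypto.createHmac("sha256", SIGNING_SECRET).update(channelId).digest("hex");
}

// 16 random bytes -> 32 hex characters, matching the ID length in the text.
function createChannel() {
  const id = crypto.randomBytes(16).toString("hex");
  return { id, signature: sign(id) };
}

// Constant-time comparison, so the check does not leak how many bytes matched.
function verifyChannel(id, signature) {
  const expected = Buffer.from(sign(id), "hex");
  const given = Buffer.from(String(signature), "hex");
  return given.length === expected.length && crypto.timingSafeEqual(given, expected);
}
```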

A channel is kept on the server for 24 hours, but messages are written to it for no more than 12 hours. The remaining time lets the user receive messages that were sent earlier: if a laptop went to sleep in the evening holding one channel identifier, it wakes up in the morning with the same identifier and contacts the server with it. After delivering the messages, the server closes the old channel and creates a new one for this user.
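The two limits described here (writable for 12 hours, kept for 24) can be sketched as age checks against the channel's creation time. The function names and the rotation rule (replace the channel only once it is no longer writable) are assumptions; the sketch only encodes the windows and the drain-then-replace order stated in the text.

```javascript
const crypto = require("crypto");

const HOUR = 60 * 60 * 1000;
const WRITE_WINDOW = 12 * HOUR; // new messages accepted for 12 hours
const KEEP_WINDOW = 24 * HOUR; // channel and its backlog kept for 24 hours

function openChannel(userId, now) {
  return { userId, id: crypto.randomBytes(16).toString("hex"), createdAt: now, backlog: [] };
}

const canWrite = (ch, now) => now - ch.createdAt < WRITE_WINDOW;
const isExpired = (ch, now) => now - ch.createdAt >= KEEP_WINDOW;

function write(ch, message, now) {
  if (!canWrite(ch, now)) return false; // caller must use the user's newer channel
  ch.backlog.push(message);
  return true;
}

// A client comes back with an old channel ID: hand over whatever is still
// stored and, once the write window has passed, replace the channel.
function resume(ch, now) {
  if (canWrite(ch, now)) return { delivered: ch.backlog.splice(0), next: ch };
  const delivered = isExpired(ch, now) ? [] : ch.backlog.splice(0);
  return { delivered, next: openChannel(ch.userId, now) };
}
```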

* * *

After all these improvements and optimizations, we ended up with a new, stable push server that can withstand high loads. Work on it is not finished, though: there are a number of things we plan to implement and optimize. But that is a story for another time.

Source: https://habr.com/ru/post/262551/
