Using Lisp in Production

At Grammarly, the core of our business - the central language engine - is written in Common Lisp. Now the engine processes more than a thousand sentences per second, it scales horizontally and reliably serves us in production for almost 3 years.

We noticed that there are almost no posts about deploying Lisp software in a modern cloud infrastructure, so we decided that sharing our experience would be a good idea. The runtime and Lisp programming environment provide several unique, somewhat unusual, possibilities for supporting production systems (for the impatient, they are described in the last part).

')

Contrary to popular belief, Lisp is an incredibly practical language for creating production systems. In general, there are a lot of Lisp-systems around us: when you search for a ticket to Hipmunk or go to the subway in London, Lisp programs are used.

Our Lisp services conceptually represent a classic AI application that functions on a huge amount of knowledge created by linguists and researchers. Its main resource used is the CPU, and it is one of the largest consumers of computing resources in our network.

The system runs on ordinary Linux images deployed in AWS. We use SBCL for production and CCL on most developers' machines. One of the nice moments when using Lisp is that you have a choice of several well-developed implementations with different pros and cons: in our case, we optimized the speed of the server and the compilation speed when developing (why this is critical for us is described later).

At Grammarly, we use a variety of programming languages to develop our services: in addition to languages for JVM and Javascript, we also write in Erlang, Python, and Go. Proper encapsulation of services allows us to use the language or platform that is best suited for the task. This approach has a certain price to maintain, but we value choice and freedom more than rules and patterns.

We also try to rely on simple, non-language, infrastructure utilities. This approach frees us from many problems in integrating this whole zoo technology into our platform. For example, statsd is an excellent example of an incredibly simple and useful service that is very easy to use. The other is Graylog2, it provides a smart specification for logging, and despite the fact that there was no ready-made library to work with from CL, it was very easy to assemble from what is available in the Lisp ecosystem. Here is all the code you need (and almost all of it is just word for word translation of the specification):

The lack of libraries in the ecosystem is one of the frequent complaints about Lisp. As you can see, 5 libraries are used only in this example for such things as encoding, compression, Unix-time retrieval and connection socket.

Lisp libraries do exist, but, as with all library integrations, we run into problems. For example, to connect Jenkins CI, we had to use xUnit and it was not very easy to find specifications for it. Fortunately, one question on Stackoverflow helped - in the end, we built it into our own test library should-test .

Another example is using HDF5 to exchange machine learning models: we spent some time adapting the hdf5-cffi low-level library to our realities, but we had to spend much more time updating our AMI (Amazon Machine Image) to support the current version of the C library.

Another principle that we follow in the Grammarly platform is the maximum decomposition of various services to ensure horizontal scalability and functional independence - this is my colleague's post . Thus, we do not need to interact with databases in critical parts of our language core services. However, we use MySQL, Postgres, Redis, and Mongo for internal storage, and we successfully used CLSQL , postmodern , cl-redis, and cl-mongo to access them from Lisp.

We use Quicklisp to manage external dependencies and a simple library source packaging system with a project for our own libraries and forks. The Quicklisp repository contains more than 1000 Lisp libraries: not a super huge number, but quite sufficient to meet all the needs of our production.

For deployment in production, we use a universal stack: the application is tested and assembled using Jenkins , delivered to the server thanks to Rundeck and launched there via Upstart as a normal Unix process.

In general, the problems we face when integrating Lisp applications into the cloud world do not radically differ from those that we see in many other technologies. If you want to use Lisp in production and enjoy writing Lisp code, there is no real technical reason not to do this!

However ideal this story may be, not everything is just about rainbows and unicorns.

We created an esoteric application (even by the standards of the Lisp-world) and in the process rested on some of the limitations of the platform. One such surprise was the exhaustion of memory at compile time. We rely heavily on macros, and the largest of them are expanded into thousands of lines of low-level code. It turned out that the SBCL compiler implements many optimizations, thanks to which we can enjoy fairly fast generated code, but some of them require exponential time and memory. Unfortunately, there is no way to turn them off or adjust them. Despite this, there is a well-known general solution, the call-with- * style , which allows you to sacrifice a little efficiency for the sake of better modularity (which turned out to be decisive in our case) and debugging.

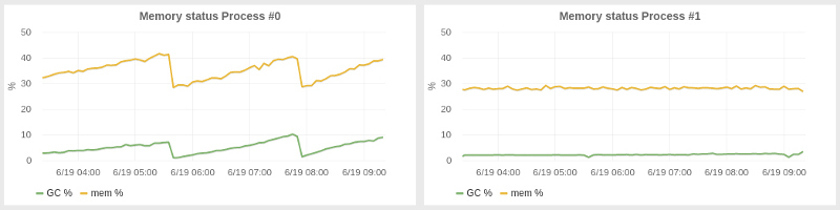

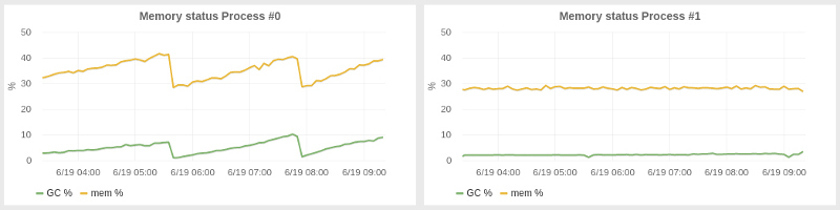

Adjusting the garbage collector to reduce delays and improve resource utilization in our system turned out to be a less unexpected problem, unlike taming the compiler. SBCL provides a generation-based garbage collector, although not as sophisticated as the JVM. We had to adjust the size of generations and it turned out that the use of a larger heap was the best option: our application consumes 2-4 gigabytes of memory, but we launched it with a heap of 25G, which automatically increased the size of the first generation. Another setting we had to make, much less obvious, was the launch of GC programmatically every N minutes. With an increased heap, we noticed a gradual increase in memory usage over periods of tens of minutes, which is why more and more time was spent on GC and application performance decreased. Our approach with periodic GC brought the system to a more stable state with almost constant memory consumption. On the left, you can see how the system is executed without our settings, and on the right, the effect of a periodic GC.

Of all these difficulties, the most unpleasant bug I encountered was a network bug. As usually happens in such situations, the bug was not in the application, but in the underlying platform (this time - SBCL). And, moreover, I ran into it twice in two different services, but for the first time I could not calculate it, so I had to go around it.

When we first started the launch of our service at significant workloads in production, after some time of normal operation, all the servers suddenly began to slow down and eventually became unavailable. After a lengthy investigation with suspicion of input data, we found that the problem was in the race in the low-level SBCL network code, specifically in the way that the function of the socket was called getprotobyname , which was not thread-safe. It was a very unlikely race, so it only showed itself in a high-load network service, when this function was called tens of thousands of times. It knocked out one workflow after another, gradually introducing the system into a coma.

Here is the fix on which we stopped, unfortunately, it cannot be used in a wider context as a library. (The bug was sent to the SBCL team and was fixed, but we still use this hack, just in case :)

Common Lisp systems implement many of the ideas of legendary Lisp machines. One of the most outstanding is the interactive environment of SLIME. While the industry is expecting the ripening of LightTable and similar tools, Lisp programmers have been quietly enjoying these features in SLIME for many years. Behold the power of this to the teeth of an armed and functioning combat station in action .

But SLIME is not just Lisp's approach to IDE. Being a client-server application, it allows you to run your backend on a remote machine and connect to it from your local Emacs (or Vim, if you have no choice, with SLIMV). Java programmers may think about JConsole, but here you are not constrained by a predefined set of operations and can make any introspection or change that you want. We could not catch the race in the socket function without these features.

Moreover, the remote console is not the only useful utility provided by SLIME. Like many IDEs, it can go into the source code of functions, but unlike Java or Python, I have SBCL source code on my machine, so I often look at the source code for the implementation, and this helps a lot in studying what is happening. ” under the hood. " For the case of a socket bug, this was also an important part of the debugging process.

Finally, another super useful utility for introspection and debug, which we use is TRACE . It completely changed my approach to debugging programs, now instead of tedious code execution step by step, I can analyze the whole picture. This tool also helped us localize our socket bug.

With trace, you specify a function to trace, execute code, and Lisp prints all calls to this function and its arguments and all the results it returns. It's something like a stack stack, but you don't need a full stack and you dynamically get a stream of traces without stopping the application. A trace is like a print on steroids, which allows you to quickly get into the insides of a code of any complexity and track complex paths for executing a program. Its only drawback is that you cannot traverse macros.

Here is a fragment of the trace that I made literally today to make sure that a JSON request to one of our services is formed correctly and returns the desired result:

So, to debug our horrible socket bug, I had to dig deep into the SBCL network code and examine the functions being called, then connect via SLIME to the dying server and try to trace these functions one by one. And when I received a call that did not return - that was it. As a result, after finding out in the manual that the function is not thread-safe and that I encountered several mentions about this in the comments of the SBCL source code, I was convinced of my hypothesis.

This article is about the fact that Lisp proved to be a surprisingly reliable platform for one of our most critical projects. It fully complies with the general requirements of a modern cloud infrastructure, and despite the fact that this stack is not very well known and popular, it has its strengths - you only need to learn how to use them. What can we say about the power of the Lisp-approach to solving complex problems, for which we love him so much. But this is a completely different story ...

Note translator:

I am doubly glad that people from the CIS write about the use of Common Lisp in production, and even people from the CIS write, because we have practically no experience with this stack. I hope after reading this article, someone will pay attention to this very undervalued technology.

We noticed that there are almost no posts about deploying Lisp software in a modern cloud infrastructure, so we decided that sharing our experience would be a good idea. The runtime and Lisp programming environment provide several unique, somewhat unusual, possibilities for supporting production systems (for the impatient, they are described in the last part).

Wut Lisp? !!

')

Contrary to popular belief, Lisp is an incredibly practical language for creating production systems. In general, there are a lot of Lisp-systems around us: when you search for a ticket to Hipmunk or go to the subway in London, Lisp programs are used.

Our Lisp services conceptually represent a classic AI application that functions on a huge amount of knowledge created by linguists and researchers. Its main resource used is the CPU, and it is one of the largest consumers of computing resources in our network.

The system runs on ordinary Linux images deployed in AWS. We use SBCL for production and CCL on most developers' machines. One of the nice moments when using Lisp is that you have a choice of several well-developed implementations with different pros and cons: in our case, we optimized the speed of the server and the compilation speed when developing (why this is critical for us is described later).

A stranger in a strange land

At Grammarly, we use a variety of programming languages to develop our services: in addition to languages for JVM and Javascript, we also write in Erlang, Python, and Go. Proper encapsulation of services allows us to use the language or platform that is best suited for the task. This approach has a certain price to maintain, but we value choice and freedom more than rules and patterns.

We also try to rely on simple, non-language, infrastructure utilities. This approach frees us from many problems in integrating this whole zoo technology into our platform. For example, statsd is an excellent example of an incredibly simple and useful service that is very easy to use. The other is Graylog2, it provides a smart specification for logging, and despite the fact that there was no ready-made library to work with from CL, it was very easy to assemble from what is available in the Lisp ecosystem. Here is all the code you need (and almost all of it is just word for word translation of the specification):

(defun graylog (message &key level backtrace file line-no) (let ((msg (salza2:compress-data (babel:string-to-octets (json:encode-json-to-string #{ :version "1.0" :facility "lisp" :host *hostname* :|short_message| message :|full_message| backtrace :timestamp (local-time:timestamp-to-unix (local-time:now)) :level level :file file :line line-no }) :encoding :utf-8) 'salza2:zlib-compressor))) (usocket:socket-send (usocket:socket-connect *graylog-host* *graylog-port* :protocol :datagram :element-type '(unsigned-byte 8)) msg (length msg)))) The lack of libraries in the ecosystem is one of the frequent complaints about Lisp. As you can see, 5 libraries are used only in this example for such things as encoding, compression, Unix-time retrieval and connection socket.

Lisp libraries do exist, but, as with all library integrations, we run into problems. For example, to connect Jenkins CI, we had to use xUnit and it was not very easy to find specifications for it. Fortunately, one question on Stackoverflow helped - in the end, we built it into our own test library should-test .

Another example is using HDF5 to exchange machine learning models: we spent some time adapting the hdf5-cffi low-level library to our realities, but we had to spend much more time updating our AMI (Amazon Machine Image) to support the current version of the C library.

Another principle that we follow in the Grammarly platform is the maximum decomposition of various services to ensure horizontal scalability and functional independence - this is my colleague's post . Thus, we do not need to interact with databases in critical parts of our language core services. However, we use MySQL, Postgres, Redis, and Mongo for internal storage, and we successfully used CLSQL , postmodern , cl-redis, and cl-mongo to access them from Lisp.

We use Quicklisp to manage external dependencies and a simple library source packaging system with a project for our own libraries and forks. The Quicklisp repository contains more than 1000 Lisp libraries: not a super huge number, but quite sufficient to meet all the needs of our production.

For deployment in production, we use a universal stack: the application is tested and assembled using Jenkins , delivered to the server thanks to Rundeck and launched there via Upstart as a normal Unix process.

In general, the problems we face when integrating Lisp applications into the cloud world do not radically differ from those that we see in many other technologies. If you want to use Lisp in production and enjoy writing Lisp code, there is no real technical reason not to do this!

The hardest bug I've ever debugged

However ideal this story may be, not everything is just about rainbows and unicorns.

We created an esoteric application (even by the standards of the Lisp-world) and in the process rested on some of the limitations of the platform. One such surprise was the exhaustion of memory at compile time. We rely heavily on macros, and the largest of them are expanded into thousands of lines of low-level code. It turned out that the SBCL compiler implements many optimizations, thanks to which we can enjoy fairly fast generated code, but some of them require exponential time and memory. Unfortunately, there is no way to turn them off or adjust them. Despite this, there is a well-known general solution, the call-with- * style , which allows you to sacrifice a little efficiency for the sake of better modularity (which turned out to be decisive in our case) and debugging.

Adjusting the garbage collector to reduce delays and improve resource utilization in our system turned out to be a less unexpected problem, unlike taming the compiler. SBCL provides a generation-based garbage collector, although not as sophisticated as the JVM. We had to adjust the size of generations and it turned out that the use of a larger heap was the best option: our application consumes 2-4 gigabytes of memory, but we launched it with a heap of 25G, which automatically increased the size of the first generation. Another setting we had to make, much less obvious, was the launch of GC programmatically every N minutes. With an increased heap, we noticed a gradual increase in memory usage over periods of tens of minutes, which is why more and more time was spent on GC and application performance decreased. Our approach with periodic GC brought the system to a more stable state with almost constant memory consumption. On the left, you can see how the system is executed without our settings, and on the right, the effect of a periodic GC.

Of all these difficulties, the most unpleasant bug I encountered was a network bug. As usually happens in such situations, the bug was not in the application, but in the underlying platform (this time - SBCL). And, moreover, I ran into it twice in two different services, but for the first time I could not calculate it, so I had to go around it.

When we first started the launch of our service at significant workloads in production, after some time of normal operation, all the servers suddenly began to slow down and eventually became unavailable. After a lengthy investigation with suspicion of input data, we found that the problem was in the race in the low-level SBCL network code, specifically in the way that the function of the socket was called getprotobyname , which was not thread-safe. It was a very unlikely race, so it only showed itself in a high-load network service, when this function was called tens of thousands of times. It knocked out one workflow after another, gradually introducing the system into a coma.

Here is the fix on which we stopped, unfortunately, it cannot be used in a wider context as a library. (The bug was sent to the SBCL team and was fixed, but we still use this hack, just in case :)

#+unix (defun sb-bsd-sockets:get-protocol-by-name (name) (case (mkeyw name) (:tcp 6) (:udp 17))) Back to the future

Common Lisp systems implement many of the ideas of legendary Lisp machines. One of the most outstanding is the interactive environment of SLIME. While the industry is expecting the ripening of LightTable and similar tools, Lisp programmers have been quietly enjoying these features in SLIME for many years. Behold the power of this to the teeth of an armed and functioning combat station in action .

But SLIME is not just Lisp's approach to IDE. Being a client-server application, it allows you to run your backend on a remote machine and connect to it from your local Emacs (or Vim, if you have no choice, with SLIMV). Java programmers may think about JConsole, but here you are not constrained by a predefined set of operations and can make any introspection or change that you want. We could not catch the race in the socket function without these features.

Moreover, the remote console is not the only useful utility provided by SLIME. Like many IDEs, it can go into the source code of functions, but unlike Java or Python, I have SBCL source code on my machine, so I often look at the source code for the implementation, and this helps a lot in studying what is happening. ” under the hood. " For the case of a socket bug, this was also an important part of the debugging process.

Finally, another super useful utility for introspection and debug, which we use is TRACE . It completely changed my approach to debugging programs, now instead of tedious code execution step by step, I can analyze the whole picture. This tool also helped us localize our socket bug.

With trace, you specify a function to trace, execute code, and Lisp prints all calls to this function and its arguments and all the results it returns. It's something like a stack stack, but you don't need a full stack and you dynamically get a stream of traces without stopping the application. A trace is like a print on steroids, which allows you to quickly get into the insides of a code of any complexity and track complex paths for executing a program. Its only drawback is that you cannot traverse macros.

Here is a fragment of the trace that I made literally today to make sure that a JSON request to one of our services is formed correctly and returns the desired result:

0: (GET-DEPS ("you think that's bad, hehe, i remember once i had an old 100MHZ dell unit i was using as a server in my room")) 1: (JSON:ENCODE-JSON-TO-STRING #<HASH-TABLE :TEST EQL :COUNT 2 {1037DD9383}>) 2: (JSON:ENCODE-JSON-TO-STRING "action") 2: JSON:ENCODE-JSON-TO-STRING returned "\"action\"" 2: (JSON:ENCODE-JSON-TO-STRING "sentences") 2: JSON:ENCODE-JSON-TO-STRING returned "\"sentences\"" 1: JSON:ENCODE-JSON-TO-STRING returned "{\"action\":\"deps\",\"sentences\":[\"you think that's bad, hehe, i remember once i had an old 100MHZ dell unit i was using as a server in my room\"]}" 0: GET-DEPS returned ((("nsubj" 1 0) ("ccomp" 9 1) ("nsubj" 3 2) ("ccomp" 1 3) ("acomp" 3 4) ("punct" 9 5) ("intj" 9 6) ("punct" 9 7) ("nsubj" 9 8) ("root" -1 9) ("advmod" 9 10) ("nsubj" 12 11) ("ccomp" 9 12) ("det" 17 13) ("amod" 17 14) ("nn" 16 15) ("nn" 17 16) ("dobj" 12 17) ("nsubj" 20 18) ("aux" 20 19) ("rcmod" 17 20) ("prep" 20 21) ("det" 23 22) ("pobj" 21 23) ("prep" 23 24) ("poss" 26 25) ("pobj" 24 26))) ((<you 0,3> <think 4,9> <that 10,14> <'s 14,16> <bad 17,20> <, 20,21> <hehe 22,26> <, 26,27> <i 28,29> <remember 30,38> <once 39,43> <i 44,45> <had 46,49> <an 50,52> <old 53,56> <100MHZ 57,63> <dell 64,68> <unit 69,73> <i 74,75> <was 76,79> <using 80,85> <as 86,88> <a 89,90> <server 91,97> <in 98,100> <my 101,103> <room 104,108>)) So, to debug our horrible socket bug, I had to dig deep into the SBCL network code and examine the functions being called, then connect via SLIME to the dying server and try to trace these functions one by one. And when I received a call that did not return - that was it. As a result, after finding out in the manual that the function is not thread-safe and that I encountered several mentions about this in the comments of the SBCL source code, I was convinced of my hypothesis.

This article is about the fact that Lisp proved to be a surprisingly reliable platform for one of our most critical projects. It fully complies with the general requirements of a modern cloud infrastructure, and despite the fact that this stack is not very well known and popular, it has its strengths - you only need to learn how to use them. What can we say about the power of the Lisp-approach to solving complex problems, for which we love him so much. But this is a completely different story ...

Note translator:

I am doubly glad that people from the CIS write about the use of Common Lisp in production, and even people from the CIS write, because we have practically no experience with this stack. I hope after reading this article, someone will pay attention to this very undervalued technology.

Source: https://habr.com/ru/post/262225/

All Articles