Build-Deploy-Test. Continuous integration

Continuous Integration (CI) is a software development practice of running frequent automated builds of a project in order to quickly detect and resolve problems that arise when the work of several developers is integrated.

I want to stress that this is neither a technique nor a standard. It is a PRACTICE, and it requires ongoing effort and involvement from every team member. Why? So that integration is not postponed until the end of the project, where it can end in a sudden, global collapse. CI also protects against breaking changes introduced by refactoring, new functionality, architectural changes, and a host of other problems, both known and unforeseen.

Integration builds let you get rid of the "I don't know, it works on my machine" syndrome. They also protect against bad code, recurring bugs, and botched merges. CI shortens the feedback loop, because the state of the project can be monitored throughout the day.


How we arrived at continuous integration

Our company launched a new large-scale retail project on .NET, built on a number of new technologies and a service-oriented architecture (SOA). Development started completely from scratch, with the components gradually integrated with each other. Components were developed and tested on different continents.

We developed the core service components. Besides functionality, we also had to test performance and the installation and removal of components. On top of that, the project required 100% unit test coverage. So we had autotests, unit tests, 4 test environments, partially implemented components, failing builds, bugs (where would we be without them?), and one tester for 14 developers. I wanted everything to be built, installed, tested, and removed automatically. And, of course, I wanted to hand the most stable, highest-quality result possible over to the main testing team.

To put it mildly, it is hard for one tester to cover 4 systems in a team of 15 people. So we decided to try Microsoft's Build-Deploy-Test approach. The tools that helped the tester keep pace with development were TFS 2012, Microsoft Test Manager 2012, and Visual Studio 2012 Ultimate. Over time, we moved to the 2013 versions.

We set up 2 CI servers: Jenkins and a TFS build server. On Jenkins, everything was initially built once an hour in the Debug configuration. The application was then installed on a server, smoke tests (checks that the application starts and runs) were executed, and after that the application was uninstalled. In addition, Sonar ran all the unit and integration tests once a day, at night. This dealt with build instability and made sure developers learned about problems early. On the second CI server (the TFS build server), we also built in the Debug configuration. The application was then installed on a test machine and the functional autotests were launched. When all the tests had finished (whether they passed or not), the application was uninstalled.

We used 2 CI servers to separate the developers' tests (unit and integration) from the testers' functional tests. Builds on Jenkins ran much more often so that failing tests could be addressed immediately. Builds on TFS were started by the tester when needed, and also nightly, because a full cycle of functional testing takes a long time. In addition, to make sure everything was built in the Release configuration and that the code always at least compiled, we introduced a gated check-in build. When programmers or testers check changes in to TFS, the entire system is first built in the Release configuration, and the changes are committed to TFS only if that build succeeds. If the build fails, the changes are rejected and the code in TFS stays buildable.
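The gated check-in rule can be modeled in a few lines. This is a minimal Python sketch of the idea, not how TFS implements it: `Repository`, `gated_check_in`, and the `build_release` callback are hypothetical stand-ins for version control and the Release build.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Repository:
    """Hypothetical stand-in for TFS version control: the committed changesets."""
    committed: List[str] = field(default_factory=list)


def gated_check_in(repo: Repository, changeset: str,
                   build_release: Callable[[List[str]], bool]) -> bool:
    """Commit `changeset` only if the system builds in Release with it applied.

    `build_release` is a hypothetical hook that builds the given changesets in
    the Release configuration and returns True if the build succeeds.
    """
    candidate = repo.committed + [changeset]
    if build_release(candidate):
        repo.committed.append(changeset)
        return True
    # Build failed: the changeset is rejected and the repository is untouched,
    # so the code in version control always stays buildable.
    return False
```

Because the build runs against the committed code plus the pending change, a changeset that breaks the Release build never reaches the repository.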

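We began automating functional tests by choosing which test types to use. Microsoft offers the following:

- Unit Test: unit tests written by programmers.

- Coded UI Test: a test type for automating functional tests through the user interface.

- Web Performance Test: functional testing of web applications, used as building blocks for load tests.

- Load Test: load testing of web applications.

- Generic Test: a special kind of test that runs an external test application.

- Ordered Test: runs a set of automated tests in a specific order.

After weighing the options, we decided that generic and ordered tests suited us best.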

A generic test is configured with the path to an *.exe file and the arguments to pass to it. Each test case in TFS was associated with a generic test. For the autotests we had one project per component. When an autotest is run from Microsoft Test Manager (TM), the associated generic test is called; it passes the number of the current autotest to the appropriate *.exe file, which then executes the corresponding method.

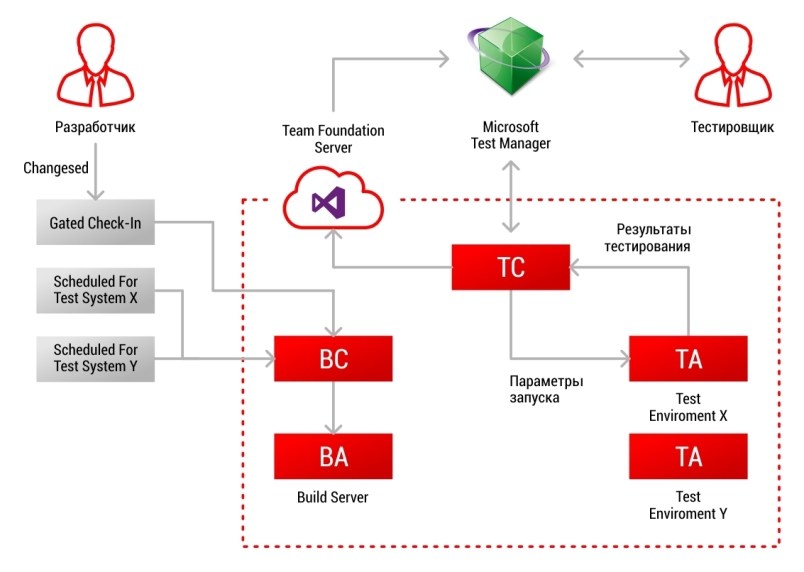

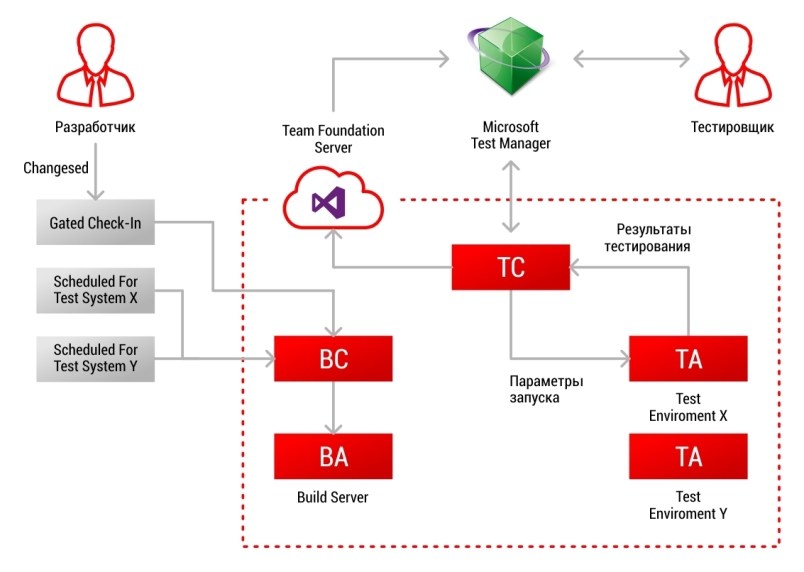
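The autotest executable on the receiving end of a generic test can be sketched as a dispatcher from test case number to test method. This Python version is only an illustration (the project's autotests were .NET programs): the test names and the numbers 101 and 102 are hypothetical. It relies on the fact that generic tests, by default, treat exit code 0 as a pass and any other exit code as a failure.

```python
import sys
from typing import Callable, Dict, List


def test_create_order() -> None:
    # Placeholder body; a real autotest would call the order service.
    order = {"id": 1, "status": "created"}
    assert order["status"] == "created"


def test_cancel_order() -> None:
    # Placeholder body; a real autotest would cancel an order via the service.
    order = {"id": 1, "status": "cancelled"}
    assert order["status"] == "cancelled"


# Hypothetical mapping from the test case number passed by the generic test
# to the method that implements that test case.
TESTS: Dict[int, Callable[[], None]] = {
    101: test_create_order,
    102: test_cancel_order,
}


def main(argv: List[str]) -> int:
    """Run the test whose number is argv[1] and return a process exit code."""
    if len(argv) != 2 or not argv[1].isdigit():
        print("usage: autotests <test-case-number>", file=sys.stderr)
        return 2
    test = TESTS.get(int(argv[1]))
    if test is None:
        print(f"unknown test case {argv[1]}", file=sys.stderr)
        return 2
    try:
        test()
    except Exception as exc:  # any exception or failed assert fails the test
        print(f"FAILED: {exc!r}", file=sys.stderr)
        return 1
    print("PASSED")
    return 0
```

As a standalone program it would end with an entry point such as `sys.exit(main(sys.argv))`, and the generic test would be configured with the executable path and the test case number as its argument.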

At a high level, the Build-Deploy-Test process looks like this: developers make changes; before the changes are checked in, the Build Server builds everything in the Release configuration, and only if the build succeeds are the changes committed to the server.

Functional testing comes next.

The Build-Deploy-Test approach defines 3 roles: Build Controller (BC), Test Controller (TC), and Test Agent (TA). In our setup, the Build Controller runs on the same machine as the Build Server and the Test Controller.

The Build Controller manages the project's builds and places the binaries in the build folder (the gated check-in build is not used for this; there were separate build definitions for testing). The Test Controller manages test runs and stores test results. The Test Agent receives from the TC the command to run a test suite, the deployment commands, and all run parameters, including the set of generic tests.

The tester opens Microsoft Test Manager and selects the test plan, the run configuration, the build to use, and the test environment. That's it! The Test Controller does the rest. It receives the set of parameters for the test run (including the location of the folder with the binaries) and starts the installation on the test environment. After the installation script finishes, the Test Agent runs all the selected tests one by one. When all the tests have completed, a cleanup script removes the application and returns the test system to its initial state. The Test Agent then hands control back to the Test Controller, reporting that it is ready. The Test Controller collects the temporary folders of the test run, stores the results in TFS (you can view them later in TM), and displays the results in TM: the tests that passed and the ones that did not.
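The control flow of a single test run (deploy, run the tests in sequence, always clean up) can be sketched as below. This is a simplified Python model, not the Test Controller's actual code: `execute_run` and its three callbacks are hypothetical stand-ins for the deploy script, one generic test, and the cleanup script.

```python
from typing import Callable, Dict, List


def execute_run(build_folder: str, tests: List[str],
                deploy: Callable[[str], None],
                run_test: Callable[[str], bool],
                clean: Callable[[], None]) -> Dict[str, str]:
    """Deploy, run tests one by one, and always clean up.

    The callbacks are hypothetical stand-ins for the deploy script, a single
    generic test, and the cleanup script.
    """
    results: Dict[str, str] = {}
    try:
        deploy(build_folder)
        for name in tests:  # the Test Agent runs the selected tests in sequence
            try:
                passed = run_test(name)
            except Exception:
                passed = False  # a crashing test counts as failed
            results[name] = "Passed" if passed else "Failed"
    finally:
        # Cleanup runs whether the tests passed, failed, or deployment crashed,
        # so the environment always returns to its initial state.
        clean()
    return results
```

The `try`/`finally` captures the key property of the process: uninstallation happens regardless of the test outcome, so the next run starts from a clean machine.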

Test environment settings

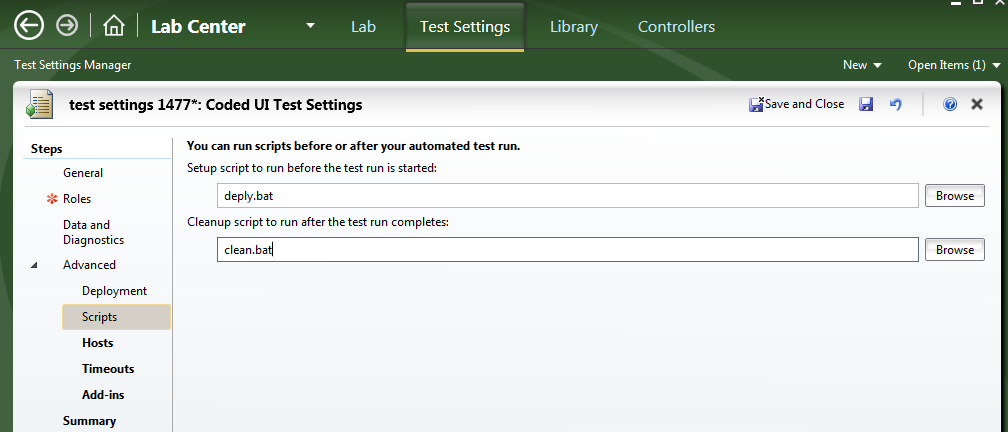

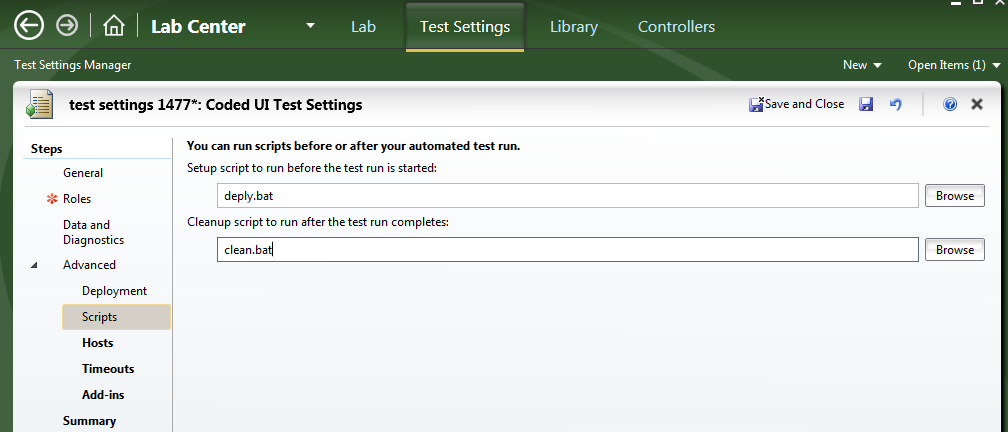

All our builds are stored on the Build Server, and so are the generic tests. When autotests are started in Microsoft Test Manager (TM), the Test Controller creates a temporary folder on the test machine, copies the generic tests into it, and generates its own deploy.bat and clean.bat files. Each of these scripts consists of 2 parts: the first defines all the run variables, including the name of the build directory, and the second is the contents of our own deploy (or clean) script specified in these test settings.

In other words, the TC creates a wrapper around the script we specified as the deploy script (let's call ours the Call Deploy script).
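The two-part structure of the generated wrapper can be illustrated with a small generator. This is a Python sketch of the structure only; the article does not list the variable names the Test Controller actually defines, so `BuildDirectory` and `TestRunDirectory` are hypothetical. `set "NAME=value"` is standard cmd syntax that keeps stray trailing spaces out of the value.

```python
from typing import Dict


def make_wrapper(variables: Dict[str, str], user_script: str) -> str:
    """Return batch text: one SET line per run variable, then our own script."""
    lines = [f'set "{name}={value}"' for name, value in variables.items()]
    lines.append(user_script.rstrip("\r\n"))
    # Batch files conventionally use CRLF line endings.
    return "\r\n".join(lines) + "\r\n"
```

For example, `make_wrapper({"BuildDirectory": r"\\srv\drops\1.0.42"}, "call CallDeploy.bat")` yields a SET line followed by the call to our script, which then sees `%BuildDirectory%` in its environment.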

In our case, this script copies the entire build directory to the test machine and then calls another installation script, the Deploy script contained in that build. We need this so that the deployment script is versioned and can change together with the build.
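The job of the Call Deploy script (copy the build locally, then find the Deploy script that ships inside it) can be sketched in Python as follows. The function name `stage_build` and the default file name `Deploy.bat` are assumptions for illustration; `dirs_exist_ok` needs Python 3.8 or later.

```python
import shutil
from pathlib import Path


def stage_build(build_dir: str, local_root: str, deploy_name: str = "Deploy.bat") -> Path:
    """Copy the whole build locally and return the path of the Deploy script inside it."""
    src = Path(build_dir)
    dst = Path(local_root) / src.name
    # copytree creates missing parent folders; dirs_exist_ok allows re-runs.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    script = dst / deploy_name
    if not script.is_file():
        raise FileNotFoundError(f"{deploy_name} not found in build {src.name}")
    return script
```

Because the Deploy script is taken from the copied build rather than from the test machine, each build carries the deployment logic that matches it. On the test machine, the returned script could then be started with something like `subprocess.run(["cmd", "/c", str(script)], check=True)`, which is Windows-only and left out of the sketch.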

The Deploy script performs all the preparation for the installation. Because all the scripts run in the same environment, they have access to the variables the TC passed to them. Using these variables, the script updates the configuration and installs the application. Once deployment is complete, the Test Controller instructs the Test Agent to run the tests (the same generic tests it copied into the temporary folder). Each generic test calls the *.exe file at the path specified in it and passes the test case number to the program as a parameter. The autotest program then calls the specific method that corresponds to that parameter.

After all the autotests have finished, the Test Controller starts the cleanup. To do this, it calls the wrapper script it created at the beginning. The wrapper defines the parameters and calls the Call Clean script, which is present on every test system. Call Clean runs the Clean script, which uninstalls the application, collects all the logs, archives them, and moves the archive to the temporary run folder created by the Test Controller. That way, once cleanup is complete, all the logs are uploaded to TFS and attached to the test run.
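The log-collection step of the Clean script can be sketched with the standard library. This is an illustrative Python version, not our actual script: `archive_logs` and the archive name `logs` are hypothetical, and instead of creating the archive elsewhere and moving it, it writes the zip directly into the Test Controller's run folder, which has the same end result.

```python
import shutil
from pathlib import Path


def archive_logs(log_dir: str, run_dir: str, name: str = "logs") -> Path:
    """Zip everything under log_dir and place the archive in the run folder."""
    base = Path(run_dir) / name  # make_archive appends ".zip" itself
    archive = shutil.make_archive(str(base), "zip", root_dir=log_dir)
    return Path(archive)
```

Writing the archive into the run folder is what lets the logs be uploaded to TFS together with the test results.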

We also have builds that run Build-Deploy-Test on a schedule. For this, a special process template is specified in the build definition. Such a build runs once a week. Its configuration specifies which tests to run, with which test settings, and on which test environment.

The whole process starts on the Build Controller, which passes the build parameters to the Build Agent (which projects to build and in which configuration; in our case, Release or Debug). After the Build Agent has built everything, the Build Controller hands control to the Test Controller together with the parameters for running the autotests: the set of autotests, the test environment, and the test settings.

We use separate test settings and a separate build definition for each test environment.

Once started, the Test Controller runs the deployment process, then hands testing over to the Test Agent, and finally runs the system cleanup script.
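The hand-off in a scheduled build (Build Controller to Build Agent, then on to the Test Controller with the test parameters) can be modeled with two small records. This is a hypothetical Python sketch: the class and field names are invented for illustration and do not correspond to TFS build process template parameters.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class BuildRequest:
    projects: List[str]
    configuration: str  # "Release" or "Debug" in our setup


@dataclass(frozen=True)
class TestRequest:
    build_location: str
    test_suite: List[str]
    environment: str
    test_settings: str


def scheduled_bdt(build: BuildRequest,
                  run_build: Callable[[BuildRequest], str],
                  test_suite: List[str], environment: str,
                  test_settings: str) -> TestRequest:
    """Build Controller -> Build Agent -> Test Controller hand-off."""
    if build.configuration not in ("Release", "Debug"):
        raise ValueError(f"unexpected configuration {build.configuration!r}")
    # The Build Agent compiles the projects and reports where the binaries are.
    location = run_build(build)
    # The Test Controller receives the autotest set, environment, and settings.
    return TestRequest(location, test_suite, environment, test_settings)
```

Keeping one test-settings name per environment in the request mirrors the practice of separate test settings and build definitions for each test environment.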

If a test environment consists of 2 machines, this must be specified in Test Manager: a single test environment containing both machines is created. Each machine has its own Test Agent connected to TFS so that the machine is visible in TM, and each machine is assigned its own role, for example Database Server and Client.

In this case the run proceeds the same way, except that the test environment consists of 2 machines, the tests run on only one of them, and different logs can be collected depending on each machine's role.
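For a two-machine environment, the per-machine work can be planned from the roles. This Python sketch assumes that deploy and clean run on every machine, that tests run only on the machine with the chosen role, and that log folders are collected per role; `Machine`, `plan_run`, and the log paths in `LOGS_BY_ROLE` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Machine:
    name: str
    role: str  # e.g. "Database Server" or "Client"


# Hypothetical log folders to collect for each role.
LOGS_BY_ROLE: Dict[str, List[str]] = {
    "Database Server": [r"C:\Logs\Db"],
    "Client": [r"C:\Logs\Client", r"C:\Logs\Install"],
}


def plan_run(environment: List[Machine], test_role: str) -> Dict[str, List[str]]:
    """Per machine: deploy, tests only on the `test_role` machine, role logs, clean."""
    if sum(m.role == test_role for m in environment) != 1:
        raise ValueError(f"exactly one machine must have role {test_role!r}")
    plan: Dict[str, List[str]] = {}
    for machine in environment:
        steps = ["deploy"]
        if machine.role == test_role:
            steps.append("run tests")
        steps += [f"collect {path}" for path in LOGS_BY_ROLE.get(machine.role, [])]
        steps.append("clean")
        plan[machine.name] = steps
    return plan
```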

Problems Configuring the Build-Deploy-Test Process

As it turned out, PowerShell scripts cannot be used as deploy/clean scripts; only cmd or bat files work. Moreover, if you use a batch file that calls a PowerShell script, Test Manager ignores that call. It likewise ignores every sleep, wait, and even a ping of a nonexistent host with a timeout (we needed a delay after stopping and starting services). Even for such simple things we had to write separate workaround programs and launch them from the batch file.
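A delay helper of the kind described (a separate program launched from the batch file) usually needs to do a little more than sleep: it polls until a condition holds or a timeout expires. The Python sketch below shows only that polling logic; `wait_until` is a hypothetical name, and the condition (for example, "the service has stopped") is left to the caller.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float,
               interval: float = 0.5) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Returns True if the condition was met, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Waiting for a condition rather than sleeping a fixed time keeps runs fast when services stop quickly and still tolerates slow machines; a helper program would map the result to an exit code the batch file can check.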

The tests evolved along with the project. At first the product was a set of libraries, so the deployment script could simply copy them, run the tests, and then delete them. Everyone was happy. Then our valiant developers wrapped everything in MSI packages that had to be installed with a lot of parameters, and removed the same way. This complicated the task, because the parameters to pass kept changing. For this we used the parameters that the Test Controller passes to the Test Agent, and that was enough.
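Passing many parameters to an MSI package comes down to building the right msiexec command line. This Python sketch builds one for a batch file, using standard msiexec options: `/i` installs, `/x` uninstalls, `/qn` suppresses the UI, `/l*v` writes a verbose log, and public properties are passed as `NAME="value"`. The function name and the property `DB_SERVER` are hypothetical.

```python
from typing import Dict


def msiexec_command(package: str, properties: Dict[str, str], log_file: str,
                    uninstall: bool = False) -> str:
    """Build a silent msiexec command line for a batch file."""
    for name, value in properties.items():
        # A double quote inside a value would break the command line.
        if '"' in value:
            raise ValueError(f"property {name} contains a double quote")
    action = "/x" if uninstall else "/i"
    parts = ["msiexec", action, f'"{package}"', "/qn", "/l*v", f'"{log_file}"']
    # Public MSI properties are passed as NAME="value".
    parts += [f'{name}="{value}"' for name, value in properties.items()]
    return " ".join(parts)
```

For example, `msiexec_command("Core.Service.msi", {"DB_SERVER": "QA-DB01"}, "install.log")` produces `msiexec /i "Core.Service.msi" /qn /l*v "install.log" DB_SERVER="QA-DB01"`. If the line is written into a .bat file, any literal `%` in a value has to be doubled.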

Then came many MSI installation packages that had to be installed in a specific order, with many parameters and configuration files. To configure them for each test system, we replaced all the connection strings from the default value with the required one, and likewise all paths, parameters, and so on (all of this was also taken from the autotest run parameters). We also had to delete MSMQ (Microsoft Message Queuing) queues. Plain batch files cannot do this, so I wrote a PowerShell script for it. But another surprise awaited: Microsoft Test Manager simply refuses to work with Microsoft's own PowerShell, for no apparent reason. In the end, this too was solved inside the autotests themselves.
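Replacing default connection strings with environment-specific ones can be done by editing the standard `<connectionStrings>` section of a .NET configuration file. This Python sketch uses `xml.etree.ElementTree`; the function name and the connection string values are hypothetical. Note that ElementTree drops XML comments and the XML declaration when re-serializing.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def set_connection_strings(config_xml: str, values: Dict[str, str]) -> str:
    """Replace connectionString attributes in app.config/web.config text."""
    root = ET.fromstring(config_xml)
    found = set()
    for add in root.iterfind("./connectionStrings/add"):
        name = add.get("name")
        if name in values:
            add.set("connectionString", values[name])
            found.add(name)
    # Fail loudly if the test environment expects a string the file lacks.
    missing = set(values) - found
    if missing:
        raise KeyError(f"connection strings not found: {sorted(missing)}")
    return ET.tostring(root, encoding="unicode")
```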

The final stage so far is a single installer program with a user interface, in which you select the required components and parameters and which then runs all the necessary installer files with the right parameters; removal works the same way. We automated installation so that no values have to be entered in the user interface: the installer is given a prepared XML file with the necessary parameters (some of them taken from the parameters the Test Controller can pass), and this file is used for both installation and removal. Everything else stays the same.
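Preparing the XML file for an unattended run of such an installer can be sketched with ElementTree. The article does not describe the installer's file format, so the schema below (`InstallerConfig`, `Components`, `Parameters`, and the `mode` attribute) is entirely hypothetical; only the idea of generating the file from run parameters comes from the text.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def build_installer_config(components: List[str], parameters: Dict[str, str],
                           mode: str = "install") -> str:
    """Generate a hypothetical installer parameter file for install or removal."""
    if mode not in ("install", "uninstall"):
        raise ValueError("mode must be 'install' or 'uninstall'")
    root = ET.Element("InstallerConfig", mode=mode)
    comps = ET.SubElement(root, "Components")
    for name in components:
        ET.SubElement(comps, "Component", name=name)
    params = ET.SubElement(root, "Parameters")
    # Some values would come from the parameters the Test Controller passes.
    for key, value in parameters.items():
        ET.SubElement(params, "Parameter", name=key, value=value)
    return ET.tostring(root, encoding="unicode")
```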

So we automated and set up the whole process. Of course, the way to achieve continuous integration can differ significantly depending on the project and on the company's preferences, traditions, and policies. We can help you decide what your company needs.

By rsarvarova

Source: https://habr.com/ru/post/262173/
